Deploying OpenStack (Train) on openEuler 22.03 LTS

| Node | IP addresses | Software |
|---|---|---|
| controller | ens160: 172.173.10.110 (management), ens192: 10.10.10.10 | mariadb, rabbitmq, keystone, glance, placement, nova, neutron, horizon |
| compute01 | ens160: 172.173.10.111 (management), ens192: 10.10.10.11 | nova, neutron |

Installation reference: OpenStack Installation Guide

I. Environment preparation

1.1 Base environment

- Set the hostname.

hostnamectl set-hostname <hostname>

- Configure hostname-to-IP resolution.

cat <<EOF>> /etc/hosts

172.173.10.110 controller

172.173.10.111 compute01

EOF

- Disable the firewall and SELinux.

systemctl disable firewalld --now && setenforce 0 && sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

- Configure time synchronization.

- Disable IPv6.

echo 1 > /proc/sys/net/ipv6/conf/all/disable_ipv6

echo 1 > /proc/sys/net/ipv6/conf/default/disable_ipv6

cat <<EOF>> /etc/sysctl.conf

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

EOF
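The heredocs above append with `>>`, so running them a second time duplicates the lines in /etc/hosts and /etc/sysctl.conf. A minimal sketch of an idempotent alternative follows; `ensure_line` is a hypothetical helper name, not part of any package, and the demonstration writes to a scratch file rather than a real system file.

```shell
#!/bin/sh
# ensure_line FILE LINE: append LINE to FILE only if an identical line is not already there.
ensure_line() {
    file=$1
    line=$2
    touch "$file"
    # -x matches the whole line, -F treats LINE as a fixed string, -q suppresses output.
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Demonstration on a scratch file; on a real node pass /etc/sysctl.conf or /etc/hosts.
demo=$(mktemp)
ensure_line "$demo" "net.ipv6.conf.all.disable_ipv6 = 1"
ensure_line "$demo" "net.ipv6.conf.all.disable_ipv6 = 1"   # second call changes nothing
ensure_line "$demo" "net.ipv6.conf.default.disable_ipv6 = 1"
cat "$demo"
rm -f "$demo"
```

The same helper would also cover the bridge sysctl entries added later for Neutron.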

1.2 Software repository

Reference: OpenStack packages for RHEL and CentOS (Installation Guide)

yum -y install openstack-release-train

yum clean all && yum makecache

1.3 Install the SQL database

Reference: SQL database for RHEL and CentOS (Installation Guide)

1. Run the following command to install the packages.

yum -y install mariadb mariadb-server python3-PyMySQL

2. Run the following command to create the /etc/my.cnf.d/openstack.cnf file.

cat >/etc/my.cnf.d/openstack.cnf<<'EOF'

[mysqld]

bind-address = 0.0.0.0

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

EOF

3. Start the database service and enable it at boot:

systemctl enable mariadb.service --now

4. Set the database root password (optional).

mysql_secure_installation

1.4 Install RabbitMQ

Reference: Message queue for RHEL and CentOS (Installation Guide)

1. Run the following command to install the package.

yum -y install rabbitmq-server

2. Start the RabbitMQ service and enable it at boot.

systemctl enable rabbitmq-server.service --now

3. Add the openstack user.

rabbitmqctl add_user openstack guojie.com

4. Grant the openstack user configure, write, and read permissions:

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

1.5 Install Memcached

Reference: Memcached for RHEL and CentOS (Installation Guide)

1. Run the following command to install the required packages.

yum -y install memcached python3-memcached

2. Edit the /etc/sysconfig/memcached file so that Memcached listens on all addresses.

sed -i 's/^OPTIONS=.*/OPTIONS="-l 0.0.0.0"/' /etc/sysconfig/memcached

3. Run the following command to start the Memcached service and enable it at boot.

systemctl enable memcached.service --now

II. Installing OpenStack

2.1 Install Keystone

Reference: OpenStack Docs: Install and configure

1. Create the keystone database and grant access to it.

mysql -u root -p

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'guojie.com';

exit
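The same CREATE DATABASE and GRANT pair recurs for every service in this guide (glance, placement, nova, neutron, cinder). As a rough sketch, a small generator can print the statements for one service; `gen_db_sql` is a hypothetical name, and `IF NOT EXISTS` is an addition that is not in the original commands, included only so that a rerun does not fail.

```shell
#!/bin/sh
# gen_db_sql DB USER PASSWORD: print the CREATE DATABASE / GRANT statements for one service.
gen_db_sql() {
    db=$1
    user=$2
    pass=$3
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    # %% yields the literal '%' host wildcard used throughout this guide.
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
}

# Print the statements for Keystone; nothing is sent to the database here.
gen_db_sql keystone keystone 'guojie.com'
```

Piping the output into `mysql -u root -p` would apply it. Nova needs three calls (nova_api, nova, nova_cell0), all with the nova user.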

2. Install the packages.

yum -y install openstack-keystone httpd mod_wsgi

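3. Configure Keystone. The commands below back up the packaged file, strip comments and blank lines, then add the database connection and the token provider.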

cp /etc/keystone/keystone.conf{,.bak}

grep -Ev '^$|#' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf

sed -i '/^\[database\]/a connection = mysql+pymysql://keystone:guojie.com@controller/keystone' /etc/keystone/keystone.conf

sed -i '/^\[token\]/a provider = fernet' /etc/keystone/keystone.conf
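The `sed ... /a` commands above append a new line every time they run, so a second run leaves duplicate keys. One possible alternative, sketched below under the assumption that the file is a simple INI file with one `key = value` per line, is a helper that replaces the key when it exists and adds it otherwise. `ini_set` is a hypothetical helper, not a tool shipped with OpenStack.

```shell
#!/bin/sh
# ini_set FILE SECTION KEY VALUE: set KEY in [SECTION], replacing an existing entry
# or adding one; the section itself is appended if the file does not have it.
ini_set() {
    file=$1
    section=$2
    key=$3
    value=$4
    tmp=$(mktemp)
    awk -v sec="[$section]" -v key="$key" -v val="$value" '
        # Write the key once, at the end of the target section, if not yet written.
        function emit() {
            if (in_sec && !written) { print key " = " val; written = 1 }
        }
        /^\[.*\]$/ {
            emit()
            in_sec = ($0 == sec)
            if (in_sec) seen = 1
            print
            next
        }
        in_sec && $0 ~ ("^" key "[ \t]*=") {
            if (!written) { print key " = " val; written = 1 }
            next
        }
        { print }
        END {
            emit()
            if (!seen) { print sec; print key " = " val }
        }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the owner and mode of FILE
    rm -f "$tmp"
}

# Demonstration on a scratch copy shaped like the stripped keystone.conf.
demo=$(mktemp)
printf '[DEFAULT]\n[database]\n[token]\n' > "$demo"
ini_set "$demo" database connection 'mysql+pymysql://keystone:guojie.com@controller/keystone'
ini_set "$demo" token provider fernet
cat "$demo"
rm -f "$demo"
```

The same helper could stand in for the later `vi` edits of glance-api.conf, nova.conf, and neutron.conf.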

4. Populate the database, then confirm that the tables were created.

su -s /bin/sh -c "keystone-manage db_sync" keystone

mysql -ukeystone -pguojie.com -Dkeystone -e 'show tables;'

5. Initialize the Fernet key repositories.

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

6. Bootstrap the Identity service.

keystone-manage bootstrap --bootstrap-password guojie.com \
  --bootstrap-admin-url http://controller:5000/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

7. Configure the Apache HTTP server.

sed -i 's/^#ServerName www.example.com:80/ServerName controller:80/' /etc/httpd/conf/httpd.conf

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

8. Start the Apache HTTP service and enable it at boot.

systemctl enable httpd.service --now

9. Create the environment variable file for the admin user.

cat << EOF > ~/.admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=guojie.com

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF
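Every later `openstack` command depends on these variables being present in the current shell. A small sanity check, sketched here with the hypothetical name `missing_openrc_vars`, lists any that are unset or empty; the set of names checked is an assumption based on the file above.

```shell
#!/bin/sh
# missing_openrc_vars: print the name of every required OS_* variable that is unset or empty.
missing_openrc_vars() {
    for name in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
                OS_USERNAME OS_PASSWORD OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        # Indirect lookup of the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        [ -n "$value" ] || echo "$name"
    done
}

# Typical use after loading the file:
#   source ~/.admin-openrc
#   missing_openrc_vars        # prints nothing when everything is set
missing_openrc_vars
```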

10. Create the domain, projects, users, and roles in turn. python3-openstackclient must be installed first:

yum -y install python3-openstackclient

Load the environment variables:

source ~/.admin-openrc

Create the service project. The default domain already exists because keystone-manage bootstrap created it:

openstack domain create --description "An Example Domain" example

openstack project create --domain default --description "Service Project" service

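Note: the official guide also creates a regular project and user (myproject/myuser). This walkthrough skips that and manages everything as admin.

2.2 Install Glance

Reference: OpenStack Docs: Install and configure (Red Hat)

1. On the controller node, create the database, service credentials, and API endpoints.

Create the database: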

mysql -u root -p

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'guojie.com';

exit

Create the service credentials:

source ~/.admin-openrc

openstack user create --domain default --password guojie.com glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image" image

Create the Image service API endpoints:

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292
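Each service in this guide registers the same URL three times, once per interface (public, internal, admin). As a sketch, a loop can print the three commands from a single call; `gen_endpoints` is a hypothetical helper that only prints text and does not call the API.

```shell
#!/bin/sh
# gen_endpoints REGION SERVICE_TYPE URL: print one "openstack endpoint create" command
# per interface. The URL is single-quoted so that Cinder-style URLs containing
# %(project_id)s survive the shell unchanged.
gen_endpoints() {
    region=$1
    svc=$2
    url=$3
    for iface in public internal admin; do
        printf "openstack endpoint create --region %s %s %s '%s'\n" \
            "$region" "$svc" "$iface" "$url"
    done
}

gen_endpoints RegionOne image http://controller:9292
```

Piping the output into `sh` after `source ~/.admin-openrc` would run the commands.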

2. Install the package.

yum -y install openstack-glance

3. Configure Glance. Back up the packaged file, strip comments and blank lines, then set the options below:

cp /etc/glance/glance-api.conf{,.bak}

grep -Ev '^#|^$' /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf

vi /etc/glance/glance-api.conf

[DEFAULT]

log_file = /var/log/glance/glance-api.log

[database]

connection = mysql+pymysql://glance:guojie.com@controller/glance

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = guojie.com

[paste_deploy]

flavor = keystone

Full configuration:

[root@controller ~]# cat /etc/glance/glance-api.conf

[DEFAULT]

log_file = /var/log/glance/glance-api.log

[cinder]

[cors]

[database]

connection = mysql+pymysql://glance:guojie.com@controller/glance

[file]

[glance.store.http.store]

[glance.store.rbd.store]

[glance.store.sheepdog.store]

[glance.store.swift.store]

[glance.store.vmware_datastore.store]

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[image_format]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = guojie.com

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

4. Populate the database:

su -s /bin/sh -c "glance-manage db_sync" glance

5. Start the service and enable it at boot.

systemctl enable openstack-glance-api.service --now

Check that port 9292 is listening:

[root@controller ~]# ss -ntl|grep 9292

LISTEN 0 4096 0.0.0.0:9292 0.0.0.0:*
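The same port check is useful for the other services (8778 for Placement, 8774 for Nova, 9696 for Neutron, 8776 for Cinder). A minimal sketch that reads `ss -ntl` output on standard input follows; `port_listening` is a hypothetical helper, and it assumes the local address is the fourth column, as in the output shown above.

```shell
#!/bin/sh
# port_listening PORT: read "ss -ntl" output on stdin; succeed if a socket listens on PORT.
port_listening() {
    awk -v port="$1" '
        # Column 4 is the local address; require ":PORT" at its very end.
        $1 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}

# Sample line copied from the output above; on a real node run: ss -ntl | port_listening 9292
printf 'LISTEN 0 4096 0.0.0.0:9292 0.0.0.0:*\n' | port_listening 9292 \
    && echo "port 9292 is listening"
```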

6. Verify.

Download an image:

source ~/.admin-openrc

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

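*Note*

If your environment runs on the Kunpeng (aarch64) architecture, download the aarch64 version of the image instead; cirros-0.5.2-aarch64-disk.img has been tested.

Upload the image to the Image service: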

openstack image create --disk-format qcow2 --container-format bare --file cirros-0.4.0-x86_64-disk.img --public cirros

Confirm the upload and check the image attributes:

openstack image list

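2.3 Install Placement

Reference: OpenStack Docs: Install and configure Placement for Red Hat Enterprise Linux and CentOS

1. On the controller node, create the database, service credentials, and API endpoints.

Create the database:

Connect to the database as root, create the placement database, and grant access to it.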

mysql -u root -p

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'guojie.com';

exit

source ~/.admin-openrc

Run the following commands to create the placement user, add the admin role to it, and register the Placement API service:

openstack user create --domain default --password guojie.com placement

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

Create the Placement service API endpoints:

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

2. Install and configure.

Install the package:

yum -y install openstack-placement-api

Configure Placement:

Edit the /etc/placement/placement.conf file:

In the [placement_database] section, configure database access.

In the [api] and [keystone_authtoken] sections, configure Identity service access.

cp /etc/placement/placement.conf{,.bak}

grep -Ev "^#|^$" /etc/placement/placement.conf.bak > /etc/placement/placement.conf

vi /etc/placement/placement.conf

[placement_database]

# ...

connection = mysql+pymysql://placement:guojie.com@controller/placement

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = guojie.com

Full configuration:

[root@controller ~]# cat /etc/placement/placement.conf

[DEFAULT]

[api]

auth_strategy = keystone

[cors]

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = guojie.com

[oslo_policy]

[placement]

[placement_database]

connection = mysql+pymysql://placement:guojie.com@controller/placement

[profiler]

Populate the database:

su -s /bin/sh -c "placement-manage db sync" placement

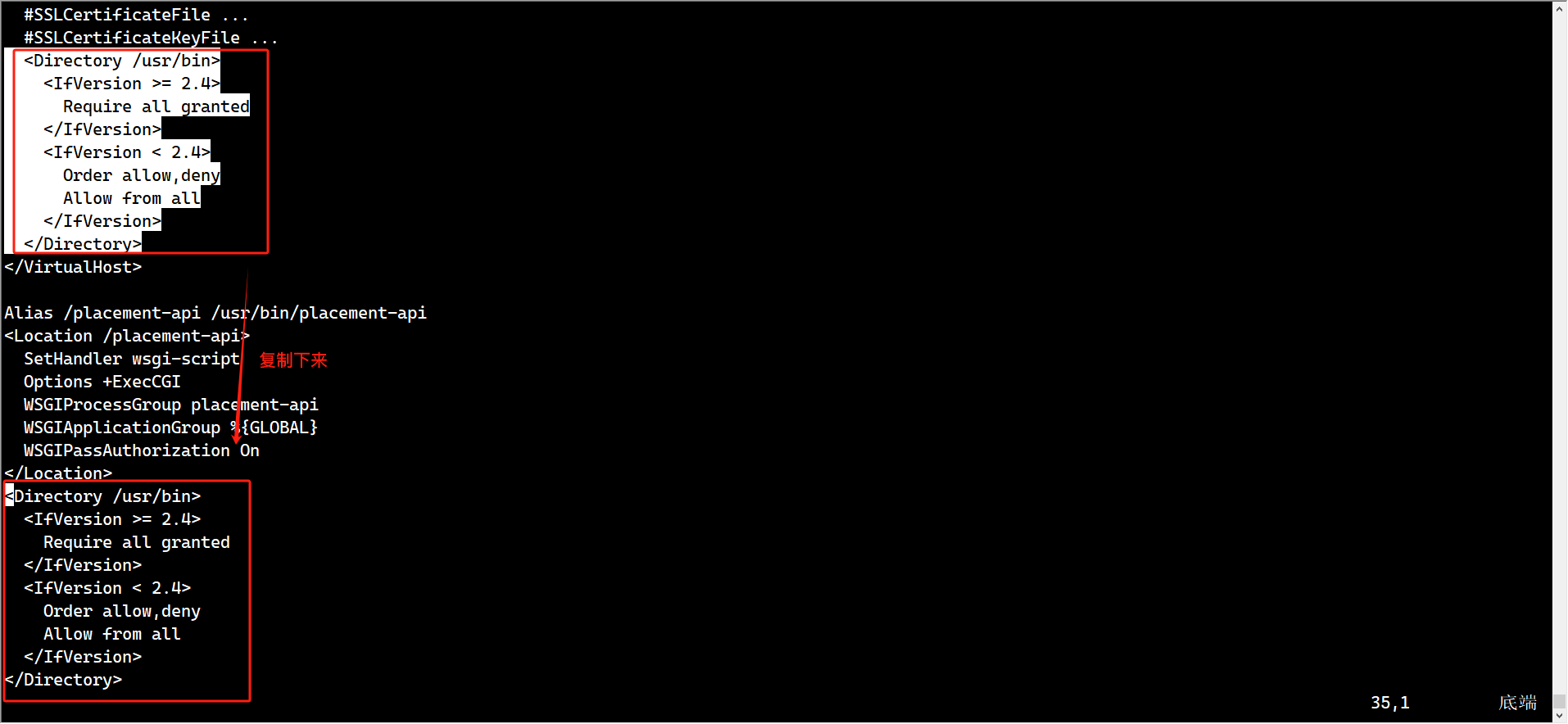

vi /etc/httpd/conf.d/00-placement-api.conf

Full configuration:

[root@controller ~]# cat /etc/httpd/conf.d/00-placement-api.conf

Listen 8778

<VirtualHost *:8778>

WSGIProcessGroup placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

WSGIDaemonProcess placement-api processes=3 threads=1 user=placement group=placement

WSGIScriptAlias / /usr/bin/placement-api

<IfVersion >= 2.4>

ErrorLogFormat "%M"

</IfVersion>

ErrorLog /var/log/placement/placement-api.log

#SSLEngine On

#SSLCertificateFile ...

#SSLCertificateKeyFile ...

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

</VirtualHost>

Alias /placement-api /usr/bin/placement-api

<Location /placement-api>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

Restart the httpd service:

systemctl restart httpd

3. Verify.

Run the following commands to perform the status check:

source ~/.admin-openrc

placement-status upgrade check

2.4 Install Nova

Reference: OpenStack Docs: Install and configure controller node for Red Hat Enterprise Linux and CentOS

1. On the controller node, create the databases, service credentials, and API endpoints.

Create the databases:

mysql -u root -p

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'guojie.com';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'guojie.com';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'guojie.com';

exit

source ~/.admin-openrc

Create the Nova service credentials:

openstack user create --domain default --password guojie.com nova

openstack role add --project service --user nova admin

openstack service create --name nova --description "OpenStack Compute" compute

Create the Nova API endpoints:

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

2. Install the packages.

yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler

3. Configure Nova.

cp /etc/nova/nova.conf{,.bak}

grep -Ev '^#|^$' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

vi /etc/nova/nova.conf

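There are many options to set; follow the configuration steps in the official guide. The full configuration is shown below (remember to change the component passwords and my_ip):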

[root@controller ~]# cat /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:guojie.com@controller:5672/

my_ip = 172.173.10.110

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

log_file = /var/log/nova/nova.log

[api]

auth_strategy = keystone

[api_database]

connection = mysql+pymysql://nova:guojie.com@controller/nova_api

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

connection = mysql+pymysql://nova:guojie.com@controller/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = guojie.com

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = guojie.com

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

Populate the databases:

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

Verify that cell0 and cell1 are registered correctly:

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

Start the services and enable them at boot:

systemctl enable --now \
  openstack-nova-api.service \
  openstack-nova-scheduler.service \
  openstack-nova-conductor.service \
  openstack-nova-novncproxy.service

Create a small script to make restarting the Nova services easier:

cat <<EOF> nova-restart.sh

#!/bin/bash

systemctl restart openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

EOF

Test it:

sh nova-restart.sh

Check the log:

tail -100f /var/log/nova/nova.log

2.5 Deploy Nova on the compute node

Reference: OpenStack Docs: Install and configure a compute node for Red Hat Enterprise Linux and CentOS

1. Install the package:

yum -y install openstack-nova-compute

Configure:

cp /etc/nova/nova.conf{,.bak}

grep -Ev '^#|^$' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

vi /etc/nova/nova.conf

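There are many options to set; follow the configuration steps in the official guide. The full configuration is shown below (remember to change the component passwords and my_ip):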

[root@compute01 ~]# cat /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:guojie.com@controller:5672/

compute_driver=libvirt.LibvirtDriver

my_ip = 172.173.10.111

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

log_file = /var/log/nova/nova-compute.log

block_device_allocate_retries = 180

[api]

auth_strategy = keystone

[api_database]

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = guojie.com

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = guojie.com

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://172.173.10.110:6080/vnc_auto.html

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

2. Check whether the node supports hardware acceleration for virtual machines (x86 architecture):

grep -Ec '(vmx|svm)' /proc/cpuinfo

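If the command returns 0, the node does not support hardware acceleration, and libvirt must be configured to use QEMU instead of KVM:

vi /etc/nova/nova.conf # on the compute node

[libvirt]

virt_type = qemu

If it returns 1 or greater, hardware acceleration is supported and no extra configuration is needed.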
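The decision above can be captured in a few lines. The sketch below wraps the same `grep -Ec '(vmx|svm)'` check; `pick_virt_type` is a hypothetical helper, and like the check it wraps it only applies to x86 hosts, because aarch64 CPUs do not report vmx or svm flags.

```shell
#!/bin/sh
# pick_virt_type [CPUINFO]: print "kvm" if the CPU flags include vmx or svm, else "qemu".
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # grep -c prints 0 and exits non-zero when nothing matches, hence "|| true".
    count=$(grep -Ec '(vmx|svm)' "$cpuinfo" 2>/dev/null || true)
    if [ "${count:-0}" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# Prints the value that would go into "virt_type" under [libvirt] on this host.
pick_virt_type
```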

Start the services:

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service # wait for this service to finish starting before starting the next one

systemctl status libvirtd.service

systemctl start openstack-nova-compute.service
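Instead of waiting by hand for libvirtd to come up, the check can be polled. A minimal sketch follows; `retry_until` is a hypothetical helper, and the commented usage line assumes `systemctl is-active --quiet`, which exits 0 once the unit is active.

```shell
#!/bin/sh
# retry_until ATTEMPTS COMMAND [ARGS...]: run COMMAND up to ATTEMPTS times, one second
# apart, and stop as soon as it succeeds. Returns 1 if every attempt fails.
retry_until() {
    tries=$1
    shift
    while [ "$tries" -gt 0 ]; do
        "$@" && return 0
        tries=$((tries - 1))
        # Pause only when another attempt is still to come.
        [ "$tries" -gt 0 ] && sleep 1
    done
    return 1
}

# On the compute node (not executed here):
#   retry_until 30 systemctl is-active --quiet libvirtd.service \
#       && systemctl start openstack-nova-compute.service
retry_until 3 true && echo "command succeeded"
```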

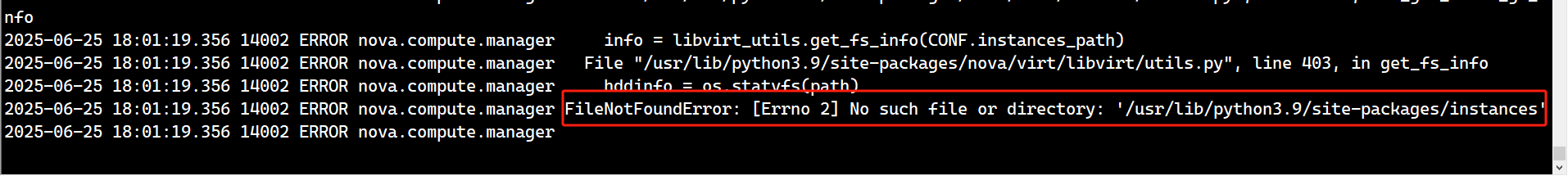

The log shows an error:

tail -100f /var/log/nova/nova-compute.log

Create the missing directory manually, then restart the service:

mkdir /usr/lib/python3.9/site-packages/instances

chown -R nova:nova /usr/lib/python3.9/site-packages/instances

systemctl restart openstack-nova-compute.service

On the controller node, confirm that the compute host is visible:

openstack compute service list --service nova-compute

Add the compute node to the cell:

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Verify:

openstack compute service list

2.6 Deploy Neutron

Reference: OpenStack Docs: Install and configure controller node

1. Create the database, service credentials, and API endpoints.

Create the database:

mysql -u root -p

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'guojie.com';

exit;

Create the Neutron service credentials:

openstack user create --domain default --password guojie.com neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

Create the Neutron service API endpoints:

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696

2. Install the packages:

yum -y install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables ipset

3. Configure Neutron.

Configure the main configuration file:

cp /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

vi /etc/neutron/neutron.conf

Full configuration (remember to change the component passwords):

[root@controller ~]# cat /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:guojie.com@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[cors]

[database]

connection = mysql+pymysql://neutron:guojie.com@controller/neutron

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = guojie.com

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[privsep]

[ssl]

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = nova

password = guojie.com

Configure the ML2 plug-in:

cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

vi /etc/neutron/plugins/ml2/ml2_conf.ini

Full configuration (essentially fixed; it can be copied as-is):

[root@controller ~]# cat /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = extnal

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

Create the /etc/neutron/plugin.ini symbolic link:

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Configure the Linux bridge agent:

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Full configuration:

[root@controller ~]# cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

physical_interface_mappings = extnal:ens192

[vxlan]

enable_vxlan = true

local_ip = 172.173.10.110

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

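Replace ens192 with the physical interface that carries external traffic.

Replace 172.173.10.110 with the controller node's management IP address.

Make sure the Linux kernel supports network bridge filters by setting all of the following sysctl values to 1: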

cat <<EOF>> /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

EOF

modprobe br_netfilter

sysctl -p
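To confirm that the settings took effect, the two switches can be read back from /proc. This is a sketch only; `bridge_nf_enabled` is a hypothetical helper, and the files it reads exist only after the br_netfilter module has been loaded.

```shell
#!/bin/sh
# bridge_nf_enabled [DIR]: succeed only if both bridge netfilter switches in DIR read 1.
bridge_nf_enabled() {
    root=${1:-/proc/sys/net/bridge}
    for knob in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        # A missing file (module not loaded) counts as "not enabled".
        [ "$(cat "$root/$knob" 2>/dev/null)" = "1" ] || return 1
    done
}

if bridge_nf_enabled; then
    echo "bridge netfilter is enabled"
else
    echo "bridge netfilter is NOT enabled (is br_netfilter loaded?)"
fi
```

Note that `modprobe br_netfilter` does not persist across reboots on its own, so the check is worth repeating after a restart.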

Configure the Layer-3 agent:

cp /etc/neutron/l3_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini

vi /etc/neutron/l3_agent.ini

Full configuration (only one line added):

[root@controller ~]# cat /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = linuxbridge

Configure the DHCP agent:

cp /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

vi /etc/neutron/dhcp_agent.ini

Full configuration (three lines added):

[root@controller ~]# cat /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Configure the metadata agent:

cp /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini

vi /etc/neutron/metadata_agent.ini

Full configuration:

[root@controller ~]# cat /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = guojie

[cache]

Replace guojie with a suitable metadata proxy secret. It must match the metadata_proxy_shared_secret option in the Nova configuration in the next step.
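A random value is a better shared secret than a guessable word. One way to produce it, sketched here without any OpenStack tooling, is to hex-encode bytes from /dev/urandom; `gen_secret` is a hypothetical helper name.

```shell
#!/bin/sh
# gen_secret [BYTES]: print BYTES random bytes from /dev/urandom as lowercase hex.
# The default of 16 bytes yields a 32-character string.
gen_secret() {
    head -c "${1:-16}" /dev/urandom | od -An -tx1 | tr -d ' \n'
    echo
}

# Generate once and use the same value in metadata_agent.ini and in nova.conf.
gen_secret
```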

4. Configure Nova: in /etc/nova/nova.conf, add the following to the [neutron] section:

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = neutron

password = guojie.com

service_metadata_proxy = true

metadata_proxy_shared_secret = guojie

5. Populate the database:

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

6. Restart the Compute API service:

systemctl restart openstack-nova-api.service

7. Enable the services at boot.

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service

Wrap the restart in a script for convenience, then run it to start the services:

cat <<EOF> neutron-restart.sh

#!/bin/bash

systemctl restart neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service

EOF

sh neutron-restart.sh

2.7 Deploy Neutron on the compute node

Reference: OpenStack Docs: Install and configure compute node

1. Install the packages.

yum -y install openstack-neutron-linuxbridge ebtables ipset

2. Configure Neutron.

Configure the main configuration file:

cp /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

vi /etc/neutron/neutron.conf

Full configuration:

[root@compute01 ~]# cat /etc/neutron/neutron.conf

[DEFAULT]

transport_url = rabbit://openstack:guojie.com@controller

auth_strategy = keystone

[cors]

[database]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = guojie.com

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[privsep]

[ssl]

Configure the Linux bridge agent:

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Full configuration:

[root@compute01 ~]# cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

physical_interface_mappings = extnal:ens192

[vxlan]

enable_vxlan = true

local_ip = 172.173.10.111

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

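Remember to set the interface to the one that carries external traffic and local_ip to this node's management address.

Make sure the Linux kernel supports network bridge filters by setting all of the following sysctl values to 1: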

cat <<EOF>> /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

EOF

modprobe br_netfilter

sysctl -p

3. Configure Nova: add the following to the [neutron] section:

vi /etc/nova/nova.conf

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = neutron

password = guojie.com

Restart the Nova compute service:

systemctl restart openstack-nova-compute.service

Start the Linux bridge agent and enable it at boot:

systemctl enable neutron-linuxbridge-agent.service --now

Verify on the controller node:

openstack network agent list

2.8 Deploy the Dashboard (horizon)

Reference: OpenStack Docs: Install and configure for Red Hat Enterprise Linux and CentOS

1. Install the package.

yum -y install openstack-dashboard

2. Configure.

cp /etc/openstack-dashboard/local_settings{,.bak}

vi /etc/openstack-dashboard/local_settings

Change the following settings, adding any that are not already present (this is only the part that needs changing, not the full file):

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ]

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    }
}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

WEBROOT = '/dashboard'

POLICY_FILES_PATH = "/etc/openstack-dashboard"

OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 3,
}

TIME_ZONE = "Asia/Shanghai"

After configuring, restart httpd and memcached:

systemctl restart httpd.service memcached.service

3. Verify. Open a browser, go to http://HOSTIP/dashboard/, and log in to horizon.

*Note*

Replace HOSTIP with the controller node's management-plane IP address.

III. Testing

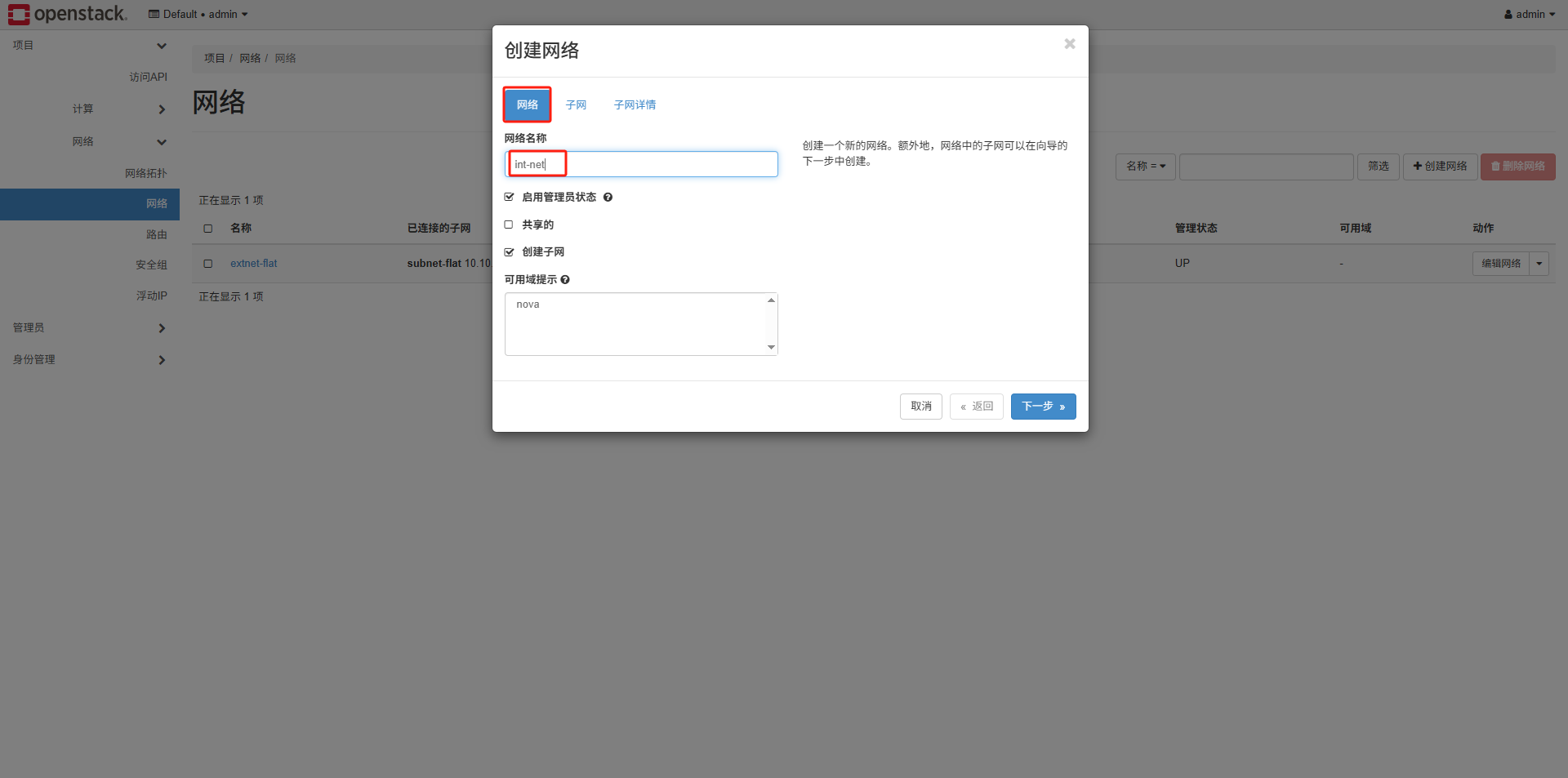

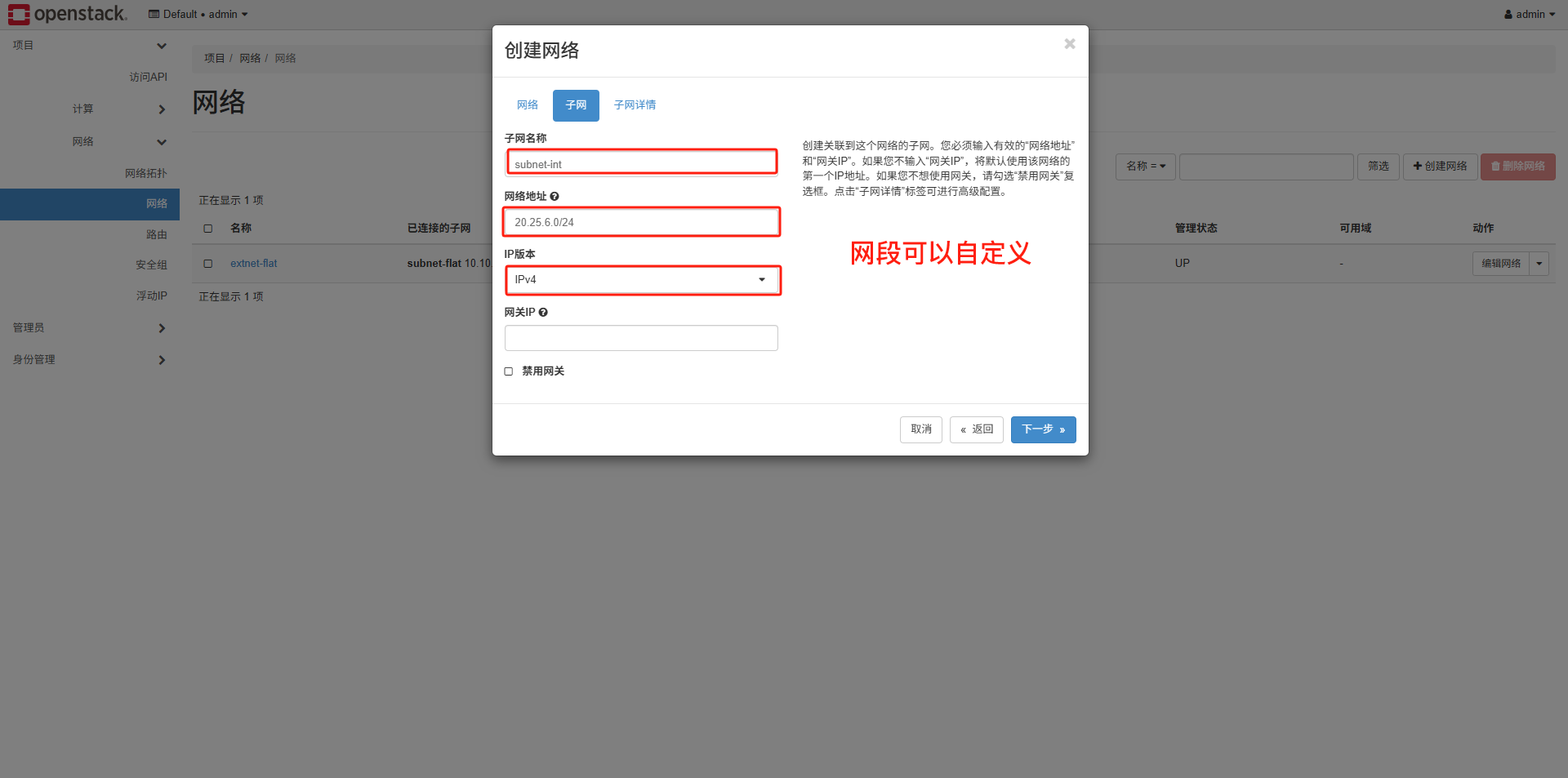

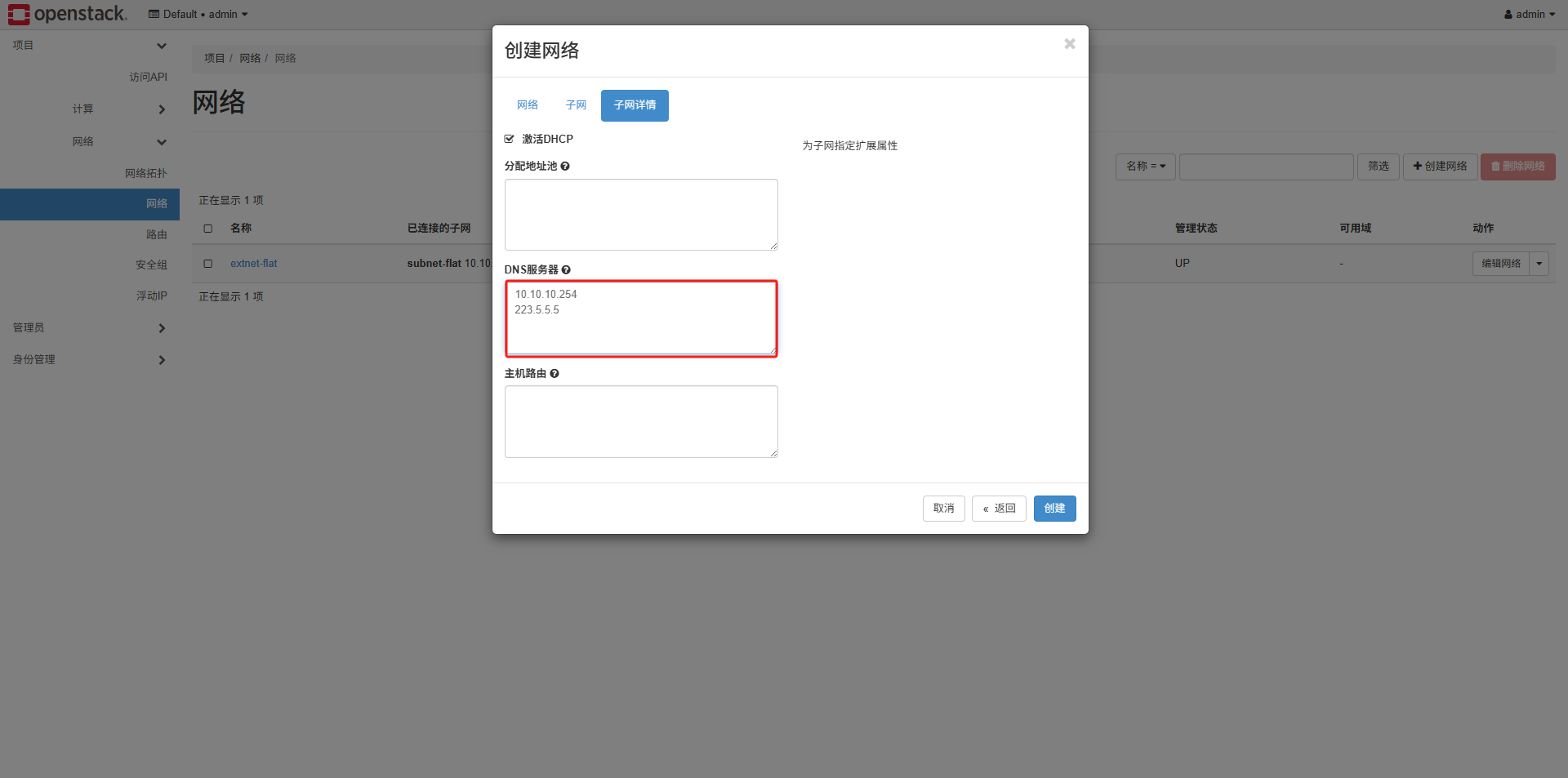

3.1 Create the networks

1. Create the bridged (external) network.

2. Create the internal network.

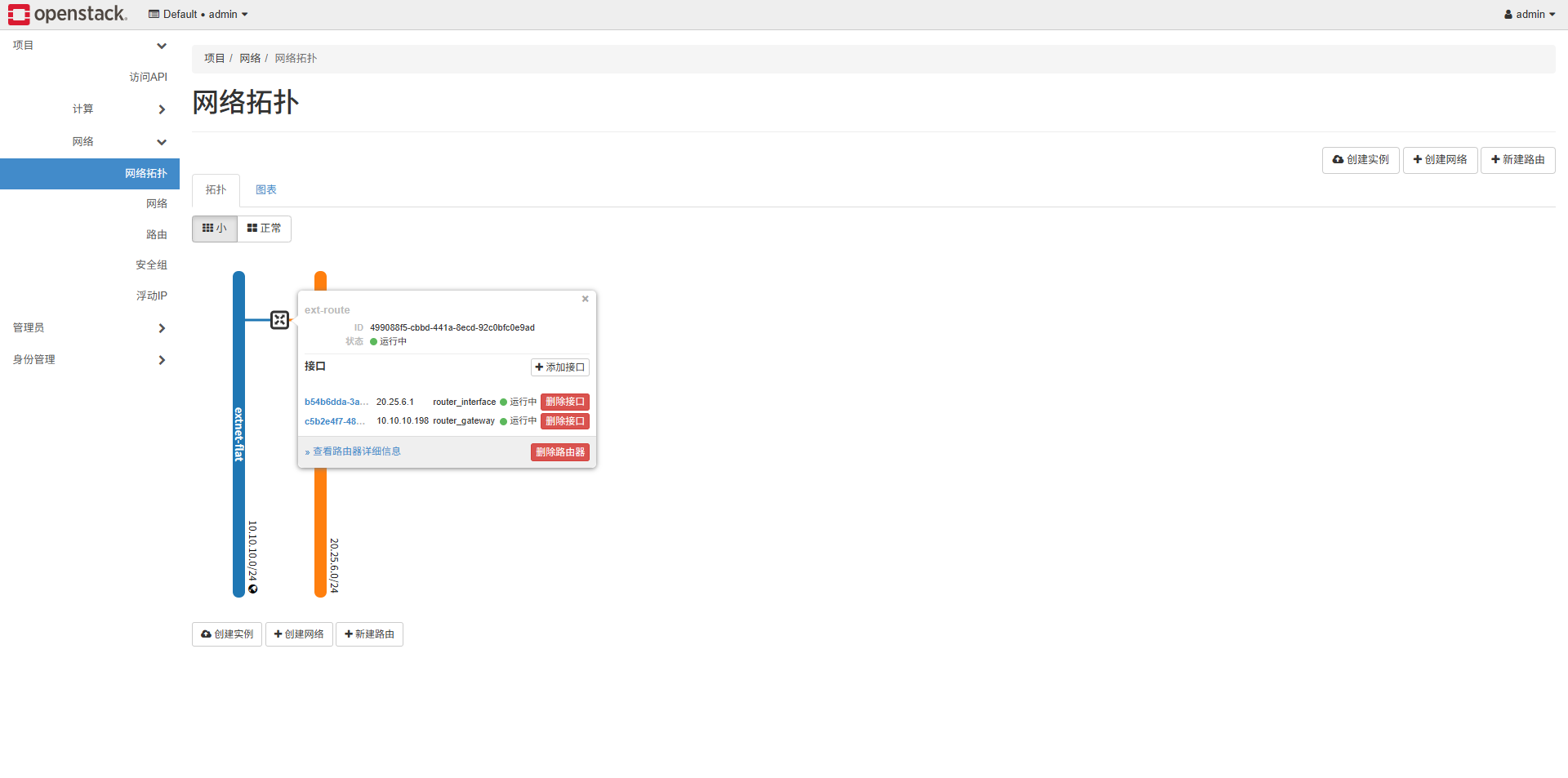

3.2 Create the router

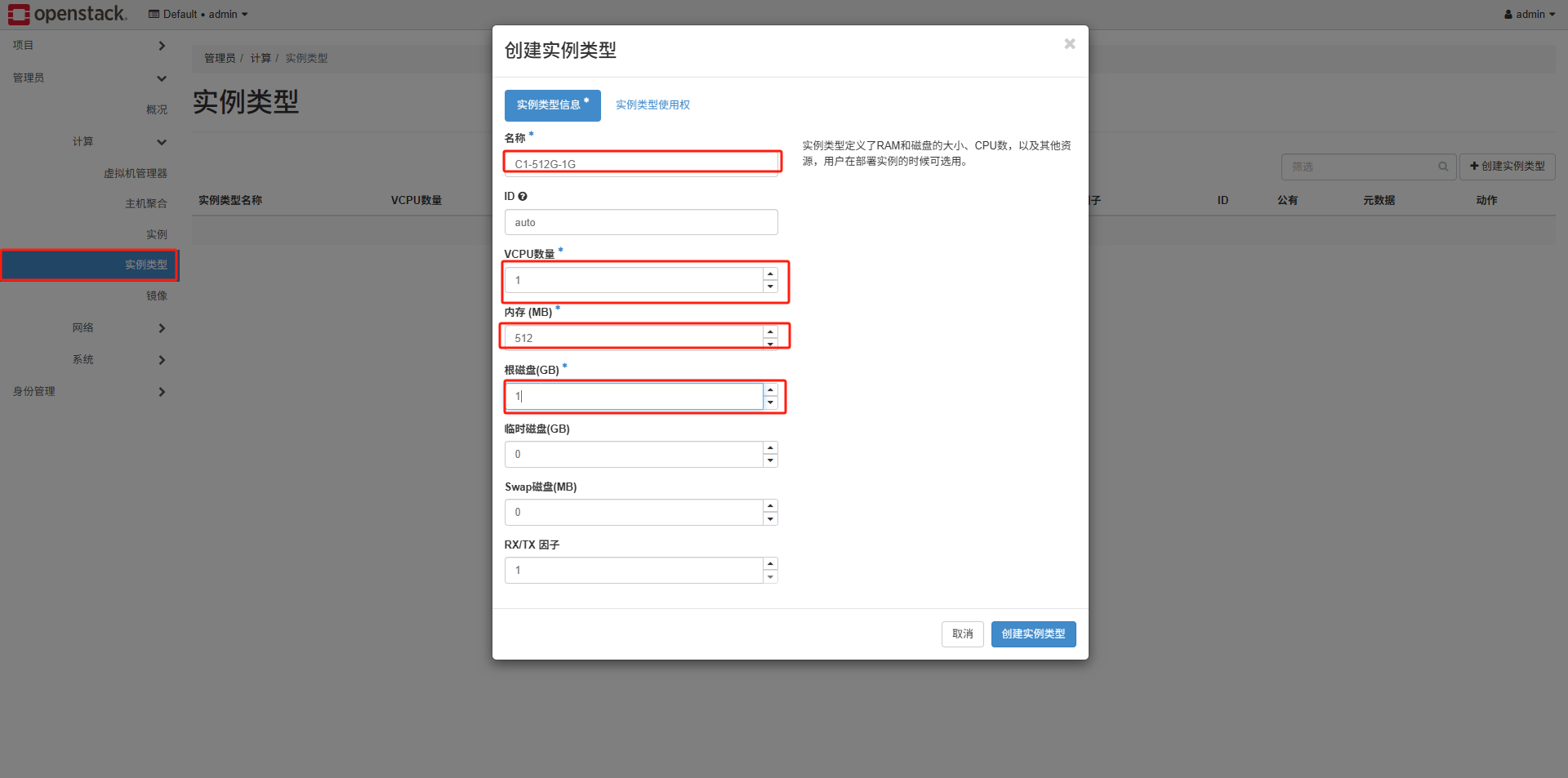
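The network and router steps in 3.1 and 3.2 are performed in the dashboard, but they could also be scripted with the openstack CLI. The sketch below is an assumption-laden outline, not a record of what was actually created: only int-net and the extnal physical network label come from this guide, while ext-net, ext-subnet, int-subnet, demo-router, and both subnet ranges are hypothetical placeholders. It prints the commands by default and runs them only when DRY_RUN=0.

```shell
#!/bin/sh
# run: print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

create_networks() {
    # External flat network on the "extnal" label defined in ml2_conf.ini.
    run openstack network create --external --provider-network-type flat \
        --provider-physical-network extnal ext-net
    # Hypothetical range; use the real addressing of the ens192 network.
    run openstack subnet create --network ext-net --subnet-range 10.10.10.0/24 \
        --no-dhcp ext-subnet
    # Internal tenant network; its type follows tenant_network_types (vxlan).
    run openstack network create int-net
    run openstack subnet create --network int-net --subnet-range 192.168.100.0/24 int-subnet
    # Router with its gateway on the external network and one leg on the internal subnet.
    run openstack router create demo-router
    run openstack router set --external-gateway ext-net demo-router
    run openstack router add subnet demo-router int-subnet
}

create_networks
```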

3.3 Create a flavor

3.4 Create an instance

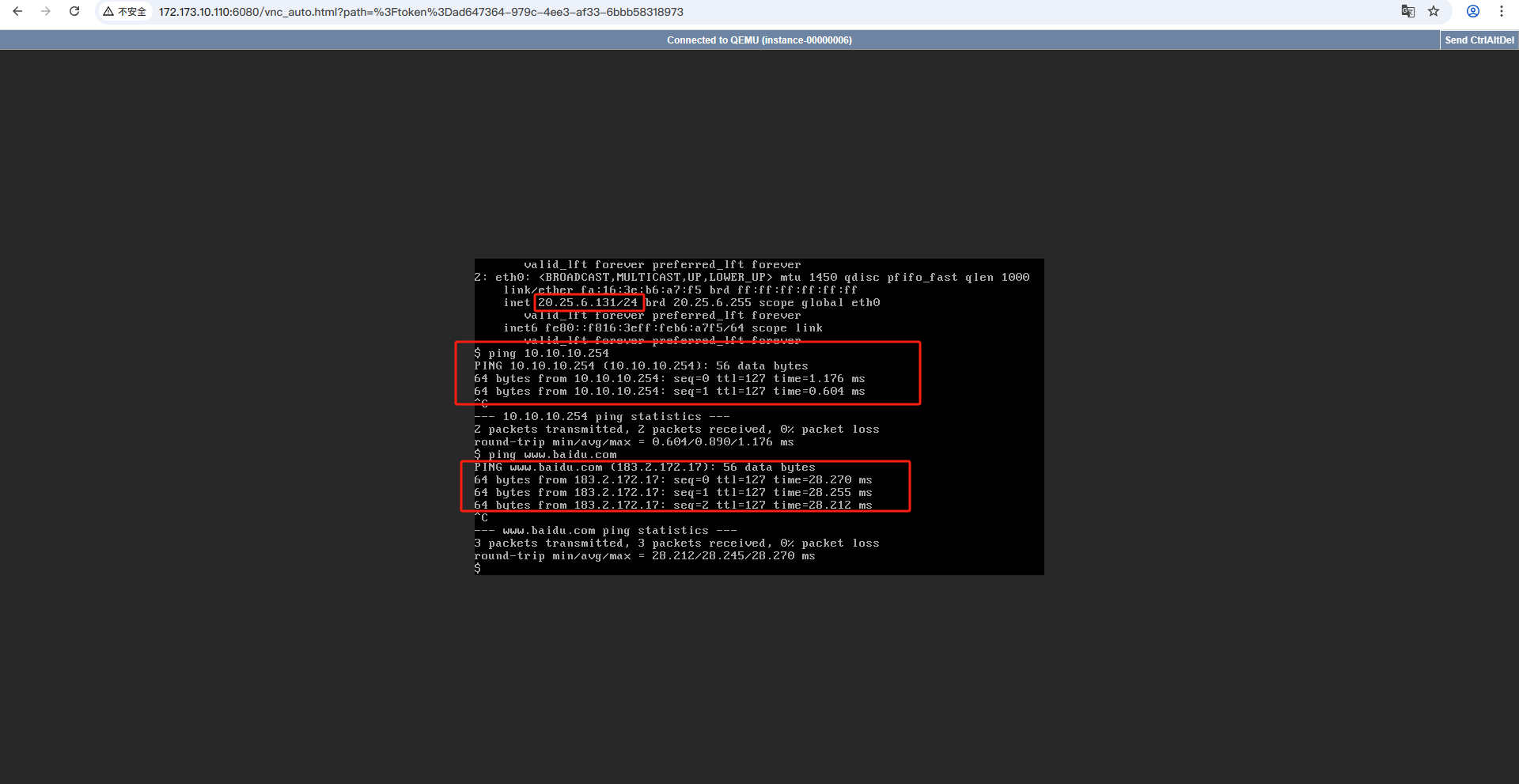

Create the instance, choose the newly created int-net as its network, then boot it and check that networking works.

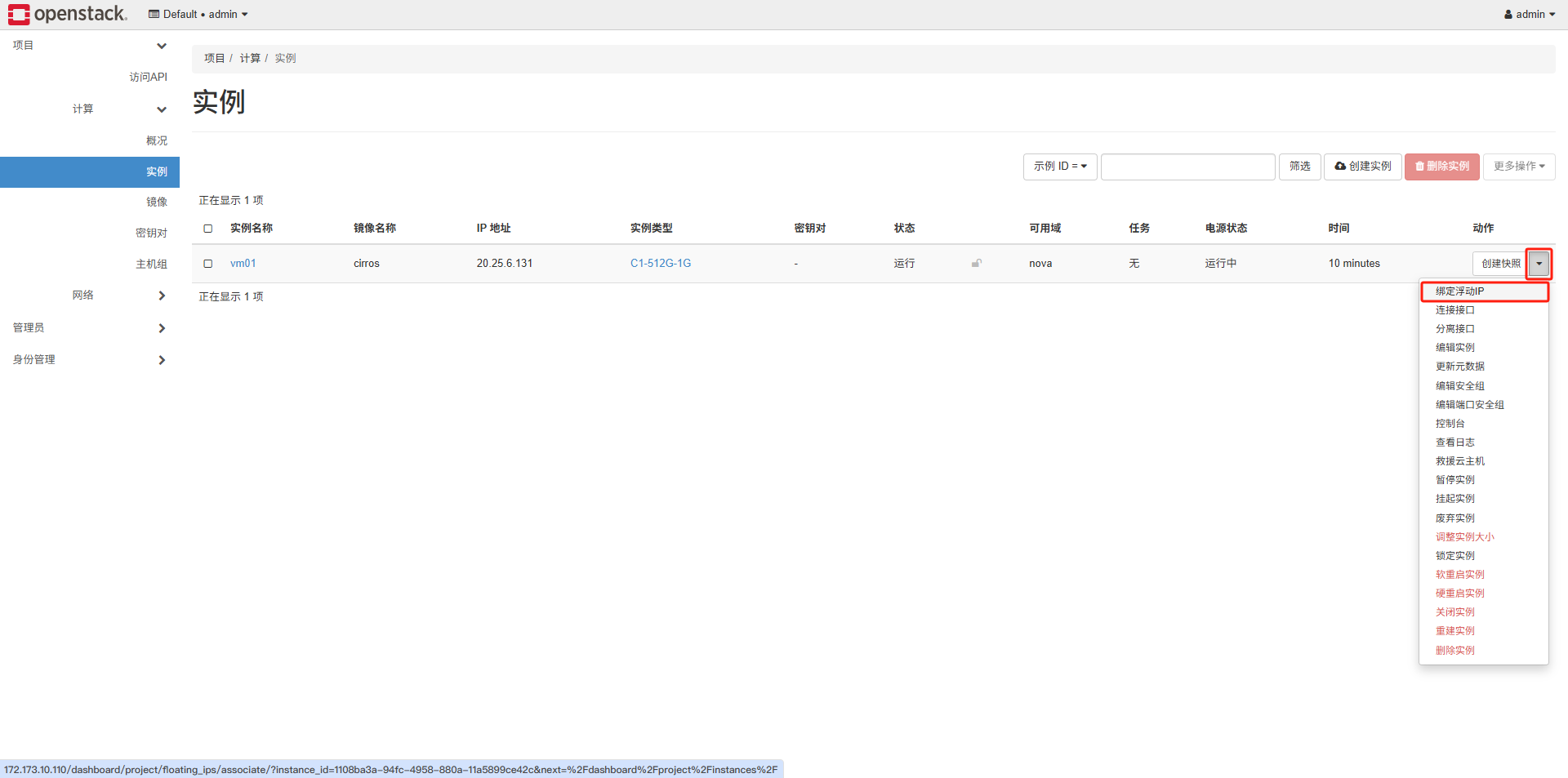

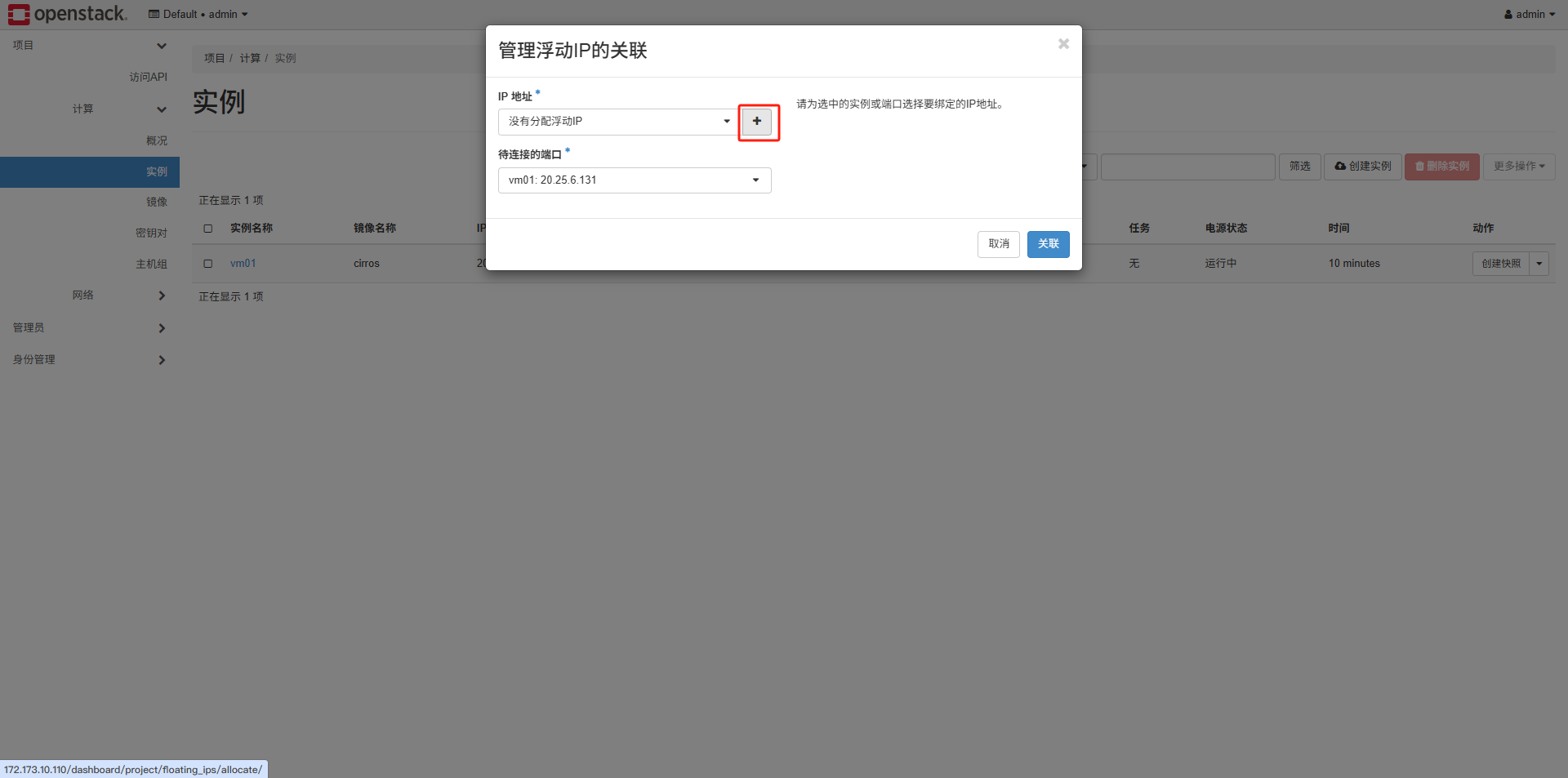

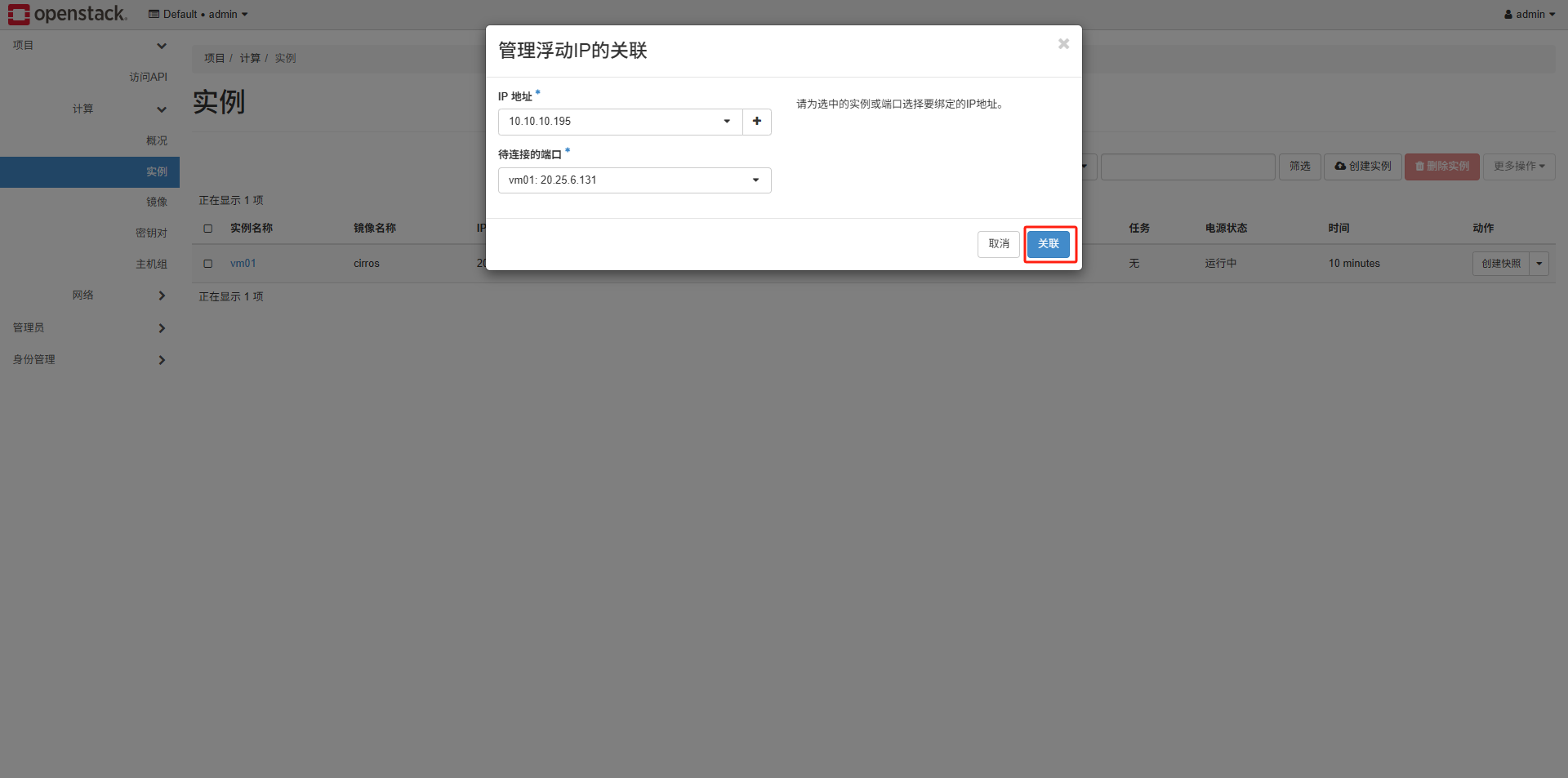

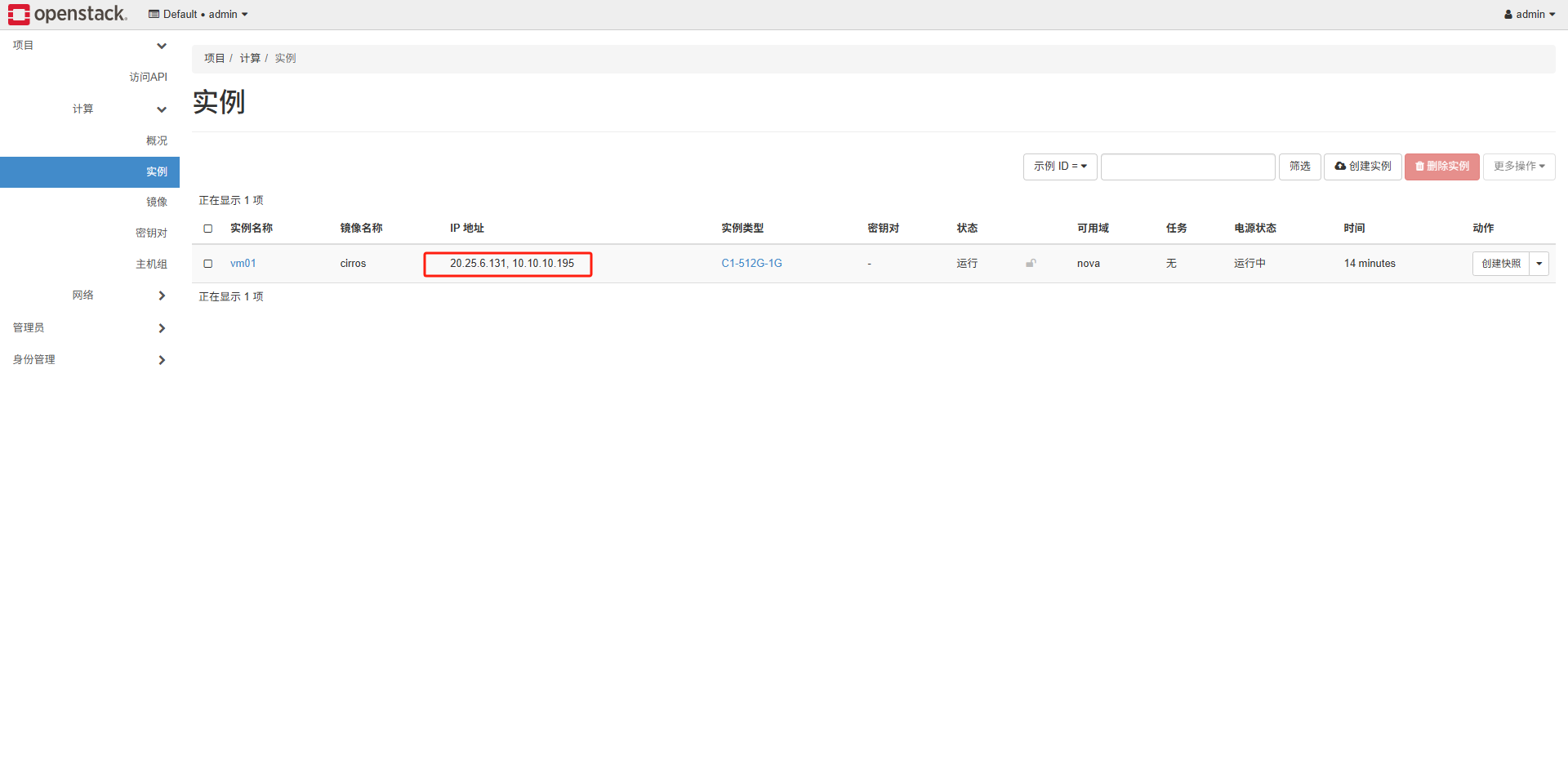

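3.5 Floating IP

With this setup alone, the external network cannot reach the internal instances; create a floating IP and associate it with the instance:

Then allow ping (ICMP) and SSH in the security group: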

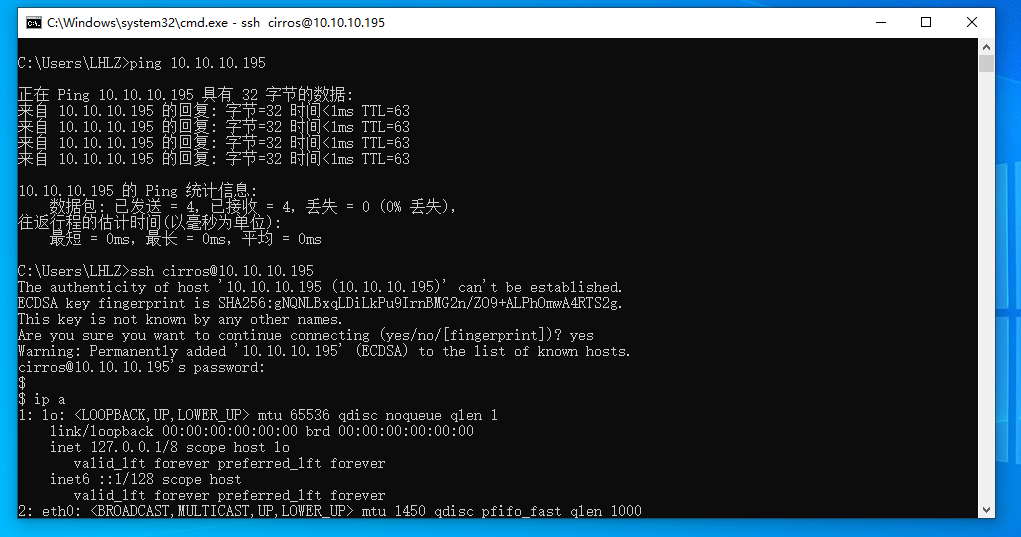

The internal instance can then be reached through its floating IP:
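For reference, the floating IP and security group steps have CLI equivalents. The outline below is a sketch under assumptions: the server name vm1, the address 10.10.10.50, and the external network name ext-net are hypothetical, and the group name default may be ambiguous for an admin user who can see several projects. Commands are printed unless DRY_RUN=0.

```shell
#!/bin/sh
# run: print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# expose_instance SERVER FLOATING_IP: open ICMP and SSH, then attach the floating IP.
expose_instance() {
    server=$1
    fip=$2
    run openstack security group rule create --protocol icmp default
    run openstack security group rule create --protocol tcp --dst-port 22 default
    run openstack server add floating ip "$server" "$fip"
}

# Allocate an address from the external network first, then attach it.
run openstack floating ip create ext-net
expose_instance vm1 10.10.10.50
```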

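IV. Installing Cinder

4.1 Deploy Cinder on the controller node

Reference: OpenStack Docs: Install and configure controller node

1. Create the database, service credentials, and API endpoints.

Create the database: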

mysql -u root -p

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'guojie.com';

exit;

Create the Cinder service credentials:

source ~/.admin-openrc

openstack user create --domain default --password guojie.com cinder

openstack role add --project service --user cinder admin

openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

Create the Block Storage service API endpoints:

openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 public http://controller:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 internal http://controller:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 admin http://controller:8776/v3/%\(project_id\)s

2. Install the packages:

yum -y install openstack-cinder-api openstack-cinder-scheduler

Configure Cinder:

cp /etc/cinder/cinder.conf{,.bak}

grep -Ev '^$|#' /etc/cinder/cinder.conf.bak > /etc/cinder/cinder.conf

vi /etc/cinder/cinder.conf

Full configuration:

[root@controller ~]# cat /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:guojie.com@controller

auth_strategy = keystone

my_ip = 172.173.10.110

[barbican]

[cors]

[database]

connection = mysql+pymysql://cinder:guojie.com@controller/cinder

[healthcheck]

[key_manager]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = guojie.com

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[privsep]

[profiler]

[sample_castellan_source]

[sample_remote_file_source]

[ssl]

[vault]

3. Populate the database:

su -s /bin/sh -c "cinder-manage db sync" cinder

4. Configure Nova:

Add the following under [cinder]:

vi /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

5. Restart the Compute API service.

systemctl restart openstack-nova-api.service

6. Start the Cinder services and enable them at boot.

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service --now

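4.2 Deploy Cinder on the storage node

Here the service is installed on the compute01 compute node; you can also dedicate a separate node to it.

Reference: OpenStack Docs: Install and configure a storage node

The disk nvme0n2 is used as the example:

1. Install the packages.

These packages are usually preinstalled: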

yum -y install lvm2 device-mapper-persistent-data

systemctl enable lvm2-lvmetad.service --now

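2. Prepare the storage device. The following is only an example:

Note that the device here is named nvme0n2; in most environments it is more likely to be /dev/sdb.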

pvcreate /dev/nvme0n2

vgcreate cinder-volumes /dev/nvme0n2

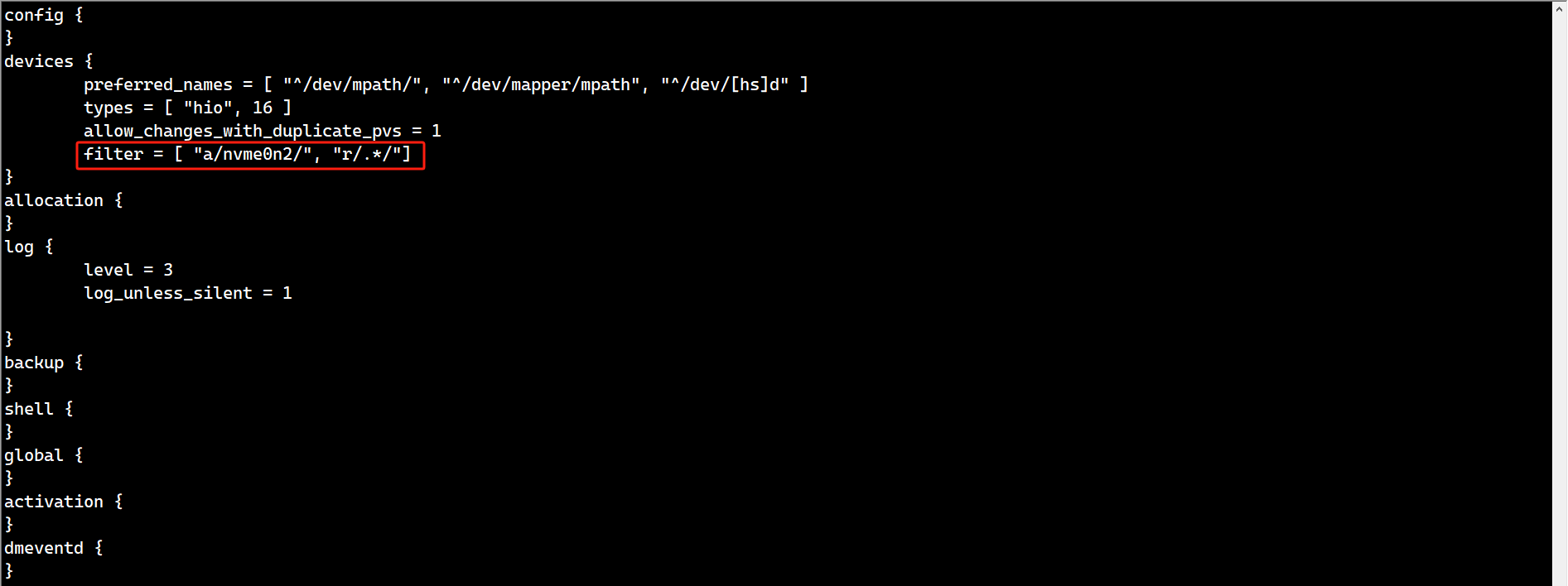

3. Configure LVM so that only /dev/nvme0n2 is made available to instances and no other disk is used:

cp /etc/lvm/lvm.conf{,.bak}

grep -Ev '^$|#' /etc/lvm/lvm.conf.bak > /etc/lvm/lvm.conf

vi /etc/lvm/lvm.conf

filter = [ "a/nvme0n2/", "r/.*/" ]
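The filter is an ordered list: each `a/.../` entry accepts devices matching the pattern, and the final `r/.*/` rejects everything else, so the accepted name has to match the disk that actually holds the cinder-volumes volume group. A small sketch that builds the line from device names follows; `lvm_filter` is a hypothetical helper that only prints text.

```shell
#!/bin/sh
# lvm_filter DEVICE...: print an lvm.conf filter line that accepts the named devices
# and rejects every other device.
lvm_filter() {
    printf 'filter = ['
    for dev in "$@"; do
        printf ' "a/%s/",' "$dev"
    done
    printf ' "r/.*/" ]\n'
}

lvm_filter nvme0n2
```

If the operating system disk also uses LVM, it would need to be listed as well, for example `lvm_filter sda nvme0n2`, where sda is a placeholder for that disk.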

4. Install the following packages:

yum -y install openstack-cinder targetcli python-keystone

Configure Cinder:

cp /etc/cinder/cinder.conf{,.bak}

grep -Ev '^$|#' /etc/cinder/cinder.conf.bak > /etc/cinder/cinder.conf

vi /etc/cinder/cinder.conf

Full configuration:

[root@compute01 ~]# cat /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:guojie.com@controller

auth_strategy = keystone

my_ip = 172.173.10.111

enabled_backends = lvm

glance_api_servers = http://controller:9292

[barbican]

[cors]

[database]

connection = mysql+pymysql://cinder:guojie.com@controller/cinder

[healthcheck]

[key_manager]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = guojie.com

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[privsep]

[profiler]

[sample_castellan_source]

[sample_remote_file_source]

[ssl]

[vault]

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = lioadm

5. Start the services and enable them at boot.

systemctl enable openstack-cinder-volume.service target.service iscsid.service --now

Verify:

openstack volume service list

After creating a volume, attach it to an instance:

Formatting and mounting both work normally.
