Installing OpenStack (Train) on CentOS 7

Installation reference: https://docs.openstack.org/zh_CN/install-guide/

| Role | Specs | IP |
|---|---|---|
| Controller node (controller) | CPU 4+, MEM 6G+, DISK 50G+ | 172.173.10.110 (management network), 10.1.1.10 (external network) |
| Compute node (compute) | CPU 4+, MEM 6G+, DISK 50G+ | 172.173.10.111 (management network), 10.1.1.11 (external network) |
| Storage node (cinder) | CPU 4+, MEM 6G+, DISK1 50G+, DISK2 50G+ | 172.173.10.112 (management network) |

I. Environment Initialization

1.1 Configure a static IP and disable NetworkManager

# Static IP configuration is omitted here

systemctl disable NetworkManager --now

1.2 Hostname resolution

cat <<EOF>> /etc/hosts

172.173.10.110 controller

172.173.10.111 compute

172.173.10.112 cinder

EOF
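The heredoc above appends the three entries every time it is run, so re-running the setup duplicates them. A minimal sketch of an idempotent alternative follows; the helper name `add_host_entry` is hypothetical and not part of the original procedure.

```shell
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE only if NAME is not already mapped there.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Look for NAME as a whole field on a non-comment line.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on a real node (run as root):
#   add_host_entry /etc/hosts 172.173.10.110 controller
#   add_host_entry /etc/hosts 172.173.10.111 compute
#   add_host_entry /etc/hosts 172.173.10.112 cinder
```

Running the usage lines twice leaves /etc/hosts with a single entry per host name.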

1.3 Disable the firewall and SELinux

systemctl disable firewalld --now && setenforce 0 && sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
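The one-liner above chains its steps with `&&`, so if `setenforce 0` returns non-zero (which it can do when SELinux is already disabled) the `sed` never runs, and the `sed` pattern only matches `SELINUX=enforcing`. The sketch below splits the two concerns; both function names are hypothetical, and the config path defaults to the one used above.

```shell
# disable_selinux_in_config [FILE]  (hypothetical helper)
# Rewrites SELINUX=enforcing or SELINUX=permissive to SELINUX=disabled.
disable_selinux_in_config() {
    local file="${1:-/etc/selinux/config}" tmp
    tmp="$(mktemp)"
    sed -E 's/^SELINUX=(enforcing|permissive)[[:space:]]*$/SELINUX=disabled/' "$file" > "$tmp" \
        && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# selinux_permissive_now  (hypothetical helper)
# Switches the running system to permissive mode only when it is enforcing.
selinux_permissive_now() {
    # getenforce prints Enforcing, Permissive, or Disabled.
    if command -v getenforce >/dev/null 2>&1 && [ "$(getenforce)" = "Enforcing" ]; then
        setenforce 0
    fi
}

# Usage on a real node (run as root):
#   systemctl disable firewalld --now
#   selinux_permissive_now
#   disable_selinux_in_config
```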

1.4 Configure time synchronization

sed -i '/^server [0-3]\.centos\.pool\.ntp\.org iburst/d' /etc/chrony.conf

sed -i '3i server ntp.aliyun.com iburst' /etc/chrony.conf

systemctl restart chronyd && systemctl enable chronyd

1.5 Configure the yum repositories

rm -rf /etc/yum.repos.d/*

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.huaweicloud.com/repository/conf/CentOS-7-anon.repo

cat <<EOF> /etc/yum.repos.d/CentOS-OpenStack-train.repo

[openstack-train]

name=openstack-train

baseurl=https://mirrors.huaweicloud.com/centos-vault/7.9.2009/cloud/x86_64/openstack-train/

gpgcheck=0

enabled=1

[qemu-kvm]

name=qemu-kvm

baseurl=https://mirrors.huaweicloud.com/centos-vault/7.9.2009/virt/x86_64/kvm-common/

gpgcheck=0

enabled=1

EOF

yum clean all && yum makecache

II. Installation

2.1 Install the basic OpenStack tools on all nodes

yum install -y python-openstackclient

2.2 Install the base packages on the compute node

[root@compute ~]# yum install qemu-kvm libvirt bridge-utils -y

[root@compute ~]# ln -sv /usr/libexec/qemu-kvm /usr/bin/

III. Install Supporting Services

3.1 Database

Install MariaDB on the controller node (it can also run on a dedicated node, or even as a database cluster).

yum install mariadb mariadb-server python2-PyMySQL -y

Add a drop-in configuration file:

cat >/etc/my.cnf.d/openstack.cnf<<'EOF'

[mysqld]

bind-address = 0.0.0.0

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

EOF

Start the database:

systemctl enable mariadb --now

Run the initial secure-installation script:

mysql_secure_installation

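Leaving the root password unset is fine for now, because root is only allowed to log in locally.

3.2 Message queue

Purpose of the RabbitMQ message queue:

·Lets the OpenStack components communicate with each other

·Synchronizes information asynchronously

1. Install RabbitMQ on the controller node: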

yum install erlang socat rabbitmq-server -y

2. Start the service:

systemctl enable rabbitmq-server --now

3. Create the openstack user and grant it permissions:

rabbitmqctl add_user openstack guojie.com

rabbitmqctl set_user_tags openstack administrator

rabbitmqctl set_permissions openstack ".*" ".*" ".*"
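This guide reuses one fixed password for every account. As a hedged alternative, a random password can be generated per service; the official install guide suggests `openssl rand -hex 10` for this. The sketch below does the same with standard tools only; the function name `gen_password` and the variable `RABBIT_PASS` are assumptions for illustration.

```shell
# gen_password [LENGTH]  (hypothetical helper)
# Prints LENGTH random lowercase hex characters (default 20).
gen_password() {
    local length="${1:-20}"
    # od dumps random bytes as hex pairs, tr removes the spacing,
    # head trims the result to the requested length.
    od -An -tx1 -N "$(( (length + 1) / 2 ))" /dev/urandom | tr -d ' \n' | head -c "$length"
    printf '\n'
}

# Usage on the controller node:
#   RABBIT_PASS="$(gen_password)"
#   rabbitmqctl add_user openstack "$RABBIT_PASS"
```

Any password generated this way must then replace guojie.com in every transport_url that refers to the openstack RabbitMQ user.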

3.3 Deploy memcached

Purpose of memcached:

·memcached caches the authentication tokens used by the OpenStack services.

1. Install the packages on the controller node

yum install memcached python-memcached -y

2. Configure the memcached listen address

sed -i 's#127.0.0.1#0.0.0.0#g' /etc/sysconfig/memcached

3. Start the service

systemctl enable memcached --now

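IV. Install the Identity Service (keystone)

4.1 Configure the database

Before installing and configuring the Identity service, you must create a database.

1. Connect to the database server as the root user with the database client: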

mysql -u root -p

2. Create the keystone database:

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'guojie.com';

FLUSH PRIVILEGES;

QUIT;

4.2 Install the keystone components

Install the keystone packages on the controller node.

1. Run the following command to install the packages:

yum install -y openstack-keystone httpd mod_wsgi

keystone runs under httpd.

httpd needs the mod_wsgi module to run applications written in Python.

2. Edit the /etc/keystone/keystone.conf file and complete the following:

Configuration:

cp /etc/keystone/keystone.conf{,.bak}

grep -Ev '^$|#' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf

sed -i '/^\[database\]/a connection = mysql+pymysql://keystone:guojie.com@controller/keystone' /etc/keystone/keystone.conf

sed -i '/^\[token\]/a provider = fernet' /etc/keystone/keystone.conf

sed -i '/^\[DEFAULT\]/a transport_url = rabbit://openstack:guojie.com@controller:5672' /etc/keystone/keystone.conf

sed -i '/^\[DEFAULT\]/a log_file = /var/log/keystone/keystone.log' /etc/keystone/keystone.conf

3. Populate the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

4. Initialize the Fernet key repositories:

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

5. Bootstrap the Identity service:

keystone-manage bootstrap --bootstrap-password guojie.com \

--bootstrap-admin-url http://controller:5000/v3/ \

--bootstrap-internal-url http://controller:5000/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

--bootstrap-region-id RegionOne

guojie.com is the OpenStack administrator password chosen for this installation.
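The `sed ... /a` commands in step 2 append a new line each time they run, so running them twice leaves duplicate options in keystone.conf. A minimal sketch of an idempotent setter is shown below; `set_ini_option` is a hypothetical helper written for this guide, not a tool shipped with OpenStack, and it assumes simple `key = value` files with `[section]` headers.

```shell
# set_ini_option FILE SECTION KEY VALUE  (hypothetical helper)
# Replaces KEY inside [SECTION] if present, otherwise adds it to that section;
# creates the section at the end of the file if it does not exist.
set_ini_option() {
    local file="$1" section="$2" key="$3" value="$4" tmp
    tmp="$(mktemp)"
    awk -v section="$section" -v key="$key" -v value="$value" '
        function emit() { print key " = " value; done = 1 }
        /^\[.*\]$/ {
            # Leaving the target section without having seen the key: add it.
            if (in_section && !done) emit()
            in_section = ($0 == "[" section "]")
            print
            next
        }
        # Existing assignment of the key inside the target section: replace it.
        in_section && !done && $0 ~ "^" key "[ \t]*=" { emit(); next }
        { print }
        END {
            if (!done) {
                if (!in_section) print "[" section "]"
                emit()
            }
        }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Usage on the controller node:
#   set_ini_option /etc/keystone/keystone.conf database connection \
#       'mysql+pymysql://keystone:guojie.com@controller/keystone'
#   set_ini_option /etc/keystone/keystone.conf token provider fernet
```

The same helper could be reused for the glance, nova, and neutron configuration files later in this guide.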

4.3 Configure the Apache HTTP server

1. Edit the /etc/httpd/conf/httpd.conf file and set the ServerName option to reference the controller node:

sed -i 's/^#ServerName www.example.com:80/ServerName controller:80/' /etc/httpd/conf/httpd.conf

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

2. Start the service

systemctl enable httpd --now

4.4 Create a domain, projects, users, and roles

1. Set up the admin credentials file

cat << EOF > ~/.admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=guojie.com

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

2. The domain, projects, users, and roles are created in turn below; python-openstackclient must be installed first:

yum -y install python-openstackclient

3. Load the environment variables

source ~/.admin-openrc

Create the service project. The default domain was already created by keystone-manage bootstrap.

openstack domain create --description "An Example Domain" example

openstack project create --domain default --description "Service Project" service
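The credentials file written in step 1 contains the admin password in clear text. A hedged sketch of writing it with owner-only permissions follows; `write_openrc` is a hypothetical helper, and the exported variables are the same ones used in step 1. It assumes the password contains no single quote.

```shell
# write_openrc FILE PASSWORD [AUTH_URL]  (hypothetical helper)
# Writes an admin credentials file readable only by its owner.
write_openrc() {
    local file="$1" password="$2" auth_url="${3:-http://controller:5000/v3}"
    # Create (or truncate) the file and restrict it before the secret goes in.
    : > "$file"
    chmod 600 "$file"
    cat >> "$file" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD='${password}'
export OS_AUTH_URL=${auth_url}
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
}

# Usage on the controller node:
#   write_openrc ~/.admin-openrc 'guojie.com'
#   source ~/.admin-openrc
#   openstack token issue    # succeeds only if keystone accepts the credentials
```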

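V. Image Service (glance)

The Image service lets users discover, register, and retrieve virtual machine images. It provides a REST API that lets you query virtual machine image metadata and retrieve the actual image.

Virtual machine images made available through the Image service can be stored in a variety of locations, from simple file systems to object storage systems such as OpenStack Object Storage.

Reference: OpenStack Docs: Install and configure (Red Hat)

5.1 Configure the database

Before installing and configuring the Image service, you must create a database, service credentials, and API endpoints.

1. To create the database, complete these steps:

Connect to the database server as the root user with the database client: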

mysql -uroot -p

Create the glance database:

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'guojie.com';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'guojie.com';

FLUSH PRIVILEGES;

QUIT;

5.2 Permission configuration

1. Create the user

source ~/.admin-openrc

openstack user create --domain default --password guojie.com glance

openstack role add --project service --user glance admin

2. Create the glance service

openstack service create --name glance --description "OpenStack Image" image

3. Create the API endpoints (access URLs) for the glance service

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292
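Every service in this guide registers the same URL three times, once per interface (public, internal, admin). The sketch below wraps that pattern in a loop; `create_endpoints` and the `DRY_RUN` switch are hypothetical names introduced here, while the `openstack endpoint create` arguments are the ones used above.

```shell
# create_endpoints SERVICE_TYPE URL [REGION]  (hypothetical helper)
# Registers URL for the public, internal, and admin interfaces.
create_endpoints() {
    local service_type="$1" url="$2" region="${3:-RegionOne}" interface
    for interface in public internal admin; do
        # With DRY_RUN set to a non-empty value the command is printed, not run.
        ${DRY_RUN:+echo} openstack endpoint create --region "$region" \
            "$service_type" "$interface" "$url"
    done
}

# Preview the commands without touching keystone:
( DRY_RUN=1; create_endpoints image http://controller:9292 )

# Real usage on the controller node, after sourcing ~/.admin-openrc:
#   create_endpoints image http://controller:9292
```

The same call with `compute http://controller:8774/v2.1`, `placement http://controller:8778`, or `network http://controller:9696` would cover the later sections.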

5.3 Install and configure glance

1. Install:

yum -y install openstack-glance

2. Back up the configuration file, strip comments and blank lines, then edit it:

cp /etc/glance/glance-api.conf{,.bak}

grep -Ev '^#|^$' /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf

vim /etc/glance/glance-api.conf

[DEFAULT]

log_file = /var/log/glance/glance-api.log

[database]

connection = mysql+pymysql://glance:guojie.com@controller/glance

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = guojie.com

[paste_deploy]

flavor = keystone

Complete configuration:

[root@controller ~]# cat /etc/glance/glance-api.conf

[DEFAULT]

log_file = /var/log/glance/glance-api.log

[cinder]

[cors]

[database]

connection = mysql+pymysql://glance:guojie.com@controller/glance

[file]

[glance.store.http.store]

[glance.store.rbd.store]

[glance.store.sheepdog.store]

[glance.store.swift.store]

[glance.store.vmware_datastore.store]

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[image_format]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = guojie.com

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

Populate the database:

su -s /bin/sh -c "glance-manage db_sync" glance

5.4 Start the service

systemctl enable openstack-glance-api --now

5.5 Verification

1. Download a test image

wget http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img

2. Upload the image

openstack image create --disk-format qcow2 --container-format bare --file cirros-0.3.5-x86_64-disk.img --public cirros

--public makes the image available to all projects.

3. Verify that the image was uploaded

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 03a823ea-6883-4a4b-9629-1b4839f0644a | cirros | active |

+--------------------------------------+--------+--------+
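The file passed to `--file` has to be the one that was actually downloaded, so it can help to derive the file name from the URL instead of typing it twice. A minimal sketch, assuming the same CirrOS URL as in step 1; both function names are hypothetical.

```shell
# image_file_from_url URL  (hypothetical helper)
# Prints the file name component of a download URL.
image_file_from_url() {
    basename "$1"
}

# fetch_and_upload URL NAME  (hypothetical helper)
# Downloads the image if it is not already present, then uploads it to glance.
fetch_and_upload() {
    local url="$1" name="$2" file
    file="$(image_file_from_url "$url")"
    if [ ! -f "$file" ]; then
        # Remove a partial download so a later retry starts clean.
        wget -O "$file" "$url" || { rm -f "$file"; return 1; }
    fi
    openstack image create --disk-format qcow2 --container-format bare \
        --file "$file" --public "$name"
}

# Usage on the controller node, after sourcing ~/.admin-openrc:
#   fetch_and_upload http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img cirros
```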

VI. Compute Service (nova)

Reference: OpenStack Docs: Install and configure controller node for Red Hat Enterprise Linux and CentOS

6.1 Deploy nova on the controller node

6.1.1 Configure the database

[root@controller ~]# mysql -uroot -p

Create the nova_api, nova, and nova_cell0 databases:

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

Grant the nova user access to the databases:

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'guojie.com';

# Reload the privileges

MariaDB [(none)]> FLUSH PRIVILEGES;

MariaDB [(none)]> QUIT;

Verify:

[root@controller ~]# mysql -h controller -u nova -pguojie.com -e 'show databases'

+--------------------+

| Database |

+--------------------+

| information_schema |

| nova |

| nova_api |

| nova_cell0 |

+--------------------+
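The CREATE DATABASE and GRANT statements follow the same pattern for every service, so they can be generated rather than typed. The sketch below only prints SQL; `grant_sql` is a hypothetical helper, and it uses `CREATE DATABASE IF NOT EXISTS` (a variation on the statements above) so that it can be re-run safely.

```shell
# grant_sql USER PASSWORD DB...  (hypothetical helper)
# Prints the SQL that creates each DB and grants USER access
# from localhost and from any host.
grant_sql() {
    local user="$1" password="$2" db host
    shift 2
    for db in "$@"; do
        printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
        for host in localhost '%'; do
            printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
                "$db" "$user" "$host" "$password"
        done
    done
    printf 'FLUSH PRIVILEGES;\n'
}

# Usage on the controller node:
#   grant_sql nova 'guojie.com' nova_api nova nova_cell0 | mysql -uroot -p
```

Reviewing the printed SQL before piping it into `mysql` is a cheap way to catch a mistyped database name.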

6.1.2 Permission configuration

Load the admin credentials to gain access to admin-only CLI commands:

[root@controller ~]# source ~/.admin-openrc

1. Create the nova user:

[root@controller ~]# openstack user create --domain default --password guojie.com nova

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 31f7b758bfe64f16b47d3f934b8ff94b |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Verify

[root@controller ~]# openstack user list

+----------------------------------+--------+

| ID | Name |

+----------------------------------+--------+

| 281ca4a010a44d56bc3ad29ccadf15d8 | glance |

| 31f7b758bfe64f16b47d3f934b8ff94b | nova |

| 4093e7a9f5454322ba9987581b564fe4 | admin |

| e05800abc0c64c3ea73db2557dda4cb7 | demo |

+----------------------------------+--------+

2. Add the nova user to the service project with the admin role

[root@controller ~]# openstack role add --project service --user nova admin

3. Create the nova service

[root@controller ~]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 5628e23741b1491697d811c84bfefd1c |

| name | nova |

| type | compute |

+-------------+----------------------------------+

# Verify

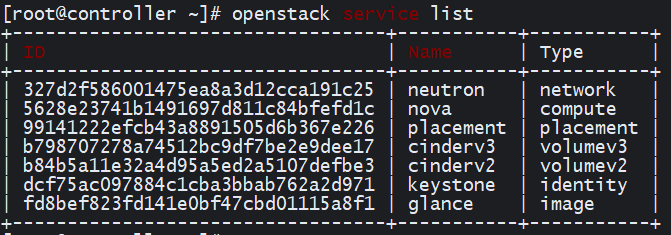

[root@controller ~]# openstack service list

+----------------------------------+----------+----------+

| ID | Name | Type |

+----------------------------------+----------+----------+

| 5628e23741b1491697d811c84bfefd1c | nova | compute |

| dcf75ac097884c1cba3bbab762a2d971 | keystone | identity |

| fd8bef823fd141e0bf47cbd01115a8f1 | glance | image |

+----------------------------------+----------+----------+

4. Register the API endpoints for the nova service

[root@controller ~]# openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b8f00538c287433faee862766a97e408 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5628e23741b1491697d811c84bfefd1c |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 5158c5701fd4487aae75c5edea040761 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5628e23741b1491697d811c84bfefd1c |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 06ae3fb2885647c697e7a316842be102 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5628e23741b1491697d811c84bfefd1c |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

Verify:

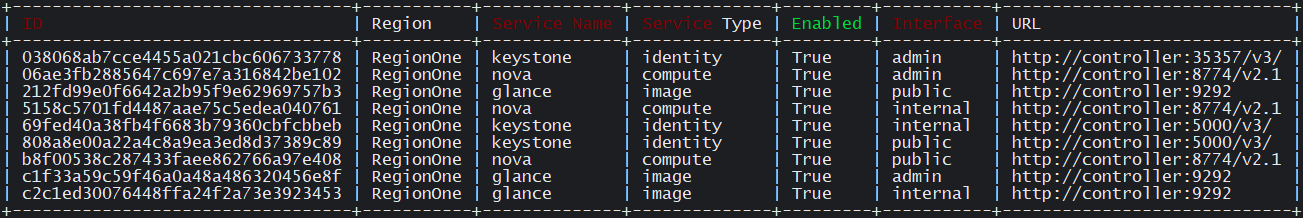

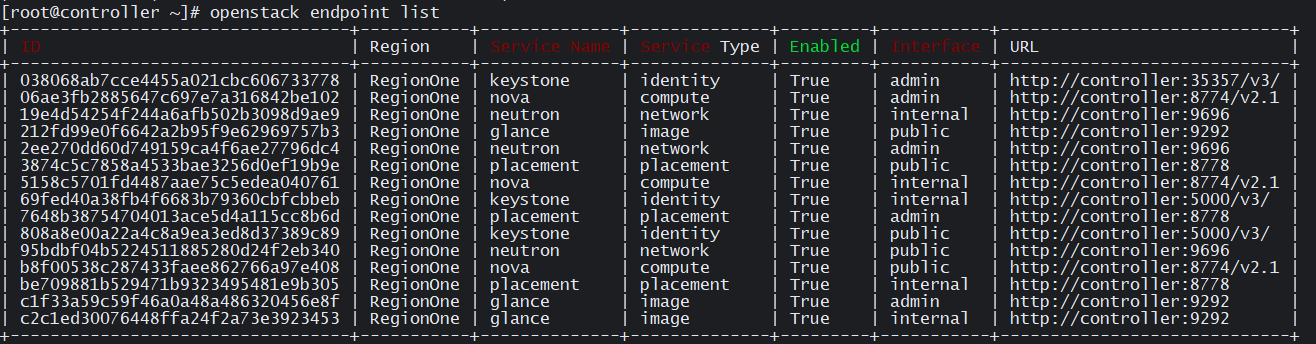

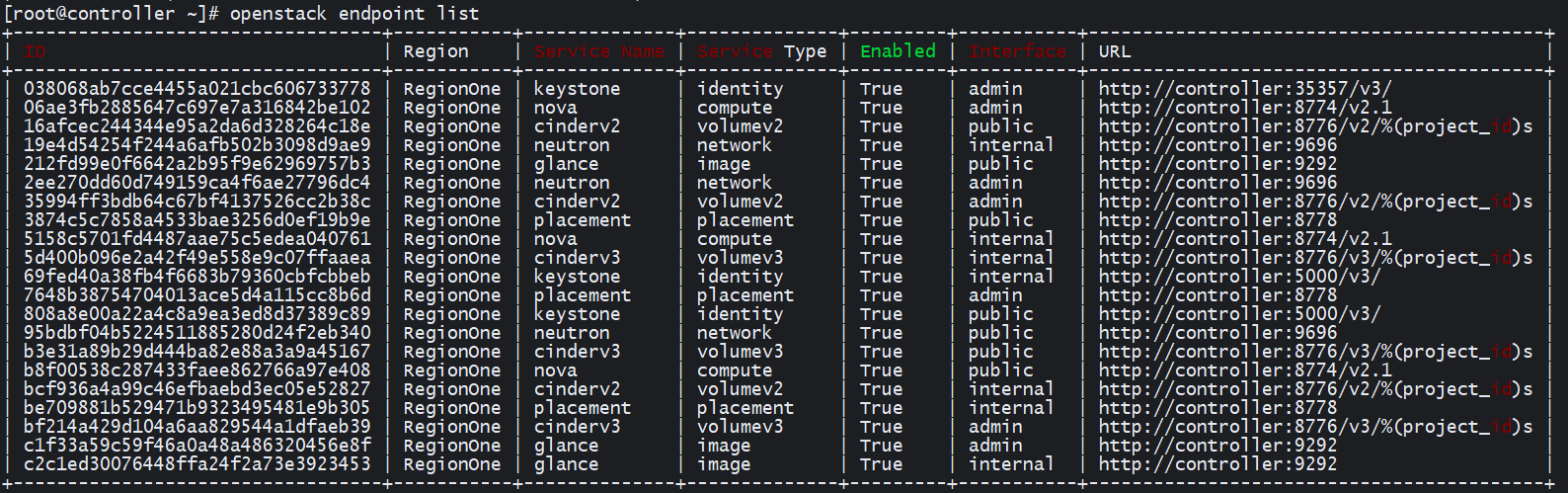

[root@controller ~]# openstack endpoint list

5. Create the placement user, which is used for resource tracking

[root@controller ~]# openstack user create --domain default --password guojie.com placement

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 4ff73c3f796f424d94ad92de74132525 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Verify

[root@controller ~]# openstack user list

+----------------------------------+-----------+

| ID | Name |

+----------------------------------+-----------+

| 281ca4a010a44d56bc3ad29ccadf15d8 | glance |

| 31f7b758bfe64f16b47d3f934b8ff94b | nova |

| 4093e7a9f5454322ba9987581b564fe4 | admin |

| 4ff73c3f796f424d94ad92de74132525 | placement |

| e05800abc0c64c3ea73db2557dda4cb7 | demo |

+----------------------------------+-----------+

6. Add the placement user to the service project with the admin role

[root@controller ~]# openstack role add --project service --user placement admin

7. Create the placement service

[root@controller ~]# openstack service create --name placement --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | 99141222efcb43a8891505d6b367e226 |

| name | placement |

| type | placement |

+-------------+----------------------------------+

# Verify

[root@controller ~]# openstack service list

+----------------------------------+-----------+-----------+

| ID | Name | Type |

+----------------------------------+-----------+-----------+

| 5628e23741b1491697d811c84bfefd1c | nova | compute |

| 99141222efcb43a8891505d6b367e226 | placement | placement |

| dcf75ac097884c1cba3bbab762a2d971 | keystone | identity |

| fd8bef823fd141e0bf47cbd01115a8f1 | glance | image |

+----------------------------------+-----------+-----------+

8. Register the API endpoints for the placement service

[root@controller ~]# openstack endpoint create --region RegionOne placement public http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3874c5c7858a4533bae3256d0ef19b9e |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 99141222efcb43a8891505d6b367e226 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne placement internal http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | be709881b529471b9323495481e9b305 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 99141222efcb43a8891505d6b367e226 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne placement admin http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 7648b38754704013ace5d4a115cc8b6d |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 99141222efcb43a8891505d6b367e226 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

Verify:

[root@controller ~]# openstack endpoint list

6.1.3 Install and configure nova

1. Install the nova components on the controller node

[root@controller ~]# yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api

2. Back up the configuration files

[root@controller ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

[root@controller ~]# cp /etc/httpd/conf.d/00-nova-placement-api.conf /etc/httpd/conf.d/00-nova-placement-api.conf.bak

3. Edit the nova.conf configuration file

There are many changes, so it is easier to copy the finished configuration shown further below. The options to set, grouped by section, are as follows. Comments are kept on their own lines because oslo.config does not strip trailing comments from values.

[DEFAULT]

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:guojie.com@controller

use_neutron=true

firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

[api]

auth_strategy=keystone

[api_database]

connection=mysql+pymysql://nova:guojie.com@controller/nova_api

[database]

connection=mysql+pymysql://nova:guojie.com@controller/nova

[glance]

api_servers=http://controller:9292

# The [keystone_authtoken] section header already exists in the file

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

# Password of the nova user set in the permission configuration step above

password = guojie.com

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

# The [placement] section header already exists in the file

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

# Password of the placement user set in the permission configuration step above

password = guojie.com

[vnc]

enabled=true

# Both addresses below are the controller node's IP

vncserver_listen=172.173.10.110

vncserver_proxyclient_address=172.173.10.110

Verify:

[root@controller ~]# egrep -v '^#|^$' /etc/nova/nova.conf

[DEFAULT]

use_neutron=true

firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:guojie.com@controller

[api]

auth_strategy=keystone

[api_database]

connection=mysql+pymysql://nova:guojie.com@controller/nova_api

[barbican]

[cache]

[cells]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[crypto]

[database]

connection=mysql+pymysql://nova:guojie.com@controller/nova

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers=http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = guojie.com

[libvirt]

[matchmaker_redis]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = guojie.com

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[trusted_computing]

[upgrade_levels]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled=true

vncserver_listen=172.173.10.110

vncserver_proxyclient_address=172.173.10.110

[workarounds]

[wsgi]

[xenserver]

[xvp]

4. Edit the 00-nova-placement-api.conf configuration file

Add the following inside the <VirtualHost> block, just before the closing </VirtualHost> tag:

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

The result looks like this:

[root@controller ~]# cat /etc/httpd/conf.d/00-nova-placement-api.conf

Listen 8778

<VirtualHost *:8778>

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

WSGIDaemonProcess nova-placement-api processes=3 threads=1 user=nova group=nova

WSGIScriptAlias / /usr/bin/nova-placement-api

<IfVersion >= 2.4>

ErrorLogFormat "%M"

</IfVersion>

ErrorLog /var/log/nova/nova-placement-api.log

#SSLEngine On

#SSLCertificateFile ...

#SSLCertificateKeyFile ...

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

</VirtualHost>

Alias /nova-placement-api /usr/bin/nova-placement-api

<Location /nova-placement-api>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>

6.1.4 Restart the Apache service

[root@controller ~]# systemctl restart httpd

6.1.5 Populate the nova databases

# Populate the nova_api database

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

# Register the cell0 database

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

# Create the cell1 cell

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

3c837947-854a-4d61-9af0-722cd9cbebc0

# Populate the nova database (both the nova and nova_cell0 databases end up with the related tables); warnings in the output can be ignored

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

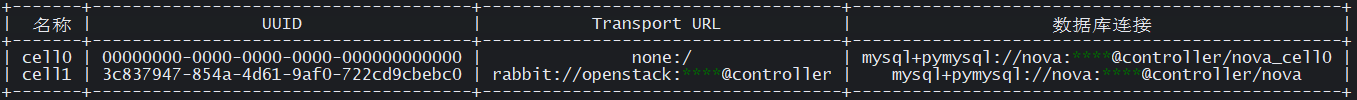

Verify:

[root@controller ~]# nova-manage cell_v2 list_cells

[root@controller ~]# mysql -hcontroller -unova -pguojie.com -e 'use nova;show tables;' |wc -l

111

[root@controller ~]# mysql -hcontroller -unova -pguojie.com -e 'use nova_api;show tables;' |wc -l

33

[root@controller ~]# mysql -hcontroller -unova -pguojie.com -e 'use nova_cell0;show tables;' |wc -l

111

6.1.6 Start the services

[root@controller ~]# systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service --now

Verify:

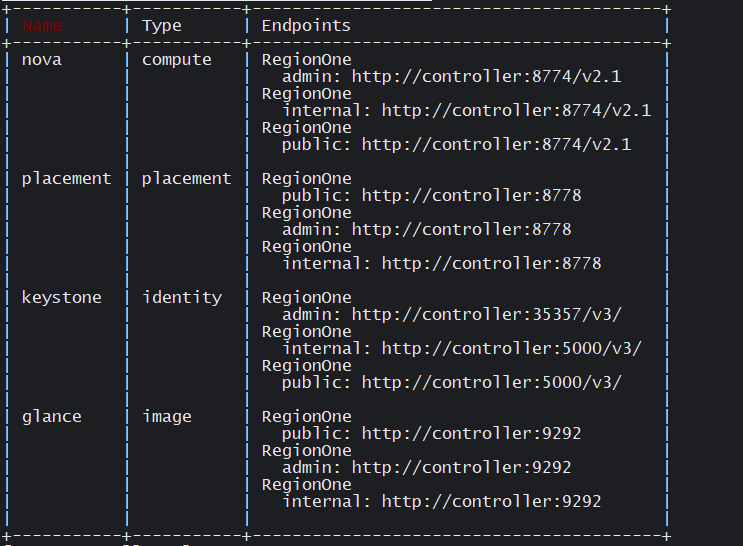

[root@controller ~]# openstack catalog list

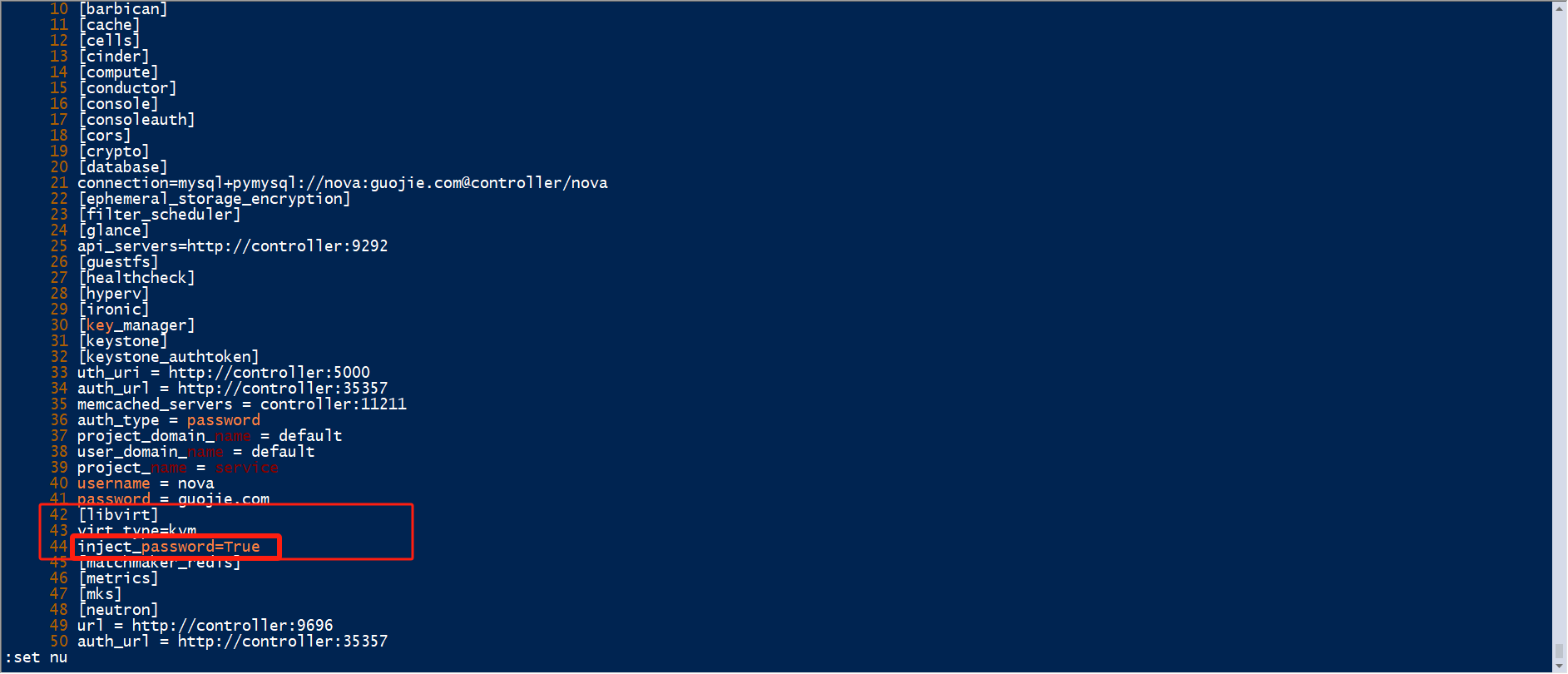

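6.2 Deploy nova on the compute node

Reference: OpenStack Docs: Install and configure a compute node for Red Hat Enterprise Linux and CentOS. All of the following steps are performed on the compute node.

6.2.1 Install and configure

1. Install the packages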

[root@compute ~]# yum -y install openstack-nova-compute sysfsutils

2. Back up the configuration file

[root@compute ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

3. Edit the configuration file

# Copying the controller node's configuration file and editing it is the easiest approach

[root@compute ~]# scp root@controller:/etc/nova/nova.conf /etc/nova/nova.conf

root@controller's password:

nova.conf 100% 345KB 85.9MB/s 00:00

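# Change the following:

1. Several parameters under [vnc] differ from the controller node

vncserver_listen=0.0.0.0 listens on all addresses for incoming VNC console connections

vncserver_proxyclient_address is set to the compute node's management network IP

novncproxy_base_url = http://172.173.10.110:6080/vnc_auto.html is the URL the console is proxied through; use the controller node's IP rather than its host name, because the host name did not work in testing

2. Add virt_type=qemu under the [libvirt] section

kvm cannot be used here because this cloud is itself being built inside KVM virtual machines, so cat /proc/cpuinfo |egrep 'vmx|svm' returns nothing. On physical servers in a production environment, use virt_type=kvm instead.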
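The choice between qemu and kvm can be derived from the CPU flags instead of being decided by hand. A minimal sketch, assuming the same vmx/svm test described above; `detect_virt_type` is a hypothetical helper name.

```shell
# detect_virt_type [CPUINFO_FILE]  (hypothetical helper)
# Prints "kvm" when the CPU advertises hardware virtualization
# (Intel vmx or AMD svm), otherwise "qemu".
detect_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    if grep -Eqw 'vmx|svm' "$cpuinfo" 2>/dev/null; then
        echo kvm
    else
        echo qemu
    fi
}

# Usage on the compute node:
#   echo "virt_type=$(detect_virt_type)"
```

On a nested virtual machine without exposed virtualization flags this prints qemu, which matches the setting used in this guide.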

Final result:

[root@compute ~]# egrep -v '^#|^$' /etc/nova/nova.conf

[DEFAULT]

use_neutron=true

firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:guojie.com@controller

[api]

auth_strategy=keystone

[api_database]

connection=mysql+pymysql://nova:guojie.com@controller/nova_api

[barbican]

[cache]

[cells]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[crypto]

[database]

connection=mysql+pymysql://nova:guojie.com@controller/nova

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers=http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = guojie.com

[libvirt]

virt_type=qemu

[matchmaker_redis]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = guojie.com

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[trusted_computing]

[upgrade_levels]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled=true

vncserver_listen=0.0.0.0

vncserver_proxyclient_address=172.173.10.111

novncproxy_base_url = http://172.173.10.110:6080/vnc_auto.html

[workarounds]

[wsgi]

[xenserver]

[xvp]

Start the services:

[root@compute ~]# systemctl enable libvirtd.service openstack-nova-compute.service --now

6.2.2 Add the compute node on the controller node

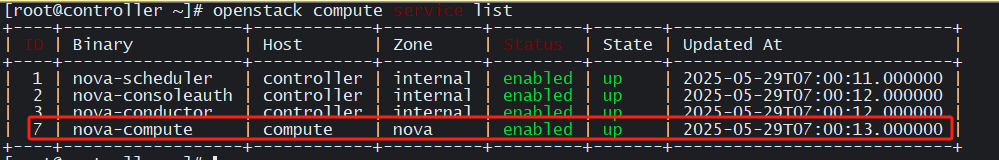

1. List the services

[root@controller ~]# openstack compute service list

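Once the compute service has started, its state shows as up when viewed from the controller node. If it does not, check the nova logs and the configuration.

2. Register the compute node by adding it to the nova database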

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Getting computes from cell 'cell1': 3c837947-854a-4d61-9af0-722cd9cbebc0

Checking host mapping for compute host 'compute': d9a0c826-ab7f-4b06-8059-0c23cba6adbf

Creating host mapping for compute host 'compute': d9a0c826-ab7f-4b06-8059-0c23cba6adbf

Found 1 unmapped computes in cell: 3c837947-854a-4d61-9af0-722cd9cbebc0

Skipping cell0 since it does not contain hosts.

3. Verify that all APIs are working

[root@controller ~]# nova-status upgrade check

+--------------------------+

| Upgrade Check Results |

+--------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------+

| Check: Resource Providers |

| Result: Success |

| Details: None |

+--------------------------+
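The service state check from step 1 can also be scripted, so that a down service is reported without reading the table by eye. The sketch below filters the output of `openstack compute service list -f value -c Binary -c Host -c State`; `list_down_services` is a hypothetical helper, and it assumes one "binary host state" triple per line.

```shell
# list_down_services  (hypothetical helper)
# Reads "binary host state" lines on stdin, prints every service whose
# state is not "up", and returns non-zero if any was found.
list_down_services() {
    awk '
        NF >= 3 && $3 != "up" { print $1 " on " $2 " is " $3; bad = 1 }
        END { exit (bad ? 1 : 0) }
    '
}

# Usage on the controller node, after sourcing ~/.admin-openrc:
#   openstack compute service list -f value -c Binary -c Host -c State | list_down_services \
#       && echo "all compute services are up"
```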

VII. Networking Service (neutron)

7.1 Deploy neutron on the controller node

Reference: OpenStack Docs: Install and configure controller node

7.1.1 Configure the database

Log in to the database:

[root@controller ~]# mysql -uroot -p

Create the neutron database:

MariaDB [(none)]> CREATE DATABASE neutron;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> FLUSH PRIVILEGES;

MariaDB [(none)]> QUIT;

Verify:

[root@controller ~]# mysql -h controller -u neutron -pguojie.com -e 'show databases';

+--------------------+

| Database |

+--------------------+

| information_schema |

| neutron |

+--------------------+

7.1.2 Permission configuration

1. Create the neutron user

[root@controller ~]# source ~/.admin-openrc

[root@controller ~]# openstack user create --domain default --password guojie.com neutron

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | be3796e423e0417d8f71f7fc640e5b48 |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Verify

[root@controller ~]# openstack user list

+----------------------------------+-----------+

| ID | Name |

+----------------------------------+-----------+

| 281ca4a010a44d56bc3ad29ccadf15d8 | glance |

| 31f7b758bfe64f16b47d3f934b8ff94b | nova |

| 4093e7a9f5454322ba9987581b564fe4 | admin |

| 4ff73c3f796f424d94ad92de74132525 | placement |

| be3796e423e0417d8f71f7fc640e5b48 | neutron |

| e05800abc0c64c3ea73db2557dda4cb7 | demo |

+----------------------------------+-----------+

2. Add the neutron user to the service project with the admin role

[root@controller ~]# openstack role add --project service --user neutron admin

3. Create the neutron service

[root@controller ~]# openstack service create --name neutron --description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | 327d2f586001475ea8a3d12cca191c25 |

| name | neutron |

| type | network |

+-------------+----------------------------------+

# Verify

[root@controller ~]# openstack service list

+----------------------------------+-----------+-----------+

| ID | Name | Type |

+----------------------------------+-----------+-----------+

| 327d2f586001475ea8a3d12cca191c25 | neutron | network |

| 5628e23741b1491697d811c84bfefd1c | nova | compute |

| 99141222efcb43a8891505d6b367e226 | placement | placement |

| dcf75ac097884c1cba3bbab762a2d971 | keystone | identity |

| fd8bef823fd141e0bf47cbd01115a8f1 | glance | image |

+----------------------------------+-----------+-----------+

4. Register the API endpoints for the neutron service

[root@controller ~]# openstack endpoint create --region RegionOne network public http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 95bdbf04b5224511885280d24f2eb340 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 327d2f586001475ea8a3d12cca191c25 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network internal http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 19e4d54254f244a6afb502b3098d9ae9 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 327d2f586001475ea8a3d12cca191c25 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network admin http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 2ee270dd60d749159ca4f6ae27796dc4 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 327d2f586001475ea8a3d12cca191c25 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

Verify:

[root@controller ~]# openstack endpoint list

7.1.3 Install and configure the software

Networking option 2 is used here. Reference: OpenStack Docs: Networking Option 2: Self-service networks

1. Install the neutron packages on the controller node

[root@controller ~]# yum -y install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

2.备份配置文件

[root@controller ~]# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

[root@controller ~]# cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak

[root@controller ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak

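3. Configure neutron.conf (the leading number on each line is its line number in the file; the trailing # notes are annotations and do not appear in the file itself, as the verification output shows)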

27 auth_strategy = keystone

30 core_plugin = ml2

33 service_plugins = router

85 allow_overlapping_ips = True

98 notify_nova_on_port_status_changes = true

102 notify_nova_on_port_data_changes = true

553 transport_url = rabbit://openstack:guojie.com@controller # use the RabbitMQ password here

560 rpc_backend = rabbit

710 connection = mysql+pymysql://neutron:guojie.com@controller/neutron

794 [keystone_authtoken] # already present, no change needed

795 auth_uri = http://controller:5000

796 auth_url = http://controller:35357

797 memcached_servers = controller:11211

798 auth_type = password

799 project_domain_name = default

800 user_domain_name = default

801 project_name = service

802 username = neutron

803 password = guojie.com ## the password set for the neutron user in the permission configuration of the previous section

1022 [nova]

1023 auth_url = http://controller:35357

1024 auth_type = password

1025 project_domain_name = default

1026 user_domain_name = default

1027 region_name = RegionOne

1028 project_name = service

1029 username = nova

1030 password = guojie.com ## the password set for the nova user in the nova permission configuration in section 6.1.2

1141 lock_path = /var/lib/neutron/tmp

Verify the result:

[root@controller ~]# egrep -v '^#|^$' /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

transport_url = rabbit://openstack:guojie.com@controller

rpc_backend = rabbit

[agent]

[cors]

[database]

connection = mysql+pymysql://neutron:guojie.com@controller/neutron

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = guojie.com

[matchmaker_redis]

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = guojie.com

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[quotas]

[ssl]
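Editing by line number is fragile, because line numbers shift between package versions. As an alternative, the same settings can be applied by section and key. The following is a minimal sketch under stated assumptions: ini_set is a hypothetical helper written for this example (it is not an OpenStack tool), NEUTRON_DBPASS is a placeholder, and the demonstration runs on a temporary file rather than on /etc/neutron/neutron.conf.

```shell
#!/usr/bin/env bash
# ini_set FILE SECTION KEY VALUE  (hypothetical helper)
# Replaces an existing uncommented KEY inside [SECTION]; otherwise appends the
# key at the end of that section. A missing section is created at the end.
ini_set() {
    local file=$1 section=$2 key=$3 value=$4 tmp
    tmp=$(mktemp)
    awk -v s="[$section]" -v k="$key" -v v="$value" '
        function emit() { print k " = " v; done = 1 }
        /^\[.*\]/ {
            if (in_s && !done) emit()      # leaving the target section: append
            in_s = ($0 == s)
            if (in_s) seen = 1
        }
        in_s && !done {
            line = $0
            sub(/^[ \t]+/, "", line)
            if (index(line, k) == 1 && substr(line, length(k) + 1) ~ /^[ \t]*=/) { emit(); next }
        }
        { print }
        END {
            if (!seen) { print s; emit() }
            else if (in_s && !done) emit()
        }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps owner and mode of FILE
    rm -f "$tmp"
}

# Demonstration on a scratch file.
conf=$(mktemp)
printf '[DEFAULT]\n#core_plugin = <None>\n\n[database]\n' > "$conf"
ini_set "$conf" DEFAULT core_plugin ml2
ini_set "$conf" database connection 'mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron'
ini_set "$conf" oslo_concurrency lock_path /var/lib/neutron/tmp
grep -Ev '^#|^$' "$conf"
rm -f "$conf"
```

The final grep is the same filter used in the verification step above, so the output can be compared with the expected listing in the same way.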

4. Configure the Modular Layer 2 (ML2) plug-in in ml2_conf.ini

132 type_drivers = flat,vlan,vxlan

137 tenant_network_types = vxlan

141 mechanism_drivers = linuxbridge,l2population

146 extension_drivers = port_security

182 flat_networks = provider

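235 vni_ranges = 1:1000 ## supports 1000 tunnel networks (note: the same parameter also appears on line 193; set the one under [ml2_type_vxlan], otherwise self-service private networks cannot be created)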

259 enable_ipset = true

Check the configuration:

[root@controller ~]# egrep -v '^$|^#' /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[l2pop]

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_geneve]

[ml2_type_gre]

[ml2_type_vlan]

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

5. Configure linuxbridge_agent.ini

142 physical_interface_mappings = provider:eth1 ## note: eth1 is the NIC attached to the external network

175 enable_vxlan = true

196 local_ip = 172.173.10.110 ## IP address of the management NIC

220 l2_population = true

155 firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

160 enable_security_group = true

Verify:

[root@controller ~]# egrep -v '^$|^#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[agent]

[linux_bridge]

physical_interface_mappings = provider:eth1

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

enable_security_group = true

[vxlan]

enable_vxlan = true

local_ip = 172.173.10.110

l2_population = true

6. Configure l3_agent.ini

[root@controller ~]# vi /etc/neutron/l3_agent.ini

# Change line 16 as follows

16 interface_driver = linuxbridge

# Check

[root@controller ~]# egrep -v '^$|^#' /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = linuxbridge

[agent]

[ovs]

7. Configure dhcp_agent.ini

[root@controller ~]# vi /etc/neutron/dhcp_agent.ini

# Change the following settings

16 interface_driver = linuxbridge

37 dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

46 enable_isolated_metadata = true

# Check the configuration

[root@controller ~]# egrep -v '^$|^#' /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

[agent]

[ovs]

8. Configure metadata_agent.ini

[root@controller ~]# vi /etc/neutron/metadata_agent.ini

# Change the following settings

23 nova_metadata_host = controller

35 metadata_proxy_shared_secret = metadata_daniel

# Note: metadata_daniel is just an arbitrary string; it must match metadata_proxy_shared_secret in the nova configuration file

Check:

[root@controller ~]# egrep -v '^$|^#' /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = metadata_daniel

[agent]

[cache]

9. Add the following settings to nova.conf

Add the following under the [neutron] section:

[root@controller ~]# vi /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = guojie.com # change this to the password set when authorizing the neutron user

service_metadata_proxy = true

metadata_proxy_shared_secret = metadata_daniel

Check:

[root@controller ~]# egrep -v '^$|^#' /etc/nova/nova.conf

[DEFAULT]

use_neutron=true

firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:guojie.com@controller

[api]

auth_strategy=keystone

[api_database]

connection=mysql+pymysql://nova:guojie.com@controller/nova_api

[barbican]

[cache]

[cells]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[crypto]

[database]

connection=mysql+pymysql://nova:guojie.com@controller/nova

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers=http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = guojie.com

[libvirt]

[matchmaker_redis]

[metrics]

[mks]

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = guojie.com

service_metadata_proxy = true

metadata_proxy_shared_secret = metadata_daniel

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = guojie.com

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[trusted_computing]

[upgrade_levels]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled=true

vncserver_listen=172.173.10.110

vncserver_proxyclient_address=172.173.10.110

novncproxy_base_url = http://172.173.10.110:6080/vnc_auto.html

[workarounds]

[wsgi]

[xenserver]

[xvp]
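Because metadata_proxy_shared_secret must be identical in nova.conf and metadata_agent.ini, it can be worth comparing the two values mechanically instead of by eye. The following is a minimal sketch: ini_get and check_metadata_secret are hypothetical helpers written for this example, and the default paths are the ones used in this guide.

```shell
#!/usr/bin/env bash
# ini_get FILE SECTION KEY  (hypothetical helper)
# Prints the value of KEY inside [SECTION]; prints nothing if it is absent.
ini_get() {
    awk -v s="[$2]" -v k="$3" '
        /^\[.*\]/ { in_s = ($0 == s); next }
        in_s && /^[^#]/ {
            pos = index($0, "=")
            if (pos == 0) next
            name = substr($0, 1, pos - 1); val = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", name); gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (name == k) { print val; exit }
        }
    ' "$1"
}

# Compare the shared secret in [neutron] of nova.conf with [DEFAULT] of
# metadata_agent.ini. Returns non-zero if it is missing or differs.
check_metadata_secret() {
    local nova_conf=${1:-/etc/nova/nova.conf}
    local agent_conf=${2:-/etc/neutron/metadata_agent.ini}
    local a b
    a=$(ini_get "$nova_conf" neutron metadata_proxy_shared_secret)
    b=$(ini_get "$agent_conf" DEFAULT metadata_proxy_shared_secret)
    if [ -n "$a" ] && [ "$a" = "$b" ]; then
        echo "metadata_proxy_shared_secret matches"
    else
        echo "metadata_proxy_shared_secret is missing or differs" >&2
        return 1
    fi
}
```

On the controller, calling check_metadata_secret with no arguments reads the two default files; both paths can also be passed explicitly.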

10. The networking service initialization scripts expect /etc/neutron/plugin.ini to point to ml2_conf.ini, so create a symbolic link

[root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

11. Populate the database

[root@controller ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

7.1.4 Start the services

Restart the nova service:

[root@controller ~]# systemctl restart openstack-nova-api.service

Start the neutron services:

[root@controller ~]# systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service --now

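7.2 Deploying neutron on the compute node

Reference: OpenStack Docs: Install and configure compute node

7.2.1 Installation and configuration

1. Install the packages on the compute node

[root@compute ~]# yum install openstack-neutron-linuxbridge ebtables ipset -y

2. Back up the configuration files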

[root@compute ~]# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

[root@compute ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak

3. Configure neutron.conf

27 auth_strategy = keystone

553 transport_url = rabbit://openstack:guojie.com@controller

794 [keystone_authtoken] # already present, no change needed

795 auth_uri = http://controller:5000

796 auth_url = http://controller:35357

797 memcached_servers = controller:11211

798 auth_type = password

799 project_domain_name = default

800 user_domain_name = default

801 project_name = service

802 username = neutron

803 password = guojie.com

1134 lock_path = /var/lib/neutron/tmp

Check the configuration:

[root@compute ~]# egrep -v '^$|^#' /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

transport_url = rabbit://openstack:guojie.com@controller

[agent]

[cors]

[database]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = guojie.com

[matchmaker_redis]

[nova]

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[quotas]

[ssl]

4. Networking option 2 is used here as well

Reference: OpenStack Docs: Networking Option 2: Self-service networks

Configure linuxbridge_agent.ini:

[root@compute ~]# vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

# Change the following settings

142 physical_interface_mappings = provider:eth1 # name of the NIC attached to the external network

175 enable_vxlan = true

196 local_ip = 172.173.10.111 # this node's management network IP (pay close attention to this)

220 l2_population = true

155 firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

160 enable_security_group = true

Check the configuration:

[root@compute ~]# egrep -v '^$|^#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[agent]

[linux_bridge]

physical_interface_mappings = provider:eth1

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

enable_security_group = true

[vxlan]

enable_vxlan = true

local_ip = 172.173.10.111

l2_population = true

5. Configure nova.conf

Add the following under [neutron]:

7185 [neutron]

7186 url = http://controller:9696

7187 auth_url = http://controller:35357

7188 auth_type = password

7189 project_domain_name = default

7190 user_domain_name = default

7191 region_name = RegionOne

7192 project_name = service

7193 username = neutron

7194 password = guojie.com

Verify the configuration:

[root@compute ~]# egrep -v '^$|^#' /etc/nova/nova.conf

[DEFAULT]

use_neutron=true

firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:guojie.com@controller

[api]

auth_strategy=keystone

[api_database]

connection=mysql+pymysql://nova:guojie.com@controller/nova_api

[barbican]

[cache]

[cells]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[crypto]

[database]

connection=mysql+pymysql://nova:guojie.com@controller/nova

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers=http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = guojie.com

[libvirt]

virt_type=qemu

[matchmaker_redis]

[metrics]

[mks]

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = guojie.com

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = guojie.com

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[trusted_computing]

[upgrade_levels]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled=true

vncserver_listen=172.173.10.110

vncserver_proxyclient_address=172.173.10.111

[workarounds]

[wsgi]

[xenserver]

[xvp]

7.2.2 Start the services

[root@compute ~]# systemctl restart openstack-nova-compute.service

[root@compute ~]# systemctl enable neutron-linuxbridge-agent.service --now

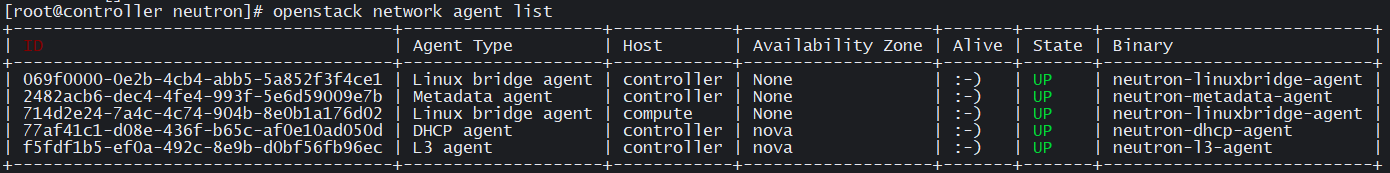

7.2.3 Verify on the controller node

[root@controller ~]# source admin-openrc.sh

[root@controller ~]# openstack network agent list

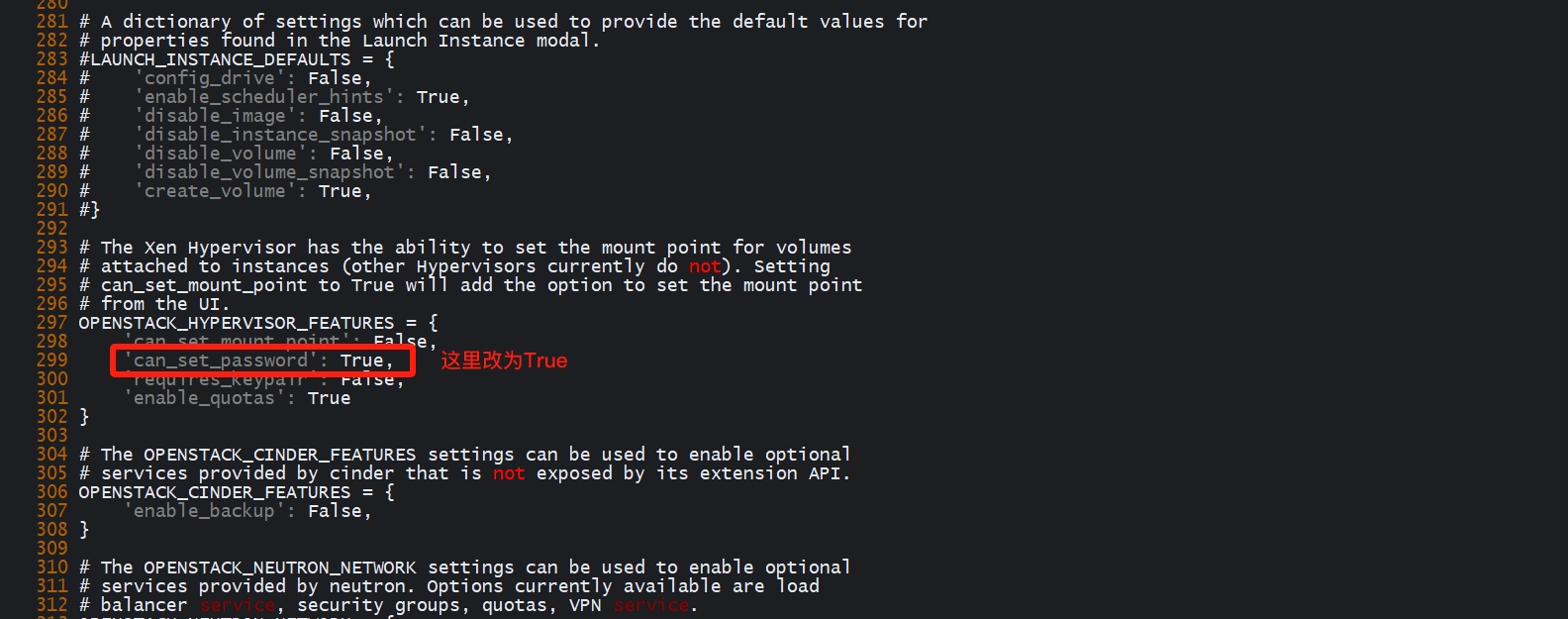

8. Dashboard component: horizon

Reference: OpenStack Docs: Install and configure for Red Hat Enterprise Linux and CentOS

8.1 Installation and configuration

1. Install the package on the controller node

[root@controller neutron]# yum -y install openstack-dashboard

2. Back up the configuration file

[root@controller ~]# cp /etc/openstack-dashboard/local_settings /etc/openstack-dashboard/local_settings.bak

3. Configure the local_settings file

38 ALLOWED_HOSTS = ['*',]

64 OPENSTACK_API_VERSIONS = {

65 "data-processing": 1.1,

66 "identity": 3,

67 "image": 2,

68 "volume": 2,

69 "compute": 2,

70 }

75 OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

97 OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = 'Default'

153 SESSION_ENGINE = 'django.contrib.sessions.backends.cache' ## this line does not exist by default; add it yourself

154 CACHES = {

155 'default': {

156 'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

157 'LOCATION': 'controller:11211', ## store sessions in memcached on the controller

158 },

159 }

# After configuring the block above, comment out the block below

161 #CACHES = {

162 # 'default': {

163 # 'BACKEND': 'django.core.cache.backends.locmem.LocMemCache',

164 # },

165 #}

184 OPENSTACK_HOST = "controller"

185 OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST # use v3 rather than v2.0

186 OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user" # default role

# Enable everything (set all values to True), since this guide uses networking option 2

313 OPENSTACK_NEUTRON_NETWORK = {

314 'enable_router': True,

315 'enable_quotas': True,

316 'enable_ipv6': True,

317 'enable_distributed_router': True,

318 'enable_ha_router': True,

319 'enable_fip_topology_check': True,

453 TIME_ZONE = "Asia/Shanghai" # set the time zone to Shanghai

4. Configure the dashboard's httpd configuration snippet

[root@controller ~]# vi /etc/httpd/conf.d/openstack-dashboard.conf

Add the following as line 4:

4 WSGIApplicationGroup %{GLOBAL}

Check:

[root@controller ~]# cat /etc/httpd/conf.d/openstack-dashboard.conf

WSGIDaemonProcess dashboard

WSGIProcessGroup dashboard

WSGISocketPrefix run/wsgi

WSGIApplicationGroup %{GLOBAL}

WSGIScriptAlias /dashboard /usr/share/openstack-dashboard/openstack_dashboard/wsgi/django.wsgi

Alias /dashboard/static /usr/share/openstack-dashboard/static

<Directory /usr/share/openstack-dashboard/openstack_dashboard/wsgi>

Options All

AllowOverride All

Require all granted

</Directory>

<Directory /usr/share/openstack-dashboard/static>

Options All

AllowOverride All

Require all granted

</Directory>

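The directive on line 4 is not in the official CentOS documentation, but it is in the Ubuntu one. Add it here, otherwise the dashboard will not be reachable later.

8.2 Start the services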

[root@controller ~]# systemctl restart httpd memcached

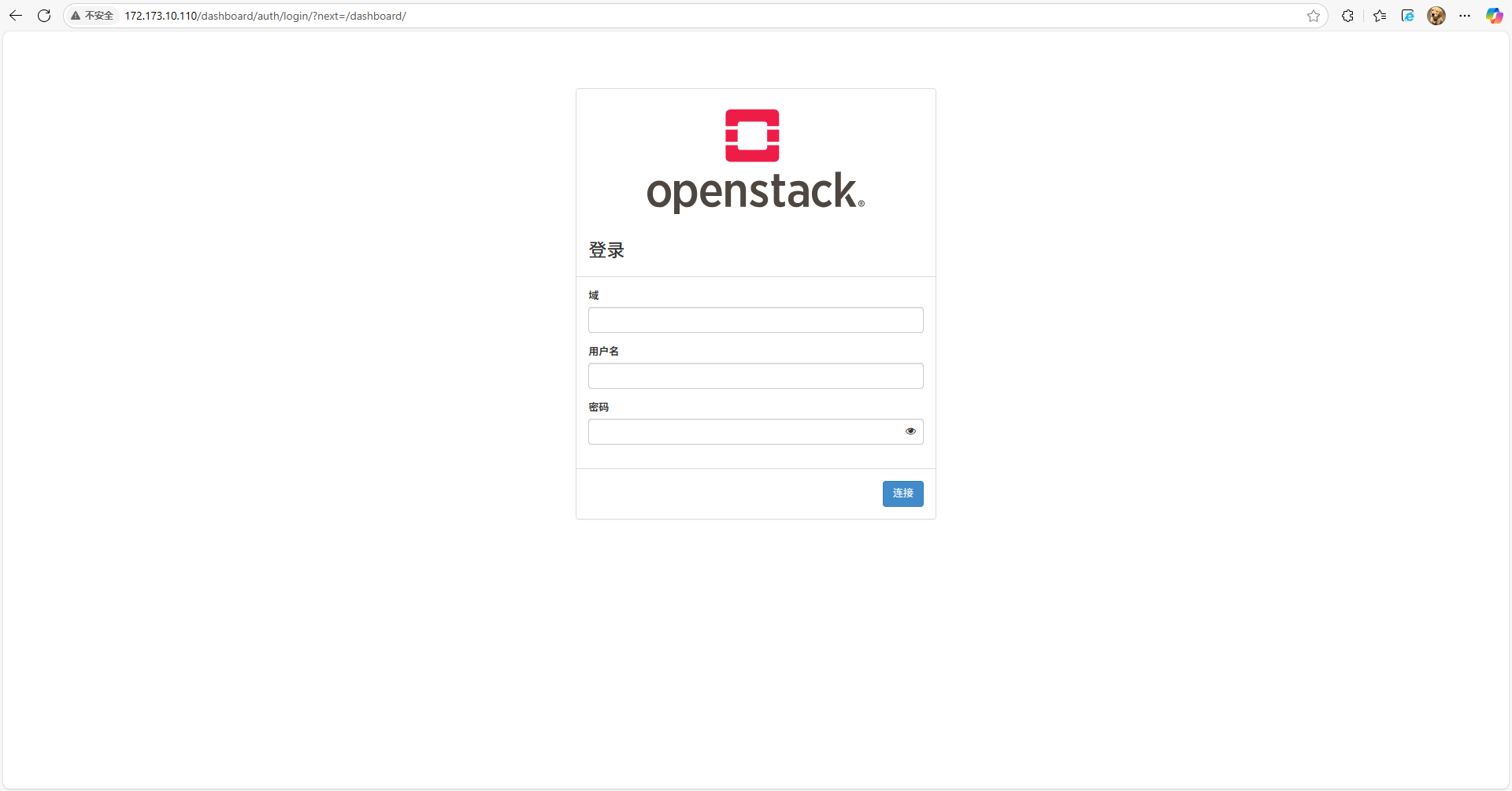

Log in to verify:

http://<IP address>/dashboard/auth/login/?next=/dashboard/

Domain: default

User name: admin

Password: guojie.com

9. Block storage component: cinder

Reference: https://docs.openstack.org/cinder/pike/install/

9.1 Deploying cinder on the controller node

OpenStack Docs: Install and configure controller node

9.1.1 Database configuration

[root@controller ~]# mysql -uroot -p

MariaDB [(none)]> CREATE DATABASE cinder;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'guojie.com';

MariaDB [(none)]> FLUSH PRIVILEGES;

MariaDB [(none)]> QUIT;

# Verify

[root@controller ~]# mysql -h controller -ucinder -pguojie.com -e 'show databases';

+--------------------+

| Database |

+--------------------+

| cinder |

| information_schema |

+--------------------+

9.1.2 Permission configuration

1. Create the user

[root@controller ~]# source admin-openrc.sh

[root@controller ~]# openstack user create --domain default --password guojie.com cinder

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 7f943f4a425840c98749a23eefa0ad69 |

| name | cinder |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Verify

[root@controller ~]# openstack user list

+----------------------------------+-----------+

| ID | Name |

+----------------------------------+-----------+

| 281ca4a010a44d56bc3ad29ccadf15d8 | glance |

| 31f7b758bfe64f16b47d3f934b8ff94b | nova |

| 4093e7a9f5454322ba9987581b564fe4 | admin |

| 4ff73c3f796f424d94ad92de74132525 | placement |

| 7f943f4a425840c98749a23eefa0ad69 | cinder |

| be3796e423e0417d8f71f7fc640e5b48 | neutron |

| e05800abc0c64c3ea73db2557dda4cb7 | demo |

+----------------------------------+-----------+

2. Add the cinder user to the service project and grant it the admin role

[root@controller ~]# openstack role add --project service --user cinder admin

3. Create the cinderv2 and cinderv3 services

[root@controller ~]# openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | b84b5a11e32a4d95a5ed2a5107defbe3 |

| name | cinderv2 |

| type | volumev2 |

+-------------+----------------------------------+

[root@controller ~]# openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | b798707278a74512bc9df7be2e9dee17 |

| name | cinderv3 |

| type | volumev3 |

+-------------+----------------------------------+

4. Create the endpoint records for cinder

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(project_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | 16afcec244344e95a2da6d328264c18e |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b84b5a11e32a4d95a5ed2a5107defbe3 |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://controller:8776/v2/%(project_id)s |

+--------------+------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(project_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | bcf936a4a99c46efbaebd3ec05e52827 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b84b5a11e32a4d95a5ed2a5107defbe3 |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://controller:8776/v2/%(project_id)s |

+--------------+------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(project_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | 35994ff3bdb64c67bf4137526cc2b38c |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b84b5a11e32a4d95a5ed2a5107defbe3 |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://controller:8776/v2/%(project_id)s |

+--------------+------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volumev3 public http://controller:8776/v3/%\(project_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | b3e31a89b29d444ba82e88a3a9a45167 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b798707278a74512bc9df7be2e9dee17 |

| service_name | cinderv3 |

| service_type | volumev3 |

| url | http://controller:8776/v3/%(project_id)s |

+--------------+------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volumev3 internal http://controller:8776/v3/%\(project_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | 5d400b096e2a42f49e558e9c07ffaaea |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b798707278a74512bc9df7be2e9dee17 |

| service_name | cinderv3 |

| service_type | volumev3 |

| url | http://controller:8776/v3/%(project_id)s |

+--------------+------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volumev3 admin http://controller:8776/v3/%\(project_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | bf214a429d104a6aa829544a1dfaeb39 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b798707278a74512bc9df7be2e9dee17 |

| service_name | cinderv3 |

| service_type | volumev3 |

| url | http://controller:8776/v3/%(project_id)s |

+--------------+------------------------------------------+
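The six cinder endpoint commands above follow one pattern: two API versions times three interfaces. The following is a minimal sketch that only prints the commands; the function name cinder_endpoint_commands is hypothetical. It also shows that single quotes can be used around the URL in place of the backslash escapes for the parentheses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the six "openstack endpoint create" commands for
# the volumev2 and volumev3 services. Nothing is executed here.
cinder_endpoint_commands() {
    local ver iface
    for ver in v2 v3; do
        for iface in public internal admin; do
            # Single quotes keep %(project_id)s literal for the shell.
            printf "openstack endpoint create --region RegionOne volume%s %s '%s'\n" \
                "$ver" "$iface" "http://controller:8776/$ver/%(project_id)s"
        done
    done
}

cinder_endpoint_commands
```

After reviewing the printed commands, they could be run by piping the output to sh in a shell where admin-openrc.sh has been sourced.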

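Verify, for example by running openstack endpoint list.

9.1.3 Software installation and configuration

1. Install the openstack-cinder package on the controller node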

[root@controller ~]# yum -y install openstack-cinder

2. Back up the configuration file

[root@controller ~]# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak

3. Configure cinder.conf

[root@controller ~]# vi /etc/cinder/cinder.conf

# Change the following settings

283 my_ip = 172.173.10.110

288 glance_api_servers = http://controller:9292 # not in the official documentation; add it to connect cinder to glance

400 auth_strategy = keystone

1212 transport_url = rabbit://openstack:guojie.com@controller

1219 rpc_backend = rabbit

3782 connection = mysql+pymysql://cinder:guojie.com@controller/cinder

4009 [keystone_authtoken] # already present, no change needed

4010 auth_uri = http://controller:5000

4011 auth_url = http://controller:35357

4012 memcached_servers = controller:11211

4013 auth_type = password

4014 project_domain_name = default

4015 user_domain_name = default

4016 project_name = service

4017 username = cinder

4018 password = guojie.com # change to the password set in the permission configuration

4297 lock_path = /var/lib/cinder/tmp

Verify:

[root@controller ~]# egrep -v '^#|^$' /etc/cinder/cinder.conf

[DEFAULT]

my_ip = 172.173.10.110

glance_api_servers = http://controller:9292

auth_strategy = keystone

transport_url = rabbit://openstack:guojie.com@controller

rpc_backend = rabbit

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database]

connection = mysql+pymysql://cinder:guojie.com@controller/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = guojie.com

[matchmaker_redis]

[nova]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[profiler]

[ssl]

4. Configure nova.conf

[root@controller ~]# vi /etc/nova/nova.conf

# Find [cinder] and add os_region_name = RegionOne below it

[cinder]

os_region_name = RegionOne

5. Restart the openstack-nova-api service

[root@controller ~]# systemctl restart openstack-nova-api.service

6. Populate the database

[root@controller ~]# su -s /bin/sh -c "cinder-manage db sync" cinder

Option "logdir" from group "DEFAULT" is deprecated. Use option "log-dir" from group "DEFAULT".

[root@controller ~]# mysql -h controller -u cinder -pguojie.com -e 'use cinder;show tables' |wc -l

36

9.1.4 Start the services

Start the services on the controller node:

[root@controller ~]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service --now

Verify:

[root@controller ~]# netstat -ntlup |grep :8776

tcp 0 0 0.0.0.0:8776 0.0.0.0:* LISTEN 21454/python2

[root@controller ~]# openstack volume service list

+------------------+------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+------------+------+---------+-------+----------------------------+

| cinder-scheduler | controller | nova | enabled | up | 2025-05-30T01:56:06.000000 |

+------------------+------------+------+---------+-------+----------------------------+

9.2 Deploying cinder on the storage node

A second disk is added to the storage node here for demonstration:

[root@cindre ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part /

sdb 8:16 0 50G 0 disk

sr0 11:0 1 1024M 0 rom

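# Here the new disk is sdb

Reference: OpenStack Docs: Install and configure a storage node

9.2.1 Installation and configuration

1. Install the LVM packages on the storage node

[root@cindre ~]# yum -y install lvm2 device-mapper-persistent-data

2. Start the service

[root@cindre ~]# systemctl enable lvm2-lvmetad.service --now

3. Create the LVM physical volume and volume group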

[root@cindre ~]# pvcreate /dev/sdb

Physical volume "/dev/sdb" successfully created.

[root@cindre ~]# vgcreate cinder_lvm /dev/sdb

Volume group "cinder_lvm" successfully created

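Verify (if you chose LVM partitioning when installing the operating system, additional entries are listed here; this system was installed with standard partitions, so only the new volume group appears):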

[root@cindre ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sdb cinder_lvm lvm2 a-- <50.00g <50.00g

[root@cindre ~]# vgs

VG #PV #LV #SN Attr VSize VFree

cinder_lvm 1 0 0 wz--n- <50.00g <50.00g

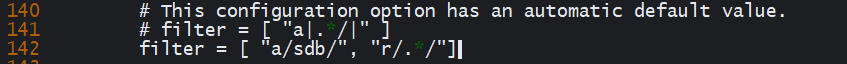

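4. Configure the LVM filter

[root@cindre ~]# vi /etc/lvm/lvm.conf

# Insert the following filter at line 142. It accepts sdb and rejects all other disks, so the operating system disk is not affected.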

142 filter = [ "a/sdb/", "r/.*/"]
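To see why this filter accepts only sdb: LVM evaluates the patterns in order, the first pattern that matches a device decides (a for accept, r for reject), and a device that matches no pattern is accepted. The following is a simplified sketch of that rule, not LVM's own implementation; lvm_filter_decision is a hypothetical function, it assumes / as the pattern delimiter, and it tests a single device path only.

```shell
#!/usr/bin/env bash
# lvm_filter_decision DEVICE PATTERN...  (hypothetical, simplified model)
# Each PATTERN is a/REGEX/ or r/REGEX/; the first matching pattern wins.
lvm_filter_decision() {
    local dev=$1 pat action regex
    shift
    for pat in "$@"; do
        action=${pat%"${pat#?}"}           # first character: a or r
        regex=${pat#??}; regex=${regex%?}  # strip the leading a/ (or r/) and the trailing /
        if printf '%s\n' "$dev" | grep -Eq -- "$regex"; then
            if [ "$action" = "a" ]; then echo accept; else echo reject; fi
            return 0
        fi
    done
    echo accept   # no pattern matched: the device is accepted
}

# The filter from this guide, applied to the devices shown by lsblk.
for dev in /dev/sdb /dev/sda2 /dev/sr0; do
    printf '%s: %s\n' "$dev" "$(lvm_filter_decision "$dev" 'a/sdb/' 'r/.*/')"
done
```

With the two patterns used here, only /dev/sdb is accepted. If the operating system disk itself used LVM, an accept pattern for it would have to be placed before the final reject.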

5. Install the cinder packages

[root@cindre ~]# yum install openstack-cinder targetcli python-keystone -y

6. Configure cinder.conf

[root@cindre ~]# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak

283 my_ip = 172.173.10.112 # use the storage node's management address here

288 glance_api_servers = http://controller:9292

400 auth_strategy = keystone

404 enabled_backends = lvm

1212 transport_url = rabbit://openstack:guojie.com@controller

1219 rpc_backend = rabbit

3782 connection = mysql+pymysql://cinder:guojie.com@controller/cinder

4009 [keystone_authtoken] # already present, no change needed

4010 auth_uri = http://controller:5000

4011 auth_url = http://controller:35357

4012 memcached_servers = controller:11211

4013 auth_type = password

4014 project_domain_name = default

4015 user_domain_name = default

4016 project_name = service

4017 username = cinder

4018 password = guojie.com # change to the cinder user's password

4297 lock_path = /var/lib/cinder/tmp

# Append the following section at the end of the file

5174 [lvm]

5175 volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

5176 volume_group = cinder_lvm # change to the name of the volume group created earlier

5177 iscsi_protocol = iscsi

5178 iscsi_helper = lioadm

Verify the configuration:

[root@cindre ~]# egrep -v '^$|^#' /etc/cinder/cinder.conf

[DEFAULT]

my_ip = 172.173.10.112

glance_api_servers = http://controller:9292

auth_strategy = keystone

enabled_backends = lvm

transport_url = rabbit://openstack:guojie.com@controller

rpc_backend = rabbit

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database]

connection = mysql+pymysql://cinder:guojie.com@controller/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = guojie.com

[matchmaker_redis]

[nova]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[profiler]

[ssl]

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder_lvm

iscsi_protocol = iscsi

iscsi_helper = lioadm

9.2.2 Start the services

1. Start the services on the cinder storage node

[root@cindre ~]# systemctl enable openstack-cinder-volume.service target.service --now

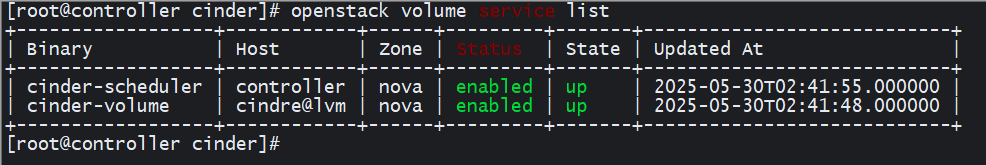

2. Verify on the controller node

[root@controller ~]# openstack volume service list

完成之后dashboard就会多出个卷,如果没有就退出重新登录。

十、云平台简单使用

参考:启动一个实例 — Installation Guide 文档

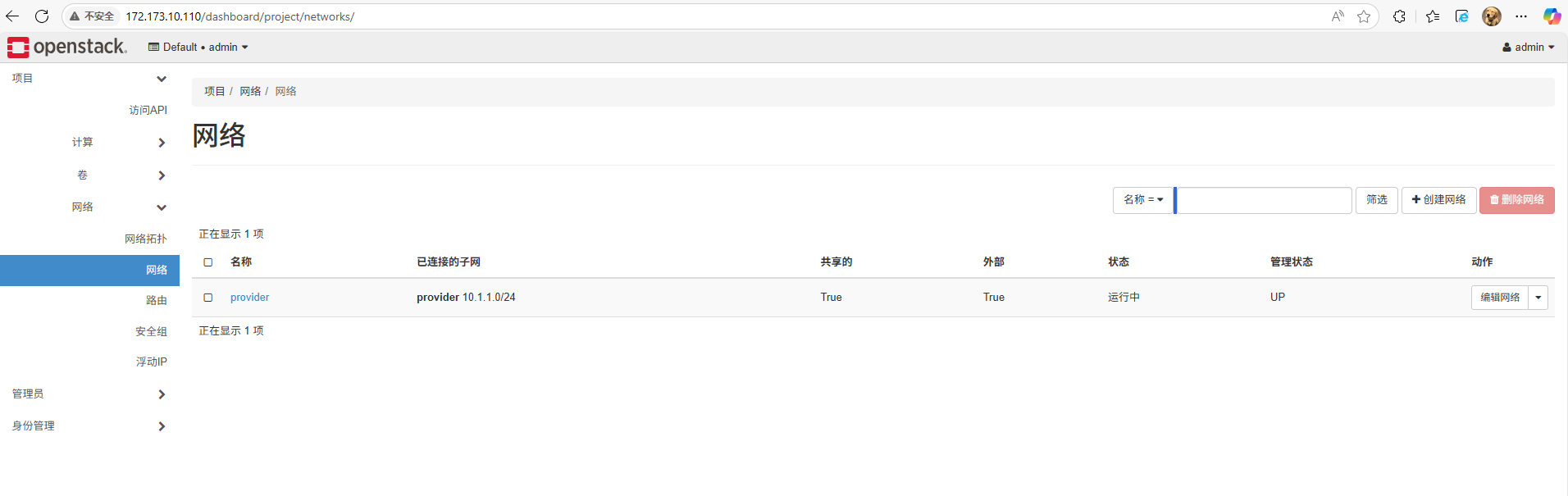

10.1 网络创建

[root@controller ~]# openstack network list

[root@controller ~]# openstack network create --share --external --provider-physical-network provider --provider-network-type flat provider

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2025-05-30T02:48:55Z |

| description | |

| dns_domain | None |

| id | cbd39bfd-28f2-455c-9b85-20cf78263797 |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| mtu | 1500 |

| name | provider |

| port_security_enabled | True |

| project_id | fbe4fead10f94b8187e7661246c0f5e6 |

| provider:network_type | flat |

| provider:physical_network | provider |

| provider:segmentation_id | None |

| qos_policy_id | None |

| revision_number | 3 |

| router:external | External |

| segments | None |

| shared | True |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2025-05-30T02:48:55Z |

+---------------------------+--------------------------------------+

[root@controller ~]# openstack network list

+--------------------------------------+----------+---------+

| ID | Name | Subnets |

+--------------------------------------+----------+---------+

| cbd39bfd-28f2-455c-9b85-20cf78263797 | provider | |

+--------------------------------------+----------+---------+

10.2 Create the subnet

The subnet range matches the network that the eth1 NIC is attached to:

[root@controller ~]# openstack subnet create --network provider --allocation-pool start=10.1.1.100,end=10.1.1.250 --dns-nameserver 223.5.5.5 --gateway 10.1.1.254 --subnet-range 10.1.1.0/24 provider

+-------------------------+--------------------------------------+

| Field | Value |

+-------------------------+--------------------------------------+

| allocation_pools | 10.1.1.100-10.1.1.250 |

| cidr | 10.1.1.0/24 |

| created_at | 2025-05-30T02:53:55Z |

| description | |

| dns_nameservers | 223.5.5.5 |

| enable_dhcp | True |

| gateway_ip | 10.1.1.254 |

| host_routes | |

| id | e705a12f-aeb2-4414-aafe-1a676a8c87f0 |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | provider |

| network_id | cbd39bfd-28f2-455c-9b85-20cf78263797 |

| project_id | fbe4fead10f94b8187e7661246c0f5e6 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2025-05-30T02:53:55Z |

| use_default_subnet_pool | None |

+-------------------------+--------------------------------------+

Verify:

[root@controller ~]# openstack network list

+--------------------------------------+----------+--------------------------------------+

| ID | Name | Subnets |

+--------------------------------------+----------+--------------------------------------+

| cbd39bfd-28f2-455c-9b85-20cf78263797 | provider | e705a12f-aeb2-4414-aafe-1a676a8c87f0 |

+--------------------------------------+----------+--------------------------------------+

[root@controller ~]# openstack subnet list

+--------------------------------------+----------+--------------------------------------+-------------+

| ID | Name | Network | Subnet |

+--------------------------------------+----------+--------------------------------------+-------------+

| e705a12f-aeb2-4414-aafe-1a676a8c87f0 | provider | cbd39bfd-28f2-455c-9b85-20cf78263797 | 10.1.1.0/24 |

+--------------------------------------+----------+--------------------------------------+-------------+
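The allocation pool and the gateway have to fall inside the subnet range, otherwise the subnet is rejected or instances end up unreachable. Below is a minimal sketch of that check in plain shell arithmetic, using the addresses from the command above. The helper names `ip_to_int` and `ip_in_cidr` are illustrative and are not part of any OpenStack tool.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 if address $1 lies inside CIDR $2 (for example 10.1.1.0/24).
ip_in_cidr() {
    net=${2%/*}
    prefix=${2#*/}
    mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Pool start, pool end, and gateway from the subnet create command.
for addr in 10.1.1.100 10.1.1.250 10.1.1.254; do
    if ip_in_cidr "$addr" 10.1.1.0/24; then
        echo "$addr is inside 10.1.1.0/24"
    else
        echo "$addr is OUTSIDE 10.1.1.0/24"
    fi
done
```

All three addresses should be reported as inside the range. If you change the subnet to suit your own eth1 network, rerun the loop with your values first.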

10.3 Create an instance flavor

[root@controller ~]# openstack flavor list

[root@controller ~]# openstack flavor create --id 0 --vcpus 1 --ram 512 --disk 1 m1.nano

+----------------------------+---------+

| Field | Value |

+----------------------------+---------+

| OS-FLV-DISABLED:disabled | False |

| OS-FLV-EXT-DATA:ephemeral | 0 |

| disk | 1 |

| id | 0 |

| name | m1.nano |

| os-flavor-access:is_public | True |

| properties | |

| ram | 512 |

| rxtx_factor | 1.0 |

| swap | |

| vcpus | 1 |

+----------------------------+---------+

[root@controller ~]# openstack flavor list

+----+---------+-----+------+-----------+-------+-----------+

| ID | Name | RAM | Disk | Ephemeral | VCPUs | Is Public |

+----+---------+-----+------+-----------+-------+-----------+

| 0 | m1.nano | 512 | 1 | 0 | 1 | True |

+----+---------+-----+------+-----------+-------+-----------+

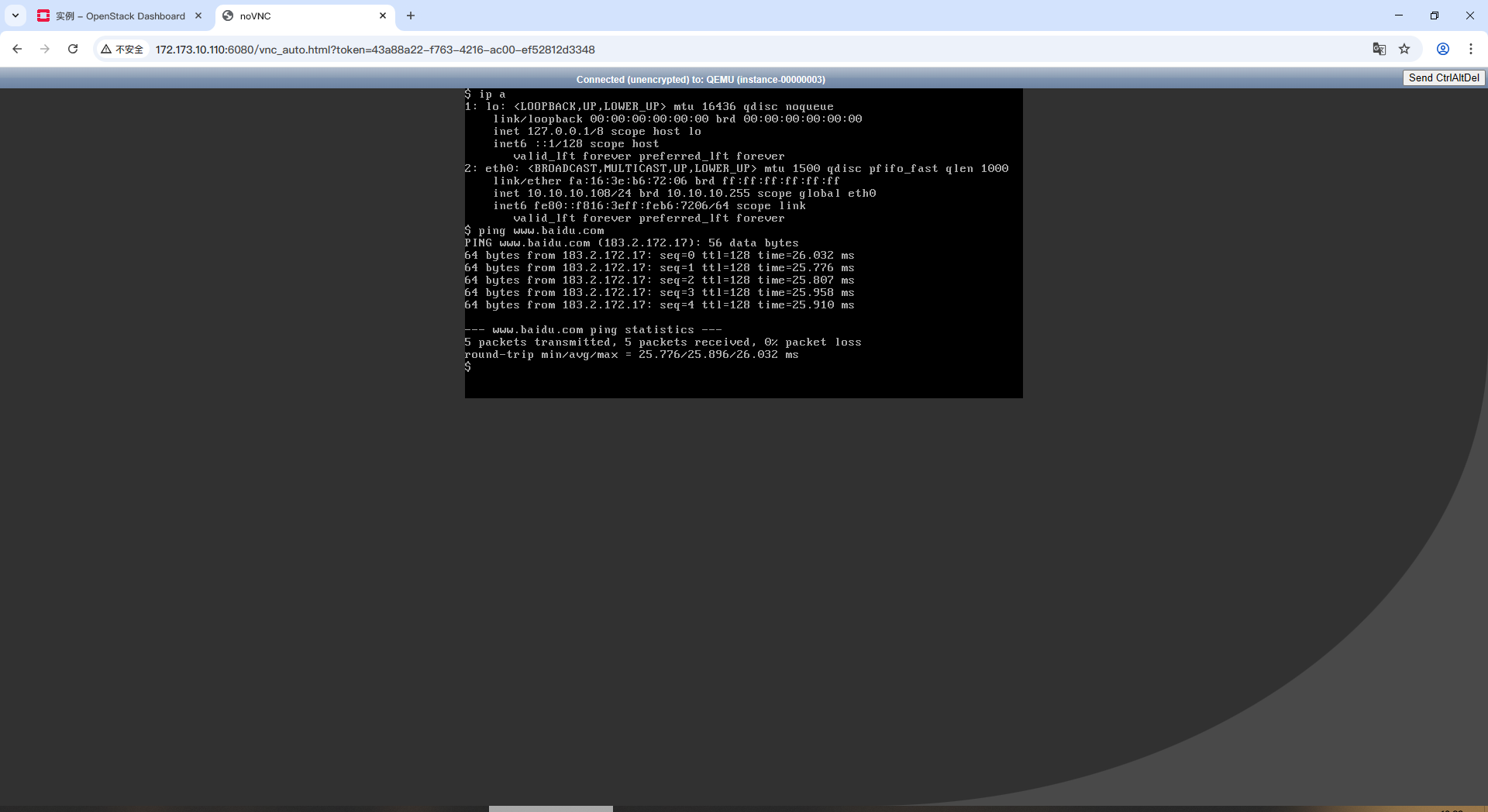

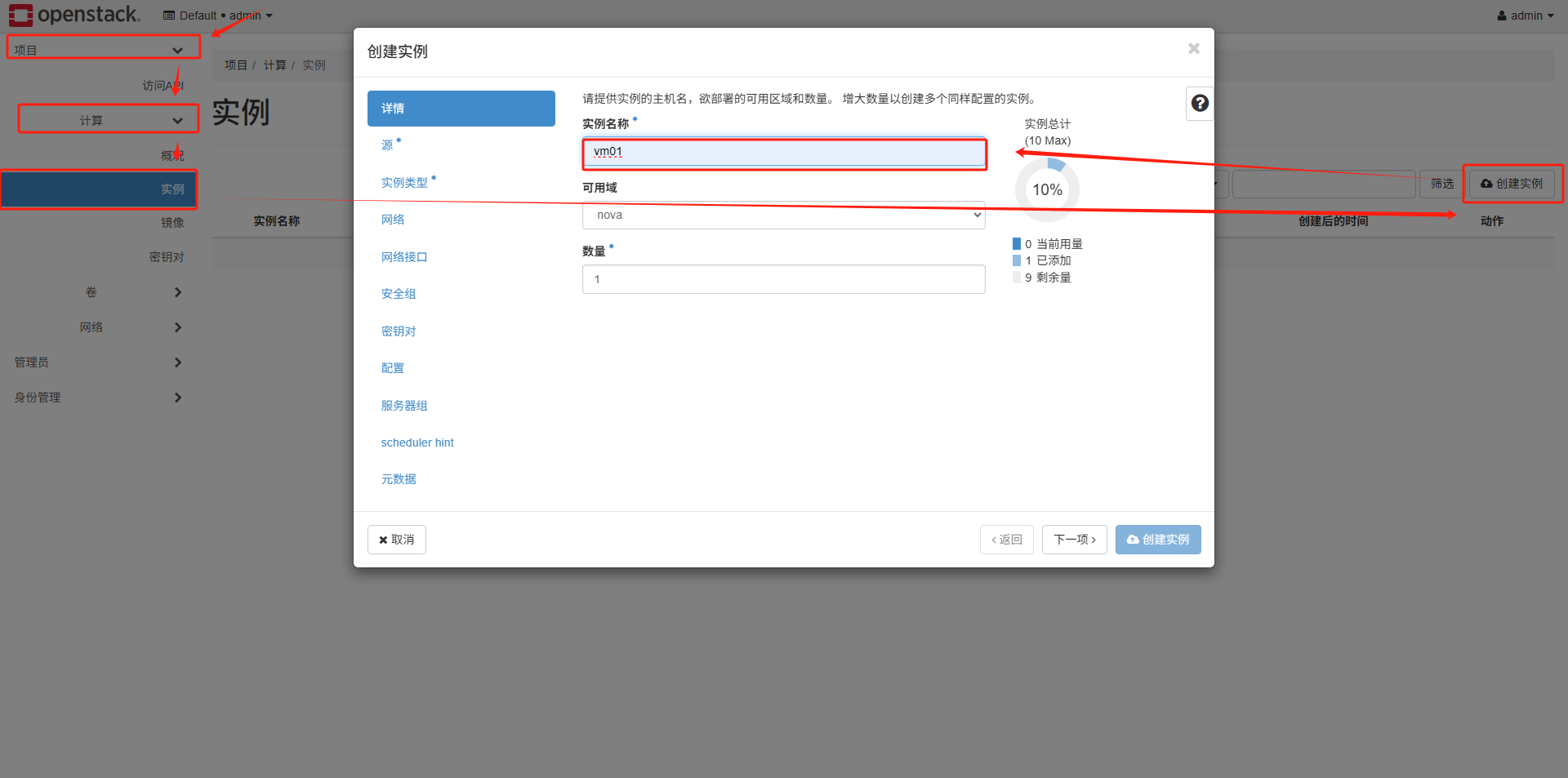

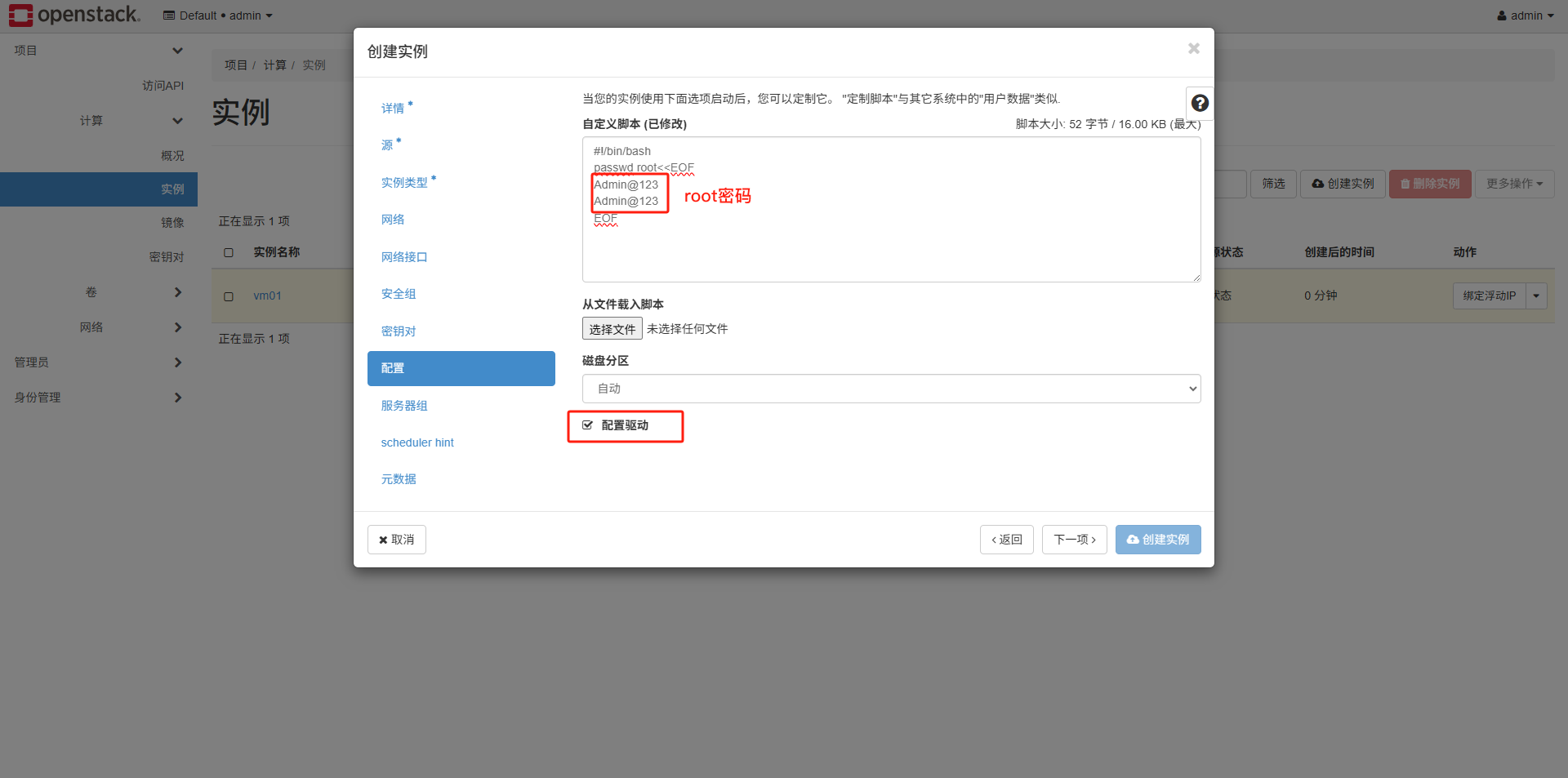

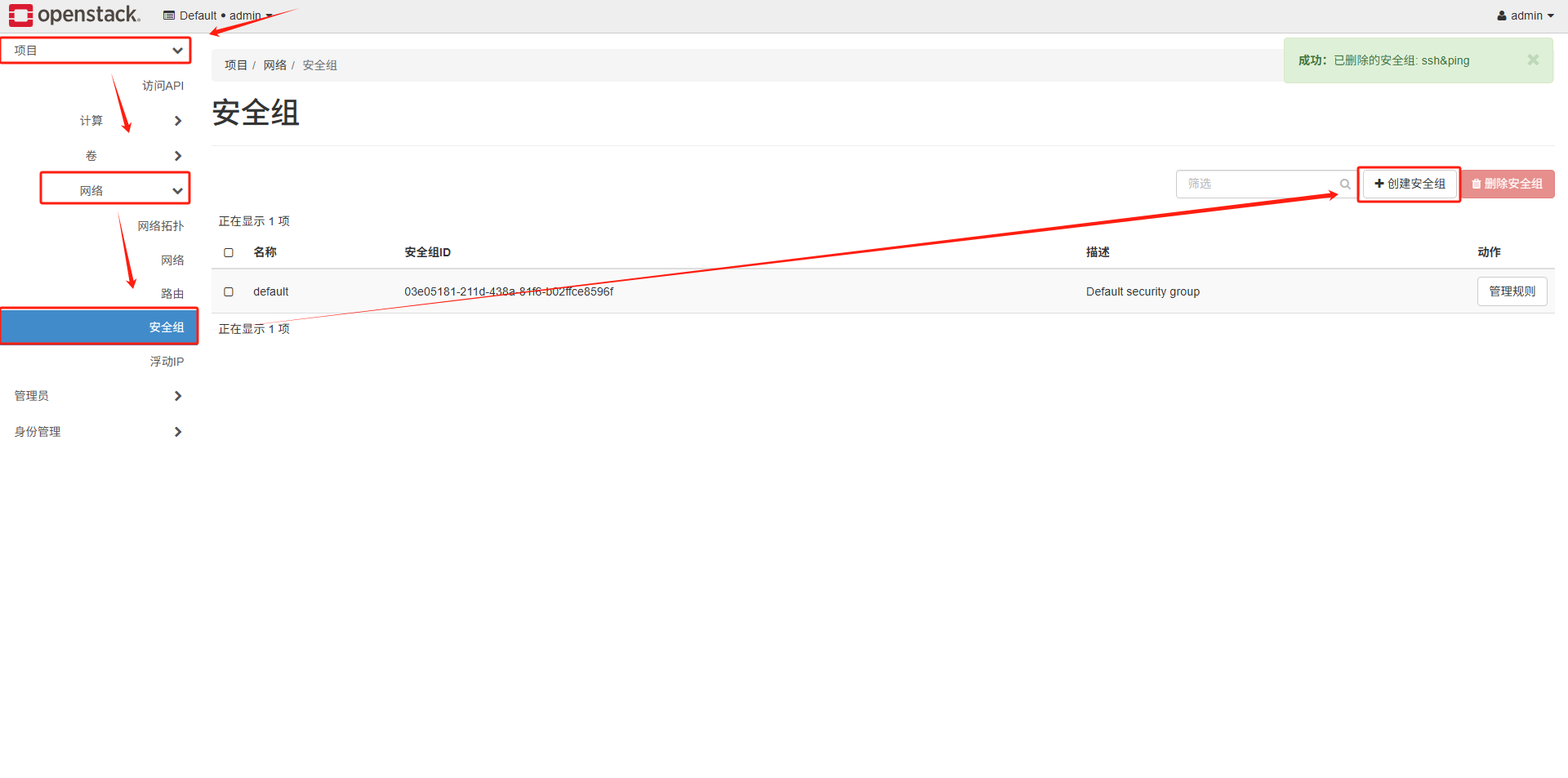
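Running `openstack flavor create` a second time with the same name or ID fails, which is why the flavor list is checked first. If this step may be repeated, the check and the creation can be combined. The following is a sketch only; `ensure_flavor` is a hypothetical helper name, and it assumes the admin credentials are already loaded in the current shell.

```shell
# Create the flavor only when it does not exist yet, so the step can be re-run.
# Usage: ensure_flavor NAME [flavor create options...]
ensure_flavor() {
    name=$1
    shift
    if openstack flavor show "$name" >/dev/null 2>&1; then
        echo "flavor $name already exists"
    else
        openstack flavor create "$@" "$name"
    fi
}

# With the values from this section (uncomment on the controller):
# ensure_flavor m1.nano --id 0 --vcpus 1 --ram 512 --disk 1
```

Note that `openstack flavor show` also fails when authentication fails. In that case the function falls through to `flavor create`, which then reports the real error.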

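10.4 Create a virtual machine instance

Instances should not normally be managed as the admin user; we use it here only for a quick test.

1. Check the available images, flavors, and networks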

[root@controller ~]# openstack image list

+--------------------------------------+-----------------+--------+

| ID | Name | Status |

+--------------------------------------+-----------------+--------+

| 03a823ea-6883-4a4b-9629-1b4839f0644a | cirros | active |

+--------------------------------------+-----------------+--------+

[root@controller ~]# openstack network list

+--------------------------------------+----------+--------------------------------------+

| ID | Name | Subnets |

+--------------------------------------+----------+--------------------------------------+

| cbd39bfd-28f2-455c-9b85-20cf78263797 | provider | e705a12f-aeb2-4414-aafe-1a676a8c87f0 |

+--------------------------------------+----------+--------------------------------------+

[root@controller ~]# openstack flavor list

+----+---------+-----+------+-----------+-------+-----------+

| ID | Name | RAM | Disk | Ephemeral | VCPUs | Is Public |

+----+---------+-----+------+-----------+-------+-----------+

| 0 | m1.nano | 512 | 1 | 0 | 1 | True |

+----+---------+-----+------+-----------+-------+-----------+

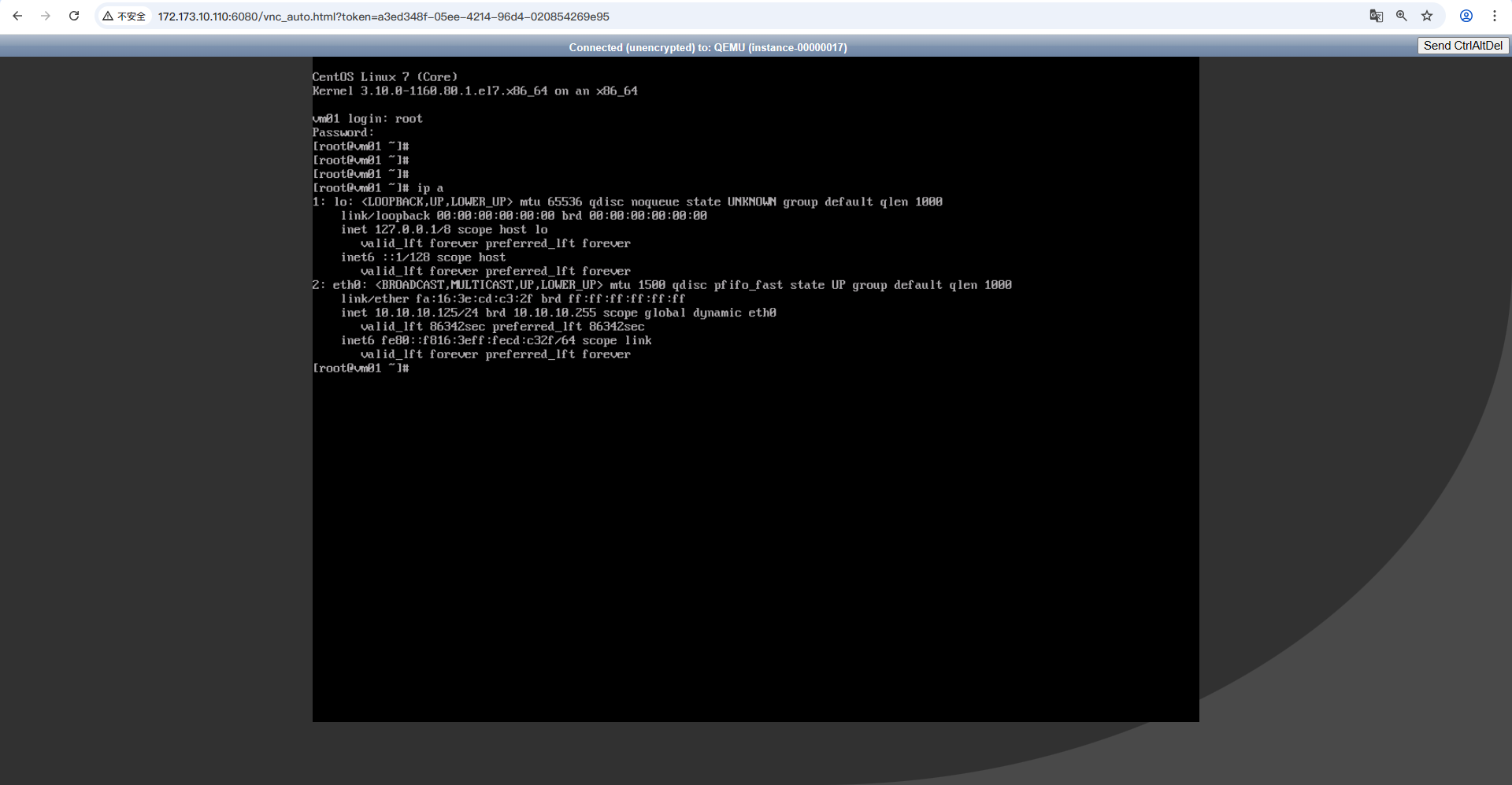

2. Create the instance

[root@controller ~]# openstack server create --flavor m1.nano --image cirros --nic net-id=cbd39bfd-28f2-455c-9b85-20cf78263797 vm01

+-------------------------------------+-----------------------------------------------+

| Field | Value |

+-------------------------------------+-----------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | |

| OS-EXT-SRV-ATTR:host | None |

| OS-EXT-SRV-ATTR:hypervisor_hostname | None |

| OS-EXT-SRV-ATTR:instance_name | |

| OS-EXT-STS:power_state | NOSTATE |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | None |

| OS-SRV-USG:terminated_at | None |

| accessIPv4 | |

| accessIPv6 | |

| addresses | |

| adminPass | wJ5piBzSJPEh |

| config_drive | |

| created | 2025-05-30T03:05:07Z |

| flavor | m1.nano (0) |

| hostId | |

| id | ce549054-5159-4ec4-8f4b-8195e8879c71 |

| image | cirros (03a823ea-6883-4a4b-9629-1b4839f0644a) |

| key_name | None |

| name | vm01 |

| progress | 0 |

| project_id | fbe4fead10f94b8187e7661246c0f5e6 |

| properties | |

| security_groups | name='default' |

| status | BUILD |

| updated | 2025-05-30T03:05:07Z |

| user_id | 4093e7a9f5454322ba9987581b564fe4 |

| volumes_attached | |

+-------------------------------------+-----------------------------------------------+
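The network UUID passed to `--nic net-id=` is different in every deployment, so copying it by hand from the list output is error-prone. One way to avoid that is to resolve the ID from the network name. This is a sketch; `network_id` is a hypothetical helper name, and it assumes a network called `provider` exists as created in 10.1.

```shell
# Resolve a network name to its ID instead of pasting the UUID by hand.
network_id() {
    openstack network show "$1" -f value -c id
}

# With the names from this section (uncomment on the controller):
# openstack server create --flavor m1.nano --image cirros \
#     --nic net-id="$(network_id provider)" vm01
```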

[root@controller ~]# openstack server list

+--------------------------------------+------+--------+---------------------+--------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+------+--------+---------------------+--------+---------+

| ce549054-5159-4ec4-8f4b-8195e8879c71 | vm01 | ACTIVE | provider=10.1.1.113 | cirros | m1.nano |

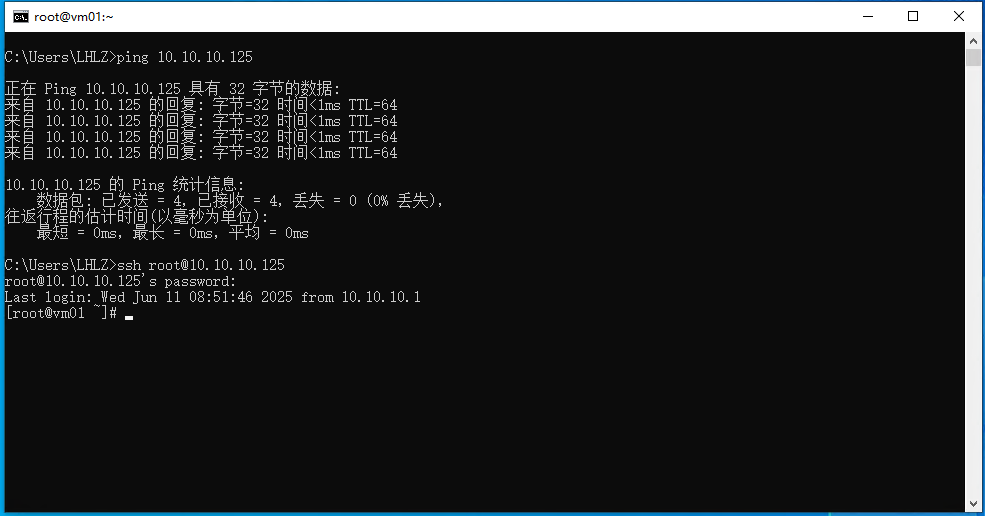

+--------------------------------------+------+--------+---------------------+--------+---------+
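Right after `openstack server create` returns, the instance is still in the BUILD state, and `openstack server list` shows ACTIVE only once scheduling and boot have finished. A small polling loop makes that wait explicit. This is a sketch: `wait_for_active` is a hypothetical helper, and the 120-second timeout and 5-second interval are assumed defaults, not values from this guide.

```shell
# Poll the instance status until it is ACTIVE, reaches ERROR, or times out.
# Usage: wait_for_active SERVER [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
wait_for_active() {
    server=$1
    timeout=${2:-120}    # total seconds to wait (assumed default)
    interval=${3:-5}     # seconds between polls (assumed default)
    elapsed=0
    status=unknown
    while [ "$elapsed" -lt "$timeout" ]; do
        status=$(openstack server show "$server" -f value -c status)
        case $status in
            ACTIVE) echo "$server is ACTIVE"; return 0 ;;
            ERROR)  echo "$server entered ERROR state" >&2; return 1 ;;
        esac
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
    echo "timed out waiting for $server (last status: $status)" >&2
    return 1
}

# With the instance from this section (uncomment on the controller):
# wait_for_active vm01
```

Alternatively, `openstack server create` accepts a `--wait` option that blocks until the build completes. The loop is useful when the instance was created earlier or by another tool.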