VGG: Networks Using Blocks


I. Implementing the VGG architecture by hand

import torch
from torch import nn

# 1. VGG block
def VGG_block(nums_conv, in_chanels, out_chanels):  # number of conv layers, input channels, output channels
    layers = []  # layers inside one VGG block
    for _ in range(nums_conv):
        layers.append(nn.Conv2d(in_chanels, out_chanels, 3, padding=1))
        layers.append(nn.ReLU())
        in_chanels = out_chanels  # the next layer's input equals this layer's output
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
    return nn.Sequential(*layers)

# The VGG network is made up of several VGG blocks
def VGG_net(conv_info):  # one (number of conv layers, output channels) pair per block
    conv_blocks = []
    in_chanels = 1  # input channels start at 1
    for (nums_conv, out_chanels) in conv_info:
        conv_blocks.append(VGG_block(nums_conv, in_chanels, out_chanels))
        in_chanels = out_chanels  # the next block's input equals this block's output
    return nn.Sequential(
        *conv_blocks, nn.Flatten(),
        nn.Linear(7 * 7 * out_chanels, 4096),
        nn.ReLU(),
        nn.Dropout(p=0.5),
        nn.Linear(4096, 4096),
        nn.ReLU(),
        nn.Dropout(p=0.5),
        nn.Linear(4096, 10)
    )

conv_info = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))
net = VGG_net(conv_info)

# Pass a dummy input through the network and print each layer's output shape
X = torch.randn((1, 1, 224, 224))
for layer in net:
    X = layer(X)
    print(layer.__class__.__name__, X.shape)

Code analysis:

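The VGG architecture consists of several VGG blocks followed by three fully connected layers.

The blocks do not have to be identical. Each VGG block consists of several 3×3 convolutional layers with padding=1, followed by one 2×2 max-pooling layer with stride=2.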
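The size arithmetic behind this block design can be sketched in plain Python. The helper `out_size` is a hypothetical name introduced here for illustration, not a PyTorch function; it applies the standard output-size formula floor((n + 2p - k) / s) + 1 for a window of size k, stride s, and padding p. Under these assumptions, a 3×3 convolution with padding=1 leaves the height and width unchanged, and each 2×2 pooling with stride=2 halves them, so a 224×224 input ends at 7×7 after five blocks, which is where the `7*7*out_chanels` input size of the first fully connected layer comes from.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Output length along one spatial axis for a convolution or pooling window."""
    return (n + 2 * padding - kernel) // stride + 1

size = 224  # input height/width used in this post
for block in range(1, 6):  # five VGG blocks
    # 3x3 convolution with padding=1: size is unchanged, however many are stacked
    size = out_size(size, kernel=3, stride=1, padding=1)
    # 2x2 max pooling with stride=2: size is halved
    size = out_size(size, kernel=2, stride=2)
    print(f"after block {block}: {size}x{size}")
```

Because the convolutions preserve the spatial size, the number of convolutional layers per block can vary freely without changing the shape that reaches the fully connected layers.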

(1) VGG block

def VGG_block(nums_conv, in_chanels, out_chanels):  # number of conv layers, input channels, output channels
    layers = []  # layers inside one VGG block
    for _ in range(nums_conv):
        layers.append(nn.Conv2d(in_chanels, out_chanels, 3, padding=1))
        layers.append(nn.ReLU())
        in_chanels = out_chanels  # the next layer's input equals this layer's output
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
    return nn.Sequential(*layers)

(2) VGG network

def VGG_net(conv_info):  # one (number of conv layers, output channels) pair per block
    conv_blocks = []
    in_chanels = 1  # input channels start at 1
    for (nums_conv, out_chanels) in conv_info:
        conv_blocks.append(VGG_block(nums_conv, in_chanels, out_chanels))
        in_chanels = out_chanels  # the next block's input equals this block's output
    return nn.Sequential(  # this part is the same as AlexNet: three fully connected layers
        *conv_blocks, nn.Flatten(),
        nn.Linear(7 * 7 * out_chanels, 4096),
        nn.ReLU(),
        nn.Dropout(p=0.5),
        nn.Linear(4096, 4096),
        nn.ReLU(),
        nn.Dropout(p=0.5),
        nn.Linear(4096, 10)
    )

II. Training the VGG network

import torch

from torch import nn

from d2l import torch as d2l

#1.VGG块

def VGG_block(nums_conv,in_chanels,out_chanels):#卷积层数,输入通道数,输出通道数

layers=[]#一个VGG里面的层

for i in range(nums_conv):

layers.append(

nn.Conv2d(in_chanels,out_chanels,3,padding=1)

)

layers.append(

nn.ReLU()

)

in_chanels=out_chanels#下一层输入等于本层输出

layers.append(

nn.MaxPool2d(kernel_size=2,stride=2)

)

return nn.Sequential(*layers)

#VGG网络由多个VGG块组成

def VGG_net(conv_info):#传入有关每一个块的卷积情况

conv_blocks=[]

in_chanels=1#默认输入通道初始是1

for (nums_conv,out_chanels) in conv_info:

conv_blocks.append(VGG_block(nums_conv,in_chanels,out_chanels))

in_chanels=out_chanels#下一层输入等于本层输出

nn.Flatten()

return nn.Sequential(

*conv_blocks,nn.Flatten(),

nn.Linear(7*7*out_chanels,4096),

nn.ReLU(),

nn.Dropout(p=0.5),

nn.Linear(4096,4096),

nn.ReLU(),

nn.Dropout(p=0.5),

nn.Linear(4096,10)

)

conv_info=((1,64),(1,128),(2,256),(2,512),(2,512))

#因为这个VGG网络层数太多运行太慢,所以把输出通道除4

ratio=4

smallconv_info=[(pair[0],pair[1]//ratio) for pair in conv_info]

net=VGG_net(smallconv_info)

batch_size=128

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size=batch_size,resize=224)#数据集

lr=0.05

nums_epochs=10

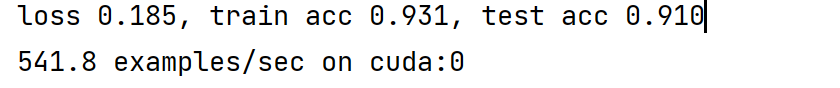

d2l.train_ch6(net,train_iter,test_iter,nums_epochs,lr,d2l.try_gpu())
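To get a rough sense of how much dividing the output channels by 4 shrinks the model, the parameter count can be estimated in plain Python without building the network. This is only a sketch: `vgg_param_count` is a hypothetical helper introduced here, and it assumes the same layout as `VGG_net` in this post (3×3 convolutions with biases, a 224×224 single-channel input, two hidden fully connected layers of width 4096, and 10 output classes).

```python
def vgg_param_count(conv_info, in_channels=1, image_size=224, num_classes=10):
    """Count weights and biases of a VGG-style net laid out like VGG_net in this post."""
    total = 0
    for num_convs, out_channels in conv_info:
        for _ in range(num_convs):
            # 3x3 kernel weights for every input channel, plus one bias, per output channel
            total += (in_channels * 3 * 3 + 1) * out_channels
            in_channels = out_channels
    spatial = image_size // 2 ** len(conv_info)  # each block halves height and width
    fc_sizes = [spatial * spatial * in_channels, 4096, 4096, num_classes]
    for fan_in, fan_out in zip(fc_sizes, fc_sizes[1:]):
        total += (fan_in + 1) * fan_out  # dense weights plus bias
    return total

conv_info = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))
small_conv_info = [(n, c // 4) for n, c in conv_info]

full = vgg_param_count(conv_info)
small = vgg_param_count(small_conv_info)
print(f"full: {full:,} parameters, reduced: {small:,} parameters")
```

In this layout the first fully connected layer holds most of the parameters of the full network, so the channel count of the last block has a large effect on the total.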
