EMQX message logging

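All device data enters through EMQX. The system then cleans it and writes it to the database, and error information gets lost along the way. In day-to-day operations this makes it hard to find out quickly why a device's data failed to arrive, so I wanted to record every message EMQX receives.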

The approach relies mainly on EMQX's built-in web_hook plugin.

For an introduction to web_hook, see: https://docs.emqx.com/zh/emqx/v4.2/advanced/webhook.html

Our EMQX version is fairly old and newer releases may work differently, so check the documentation for your own version.

The documentation describes how to enable web_hook; I used the command line:

```shell
emqx_ctl plugins load emqx_web_hook
```

Once loaded, the plugin stays enabled until you unload it manually.

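Also note that web_hook works asynchronously and does not block EMQX's main process, although it uses some extra memory. If delivering a message fails as many times as the retry threshold allows, web_hook stops retrying, writes a log entry and discards the message.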

The web_hook configuration file lives in the plugins subdirectory of the directory that holds the main EMQX config file, usually /etc/emqx/plugins/emqx_web_hook.conf.

This is where web_hook is configured: mainly which events are sent to the web server, and the target URL.

Here is an example:

```
$ cat /etc/emqx/plugins/emqx_web_hook.conf
##====================================================================
## WebHook
##====================================================================

## The web services URL for Hook request
##
## Value: String
web.hook.api.url = http://192.168.25.36:35117/emqxLog

##--------------------------------------------------------------------
## HTTP Request Headers
##
## The header params what you extra need
## Format:
## web.hook.headers.<param> = your-param
## Example:
## 1. web.hook.headers.token = your-token
## 2. web.hook.headers.other = others-param
##
## Value: String
## web.hook.headers.token = your-token

##--------------------------------------------------------------------
## Encode message payload field
##
## Value: base64 | base62
## web.hook.encode_payload = base64

## Mysql ssl configuration.
##
## Value: on | off
## web.hook.ssl = off

##--------------------------------------------------------------------
## CA certificate.
##
## Value: File
## web.hook.ssl.cafile = path to your ca file

## Client ssl certificate.
##
## Value: File
## web.hook.ssl.certfile = path to your clientcert file

##--------------------------------------------------------------------
## Client ssl keyfile.
##
## Value: File
## web.hook.ssl.keyfile = path to your clientkey file

##--------------------------------------------------------------------
## Hook Rules
## These configuration items represent a list of events should be forwarded
##
## Format:
## web.hook.rule.<HookName>.<No> = <Spec>
web.hook.rule.client.connect.1 = {"action": "on_client_connect"}
web.hook.rule.client.connack.1 = {"action": "on_client_connack"}
web.hook.rule.client.connected.1 = {"action": "on_client_connected"}
web.hook.rule.client.disconnected.1 = {"action": "on_client_disconnected"}
web.hook.rule.client.subscribe.1 = {"action": "on_client_subscribe"}
web.hook.rule.client.unsubscribe.1 = {"action": "on_client_unsubscribe"}
web.hook.rule.session.subscribed.1 = {"action": "on_session_subscribed"}
web.hook.rule.session.unsubscribed.1 = {"action": "on_session_unsubscribed"}
web.hook.rule.session.terminated.1 = {"action": "on_session_terminated"}
web.hook.rule.message.publish.1 = {"action": "on_message_publish"}
web.hook.rule.message.delivered.1 = {"action": "on_message_delivered"}
web.hook.rule.message.acked.1 = {"action": "on_message_acked"}
```
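With this many rules it is easy to lose track of what is actually enabled and where events are sent. Below is a minimal Python sketch that reads the target URL and the forwarded actions out of the file. It assumes only the plain `key = value` format shown above and skips every `##` line; parse_webhook_conf is a hypothetical helper, not part of EMQX.

```python
import json
import re

# Matches non-comment "key = value" lines, e.g. "web.hook.rule.client.connect.1 = {...}"
_LINE_RE = re.compile(r'^\s*([\w.]+)\s*=\s*(.+?)\s*$')


def parse_webhook_conf(text: str) -> dict:
    '''Hypothetical helper: return the hook URL and forwarded actions from emqx_web_hook.conf text.'''
    url = None
    actions = []
    for line in text.splitlines():
        if line.lstrip().startswith('#'):
            continue  # skip "##" comments, including commented-out settings
        m = _LINE_RE.match(line)
        if not m:
            continue
        key, value = m.groups()
        if key == 'web.hook.api.url':
            url = value
        elif key.startswith('web.hook.rule.'):
            # Each rule value is a JSON spec such as {"action": "on_client_connect"}
            actions.append(json.loads(value)['action'])
    return {'url': url, 'actions': actions}


sample = '''
## Value: String
web.hook.api.url = http://192.168.25.36:35117/emqxLog
## web.hook.headers.token = your-token
web.hook.rule.client.connect.1 = {"action": "on_client_connect"}
web.hook.rule.message.publish.1 = {"action": "on_message_publish"}
'''
print(parse_webhook_conf(sample))
```

Pointed at the real file, for example parse_webhook_conf(open('/etc/emqx/plugins/emqx_web_hook.conf', encoding='utf-8').read()), it makes it easy to confirm that the port in the URL matches the one the receiving service listens on.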

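Next comes the table schema. I chose ClickHouse to store these messages; the table looks like this (the TTL on create_time drops rows 15 days after they are inserted):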

```sql
CREATE TABLE default.emqx_log
(
    `log_time`    DateTime64(3, 'UTC') COMMENT 'Log time',
    `create_time` DateTime DEFAULT now() COMMENT 'Insert time',
    `clientid`    Nullable(String) COMMENT 'Client ID',
    `action`      Nullable(String) COMMENT 'Event type',
    `topic`       Nullable(String) COMMENT 'Topic',
    `logo`        String COMMENT 'DL or clientid taken from the topic',
    `body`        Nullable(String) COMMENT 'Message body'
)
ENGINE = ReplicatedMergeTree('/clickhouse/tables/emqx_log/03', '192.168.25.46')
PRIMARY KEY (log_time, logo)
ORDER BY (log_time, logo)
TTL create_time + toIntervalDay(15)
SETTINGS index_granularity = 8192;
```
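To see how each web_hook event fills these columns, the mapping can be written as a pure function and checked without Flask or ClickHouse. This is a minimal sketch: event_to_row is a hypothetical helper that mirrors what the receiver code in the next step does, the only assumption about topic layout is the one in the schema comment (the third level holds the DL or clientid), and the topic a/b/DL123/up used in the usage line is made up. create_time is left out because ClickHouse fills it through DEFAULT now().

```python
import datetime
import json

# Actions whose web_hook payload carries a "topic" field (the same ones the receiver checks)
TOPIC_ACTIONS = {'client_subscribe', 'client_unsubscribe', 'session_subscribed',
                 'session_unsubscribed', 'message_publish', 'message_delivered'}


def event_to_row(event: dict, now: datetime.datetime) -> dict:
    '''Hypothetical helper: map one web_hook event to the emqx_log columns.'''
    action = event.get('action') or ''
    topic, logo = '', ''
    if action in TOPIC_ACTIONS:
        topic = event.get('topic') or ''
        parts = topic.split('/')
        logo = parts[2] if len(parts) > 2 else ''  # third topic level: DL or clientid
    return {
        # Millisecond precision to match DateTime64(3)
        'log_time': now.strftime('%Y-%m-%d %H:%M:%S.%f')[:-3],
        # Client events use "clientid", message events use "from_client_id"
        'clientid': event.get('clientid') or event.get('from_client_id') or '',
        'action': action,
        'topic': topic,
        'logo': logo,
        'body': json.dumps(event, ensure_ascii=False),
    }


print(event_to_row({'action': 'message_publish', 'from_client_id': 'dev01',
                    'topic': 'a/b/DL123/up'}, datetime.datetime.now()))
```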

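Once the table exists, we can write the Python service. Note that the code inserts into default.emqx_log_all rather than emqx_log; that is presumably a Distributed table over the replicated emqx_log tables, and its DDL is not shown here.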

```python
'''
EMQX web_hook receiver: buffers events and writes them to ClickHouse every 10 seconds.

Test with curl:
curl http://192.168.52.6:35517/emqxLog -H "Content-Type: application/json" -X POST -d '{"username":null,"proto_ver":5,"node":"emqx@127.0.0.1","keepalive":60,"ipaddress":"127.0.0.1","clientid":"gyj_test","action":"client_connect"}'
'''
import datetime
import json
import logging
import threading
import time

from flask import Flask, request, jsonify
from clickhouse_sqlalchemy import make_session
from sqlalchemy import create_engine
from sqlalchemy.sql import text

app = Flask(__name__)

# Rows waiting to be written to ClickHouse, shared by the request handlers and the flush thread
待写入数据库的数据 = []
data_lock = threading.Lock()

# Actions whose web_hook payload contains a "topic" field
TOPIC_ACTIONS = {'client_subscribe', 'client_unsubscribe', 'session_subscribed',
                 'session_unsubscribed', 'message_publish', 'message_delivered'}

# Create the engine once per process instead of on every flush
connection = 'clickhouse://default:2021@192.168.52.3:18123/default'
engine = create_engine(connection, pool_size=100, pool_recycle=3600, pool_timeout=10)


@app.route('/emqxLog', methods=['POST'])
def emqxLog():
    '''Receive an event pushed by EMQX and append it to the buffer.'''
    data = request.get_json(silent=True) or {}
    log_time = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S.%f")[:-3]
    # Client events carry "clientid"; message events carry "from_client_id"
    clientid = data.get('clientid') or data.get('from_client_id') or ""
    action = data.get('action') or ""
    # Store the event as valid JSON (str(dict) yields None/True and mangles quotes)
    body = json.dumps(data, ensure_ascii=False)
    topic, logo = "", ""
    if action in TOPIC_ACTIONS:
        topic = data.get('topic') or ""
        parts = topic.split('/')
        # The third topic level holds the DL or clientid
        logo = parts[2] if len(parts) > 2 else ""
    with data_lock:
        待写入数据库的数据.append([log_time, clientid, action, topic, logo, body])
    return jsonify({"is_success": True}), 200


def split_list(input_list: list, chunk_size: int) -> list:
    '''Split a list into chunks of chunk_size and return them as a list of lists.'''
    return [input_list[i:i + chunk_size] for i in range(0, len(input_list), chunk_size)]


def ck_quote(value) -> str:
    '''Render a value as a ClickHouse string literal, escaping backslashes and single quotes.'''
    return "'" + str(value).replace('\\', '\\\\').replace("'", "\\'") + "'"


def 数据写入到CK中():
    '''Take everything buffered so far and insert it into ClickHouse in batches of 500.'''
    global 待写入数据库的数据
    # Swap the list out under the lock so request handlers are blocked only briefly
    with data_lock:
        复制过来的数据 = 待写入数据库的数据
        待写入数据库的数据 = []
    if not 复制过来的数据:
        print("0 rows buffered, nothing to write")
        return
    print(f"About to write {len(复制过来的数据)} rows.")
    session = make_session(engine)
    try:
        for datas in split_list(复制过来的数据, 500):
            values = ",".join("(" + ",".join(ck_quote(v) for v in row) + ")" for row in datas)
            ck_sql_str_basic = ('INSERT INTO `default`.emqx_log_all '
                                '(log_time, clientid, `action`, topic, logo, body) VALUES ' + values)
            # text() treats ":name" as a bind parameter, so escape every colon
            ck_sql_str_basic = ck_sql_str_basic.replace(':', '\\:')
            try:
                session.execute(text(ck_sql_str_basic).execution_options(no_parameters=True))
                session.commit()
            except Exception as e:
                # Log the failed batch and continue with the next one
                print(datetime.datetime.now())
                print(ck_sql_str_basic)
                print(e)
    finally:
        session.close()


def run_schedule_task():
    '''Background loop: flush the buffer every 10 seconds.'''
    while True:
        time.sleep(10)
        try:
            数据写入到CK中()
        except Exception as e:
            # Keep the loop alive even if one flush fails
            print("Flush failed:", e)


logging.getLogger('werkzeug').setLevel(logging.ERROR)

# Started at import time so every gunicorn worker (without --preload) gets its own flush thread.
# daemon=True lets the process exit; rows still buffered at that moment are lost.
schedule_thread = threading.Thread(target=run_schedule_task, daemon=True)
schedule_thread.start()

if __name__ == "__main__":
    # Local run without gunicorn, on the internal network
    app.run(host='0.0.0.0', port=35117)
```
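The receiver and the flush thread form a small producer/consumer buffer: handlers append rows, and a background loop periodically swaps the list out and writes it in batches of 500. Stripped of Flask and ClickHouse, the pattern can be sketched as follows. BatchBuffer and its write_batch callback are hypothetical names, and the callback stands in for the ClickHouse INSERT. Using threading.Event.wait as the timer, instead of a plain sleep loop, lets the loop stop promptly and flush one last time on shutdown.

```python
import threading
from typing import Callable, List


class BatchBuffer:
    '''Hypothetical class: rows appended by request handlers, drained in fixed-size batches.'''

    def __init__(self, write_batch: Callable[[List[list]], None], batch_size: int = 500):
        self._rows: List[list] = []
        self._lock = threading.Lock()
        self._write_batch = write_batch
        self._batch_size = batch_size

    def append(self, row: list) -> None:
        with self._lock:
            self._rows.append(row)

    def flush(self) -> int:
        '''Swap the buffer out under the lock, write it outside the lock; return rows taken.'''
        with self._lock:
            rows, self._rows = self._rows, []
        for i in range(0, len(rows), self._batch_size):
            batch = rows[i:i + self._batch_size]
            try:
                self._write_batch(batch)
            except Exception as e:
                # Like the service code: report the failure and move on (the batch is dropped)
                print(f'failed to write {len(batch)} rows: {e}')
        return len(rows)

    def run_forever(self, interval: float, stop: threading.Event) -> None:
        '''Flush every `interval` seconds until `stop` is set, then flush once more.'''
        while not stop.wait(interval):
            self.flush()
        self.flush()
```

In a service, run_forever would run in a background thread and stop.set() would be called from a shutdown hook. As in the original code, a batch whose write fails is not re-queued, so it only shows up in the error output.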

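I started with a single process, but it struggled: more than 10,000 messages can arrive within 3 seconds, and I was worried a single process would fall behind at peak times. So I switched to a multi-process deployment with gunicorn (the port passed to -b has to match the one in web.hook.api.url):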

```shell
gunicorn -w 10 -b 0.0.0.0:35517 emqx日志记录:app
```
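The same deployment can also be expressed as a gunicorn configuration file, which gunicorn evaluates as Python. A minimal sketch, assuming the file name gunicorn.conf.py and using gunicorn's standard bind, workers and preload_app settings; it would be started with gunicorn -c gunicorn.conf.py emqx日志记录:app.

```python
# gunicorn.conf.py (hypothetical file name; gunicorn evaluates this file as Python)
bind = "0.0.0.0:35517"  # must match the port in web.hook.api.url
workers = 10            # each worker imports the module and starts its own flush thread
preload_app = False     # gunicorn's default; with preloading the flush thread would only run in the master
```

preload_app is left off on purpose: the flush thread is started when the module is imported, and with preloading that import happens in the master process, whose threads are not carried over into the forked workers.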

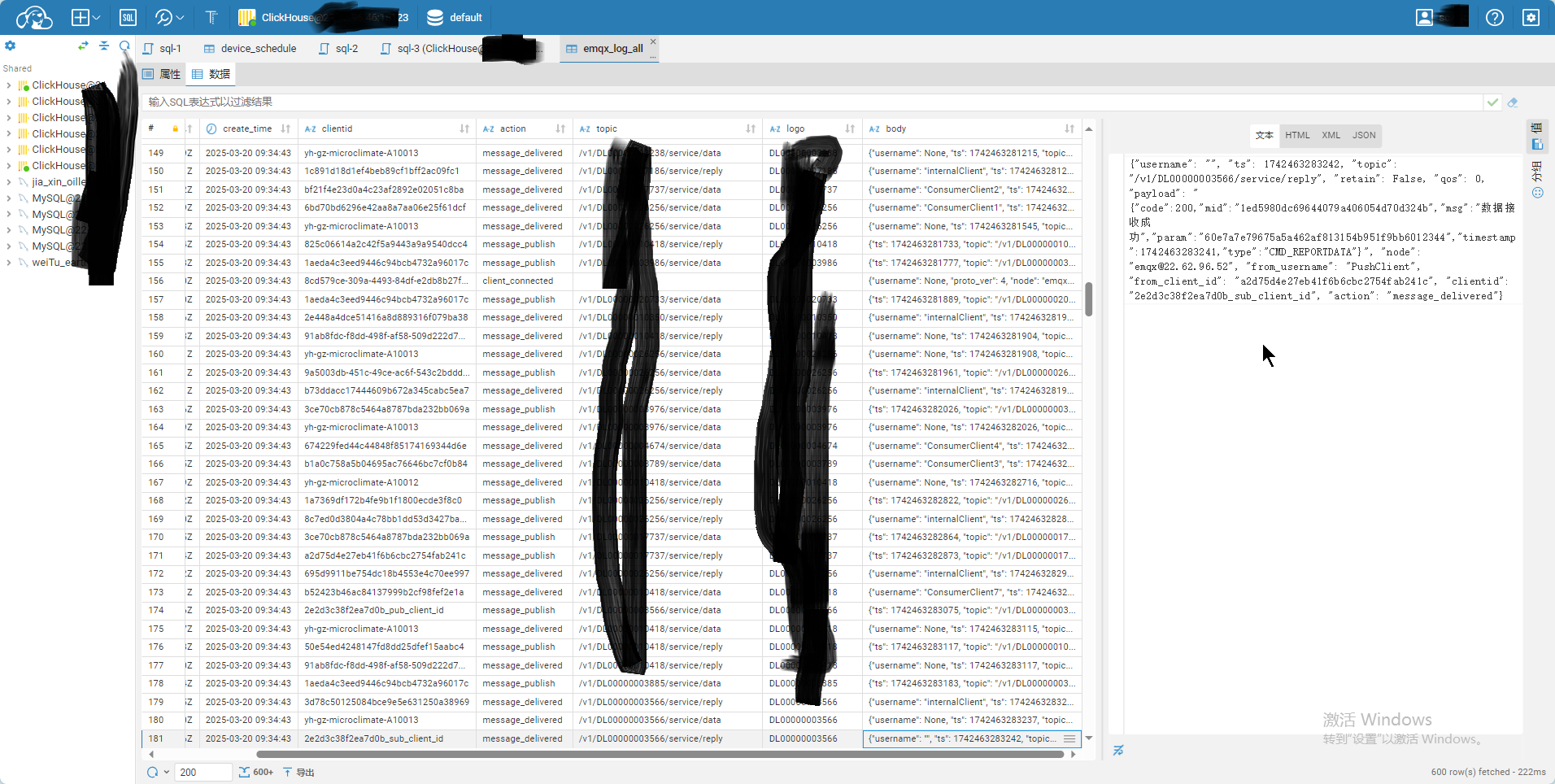

That works. The result looks roughly like this:

浙公网安备 33010602011771号
