A First Look at Flink

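I. Flink: an open-source stream processing framework from Apache. Its core is a distributed streaming dataflow engine written in Java and Scala. Flink executes arbitrary dataflow programs in a data-parallel and pipelined manner, and its pipelined runtime can execute both batch and stream processing programs. The runtime also natively supports iterative algorithms.

II. Flink is a computation framework and distributed processing engine for stateful computation over stream (real-time, unbounded) and batch (discrete, bounded) data. It runs on a cluster and computes in memory at any scale.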

- High throughput, low latency, high performance

- Window operations based on event time

- Stateful computation

- In-memory computation

- Iterative computation
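To make event-time windows and state more concrete: a tumbling window puts each event into a bucket according to the event's own timestamp, not its arrival order, and the per-window aggregate is the state that is kept. The following is a minimal plain-Java sketch without Flink; the class and method names are hypothetical, and it assumes non-negative timestamps in milliseconds.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Framework-free sketch of event-time tumbling windows (hypothetical names, not Flink API). */
class TumblingWindowSketch {

    /** Start of the window containing the given event timestamp (non-negative millis assumed). */
    static long windowStart(long timestampMillis, long sizeMillis) {
        return timestampMillis - (timestampMillis % sizeMillis);
    }

    /** Counts events per window; the per-window count plays the role of operator state. */
    static Map<Long, Integer> countPerWindow(List<Long> eventTimestamps, long sizeMillis) {
        Map<Long, Integer> counts = new TreeMap<>();
        for (long ts : eventTimestamps) {
            counts.merge(windowStart(ts, sizeMillis), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Events arrive out of order but are grouped by their own timestamps.
        List<Long> events = List.of(1_000L, 4_999L, 12_000L, 3_000L, 5_000L);
        System.out.println(countPerWindow(events, 5_000L)); // {0=3, 5000=1, 10000=1}
    }
}
```

In a real job Flink also has to decide when a window is complete, which it does with watermarks; the sketch leaves that part out.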

III. Use cases

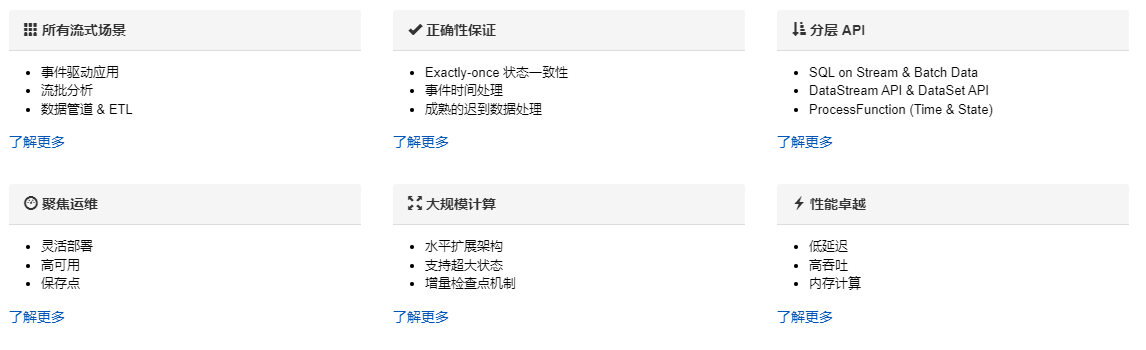

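Apache Flink is powerful and supports developing and running many different kinds of applications. Its main features include unified batch and stream processing, sophisticated state management, event-time support, and exactly-once state consistency guarantees. Flink runs on resource managers such as YARN and Kubernetes (Mesos was supported as well until it was removed in Flink 1.14), and it can also be deployed standalone on bare-metal clusters. With the high-availability option enabled, it has no single point of failure. Flink has been shown to scale to thousands of cores and terabytes of state while keeping high throughput and low latency, and many demanding stream processing applications around the world run on it.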

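IV. Components:

Flink consists of two main parts: the JobManager and the TaskManager.

JobManager: coordinates job execution. It schedules tasks, coordinates checkpoints, and handles recovery after failures.

TaskManager: executes the actual tasks in its task slots.

Besides these two, a ResourceManager is also involved. It manages the task slots and coordinates how tasks are matched with resources during scheduling.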

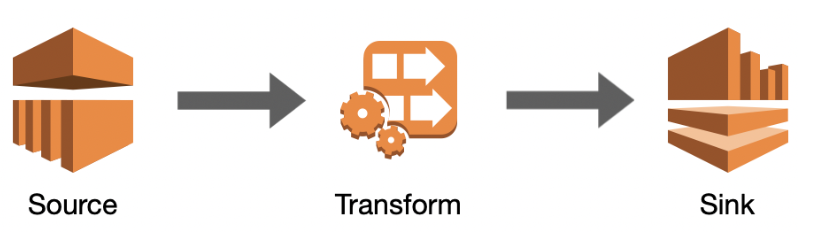
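Since TaskManagers run tasks in task slots, a quick capacity check is often useful before submitting a job. The sketch below is plain Java with hypothetical names. It assumes Flink's default slot sharing, under which a job needs as many slots as its highest operator parallelism, and it assumes all slots are free.

```java
/** Slot capacity arithmetic (hypothetical helper, not a Flink API). */
class SlotMathSketch {

    /** Total slots offered by the cluster. */
    static int totalSlots(int taskManagers, int slotsPerTaskManager) {
        return taskManagers * slotsPerTaskManager;
    }

    /** True if a job whose highest operator parallelism is given fits into the free slots. */
    static boolean fits(int maxOperatorParallelism, int taskManagers, int slotsPerTaskManager) {
        return maxOperatorParallelism <= totalSlots(taskManagers, slotsPerTaskManager);
    }

    public static void main(String[] args) {
        System.out.println(totalSlots(3, 8)); // 24
        System.out.println(fits(2, 1, 5));    // true
        System.out.println(fits(6, 1, 5));    // false
    }
}
```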

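V. Flink pipeline flow:

1. Source: where the data comes from.

2. Transform: data transformation, that is, the analysis and processing step.

3. Sink: where the results are written.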
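The three stages can be sketched without any framework. The plain-Java example below uses hypothetical names and only illustrates the shape of a pipeline; in Flink the same roles are played by a source operator, transformation operators such as `map`, and a sink operator, all running in parallel across task slots.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

/** Framework-free sketch of source -> transform -> sink (hypothetical names, not Flink API). */
class PipelineSketch {

    /** Pulls every record from the source, transforms it, and hands the result to the sink. */
    static <I, O> void run(Iterable<I> source, Function<I, O> transform, Consumer<O> sink) {
        for (I record : source) {
            sink.accept(transform.apply(record));
        }
    }

    public static void main(String[] args) {
        List<String> collected = new ArrayList<>();
        // Source: three ids; transform: format as text; sink: collect into a list.
        run(List.of(1, 2, 3), id -> "id-" + id, collected::add);
        System.out.println(collected); // [id-1, id-2, id-3]
    }
}
```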

VI. Local development and testing with database ingestion:

1) Maven dependencies (add whichever ones your job needs):

    <dependencies>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>1.16.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java</artifactId>
            <version>1.16.2</version>
        </dependency>
        <!-- client -->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-clients</artifactId>
            <version>1.16.2</version>
        </dependency>
        <!-- jdbc -->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-jdbc</artifactId>
            <version>1.16.2</version>
        </dependency>
        <!-- mysql -->
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>8.0.29</version>
        </dependency>
    </dependencies>

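2) Demo

    import java.sql.PreparedStatement;
    import java.sql.SQLException;

    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.api.common.typeinfo.BasicTypeInfo;
    import org.apache.flink.api.java.typeutils.RowTypeInfo;
    import org.apache.flink.connector.jdbc.JdbcConnectionOptions;
    import org.apache.flink.connector.jdbc.JdbcInputFormat;
    import org.apache.flink.connector.jdbc.JdbcSink;
    import org.apache.flink.connector.jdbc.JdbcStatementBuilder;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.types.Row;

    public class Demo {

        private static final String DRIVER = "com.mysql.cj.jdbc.Driver";
        private static final String URL =
                "jdbc:mysql://127.0.0.1:3306/test?characterEncoding=utf8&useSSL=false&serverTimezone=GMT%2B8&allowMultiQueries=true";

        public static void main(String[] args) throws Exception {
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            // source: read the id column of the user table over JDBC.
            // The declared row type assumes id is a string column (e.g. VARCHAR).
            DataStreamSource<Row> input = env.createInput(JdbcInputFormat
                    .buildJdbcInputFormat()
                    .setDrivername(DRIVER)
                    .setDBUrl(URL)
                    .setUsername("root")
                    .setPassword("root")
                    .setQuery("select id from user")
                    .setRowTypeInfo(new RowTypeInfo(BasicTypeInfo.STRING_TYPE_INFO))
                    .finish());

            // transform: convert each row into its id as a string
            SingleOutputStreamOperator<String> operator = input.map(new MapFunction<Row, String>() {
                @Override
                public String map(Row row) throws Exception {
                    return String.valueOf(row.getField(0));
                }
            });

            // sink: insert every id into the test table
            operator.addSink(JdbcSink.sink(
                    "INSERT INTO test(id) values(?)",
                    new JdbcStatementBuilder<String>() {
                        @Override
                        public void accept(PreparedStatement ps, String id) throws SQLException {
                            ps.setString(1, id);
                        }
                    },
                    new JdbcConnectionOptions.JdbcConnectionOptionsBuilder()
                            .withDriverName(DRIVER)
                            .withUrl(URL)
                            .withUsername("root")
                            .withPassword("root")
                            .build()
            ));

            env.execute("demo");
        }
    }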

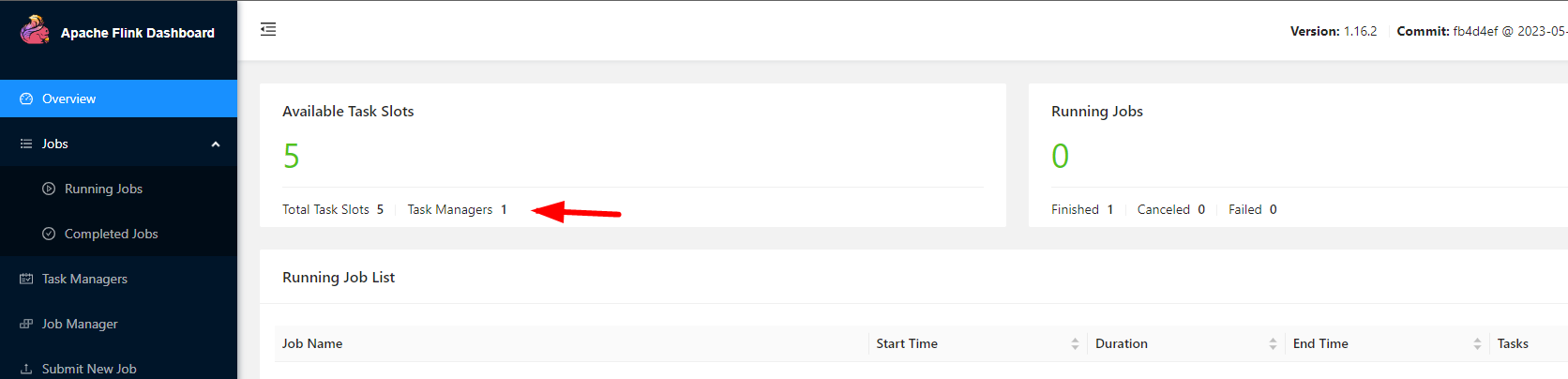

VII. Cluster development and testing:

1) Cluster setup

    version: '3'
    services:
      xbd-flink-job:
        image: flink:1.16.2
        container_name: xbd-flink-job
        restart: always
        privileged: true
        ports:
          - 8081:8081
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            jobmanager.rpc.address: xbd-flink-job
            parallelism.default: 2
        command: jobmanager
      xbd-flink-task:
        image: flink:1.16.2
        container_name: xbd-flink-task
        restart: always
        privileged: true
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            jobmanager.rpc.address: xbd-flink-job
            taskmanager.numberOfTaskSlots: 5
            parallelism.default: 2
        command: taskmanager
        depends_on:
          - xbd-flink-job

HA mode:

    version: "3.8"

    x-zk-common: &zk-common
      image: zookeeper:3.8
      restart: always
      privileged: true
      networks:
        - dsmp

    x-flink-common: &flink-common
      image: dockerhub.cetccst.dev/sjaq/dsms/flink:1.20.1
      restart: always
      user: root
      privileged: true
      networks:
        - dsmp

    services:
      xbd-zk-1:
        <<: *zk-common
        container_name: xbd-zk-1
        hostname: xbd-zk-1
        ports:
          - 2181:2181
        environment:
          - ZOO_MY_ID=1
          - ZOO_CLIENT_PORT=2181
          - ZOO_ADMINSERVER_ENABLED=false
          - ZOO_SERVERS=server.1=xbd-zk-1:2888:3888;2181 server.2=xbd-zk-2:2889:3889;2182 server.3=xbd-zk-3:2890:3890;2183
        volumes:
          - /opt/apps/data/zookeeper/zk-1:/data
      xbd-zk-2:
        <<: *zk-common
        container_name: xbd-zk-2
        hostname: xbd-zk-2
        environment:
          - ZOO_MY_ID=2
          - ZOO_CLIENT_PORT=2182
          - ZOO_ADMINSERVER_ENABLED=false
          - ZOO_SERVERS=server.1=xbd-zk-1:2888:3888;2181 server.2=xbd-zk-2:2889:3889;2182 server.3=xbd-zk-3:2890:3890;2183
        volumes:
          - /opt/apps/data/zookeeper/zk-2:/data
      xbd-zk-3:
        <<: *zk-common
        container_name: xbd-zk-3
        hostname: xbd-zk-3
        environment:
          - ZOO_MY_ID=3
          - ZOO_CLIENT_PORT=2183
          - ZOO_ADMINSERVER_ENABLED=false
          - ZOO_SERVERS=server.1=xbd-zk-1:2888:3888;2181 server.2=xbd-zk-2:2889:3889;2182 server.3=xbd-zk-3:2890:3890;2183
        volumes:
          - /opt/apps/data/zookeeper/zk-3:/data
      flink-job-1:
        <<: *flink-common
        container_name: flink-job-1
        hostname: flink-job-1
        ports:
          - 8081:8081
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            rest.port: 8081
            jobmanager.rpc.address: flink-job-1
            jobmanager.memory.process.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: file:///opt/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            zookeeper.sasl.disable: true
        volumes:
          - /opt/flink/flink-web-upload:/opt/flink/flink-web-upload
          - /opt/apps/data/flink/cluster:/opt/flink/cluster
        command:
          - /bin/bash
          - -c
          - |
            sed -i '/web.upload.dir: \/opt\/flink/d' /opt/flink/conf/config.yaml
            echo 'web.upload.dir: /opt/flink' >> /opt/flink/conf/config.yaml
            /docker-entrypoint.sh jobmanager
      flink-job-2:
        <<: *flink-common
        container_name: flink-job-2
        hostname: flink-job-2
        ports:
          - 8082:8082
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            rest.port: 8082
            jobmanager.rpc.address: flink-job-2
            jobmanager.memory.process.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: file:///opt/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            zookeeper.sasl.disable: true
        volumes:
          - /opt/flink/flink-web-upload:/opt/flink/flink-web-upload
          - /opt/apps/data/flink/cluster:/opt/flink/cluster
        command:
          - /bin/bash
          - -c
          - |
            sed -i '/web.upload.dir: \/opt\/flink/d' /opt/flink/conf/config.yaml
            echo 'web.upload.dir: /opt/flink' >> /opt/flink/conf/config.yaml
            /docker-entrypoint.sh jobmanager
      flink-task-1:
        <<: *flink-common
        container_name: flink-task-1
        hostname: flink-task-1
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            jobmanager.rpc.address: flink-job-1
            parallelism.default: 1
            taskmanager.numberOfTaskSlots: 8
            taskmanager.memory.process.size: 16G
            taskmanager.memory.managed.fraction: 0.1
            taskmanager.memory.jvm-metaspace.size: 1G
            taskmanager.memory.task.off-heap.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: file:///opt/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            zookeeper.sasl.disable: true
        command: taskmanager
      flink-task-2:
        <<: *flink-common
        container_name: flink-task-2
        hostname: flink-task-2
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            jobmanager.rpc.address: flink-job-1
            parallelism.default: 1
            taskmanager.numberOfTaskSlots: 8
            taskmanager.memory.process.size: 16G
            taskmanager.memory.managed.fraction: 0.1
            taskmanager.memory.jvm-metaspace.size: 1G
            taskmanager.memory.task.off-heap.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: file:///opt/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            zookeeper.sasl.disable: true
        command: taskmanager

    networks:
      dsmp:
        external: true
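The HA setup above uses three ZooKeeper nodes. ZooKeeper keeps serving only while a majority of the ensemble is up, which is why odd ensemble sizes are the usual choice. A minimal arithmetic sketch in plain Java (the class and method names are hypothetical, not a ZooKeeper API):

```java
/** Majority-quorum arithmetic for a ZooKeeper ensemble (hypothetical helper). */
class QuorumSketch {

    /** Smallest number of servers that forms a majority. */
    static int majority(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    /** Number of servers that may fail while a majority still remains. */
    static int toleratedFailures(int ensembleSize) {
        return ensembleSize - majority(ensembleSize);
    }

    public static void main(String[] args) {
        for (int n : new int[] {3, 4, 5}) {
            System.out.println(n + " servers tolerate " + toleratedFailures(n) + " failure(s)");
        }
    }
}
```

Three servers tolerate one failure, and a fourth server adds no extra tolerance; it takes five to survive two failures.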

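Note: this setup relies on a shared file directory (high-availability.storageDir is a local path that every JobManager must be able to reach), so it should be replaced with a shared storage service such as HDFS. If the HDFS cluster itself runs in HA mode, the approach is as follows:

Preparation: core-site.xml and hdfs-site.xml (the same two files used by the HDFS cluster in HA mode)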

core-site.xml

    <configuration>
        <property><name>ha.zookeeper.quorum</name><value>xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183</value></property>
        <property><name>fs.defaultFS</name><value>hdfs://hdfs-cluster</value></property>
    </configuration>

hdfs-site.xml

    <configuration>
        <property><name>dfs.namenode.http-address.hdfs-cluster.xbd-nn-2</name><value>xbd-nn-2:9871</value></property>
        <property><name>dfs.nameservices</name><value>hdfs-cluster</value></property>
        <property><name>dfs.namenode.http-address.hdfs-cluster.xbd-nn-1</name><value>xbd-nn-1:9870</value></property>
        <property><name>dfs.namenode.http-address</name><value>0.0.0.0:9870</value></property>
        <property><name>dfs.ha.fencing.methods</name><value>shell(/bin/true)</value></property>
        <property><name>dfs.namenode.rpc-address.hdfs-cluster.xbd-nn-2</name><value>xbd-nn-2:8021</value></property>
        <property><name>dfs.ha.automatic-failover.enabled</name><value>true</value></property>
        <property><name>dfs.namenode.rpc-address.hdfs-cluster.xbd-nn-1</name><value>xbd-nn-1:8020</value></property>
        <property><name>dfs.permissions.enable</name><value>false</value></property>
        <property><name>dfs.namenode.name.dir</name><value>/data</value></property>
        <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://xbd-jn-1:8485;xbd-jn-2:8486;xbd-jn-3:8487/hdfs-cluster</value></property>
        <property><name>dfs.namenode.rpc-address</name><value>0.0.0.0:8020</value></property>
        <property><name>dfs.client.failover.proxy.provider.hdfs-cluster</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
        <property><name>dfs.ha.namenodes.hdfs-cluster</name><value>xbd-nn-1,xbd-nn-2</value></property>
    </configuration>

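Some HDFS dependency JARs are also needed: commons-cli.jar and flink-shaded-hadoop-3-uber.jar

These files are copied into the target directories, and some permissions need to be adjusted (the entrypoint script is patched to run as root instead of the flink user). The Dockerfile is as follows: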

    FROM flink:1.20.1
    MAINTAINER xbd
    COPY ./flink-shaded-hadoop-3-uber.jar /opt/flink/lib/
    COPY ./commons-cli.jar /opt/flink/lib/
    COPY ./core-site.xml /opt/flink/hadoop/
    COPY ./hdfs-site.xml /opt/flink/hadoop/
    RUN sed -i s/'echo su-exec flink'/'echo su-exec root'/g /docker-entrypoint.sh
    RUN sed -i s/'echo gosu flink'/'echo gosu root'/g /docker-entrypoint.sh

The cluster after these changes:

    version: "3.8"

    x-zk-common: &zk-common
      image: zookeeper:3.8
      restart: always
      privileged: true
      network_mode: host

    x-flink-common: &flink-common
      build:
        context: ./
        dockerfile: ./Dockerfile-flink
      image: csp-flink:1.20
      restart: always
      user: root
      privileged: true
      network_mode: host

    services:
      xbd-zk-1:
        <<: *zk-common
        container_name: xbd-zk-1
        hostname: xbd-zk-1
        environment:
          - ZOO_MY_ID=1
          - ZOO_CLIENT_PORT=2181
          - ZOO_ADMINSERVER_ENABLED=false
          - ZOO_SERVERS=server.1=xbd-zk-1:2888:3888;2181 server.2=xbd-zk-2:2889:3889;2182 server.3=xbd-zk-3:2890:3890;2183
        volumes:
          - /opt/apps/data/zookeeper/zk-1:/data
      xbd-zk-2:
        <<: *zk-common
        container_name: xbd-zk-2
        hostname: xbd-zk-2
        environment:
          - ZOO_MY_ID=2
          - ZOO_CLIENT_PORT=2182
          - ZOO_ADMINSERVER_ENABLED=false
          - ZOO_SERVERS=server.1=xbd-zk-1:2888:3888;2181 server.2=xbd-zk-2:2889:3889;2182 server.3=xbd-zk-3:2890:3890;2183
        volumes:
          - /opt/apps/data/zookeeper/zk-2:/data
      xbd-zk-3:
        <<: *zk-common
        container_name: xbd-zk-3
        hostname: xbd-zk-3
        environment:
          - ZOO_MY_ID=3
          - ZOO_CLIENT_PORT=2183
          - ZOO_ADMINSERVER_ENABLED=false
          - ZOO_SERVERS=server.1=xbd-zk-1:2888:3888;2181 server.2=xbd-zk-2:2889:3889;2182 server.3=xbd-zk-3:2890:3890;2183
        volumes:
          - /opt/apps/data/zookeeper/zk-3:/data
      flink-job-1:
        <<: *flink-common
        container_name: flink-job-1
        hostname: flink-job-1
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            rest.port: 8081
            blob.server.port: 6124
            jobmanager.rpc.address: flink-job-1
            jobmanager.memory.process.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: hdfs://hdfs-cluster/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            env.log.level: ERROR
            zookeeper.sasl.disable: true
            env.hadoop.conf.dir: /opt/flink/hadoop
        volumes:
          - /opt/flink/flink-web-upload:/opt/flink/flink-web-upload
        command:
          - /bin/bash
          - -c
          - |
            sed -i '/web.upload.dir: \/opt\/flink/d' /opt/flink/conf/config.yaml
            echo 'web.upload.dir: /opt/flink' >> /opt/flink/conf/config.yaml
            /docker-entrypoint.sh jobmanager
      flink-job-2:
        <<: *flink-common
        container_name: flink-job-2
        hostname: flink-job-2
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            rest.port: 8082
            blob.server.port: 6125
            jobmanager.rpc.address: flink-job-2
            jobmanager.memory.process.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: hdfs://hdfs-cluster/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            zookeeper.sasl.disable: true
            env.hadoop.conf.dir: /opt/flink/hadoop
        volumes:
          - /opt/flink/flink-web-upload:/opt/flink/flink-web-upload
        command:
          - /bin/bash
          - -c
          - |
            sed -i '/web.upload.dir: \/opt\/flink/d' /opt/flink/conf/config.yaml
            echo 'web.upload.dir: /opt/flink' >> /opt/flink/conf/config.yaml
            /docker-entrypoint.sh jobmanager
      flink-task-1:
        <<: *flink-common
        container_name: flink-task-1
        hostname: flink-task-1
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            jobmanager.rpc.address: flink-job-1
            parallelism.default: 1
            taskmanager.numberOfTaskSlots: 8
            taskmanager.memory.process.size: 16G
            taskmanager.memory.managed.fraction: 0.1
            taskmanager.memory.jvm-metaspace.size: 1G
            taskmanager.memory.task.off-heap.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: hdfs://hdfs-cluster/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            zookeeper.sasl.disable: true
            env.hadoop.conf.dir: /opt/flink/hadoop
        command: taskmanager
      flink-task-2:
        <<: *flink-common
        container_name: flink-task-2
        hostname: flink-task-2
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            jobmanager.rpc.address: flink-job-1
            parallelism.default: 1
            taskmanager.numberOfTaskSlots: 8
            taskmanager.memory.process.size: 16G
            taskmanager.memory.managed.fraction: 0.1
            taskmanager.memory.jvm-metaspace.size: 1G
            taskmanager.memory.task.off-heap.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: hdfs://hdfs-cluster/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            zookeeper.sasl.disable: true
            env.hadoop.conf.dir: /opt/flink/hadoop
        command: taskmanager
      flink-task-3:
        <<: *flink-common
        container_name: flink-task-3
        hostname: flink-task-3
        environment:
          - TZ=Asia/Shanghai
          - |
            FLINK_PROPERTIES=
            jobmanager.rpc.address: flink-job-1
            parallelism.default: 1
            taskmanager.numberOfTaskSlots: 8
            taskmanager.memory.process.size: 16G
            taskmanager.memory.managed.fraction: 0.1
            taskmanager.memory.jvm-metaspace.size: 1G
            taskmanager.memory.task.off-heap.size: 1G
            high-availability.type: ZOOKEEPER
            high-availability.cluster-id: /flink-cluster
            high-availability.storageDir: hdfs://hdfs-cluster/flink/cluster
            high-availability.zookeeper.path.root: /flink-cluster
            high-availability.zookeeper.quorum: xbd-zk-1:2181,xbd-zk-2:2182,xbd-zk-3:2183
            zookeeper.sasl.disable: true
            env.hadoop.conf.dir: /opt/flink/hadoop
        command: taskmanager

    networks:
      dsmp:
        external: true

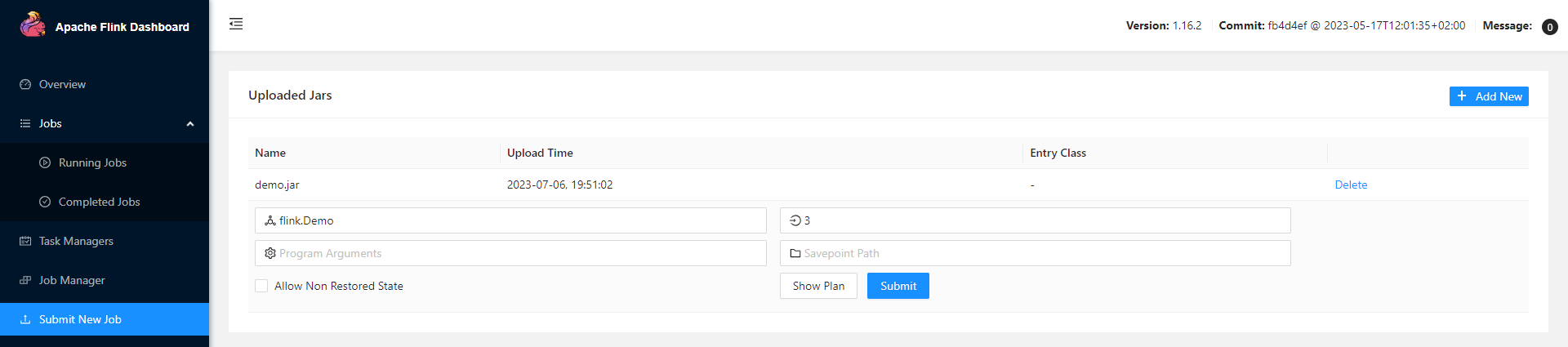

2) Packaging and testing:

a. Maven (mainly to build a JAR that bundles its dependencies)

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-shade-plugin</artifactId>
                <version>3.2.4</version>
                <configuration>
                    <createDependencyReducedPom>false</createDependencyReducedPom>
                </configuration>
                <executions>
                    <execution>
                        <phase>package</phase>
                        <goals>
                            <goal>shade</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>

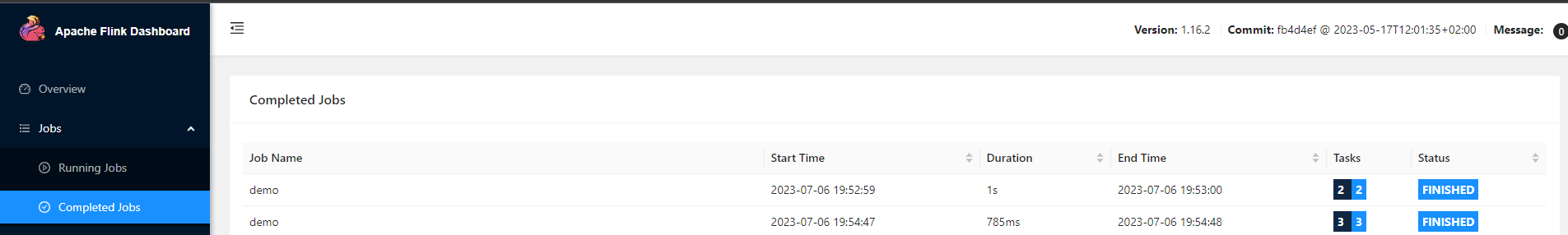

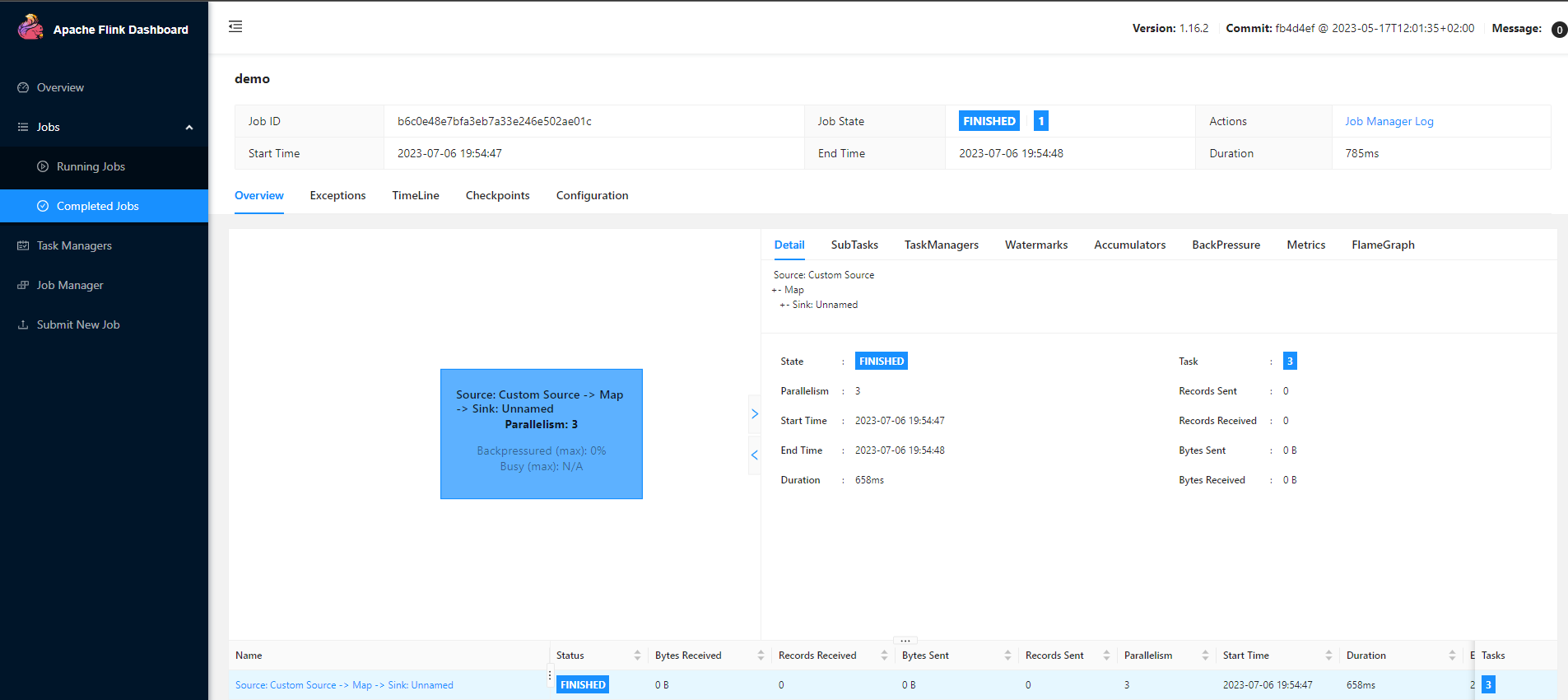

b. Run and submit:

c. View the results
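Besides the web UI, the state of submitted jobs can also be read from the JobManager's REST API, which listens on `rest.port`. The sketch below queries the `/jobs/overview` endpoint with the JDK's built-in HTTP client (Java 11+). The host `localhost` and port `8081` are assumptions matching the port mapping used earlier, and the class name is hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Reads the job list from the JobManager REST API (host and port are assumptions). */
class JobsOverviewSketch {

    /** Builds the URI of the /jobs/overview endpoint. */
    static URI jobsOverviewUri(String host, int port) {
        return URI.create("http://" + host + ":" + port + "/jobs/overview");
    }

    /** Returns the raw JSON body, which lists each job with its name and state. */
    static String fetchJobsOverview(String host, int port) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(3))
                .build();
        HttpRequest request = HttpRequest.newBuilder(jobsOverviewUri(host, port))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws InterruptedException {
        try {
            System.out.println(fetchJobsOverview("localhost", 8081));
        } catch (IOException e) {
            // No cluster running locally, or a different host/port is in use.
            System.out.println("JobManager not reachable: " + e.getMessage());
        }
    }
}
```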
