Assignment 3

Task ①

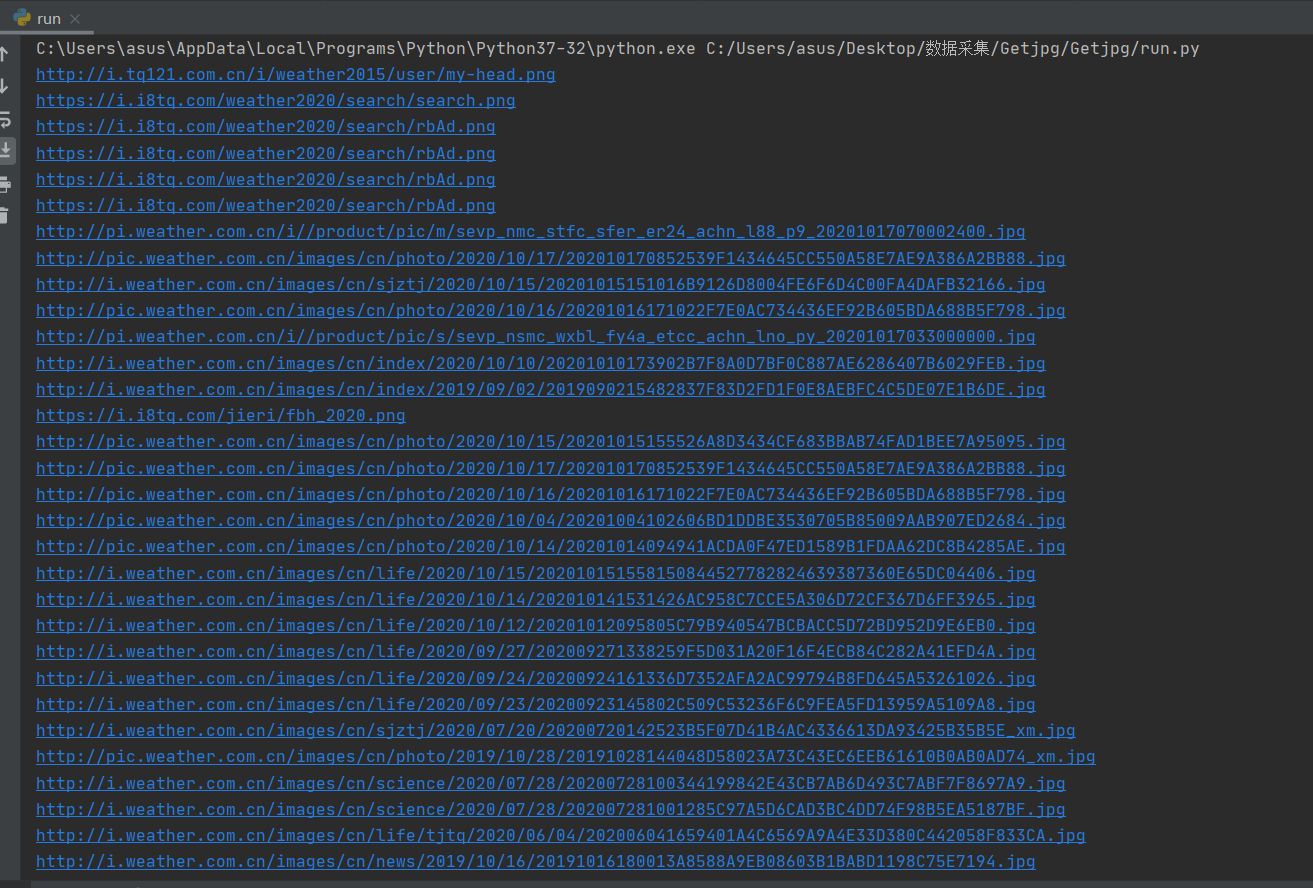

1) Experiment: crawling all images from the China Weather website

<1> Single-threaded:

    import os
    import urllib.parse
    import urllib.request

    from bs4 import BeautifulSoup
    from bs4 import UnicodeDammit

    def imageSpider(start_url):
        try:
            urls = []
            req = urllib.request.Request(start_url, headers=headers)
            data = urllib.request.urlopen(req)
            data = data.read()
            # Detect the page encoding (utf-8 or gbk) and decode to str
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, "lxml")
            images = soup.select("img")
            for image in images:
                try:
                    src = image["src"]
                    # Resolve relative src values against the page URL
                    url = urllib.parse.urljoin(start_url, src)
                    if url not in urls:  # skip images already seen
                        print(url)
                        urls.append(url)
                        download(url)
                except Exception as err:
                    print(err)
        except Exception as err:
            print(err)

    def download(url):
        global count
        try:
            # Keep a three-letter extension such as ".jpg" if the URL ends with one
            if url[-4] == ".":
                ext = url[-4:]
            else:
                ext = ""
            count = count + 1
            req = urllib.request.Request(url, headers=headers)
            data = urllib.request.urlopen(req, timeout=100)
            data = data.read()
            # Files are named 1, 2, 3, ... in download order
            with open(save_dir + str(count) + ext, "wb") as fobj:
                fobj.write(data)
        except Exception as err:
            print(err)

    start_url = "http://www.weather.com.cn"
    headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre)Gecko/2008072421 Minefield/3.0.2pre"}
    save_dir = "C:/Users/asus/Desktop/数据采集/数据采集代码/images1/"
    count = 0
    # Create the folder that download() writes to (no error if it already exists)
    os.makedirs(save_dir, exist_ok=True)
    print("Downloaded URLs:")
    imageSpider(start_url)
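
The extension check in download() looks only at the fourth character from the end of the URL, so it misses names such as .jpeg and is confused by query strings. A possible alternative, sketched with the standard library only, is shown below. The helper name guess_extension and the sample URLs are made up for illustration and are not part of the original code.

```python
import os
from urllib.parse import urlparse


def guess_extension(url):
    """Return the extension of the URL path (including the dot), or ""."""
    path = urlparse(url).path        # drops the query string and fragment
    _, ext = os.path.splitext(path)  # "/a/b/pic.jpeg" -> ".jpeg"
    return ext


# Hypothetical URLs, used only to show the behaviour
print(guess_extension("http://www.weather.com.cn/images/logo.png"))
print(guess_extension("http://www.weather.com.cn/pic.jpeg?v=2"))
print(guess_extension("http://www.weather.com.cn/images/"))
```

In download(), the if/else that sets ext could then be replaced by a single call to this helper.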

<2> Multi-threaded:

    import os
    import threading
    import urllib.parse
    import urllib.request

    from bs4 import BeautifulSoup
    from bs4 import UnicodeDammit

    def imageSpider(start_url):
        global threads
        global count
        try:
            urls = []
            req = urllib.request.Request(start_url, headers=headers)
            data = urllib.request.urlopen(req)
            data = data.read()
            # Detect the page encoding (utf-8 or gbk) and decode to str
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, "lxml")
            images = soup.select("img")
            for image in images:
                try:
                    src = image["src"]
                    # Resolve relative src values against the page URL
                    url = urllib.parse.urljoin(start_url, src)
                    if url not in urls:
                        print(url)
                        urls.append(url)  # remember the URL so duplicates are skipped
                        count = count + 1
                        # One thread per image; count is passed in as the file name
                        T = threading.Thread(target=download, args=(url, count))
                        T.daemon = False
                        T.start()
                        threads.append(T)
                except Exception as err:
                    print(err)
        except Exception as err:
            print(err)

    def download(url, count):
        try:
            # Keep a three-letter extension such as ".jpg" if the URL ends with one
            if url[-4] == ".":
                ext = url[-4:]
            else:
                ext = ""
            req = urllib.request.Request(url, headers=headers)
            data = urllib.request.urlopen(req, timeout=100)
            data = data.read()
            with open(save_dir + str(count) + ext, "wb") as fobj:
                fobj.write(data)
        except Exception as err:
            print(err)

    start_url = "http://www.weather.com.cn"
    headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre)Gecko/2008072421 Minefield/3.0.2pre"}
    save_dir = "C:/Users/asus/Desktop/数据采集/数据采集代码/images2/"
    count = 0
    # Create the folder that download() writes to (no error if it already exists)
    os.makedirs(save_dir, exist_ok=True)
    threads = []
    print("Downloaded URLs:")
    imageSpider(start_url)
    # Wait for every download thread to finish
    for t in threads:
        t.join()

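2) Reflections

This task was not difficult; it closely follows the example we typed in during the lab session. I had studied multithreading before but had forgotten almost all of it. This experiment brought much of it back and made the difference between single-threaded and multi-threaded execution clear to me.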
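
To make the difference concrete, here is a small sketch that runs the same waiting-bound jobs one after another and then in a thread pool. It uses concurrent.futures.ThreadPoolExecutor rather than the threading.Thread calls in the code above, and fake_download is a stand-in that only sleeps, so the timings illustrate the idea and say nothing about the real site.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(n):
    """Stand-in for one image download: it only waits, as a network call would."""
    time.sleep(0.2)
    return n


def run_sequential(jobs):
    # One job at a time: total time is roughly len(jobs) * 0.2 s
    return [fake_download(n) for n in jobs]


def run_threaded(jobs, workers=5):
    # Jobs wait in parallel: total time is roughly 0.2 s for up to `workers` jobs
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fake_download, jobs))


def timed(func, jobs):
    """Run func(jobs) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = func(jobs)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    jobs = list(range(5))
    _, t_seq = timed(run_sequential, jobs)
    _, t_thr = timed(run_threaded, jobs)
    print(f"sequential: {t_seq:.2f}s, threaded: {t_thr:.2f}s")
```

Threads help here because each job spends its time waiting; for CPU-bound work in Python the gain would be much smaller.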

Task ②

1) Reproducing the Task ① experiment with the Scrapy framework

jpg (the spider, i.e. the main program):

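    import os

    import scrapy

    from ..items import GetjpgItem

    class jpgSpider(scrapy.Spider):
        name = 'jpg'
        start_urls = ['http://www.weather.com.cn']

        def parse(self, response):
            srcs = response.xpath('//img/@src')
            # Create the folder the pipeline writes to (no error if it already exists)
            os.makedirs("C:/Users/asus/Desktop/数据采集/数据采集代码/images3/", exist_ok=True)
            for src in srcs.extract():
                item = GetjpgItem()
                # Resolve relative src values against the page URL
                item["url"] = response.urljoin(src)
                print(item["url"])  # print the URL that will be downloaded
                yield item

pipelines: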

    import urllib.request

    class GetjpgPipeline:
        count = 0  # used to name the image files

        def process_item(self, item, spider):
            GetjpgPipeline.count += 1
            try:
                url = item["url"]  # URL of the image
                if url[-4] == ".":
                    ext = url[-4:]  # extension including the dot, e.g. ".jpg"
                else:
                    ext = ""
                req = urllib.request.Request(url)
                data = urllib.request.urlopen(req, timeout=100)
                data = data.read()
                # Open the target file in binary mode, write the data, then close it
                with open("C:/Users/asus/Desktop/数据采集/数据采集代码/images3/" + str(GetjpgPipeline.count) + ext,
                          "wb") as fobj:
                    fobj.write(data)
            except Exception as err:
                print(err)
            return item

items:

    import scrapy

    class GetjpgItem(scrapy.Item):
        url = scrapy.Field()

Relevant part of settings:

    ITEM_PIPELINES = {
        'Getjpg.pipelines.GetjpgPipeline': 300,
    }

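2) Reflections

At first I could not work out how the various .py files relate to each other, and it took a long time to get a rough understanding. As I see it, a Scrapy project works like this: items.py declares the fields of an item; the spider (the main program) collects the data and stores it in those fields; the items are then passed to pipelines.py, which downloads and stores the data. Also remember to enable the pipeline in settings.py by removing the three '#' characters in front of the ITEM_PIPELINES lines.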

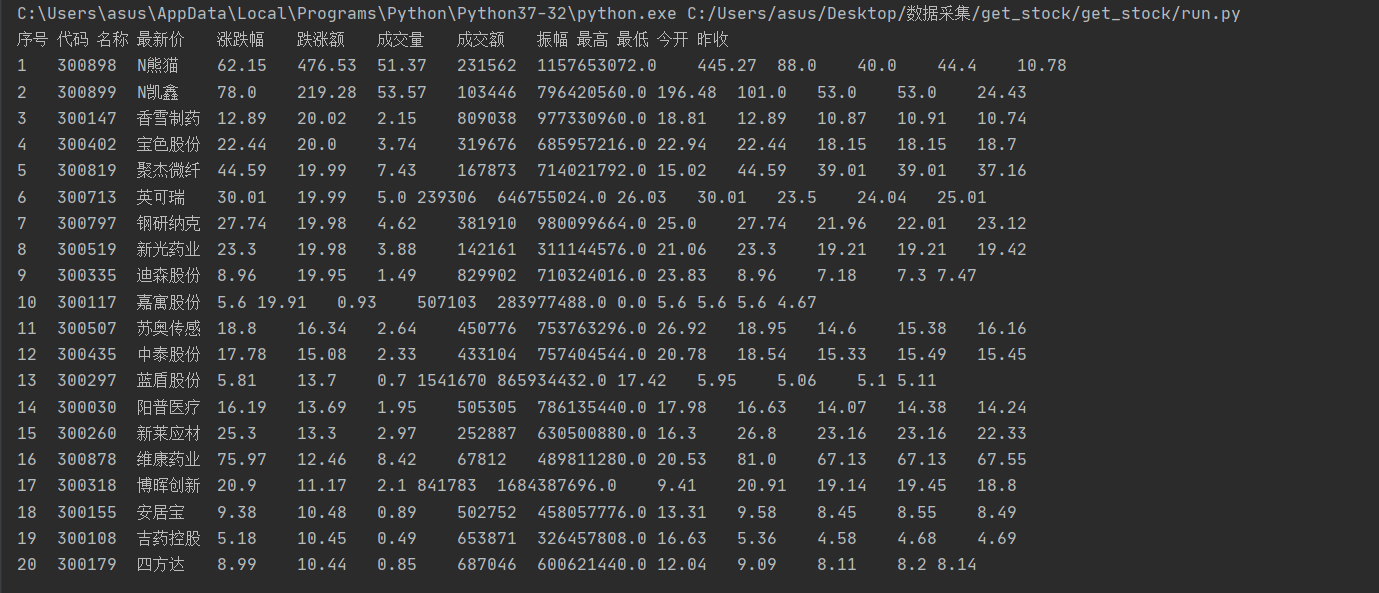

Task ③

1) Experiment: crawling stock information with the Scrapy framework

stock (the spider, i.e. the main program):

    import re
    import urllib.request

    import scrapy
    from bs4 import UnicodeDammit, BeautifulSoup

    from ..items import GetStockItem

    class StockSpider(scrapy.Spider):
        name = 'stock'
        start_urls = ['http://quote.eastmoney.com/stock_list.html']

        def parse(self, response):
            # The stock data comes from this JSONP endpoint, not from the start page
            url = "http://22.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112406286903286457721_1602799759543&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80&fields=f12,f14,f2,f3,f4,f5,f6,f7,f15,f16,f17,f18&_=1602799759869"
            headers = {
                "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) "
                              "Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3775.400 QQBrowser/10.6.4209.400"}
            req = urllib.request.Request(url, headers=headers)
            data = urllib.request.urlopen(req)
            data = data.read()
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, 'html.parser')
            # Grab the contents of the "diff" array as one string
            data = re.findall(r'"diff":\[(.*?)]', soup.text)
            # Strip the leading "{" and trailing "}", then split the records on "},{"
            datas = data[0].strip("{").strip("}").split('},{')
            for record in datas:
                item = GetStockItem()
                # Remove the double quotes and split on "," into "field:value" strings
                item["data"] = record.replace('"', "").split(",")
                yield item

pipelines:

    class GetStockPipeline:
        number = 0
        # Header row, printed once when the class is defined
        print('\t'.join(["No.", "Code", "Name", "Latest price", "Change %", "Change", "Volume",
                         "Turnover", "Amplitude", "High", "Low", "Open", "Prev. close"]))

        def process_item(self, item, spider):
            GetStockPipeline.number += 1
            try:
                stock = item["data"]
                # Entries look like "f2:..." or "f12:..."; [3:] and [4:] drop that prefix
                print('\t'.join([str(GetStockPipeline.number), stock[6][4:], stock[7][4:],
                                 stock[0][3:], stock[1][3:], stock[2][3:], stock[3][3:],
                                 stock[4][3:], stock[5][3:], stock[8][4:], stock[9][4:],
                                 stock[10][4:], stock[11][4:]]))
            except Exception as err:
                print(err)
            return item

items:

    import scrapy

    class GetStockItem(scrapy.Item):
        data = scrapy.Field()

Relevant part of settings:

    ITEM_PIPELINES = {
        'get_stock.pipelines.GetStockPipeline': 300,
    }
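
The spider pulls the "diff" array out of the response with a regular expression and string splitting. Since the response is JSONP (a JSON document wrapped in a callback call), another option is to strip the wrapper and decode it with the json module. The sketch below assumes the array sits under data.diff; the sample text is a shortened, made-up response, and parse_jsonp is a hypothetical helper name.

```python
import json
import re


def parse_jsonp(text):
    """Strip the callback wrapper name(...) and decode the JSON inside."""
    match = re.search(r"\((.*)\)", text, re.S)  # from the first "(" to the last ")"
    if match is None:
        raise ValueError("not a JSONP response")
    return json.loads(match.group(1))


# Made-up sample in the assumed shape of the real response
sample = ('jQuery1_2({"data":{"diff":['
          '{"f2":10.5,"f12":"000001","f14":"Demo A"},'
          '{"f2":3.2,"f12":"000002","f14":"Demo B"}]}});')

for row in parse_jsonp(sample)["data"]["diff"]:
    # Each row is a dict keyed by field code, so no prefix slicing is needed
    print(row["f12"], row["f14"], row["f2"])
```

With dictionaries, the pipeline could look fields up by name (for example row["f12"] for the stock code) instead of relying on their position in a list.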

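2) Reflections

The results were not saved to an Excel file; they are printed directly to the console, so the display is not very good. This task is not very different from the previous one, and we had already crawled stock data in the last assignment, so it did not take much time. There are still shortcomings, though. I did not figure out how to build the table across two files: after several attempts I still could not store the data in a table in pipelines.py and then print that table from the spider.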
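
One possible way to get a table is to let the pipeline write a CSV file, which Excel can open, using the open_spider and close_spider hooks that Scrapy calls on a pipeline. This is only a sketch: the class name CsvStockPipeline and the file name stocks.csv are made up, and the column order is taken from the header printed by GetStockPipeline.

```python
import csv

# (field code, column title) pairs, in the order of the printed header
COLUMNS = [("f12", "Code"), ("f14", "Name"), ("f2", "Latest price"),
           ("f3", "Change %"), ("f4", "Change"), ("f5", "Volume"),
           ("f6", "Turnover"), ("f7", "Amplitude"), ("f15", "High"),
           ("f16", "Low"), ("f17", "Open"), ("f18", "Prev. close")]


class CsvStockPipeline:
    """Hypothetical pipeline: writes each item as one CSV row."""

    def __init__(self, path="stocks.csv"):
        self.path = path

    def open_spider(self, spider):
        # Called once when the spider starts: open the file and write the header
        self.file = open(self.path, "w", newline="", encoding="utf-8-sig")
        self.writer = csv.writer(self.file)
        self.writer.writerow(["No."] + [title for _, title in COLUMNS])
        self.number = 0

    def process_item(self, item, spider):
        # item["data"] is a list of "field:value" strings such as "f12:000001"
        pairs = dict(entry.split(":", 1) for entry in item["data"])
        self.number += 1
        self.writer.writerow([self.number] + [pairs.get(code, "") for code, _ in COLUMNS])
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes: flush and close the file
        self.file.close()
```

To try it, the class would go into pipelines.py and be registered in ITEM_PIPELINES next to (or instead of) GetStockPipeline; the spider itself would not need to change.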

浙公网安备 33010602011771号
