Replacing a failed disk

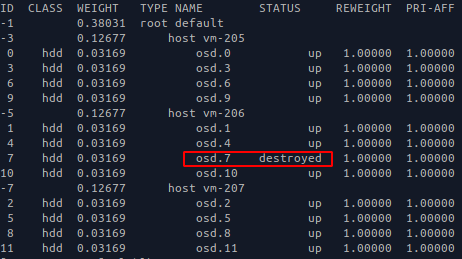

1. Find the OSD ID of the failed disk

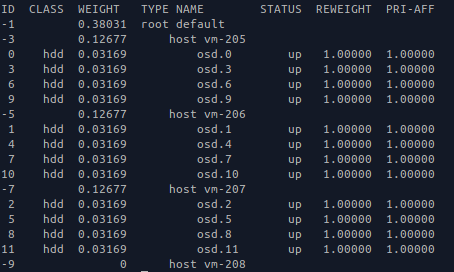

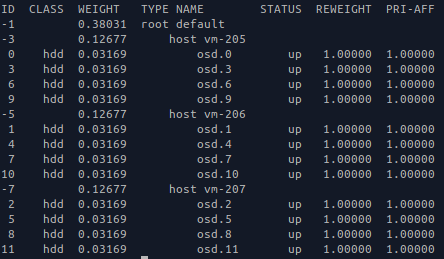

ceph osd tree

2. Destroy the OSD (this keeps its ID and CRUSH entry so the replacement disk can reuse them)

ceph osd destroy 7 --yes-i-really-mean-it

3. Physically replace the failed disk

4. Identify the device name of the new disk

lsblk

5. Wipe the disk

ceph-volume lvm zap /dev/sdg --destroy

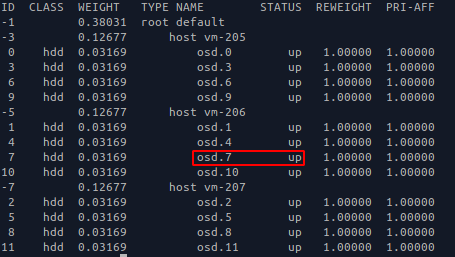

6. Prepare the new disk as a replacement, reusing the original OSD ID

ceph-volume lvm prepare --osd-id 7 --data /dev/sdg

7. Look up the fsid of the new OSD

cat /var/lib/ceph/osd/ceph-7/fsid

8. Activate the OSD with its ID and the fsid from step 7

ceph-volume lvm activate 7 fc43ff34-0abe-4e15-8964-3a2b2e77059a

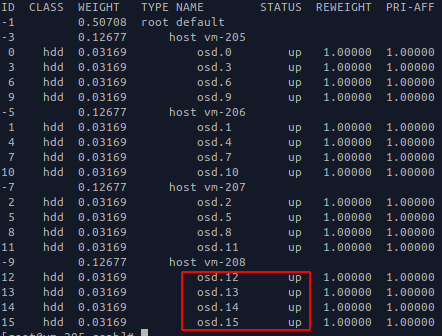
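Steps 5 to 8 can be wrapped in a small shell function. This is a minimal sketch, not a tested tool: `rebuild_osd` and the `DRY_RUN` switch are hypothetical names. It assumes the OSD has already been destroyed (step 2), the disk has been swapped, and it runs on the OSD host. By default it only prints the commands. With `DRY_RUN=0` it executes them and reads the fsid from the same file as in step 7.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Print the command instead of running it unless DRY_RUN=0
# (DRY_RUN is a hypothetical switch of this sketch, not a Ceph option).
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# rebuild_osd ID DEVICE (hypothetical helper): steps 5-8 for a swapped disk.
# Assumes "ceph osd destroy ID" (step 2) has already been run.
rebuild_osd() {
  local id="$1" dev="$2" fsid
  # Step 5: wipe old LVM/partition data from the new disk
  run ceph-volume lvm zap "$dev" --destroy
  # Step 6: create the OSD on the new disk, reusing the destroyed ID
  run ceph-volume lvm prepare --osd-id "$id" --data "$dev"
  # Step 7: the fsid file only exists once prepare has really run
  if [ "${DRY_RUN:-1}" = "1" ]; then
    fsid="<fsid>"
  else
    fsid="$(cat "/var/lib/ceph/osd/ceph-${id}/fsid")"
  fi
  # Step 8: activate (mount and start) the OSD
  run ceph-volume lvm activate "$id" "$fsid"
}
```

For example, `DRY_RUN=0 rebuild_osd 7 /dev/sdg` would replay the commands above for OSD 7.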

Manually adding an OSD node (scale out)

1. On the admin node

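Add the host entry '192.168.100.208 vm-208' to /etc/hosts so the name resolves, then copy the SSH key to the new node:

ssh-copy-id -i ~/.ssh/id_rsa.pub vm-208

Distribute /etc/hosts to all Ceph nodes:

ansible -i inventory all -m copy -a 'src=/etc/hosts dest=/etc/hosts'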

2. On the new node vm-208

scp vm-205:/etc/yum.repos.d/ceph_stable.repo /etc/yum.repos.d/ceph_stable.repo

mkdir -p /etc/ceph /var/lib/ceph/bootstrap-osd

scp vm-205:/etc/ceph/ceph.conf /etc/ceph

scp vm-205:/etc/ceph/ceph.client.admin.keyring /etc/ceph

scp vm-205:/var/lib/ceph/bootstrap-osd/ceph.keyring /var/lib/ceph/bootstrap-osd

pip3 install pip -U

pip3 config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

pip3 install six pyyaml

yum install -y ceph-osd

lsblk

ceph-volume lvm zap /dev/sdb --destroy

ceph-volume lvm zap /dev/sdc --destroy

ceph-volume lvm zap /dev/sdd --destroy

ceph-volume lvm zap /dev/sde --destroy

ceph-volume lvm zap /dev/sdf --destroy

ceph-volume lvm zap /dev/sdg --destroy

ceph-volume lvm create --data /dev/sdb --bluestore --block.db /dev/sdf --block.wal /dev/sdg --block.db-size 2.5G --block.wal-size 2.5G

ceph-volume lvm create --data /dev/sdc --bluestore --block.db /dev/sdf --block.wal /dev/sdg --block.db-size 2.5G --block.wal-size 2.5G

ceph-volume lvm create --data /dev/sdd --bluestore --block.db /dev/sdf --block.wal /dev/sdg --block.db-size 2.5G --block.wal-size 2.5G

ceph-volume lvm create --data /dev/sde --bluestore --block.db /dev/sdf --block.wal /dev/sdg --block.db-size 2.5G --block.wal-size 2.5G

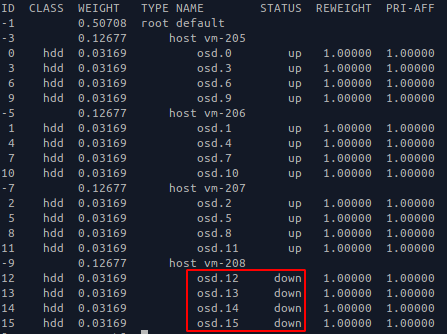
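The zap and create commands above follow one pattern. Every disk is wiped, then each data disk gets a BlueStore OSD whose block.db and block.wal live on the shared /dev/sdf and /dev/sdg. Here is a sketch of that loop, assuming the same 2.5G DB/WAL sizes. `add_osds` and `DRY_RUN` are hypothetical names, and nothing is executed unless `DRY_RUN=0` is set.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Print the command instead of running it unless DRY_RUN=0 (hypothetical switch).
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# add_osds DB_DEV WAL_DEV DATA_DEV... (hypothetical helper)
add_osds() {
  local db_dev="$1" wal_dev="$2" dev
  shift 2
  # Wipe every data disk plus the shared DB and WAL disks
  for dev in "$@" "$db_dev" "$wal_dev"; do
    run ceph-volume lvm zap "$dev" --destroy
  done
  # One BlueStore OSD per data disk; ceph-volume carves a 2.5G LV for
  # block.db and block.wal out of the shared devices for each OSD
  for dev in "$@"; do
    run ceph-volume lvm create --bluestore --data "$dev" \
      --block.db "$db_dev" --block.db-size 2.5G \
      --block.wal "$wal_dev" --block.wal-size 2.5G
  done
}
```

`DRY_RUN=0 add_osds /dev/sdf /dev/sdg /dev/sdb /dev/sdc /dev/sdd /dev/sde` corresponds to the commands run on vm-208.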

Manually removing an OSD node (scale in)

1. Stop all OSD services on the node

systemctl stop ceph-osd@12

systemctl stop ceph-osd@13

systemctl stop ceph-osd@14

systemctl stop ceph-osd@15

2. Purge all OSDs

ceph osd purge 12 --yes-i-really-mean-it

ceph osd purge 13 --yes-i-really-mean-it

ceph osd purge 14 --yes-i-really-mean-it

ceph osd purge 15 --yes-i-really-mean-it

3. Wipe the disk data

ceph-volume lvm zap --osd-id 12 --destroy

ceph-volume lvm zap --osd-id 13 --destroy

ceph-volume lvm zap --osd-id 14 --destroy

ceph-volume lvm zap --osd-id 15 --destroy

4. Remove the host from the CRUSH map

ceph osd crush tree

ceph osd crush rm vm-208

5. Uninstall the OSD packages

yum remove -y ceph-osd ceph-common

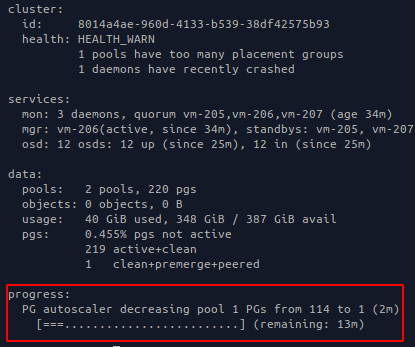
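The removal steps can be sketched as one function for the node being removed, which has the admin keyring copied during scale-out. One step is added that is not in the procedure above. The OSDs are first marked out, and the script waits until `ceph osd safe-to-destroy` reports them safe. This follows the upstream practice of letting data migrate off OSDs before stopping them. `remove_osd_host` and `DRY_RUN` are hypothetical names, and by default the commands are only printed.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Print the command instead of running it unless DRY_RUN=0 (hypothetical switch).
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# remove_osd_host HOST ID... (hypothetical helper), run on the node being removed.
remove_osd_host() {
  local host="$1" id
  shift
  # Extra step, not in the original procedure: drain the OSDs first
  for id in "$@"; do
    run ceph osd out "$id"
  done
  for id in "$@"; do
    # Poll until the cluster no longer needs data on this OSD
    while ! run ceph osd safe-to-destroy "osd.${id}"; do
      sleep 10
    done
  done
  # Step 1: stop the OSD daemons
  for id in "$@"; do
    run systemctl stop "ceph-osd@${id}"
  done
  # Step 2: remove the OSDs from the OSD map, CRUSH map and auth database
  for id in "$@"; do
    run ceph osd purge "$id" --yes-i-really-mean-it
  done
  # Step 3: wipe the LVs/disks that belonged to each OSD
  for id in "$@"; do
    run ceph-volume lvm zap --osd-id "$id" --destroy
  done
  # Step 4: remove the now-empty host bucket from CRUSH
  run ceph osd crush rm "$host"
  # Step 5: uninstall the packages
  run yum remove -y ceph-osd ceph-common
}
```

For the node above this would be `DRY_RUN=0 remove_osd_host vm-208 12 13 14 15`.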

Adjusting PGs

# warn: 1 pools have too many placement groups

After scaling out or in, the number of OSDs changes, so the pg_num of each pool needs to be adjusted. Use the following formula:

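Total PGs = ((Total_number_of_OSD * 100) / max_replication_count) / pool_count

Here 100 is the target number of PGs per OSD, and dividing by pool_count gives the value for each pool. Round the result up to the nearest power of two.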
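The formula can be checked with shell integer arithmetic. In this minimal sketch, `pgs_per_pool` is a hypothetical helper. It computes OSDs × 100 / (replicas × pools), rounds any fraction up, then doubles from 1 until it reaches the next power of two. For example, 12 OSDs, 3 replicas and 4 pools give 1200 / 3 / 4 = 100, which becomes 128.

```bash
#!/usr/bin/env bash
set -euo pipefail

# pgs_per_pool OSDS REPLICAS POOLS (hypothetical helper)
pgs_per_pool() {
  local osds="$1" size="$2" pools="$3"
  # Ceiling of (osds * 100) / (size * pools) using integer arithmetic
  local raw=$(( (osds * 100 + size * pools - 1) / (size * pools) ))
  local pg=1
  # Smallest power of two that is >= raw
  while [ "$pg" -lt "$raw" ]; do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}
```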

ceph osd lspools

ceph osd pool get test pg_num

ceph osd pool get test pgp_num

ceph osd dump |grep size|grep test

ceph osd pool set test pg_num 64

ceph osd pool set test pgp_num 64

# Reference: https://docs.ceph.com/en/pacific/rados/operations/placement-groups
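Because pg_num and pgp_num are set separately, a small wrapper helps keep them equal. This sketch uses `set_pool_pgs` and `DRY_RUN`, both hypothetical names. It refuses values that are not a power of two, using the bit test n & (n - 1) == 0, then sets both values and reads them back. Commands are only printed unless `DRY_RUN=0`.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Print the command instead of running it unless DRY_RUN=0 (hypothetical switch).
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# set_pool_pgs POOL N (hypothetical helper): set pg_num and pgp_num to N.
set_pool_pgs() {
  local pool="$1" pg="$2"
  # A power of two has exactly one bit set, so pg & (pg - 1) is 0
  if [ "$pg" -lt 1 ] || [ $(( pg & (pg - 1) )) -ne 0 ]; then
    echo "pg_num must be a power of two, got: $pg" >&2
    return 1
  fi
  run ceph osd pool set "$pool" pg_num "$pg"
  run ceph osd pool set "$pool" pgp_num "$pg"
  # Read back both values
  run ceph osd pool get "$pool" pg_num
  run ceph osd pool get "$pool" pgp_num
}
```

`DRY_RUN=0 set_pool_pgs test 64` matches the two `ceph osd pool set` commands used for the test pool.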

Health warning: 1 daemons have recently crashed

Resolution:

ceph crash ls-new

ceph crash info <crash-id>

ceph crash archive-all
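`ceph crash info` takes one crash ID at a time, so a loop makes it easier to review several new crashes before archiving them. This sketch makes two assumptions. First, the plain-text output of `ceph crash ls-new` has a header row starting with ID and the crash ID in the first column. Second, `new_crash_ids` and `review_crashes` are hypothetical names. If jq is available, parsing `ceph crash ls-new --format json` would be more robust.

```bash
#!/usr/bin/env bash
set -euo pipefail

# new_crash_ids (hypothetical helper): read plain "ceph crash ls-new" output on
# stdin and print one crash ID per line, skipping the "ID ..." header row.
new_crash_ids() {
  awk 'NF && $1 != "ID" {print $1}'
}

# review_crashes (hypothetical helper): show every new crash report,
# then archive them all so they no longer raise the health warning.
review_crashes() {
  local id
  for id in $(ceph crash ls-new | new_crash_ids); do
    ceph crash info "$id"
  done
  ceph crash archive-all
}
```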

Troubleshooting case 1

# ceph -c /etc/ceph/ceph.conf health detail

HEALTH_ERR 2 pgs inconsistent; 2 scrub errors

pg 2.3cb is active+clean+inconsistent, acting [56,63,8]

pg 2.6f5 is active+clean+inconsistent, acting [30,76,20]

ceph pg map 2.6f5

ceph pg map 2.3cb

ceph osd tree

ceph pg repair 2.3cb

ceph pg repair 2.6f5
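This case can be automated by taking the PG IDs straight from `ceph health detail`. The sketch assumes health detail lines of the form shown above (pg <pgid> is ...inconsistent...), possibly indented in newer releases. `inconsistent_pgs` and `repair_inconsistent_pgs` are hypothetical names. The sketch also runs `rados list-inconsistent-obj`, which is not part of the original case, to record which objects failed scrub before `ceph pg repair` is issued.

```bash
#!/usr/bin/env bash
set -euo pipefail

# inconsistent_pgs (hypothetical helper): read "ceph health detail" text on stdin
# and print the ID of every PG whose state contains "inconsistent".
inconsistent_pgs() {
  # Fields: $1="pg", $2=pgid, $3="is", $4=state; awk ignores leading indentation
  awk '$1 == "pg" && $3 == "is" && $4 ~ /inconsistent/ {print $2}'
}

# repair_inconsistent_pgs (hypothetical helper): inspect and repair each PG found.
repair_inconsistent_pgs() {
  local pg
  for pg in $(ceph health detail | inconsistent_pgs); do
    # Show the up/acting OSDs of the PG
    ceph pg map "$pg"
    # Not in the original case: list the objects that failed the last scrub
    rados list-inconsistent-obj "$pg" --format=json-pretty
    ceph pg repair "$pg"
  done
}
```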

Troubleshooting case 2

Troubleshooting case 3

浙公网安备 33010602011771号
