实验四

作业①:

from selenium import webdriver

from selenium.webdriver.edge.options import Options

import time

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.edge.service import Service

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions

import sqlite3

def spider():

index = 1

max_page = driver.find_element(By.CSS_SELECTOR,"#main-table_paginate > span.paginate_page > a:nth-child(5)").text

max_page = eval(max_page)

max_page = 5

print(max_page)

for page in range(max_page):

# WebDriverWait(driver, 10).until(expected_conditions.presence_of_element_located((By.CSS_SELECTOR,"#table_wrapper-table > tbody > tr")))

trlist = driver.find_elements(By.CSS_SELECTOR,"#table_wrapper-table > tbody > tr")

for tr in trlist:

res1 = [str(index)]

index += 1

for i in [2,3,5,6,7,8,9,10,11,12,13,14]:

res1.append(tr.find_element(By.CSS_SELECTOR,"td:nth-child(" + str(i)+ ")").text)

print(res1)

res.append(res1)

if page <= max_page - 2 :

next_button = driver.find_element(By.XPATH,'/html/body/div[1]/div[2]/div[2]/div[5]/div/div[2]/div/a[2]')

webdriver.ActionChains(driver).move_to_element(next_button ).click(next_button ).perform()

time.sleep(1.5)

edge_options = Options()

driver = webdriver.Edge(options=edge_options)

res = []

driver.get("http://quote.eastmoney.com/center/gridlist.html#sh_a_board")

spider()

driver.execute_script("window.open('http://quote.eastmoney.com/center/gridlist.html#sz_a_board','_self');")

time.sleep(1.5)

spider()

driver.execute_script("window.open('http://quote.eastmoney.com/center/gridlist.html#bj_a_board','_self');")

time.sleep(1.5)

spider()

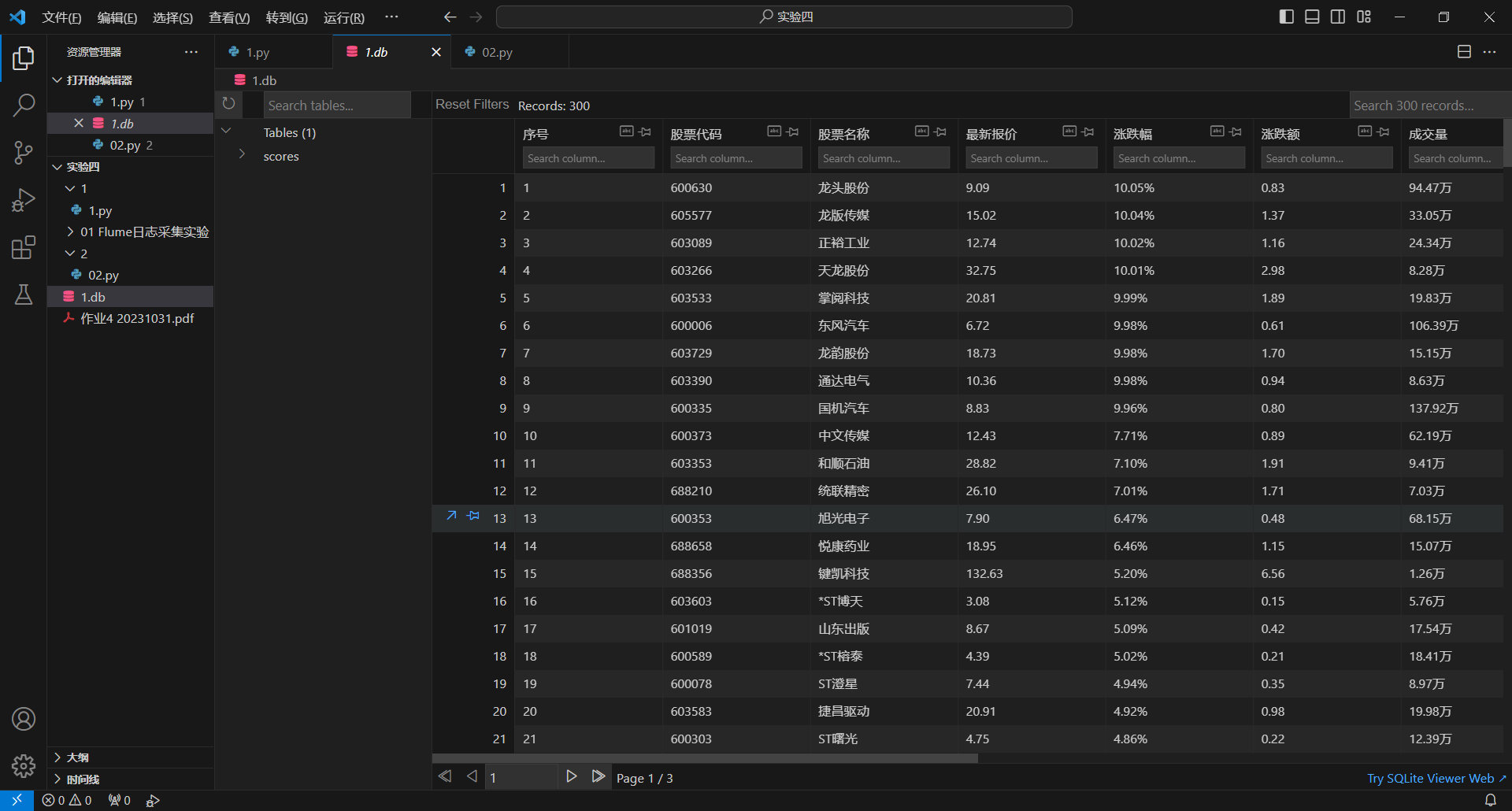

db = sqlite3.connect('1.db')

sql_text_1 = '''CREATE TABLE scores

(序号 TEXT,

股票代码 TEXT,

股票名称 TEXT,

最新报价 TEXT,

涨跌幅 TEXT,

涨跌额 TEXT,

成交量 TEXT,

成交额 TEXT,

振幅 TEXT,

最高 TEXT,

最低 TEXT,

今开 TEXT,

昨收 TEXT);'''

db.execute(sql_text_1)

db.commit()

for item in res:

sql_text_1 = "INSERT INTO scores VALUES('"+item[0] + "'"

for i in range(len(item) - 1):

sql_text_1 = sql_text_1 + ",'" + item[i+1] + "'"

sql_text_1 = sql_text_1 + ")"

print(sql_text_1)

db.execute(sql_text_1)

db.commit()

db.close()

- 运行结果:

![]()

- 心得体会:

加深了对selenium爬取网页方法的熟练程度

作业②:

- 要求:

熟练掌握 Selenium 查找HTML元素、实现用户模拟登录、爬取Ajax网页数据、等待HTML元素等内容。

使用Selenium框架+MySQL爬取中国mooc网课程资源信息(课程号、课程名称、学校名称、主讲教师、团队成员、参加人数、课程进度、课程简介)

候选网站:中国mooc网:https://www.icourse163.org

输出信息:MYSQL数据库存储和输出格式

Gitee链接

- 代码:

from sqlalchemy import create_engine

import pandas as pd

from selenium import webdriver

from selenium.webdriver.common.by import By

from scrapy.selector import Selector

import time

from sqlalchemy import create_engine

browser = webdriver.Edge()

url = 'https://www.icourse163.org/'

browser.get(url)

time.sleep(2)

# 登录

denglu=browser.find_element(By.XPATH,'//*[@id="app"]/div/div/div[1]/div[3]/div[3]/div')

denglu.click()

time.sleep(3)

browser.switch_to.default_content()

browser.switch_to.frame(browser.find_elements(By.TAG_NAME,'iframe')[0])

phone=browser.find_element(By.XPATH,'/html/body/div[2]/div[2]/div[2]/form/div/div[2]/div[2]/input')

phone.clear()

phone.send_keys("19859368639")

time.sleep(4)

password=browser.find_element(By.XPATH,'/html/body/div[2]/div[2]/div[2]/form/div/div[4]/div[2]/input[2]')

password.clear()

password.send_keys("lin031120")

deng=browser.find_element(By.XPATH,'//*[@id="submitBtn"]')

deng.click()

time.sleep(4)

browser.switch_to.default_content()

select_course=browser.find_element(By.XPATH,'/html/body/div[4]/div[1]/div/div/div/div/div[7]/div[1]/div/div/div[1]/div/div/div/div/div/div/input')

select_course.send_keys("python")

dianji=browser.find_element(By.XPATH,'//html/body/div[4]/div[1]/div/div/div/div/div[7]/div[1]/div/div/div[2]/span')

dianji.click()

time.sleep(4)

content = browser.page_source

print(content)

# 退出

browser.quit()

selector = Selector(text=content)

rows = selector.xpath("//div[@class='m-course-list']/div/div")

data = []

# 遍历每个<tr>元素

for row in rows:

lis = []

course= row.xpath(".//span[@class=' u-course-name f-thide']//text()").extract()

course_string="".join(course)

school=row.xpath(".//a[@class='t21 f-fc9']/text()").extract_first()

teacher=row.xpath(".//a[@class='f-fc9']//text()").extract_first()

team = row.xpath(".//a[@class='f-fc9']//text()").extract()

team_string=",".join(team)

number = row.xpath(".//span[@class='hot']/text()").extract_first()

time = row.xpath(".//span[@class='txt']/text()").extract_first()

jianjie=row.xpath(".//span[@class='p5 brief f-ib f-f0 f-cb']//text()").extract()

jianjie_string=",".join(jianjie)

lis.append(course_string)

lis.append(school)

lis.append(teacher)

lis.append(team_string)

lis.append(number)

lis.append(time)

lis.append(jianjie_string)

data.append(lis)

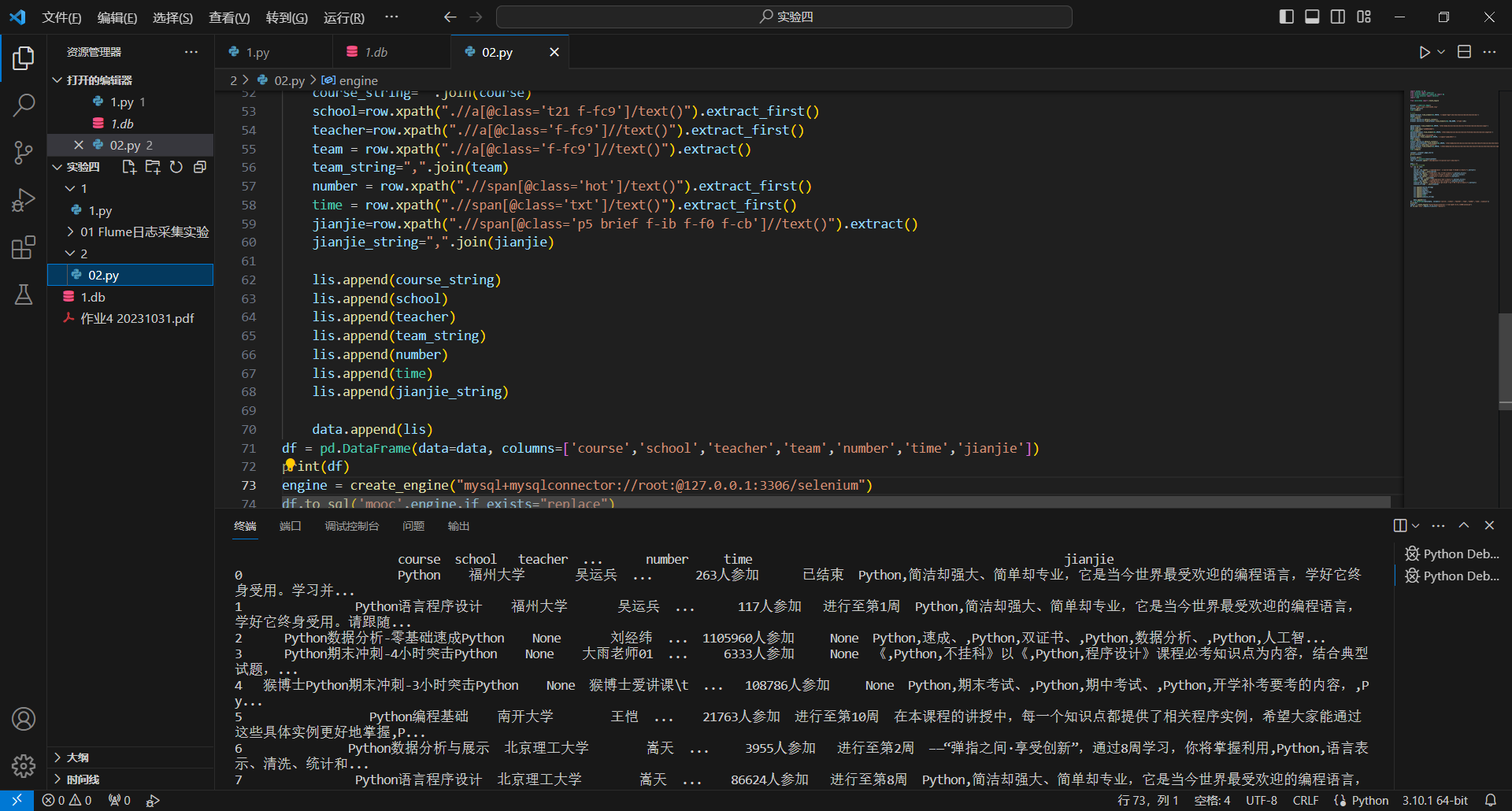

df = pd.DataFrame(data=data, columns=['course','school','teacher','team','number','time','jianjie'])

print(df)

engine = create_engine("mysql+mysqlconnector://root:@127.0.0.1:3306/selenium")

df.to_sql('mooc',engine,if_exists="replace")

engine = create_engine("mysql+mysqlconnector://root:@127.0.0.1:3306/selenium")

connection = engine.connect()

existing_data = pd.read_sql('SELECT * FROM mooc', connection)

# Only insert new data into the database

df = df[~df.duplicated(keep='first')]

new_data = df[~df.isin(existing_data)].dropna()

new_data.to_sql('mooc', engine, if_exists="append", index=False)

connection.close()

- 运行结果:

![]()

- 心得体会:

主要在登录界面卡了非常久,想了非常久都没想出来怎么登录,后来经过学习别人的代码,弄懂怎么登录moooc,收获很多

作业③:

- 要求:

掌握大数据相关服务,熟悉Xshell的使用

完成文档 华为云_大数据实时分析处理实验手册-Flume日志采集实验(部分)v2.docx 中的任务,即为下面5个任务,具体操作见文档。

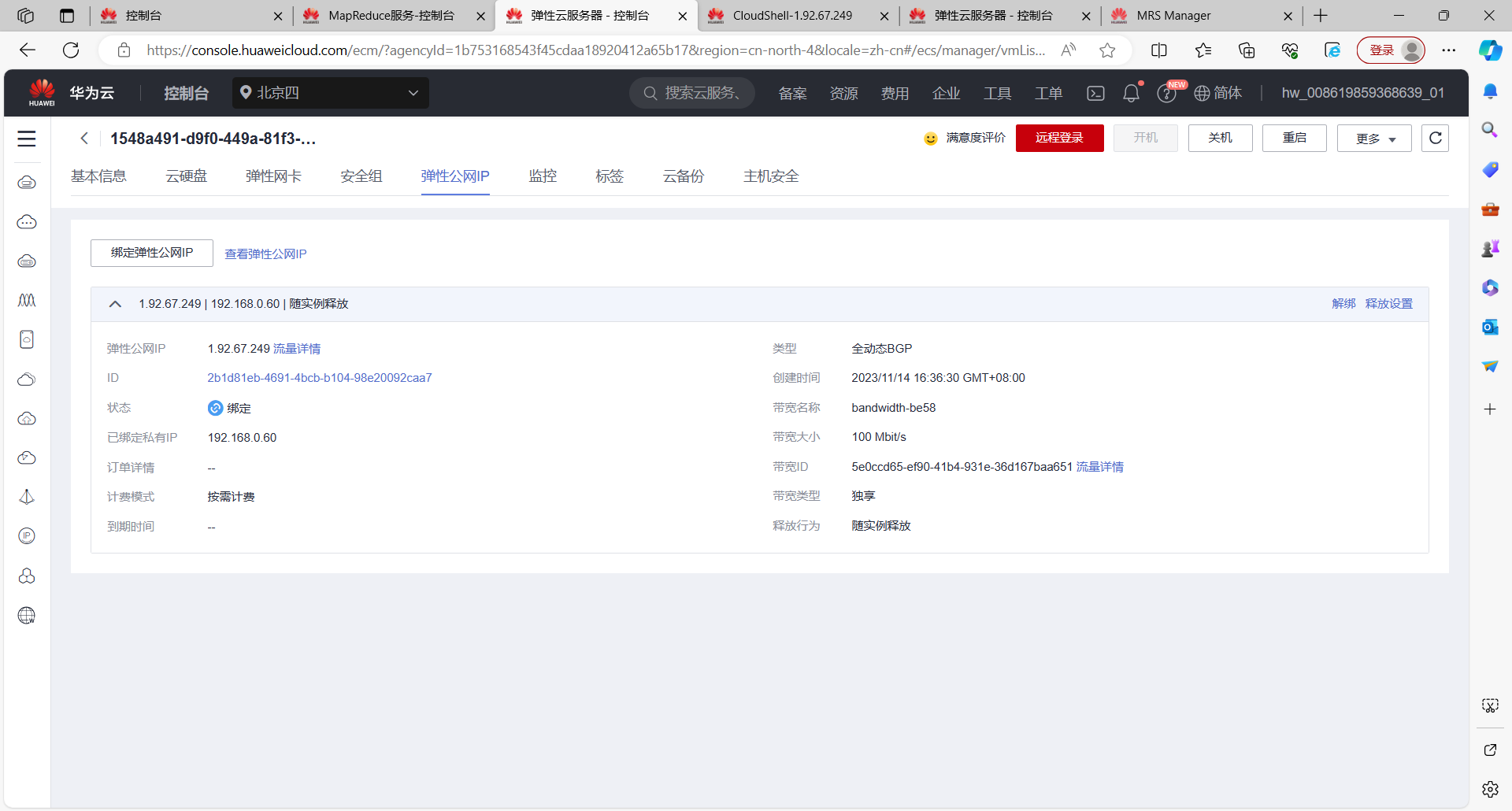

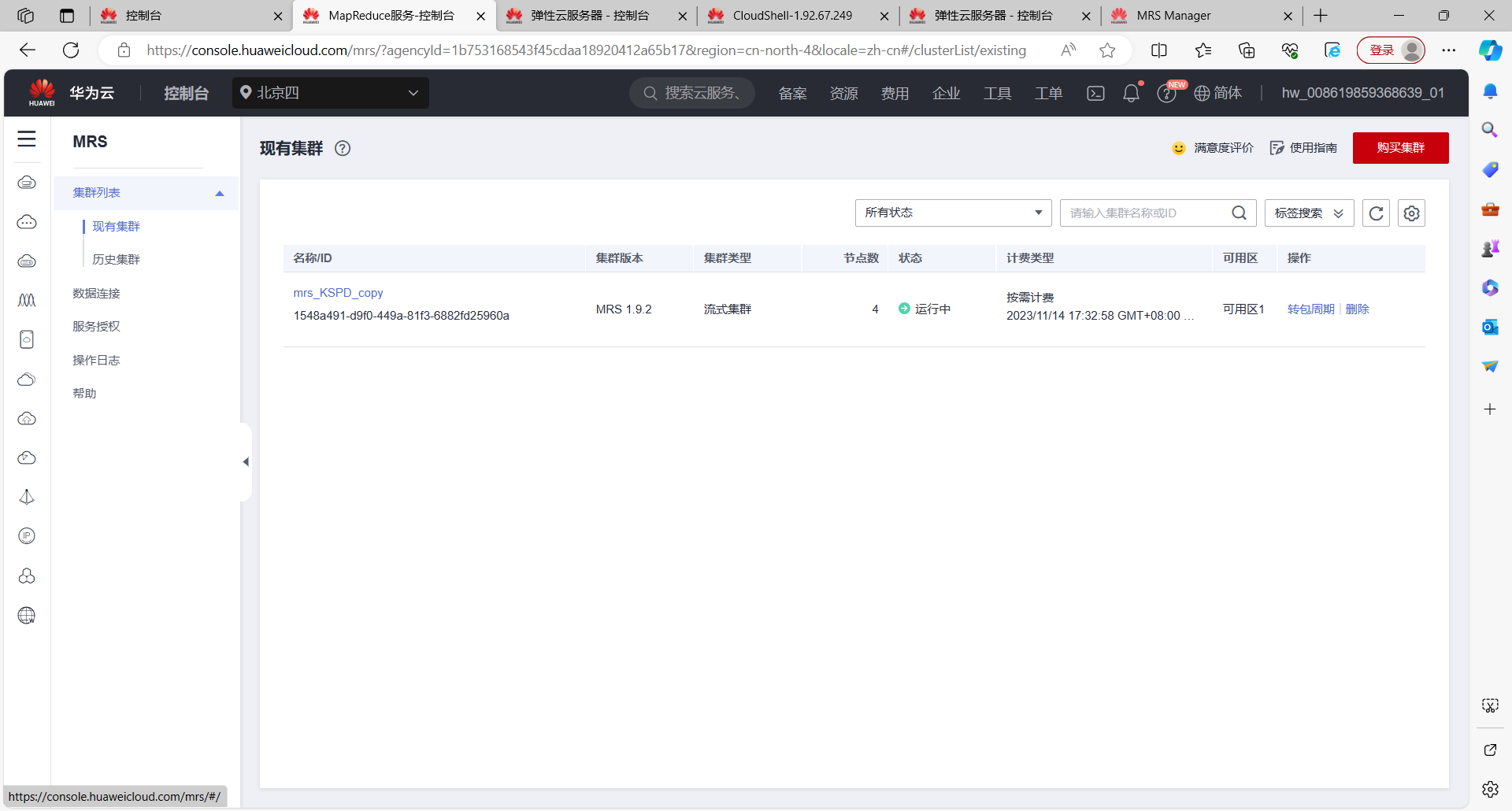

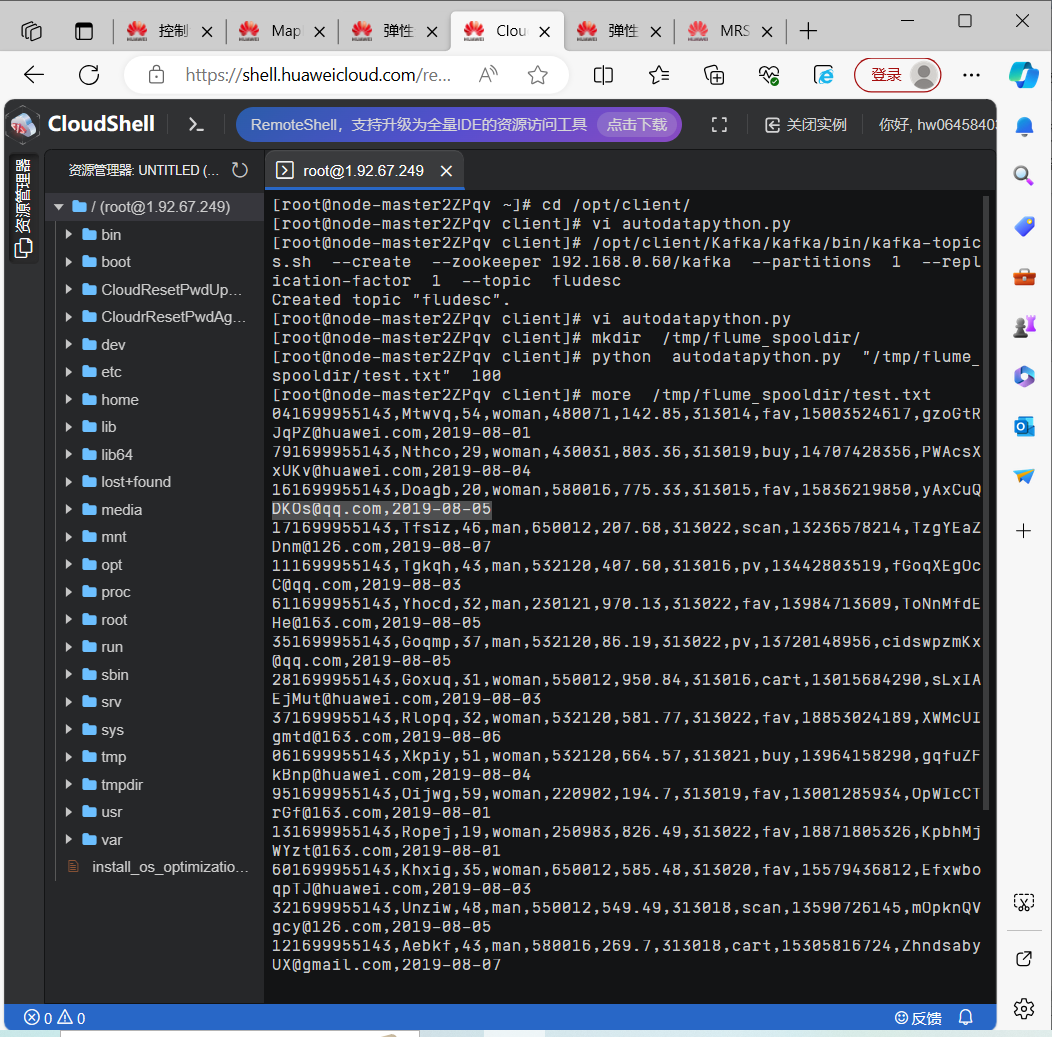

- 环境搭建:

任务一:开通MapReduce服务

![]()

![]()

- 实时分析开发实战:

- 任务一:Python脚本生成测试数据

![]()

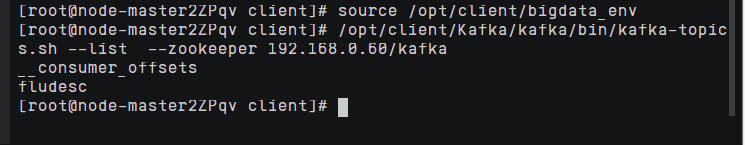

- 任务二:配置Kafka

![]()

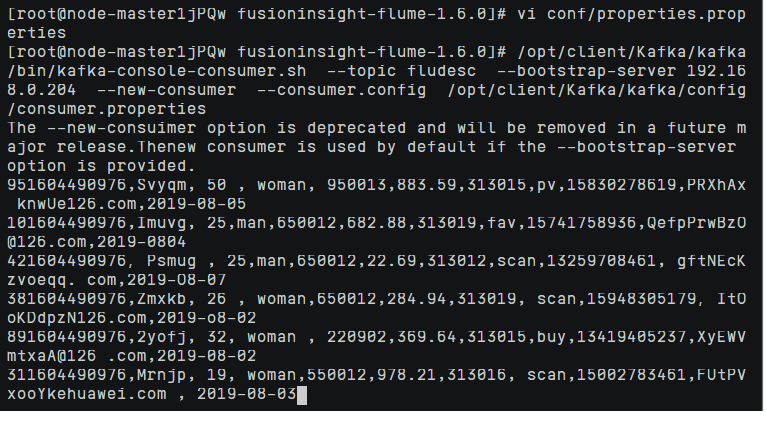

- 任务三: 安装Flume客户端

![]()

![]()

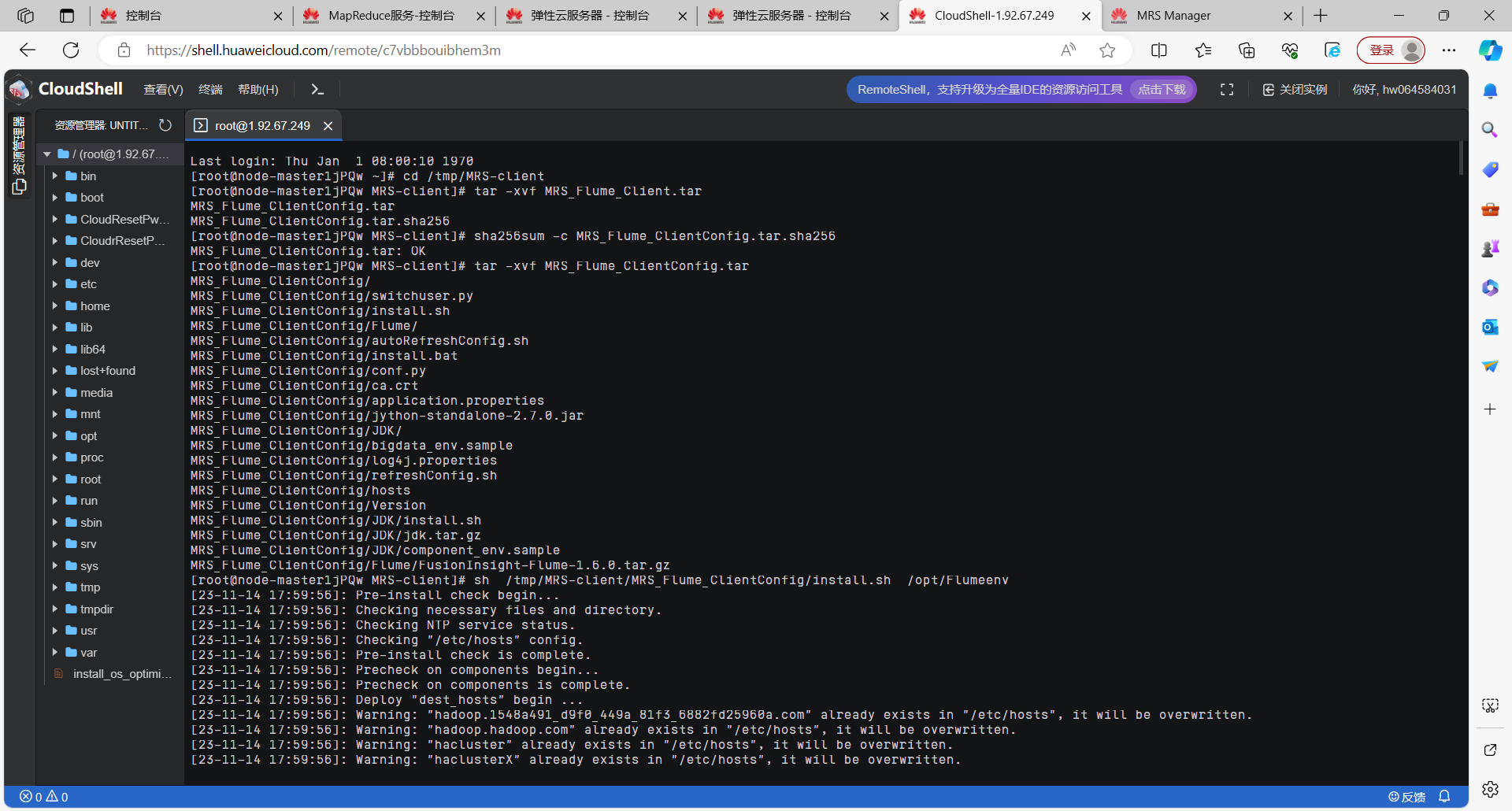

- 任务四:配置Flume采集数据

![]()

- 心得体会

学习了华为云平台的使用,并且发现原来在CloudShell里也可以打开两个终端页面,只需要找对ip地址和节点

![]()

![]()

![]()

![]()

![]()

![]()

![]()

![]()

![]()