7.3.0 Imports

import torch
from torch import nn
from d2l import torch as d2l
from matplotlib import pyplot as plt

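7.3.1 Defining the VGG-11 network model

# Create a VGG block from the given number of convolutional layers and channel counts.
# num_convs: number of convolutional layers in this VGG block
# in_channels: number of input channels of this VGG block
# out_channels: number of output channels of this VGG block
def vgg_block(num_convs, in_channels, out_channels):
    # Layers contained in this VGG block
    layers = []
    for _ in range(num_convs):
        # Add a convolutional layer with a 3×3 kernel and 1 pixel of zero padding on every side
        layers.append(nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
        # Add a ReLU layer
        layers.append(nn.ReLU())
        # The output of this layer becomes the input of the next one
        in_channels = out_channels
    # Finally, add a max-pooling layer with a 2×2 window and a stride of 2
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
    # Return the VGG block
    return nn.Sequential(*layers)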
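The block keeps the spatial size through every convolution and halves it once at the end. The following is a minimal sketch of that arithmetic in plain Python, using the standard output-size formula for convolution and pooling windows (dilation 1, floor rounding). The helper names `conv_out_size` and `vgg_block_out_size` are illustrative and do not appear in the original code.

```python
def conv_out_size(n, kernel_size, padding=0, stride=1):
    """Side length after a convolution or pooling window (dilation 1, floor mode)."""
    return (n + 2 * padding - kernel_size) // stride + 1


def vgg_block_out_size(n, num_convs):
    """Side length after one VGG block: num_convs 3x3 convs, then a 2x2 max pool."""
    for _ in range(num_convs):
        # 3x3 kernel with padding 1 and stride 1 leaves the size unchanged
        n = conv_out_size(n, kernel_size=3, padding=1)
    # 2x2 window with stride 2 halves the size
    return conv_out_size(n, kernel_size=2, stride=2)


same_size = conv_out_size(224, kernel_size=3, padding=1)
halved_size = vgg_block_out_size(224, num_convs=2)
print(same_size, halved_size)  # 224 112
```

Because the convolutions never change the height or width, the number of convolutions in a block affects only depth and channel count, not the output resolution.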

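# Build the VGG network model.
# With the conv_arch defined below, the model has 8 convolutional layers and 3 fully connected layers, hence the name VGG-11.
def vgg(conv_arch):
    # Blocks contained in the VGG network
    conv_blks = []
    # Number of input channels of the first block
    in_channels = 1
    # Build every VGG block and add it to the network
    for (num_convs, out_channels) in conv_arch:
        conv_blks.append(vgg_block(num_convs, in_channels, out_channels))
        in_channels = out_channels
    # Append a flatten layer, fully connected hidden layer 1, ReLU 1, Dropout 1,
    # fully connected hidden layer 2, ReLU 2, Dropout 2 and the fully connected output layer.
    # out_channels * 7 * 7 assumes a 224×224 input reduced to 7×7 by five blocks.
    # Return the assembled VGG network
    return nn.Sequential(
        *conv_blks, nn.Flatten(),
        nn.Linear(out_channels * 7 * 7, 4096), nn.ReLU(), nn.Dropout(0.5),
        nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(0.5),
        nn.Linear(4096, 10))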
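The factor `7 * 7` in the first fully connected layer is tied to the input resolution: each block halves the side length, so five blocks turn 224 into 7. As a rough sketch, the helper below (a hypothetical name, `classifier_in_features`, not part of the original code) computes the number of features the first `nn.Linear` would need for a square input of another size, assuming every block ends with the same 2×2, stride-2 pooling.

```python
def classifier_in_features(conv_arch, input_size):
    """Input features of the first fully connected layer for a square input."""
    size = input_size
    for _num_convs, _out_channels in conv_arch:
        # Each VGG block ends with a 2x2, stride-2 max pool (floor rounding)
        size //= 2
    last_out_channels = conv_arch[-1][1]
    return last_out_channels * size * size


# Architecture used in this section: (number of convs, output channels) per block
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

features_224 = classifier_in_features(conv_arch, 224)
features_96 = classifier_in_features(conv_arch, 96)
print(features_224)  # 25088 = 512 * 7 * 7
print(features_96)   # 4608 = 512 * 3 * 3
```

Feeding the network an input that is not 224×224 would therefore raise a shape mismatch at the first `nn.Linear` unless that layer is resized accordingly.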

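# Specify the number of convolutional layers and output channels of each VGG block.
# The network has 5 VGG blocks: the first two contain 1 convolutional layer each and the last three contain 2 each.
# The blocks have 64, 128, 256, 512 and 512 output channels respectively.
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

# Create the VGG-11 network model
net = vgg(conv_arch)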

# Build a single-channel sample of height and width 224 and observe the output shape of each VGG block and fully connected layer
X = torch.randn(size=(1, 1, 224, 224))
for blk in net:
    X = blk(X)
    print(blk.__class__.__name__, 'output shape:\t', X.shape)

# Output:
# Sequential output shape: torch.Size([1, 64, 112, 112])
# Sequential output shape: torch.Size([1, 128, 56, 56])
# Sequential output shape: torch.Size([1, 256, 28, 28])
# Sequential output shape: torch.Size([1, 512, 14, 14])
# Sequential output shape: torch.Size([1, 512, 7, 7])
# Flatten output shape: torch.Size([1, 25088])
# Linear output shape: torch.Size([1, 4096])
# ReLU output shape: torch.Size([1, 4096])
# Dropout output shape: torch.Size([1, 4096])
# Linear output shape: torch.Size([1, 4096])
# ReLU output shape: torch.Size([1, 4096])
# Dropout output shape: torch.Size([1, 4096])
# Linear output shape: torch.Size([1, 10])

# Define a smaller VGG network by dividing the number of output channels of every block by ratio
ratio = 4
small_conv_arch = [(pair[0], pair[1] // ratio) for pair in conv_arch]
print(small_conv_arch)

# Output:
# [(1, 16), (1, 32), (2, 64), (2, 128), (2, 128)]

net = vgg(small_conv_arch)
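To get a feel for how much the channel reduction shrinks the model, the parameter counts can be worked out by hand. The sketch below does so in plain Python; `count_params` is a hypothetical helper, and it assumes the layer layout used above (3×3 convolutions with bias, a 224×224 single-channel input, and fully connected layers of 4096, 4096 and 10 units).

```python
def count_params(conv_arch, in_channels=1, input_size=224, num_classes=10):
    """Return (conv_params, fc_params) for the VGG layout used in this section."""
    conv_params = 0
    for num_convs, out_channels in conv_arch:
        for _ in range(num_convs):
            # 3x3 kernel weights plus one bias per output channel
            conv_params += in_channels * out_channels * 3 * 3 + out_channels
            in_channels = out_channels
    # Every block halves the side length once
    side = input_size // 2 ** len(conv_arch)
    fc_params = 0
    fc_in = in_channels * side * side
    for fc_out in (4096, 4096, num_classes):
        # Weight matrix plus bias vector
        fc_params += fc_in * fc_out + fc_out
        fc_in = fc_out
    return conv_params, fc_params


conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))
ratio = 4
small_conv_arch = [(pair[0], pair[1] // ratio) for pair in conv_arch]

full = count_params(conv_arch)
small = count_params(small_conv_arch)
print("full  (conv, fc, total):", full[0], full[1], sum(full))
print("small (conv, fc, total):", small[0], small[1], sum(small))
```

Under these assumptions the convolutional parameters shrink by roughly ratio squared, because both the input and output channel counts of most layers are divided by ratio. The fully connected layers shrink far less, since only the first of them depends on the channel count, so they still dominate the small model.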

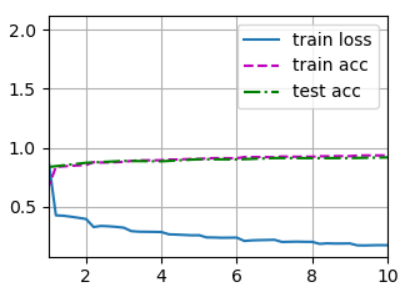

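7.3.2 Training

# Learning rate, number of epochs and batch size
lr, num_epochs, batch_size = 0.05, 10, 128
# Download Fashion-MNIST, shuffle the training data and split the data into batches to obtain iterable
# training and test sets (each batch has the form (features, numeric labels)),
# while enlarging the images from 28×28 to 224×224
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=224)
# Train the model on the GPU; report the average loss of the last epoch, the average accuracy
# on the training set, the average accuracy on the test set, and the number of images trained per second
d2l.train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())

# Output:
# loss 0.175, train acc 0.935, test acc 0.918
# 102.0 examples/sec on cuda:0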
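The reported throughput can be turned into a rough training-time estimate. The sketch below is back-of-the-envelope arithmetic only: the batch size, epoch count and 102.0 examples/sec come from the section above, while the figure of 60,000 training images is the published size of the Fashion-MNIST training set rather than something the log prints. It ignores evaluation and data-loading time outside the timed training steps.

```python
import math

# Values taken from the training setup and log above
batch_size, num_epochs = 128, 10
examples_per_sec = 102.0

# Size of the Fashion-MNIST training set (dataset fact, not shown in the log)
num_train = 60_000

# Resizing 28x28 -> 224x224 multiplies the number of pixels per image
pixel_ratio = (224 * 224) // (28 * 28)

# Number of mini-batches per epoch (the last batch may be smaller)
iters_per_epoch = math.ceil(num_train / batch_size)

# Approximate training time implied by the reported throughput
sec_per_epoch = num_train / examples_per_sec
total_minutes = num_epochs * sec_per_epoch / 60

print(f"pixels per image grow {pixel_ratio}x")
print(f"{iters_per_epoch} iterations per epoch")
print(f"about {sec_per_epoch:.0f} s per epoch, {total_minutes:.0f} min for {num_epochs} epochs")
```

Under these assumptions an epoch takes a little under ten minutes on the GPU used here, largely because each enlarged image carries 64 times as many pixels as the original.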

7.3.3 Visualizing the training results

plt.savefig('OutPut.png')

The complete code for this section is as follows:

import torch
from torch import nn
from d2l import torch as d2l
from matplotlib import pyplot as plt

# ------------------------------Defining the VGG-11 network model------------------------------------

# Create a VGG block from the given number of convolutional layers and channel counts.
# num_convs: number of convolutional layers in this VGG block
# in_channels: number of input channels of this VGG block
# out_channels: number of output channels of this VGG block
def vgg_block(num_convs, in_channels, out_channels):
    # Layers contained in this VGG block
    layers = []
    for _ in range(num_convs):
        # Add a convolutional layer with a 3×3 kernel and 1 pixel of zero padding on every side
        layers.append(nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
        # Add a ReLU layer
        layers.append(nn.ReLU())
        # The output of this layer becomes the input of the next one
        in_channels = out_channels
    # Finally, add a max-pooling layer with a 2×2 window and a stride of 2
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
    # Return the VGG block
    return nn.Sequential(*layers)

# Build the VGG network model.
# With the conv_arch defined below, the model has 8 convolutional layers and 3 fully connected layers, hence the name VGG-11.
def vgg(conv_arch):
    # Blocks contained in the VGG network
    conv_blks = []
    # Number of input channels of the first block
    in_channels = 1
    # Build every VGG block and add it to the network
    for (num_convs, out_channels) in conv_arch:
        conv_blks.append(vgg_block(num_convs, in_channels, out_channels))
        in_channels = out_channels
    # Append a flatten layer, fully connected hidden layer 1, ReLU 1, Dropout 1,
    # fully connected hidden layer 2, ReLU 2, Dropout 2 and the fully connected output layer.
    # out_channels * 7 * 7 assumes a 224×224 input reduced to 7×7 by five blocks.
    # Return the assembled VGG network
    return nn.Sequential(
        *conv_blks, nn.Flatten(),
        nn.Linear(out_channels * 7 * 7, 4096), nn.ReLU(), nn.Dropout(0.5),
        nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(0.5),
        nn.Linear(4096, 10))

# Specify the number of convolutional layers and output channels of each VGG block.
# The network has 5 VGG blocks: the first two contain 1 convolutional layer each and the last three contain 2 each.
# The blocks have 64, 128, 256, 512 and 512 output channels respectively.
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

# Create the VGG-11 network model
net = vgg(conv_arch)

# Build a single-channel sample of height and width 224 and observe the output shape of each VGG block and fully connected layer
X = torch.randn(size=(1, 1, 224, 224))
for blk in net:
    X = blk(X)
    print(blk.__class__.__name__, 'output shape:\t', X.shape)

# Output:
# Sequential output shape: torch.Size([1, 64, 112, 112])
# Sequential output shape: torch.Size([1, 128, 56, 56])
# Sequential output shape: torch.Size([1, 256, 28, 28])
# Sequential output shape: torch.Size([1, 512, 14, 14])
# Sequential output shape: torch.Size([1, 512, 7, 7])
# Flatten output shape: torch.Size([1, 25088])
# Linear output shape: torch.Size([1, 4096])
# ReLU output shape: torch.Size([1, 4096])
# Dropout output shape: torch.Size([1, 4096])
# Linear output shape: torch.Size([1, 4096])
# ReLU output shape: torch.Size([1, 4096])
# Dropout output shape: torch.Size([1, 4096])
# Linear output shape: torch.Size([1, 10])

# Define a smaller VGG network by dividing the number of output channels of every block by ratio
ratio = 4
small_conv_arch = [(pair[0], pair[1] // ratio) for pair in conv_arch]
print(small_conv_arch)

# Output:
# [(1, 16), (1, 32), (2, 64), (2, 128), (2, 128)]

net = vgg(small_conv_arch)

# ------------------------------Training------------------------------------

# Learning rate, number of epochs and batch size
lr, num_epochs, batch_size = 0.05, 10, 128

# Download Fashion-MNIST, shuffle the training data and split the data into batches to obtain iterable
# training and test sets (each batch has the form (features, numeric labels)),
# while enlarging the images from 28×28 to 224×224
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=224)

# Train the model on the GPU; report the average loss of the last epoch, the average accuracy
# on the training set, the average accuracy on the test set, and the number of images trained per second
d2l.train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())

# Output:
# loss 0.175, train acc 0.935, test acc 0.918
# 102.0 examples/sec on cuda:0

# ------------------------------Visualizing the training results------------------------------------

plt.savefig('OutPut.png')
