Concepts

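kubeadm bootstraps a Kubernetes cluster on existing infrastructure and performs a set of basic maintenance tasks. It does not build the underlying server environment; it only adds the essential core add-ons, CoreDNS and kube-proxy, to the cluster. Its two core commands are kubeadm init and kubeadm join: the former creates a new control plane, and the latter joins a node to an existing control plane. With these two commands you can quickly initialize a production-grade Kubernetes cluster.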

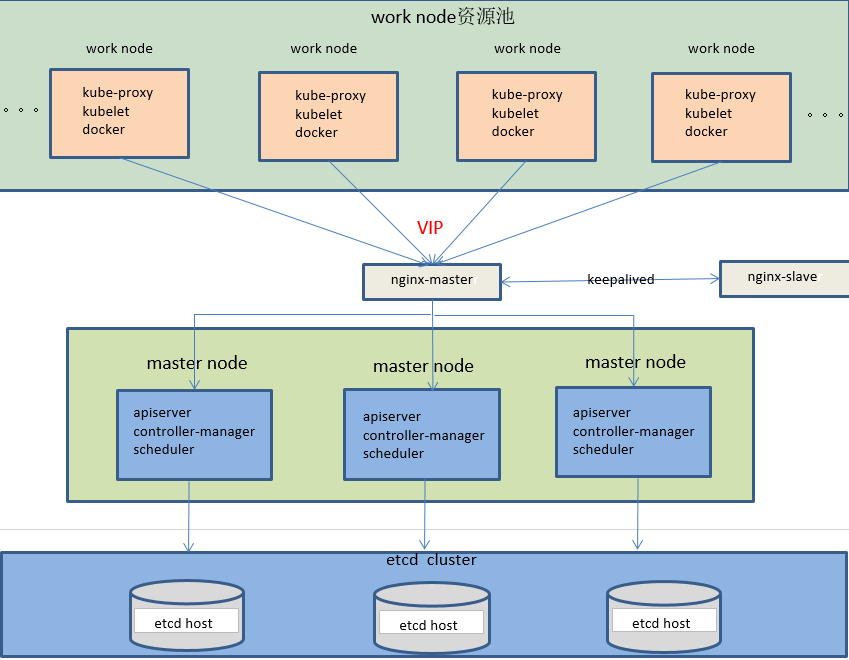

Deployment architecture

Environment preparation

System environment

- OS: CentOS 7.6
- Docker: 19.03.8
- Kubernetes: 1.18.2

| IP address | Hostname | Role |
|---|---|---|
| 192.168.248.150 | k8s-master-01 | master |
| 192.168.248.151 | k8s-master-02 | master |
| 192.168.248.152 | k8s-master-03 | master |
| 192.168.248.153 | k8s-node-01 | worker |
| 192.168.248.154 | k8s-node-02 | worker |
| 192.168.248.155 | k8s-node-03 | worker |

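System initialization

- Upgrade the kernel to the latest version
- Disable the firewall and SELinux
- Configure hostname resolution
- Configure time synchronization
- Disable swap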
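The steps above are listed without commands. The following is a minimal sketch for CentOS 7, assuming it runs as root on every node and that chrony is used for time synchronization. The function names `render_hosts`, `comment_out_swap`, and `prepare_host` are hypothetical, and the kernel upgrade is left out because it depends on which kernel repository you use. Mapping `k8s-api-server` to 192.168.248.151 is also an assumption (that address is the one passed to `--apiserver-advertise-address` later); in production the name would usually point at a load balancer or VIP in front of all masters.

```shell
#!/usr/bin/env bash
# Host preparation sketch for CentOS 7 (assumption: run as root on every node).
# Only defines functions; nothing changes on the host until prepare_host is called.
set -euo pipefail

# Nodes from the table above. The k8s-api-server line is a hypothetical mapping
# to the first master; point it at a VIP or load balancer in production.
CLUSTER_HOSTS="192.168.248.150 k8s-master-01
192.168.248.151 k8s-master-02
192.168.248.152 k8s-master-03
192.168.248.153 k8s-node-01
192.168.248.154 k8s-node-02
192.168.248.155 k8s-node-03
192.168.248.151 k8s-api-server"

# Print the /etc/hosts entries for every cluster node.
render_hosts() {
  printf '%s\n' "$CLUSTER_HOSTS"
}

# Comment out active swap entries in an fstab-style file so swap stays off after reboot.
comment_out_swap() {
  sed -ri '/^[^#].*\sswap\s/s/^/#/' "$1"
}

# Apply the firewall, SELinux, hosts, time-sync, and swap steps (run once per node;
# calling it again appends duplicate /etc/hosts lines).
prepare_host() {
  systemctl stop firewalld
  systemctl disable firewalld
  setenforce 0 || true      # returns non-zero if SELinux is already disabled
  sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config
  render_hosts >> /etc/hosts
  yum install -y chrony
  systemctl start chronyd
  systemctl enable chronyd
  swapoff -a
  comment_out_swap /etc/fstab
}
```

Keeping the host-changing commands inside `prepare_host` means the file can be sourced and inspected before anything is modified.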

Install docker-ce

For details, refer to the Docker installation guide.

Add the Aliyun Docker repository and install Docker:

```shell
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum makecache fast
# Pin the Docker version listed in the system environment above
yum install -y docker-ce-19.03.8 docker-ce-cli-19.03.8 containerd.io
systemctl enable docker && systemctl start docker
```

Configure Docker registry mirrors, the cgroup driver, log rotation, and the storage driver:

```shell
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors": ["https://registry.docker-cn.com", "https://docker.mirrors.ustc.edu.cn"]
}
EOF

# Restart Docker so the configuration takes effect
systemctl daemon-reload
systemctl restart docker
```
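kubelet and Docker must use the same cgroup driver, and kubeadm recommends systemd on systemd-based hosts, which is why daemon.json sets `native.cgroupdriver=systemd`. A hedged sketch for checking this after the restart: `configured_cgroup_driver` and `verify_docker` are hypothetical helper names, while `docker info --format '{{.CgroupDriver}}'` reports the driver the running daemon actually uses.

```shell
#!/usr/bin/env bash
# Sketch: confirm Docker picked up the systemd cgroup driver from daemon.json.
set -euo pipefail

# Print the cgroup driver set through exec-opts in a daemon.json file (empty if none).
configured_cgroup_driver() {
  { grep -o 'native\.cgroupdriver=[a-z]*' "$1" || true; } | cut -d= -f2
}

# Compare the configured driver with the one reported by the running daemon.
verify_docker() {
  local file="${1:-/etc/docker/daemon.json}"
  local want have
  want=$(configured_cgroup_driver "$file")
  have=$(docker info --format '{{.CgroupDriver}}')
  if [ "$want" = "systemd" ] && [ "$have" = "systemd" ]; then
    echo "cgroup driver OK: $have"
  else
    echo "cgroup driver mismatch: configured='$want' running='$have'" >&2
    return 1
  fi
}
```

Run `verify_docker` on each node after restarting Docker; a mismatch usually means the daemon was not restarted or daemon.json failed to parse.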

Tune system parameters

File descriptor, process, and locked-memory limits

```shell
echo "* soft nofile 65536" >> /etc/security/limits.conf
echo "* hard nofile 65536" >> /etc/security/limits.conf
echo "* soft nproc 65536" >> /etc/security/limits.conf
echo "* hard nproc 65536" >> /etc/security/limits.conf
echo "* soft memlock unlimited" >> /etc/security/limits.conf
echo "* hard memlock unlimited" >> /etc/security/limits.conf
```
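Appending with `echo >>` adds duplicate lines every time the step is re-run. A minimal idempotent variant, assuming the same six entries; `append_once` and `apply_limits` are hypothetical helpers. The new limits only apply to sessions started after the change, so check them with `ulimit -n` from a fresh login.

```shell
#!/usr/bin/env bash
# Sketch: write the limits.conf entries above without duplicating them on re-runs.
set -euo pipefail

# Append LINE to FILE unless an identical line is already present.
append_once() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Apply the file descriptor, process, and locked-memory limits to FILE.
apply_limits() {
  local file="${1:-/etc/security/limits.conf}"
  local entry
  for entry in "soft nofile 65536" "hard nofile 65536" \
               "soft nproc 65536" "hard nproc 65536" \
               "soft memlock unlimited" "hard memlock unlimited"; do
    append_once "* $entry" "$file"
  done
}
```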

Kernel parameters

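```shell
# Load the kernel modules needed by the container runtime and for bridged traffic
modprobe overlay
modprobe br_netfilter

cat > /etc/sysctl.d/99-kubernetes-cri.conf <<EOF
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

# Reload all sysctl configuration files
sysctl --system
```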
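`modprobe` only loads overlay and br_netfilter until the next reboot, and the `net.bridge.*` keys exist only while br_netfilter is loaded. A sketch, assuming systemd-modules-load reads /etc/modules-load.d/ at boot (as it does on CentOS 7); `persist_modules`, `sysctl_path`, and `check_sysctls` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: persist the kernel modules and verify the sysctls set above.
set -euo pipefail

# Write the module list so systemd-modules-load loads it at every boot.
persist_modules() {
  local dir="${1:-/etc/modules-load.d}"
  printf '%s\n' overlay br_netfilter > "$dir/k8s.conf"
}

# Map a dotted sysctl key to its /proc/sys path, e.g. net.ipv4.ip_forward.
sysctl_path() {
  echo "/proc/sys/${1//.//}"
}

# Report every required key whose current value is not 1.
check_sysctls() {
  local key rc=0
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
    if [ "$(cat "$(sysctl_path "$key")" 2>/dev/null)" != "1" ]; then
      echo "not set: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

After a reboot, `check_sysctls` should print nothing and return 0.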

Install kubelet, kubeadm, and kubectl

For details, refer to the official installation guide.

```shell
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Pin the packages to the cluster version passed to kubeadm init (v1.18.2)
yum install -y kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2
systemctl enable kubelet
```
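kubeadm can only deploy a control plane of its own minor version or one minor version older, so the installed tools should match the `--kubernetes-version` used in the init command. A sketch that compares them with the target; `TARGET_VERSION`, `normalize_version`, and `check_versions` are hypothetical names, and the version-printing flags are the ones available in the 1.18 tools.

```shell
#!/usr/bin/env bash
# Sketch: make sure kubeadm, kubelet, and kubectl match the target cluster version.
set -euo pipefail

TARGET_VERSION="v1.18.2"   # assumption: same value as --kubernetes-version

# Keep only the last space-separated field, e.g. "Kubernetes v1.18.2" -> "v1.18.2".
normalize_version() {
  echo "${1##* }"
}

# Compare each installed tool's version with TARGET_VERSION.
check_versions() {
  local name got rc=0
  for name in kubeadm kubelet kubectl; do
    case "$name" in
      kubeadm) got=$(kubeadm version -o short) ;;
      kubelet) got=$(kubelet --version) ;;
      kubectl) got=$(kubectl version --client --short) ;;
    esac
    got=$(normalize_version "$got")
    if [ "$got" != "$TARGET_VERSION" ]; then
      echo "$name is $got, expected $TARGET_VERSION" >&2
      rc=1
    fi
  done
  return "$rc"
}
```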

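Deploy the control plane

Initialize the control plane

Run the following on the first control-plane node. In this guide that is k8s-master-02, whose address 192.168.248.151 is passed to --apiserver-advertise-address. The name k8s-api-server given to --control-plane-endpoint must resolve on every node, for example through /etc/hosts.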

```shell
kubeadm init \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.18.2 \
  --control-plane-endpoint k8s-api-server \
  --apiserver-advertise-address 192.168.248.151 \
  --pod-network-cidr 10.244.0.0/16 \
  --token-ttl 0
```

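Parameter notes

- --image-repository: the registry to pull control-plane images from. A mirror inside China is used here because registries outside China cannot be reached from mainland China due to the Great Firewall.
- --kubernetes-version: the Kubernetes version to deploy.
- --control-plane-endpoint: a stable access point for the control plane, either an IP address or a DNS name. It is written into the kubeconfig files of cluster administrators and cluster components as the API server address, and it must be set at init time if more control-plane nodes will be joined later.
- --apiserver-advertise-address: the IP address the API server advertises to other components, usually the address this master uses for intra-cluster communication.
- --pod-network-cidr: the Pod network address range, which must match the network plugin's configuration. flannel defaults to 10.244.0.0/16 and Calico to 192.168.0.0/16.
- --token-ttl: the lifetime of the bootstrap token used by kubeadm join. The default is 24h; 0 means the token never expires.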

For more options, see the kubeadm init reference documentation.
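The same settings can also be kept in a kubeadm configuration file and passed with `kubeadm init --config`, which is easier to review and reuse. A sketch assuming the `kubeadm.k8s.io/v1beta2` API read by kubeadm 1.18; `write_kubeadm_config` and the file name are hypothetical, and `--token-ttl` is deliberately left out, so the bootstrap token keeps its 24h default. Check the field names against the v1beta2 reference before relying on it.

```shell
#!/usr/bin/env bash
# Sketch: express the kubeadm init flags above as a v1beta2 configuration file.
set -euo pipefail

# Write the configuration to FILE (default: kubeadm-config.yaml, a hypothetical name).
write_kubeadm_config() {
  local file="${1:-kubeadm-config.yaml}"
  cat > "$file" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.248.151                        # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.2                                 # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
controlPlaneEndpoint: "k8s-api-server:6443"                # --control-plane-endpoint
networking:
  podSubnet: 10.244.0.0/16                                 # --pod-network-cidr
EOF
}

# Usage on the first master:
#   write_kubeadm_config && kubeadm init --config kubeadm-config.yaml
```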

The following output means the control plane was initialized successfully:

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join k8s-api-server:6443 --token yonz9r.2025b6wu414sptes \
    --discovery-token-ca-cert-hash sha256:391f19638bbb4c50d57a32b8c5b670ce8cfaddfa4f022384a03d1e8f6462430f \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s-api-server:6443 --token yonz9r.2025b6wu414sptes \
    --discovery-token-ca-cert-hash sha256:391f19638bbb4c50d57a32b8c5b670ce8cfaddfa4f022384a03d1e8f6462430f
```

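Follow the instructions in the output.

Create the kubeconfig directory, copy admin.conf into it, and fix its ownership; this completes the first control-plane node and lets kubectl reach the cluster: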

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Deploy the flannel network plugin

```shell
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```
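flannel's manifest carries its own network range in the `net-conf.json` entry of its ConfigMap, and that range must match `--pod-network-cidr`. A hedged sketch that downloads the manifest and compares the two before applying it; `POD_CIDR`, `flannel_network`, and `apply_flannel` are hypothetical names, and the parser assumes the manifest writes the entry as `"Network": "<cidr>"`.

```shell
#!/usr/bin/env bash
# Sketch: check that flannel's "Network" matches the pod CIDR given to kubeadm init.
set -euo pipefail

POD_CIDR="10.244.0.0/16"   # value of --pod-network-cidr above

# Print the first "Network" CIDR found in a flannel manifest.
flannel_network() {
  grep -o -m 1 '"Network": *"[^"]*"' "$1" | sed 's/.*"\([^"]*\)"$/\1/'
}

# Download the manifest, compare, and apply it only when the ranges agree.
apply_flannel() {
  local url="https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml"
  local file
  file=$(mktemp)
  curl -fsSL -o "$file" "$url"
  if [ "$(flannel_network "$file")" = "$POD_CIDR" ]; then
    kubectl apply -f "$file"
  else
    echo "flannel Network differs from $POD_CIDR; edit net-conf.json first" >&2
    return 1
  fi
}
```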

Add worker nodes

Run the following command on each worker node to join it to the cluster:

```shell
kubeadm join k8s-api-server:6443 --token yonz9r.2025b6wu414sptes \
  --discovery-token-ca-cert-hash sha256:391f19638bbb4c50d57a32b8c5b670ce8cfaddfa4f022384a03d1e8f6462430f
```
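If the join command printed by kubeadm init is lost, it can be rebuilt on a control-plane node. `kubeadm token create --print-join-command` prints a fresh worker join command, and the `--discovery-token-ca-cert-hash` value is the SHA-256 of the cluster CA's public key, which the openssl pipeline given in the kubeadm documentation recomputes. `ca_cert_hash` is a hypothetical helper name, and it assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/usr/bin/env bash
# Sketch: recompute the discovery hash used in kubeadm join commands.
set -euo pipefail

# Print the sha256 of a CA certificate's public key (assumes an RSA key).
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on a control-plane node:
#   echo "sha256:$(ca_cert_hash)"               # compare with the hash in the join command
#   kubeadm token create --print-join-command   # prints a complete worker join command
```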

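Add the other control-plane nodes

All control-plane nodes must share the same certificates and private keys: the Kubernetes CA, the etcd CA, the front-proxy CA, and the service account key pair.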

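There are two ways to do this:

- Manually copy the certificate and key files generated on the first control-plane node to the other master nodes.
- Use the kubeadm init phase upload-certs command, which stores them in a Secret that joining control-plane nodes download.

This guide uses the second method.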

Upload the certificates to the Secret used for joining control-plane nodes (run on the first control-plane node):

```console
# kubeadm init phase upload-certs --upload-certs
W0426 01:58:31.877454 72035 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key:
dab5a1601dae683f429ed43a795ed345120a030681412a419d327c8893d90d74
```

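The kubeadm-certs Secret and its certificate key expire after 2 hours. After that, run the command above again to re-upload the certificates and obtain a new key.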
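Because the key expires with the Secret, it can be convenient to re-upload the certificates and print a complete control-plane join command in one step. A sketch, assuming the key is the last 64-hex-digit line of the upload-certs output (as in the output above) and that `kubeadm token create` accepts `--certificate-key` together with `--print-join-command`; verify this with `kubeadm token create --help` on your version. `extract_cert_key` and `print_control_plane_join` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: re-upload control-plane certificates and print a control-plane join command.
set -euo pipefail

# Read upload-certs output on stdin and print the 64-hex-digit certificate key.
extract_cert_key() {
  grep -E '^[0-9a-f]{64}$' | tail -n 1
}

# Run on an existing control-plane node; the printed key is valid for 2 hours.
print_control_plane_join() {
  local key
  key=$(kubeadm init phase upload-certs --upload-certs | extract_cert_key)
  kubeadm token create --print-join-command --certificate-key "$key"
}
```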

Join the control-plane nodes

```shell
# Run on each remaining master (k8s-master-01 and k8s-master-03)
kubeadm join k8s-api-server:6443 --token yonz9r.2025b6wu414sptes \
  --discovery-token-ca-cert-hash sha256:391f19638bbb4c50d57a32b8c5b670ce8cfaddfa4f022384a03d1e8f6462430f \
  --control-plane \
  --certificate-key dab5a1601dae683f429ed43a795ed345120a030681412a419d327c8893d90d74

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Verify the cluster status (run on any control-plane node)

Check component status

```console
# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
controller-manager   Healthy   ok
```

Check node status

```console
# kubectl get node
NAME            STATUS   ROLES    AGE   VERSION
k8s-master-01   Ready    master   15h   v1.18.2
k8s-master-02   Ready    master   17h   v1.18.2
k8s-master-03   Ready    master   15h   v1.18.2
k8s-node-01     Ready    <none>   16h   v1.18.2
k8s-node-02     Ready    <none>   16h   v1.18.2
k8s-node-03     Ready    <none>   16h   v1.18.2
```
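For scripted checks, the node listing can be reduced to the names of nodes that are not Ready. A sketch assuming the default column layout of `kubectl get node --no-headers` (NAME STATUS ROLES AGE VERSION); `not_ready_nodes` is a hypothetical helper. Note that a cordoned node reports `Ready,SchedulingDisabled` and is listed as well.

```shell
#!/usr/bin/env bash
# Sketch: list nodes whose STATUS column is not exactly "Ready".
set -euo pipefail

# Read `kubectl get node --no-headers` output on stdin and print the offending node names.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage: kubectl get node --no-headers | not_ready_nodes   (no output means all nodes are Ready)
```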

Check the status of the kube-system Pods

```console
# kubectl get pod -n kube-system
NAME                                    READY   STATUS    RESTARTS   AGE
coredns-7ff77c879f-btz6j                1/1     Running   1          15h
coredns-7ff77c879f-qzjnm                1/1     Running   1          15h
etcd-k8s-master-01                      1/1     Running   2          15h
etcd-k8s-master-02                      1/1     Running   1          17h
etcd-k8s-master-03                      1/1     Running   1          15h
kube-apiserver-k8s-master-01            1/1     Running   5          15h
kube-apiserver-k8s-master-02            1/1     Running   3          17h
kube-apiserver-k8s-master-03            1/1     Running   1          15h
kube-controller-manager-k8s-master-01   1/1     Running   4          15h
kube-controller-manager-k8s-master-02   1/1     Running   7          17h
kube-controller-manager-k8s-master-03   1/1     Running   1          15h
kube-flannel-ds-amd64-77mtr             1/1     Running   1          15h
kube-flannel-ds-amd64-bx4kt             1/1     Running   2          16h
kube-flannel-ds-amd64-fhfwv             1/1     Running   1          15h
kube-flannel-ds-amd64-kwfpq             1/1     Running   1          16h
kube-flannel-ds-amd64-qcd5b             1/1     Running   1          17h
kube-flannel-ds-amd64-vbp56             1/1     Running   2          16h
kube-proxy-4bgvr                        1/1     Running   1          15h
kube-proxy-7d944                        1/1     Running   1          15h
kube-proxy-878d8                        1/1     Running   1          16h
kube-proxy-9qf5j                        1/1     Running   1          16h
kube-proxy-pbg8w                        1/1     Running   1          17h
kube-proxy-wmtn4                        1/1     Running   1          15h
kube-scheduler-k8s-master-01            1/1     Running   2          15h
kube-scheduler-k8s-master-02            1/1     Running   8          17h
kube-scheduler-k8s-master-03            1/1     Running   1          15h
```
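The same idea works for Pods: print every Pod that is not Running or whose READY count is short. A sketch assuming the 1.18 column layout of `kubectl get pod --no-headers` (NAME READY STATUS RESTARTS AGE); `unhealthy_pods` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: list Pods that are not Running or not fully ready.
set -euo pipefail

# Read `kubectl get pod --no-headers` output on stdin; READY is "ready/total".
unhealthy_pods() {
  awk '{ split($2, r, "/"); if ($3 != "Running" || r[1] != r[2]) print $1 }'
}

# Usage: kubectl get pod -n kube-system --no-headers | unhealthy_pods
```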

Zhejiang Public Security Network Record No. 33010602011771
