Open Deep Research (LANGCHAIN AI)

https://github.com/langchain-ai/open_deep_research

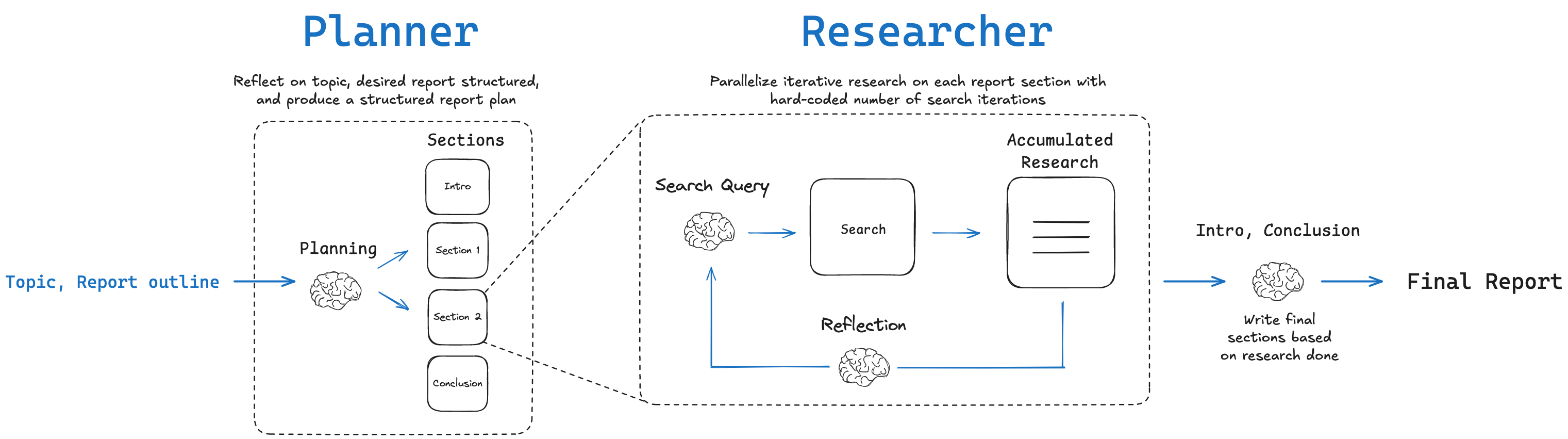

Open Deep Research is an experimental, fully open-source research assistant that automates deep research and produces comprehensive reports on any topic. It features two implementations - a workflow and a multi-agent architecture - each with distinct advantages. You can customize the entire research and writing process with specific models, prompts, report structure, and search tools.
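
As a rough sketch of what that customization can look like, the dictionary below collects a few of the options that the workflow code in this document reads from its `Configuration` object (`search_api`, `planner_model`, `writer_model`, `number_of_queries`, `max_search_depth`). The values are illustrative assumptions, the helper name `as_runnable_config` is hypothetical, and the `{"configurable": ...}` envelope is the usual LangGraph convention for run-time options. The repository remains the authoritative source for field names and defaults.

```python
# Illustrative run-time options. The keys mirror fields read by the workflow
# code; the values are assumptions chosen only for this example.
workflow_options = {
    "search_api": "tavily",                       # which search tool to use
    "planner_provider": "anthropic",              # provider for the planning model
    "planner_model": "claude-3-7-sonnet-latest",  # model that drafts the report plan
    "writer_provider": "anthropic",               # provider for the writing model
    "writer_model": "claude-3-7-sonnet-latest",   # model that writes sections
    "number_of_queries": 2,                       # search queries per iteration
    "max_search_depth": 2,                        # search/write rounds per section
}


def as_runnable_config(options: dict) -> dict:
    """Wrap options in a {"configurable": ...} envelope (hypothetical helper)."""
    # Copy the dict so later edits to `options` do not leak into the config.
    return {"configurable": dict(options)}


config = as_runnable_config(workflow_options)
```

A dictionary shaped like `config` is what the graph nodes receive as their `config` argument and pass to `Configuration.from_runnable_config(config)`.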

Multi-agent implementation:

(1) Chat with the agent about your topic of interest, and it will initiate report generation.

(2) The report is produced as markdown.

Workflow implementation:

(1) Provide a topic.

(2) This will generate a report plan and present it to the user for review.

(3) We can pass a string ("...") with feedback to regenerate the plan.

(4) Or, we can pass `true` in the JSON input box in Studio to accept the plan.

(5) Once accepted, the report sections will be generated.

The report is produced as markdown.
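
The review step above can be sketched as a small routing function. It mirrors the checks in the workflow's `human_feedback` node shown later in this document, but it is a standalone illustration: the function name `route_plan_feedback` is hypothetical, and it returns node names as plain strings instead of LangGraph `Command` objects.

```python
def route_plan_feedback(feedback):
    """Return the name of the node the workflow would visit next."""
    # Only the boolean True approves the plan and starts section writing.
    if isinstance(feedback, bool) and feedback is True:
        return "build_section_with_web_research"
    # Any string is treated as feedback and triggers plan regeneration.
    if isinstance(feedback, str):
        return "generate_report_plan"
    # Everything else (including False, numbers, dicts) is rejected.
    raise TypeError(f"Interrupt value of type {type(feedback)} is not supported.")


next_node = route_plan_feedback("Add a section on pricing")
```

Note that the string `"true"` counts as feedback, not approval, so the plan is accepted only when a JSON boolean is passed.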

Available search tools:

- Tavily API - General web search

- Perplexity API - General web search

- Exa API - Powerful neural search for web content

- ArXiv - Academic papers in physics, mathematics, computer science, and more

- PubMed - Biomedical literature from MEDLINE, life science journals, and online books

- Linkup API - General web search

- DuckDuckGo API - General web search

- Google Search API/Scraper - General web search; requires a custom search engine and an API key

- Microsoft Azure AI Search - Cloud-based vector database solution
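
Not every tool in this list is available in both implementations. The multi-agent code in this document accepts only `tavily`, `duckduckgo`, or `none` for `search_api` and raises `NotImplementedError` otherwise. The sketch below illustrates that selection logic in isolation. The two `fake_*` functions are hypothetical stand-ins for the real search tools, and `pick_search_tool` is an illustrative name, not part of the package.

```python
from typing import Callable, Optional


def fake_tavily_search(query: str) -> str:
    """Hypothetical stand-in for the real Tavily search tool."""
    return f"tavily results for {query!r}"


def fake_duckduckgo_search(query: str) -> str:
    """Hypothetical stand-in for the real DuckDuckGo search tool."""
    return f"duckduckgo results for {query!r}"


# Search APIs the multi-agent implementation supports, keyed by lowercase name.
SUPPORTED_TOOLS = {
    "tavily": fake_tavily_search,
    "duckduckgo": fake_duckduckgo_search,
}


def pick_search_tool(search_api: str) -> Optional[Callable[[str], str]]:
    """Select a search tool by name; "none" disables search entirely."""
    name = search_api.lower()
    if name == "none":
        return None
    if name not in SUPPORTED_TOOLS:
        raise NotImplementedError(
            f"The search API '{search_api}' is not supported in this sketch."
        )
    return SUPPORTED_TOOLS[name]


search = pick_search_tool("Tavily")
```

For any other search API in the list, the error message in the multi-agent code points to the graph-based workflow implementation.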

Open Deep Research is compatible with many different LLMs:

- You can select any model that is integrated with the `init_chat_model()` API

- See the `init_chat_model()` documentation for the full list of supported integrations
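
`init_chat_model()` accepts either a model name plus a separate `model_provider`, or a combined `"{model_provider}:{model}"` string. The workflow code in this document passes the two parts separately, so a combined string has to be split first. The helper below is a minimal sketch of that split; `split_model_spec` is a hypothetical name, and unlike LangChain it does not check the prefix against a list of known providers.

```python
def split_model_spec(spec: str) -> dict:
    """Split "provider:model" into keyword arguments (hypothetical helper)."""
    # Split on the first colon only, so model names that themselves contain
    # a colon stay intact after the provider prefix is removed.
    provider, sep, model = spec.partition(":")
    if not sep:
        # No provider prefix: pass the model name through unchanged.
        return {"model": spec}
    return {"model": model, "model_provider": provider}


planner_kwargs = split_model_spec("anthropic:claude-3-7-sonnet-latest")
```

The resulting dictionary could then be unpacked into a call such as `init_chat_model(**planner_kwargs)`, assuming the matching provider integration package is installed and its API key is set.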

Using the package

code - multi agent

    from typing import List, Annotated, TypedDict, Literal, cast
    from pydantic import BaseModel, Field
    import operator
    import warnings

    from langchain.chat_models import init_chat_model
    from langchain_core.tools import tool, BaseTool
    from langchain_core.runnables import RunnableConfig
    from langchain_mcp_adapters.client import MultiServerMCPClient
    from langgraph.graph import MessagesState

    from langgraph.types import Command, Send
    from langgraph.graph import START, END, StateGraph

    from open_deep_research.configuration import Configuration
    from open_deep_research.utils import (
        get_config_value,
        tavily_search,
        duckduckgo_search,
        get_today_str,
        load_mcp_server_config
    )

    from open_deep_research.prompts import SUPERVISOR_INSTRUCTIONS, RESEARCH_INSTRUCTIONS

    ## Tools factory - will be initialized based on configuration
    def get_search_tool(config: RunnableConfig):
        """Get the appropriate search tool based on configuration"""
        configurable = Configuration.from_runnable_config(config)
        search_api = get_config_value(configurable.search_api)

        # Return None if no search tool is requested
        if search_api.lower() == "none":
            return None

        # TODO: Configure other search functions as tools
        if search_api.lower() == "tavily":
            search_tool = tavily_search
        elif search_api.lower() == "duckduckgo":
            search_tool = duckduckgo_search
        else:
            raise NotImplementedError(
                f"The search API '{search_api}' is not yet supported in the multi-agent implementation. "
                f"Currently, only Tavily/DuckDuckGo/None is supported. Please use the graph-based implementation in "
                f"src/open_deep_research/graph.py for other search APIs, or set search_api to 'tavily', 'duckduckgo', or 'none'."
            )

        tool_metadata = {**(search_tool.metadata or {}), "type": "search"}
        search_tool.metadata = tool_metadata
        return search_tool

    class Section(BaseModel):
        """Section of the report."""
        name: str = Field(
            description="Name for this section of the report.",
        )
        description: str = Field(
            description="Research scope for this section of the report.",
        )
        content: str = Field(
            description="The content of the section."
        )

    class Sections(BaseModel):
        """List of section titles of the report."""
        sections: List[str] = Field(
            description="Sections of the report.",
        )

    class Introduction(BaseModel):
        """Introduction to the report."""
        name: str = Field(
            description="Name for the report.",
        )
        content: str = Field(
            description="The content of the introduction, giving an overview of the report."
        )

    class Conclusion(BaseModel):
        """Conclusion to the report."""
        name: str = Field(
            description="Name for the conclusion of the report.",
        )
        content: str = Field(
            description="The content of the conclusion, summarizing the report."
        )

    class Question(BaseModel):
        """Ask a follow-up question to clarify the report scope."""
        question: str = Field(
            description="A specific question to ask the user to clarify the scope, focus, or requirements of the report."
        )

    # No-op tool to indicate that the research is complete
    class FinishResearch(BaseModel):
        """Finish the research."""

    # No-op tool to indicate that the report writing is complete
    class FinishReport(BaseModel):
        """Finish the report."""

    ## State
    class ReportStateOutput(TypedDict):
        final_report: str # Final report
        # for evaluation purposes only
        # this is included only if configurable.include_source_str is True
        source_str: str # String of formatted source content from web search

    class ReportState(MessagesState):
        sections: list[str] # List of report sections
        completed_sections: Annotated[list[Section], operator.add] # Send() API key
        final_report: str # Final report
        question_asked: bool # Track if a clarifying question has been asked
        # for evaluation purposes only
        # this is included only if configurable.include_source_str is True
        source_str: Annotated[str, operator.add] # String of formatted source content from web search

    class SectionState(MessagesState):
        section: str # Report section
        completed_sections: list[Section] # Final key we duplicate in outer state for Send() API
        # for evaluation purposes only
        # this is included only if configurable.include_source_str is True
        source_str: str # String of formatted source content from web search

    class SectionOutputState(TypedDict):
        completed_sections: list[Section] # Final key we duplicate in outer state for Send() API
        # for evaluation purposes only
        # this is included only if configurable.include_source_str is True
        source_str: str # String of formatted source content from web search


    async def _load_mcp_tools(
        config: RunnableConfig,
        existing_tool_names: set[str],
    ) -> list[BaseTool]:
        configurable = Configuration.from_runnable_config(config)
        if not configurable.mcp_server_config:
            return []

        mcp_server_config = configurable.mcp_server_config
        client = MultiServerMCPClient(mcp_server_config)
        mcp_tools = await client.get_tools()
        filtered_mcp_tools: list[BaseTool] = []
        for tool in mcp_tools:
            # TODO: this will likely be hard to manage
            # on a remote server that's not controlled by the developer
            # best solution here is allowing tool name prefixes in MultiServerMCPClient
            if tool.name in existing_tool_names:
                warnings.warn(
                    f"Trying to add MCP tool with a name {tool.name} that is already in use - this tool will be ignored."
                )
                continue

            if configurable.mcp_tools_to_include and tool.name not in configurable.mcp_tools_to_include:
                continue

            filtered_mcp_tools.append(tool)

        return filtered_mcp_tools


    # Tool lists will be built dynamically based on configuration
    def get_supervisor_tools(config: RunnableConfig) -> list[BaseTool]:
        """Get supervisor tools based on configuration"""
        configurable = Configuration.from_runnable_config(config)
        search_tool = get_search_tool(config)
        tools = [tool(Sections), tool(Introduction), tool(Conclusion), tool(FinishReport)]
        if configurable.ask_for_clarification:
            tools.append(tool(Question))
        if search_tool is not None:
            tools.append(search_tool)  # Add search tool, if available
        return tools


    async def get_research_tools(config: RunnableConfig) -> list[BaseTool]:
        """Get research tools based on configuration"""
        search_tool = get_search_tool(config)
        tools = [tool(Section), tool(FinishResearch)]
        if search_tool is not None:
            tools.append(search_tool)  # Add search tool, if available
        existing_tool_names = {cast(BaseTool, tool).name for tool in tools}
        mcp_tools = await _load_mcp_tools(config, existing_tool_names)
        tools.extend(mcp_tools)
        return tools


    async def supervisor(state: ReportState, config: RunnableConfig):
        """LLM decides whether to call a tool or not"""

        # Messages
        messages = state["messages"]

        # Get configuration
        configurable = Configuration.from_runnable_config(config)
        supervisor_model = get_config_value(configurable.supervisor_model)

        # Initialize the model
        llm = init_chat_model(model=supervisor_model)

        # If sections have been completed, but we don't yet have the final report, then we need to initiate writing the introduction and conclusion
        if state.get("completed_sections") and not state.get("final_report"):
            research_complete_message = {"role": "user", "content": "Research is complete. Now write the introduction and conclusion for the report. Here are the completed main body sections: \n\n" + "\n\n".join([s.content for s in state["completed_sections"]])}
            messages = messages + [research_complete_message]

        # Get tools based on configuration
        supervisor_tool_list = get_supervisor_tools(config)

        # Remove Question tool if a question has already been asked
        if state.get("question_asked", False):
            supervisor_tool_list = [tool for tool in supervisor_tool_list if tool.name != "Question"]

        llm_with_tools = (
            llm
            .bind_tools(
                supervisor_tool_list,
                parallel_tool_calls=False,
                # force at least one tool call
                tool_choice="any"
            )
        )

        # Invoke
        return {
            "messages": [
                await llm_with_tools.ainvoke(
                    [
                        {
                            "role": "system",
                            "content": SUPERVISOR_INSTRUCTIONS.format(today=get_today_str())
                        }
                    ]
                    + messages
                )
            ]
        }


    async def supervisor_tools(state: ReportState, config: RunnableConfig) -> Command[Literal["supervisor", "research_team", "__end__"]]:
        """Performs the tool call and sends to the research agent"""
        configurable = Configuration.from_runnable_config(config)

        result = []
        sections_list = []
        intro_content = None
        conclusion_content = None
        source_str = ""

        # Get tools based on configuration
        supervisor_tool_list = get_supervisor_tools(config)
        supervisor_tools_by_name = {tool.name: tool for tool in supervisor_tool_list}
        search_tool_names = {
            tool.name
            for tool in supervisor_tool_list
            if tool.metadata is not None and tool.metadata.get("type") == "search"
        }

        # First process all tool calls to ensure we respond to each one (required for OpenAI)
        for tool_call in state["messages"][-1].tool_calls:
            # Get the tool
            tool = supervisor_tools_by_name[tool_call["name"]]
            # Perform the tool call - use ainvoke for async tools
            try:
                observation = await tool.ainvoke(tool_call["args"], config)
            except NotImplementedError:
                observation = tool.invoke(tool_call["args"], config)

            # Append to messages
            result.append({"role": "tool",
                           "content": observation,
                           "name": tool_call["name"],
                           "tool_call_id": tool_call["id"]})

            # Store special tool results for processing after all tools have been called
            if tool_call["name"] == "Question":
                # Question tool was called - return to supervisor to ask the question
                question_obj = cast(Question, observation)
                result.append({"role": "assistant", "content": question_obj.question})
                return Command(goto=END, update={"messages": result, "question_asked": True})
            elif tool_call["name"] == "Sections":
                sections_list = cast(Sections, observation).sections
            elif tool_call["name"] == "Introduction":
                # Format introduction with proper H1 heading if not already formatted
                observation = cast(Introduction, observation)
                if not observation.content.startswith("# "):
                    intro_content = f"# {observation.name}\n\n{observation.content}"
                else:
                    intro_content = observation.content
            elif tool_call["name"] == "Conclusion":
                # Format conclusion with proper H2 heading if not already formatted
                observation = cast(Conclusion, observation)
                if not observation.content.startswith("## "):
                    conclusion_content = f"## {observation.name}\n\n{observation.content}"
                else:
                    conclusion_content = observation.content
            elif tool_call["name"] in search_tool_names and configurable.include_source_str:
                source_str += cast(str, observation)

        # After processing all tool calls, decide what to do next
        if sections_list:
            # Send the sections to the research agents
            return Command(goto=[Send("research_team", {"section": s}) for s in sections_list], update={"messages": result})
        elif intro_content:
            # Store introduction while waiting for conclusion
            # Append to messages to guide the LLM to write conclusion next
            result.append({"role": "user", "content": "Introduction written. Now write a conclusion section."})
            state_update = {
                "final_report": intro_content,
                "messages": result,
            }
        elif conclusion_content:
            # Get all sections and combine in proper order: Introduction, Body Sections, Conclusion
            intro = state.get("final_report", "")
            body_sections = "\n\n".join([s.content for s in state["completed_sections"]])

            # Assemble final report in correct order
            complete_report = f"{intro}\n\n{body_sections}\n\n{conclusion_content}"

            # Append to messages to indicate completion
            result.append({"role": "user", "content": "Report is now complete with introduction, body sections, and conclusion."})

            state_update = {
                "final_report": complete_report,
                "messages": result,
            }
        else:
            # Default case (for search tools, etc.)
            state_update = {"messages": result}

        # Include source string for evaluation
        if configurable.include_source_str and source_str:
            state_update["source_str"] = source_str

        return Command(goto="supervisor", update=state_update)


    async def supervisor_should_continue(state: ReportState) -> str:
        """Decide if we should continue the loop or stop based upon whether the LLM made a tool call"""

        messages = state["messages"]
        last_message = messages[-1]
        # End because the supervisor asked a question or is finished
        if not last_message.tool_calls or (len(last_message.tool_calls) == 1 and last_message.tool_calls[0]["name"] == "FinishReport"):
            # Exit the graph
            return END

        # If the LLM makes a tool call, then perform an action
        return "supervisor_tools"


    async def research_agent(state: SectionState, config: RunnableConfig):
        """LLM decides whether to call a tool or not"""

        # Get configuration
        configurable = Configuration.from_runnable_config(config)
        researcher_model = get_config_value(configurable.researcher_model)

        # Initialize the model
        llm = init_chat_model(model=researcher_model)

        # Get tools based on configuration
        research_tool_list = await get_research_tools(config)
        system_prompt = RESEARCH_INSTRUCTIONS.format(
            section_description=state["section"],
            number_of_queries=configurable.number_of_queries,
            today=get_today_str(),
        )
        if configurable.mcp_prompt:
            system_prompt += f"\n\n{configurable.mcp_prompt}"

        return {
            "messages": [
                # Enforce tool calling to either perform more search or call the Section tool to write the section
                await llm.bind_tools(research_tool_list,
                                     parallel_tool_calls=False,
                                     # force at least one tool call
                                     tool_choice="any").ainvoke(
                    [
                        {
                            "role": "system",
                            "content": system_prompt
                        }
                    ]
                    + state["messages"]
                )
            ]
        }


    async def research_agent_tools(state: SectionState, config: RunnableConfig):
        """Performs the tool call and route to supervisor or continue the research loop"""
        configurable = Configuration.from_runnable_config(config)
        result = []
        completed_section = None
        source_str = ""

        # Get tools based on configuration
        research_tool_list = await get_research_tools(config)
        research_tools_by_name = {tool.name: tool for tool in research_tool_list}
        search_tool_names = {
            tool.name
            for tool in research_tool_list
            if tool.metadata is not None and tool.metadata.get("type") == "search"
        }

        # Process all tool calls first (required for OpenAI)
        for tool_call in state["messages"][-1].tool_calls:
            # Get the tool
            tool = research_tools_by_name[tool_call["name"]]
            # Perform the tool call - use ainvoke for async tools
            try:
                observation = await tool.ainvoke(tool_call["args"], config)
            except NotImplementedError:
                observation = tool.invoke(tool_call["args"], config)

            # Append to messages
            result.append({"role": "tool",
                           "content": observation,
                           "name": tool_call["name"],
                           "tool_call_id": tool_call["id"]})

            # Store the section observation if a Section tool was called
            if tool_call["name"] == "Section":
                completed_section = cast(Section, observation)

            # Store the source string if a search tool was called
            if tool_call["name"] in search_tool_names and configurable.include_source_str:
                source_str += cast(str, observation)

        # After processing all tools, decide what to do next
        state_update = {"messages": result}
        if completed_section:
            # Write the completed section to state and return to the supervisor
            state_update["completed_sections"] = [completed_section]
        if configurable.include_source_str and source_str:
            state_update["source_str"] = source_str

        return state_update


    async def research_agent_should_continue(state: SectionState) -> str:
        """Decide if we should continue the loop or stop based upon whether the LLM made a tool call"""

        messages = state["messages"]
        last_message = messages[-1]

        if last_message.tool_calls[0]["name"] == "FinishResearch":
            # Research is done - return to supervisor
            return END
        else:
            return "research_agent_tools"


    """Build the multi-agent workflow"""

    # Research agent workflow
    research_builder = StateGraph(SectionState, output=SectionOutputState, config_schema=Configuration)
    research_builder.add_node("research_agent", research_agent)
    research_builder.add_node("research_agent_tools", research_agent_tools)
    research_builder.add_edge(START, "research_agent")
    research_builder.add_conditional_edges(
        "research_agent",
        research_agent_should_continue,
        ["research_agent_tools", END]
    )
    research_builder.add_edge("research_agent_tools", "research_agent")

    # Supervisor workflow
    supervisor_builder = StateGraph(ReportState, input=MessagesState, output=ReportStateOutput, config_schema=Configuration)
    supervisor_builder.add_node("supervisor", supervisor)
    supervisor_builder.add_node("supervisor_tools", supervisor_tools)
    supervisor_builder.add_node("research_team", research_builder.compile())

    # Flow of the supervisor agent
    supervisor_builder.add_edge(START, "supervisor")
    supervisor_builder.add_conditional_edges(
        "supervisor",
        supervisor_should_continue,
        ["supervisor_tools", END]
    )
    supervisor_builder.add_edge("research_team", "supervisor")

    graph = supervisor_builder.compile()
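
Two details of the multi-agent code are easy to miss: `completed_sections` is annotated with `operator.add`, so the lists returned by parallel research agents are concatenated, and the supervisor adds an H1 heading to the introduction and an H2 heading to the conclusion only when they are missing. The standalone sketch below imitates both steps without LangGraph. The `Section` dataclass and the helper names are simplified, hypothetical stand-ins, and the merge order of real parallel branches is not modeled here.

```python
import operator
from dataclasses import dataclass
from functools import reduce


@dataclass
class Section:
    """Simplified stand-in for the Section model in the code above."""
    name: str
    content: str


def merge_completed(updates):
    """Concatenate per-agent lists, as an operator.add reducer would."""
    return reduce(operator.add, updates, [])


def with_heading(name, content, prefix):
    """Prepend a markdown heading unless the content already starts with one."""
    if content.startswith(prefix + " "):
        return content
    return f"{prefix} {name}\n\n{content}"


def assemble_report(intro, sections, conclusion):
    """Join introduction, body sections, and conclusion in that order."""
    body = "\n\n".join(s.content for s in sections)
    return f"{intro}\n\n{body}\n\n{conclusion}"


# Each research agent returns a one-element list for completed_sections.
sections = merge_completed([
    [Section("Background", "## Background\n\nText A")],
    [Section("Methods", "## Methods\n\nText B")],
])
intro = with_heading("My Report", "An overview.", "#")                        # heading added
conclusion = with_heading("Conclusion", "## Conclusion\n\nWrap-up.", "##")   # left unchanged
report = assemble_report(intro, sections, conclusion)
```

Because the heading is added only when absent, a conclusion that already begins with `## ` is not given a second heading.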

code - workflow

from typing import Literal from langchain.chat_models import init_chat_model from langchain_core.messages import HumanMessage, SystemMessage from langchain_core.runnables import RunnableConfig from langgraph.constants import Send from langgraph.graph import START, END, StateGraph from langgraph.types import interrupt, Command from open_deep_research.state import ( ReportStateInput, ReportStateOutput, Sections, ReportState, SectionState, SectionOutputState, Queries, Feedback ) from open_deep_research.prompts import ( report_planner_query_writer_instructions, report_planner_instructions, query_writer_instructions, section_writer_instructions, final_section_writer_instructions, section_grader_instructions, section_writer_inputs ) from open_deep_research.configuration import Configuration from open_deep_research.utils import ( format_sections, get_config_value, get_search_params, select_and_execute_search, get_today_str ) ## Nodes -- async def generate_report_plan(state: ReportState, config: RunnableConfig): """Generate the initial report plan with sections. This node: 1. Gets configuration for the report structure and search parameters 2. Generates search queries to gather context for planning 3. Performs web searches using those queries 4. Uses an LLM to generate a structured plan with sections Args: state: Current graph state containing the report topic config: Configuration for models, search APIs, etc. 
Returns: Dict containing the generated sections """ # Inputs topic = state["topic"] # Get list of feedback on the report plan feedback_list = state.get("feedback_on_report_plan", []) # Concatenate feedback on the report plan into a single string feedback = " /// ".join(feedback_list) if feedback_list else "" # Get configuration configurable = Configuration.from_runnable_config(config) report_structure = configurable.report_structure number_of_queries = configurable.number_of_queries search_api = get_config_value(configurable.search_api) search_api_config = configurable.search_api_config or {} # Get the config dict, default to empty params_to_pass = get_search_params(search_api, search_api_config) # Filter parameters # Convert JSON object to string if necessary if isinstance(report_structure, dict): report_structure = str(report_structure) # Set writer model (model used for query writing) writer_provider = get_config_value(configurable.writer_provider) writer_model_name = get_config_value(configurable.writer_model) writer_model_kwargs = get_config_value(configurable.writer_model_kwargs or {}) writer_model = init_chat_model(model=writer_model_name, model_provider=writer_provider, model_kwargs=writer_model_kwargs) structured_llm = writer_model.with_structured_output(Queries) # Format system instructions system_instructions_query = report_planner_query_writer_instructions.format( topic=topic, report_organization=report_structure, number_of_queries=number_of_queries, today=get_today_str() ) # Generate queries results = await structured_llm.ainvoke([SystemMessage(content=system_instructions_query), HumanMessage(content="Generate search queries that will help with planning the sections of the report.")]) # Web search query_list = [query.search_query for query in results.queries] # Search the web with parameters source_str = await select_and_execute_search(search_api, query_list, params_to_pass) # Format system instructions system_instructions_sections = 
report_planner_instructions.format(topic=topic, report_organization=report_structure, context=source_str, feedback=feedback) # Set the planner planner_provider = get_config_value(configurable.planner_provider) planner_model = get_config_value(configurable.planner_model) planner_model_kwargs = get_config_value(configurable.planner_model_kwargs or {}) # Report planner instructions planner_message = """Generate the sections of the report. Your response must include a 'sections' field containing a list of sections. Each section must have: name, description, research, and content fields.""" # Run the planner if planner_model == "claude-3-7-sonnet-latest": # Allocate a thinking budget for claude-3-7-sonnet-latest as the planner model planner_llm = init_chat_model(model=planner_model, model_provider=planner_provider, max_tokens=20_000, thinking={"type": "enabled", "budget_tokens": 16_000}) else: # With other models, thinking tokens are not specifically allocated planner_llm = init_chat_model(model=planner_model, model_provider=planner_provider, model_kwargs=planner_model_kwargs) # Generate the report sections structured_llm = planner_llm.with_structured_output(Sections) report_sections = await structured_llm.ainvoke([SystemMessage(content=system_instructions_sections), HumanMessage(content=planner_message)]) # Get sections sections = report_sections.sections return {"sections": sections} def human_feedback(state: ReportState, config: RunnableConfig) -> Command[Literal["generate_report_plan","build_section_with_web_research"]]: """Get human feedback on the report plan and route to next steps. This node: 1. Formats the current report plan for human review 2. Gets feedback via an interrupt 3. 
Routes to either: - Section writing if plan is approved - Plan regeneration if feedback is provided Args: state: Current graph state with sections to review config: Configuration for the workflow Returns: Command to either regenerate plan or start section writing """ # Get sections topic = state["topic"] sections = state['sections'] sections_str = "\n\n".join( f"Section: {section.name}\n" f"Description: {section.description}\n" f"Research needed: {'Yes' if section.research else 'No'}\n" for section in sections ) # Get feedback on the report plan from interrupt interrupt_message = f"""Please provide feedback on the following report plan. \n\n{sections_str}\n \nDoes the report plan meet your needs?\nPass 'true' to approve the report plan.\nOr, provide feedback to regenerate the report plan:""" feedback = interrupt(interrupt_message) # If the user approves the report plan, kick off section writing if isinstance(feedback, bool) and feedback is True: # Treat this as approve and kick off section writing return Command(goto=[ Send("build_section_with_web_research", {"topic": topic, "section": s, "search_iterations": 0}) for s in sections if s.research ]) # If the user provides feedback, regenerate the report plan elif isinstance(feedback, str): # Treat this as feedback and append it to the existing list return Command(goto="generate_report_plan", update={"feedback_on_report_plan": [feedback]}) else: raise TypeError(f"Interrupt value of type {type(feedback)} is not supported.") async def generate_queries(state: SectionState, config: RunnableConfig): """Generate search queries for researching a specific section. This node uses an LLM to generate targeted search queries based on the section topic and description. 
Args: state: Current state containing section details config: Configuration including number of queries to generate Returns: Dict containing the generated search queries """ # Get state topic = state["topic"] section = state["section"] # Get configuration configurable = Configuration.from_runnable_config(config) number_of_queries = configurable.number_of_queries # Generate queries writer_provider = get_config_value(configurable.writer_provider) writer_model_name = get_config_value(configurable.writer_model) writer_model_kwargs = get_config_value(configurable.writer_model_kwargs or {}) writer_model = init_chat_model(model=writer_model_name, model_provider=writer_provider, model_kwargs=writer_model_kwargs) structured_llm = writer_model.with_structured_output(Queries) # Format system instructions system_instructions = query_writer_instructions.format(topic=topic, section_topic=section.description, number_of_queries=number_of_queries, today=get_today_str()) # Generate queries queries = await structured_llm.ainvoke([SystemMessage(content=system_instructions), HumanMessage(content="Generate search queries on the provided topic.")]) return {"search_queries": queries.queries} async def search_web(state: SectionState, config: RunnableConfig): """Execute web searches for the section queries. This node: 1. Takes the generated queries 2. Executes searches using configured search API 3. 
Formats results into usable context Args: state: Current state with search queries config: Search API configuration Returns: Dict with search results and updated iteration count """ # Get state search_queries = state["search_queries"] # Get configuration configurable = Configuration.from_runnable_config(config) search_api = get_config_value(configurable.search_api) search_api_config = configurable.search_api_config or {} # Get the config dict, default to empty params_to_pass = get_search_params(search_api, search_api_config) # Filter parameters # Web search query_list = [query.search_query for query in search_queries] # Search the web with parameters source_str = await select_and_execute_search(search_api, query_list, params_to_pass) return {"source_str": source_str, "search_iterations": state["search_iterations"] + 1} async def write_section(state: SectionState, config: RunnableConfig) -> Command[Literal[END, "search_web"]]: """Write a section of the report and evaluate if more research is needed. This node: 1. Writes section content using search results 2. Evaluates the quality of the section 3. 
Either: - Completes the section if quality passes - Triggers more research if quality fails Args: state: Current state with search results and section info config: Configuration for writing and evaluation Returns: Command to either complete section or do more research """ # Get state topic = state["topic"] section = state["section"] source_str = state["source_str"] # Get configuration configurable = Configuration.from_runnable_config(config) # Format system instructions section_writer_inputs_formatted = section_writer_inputs.format(topic=topic, section_name=section.name, section_topic=section.description, context=source_str, section_content=section.content) # Generate section writer_provider = get_config_value(configurable.writer_provider) writer_model_name = get_config_value(configurable.writer_model) writer_model_kwargs = get_config_value(configurable.writer_model_kwargs or {}) writer_model = init_chat_model(model=writer_model_name, model_provider=writer_provider, model_kwargs=writer_model_kwargs) section_content = await writer_model.ainvoke([SystemMessage(content=section_writer_instructions), HumanMessage(content=section_writer_inputs_formatted)]) # Write content to the section object section.content = section_content.content # Grade prompt section_grader_message = ("Grade the report and consider follow-up questions for missing information. " "If the grade is 'pass', return empty strings for all follow-up queries. 
" "If the grade is 'fail', provide specific search queries to gather missing information.")

    section_grader_instructions_formatted = section_grader_instructions.format(
        topic=topic,
        section_topic=section.description,
        section=section.content,
        number_of_follow_up_queries=configurable.number_of_queries,
    )

    # Use planner model for reflection
    planner_provider = get_config_value(configurable.planner_provider)
    planner_model = get_config_value(configurable.planner_model)
    planner_model_kwargs = get_config_value(configurable.planner_model_kwargs or {})

    if planner_model == "claude-3-7-sonnet-latest":
        # Allocate a thinking budget for claude-3-7-sonnet-latest as the planner model
        reflection_model = init_chat_model(
            model=planner_model,
            model_provider=planner_provider,
            max_tokens=20_000,
            thinking={"type": "enabled", "budget_tokens": 16_000},
        ).with_structured_output(Feedback)
    else:
        reflection_model = init_chat_model(
            model=planner_model,
            model_provider=planner_provider,
            model_kwargs=planner_model_kwargs,
        ).with_structured_output(Feedback)

    # Generate feedback
    feedback = await reflection_model.ainvoke([
        SystemMessage(content=section_grader_instructions_formatted),
        HumanMessage(content=section_grader_message),
    ])

    # If the section is passing or the max search depth is reached, publish the section to completed sections
    if feedback.grade == "pass" or state["search_iterations"] >= configurable.max_search_depth:
        # Publish the section to completed sections
        update = {"completed_sections": [section]}
        if configurable.include_source_str:
            update["source_str"] = source_str
        return Command(update=update, goto=END)

    # Update the existing section with new content and update search queries
    else:
        return Command(
            update={"search_queries": feedback.follow_up_queries, "section": section},
            goto="search_web"
        )

async def write_final_sections(state: SectionState, config: RunnableConfig):
    """Write sections that don't require research using completed sections as context.

    This node handles sections like conclusions or summaries that build on
    the researched sections rather than requiring direct research.

    Args:
        state: Current state with completed sections as context
        config: Configuration for the writing model

    Returns:
        Dict containing the newly written section
    """

    # Get configuration
    configurable = Configuration.from_runnable_config(config)

    # Get state
    topic = state["topic"]
    section = state["section"]
    completed_report_sections = state["report_sections_from_research"]

    # Format system instructions
    system_instructions = final_section_writer_instructions.format(
        topic=topic,
        section_name=section.name,
        section_topic=section.description,
        context=completed_report_sections,
    )

    # Generate section
    writer_provider = get_config_value(configurable.writer_provider)
    writer_model_name = get_config_value(configurable.writer_model)
    writer_model_kwargs = get_config_value(configurable.writer_model_kwargs or {})
    writer_model = init_chat_model(
        model=writer_model_name,
        model_provider=writer_provider,
        model_kwargs=writer_model_kwargs,
    )

    section_content = await writer_model.ainvoke([
        SystemMessage(content=system_instructions),
        HumanMessage(content="Generate a report section based on the provided sources."),
    ])

    # Write content to section
    section.content = section_content.content

    # Write the updated section to completed sections
    return {"completed_sections": [section]}

def gather_completed_sections(state: ReportState):
    """Format completed sections as context for writing final sections.

    This node takes all completed research sections and formats them into
    a single context string for writing summary sections.

    Args:
        state: Current state with completed sections

    Returns:
        Dict with formatted sections as context
    """

    # List of completed sections
    completed_sections = state["completed_sections"]

    # Format completed section to str to use as context for final sections
    completed_report_sections = format_sections(completed_sections)

    return {"report_sections_from_research": completed_report_sections}

def compile_final_report(state: ReportState, config: RunnableConfig):
    """Compile all sections into the final report.

    This node:
    1. Gets all completed sections
    2. Orders them according to original plan
    3. Combines them into the final report

    Args:
        state: Current state with all completed sections

    Returns:
        Dict containing the complete report
    """

    # Get configuration
    configurable = Configuration.from_runnable_config(config)

    # Get sections
    sections = state["sections"]
    completed_sections = {s.name: s.content for s in state["completed_sections"]}

    # Update sections with completed content while maintaining original order
    for section in sections:
        section.content = completed_sections[section.name]

    # Compile final report
    all_sections = "\n\n".join([s.content for s in sections])

    if configurable.include_source_str:
        return {"final_report": all_sections, "source_str": state["source_str"]}
    else:
        return {"final_report": all_sections}

def initiate_final_section_writing(state: ReportState):
    """Create parallel tasks for writing non-research sections.

    This edge function identifies sections that don't need research and
    creates parallel writing tasks for each one.

    Args:
        state: Current state with all sections and research context

    Returns:
        List of Send commands for parallel section writing
    """

    # Kick off section writing in parallel via Send() API for any sections that do not require research
    return [
        Send("write_final_sections", {"topic": state["topic"], "section": s, "report_sections_from_research": state["report_sections_from_research"]})
        for s in state["sections"]
        if not s.research
    ]

# Report section sub-graph --

# Add nodes
section_builder = StateGraph(SectionState, output=SectionOutputState)
section_builder.add_node("generate_queries", generate_queries)
section_builder.add_node("search_web", search_web)
section_builder.add_node("write_section", write_section)

# Add edges
section_builder.add_edge(START, "generate_queries")
section_builder.add_edge("generate_queries", "search_web")
section_builder.add_edge("search_web", "write_section")

# Outer graph for initial report plan compiling results from each section --

# Add nodes
builder = StateGraph(ReportState, input=ReportStateInput, output=ReportStateOutput, config_schema=Configuration)
builder.add_node("generate_report_plan", generate_report_plan)
builder.add_node("human_feedback", human_feedback)
builder.add_node("build_section_with_web_research", section_builder.compile())
builder.add_node("gather_completed_sections", gather_completed_sections)
builder.add_node("write_final_sections", write_final_sections)
builder.add_node("compile_final_report", compile_final_report)

# Add edges
builder.add_edge(START, "generate_report_plan")
builder.add_edge("generate_report_plan", "human_feedback")
builder.add_edge("build_section_with_web_research", "gather_completed_sections")
builder.add_conditional_edges("gather_completed_sections", initiate_final_section_writing, ["write_final_sections"])
builder.add_edge("write_final_sections", "compile_final_report")
builder.add_edge("compile_final_report", END)

graph = builder.compile()
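
The routing and assembly rules in the workflow code can be tried out without LangGraph or any model calls. The sketch below is a simplified illustration, not the project's implementation. `Section` is a hypothetical dataclass standing in for the real section model, and `next_step`, `sections_without_research`, and `compile_report` are made-up helper names that mirror the conditions used in `write_section`, `initiate_final_section_writing`, and `compile_final_report`.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Section:
    """Hypothetical, simplified stand-in for the report's section model."""
    name: str
    research: bool
    content: str = ""


def next_step(grade: str, search_iterations: int, max_search_depth: int) -> str:
    """Mirror the grading rule: stop on a pass or once the search budget is spent."""
    if grade == "pass" or search_iterations >= max_search_depth:
        return "END"
    return "search_web"


def sections_without_research(sections: List[Section]) -> List[Section]:
    """Pick the sections (e.g. introduction, conclusion) written from existing context."""
    return [s for s in sections if not s.research]


def compile_report(planned: List[Section], completed: List[Section]) -> str:
    """Join completed content in the order of the original plan, not completion order."""
    content_by_name = {s.name: s.content for s in completed}
    return "\n\n".join(content_by_name[s.name] for s in planned)


# The plan fixes the order; sections may finish in any order.
plan = [
    Section("Introduction", research=False),
    Section("Background", research=True),
    Section("Conclusion", research=False),
]
completed = [
    Section("Background", True, "## Background"),
    Section("Conclusion", False, "## Conclusion"),
    Section("Introduction", False, "## Introduction"),
]

report = compile_report(plan, completed)
final_only = [s.name for s in sections_without_research(plan)]
```

Here a failing grade triggers another search only while `search_iterations` is below `max_search_depth`, and `report` follows the plan order even though the sections completed out of order. As in the original `compile_final_report`, a planned section with no completed counterpart raises a `KeyError` in this sketch.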
