Image Search Application with OpenAI CLIP Model and Faiss Library

https://github.com/fanqingsong/clip-faiss

This repository contains an Image Search Application that uses OpenAI's CLIP (Contrastive Language-Image Pretraining) model and Meta's Faiss (Facebook AI Similarity Search) library to provide fast, accurate similarity search. Users search for images by entering natural-language queries.

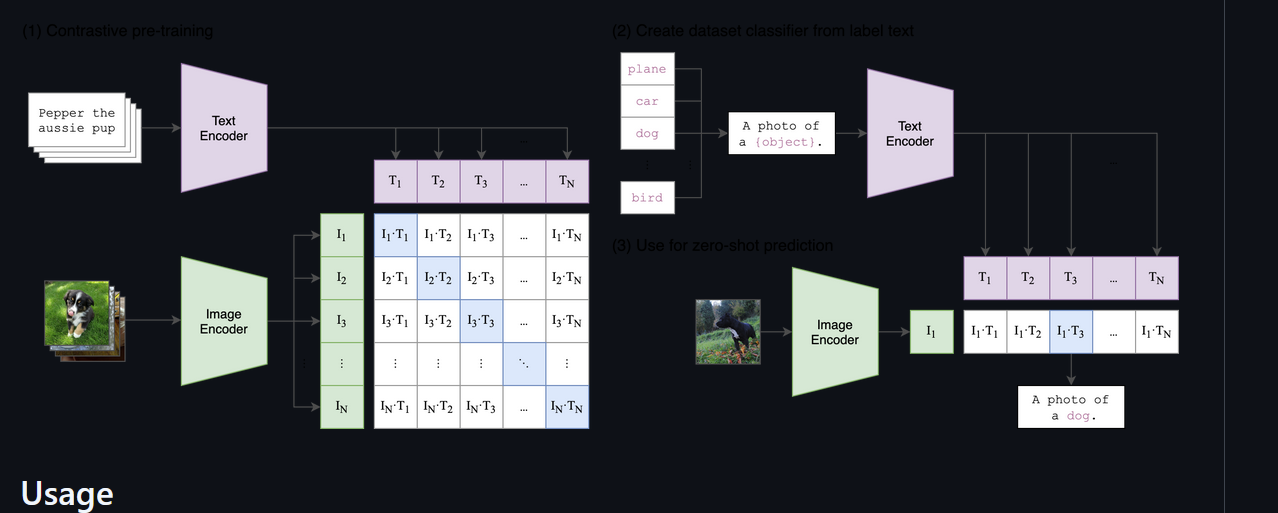

CLIP (Contrastive Language-Image Pretraining) is a state-of-the-art deep learning model developed by OpenAI to bridge the gap between natural language processing and computer vision. It learns to understand images and text jointly through contrastive learning. CLIP takes an image and a text prompt as inputs and encodes them with an image encoder (a ResNet CNN or a Vision Transformer, depending on the model variant) and a transformer-based text encoder, respectively. Both encoders output dense embeddings in a shared space, so an image and a text describing it end up close together. This lets CLIP perform tasks such as image classification and natural-language image search without task-specific training.
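To make "contrastive learning" concrete, here is a minimal NumPy sketch of the symmetric cross-entropy objective described in the CLIP paper. It is not OpenAI's training code: the function names are hypothetical, and the fixed temperature of 0.07 is an assumption (it is the paper's initial value; the real model learns the temperature during training).

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_contrastive_loss(image_emb: np.ndarray, text_emb: np.ndarray,
                          temperature: float = 0.07) -> float:
    """Symmetric contrastive loss for a batch of n (image, text) pairs.

    Row i of image_emb and row i of text_emb are assumed to be a matching pair;
    every other image/text combination in the batch serves as a negative.
    Temperature 0.07 is an assumed fixed value (CLIP learns it).
    """
    # L2-normalize so each dot product is a cosine similarity
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)

    # logits[i, j] = scaled similarity between image i and text j
    logits = image_emb @ text_emb.T / temperature
    targets = np.arange(logits.shape[0])

    # Cross-entropy for picking the correct text for each image (softmax over rows)
    loss_i2t = -log_softmax(logits, axis=1)[targets, targets].mean()
    # ...and the correct image for each text (softmax over columns)
    loss_t2i = -log_softmax(logits, axis=0)[targets, targets].mean()
    return float((loss_i2t + loss_t2i) / 2)


# Usage: well-aligned pairs give a lower loss than mismatched ones
rng = np.random.default_rng(0)
img = rng.normal(size=(4, 8))
txt = img + 0.01 * rng.normal(size=(4, 8))
aligned = clip_contrastive_loss(img, txt)
shuffled = clip_contrastive_loss(img, txt[::-1])
```

Training pushes the diagonal of `logits` (true pairs) up and the off-diagonal entries down, which is what places matching images and texts near each other in the shared space.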

```python
import clip
import torch
from PIL import Image

device = "cuda" if torch.cuda.is_available() else "cpu"
model, preprocess = clip.load("ViT-B/32", device=device)

# image_paths (list of file paths) and texts (list of strings) are assumed to be defined.
# `preprocess` transforms a single PIL image, so the results are stacked into a batch.
images = torch.stack([preprocess(Image.open(p)) for p in image_paths]).to(device)
texts = clip.tokenize(texts).to(device)

with torch.no_grad():
    image_features = model.encode_image(images)
    text_features = model.encode_text(texts)
```

Faiss is an efficient library developed by Facebook AI Research (FAIR) for similarity search and clustering of dense vectors. It is designed for large datasets and high-dimensional vector spaces, which makes it well suited to computer vision, natural language processing, and machine learning applications. Its optimized CPU and GPU implementations perform nearest-neighbor search and clustering quickly and with modest memory use.
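A flat (exact) Faiss index compares each query against every stored vector. As a reference point, the following NumPy sketch reproduces that exhaustive inner-product search. `flat_ip_search` is a hypothetical helper, not a Faiss API; it returns `(scores, indices)` in the same layout as `IndexFlatIP.search` and assumes `k` does not exceed the number of stored vectors.

```python
import numpy as np


def flat_ip_search(database: np.ndarray, queries: np.ndarray, k: int):
    """Exhaustive top-k inner-product search (hypothetical helper, not a Faiss API).

    database: (n, d) stored vectors; queries: (nq, d); assumes k <= n.
    Returns (scores, indices), each of shape (nq, k), best match first.
    """
    scores = queries @ database.T  # (nq, n): every query against every stored vector
    # Sort each row by descending score; a stable sort keeps ties in insertion order
    order = np.argsort(-scores, axis=1, kind="stable")[:, :k]
    return np.take_along_axis(scores, order, axis=1), order


# Usage: three stored 2-D vectors, one query
database = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]], dtype=np.float32)
query = np.array([[1.0, 0.2]], dtype=np.float32)
scores, indices = flat_ip_search(database, query, k=2)
```

The cost is one matrix product per batch of queries, O(n·d) per query, which is why exact search is fine for a few thousand images; Faiss's value lies in doing this much faster and in offering approximate indexes when n grows large.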

```python
import faiss
import numpy as np

vectors = np.ascontiguousarray(vectors, dtype="float32")  # Faiss expects float32, shape (n, d)
index = faiss.IndexFlatIP(d)  # exact inner-product index; d is the dimension of the vectors
index.add(vectors)  # indexing
# k is the number of nearest neighbors to search for; with IndexFlatIP the returned
# "distances" are inner-product scores, so higher means more similar
distances, indices = index.search(np.ascontiguousarray(query_vectors, dtype="float32"), k)
```

The Image Search Application is built around the pretrained CLIP model and the Faiss library. First, the application passes every image in the search space through the pretrained CLIP model, producing a dense embedding that represents the visual content of each image. These embeddings are then indexed and stored with Faiss, which supports fast and accurate "nearest inner product neighbor" searches.
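An inner-product index ranks by raw dot product, which equals cosine similarity only when every vector has unit length. The text does not say whether the repository normalizes its CLIP embeddings; the sketch below assumes ranking by cosine similarity is the goal and shows what goes wrong without normalization. The toy vectors and the `l2_normalize` helper are illustrative assumptions; in Faiss itself the same step is commonly done in place with `faiss.normalize_L2` before `index.add` and `index.search`.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length (returns float32, the dtype Faiss expects)."""
    x = np.asarray(x, dtype=np.float32)
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, 1e-12)  # guard against division by zero


# Two toy "image embeddings": image 1 points exactly the same way as the query,
# while image 0 points elsewhere but has a much larger magnitude.
images = np.array([[3.0, 3.0], [0.0, 1.0]])
query = np.array([[0.0, 1.0]])

raw_ip = query @ images.T                              # unnormalized inner products
cosine = l2_normalize(query) @ l2_normalize(images).T  # cosine similarities

best_raw = int(raw_ip.argmax())     # image 0 wins only because of its large norm
best_cosine = int(cosine.argmax())  # image 1 wins: same direction as the query
```

Normalizing once at indexing time (and once per query) keeps `IndexFlatIP` as simple and fast as before while making its scores comparable cosine similarities in [-1, 1].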

When a user submits a natural-language query, the application encodes it with the same CLIP model, producing an embedding that represents the query's semantic content. It then runs a nearest-neighbor search over the Faiss index to find the image embeddings that best match the query embedding, and presents the corresponding images to the user, ranked by how closely they match the text query.
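The two stages described above (embed and index all images once, then embed and search each text query) can be sketched without Faiss or CLIP installed. `ImageSearchIndex` is a hypothetical class, not the repository's code: it keeps L2-normalized embeddings in a NumPy array and the matching file paths in a parallel list, so each search hit maps straight back to an image. In the real application the embeddings would come from CLIP's `encode_image` and `encode_text`, and the array would be replaced by a Faiss index.

```python
import numpy as np


class ImageSearchIndex:
    """Hypothetical in-memory stand-in for a Faiss IndexFlatIP plus a list of paths."""

    def __init__(self, dim: int):
        self.vectors = np.empty((0, dim), dtype=np.float32)
        self.paths = []  # paths[i] is the image behind row i of self.vectors

    @staticmethod
    def _normalize(x) -> np.ndarray:
        x = np.atleast_2d(np.asarray(x, dtype=np.float32))
        return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), 1e-12)

    def add(self, paths, embeddings) -> None:
        """Indexing stage: store image embeddings alongside their file paths."""
        self.vectors = np.vstack([self.vectors, self._normalize(embeddings)])
        self.paths.extend(paths)

    def search(self, query_embedding, k: int = 5):
        """Query stage: return up to k (path, cosine score) pairs, best first."""
        scores = (self._normalize(query_embedding) @ self.vectors.T)[0]
        top = np.argsort(-scores, kind="stable")[:k]
        return [(self.paths[i], float(scores[i])) for i in top]


# Usage with toy 3-D "embeddings" standing in for CLIP outputs
index = ImageSearchIndex(dim=3)
index.add(["cat.jpg", "dog.jpg", "car.jpg"], np.eye(3))
results = index.search(np.array([0.9, 0.1, 0.0]), k=2)
```

Keeping the paths in a list parallel to the vectors mirrors how a flat Faiss index behaves: it assigns IDs sequentially in insertion order, so the `indices` returned by `index.search` can be used directly to look up file paths.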

Installation:

Requires a Python 3.8 environment.

When installing under WSL, set the following environment variable:

https://blog.csdn.net/weixin_56319483/article/details/136439533

export LD_LIBRARY_PATH=/usr/lib/wsl/lib:$LD_LIBRARY_PATH

CLIP

https://github.com/openai/CLIP

OpenAI's library that aligns text and images in the same vector space.

CLIP (Contrastive Language-Image Pre-Training) is a neural network trained on a variety of (image, text) pairs. It can be instructed in natural language to predict the most relevant text snippet, given an image, without directly optimizing for the task, similarly to the zero-shot capabilities of GPT-2 and 3. We found CLIP matches the performance of the original ResNet50 on ImageNet “zero-shot” without using any of the original 1.28M labeled examples, overcoming several major challenges in computer vision.
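The zero-shot prediction described in this quote reduces to a softmax over image-text similarities: encode one prompt per candidate label, compare each with the image embedding, and pick the most probable prompt. A minimal NumPy sketch, assuming the embeddings were already produced by CLIP; `zero_shot_probs` is a hypothetical name, and the fixed scale of 100 is an assumption standing in for the model's learned temperature (the CLIP paper caps the logit scaling at 100).

```python
import numpy as np


def zero_shot_probs(image_emb: np.ndarray, label_embs: np.ndarray,
                    scale: float = 100.0) -> np.ndarray:
    """Probability that each candidate text describes the image.

    image_emb: (d,) image embedding; label_embs: (n_labels, d) text embeddings,
    e.g. of prompts such as "a photo of a dog". `scale` is an assumed fixed
    stand-in for CLIP's learned logit scale.
    """
    image = image_emb / np.linalg.norm(image_emb)
    labels = label_embs / np.linalg.norm(label_embs, axis=1, keepdims=True)
    logits = scale * (labels @ image)  # scaled cosine similarity per label
    logits -= logits.max()             # subtract the max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()


# Usage: the image embedding is closest to the first label's embedding
probs = zero_shot_probs(np.array([1.0, 0.0]), np.array([[1.0, 0.0], [0.0, 1.0]]))
predicted_label = int(probs.argmax())
```

No classifier is trained here: changing the set of prompts changes the label set, which is what makes the approach zero-shot.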

Image dataset download

https://www.kaggle.com/datasets/iamsouravbanerjee/animal-image-dataset-90-different-animals?resource=download

Other related resources

Testing a Faiss API with Flask

https://github.com/samuelhei/faiss-api/blob/master/test_app.py

Storing a Faiss index on local disk

https://github.com/davidefiocco/faiss-on-disk-example/blob/master/src/faiss_training.py

Using Faiss through a LangChain integration

https://github.com/Leon-Sander/langchain_faiss_vectorindex/tree/main

Extracting image features with VGG

https://github.com/liyaodev/image-retrieval/blob/main/index.py

Storing binary files locally

https://docs.h5py.org/en/stable/index.html

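Similarities: a toolkit for similarity calculation and semantic search. It supports text-to-text, text-to-image, and image-to-image search over data at the hundred-million scale, is written in Python 3, and works out of the box.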

https://github.com/shibing624/similarities

浙公网安备 33010602011771号