Machine learning model serving in Python using FastAPI and streamlit


https://davidefiocco.github.io/streamlit-fastapi-ml-serving/

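Background

ML engineers need to build quick prototypes in order to analyze how their models and data perform.

A prototype needs:

- a frontend, used only to demo the prototype; a production-grade interface should be built by professional frontend developers;

- a backend, which serves the model through an API together with API documentation, so that it can be handed over to colleagues building the production application.

Being able to meet both needs quickly and easily is friendly to ML engineers, especially since no frontend knowledge has to be learned, so they can focus on data and model development.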

streamlit, FastAPI and Docker combined enable the creation of both the frontend and backend for machine learning applications, in pure Python. Go straight to the example code!

In my current job I train machine learning models. When experiments show that one of these models can solve some need of the company, we sometimes serve it to users in the form of a “prototype” deployed on internal servers. While such a prototype may not be production-ready yet, it can be useful for showing users the strengths and weaknesses of the proposed solution and for gathering feedback, so that better iterations can be released.

Such a prototype needs to have:

- a frontend (a user interface aka UI), so that users can interact with it;

- a backend with API documentation, so that it can process a lot of requests in bulk and can be moved easily to production and integrated with other applications later on.

Also, it’d be nice to create these easily, quickly and concisely, so that more attention and time can be devoted to better data and model development!

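Current problems

The author has dabbled in frontend work to create UIs and has also built backend services, but ran into problems:

- the frontend was not easy to implement, and the results looked rather ugly;

- the backend only exposed an API and offered no documentation.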

In the recent past I have dabbled in HTML and JavaScript to create UIs, and used Flask to create the underlying backend services. This did the job, but:

- I could only create very simple UIs (using Bootstrap and jQuery), and had to bug my colleagues to make them functional and not totally ugly.

- My Flask API endpoints were very simple and had no API documentation. They also served results using Flask's built-in server, which is not suitable for production.

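Proposed solution

So the author wants to do the prototyping of ML models in Python alone, while also providing an API that the eventual production application can use.

After some research, two libraries stand out as having drawn a lot of attention in the applied ML community: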

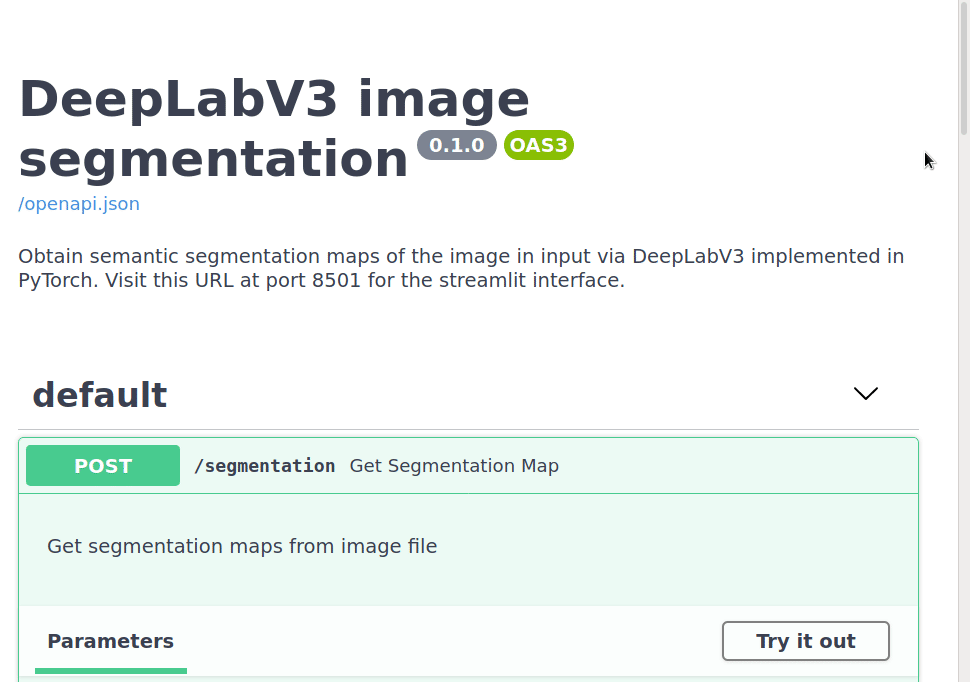

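FastAPI is an up-and-coming web framework. It generates documentation entirely from the API definition, and that documentation conforms to the OpenAPI specification.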

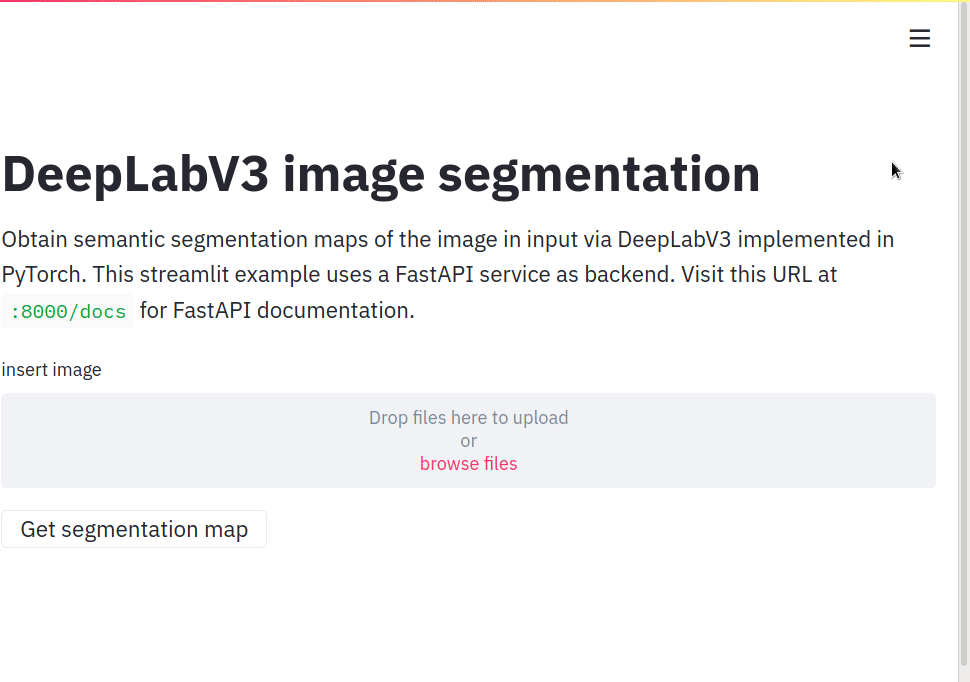

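streamlit is also gaining attention. It can create fairly complex UIs using only Python, with no web frontend knowledge required. In fact, the framework was built with ML engineers in mind.

Combining the two tools meets our needs.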

What if both frontend and backend could be easily built with (little) Python?

You may already have heard of FastAPI and streamlit, two Python libraries that lately are getting quite some attention in the applied ML community.

- FastAPI is gaining popularity among Python frameworks. It is thoroughly documented, allows you to code APIs following OpenAPI specifications, and can use uvicorn behind the scenes, making it “good enough” for some production use. Its syntax is also similar to that of Flask, so it is easy to switch to if you have used Flask before.

- streamlit is getting traction as well. It allows you to create pretty complex UIs in pure Python. It can be used to serve ML models without further ado, but (as of today) you can’t build REST endpoints with it.

So why not combine the two, and get the best of both worlds?

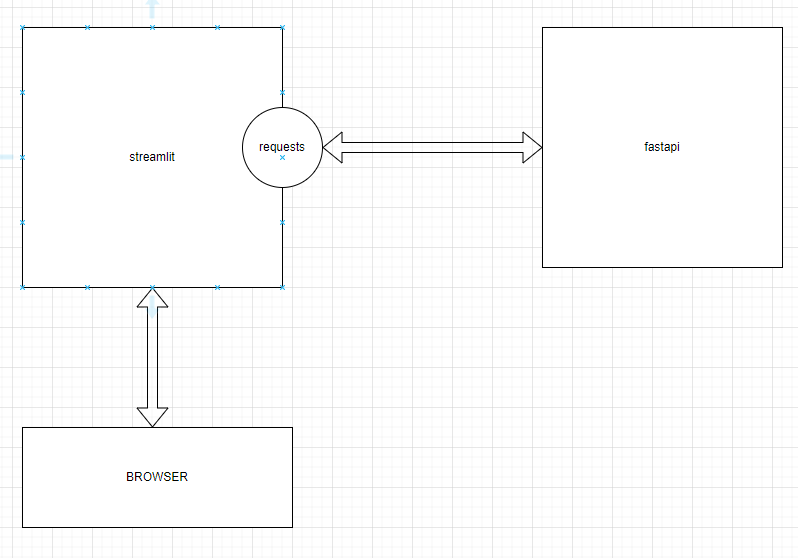

Architecture

Example application

https://github.com/davidefiocco/streamlit-fastapi-model-serving

The author provides an image segmentation example.

A simple “full-stack” application: image semantic segmentation with DeepLabV3

As an example, let’s take image segmentation, which is the task of assigning each pixel of a given image to a category (for a primer on image segmentation, check out the fast.ai course).

Semantic segmentation can be done using a model pre-trained on images labeled using a predefined list of categories. An example in this sense is the DeepLabV3 model, which is already implemented in PyTorch.
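To make "assigning each pixel to a category" concrete, here is a small sketch with NumPy. It assumes, as is typical for segmentation models, that the model outputs one score map per category and that the predicted label of a pixel is the category with the highest score. The tiny 2x3 "image" and its scores are made up for illustration.

```python
import numpy as np

# Hypothetical scores for a 2x3 image and 3 categories,
# laid out as (categories, height, width).
scores = np.array([
    [[0.90, 0.10, 0.20], [0.30, 0.10, 0.10]],  # category 0 (e.g. background)
    [[0.05, 0.80, 0.10], [0.60, 0.20, 0.10]],  # category 1
    [[0.05, 0.10, 0.70], [0.10, 0.70, 0.80]],  # category 2
])

# For every pixel, pick the index of the highest-scoring category.
label_map = scores.argmax(axis=0)
print(label_map.tolist())  # [[0, 1, 2], [1, 2, 2]]
```

The resulting label map has the same height and width as the image, and can then be turned into a picture by giving each category its own color.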

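The project consists of two microservices, both running in Docker containers and managed through a docker-compose configuration file:

- streamlit implements the frontend;

- fastapi implements the backend that serves the model.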

How can we serve such a model in an app with a streamlit frontend and a FastAPI backend?

One possibility is to have two services deployed in two Docker containers, orchestrated with docker-compose:

    version: '3'

    services:
      fastapi:
        build: fastapi/
        ports:
          - 8000:8000
        networks:
          - deploy_network
        container_name: fastapi

      streamlit:
        build: streamlit/
        depends_on:
          - fastapi
        ports:
          - 8501:8501
        networks:
          - deploy_network
        container_name: streamlit

    networks:
      deploy_network:
        driver: bridge
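Inside the shared Docker network, a service is reachable under its service name, which is why the frontend addresses the backend as `http://fastapi:8000`. From the host machine, the published port makes the same service available at `http://localhost:8000`. One way to keep the frontend code usable in both settings is to read the base URL from an environment variable. The sketch below assumes a variable called `BACKEND_URL`, which is a hypothetical name and not something the original project defines.

```python
import os


def backend_url(default: str = "http://fastapi:8000") -> str:
    """Return the backend base URL without a trailing slash.

    BACKEND_URL is a hypothetical environment variable; when it is not
    set, the docker-compose service name is used as the host.
    """
    return os.environ.get("BACKEND_URL", default).rstrip("/")


# Full URL of the segmentation endpoint used by the frontend.
segmentation_url = backend_url() + "/segmentation"
```

Running the frontend outside Docker would then only require setting `BACKEND_URL=http://localhost:8000` before starting it.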

The frontend uses the requests library to call the API exposed by the fastapi backend.

The streamlit service serves a UI that calls (using the requests package) the endpoint exposed by the fastapi service, while UI elements (text, file upload, buttons, display of results) are declared with calls to streamlit:

    import streamlit as st
    from requests_toolbelt.multipart.encoder import MultipartEncoder
    import requests
    from PIL import Image
    import io

    st.title('DeepLabV3 image segmentation')

    # fastapi endpoint
    url = 'http://fastapi:8000'
    endpoint = '/segmentation'

    st.write('''Obtain semantic segmentation maps of the image in input via DeepLabV3 implemented in PyTorch.
             This streamlit example uses a FastAPI service as backend.
             Visit this URL at `:8000/docs` for FastAPI documentation.''')  # description and instructions

    image = st.file_uploader('insert image')  # image upload widget


    def process(image, server_url: str):

        m = MultipartEncoder(
            fields={'file': ('filename', image, 'image/jpeg')}
        )

        r = requests.post(server_url,
                          data=m,
                          headers={'Content-Type': m.content_type},
                          timeout=8000)

        return r


    if st.button('Get segmentation map'):

        if image is None:
            st.write("Insert an image!")  # handle case with no image
        else:
            segments = process(image, url + endpoint)
            segmented_image = Image.open(io.BytesIO(segments.content)).convert('RGB')
            st.image([image, segmented_image], width=300)  # output diptych
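Because the backend is a plain HTTP service, the same endpoint can also be called without the UI, for instance from a script that processes many files. The following is a minimal sketch under a few assumptions: it runs on the host machine (hence `localhost:8000`), it uses the `files=` argument of `requests.post` instead of `MultipartEncoder`, and the helper name `segment_file` and its `post` parameter (which only exists to make the function easy to test) are invented for this example.

```python
from pathlib import Path

import requests


def segment_file(image_path, out_path,
                 server_url="http://localhost:8000/segmentation",
                 post=requests.post):
    """Send one image to the segmentation endpoint and save the returned PNG.

    Hypothetical helper, not part of the original project.
    """
    image_path, out_path = Path(image_path), Path(out_path)
    with open(image_path, "rb") as f:
        # The form field is named 'file' to match the backend's parameter name.
        response = post(server_url,
                        files={"file": (image_path.name, f, "image/jpeg")},
                        timeout=60)
    response.raise_for_status()  # fail loudly on HTTP errors
    out_path.write_bytes(response.content)
    return out_path
```

With the containers running, a call such as `segment_file("cat.jpg", "cat_segments.png")` would write the segmentation map next to the input image.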

The backend uses FastAPI to implement the model-serving API.

    from fastapi import FastAPI, File
    from starlette.responses import Response
    import io
    from segmentation import get_segmentator, get_segments

    model = get_segmentator()

    app = FastAPI(title="DeepLabV3 image segmentation",
                  description='''Obtain semantic segmentation maps of the image in input via DeepLabV3 implemented in PyTorch.
                               Visit this URL at port 8501 for the streamlit interface.''',
                  version="0.1.0",
                  )


    @app.post("/segmentation")
    def get_segmentation_map(file: bytes = File(...)):
        '''Get segmentation maps from image file'''
        segmented_image = get_segments(model, file)
        bytes_io = io.BytesIO()
        segmented_image.save(bytes_io, format='PNG')
        return Response(bytes_io.getvalue(), media_type="image/png")
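The image travels between the two services as raw PNG bytes: the backend saves a PIL image into an in-memory buffer and returns its content, and the frontend wraps the received bytes in a buffer again to open them with PIL. The round trip can be sketched in isolation as follows. The two helper names and the small red test image are made up for illustration.

```python
import io

from PIL import Image


def image_to_png_bytes(image: Image.Image) -> bytes:
    """Backend side: serialize a PIL image to PNG bytes in memory."""
    buffer = io.BytesIO()
    image.save(buffer, format="PNG")
    return buffer.getvalue()


def png_bytes_to_image(data: bytes) -> Image.Image:
    """Frontend side: rebuild an RGB PIL image from the received bytes."""
    return Image.open(io.BytesIO(data)).convert("RGB")


# A tiny 4x3 red image stands in for a segmentation map.
original = Image.new("RGB", (4, 3), color=(255, 0, 0))
payload = image_to_png_bytes(original)
restored = png_bytes_to_image(payload)
print(restored.size)  # (4, 3)
```

Since PNG is lossless, the colors that encode the categories arrive unchanged on the frontend.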

Result

To test the application locally, one can simply execute in a command line

    docker-compose build
    docker-compose up

and then visit http://localhost:8000/docs with a web browser to interact with the FastAPI-generated documentation, which should look a bit like this:

The streamlit-generated page can be visited at http://localhost:8501. After uploading an example image and pressing the button, you should see the original image and the semantic segmentation generated by the model:
