第七章 手写数字识别(终)

将前文的代码解耦为三个部分:

- 定义的类和函数的nn_core.py

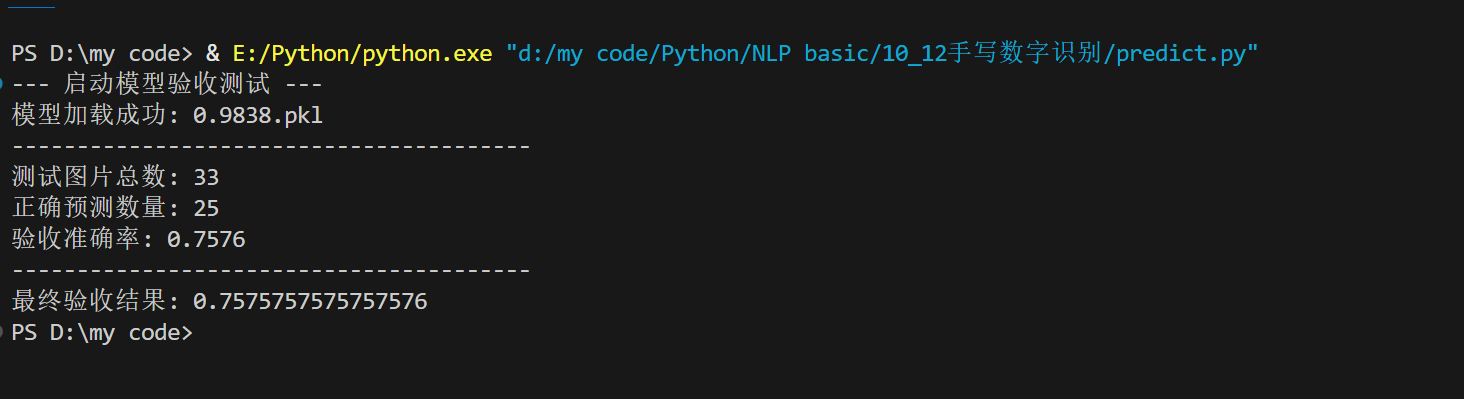

- 模型训练和测试集验证并保存最优模型的main_train.py

- 验收 (自定义图片预测)的脚本predict.py

至此,手写数字识别的NLP任务完全结束,至于更多的优化目前我不准备做了。

下面是源码:

nn_core.py

# 更新

# 新增:验收图像预处理函数和验收主函数

# 导入必要的库

import numpy as np

import os

import struct

import pickle

import cv2

# 定义导入函数

def load_images(path):

with open(path, "rb") as f:

data = f.read()

magic_number, num_items, rows, cols = struct.unpack(">iiii", data[:16])

return np.asanyarray(bytearray(data[16:]), dtype=np.uint8).reshape(

num_items, 28, 28

)

def load_labels(path):

with open(path, "rb") as f:

data = f.read()

return np.asanyarray(bytearray(data[8:]), dtype=np.int32)

# 激活函数

# 定义sigmoid函数

def sigmoid(x):

result = np.zeros_like(x)

positive_mask = x >= 0

result[positive_mask] = 1 / (1 + np.exp(-x[positive_mask]))

negative_mask = x < 0

exp_x = np.exp(x[negative_mask])

result[negative_mask] = exp_x / (1 + exp_x)

return result

# 定义softmax函数

def softmax(x):

max_x = np.max(x, axis=-1, keepdims=True)

x = x - max_x

ex = np.exp(x)

sum_ex = np.sum(ex, axis=1, keepdims=True)

result = ex / sum_ex

result = np.clip(result, 1e-10, 1e10)

return result

# 训练集编码处理

# 定义独热编码函数

def make_onehot(labels, class_num):

result = np.zeros((labels.shape[0], class_num))

for idx, cls in enumerate(labels):

result[idx, cls] = 1

return result

# 定义dataset类

class Dataset:

def __init__(self, all_images, all_labels):

self.all_images = all_images

self.all_labels = all_labels

def __getitem__(self, index):

image = self.all_images[index]

label = self.all_labels[index]

return image, label

def __len__(self):

return len(self.all_images)

# 定义dataloader类

class DataLoader:

def __init__(self, dataset, batch_size, shuffle=True):

self.dataset = dataset

self.batch_size = batch_size

self.shuffle = shuffle

self.idx = np.arange(len(self.dataset))

def __iter__(self):

# 如果需要打乱,则在每个 epoch 开始时重新排列索引

if self.shuffle:

np.random.shuffle(self.idx)

self.cursor = 0

return self

def __next__(self):

if self.cursor >= len(self.dataset):

raise StopIteration

# 使用索引来获取数据

batch_idx = self.idx[

self.cursor : min(self.cursor + self.batch_size, len(self.dataset))

]

batch_images = self.dataset.all_images[batch_idx]

batch_labels = self.dataset.all_labels[batch_idx]

self.cursor += self.batch_size

return batch_images, batch_labels

# 父类Module,查看各层结构

# 定义Module类

class Module:

def __init__(self):

self.info = "Module:\n"

self.params = []

def __repr__(self):

return self.info

# 定义Parameter类

class Parameter:

def __init__(self, weight):

self.weight = weight

self.grad = np.zeros_like(weight)

self.velocity = np.zeros_like(weight) # 🆕 新增:动量/速度向量

# 定义linear类

class Linear(Module):

def __init__(self, in_features, out_features):

super().__init__()

self.info += f"** Linear({in_features}, {out_features})"

# 🆕 修正:使用 He 初始化,适用于 ReLU

std_dev = np.sqrt(2 / in_features)

# 使用 std_dev 来初始化权重

self.W = Parameter(

np.random.normal(0, std_dev, size=(in_features, out_features))

)

# 偏置 B 最好初始化为 0,而非随机值

self.B = Parameter(np.zeros((1, out_features)))

self.params.append(self.W)

self.params.append(self.B)

def forward(self, x):

self.x = x

return np.dot(x, self.W.weight) + self.B.weight

def backward(self, G):

self.W.grad = np.dot(self.x.T, G)

self.B.grad = np.mean(G, axis=0, keepdims=True)

return np.dot(G, self.W.weight.T)

# 定义Conv2D类

class Conv2D(Module):

def __init__(self, in_channel, out_channel):

super(Conv2D, self).__init__()

self.info += f" Conv2D({in_channel, out_channel})"

std_dev = np.sqrt(2 / in_channel)

self.W = Parameter(np.random.normal(0, std_dev, size=(in_channel, out_channel)))

self.B = Parameter(np.zeros((1, out_channel)))

self.params.append(self.W)

self.params.append(self.B)

def forward(self, x):

result = x @ self.W.weight + self.B.weight

self.x = x

return result

def backward(self, G):

self.W.grad = self.x.T @ G

self.B.grad = np.mean(G, axis=0, keepdims=True)

delta_x = G @ self.W.weight.T

return delta_x

# 定义Conv1D类

class Conv1D(Module):

def __init__(self, in_channel, out_channel):

super(Conv1D, self).__init__()

self.info += f" Conv1D({in_channel,out_channel})"

self.W = Parameter(np.random.normal(0, 1, size=(in_channel, out_channel)))

self.B = Parameter(np.zeros((1, out_channel)))

self.params.append(self.W)

self.params.append(self.B)

def forward(self, x):

result = x @ self.W.weight + self.B.weight

self.x = x

return result

def backward(self, G):

self.W.grad = self.x.T @ G

self.B.grad = np.mean(G, axis=0, keepdims=True)

delta_x = G @ self.W.weight.T

return delta_x

# 优化器

# 定义Optimizer类

class Optimizer:

def __init__(self, parameters, lr):

self.parameters = parameters

self.lr = lr

def zero_grad(self):

for p in self.parameters:

p.grad.fill(0)

# 定义SGD类,学习率较大

class SGD(Optimizer):

def step(self):

for p in self.parameters:

p.weight -= self.lr * p.grad

# 定义MSGD类,学习率较大

class MSGD(Optimizer):

def __init__(self, parameters, lr, u):

super().__init__(parameters, lr)

self.u = u

def step(self):

for p in self.parameters:

# 1. 更新速度 V_t = u * V_{t-1} + p.grad

p.velocity = self.u * p.velocity + p.grad

# 2. 更新权重 W = W - lr * V_t

p.weight -= self.lr * p.velocity

# 定义Adam类,学习率一般较小10^-3到10^-6

class Adam(Optimizer):

def __init__(self, parameters, lr, beta1=0.9, beta2=0.999, e=1e-8):

super().__init__(parameters, lr)

self.beta1 = beta1

self.beta2 = beta2

self.e = e

self.t = 0

for p in self.parameters:

# p.m = 0

p.m = np.zeros_like(p.weight)

# p.v = 0

p.v = np.zeros_like(p.weight)

def step(self):

self.t += 1

for p in self.parameters:

gt = p.grad

p.m = self.beta1 * p.m + (1 - self.beta1) * gt

p.v = self.beta2 * p.v + (1 - self.beta2) * gt**2

mt_ = p.m / (1 - self.beta1**self.t)

vt_ = p.v / (1 - self.beta2**self.t)

p.weight = p.weight - self.lr * mt_ / np.sqrt(vt_ + self.e)

# 定义Sigmoid类

class Sigmoid(Module):

def __init__(self):

super().__init__()

self.info += "** Sigmoid()" # 打印信息

def forward(self, x):

self.result = sigmoid(x)

return self.result

def backward(self, G):

return G * self.result * (1 - self.result)

# 定义Tanh类

class Tanh(Module):

def __init__(self):

super().__init__()

self.info += "** Tanh()" # 打印信息

def forward(self, x):

self.result = 2 * sigmoid(2 * x) - 1

return self.result

def backward(self, G):

return G * (1 - self.result**2)

# 定义Softmax类

class Softmax(Module):

def __init__(self):

super().__init__()

self.info += "** Softmax()" # 打印信息

def forward(self, x):

self.p = softmax(x)

return self.p

def backward(self, G):

G = (self.p - G) / len(G)

return G

# 定义ReLU类

class ReLU(Module):

def __init__(self):

super().__init__()

self.info += "** ReLU()" # 打印信息

def forward(self, x):

self.x = x

return np.maximum(0, x)

def backward(self, G):

grad = G.copy()

grad[self.x <= 0] = 0

return grad

# 定义Dropout类

class Dropout(Module):

def __init__(self, p=0.3):

super().__init__()

self.info += f"** Dropout(p={p})" # 打印信息

self.p = p

self.is_training = True # 🆕 新增:训练状态标志

def forward(self, x):

if not self.is_training:

return x # 评估时直接返回

r = np.random.rand(*x.shape)

self.mask = r >= self.p # 创建掩码

# 应用掩码和缩放

return (x * self.mask) / (1 - self.p)

def backward(self, G):

if not self.is_training:

return G # 评估时直接返回梯度

G[~self.mask] = 0

return G / (1 - self.p)

## 模型

# 定义ModelList类

class ModelList:

def __init__(self, layers):

self.layers = layers

def forward(self, x):

for layer in self.layers:

x = layer.forward(x)

return x

def backward(self, G):

for layer in self.layers[::-1]:

G = layer.backward(G)

def __repr__(self):

info = ""

for layer in self.layers:

info += layer.info + "\n"

return info

# 定义Model类

class Model:

def __init__(self):

self.model_list = ModelList(

[

Linear(784, 512),

ReLU(),

Dropout(0.2),

Conv2D(512, 256),

Tanh(),

Dropout(0.1),

Linear(256, 10),

Softmax(),

]

)

def forward(self, x, label=None):

pre = self.model_list.forward(x)

if label is not None:

self.label = label

loss = -np.mean(self.label * np.log(pre))

return loss

else:

return np.argmax(pre, axis=-1)

def backward(self):

self.model_list.backward(self.label)

def train(self):

"""设置模型为训练模式 (启用 Dropout)。"""

for layer in self.model_list.layers:

# 检查层是否有 is_training 属性 (即只针对 Dropout 层)

if hasattr(layer, "is_training"):

layer.is_training = True

def eval(self):

"""设置模型为评估/推理模式 (禁用 Dropout)。"""

for layer in self.model_list.layers:

if hasattr(layer, "is_training"):

layer.is_training = False

def __repr__(self):

return self.model_list.__repr__()

def parameter(self):

all_Parameter = []

for layer in self.model_list.layers:

all_Parameter.extend(layer.params)

return all_Parameter

# 验收图像预处理函数

def preprocess_custom_image(path, filename):

"""

加载、预处理单个自定义图片,并提取其标签。

Args:

path (str): 图片的完整路径 (root_path + filename)。

filename (str): 图片文件名 (用于提取标签)。

Returns:

tuple: (processed_image_array, label), 或 (None, None)

"""

try:

# cv2 读取图片,返回 None 表示读取失败或文件不存在

img = cv2.imread(path)

if img is None:

print(f"警告: 无法读取图片 {path},跳过。")

return None, None

img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY) # 灰度化

img_resized = cv2.resize(img_gray, (28, 28)) # 缩放为28*28

# 归一化并展平为 (1, 784)

# 注意: cv2.resize 返回的数组已经是 (28, 28)

processed_image = img_resized.flatten() / 255.0

# ⚠️ 标签提取逻辑: 根据您实际的文件名格式修改这里的切片逻辑

# 假设标签在倒数第5个位置 (例如 'image_3.png' 的 '3')

label = int(filename[-5:-4])

return processed_image, label

except ValueError:

print(

f"错误: 无法从文件名 '{filename}' 中提取数字标签 (切片结果不是数字),跳过。"

)

return None, None

except Exception as e:

print(f"处理图片 {filename} 时发生未知错误: {e}")

return None, None

# 验收主函数

def run_acceptance_test(model_path, image_folder_path):

"""

加载模型,对指定文件夹中的图片进行预测,并计算准确率。

Args:

model_path (str): 已保存的模型文件路径 (e.g., '0.9838.pkl')。

image_folder_path (str): 存放测试图片的文件夹路径。

Returns:

float: 预测准确率 (Accuracy)。

"""

print("--- 启动模型验收测试 ---")

# 1. 加载模型

try:

with open(model_path, "rb") as f:

# 确保 Model 类在当前模块或已导入,以避免 AttributeError

model = pickle.load(f)

model.eval() # 切换到评估模式 (如果您的 Model 类有 eval 方法)

print(f"模型加载成功: {os.path.basename(model_path)}")

except Exception as e:

print(f"错误: 模型加载失败,请检查文件路径和 nn_core 导入。错误: {e}")

return 0.0

# 2. 准备数据结构

images_file = os.listdir(image_folder_path)

# 过滤掉非图片文件

images_file = [

f for f in images_file if f.lower().endswith((".png", ".jpg", ".jpeg", ".bmp"))

]

if not images_file:

print("文件夹中未找到任何图片文件。")

return 0.0

# 存储所有有效图片和标签

processed_images_list = []

true_labels_list = []

# 3. 遍历和预处理图片

for image_name in images_file:

path = os.path.join(image_folder_path, image_name)

# 使用分离的预处理函数

processed_img, label = preprocess_custom_image(path, image_name)

if processed_img is not None and label is not None:

processed_images_list.append(processed_img)

true_labels_list.append(label)

if not processed_images_list:

print("没有有效的图片用于测试。")

return 0.0

# 4. 预测

test_images = np.stack(

processed_images_list

) # 将列表中的 (1, 784) 数组堆叠成 (N, 784)

true_labels = np.array(true_labels_list)

predicted_labels = model.forward(test_images)

# 5. 计算准确率

right_num = np.sum(predicted_labels == true_labels)

acc = right_num / len(true_labels)

print("-" * 40)

print(f"测试图片总数: {len(true_labels)}")

print(f"正确预测数量: {right_num}")

print(f"验收准确率: {acc:.4f}")

print("-" * 40)

return acc

main_train.py

import numpy as np

import os

import pickle

# 🆕 从自定义模块中导入所有需要的类和函数

from nn_core import (

load_images,

load_labels,

make_onehot,

Dataset,

DataLoader,

Model,

Adam,

)

# 如果 nn_core 中有其他依赖,也需要导入,或者在 nn_core 中处理好

# 主函数

if __name__ == "__main__":

# 设置随机种子

np.random.seed(1000)

# 加载训练集图片、标签

train_images = (

load_images(

os.path.join(

"Python", "NLP basic", "data", "minist", "train-images.idx3-ubyte"

)

)

/ 255

)

train_labels = make_onehot(

load_labels(

os.path.join(

"Python", "NLP basic", "data", "minist", "train-labels.idx1-ubyte"

)

),

10,

)

# 加载测试集图片、标签

dev_images = (

load_images(

os.path.join(

"Python", "NLP basic", "data", "minist", "t10k-images.idx3-ubyte"

)

)

/ 255

)

dev_labels = load_labels(

os.path.join("Python", "NLP basic", "data", "minist", "t10k-labels.idx1-ubyte")

)

# 设置超参数

epochs = 10

lr = 1e-3

batch_size = 200

best_acc = -1

# 展开图片数据

train_images = train_images.reshape(60000, 784)

dev_images = dev_images.reshape(-1, 784)

# 调用dataset类和dataloader类

train_dataset = Dataset(train_images, train_labels)

train_dataloader = DataLoader(train_dataset, batch_size)

dev_dataset = Dataset(dev_images, dev_labels)

dev_dataloader = DataLoader(dev_dataset, batch_size)

# 定义模型

model = Model()

# 定义优化器

# opt = SGD(model.parameter(), lr)

# opt=MSGD(model.parameter(),lr,0.9)

opt = Adam(model.parameter(), lr)

# print(model)

# 训练集训练过程

for e in range(epochs):

# 学习率策略

START_DECAY_EPOCH = 3 # 从第n个epoch开始衰减

DECAY_RATE = 0.8 # 衰减率

if e >= START_DECAY_EPOCH:

if (

e - START_DECAY_EPOCH

) % 1 == 0 and best_acc < 0.9825: # 确保是从开始衰减后,每隔n个epoch执行

# 更新学习率

lr *= DECAY_RATE

opt.lr = lr

# 启用训练模式

model.train()

# 训练集训练

for x, l in train_dataloader:

loss = model.forward(x, l)

model.backward()

opt.step()

opt.zero_grad()

# 验证集验证并输出预测准确率

# 切换到评估模式,禁用 Dropout

model.eval()

right_num = 0

for x, batch_labels in dev_dataloader:

pre_idx = model.forward(x)

right_num += np.sum(pre_idx == batch_labels) # 统计正确个数

acc = right_num / len(dev_images) # 计算准确率

if acc > best_acc and acc > 0.98:

best_acc = acc

# 保存最优模型参数

with open(f"{best_acc:.4f}.pkl", "wb") as f:

pickle.dump(model, f)

print(f"Epoch {e}, Acc: {acc:.4f},lr:{lr:.4f}")

predict.py

# 只需要导入您在 nn_core 中定义的函数

from nn_core import run_acceptance_test

if __name__ == "__main__":

# 路径使用 r'' 避免转义字符错误

MODEL_PATH = r"D:\my code\0.9838.pkl"

IMAGE_FOLDER = r"D:\my code\Python\NLP basic\data\test_images"

# 一行代码完成整个验收过程

final_accuracy = run_acceptance_test(MODEL_PATH, IMAGE_FOLDER)

print(f"最终验收结果: {final_accuracy}")

浙公网安备 33010602011771号

浙公网安备 33010602011771号