Kubernetes - Binary Deployment

Initialization

Omitted here; see my other blog post.

Initialization (click to jump)

Installation Packages and Certificates

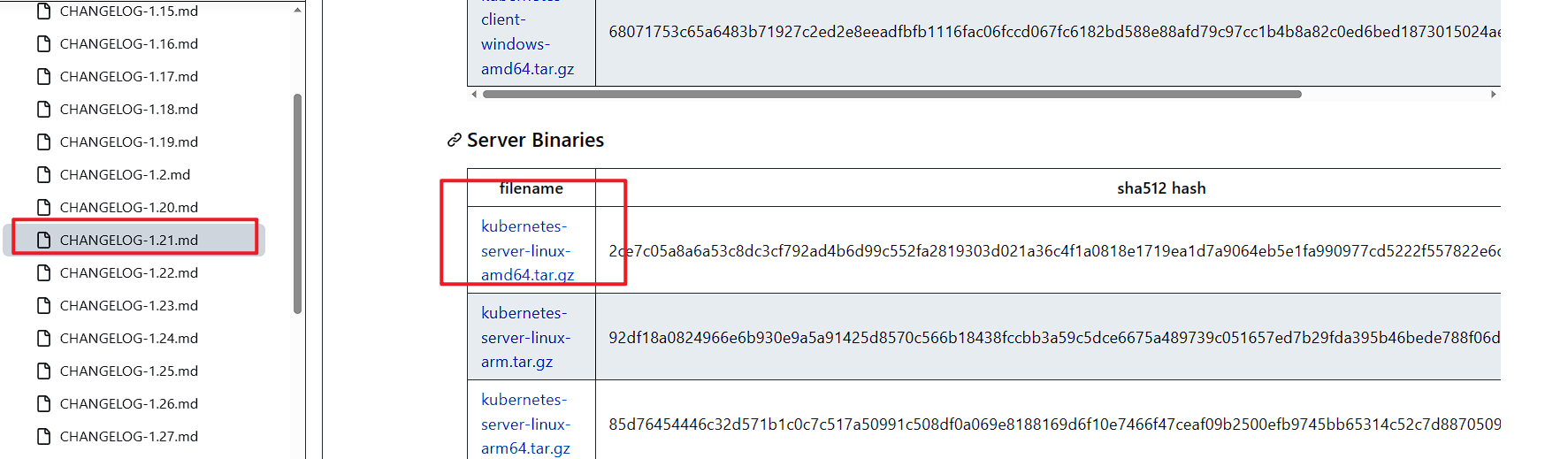

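Download the installation packages

- Download from: GitHub | Kubernetes release list

- Upload the package to a master node and extract it (the ln command runs inside the bin directory, so it uses an absolute path to create valid symlinks)

tar -zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin/

ln -sf "$(pwd)"/* /usr/local/bin/

for i in Y11 Y12 Y13;do scp kube-apiserver kube-aggregator kube-controller-manager kubectl kubelet kube-proxy kube-scheduler $i:/usr/local/bin;done

- Copy the node components to the worker nodes

for i in Y14 Y15;do scp kubelet kube-proxy $i:/usr/local/bin;done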

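Certificate creation and distribution

- Starting with K8S 1.8, the components use TLS certificates to encrypt their communication, so every cluster needs its own independent CA hierarchy.

- Three tools are commonly used to create the CA and certificates:

- easyrsa

- openssl

- cfssl

- Here we use cfssl.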

Install CFSSL

- Download the binaries. If wget cannot reach GitHub, download them in a browser and upload them to the server.

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64

- Create the certificate directories on all master nodes

mkdir -p /etc/etcd/ssl

mkdir -p /etc/kubernetes/pki

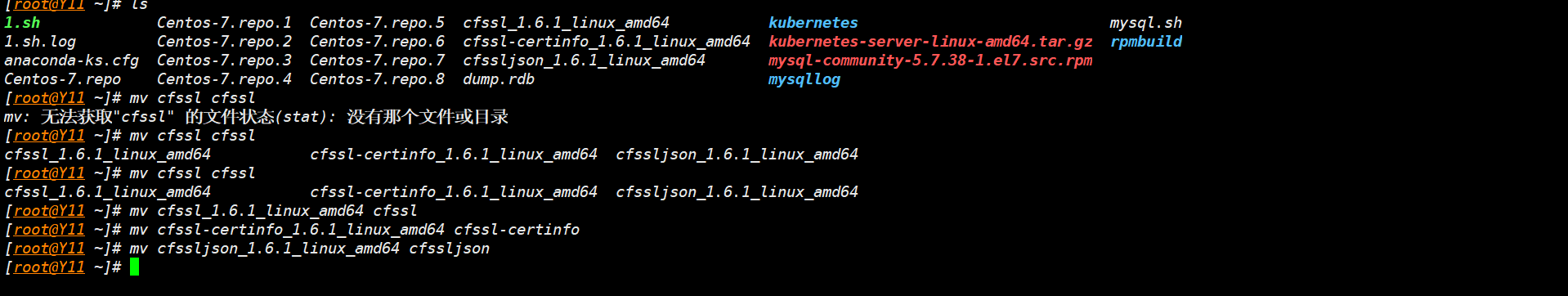

- On one master node, upload the files and rename them

mv cfssl_1.6.1_linux_amd64 cfssl

mv cfssl-certinfo_1.6.1_linux_amd64 cfssl-certinfo

mv cfssljson_1.6.1_linux_amd64 cfssljson

- Make them executable and move them to /usr/local/bin

chmod +x cfssl*

mv cfssl* /usr/local/bin/

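- On one node, create a working directory for the certificates, then create ca-config.json in it. cfssl uses this file as the signing policy when it issues certificates from a CA.

- Note: all later certificate-generation steps are run from the ssl directory. If you get an error saying a configuration file cannot be read, check that your working directory is ssl.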

cd ~

mkdir ssl && cd ssl

- Create the CA signing configuration file

vim ca-config.json

{
  "signing": {
    "default": {
      "expiry": "876000h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "876000h"
      }
    }
  }
}

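signing: the certificate can be used to sign other certificates; the generated ca.pem contains CA=TRUE.

server auth: a client can use this CA to verify the certificate presented by a server.

client auth: a server can use this CA to verify the certificate presented by a client.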
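A quick sanity check on the expiry values: cfssl expresses them in hours, so 876000h is 36500 days, roughly 100 years, which means these certificates effectively never expire. The small Bash sketch below does the conversion. The expiry_to_years name is my own and is not a cfssl feature. It assumes the value has only an "h" suffix, as in ca-config.json.

```shell
#!/usr/bin/env bash
# Convert a cfssl expiry such as "876000h" into days and (365-day) years.
# Assumption: the value uses only the "h" suffix, as in ca-config.json.
expiry_to_years() {
  local hours=${1%h}              # strip the trailing "h"
  local days=$(( hours / 24 ))
  echo "${days} days, about $(( days / 365 )) years"
}

expiry_to_years 876000h   # prints: 36500 days, about 100 years
```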

Create the K8S cluster certificates

Create the etcd root (CA) certificate

vim etcd-ca-csr.json

{
  "CN": "etcd",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "etcd",
      "OU": "Etcd Security"
    }
  ],
  "ca": {
    "expiry": "876000h"
  }
}

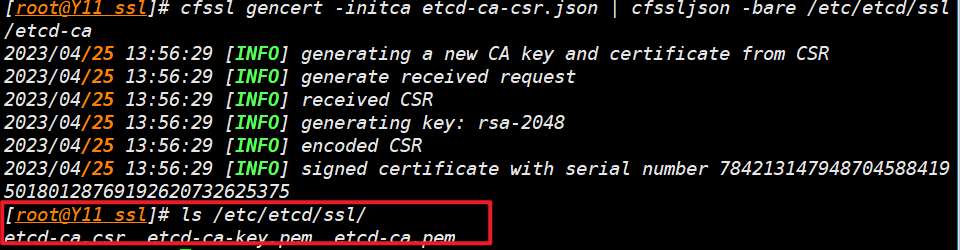

- Generate the CA certificate and check the output

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

ls /etc/etcd/ssl/

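Generate the etcd certificate (used by etcd as its server and peer certificate, and by clients)

- Create the certificate signing request (CSR) file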

[root@Y11 ssl]# vim etcd-csr.json

{
  "CN": "etcd",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "etcd",
      "OU": "Etcd Security"
    }
  ]
}

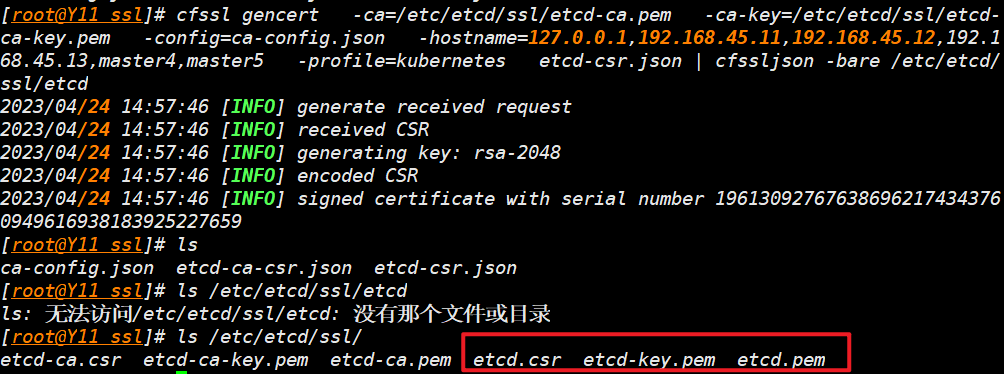

- Sign the etcd certificate with the etcd CA; -hostname lists the addresses the certificate is valid for

cfssl gencert \
  -ca=/etc/etcd/ssl/etcd-ca.pem \
  -ca-key=/etc/etcd/ssl/etcd-ca-key.pem \
  -config=ca-config.json \
  -hostname=127.0.0.1,192.168.45.11,192.168.45.12,192.168.45.13,master4,master5 \
  -profile=kubernetes \
  etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd

- Copy the certificates to the etcd nodes

masternode="Y11 Y12 Y13"

for i in $masternode;do scp -r /etc/etcd/ssl/*.pem $i:/etc/etcd/ssl/;done

- On every etcd node, create a symlink so the etcd certificates are also available under /etc/kubernetes/pki/etcd

ln -s /etc/etcd/ssl /etc/kubernetes/pki/etcd

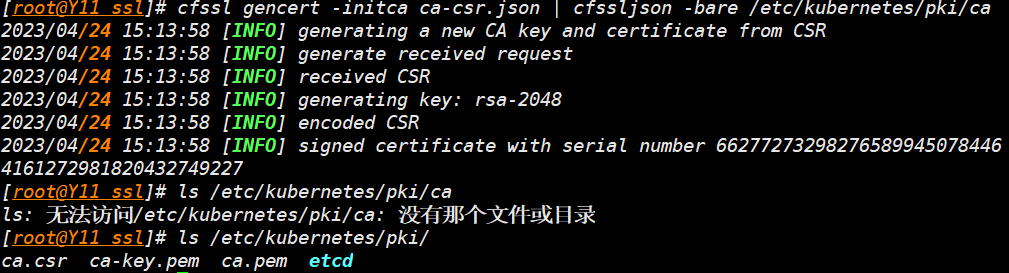

CA certificate

- Create the CSR file for the Kubernetes root CA

vim ca-csr.json

{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "Kubernetes",
      "OU": "Kubernetes-manual"
    }
  ],
  "ca": {
    "expiry": "876000h"
  }
}

- Generate the k8s CA certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

ls /etc/kubernetes/pki/

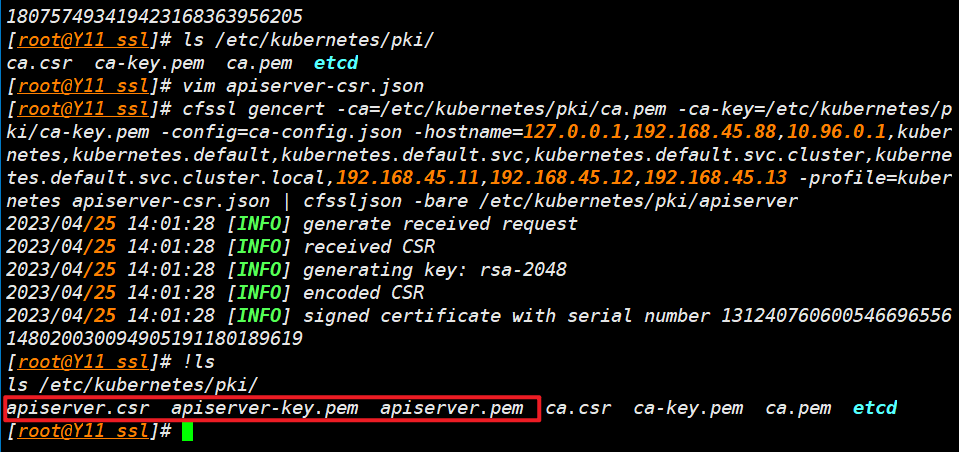

API server certificate

- Create the api-server CSR file

vim apiserver-csr.json

{
  "CN": "kube-apiserver",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "Kubernetes",
      "OU": "Kubernetes-manual"
    }
  ]
}

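- Generate the api-server certificate. The -hostname list must cover every address used to reach the API server: 127.0.0.1, the VIP 192.168.45.88, the kubernetes Service IP 10.96.0.1, the in-cluster DNS names, and the master IPs.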

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -hostname=127.0.0.1,192.168.45.88,10.96.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.45.11,192.168.45.12,192.168.45.13 -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

ls /etc/kubernetes/pki/

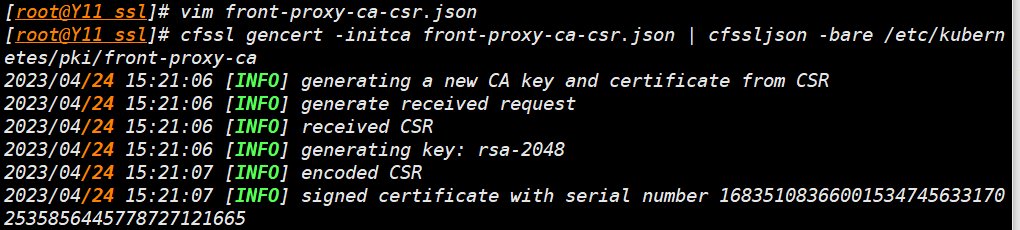
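The 10.96.0.1 entry in -hostname is the ClusterIP that kube-apiserver assigns to the default kubernetes Service. It is the first address of --service-cluster-ip-range, which is 10.96.0.0/16 in the api-server unit later in this guide. If you change that range, this SAN has to change with it. Below is a pure-Bash sketch that derives the address. It handles IPv4 only, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Derive the first usable IP of a service CIDR (the "kubernetes" Service IP).
# Pure Bash, IPv4 only.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

first_service_ip() {
  local net=${1%/*} bits=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  # network address + 1
  int_to_ip $(( ($(ip_to_int "$net") & mask) + 1 ))
}

first_service_ip 10.96.0.0/16   # prints 10.96.0.1
```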

front-proxy certificates

- Create the front-proxy-ca CSR file

vim front-proxy-ca-csr.json

{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}

- Generate the front-proxy-ca certificate

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

- Create the front-proxy-client CSR file

vim front-proxy-client-csr.json

{
  "CN": "front-proxy-client",
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}

- Generate the front-proxy-client certificate

cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

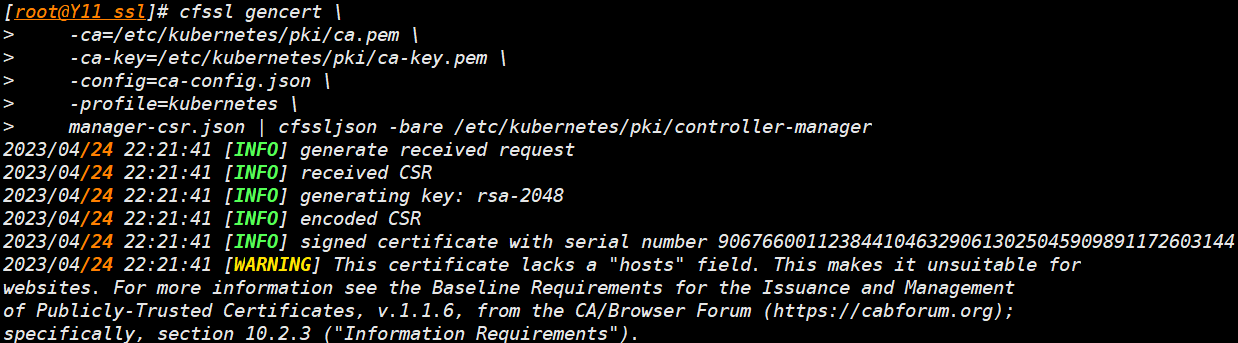

Controller Manager certificate and kubeconfig

- CSR file

vim manager-csr.json

{
  "CN": "system:kube-controller-manager",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-controller-manager",
      "OU": "Kubernetes-manual"
    }
  ]
}

- Generate the certificate

cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

- The following kubectl config commands write the certificate and related settings into the component's kubeconfig file

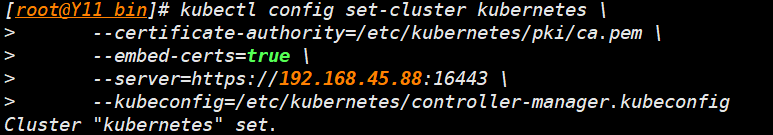

- Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://192.168.45.88:16443 \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

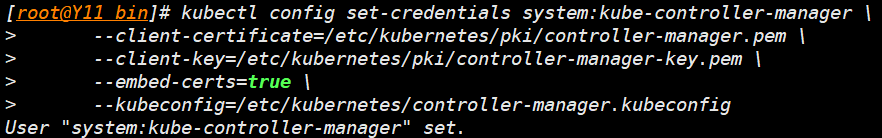

- This command adds the credentials used by kube-controller-manager to the kubeconfig file as a new user entry.

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/etc/kubernetes/pki/controller-manager.pem \
  --client-key=/etc/kubernetes/pki/controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

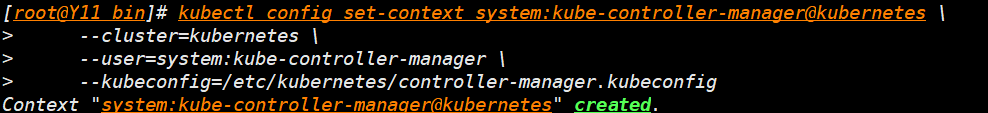

- Set the context: this command defines a context in kube-controller-manager's kubeconfig file. A context combines a cluster, a user, and optionally a namespace, and describes what to use when accessing the Kubernetes cluster.

kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

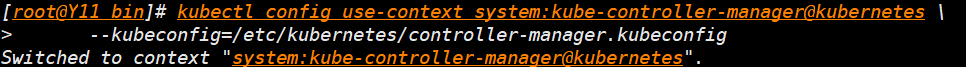

- Set the default context

kubectl config use-context system:kube-controller-manager@kubernetes \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

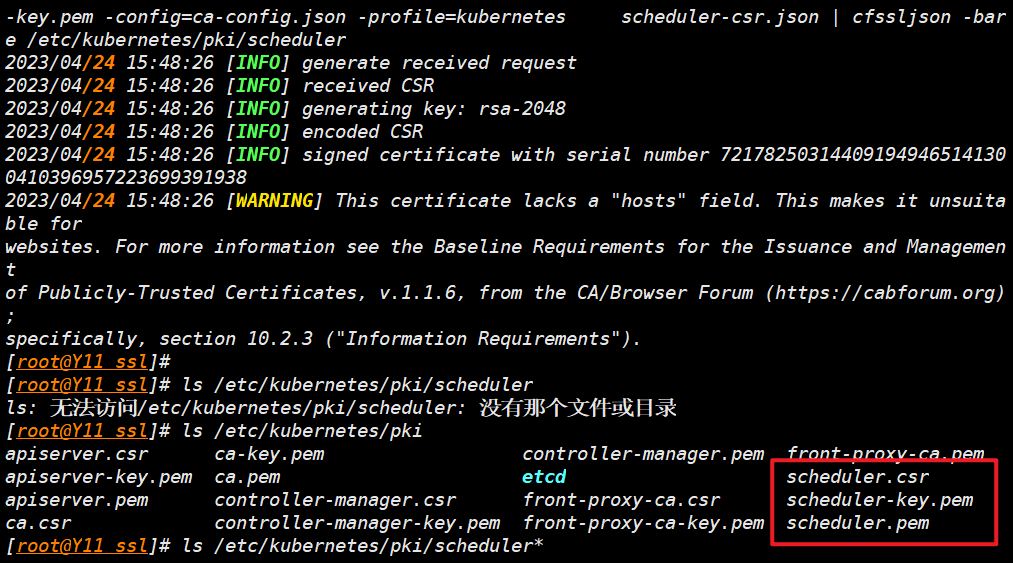

Create the scheduler certificate and kubeconfig

- Edit the CSR file

vim scheduler-csr.json

{
  "CN": "system:kube-scheduler",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-scheduler",
      "OU": "Kubernetes-manual"
    }
  ]
}

- Generate the certificate

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

ls /etc/kubernetes/pki/

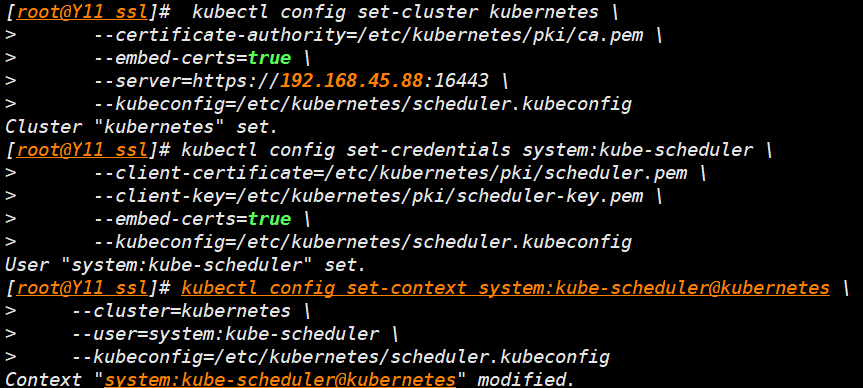

- Set the cluster parameters; this and the following commands write their settings into /etc/kubernetes/scheduler.kubeconfig

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://192.168.45.88:16443 \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

- Set the credentials

kubectl config set-credentials system:kube-scheduler \
  --client-certificate=/etc/kubernetes/pki/scheduler.pem \
  --client-key=/etc/kubernetes/pki/scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

- Set the context

kubectl config set-context system:kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

- Set the default context

kubectl config use-context system:kube-scheduler@kubernetes \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
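The controller-manager, scheduler, and admin kubeconfigs are all built with the same four kubectl config steps: set-cluster, set-credentials, set-context, and use-context. Below is a sketch of a helper that wraps them. make_kubeconfig and run are hypothetical names and are not part of kubectl. The server URL defaults to this guide's VIP. With DRY_RUN=1 the helper only prints the commands, so you can check them before writing anything.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a component kubeconfig with the same four
# `kubectl config` steps used above. DRY_RUN=1 prints instead of running.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: make_kubeconfig USER CLIENT_CERT CLIENT_KEY OUTPUT_KUBECONFIG
make_kubeconfig() {
  local user=$1 cert=$2 key=$3 out=$4
  local server=${SERVER:-https://192.168.45.88:16443}  # VIP used in this guide
  local ca=${CA_CERT:-/etc/kubernetes/pki/ca.pem}

  run kubectl config set-cluster kubernetes --certificate-authority="$ca" \
    --embed-certs=true --server="$server" --kubeconfig="$out"
  run kubectl config set-credentials "$user" --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$out"
  run kubectl config set-context "$user@kubernetes" --cluster=kubernetes \
    --user="$user" --kubeconfig="$out"
  run kubectl config use-context "$user@kubernetes" --kubeconfig="$out"
}

# Dry run for the scheduler kubeconfig built above:
DRY_RUN=1 make_kubeconfig system:kube-scheduler \
  /etc/kubernetes/pki/scheduler.pem /etc/kubernetes/pki/scheduler-key.pem \
  /etc/kubernetes/scheduler.kubeconfig
```

The admin kubeconfig fits the same pattern with the user kubernetes-admin.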

Create the admin certificate

- Edit the CSR file

vim admin-csr.json

{
  "CN": "admin",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:masters",
      "OU": "Kubernetes-manual"
    }
  ]
}

- Generate the certificate

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

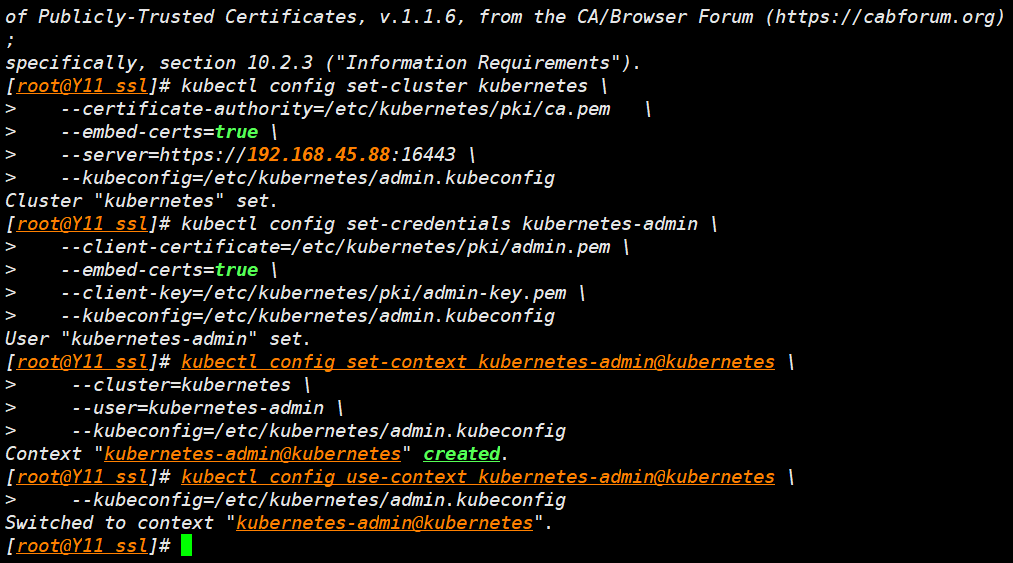

- Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://192.168.45.88:16443 \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

- Set the user credentials

kubectl config set-credentials kubernetes-admin \
  --client-certificate=/etc/kubernetes/pki/admin.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/pki/admin-key.pem \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

- Set the context

kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

- Set the default context

kubectl config use-context kubernetes-admin@kubernetes \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

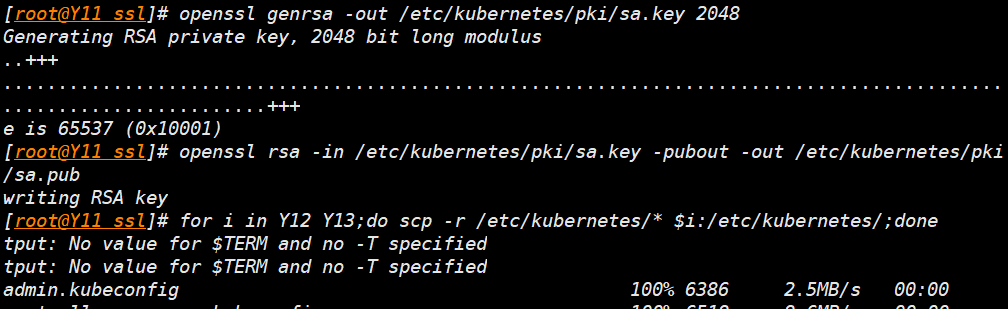

Generate the service-account key pair

- Generate the private key and its public key with openssl

openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

Copy the certificates and kubeconfigs to the other masters

for i in Y12 Y13;do scp -r /etc/kubernetes/* $i:/etc/kubernetes/;done

Deploy the etcd cluster

- Download the binary package

wget https://storage.googleapis.com/etcd/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

- Extract it and copy the binaries to the master nodes

tar -zxvf etcd-v3.4.13-linux-amd64.tar.gz

cd etcd-v3.4.13-linux-amd64

for i in Y11 Y12 Y13;do scp etcd etcdctl $i:/usr/local/bin/;done

- Write the etcd configuration file

vim /etc/etcd/etcd.config.yml

name: 'Y11'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://192.168.45.11:2380'
listen-client-urls: 'https://192.168.45.11:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://192.168.45.11:2380'
advertise-client-urls: 'https://192.168.45.11:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'Y11=https://192.168.45.11:2380,Y12=https://192.168.45.12:2380,Y13=https://192.168.45.13:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false

- Create the etcd systemd unit file

vim /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Service
Documentation=https://coreos.com/etcd/docs/latest/
After=network.target

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
Alias=etcd3.service

- Copy the configuration file and the unit file to the other nodes

for i in Y12 Y13;do scp /etc/etcd/etcd.config.yml $i:/etc/etcd;scp /usr/lib/systemd/system/etcd.service $i:/usr/lib/systemd/system/;done

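- On Y12, edit the configuration file. The first command replaces the IP on every line that does not mention Y11, which keeps the initial-cluster list intact. The second command replaces the node name on every line except the initial-cluster settings.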

[root@Y12 ~]# sed -i '/Y11/! s/192.168.45.11/192.168.45.12/g' /etc/etcd/etcd.config.yml

[root@Y12 ~]# sed -i '/initial-cluster/! s/Y11/Y12/g' /etc/etcd/etcd.config.yml

- On Y13, edit the configuration file the same way

[root@Y13 ~]# sed -i '/Y11/! s/192.168.45.11/192.168.45.13/g' /etc/etcd/etcd.config.yml

[root@Y13 ~]# sed -i '/initial-cluster/! s/Y11/Y13/g' /etc/etcd/etcd.config.yml
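Because sed -i edits the file in place, it can help to preview the result first. The sketch below applies the same two substitutions as the commands above, but reads from stdin and writes to stdout. render_etcd_conf is a hypothetical name, and the patterns assume the Y11 template shown above.

```shell
#!/usr/bin/env bash
# Preview the per-node etcd config with the same two substitutions as the
# sed -i commands above, reading stdin instead of editing the file in place.
#   1) replace the Y11 IP on every line that does NOT mention Y11
#      (so the initial-cluster member list keeps all three IPs);
#   2) replace the name Y11 on every line that is NOT an initial-cluster line.
# Usage: render_etcd_conf NEW_NAME NEW_IP < /etc/etcd/etcd.config.yml
render_etcd_conf() {
  local name=$1 ip=$2
  sed -e "/Y11/! s/192\.168\.45\.11/${ip}/g" \
      -e "/initial-cluster/! s/Y11/${name}/g"
}

# Example: compare Y13's rendered file with the template before running sed -i.
#   render_etcd_conf Y13 192.168.45.13 < /etc/etcd/etcd.config.yml | diff /etc/etcd/etcd.config.yml -
```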

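- Start etcd on all nodes at the same time

- Note: the nodes must be started together so they can form the cluster. A single member cannot reach quorum on its own, so if you start the nodes one at a time, systemctl may wait until the peers come up.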

systemctl daemon-reload

systemctl enable --now etcd

systemctl status etcd

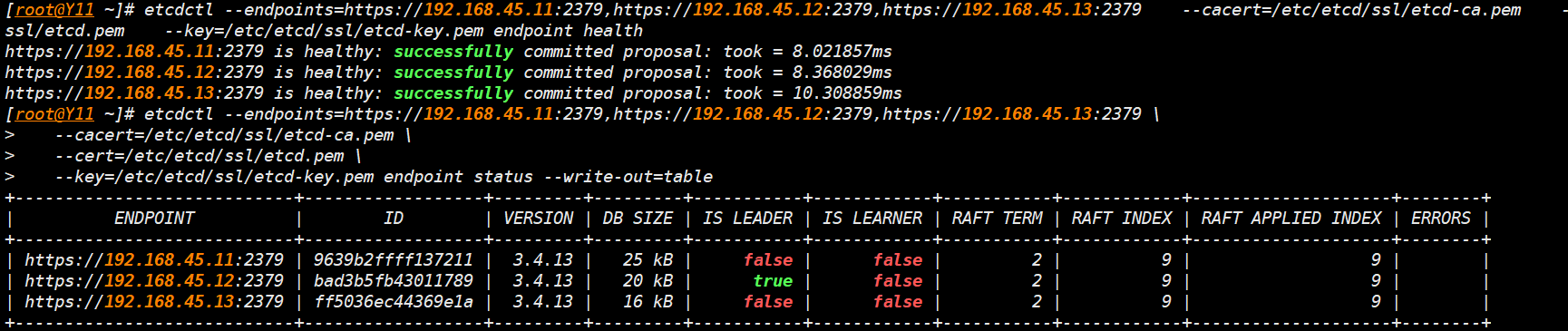

- Cluster check 1: endpoint status

etcdctl --endpoints=https://192.168.45.11:2379,https://192.168.45.12:2379,https://192.168.45.13:2379 \
  --cacert=/etc/etcd/ssl/etcd-ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem endpoint status --write-out=table

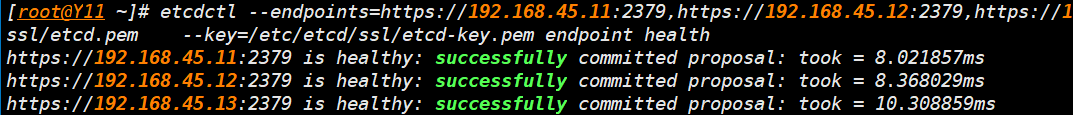

- Cluster check 2: endpoint health

etcdctl --endpoints=https://192.168.45.11:2379,https://192.168.45.12:2379,https://192.168.45.13:2379 \
  --cacert=/etc/etcd/ssl/etcd-ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem endpoint health

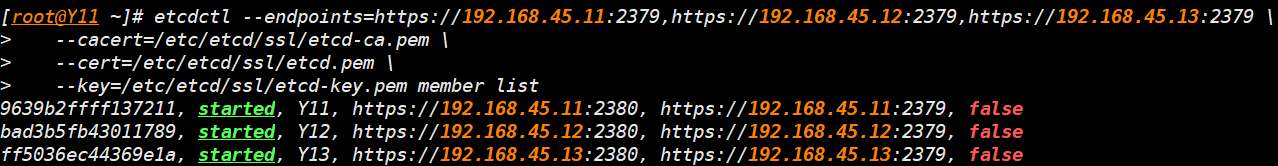

- Cluster check 3: member list

etcdctl --endpoints=https://192.168.45.11:2379,https://192.168.45.12:2379,https://192.168.45.13:2379 \
  --cacert=/etc/etcd/ssl/etcd-ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem member list
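etcdctl (v3) can also read its global flags from environment variables named ETCDCTL_ plus the upper-cased flag name, for example ETCDCTL_ENDPOINTS or ETCDCTL_CACERT. Setting these avoids repeating the TLS flags in every check above. In the sketch below, etcd_endpoints is a hypothetical helper that builds the endpoint list from the master IPs.

```shell
#!/usr/bin/env bash
# Build a comma-separated etcd client endpoint list from node IPs.
etcd_endpoints() {
  local ip list=""
  for ip in "$@"; do
    list+="${list:+,}https://${ip}:2379"   # add a comma before all but the first
  done
  echo "$list"
}

# etcdctl maps each global flag to an ETCDCTL_<FLAG> environment variable.
export ETCDCTL_ENDPOINTS=$(etcd_endpoints 192.168.45.11 192.168.45.12 192.168.45.13)
export ETCDCTL_CACERT=/etc/etcd/ssl/etcd-ca.pem
export ETCDCTL_CERT=/etc/etcd/ssl/etcd.pem
export ETCDCTL_KEY=/etc/etcd/ssl/etcd-key.pem

# With these set, the three checks shorten to:
#   etcdctl endpoint status --write-out=table
#   etcdctl endpoint health
#   etcdctl member list
```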

Deploy the services on the master nodes

- Create the api-server systemd unit

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-apiserver \
  --v=2 \
  --logtostderr=true \
  --allow-privileged=true \
  --bind-address=0.0.0.0 \
  --secure-port=6443 \
  --insecure-port=0 \
  --advertise-address=192.168.45.11 \
  --service-cluster-ip-range=10.96.0.0/16 \
  --service-node-port-range=30000-32767 \
  --etcd-servers=https://192.168.45.11:2379,https://192.168.45.12:2379,https://192.168.45.13:2379 \
  --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
  --client-ca-file=/etc/kubernetes/pki/ca.pem \
  --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
  --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
  --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
  --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
  --service-account-key-file=/etc/kubernetes/pki/sa.pub \
  --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \
  --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
  --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
  --authorization-mode=Node,RBAC \
  --enable-bootstrap-token-auth=true \
  --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
  --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
  --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
  --requestheader-allowed-names=front-proxy-client \
  --requestheader-group-headers=X-Remote-Group \
  --requestheader-extra-headers-prefix=X-Remote-Extra- \
  --requestheader-username-headers=X-Remote-User
# --token-auth-file=/etc/kubernetes/token.csv
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target

- Copy the unit file to the other nodes

for i in Y12 Y13;do scp /usr/lib/systemd/system/kube-apiserver.service $i:/usr/lib/systemd/system/; done

- Edit the unit file on the other nodes: replace the local IP on every line except the etcd-servers line

[root@Y12 ~]# sed -i '/etcd-servers/! s/192.168.45.11/192.168.45.12/g' /usr/lib/systemd/system/kube-apiserver.service

[root@Y13 ~]# sed -i '/etcd-servers/! s/192.168.45.11/192.168.45.13/g' /usr/lib/systemd/system/kube-apiserver.service

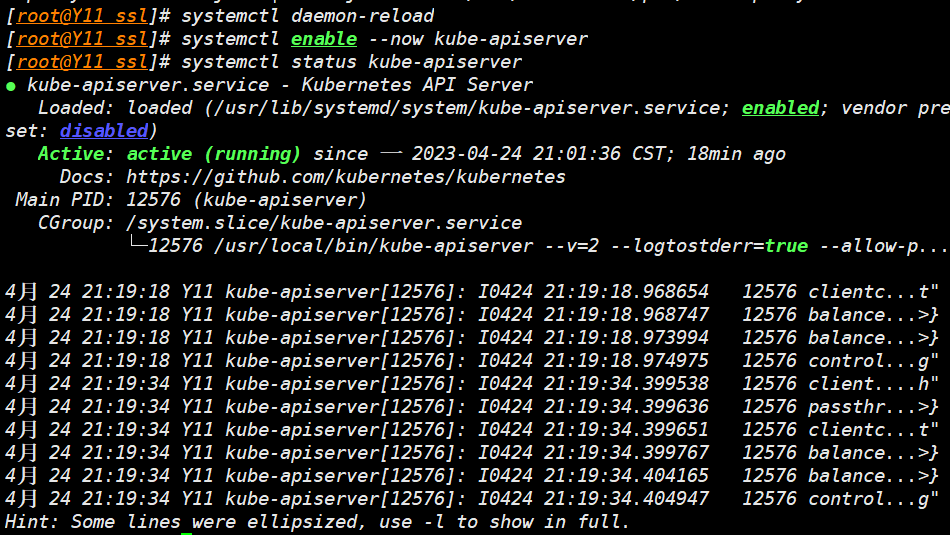

- Start api-server

systemctl daemon-reload

systemctl enable --now kube-apiserver

systemctl status -l kube-apiserver


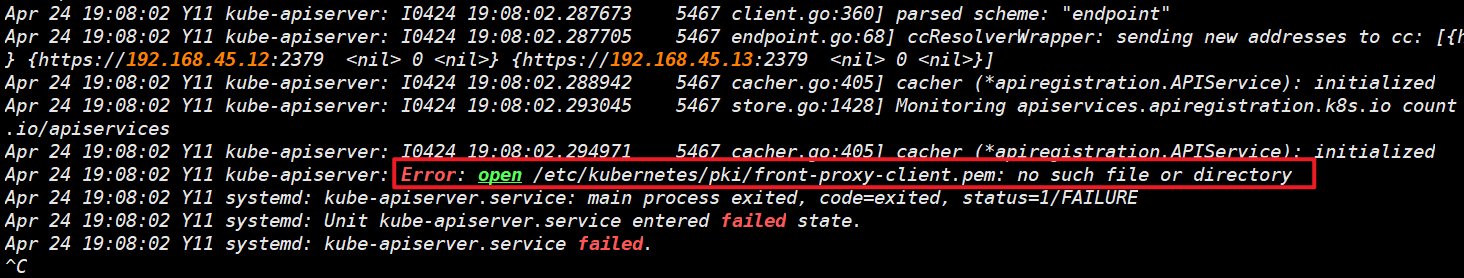

Error 1

- Missing certificate: generate the [[2.1-K8S-二进制部署#front-proxy 证书|front-proxy]] certificate.

- Restart the service for the change to take effect.

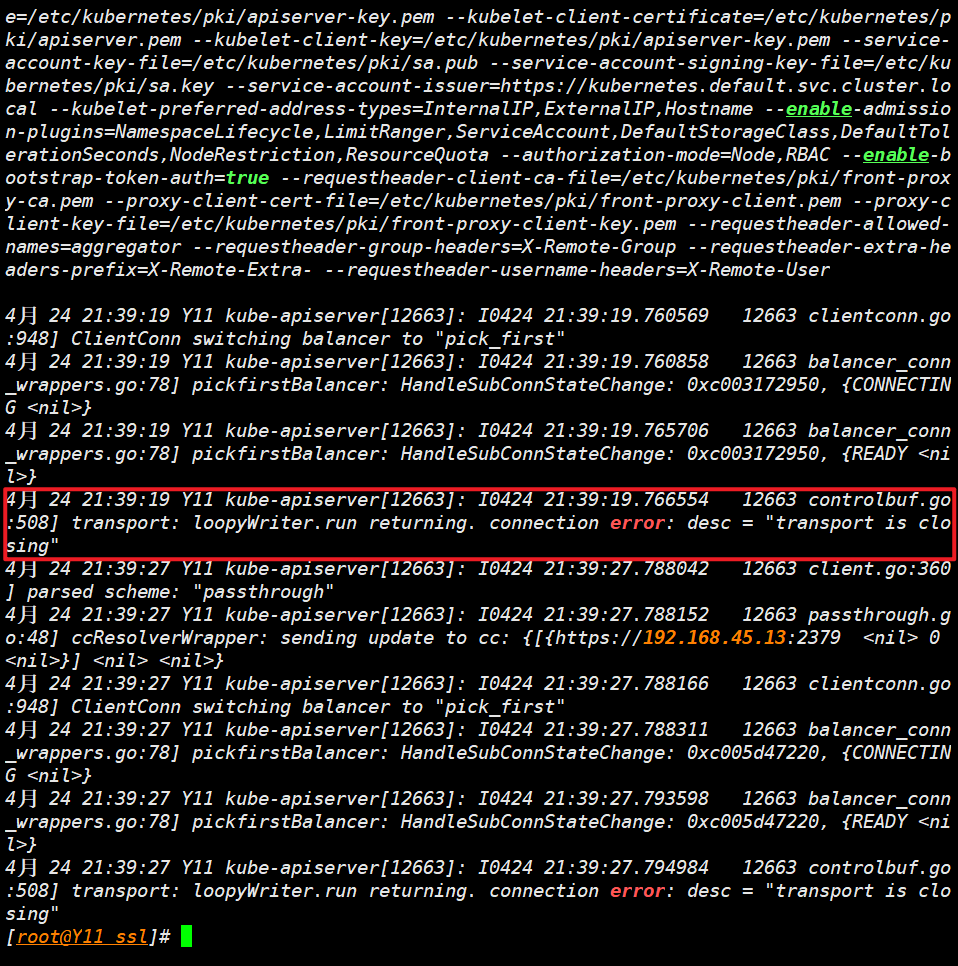

Error 2 (normally harmless)

controlbuf.go: 508] transport: loopyWriter.run returning. connection error: desc = "transport is closing"

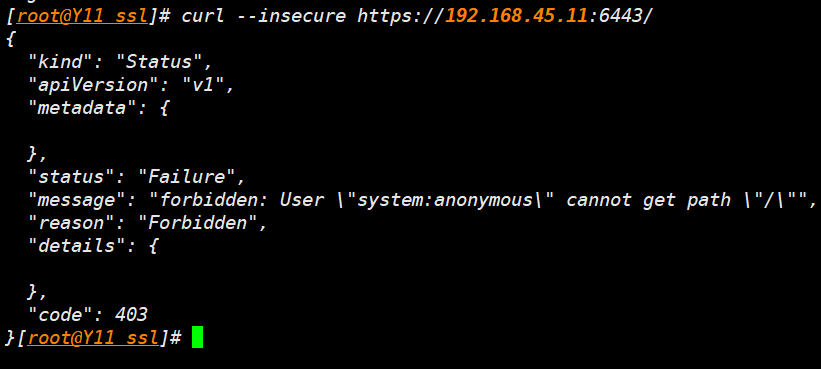

Test the API connection

curl --insecure https://192.168.45.11:6443/

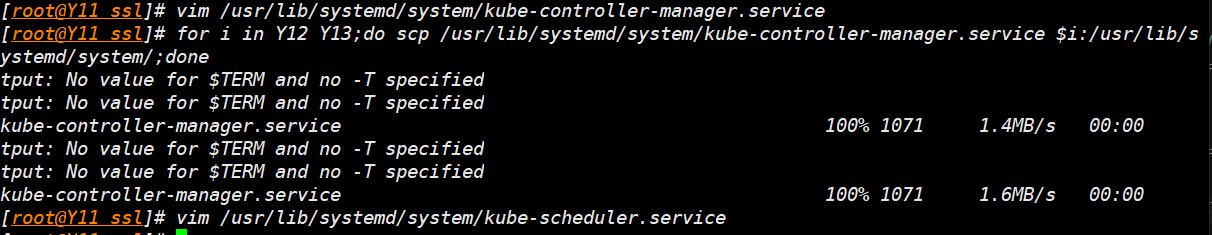

Deploy the Controller Manager

- Create the systemd unit file

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \
  --v=2 \
  --logtostderr=true \
  --bind-address=127.0.0.1 \
  --root-ca-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
  --service-account-private-key-file=/etc/kubernetes/pki/sa.key \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \
  --leader-elect=true \
  --use-service-account-credentials=true \
  --node-monitor-grace-period=40s \
  --node-monitor-period=5s \
  --pod-eviction-timeout=2m0s \
  --controllers=*,bootstrapsigner,tokencleaner \
  --allocate-node-cidrs=true \
  --cluster-cidr=172.168.0.0/16 \
  --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
  --node-cidr-mask-size=24
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target

- Copy it to the other nodes

for i in Y12 Y13;do scp /usr/lib/systemd/system/kube-controller-manager.service $i:/usr/lib/systemd/system/;done

- Start the service on all master nodes

systemctl daemon-reload

systemctl enable --now kube-controller-manager

systemctl status kube-controller-manager

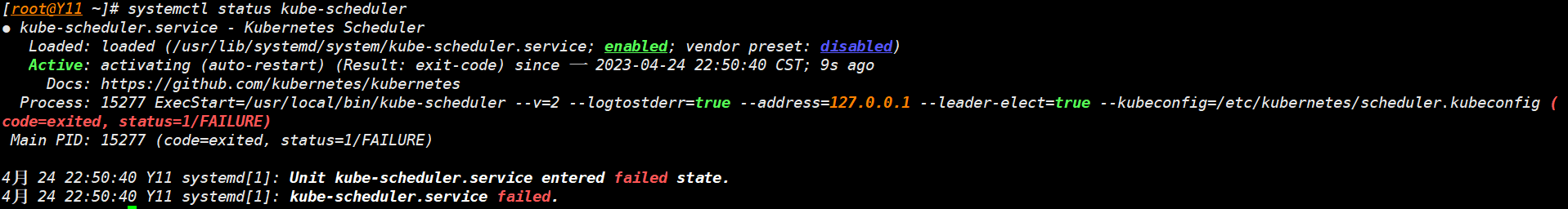
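--cluster-cidr is the Pod network (172.168.0.0/16). It must not overlap --service-cluster-ip-range (10.96.0.0/16) or the node network. Below is a pure-Bash sketch of an IPv4 overlap check. The helper names are hypothetical, and the node network 192.168.45.0/24 is an assumption based on the node IPs in this guide. Separately, note that 172.168.0.0/16 falls outside the RFC 1918 private block 172.16.0.0/12, so Pods may be unable to reach real public hosts in that range. A private range avoids this issue.

```shell
#!/usr/bin/env bash
# Pure-Bash IPv4 CIDR overlap check (no external tools).
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address of a CIDR as integers: "start end".
cidr_range() {
  local bits=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  local start=$(( $(ip_to_int "${1%/*}") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Two ranges overlap when each one starts before the other one ends.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

POD_CIDR=172.168.0.0/16                         # --cluster-cidr
for other in 10.96.0.0/16 192.168.45.0/24; do   # service CIDR, node network (assumed /24)
  if cidrs_overlap "$POD_CIDR" "$other"; then
    echo "conflict: $POD_CIDR overlaps $other"
  else
    echo "ok: $POD_CIDR does not overlap $other"
  fi
done
```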

Deploy the Scheduler

- Create the systemd unit file

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-scheduler \
  --v=2 \
  --logtostderr=true \
  --address=127.0.0.1 \
  --leader-elect=true \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target

- Copy it to the other nodes

for i in Y12 Y13;do scp /usr/lib/systemd/system/kube-scheduler.service $i:/usr/lib/systemd/system/;done

- Start the service on all master nodes

systemctl daemon-reload

systemctl enable --now kube-scheduler

systemctl status kube-scheduler

- An error occurred here; regenerating the certificate and restarting the service resolved it.

Create the bootstrap configuration

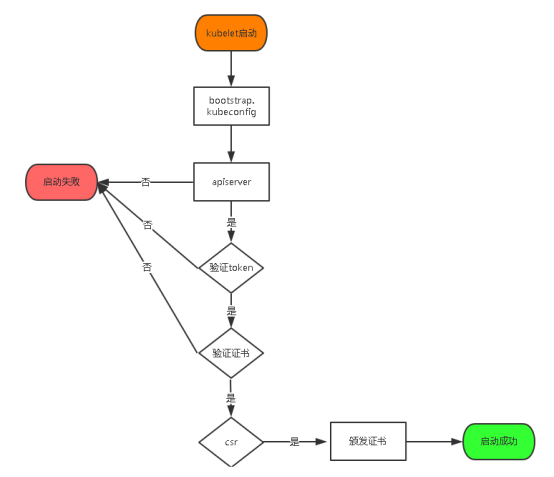

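Concept

- When installing Kubernetes from binaries, the kubelet on every worker node needs its own certificate.

- Because there may be many worker nodes, generating kubelet certificates by hand is tedious.

- The solution: Kubernetes provides TLS bootstrapping to simplify generating kubelet certificates.

- How it works: a bootstrap token is provided in advance, and the kubelet uses that token to call the kube-apiserver certificate-signing API and obtain the certificate it needs.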

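Workflow

- Create a bootstrap token in kube-apiserver.

- Write the bootstrap token into a kubeconfig file, which the kubelet uses as its client credential when calling kube-apiserver.

- Pass this bootstrap kubeconfig to the kubelet process with the --bootstrap-kubeconfig flag.

- The kubelet uses the bootstrap token to call the kube-apiserver API and obtain the certificates it needs.

- Once the certificate is issued, the kubelet writes a regular kubeconfig (the --kubeconfig path). From then on it uses the issued certificate instead of the bootstrap token when communicating with kube-apiserver, which limits the token's exposure.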

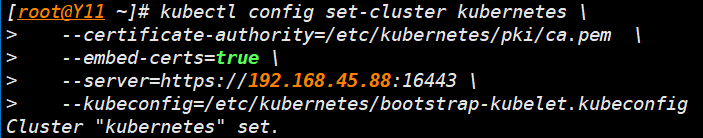

Deployment

- Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://192.168.45.88:16443 \
  --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

- Set the user credentials

kubectl config set-credentials tls-bootstrap-token-user --token=c8ad9c.2e4d610cf3e7426e --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

- Set the context

kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes --user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

- Set the default context

kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

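- Configure kubectl on the master nodes: use admin.kubeconfig as /root/.kube/config, then copy it to the other masters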

mkdir -p /root/.kube;cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

for i in Y12 Y13;do ssh $i mkdir -p /root/.kube;scp /root/.kube/config $i:/root/.kube/;done

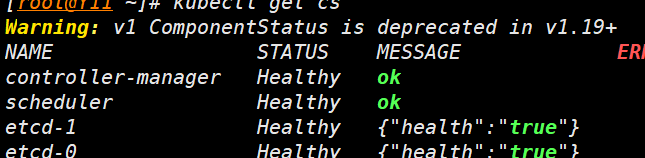

- Check the component status

kubectl get cs
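The set-credentials step above hardcodes the token c8ad9c.2e4d610cf3e7426e. Whatever token you use must match token-id and token-secret in bootstrap.secret.yaml, and the Secret must be named bootstrap-token-<token-id> in kube-system. Because this token has been published, you may prefer to generate a fresh one. The Bash sketch below produces a token in the required [a-z0-9]{6}.[a-z0-9]{16} format. The rand_chars helper is hypothetical. If kubeadm is available, kubeadm token generate produces a token in the same format.

```shell
#!/usr/bin/env bash
# Generate a bootstrap token "<token-id>.<token-secret>" in the format
# Kubernetes requires: [a-z0-9]{6}\.[a-z0-9]{16}.
rand_chars() {
  local out=""
  while [ "${#out}" -lt "$1" ]; do
    # Read a fixed 64 random bytes and keep only [a-z0-9]; repeat if short.
    out+=$(head -c 64 /dev/urandom | LC_ALL=C tr -dc 'a-z0-9')
  done
  echo "${out:0:$1}"
}

TOKEN_ID=$(rand_chars 6)
TOKEN_SECRET=$(rand_chars 16)
BOOTSTRAP_TOKEN="${TOKEN_ID}.${TOKEN_SECRET}"

echo "token:       ${BOOTSTRAP_TOKEN}"          # value for set-credentials --token
echo "secret name: bootstrap-token-${TOKEN_ID}"  # metadata.name, namespace kube-system
```

Substitute TOKEN_ID and TOKEN_SECRET into both the kubeconfig step and the Secret, so that the kubelet's credentials match what kube-apiserver validates.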

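Configure kubectl command completion

- The bash-completion package provides completion for many commands.

- Configure it on the control-plane nodes; here it is configured only on Y11.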

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

kubectl completion bash > ~/.kube/completion.bash.inc

source '/root/.kube/completion.bash.inc'

source $HOME/.bash_profile

- Load it automatically in every new shell

echo "source <(kubectl completion bash)" >> ~/.bashrc

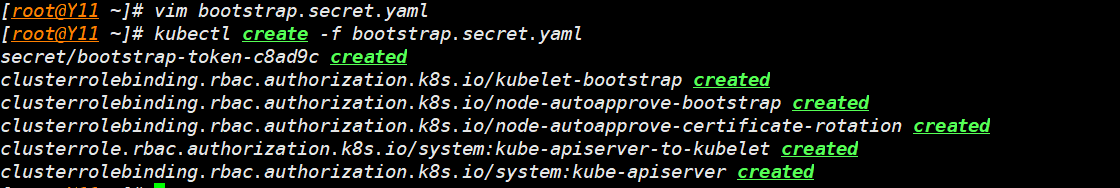

Create the Secret and RBAC resources for automatic node authentication

vim bootstrap.secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-c8ad9c
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: c8ad9c
  token-secret: 2e4d610cf3e7426e
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kube-apiserver

kubectl create -f bootstrap.secret.yaml

Worker node deployment

Node authentication flow diagram

Deploy kubelet on all nodes

- Copy the etcd, CA, and front-proxy certificates to the worker nodes

for i in Y14 Y15;do ssh $i mkdir -p /etc/etcd/ssl /etc/kubernetes/pki/;scp /etc/etcd/ssl/{etcd-ca.pem,etcd.pem,etcd-key.pem} $i:/etc/etcd/ssl;scp /etc/kubernetes/pki/{ca.pem,ca-key.pem,front-proxy-ca.pem} $i:/etc/kubernetes/pki/;done

- Copy bootstrap-kubelet.kubeconfig to the other nodes

for i in Y12 Y13 Y14 Y15;do scp /etc/kubernetes/bootstrap-kubelet.kubeconfig $i:/etc/kubernetes/ ;done

- On all nodes, create the kubelet systemd unit

vim /usr/lib/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
ExecStart=/usr/local/bin/kubelet
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

- On all nodes, create the 10-kubeadm.conf drop-in that supplies the kubelet's startup flags

mkdir /etc/systemd/system/kubelet.service.d/

vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"
Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"
Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1"
Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' "
ExecStart=
ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

- On all nodes, create the kubelet configuration file

vim /etc/kubernetes/kubelet-conf.yml

apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s

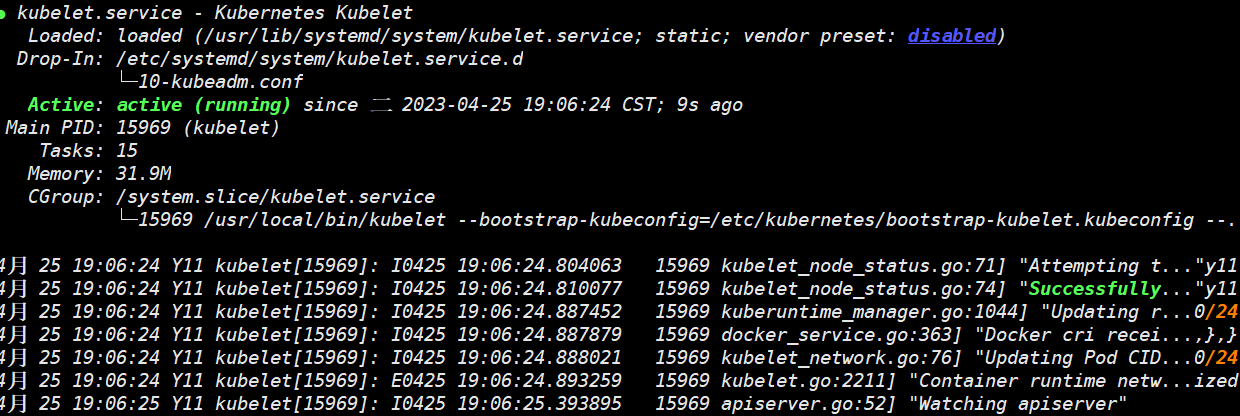

- Start the service on all nodes

systemctl daemon-reload

systemctl enable --now kubelet

systemctl status kubelet

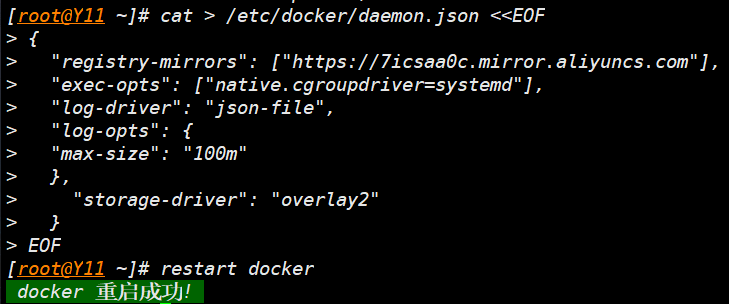

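Error #problem

- Cause: the kubelet's cgroup driver does not match Docker's, so the kubelet cannot run containers

"Failed to run kubelet" err="failed to run Kubelet: misconfiguration: kubelet cgroup driver: "systemd" is different from docker cgroup driver: "cgroupfs""

- How it was resolved:

- First check the network

- Then check Docker's status

- Restarting Docker showed that it failed to start

- Move the configuration file aside and restart to test

cp /etc/docker/daemon.json /etc/docker/daemon.json.bak

rm -rf /etc/docker/daemon.json

- Docker now starts, so the problem is in this configuration file; rewrite it with the correct settings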

cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://7icsaa0c.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

- Start Docker again; it now starts successfully

- Start the kubelet again; it now starts successfully

- Copy the Docker configuration file to the other nodes

for i in Y12 Y13 Y14 Y15;do scp /etc/docker/daemon.json $i:/etc/docker/daemon.json;done

- Restart the kubelet; this resolves the problem
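The error above occurs because cgroupDriver: systemd in kubelet-conf.yml does not match Docker's driver. On a running host, docker info --format '{{.CgroupDriver}}' reports Docker's driver. The sketch below instead reads the driver from daemon.json, so you can check it before restarting anything. It assumes the driver is set through exec-opts, as above. When no driver is set, it reports cgroupfs, which is Docker's usual default on cgroup v1 hosts; newer Docker versions on cgroup v2 hosts may default to systemd. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Read the cgroup driver configured in a Docker daemon.json.
# Assumption: it is set via "native.cgroupdriver=<name>" in exec-opts;
# if absent, report "cgroupfs" (Docker's usual default on cgroup v1 hosts).
docker_cgroup_driver() {
  local driver
  driver=$(grep -o 'native\.cgroupdriver=[a-z]*' "$1" 2>/dev/null | head -n 1 | cut -d= -f2)
  echo "${driver:-cgroupfs}"
}

# Compare with the kubelet setting (cgroupDriver in kubelet-conf.yml).
check_cgroup_drivers() {
  local docker_drv kubelet_drv
  docker_drv=$(docker_cgroup_driver "$1")
  kubelet_drv=$(awk '/^cgroupDriver:/ {print $2}' "$2")
  if [ "$docker_drv" = "$kubelet_drv" ]; then
    echo "ok: both use $docker_drv"
  else
    echo "mismatch: docker=$docker_drv kubelet=$kubelet_drv"
    return 1
  fi
}

# Example: check_cgroup_drivers /etc/docker/daemon.json /etc/kubernetes/kubelet-conf.yml
```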

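- If the kubelet starts on the master nodes but not on the worker nodes #problem

- Check that the binaries were copied

- Check that the configuration files and directories are complete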

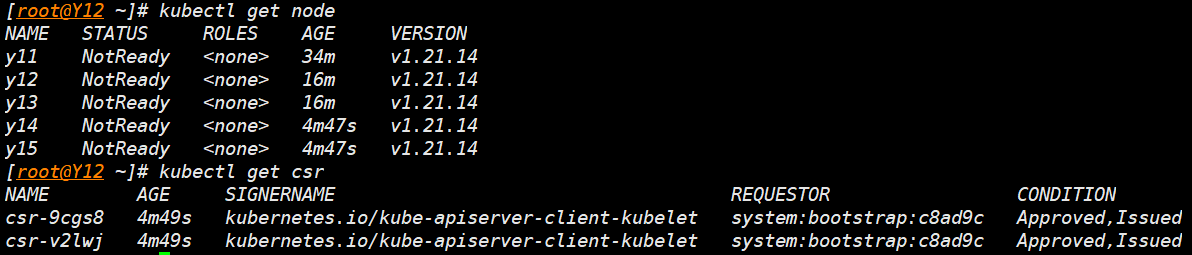
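One quick way to run the two checks above on a worker node is to test for the files this guide places there. The list below is an assumption based on the paths used in earlier steps, so adjust it to your layout. missing_files is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Files a worker node needs for the kubelet, per the paths used in this guide.
REQUIRED_NODE_FILES=(
  /usr/local/bin/kubelet
  /etc/kubernetes/pki/ca.pem
  /etc/kubernetes/bootstrap-kubelet.kubeconfig
  /etc/kubernetes/kubelet-conf.yml
  /usr/lib/systemd/system/kubelet.service
  /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
)

# Print each missing path; return non-zero if anything is missing.
# An optional first argument is a root prefix (useful for testing).
missing_files() {
  local root=${1:-} f rc=0
  for f in "${REQUIRED_NODE_FILES[@]}"; do
    if [ ! -e "${root}${f}" ]; then
      echo "missing: ${f}"
      rc=1
    fi
  done
  return "$rc"
}

# Example, on a worker node:  missing_files || echo "copy the files listed above first"
```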

Check the cluster

kubectl get node

kubectl get csr

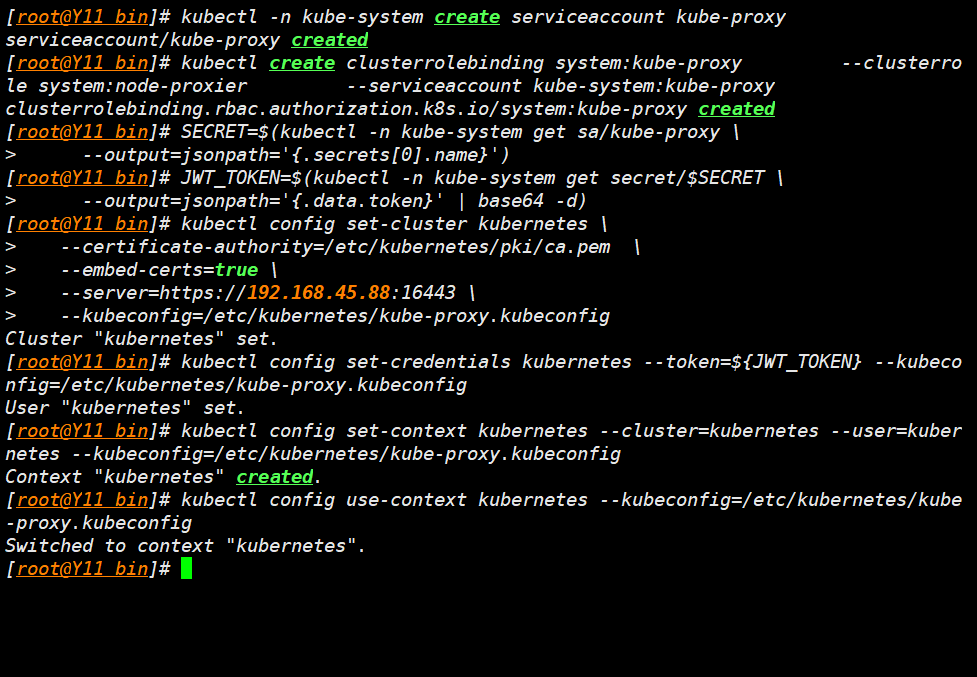

kube-proxy deployment

- Note: the following steps are performed on a master node, and the results are then copied to the other nodes

- Here the operating host is Y11

- Create the ServiceAccount

kubectl -n kube-system create serviceaccount kube-proxy

- Bind the role

kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy

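- Get the ServiceAccount token. This reads the token Secret that is created automatically for the ServiceAccount; Kubernetes 1.24 and later no longer create that Secret automatically.

SECRET=$(kubectl -n kube-system get sa/kube-proxy \
  --output=jsonpath='{.secrets[0].name}')

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \
  --output=jsonpath='{.data.token}' | base64 -d)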
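To confirm that JWT_TOKEN belongs to the kube-proxy ServiceAccount, decode the token's payload, which is the middle, base64url-encoded part of the JWT. Its sub claim should read system:serviceaccount:kube-system:kube-proxy. Below is a Bash sketch. jwt_payload is a hypothetical helper, and it only decodes the token; it does not verify the signature.

```shell
#!/usr/bin/env bash
# Decode the payload (second dot-separated part) of a JWT.
# JWTs use unpadded base64url, so map the alphabet back to standard base64
# and restore "=" padding before decoding. This does NOT verify the signature.
jwt_payload() {
  local p
  p=$(cut -d. -f2 <<< "$1" | tr '_-' '/+')
  while (( ${#p} % 4 )); do p+="="; done
  base64 -d <<< "$p"
}

# Usage after the step above:
#   jwt_payload "$JWT_TOKEN"; echo
# and look for "sub":"system:serviceaccount:kube-system:kube-proxy".
```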

- Write the cluster parameters into the kube-proxy kubeconfig

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://192.168.45.88:16443 \
  --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

- Set the credentials using the token

kubectl config set-credentials kubernetes --token=${JWT_TOKEN} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

- Write the context into the kubeconfig

kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

- Switch to the context

kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

- Create the kube-proxy configuration file (a KubeProxyConfiguration that points at the kubeconfig created above)

vim /etc/kubernetes/kube-proxy.conf

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.168.0.0/16
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms

- Create the kube-proxy systemd unit

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.conf \
  --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target

- Distribute the files to all nodes

for i in Y11 Y12 Y13 Y14 Y15 ;do scp /etc/kubernetes/kube-proxy.kubeconfig $i:/etc/kubernetes/;scp /etc/kubernetes/kube-proxy.conf $i:/etc/kubernetes/kube-proxy.conf;scp /usr/lib/systemd/system/kube-proxy.service $i:/usr/lib/systemd/system/kube-proxy.service;done

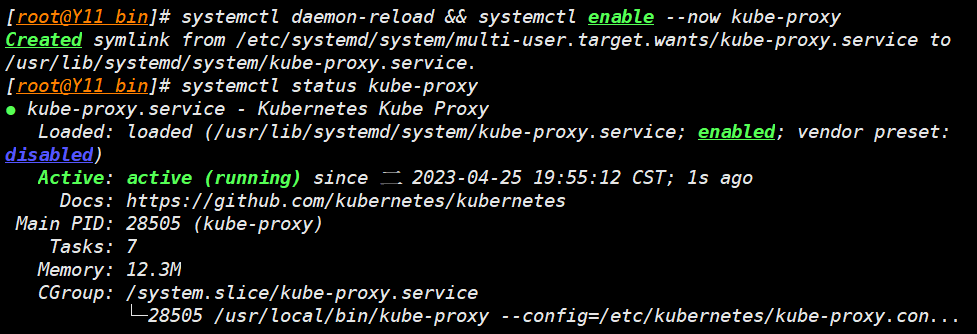

Start kube-proxy on all nodes

systemctl daemon-reload && systemctl enable --now kube-proxy

systemctl status kube-proxy

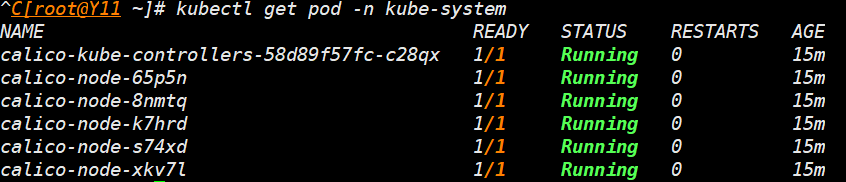

Install the Calico network for K8S

- For the procedure, see: [[2-K8S kubeadm 部署#使用calico安装k8s网络|Calico network deployment]]

wget https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico-etcd.yaml

- Apply the manifest once first so the images start pulling early

kubectl apply -f calico-etcd.yaml

- Edit the manifest: set the etcd endpoints

sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.45.11:2379,https://192.168.45.12:2379,https://192.168.45.13:2379"#g' calico-etcd.yaml

- Base64-encode the certificates and store them in variables

ETCD_CA=`cat /etc/kubernetes/pki/etcd/etcd-ca.pem | base64 | tr -d '\n'`

ETCD_CERT=`cat /etc/kubernetes/pki/etcd/etcd.pem | base64 | tr -d '\n'`

ETCD_KEY=`cat /etc/kubernetes/pki/etcd/etcd-key.pem | base64 | tr -d '\n'`

- Add the certificates to the manifest

sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

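- Configure the Pod CIDR (it should match --cluster-cidr in kube-controller-manager). In the manifest the commented value line is "#   value:" with three spaces, so the pattern includes them to match and keep the YAML indentation.

POD_SUBNET="172.168.0.0/16"

sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@#   value: "192.168.0.0/16"@  value: '"${POD_SUBNET}"'@g' calico-etcd.yaml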

- Apply the manifest again

kubectl apply -f calico-etcd.yaml

- Check the pods and wait for the images to finish pulling

kubectl get pod -n kube-system
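This guide sets the Pod CIDR in three places: --cluster-cidr in kube-controller-manager.service, clusterCIDR in kube-proxy.conf, and CALICO_IPV4POOL_CIDR in calico-etcd.yaml. The sketch below extracts all three values and reports whether they agree. The extraction patterns are assumptions based on the file layouts shown in this guide, and the function names are hypothetical. An empty value (shown as "?") usually means a sed edit did not match.

```shell
#!/usr/bin/env bash
# Compare the Pod CIDR set in the three places this guide configures it.
pod_cidr_from_unit() {   # kube-controller-manager.service: --cluster-cidr=...
  grep -o -- '--cluster-cidr=[0-9./]*' "$1" | head -n 1 | cut -d= -f2
}
pod_cidr_from_proxy() {  # kube-proxy.conf: clusterCIDR: ...
  awk '/^clusterCIDR:/ {print $2}' "$1"
}
pod_cidr_from_calico() { # calico-etcd.yaml: the value line after the uncommented entry
  awk '/^[ \t]*- name: CALICO_IPV4POOL_CIDR/ {getline; print $2}' "$1" | tr -d '"'
}

check_pod_cidr() {
  local a b c
  a=$(pod_cidr_from_unit "$1")
  b=$(pod_cidr_from_proxy "$2")
  c=$(pod_cidr_from_calico "$3")
  echo "controller-manager=${a:-?} kube-proxy=${b:-?} calico=${c:-?}"
  [ -n "$a" ] && [ "$a" = "$b" ] && [ "$a" = "$c" ]
}

# Example:
#   check_pod_cidr /usr/lib/systemd/system/kube-controller-manager.service \
#     /etc/kubernetes/kube-proxy.conf calico-etcd.yaml && echo "Pod CIDR is consistent"
```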

Install CoreDNS

vim coredns.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.10
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
```

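- Key field: clusterIP sets the fixed cluster IP of the kube-dns Service. It must fall inside the Service CIDR and must match the cluster DNS address (clusterDNS) configured for kubelet.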

clusterIP: 10.96.0.10
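Pods take their nameserver from kubelet's clusterDNS setting. A mismatch with this clusterIP therefore breaks in-cluster name resolution even when the CoreDNS Pods look healthy. The sketch below compares the two values with awk. The helper names are hypothetical. The kubelet config path is passed in as an argument because it depends on how kubelet was configured earlier. The sketch assumes the block-list form (`clusterDNS:` followed by `- 10.96.0.10`); the inline `[...]` form is not handled.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are hypothetical. Assumes the kubelet config
# lists clusterDNS as a block sequence ("clusterDNS:" then "- <ip>").

# Print the first clusterIP value found in a Service manifest.
dns_ip_from_manifest() {
  awk '$1 == "clusterIP:" { print $2; exit }' "$1"
}

# Print the first entry under clusterDNS in a kubelet config file.
dns_ip_from_kubelet() {
  awk '$1 == "clusterDNS:" { getline; sub(/^[ \t]*-[ \t]*/, ""); sub(/[ \t\r]+$/, ""); print; exit }' "$1"
}

# Compare the two; non-zero exit on mismatch or if either value is missing.
check_dns_ip() {
  local svc kubelet
  svc=$(dns_ip_from_manifest "$1")
  kubelet=$(dns_ip_from_kubelet "$2")
  if [ -n "$svc" ] && [ "$svc" = "$kubelet" ]; then
    echo "OK: $svc"
  else
    echo "MISMATCH: Service=$svc kubelet=$kubelet" >&2
    return 1
  fi
}
```

For example, `check_dns_ip coredns.yaml <path-to-kubelet-config>`, where the second argument is a placeholder for your kubelet configuration file.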

- Install

kubectl create -f coredns.yaml

- Check the Pods

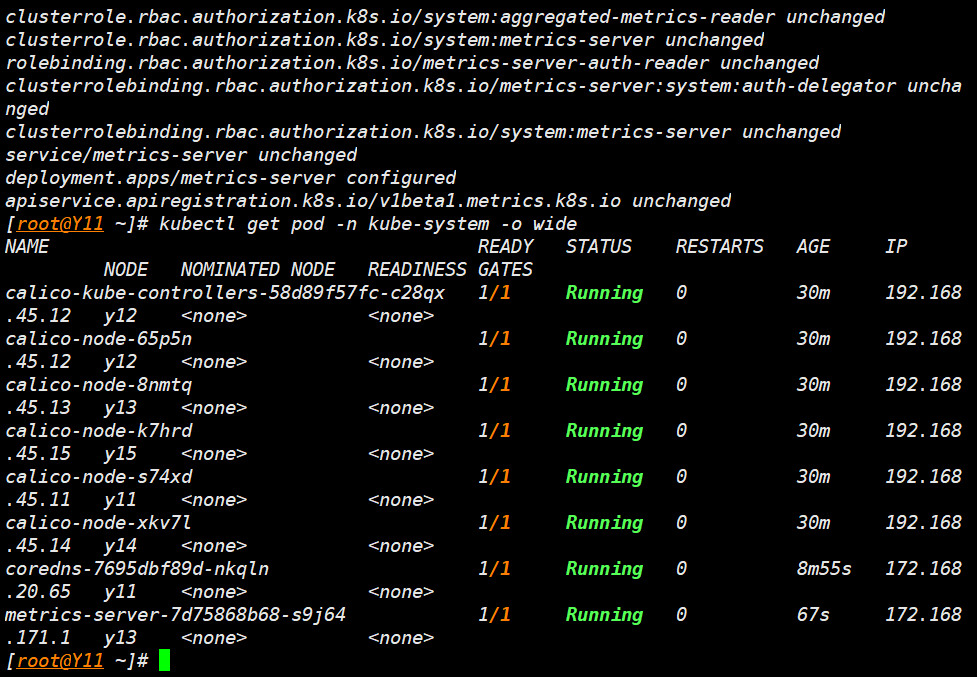

kubectl get pod -n kube-system -o wide
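Running Pods do not by themselves prove that name resolution works. A common smoke test is to resolve the kubernetes Service from a throwaway Pod. The sketch below assumes the `busybox:1.28` image can be pulled; that tag is commonly pinned because nslookup in some later busybox releases behaves differently. The function name and the Pod name `dns-test` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: check_cluster_dns and the Pod name dns-test are hypothetical.
# Starts a temporary busybox Pod, resolves a name through CoreDNS, and
# deletes the Pod once the command exits (--rm with -i).
check_cluster_dns() {
  local name="${1:-kubernetes.default.svc.cluster.local}"
  kubectl run dns-test --rm -i --restart=Never --image=busybox:1.28 \
    -- nslookup "$name"
}
```

If CoreDNS is working, the output should list 10.96.0.10 as the server and return an address for the kubernetes Service.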

Deploy Metrics Server

- For reference, see [[2-K8S kubeadm 部署#Metrics Server 部署|Metrics Server 部署]]

vim components.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --metric-resolution=30s
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem
        - --requestheader-username-headers=X-Remote-User
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        image: registry.cn-shenzhen.aliyuncs.com/zengfengjin/metrics-server:v0.5.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
        - name: ca-ssl
          mountPath: /etc/kubernetes/pki
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
      - name: ca-ssl
        hostPath:
          path: /etc/kubernetes/pki
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```
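The ca-ssl volume is a hostPath. As a result, `--requestheader-client-ca-file` is read from /etc/kubernetes/pki on whichever node the metrics-server Pod is scheduled to, so front-proxy-ca.pem has to exist on every node that can run it. A minimal pre-flight sketch follows. It assumes key-based SSH to each host (Y11 through Y15 in this setup), and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: check_front_proxy_ca is a hypothetical helper; assumes
# key-based SSH to each host passed as an argument.
check_front_proxy_ca() {
  local ca=/etc/kubernetes/pki/front-proxy-ca.pem host status=0
  for host in "$@"; do
    # `test -s` succeeds only if the file exists and is non-empty.
    if ssh "$host" test -s "$ca"; then
      echo "$host: ok"
    else
      echo "$host: missing $ca" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_front_proxy_ca Y11 Y12 Y13 Y14 Y15`.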

- Deploy and check

kubectl apply -f components.yaml

kubectl get pod -n kube-system -o wide

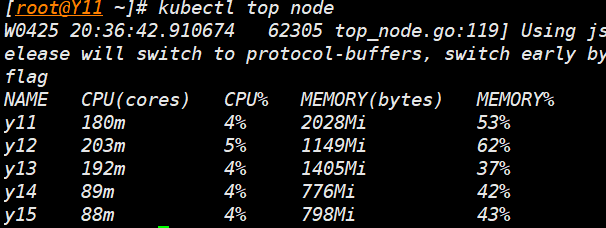

- View the collected metrics

kubectl top node
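If `kubectl top node` returns an error instead of figures, a usual first check is whether the aggregated API registered by the APIService object above is Available. A sketch with a hypothetical function name, using kubectl's JSONPath filter syntax:

```shell
#!/usr/bin/env bash
# Sketch only: check_metrics_api is a hypothetical helper.
# Prints the APIService's Available condition and, if it is True,
# queries the aggregated metrics endpoint directly.
check_metrics_api() {
  local status
  status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')
  echo "v1beta1.metrics.k8s.io Available=${status:-unknown}"
  [ "$status" = "True" ] || return 1
  kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes
}
```

When the status is not True, `kubectl describe apiservice v1beta1.metrics.k8s.io` usually shows the reason, such as a failed connection to the metrics-server Service.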

- Done: the basic deployment is complete.

Summary

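- A binary deployment involves many separately installed components and requires certificates for mutual (two-way) TLS authentication.

- The early steps center on the control-plane components and the Node components.

- Deploying from binaries gives a deeper understanding of how the components of a K8S cluster relate to each other and how the cluster runs.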

Zhejiang Public Security Network Registration No. 33010602011771
