Overview

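A StorageClass gives administrators a way to describe the "classes" of storage they offer. Different classes might map to different quality-of-service levels or backup policies, or to any other policy defined by the cluster administrators.

Each StorageClass contains the fields provisioner, parameters, and reclaimPolicy, which are used when a PersistentVolume belonging to that class needs to be dynamically provisioned.

The name of a StorageClass object matters: it is how users request a particular class. Administrators set the name and the other parameters when they create the StorageClass object, and the object cannot be updated once it has been created.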
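To make the three fields concrete, here is a minimal sketch of a shell helper that prints a StorageClass manifest. The function name `render_storageclass` is hypothetical; the class name, provisioner name, and the `archiveOnDelete` parameter are the ones used in the NFS manifest later in this post, and `parameters` are always provisioner-specific. When `reclaimPolicy` is left out, Kubernetes defaults it to `Delete`, which the helper mirrors.

```shell
# Print a minimal StorageClass manifest to stdout.
# Arguments: <name> <provisioner> [reclaimPolicy]
# reclaimPolicy defaults to Delete, matching the Kubernetes default.
render_storageclass() {
  sc_name=$1
  sc_provisioner=$2
  sc_reclaim=${3:-Delete}
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${sc_name}
provisioner: ${sc_provisioner}
reclaimPolicy: ${sc_reclaim}
parameters:
  # provisioner-specific; this key is the one used by the NFS example in this post
  archiveOnDelete: "false"
EOF
}
```

For example, `render_storageclass managed-nfs-storage fuseim.pri/ifs | kubectl apply -f -` would create the class. Because the object cannot be updated afterwards, changing one of these fields means deleting the class and creating it again.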

For more details, see: https://kubernetes.io/zh/docs/concepts/storage/storage-classes/

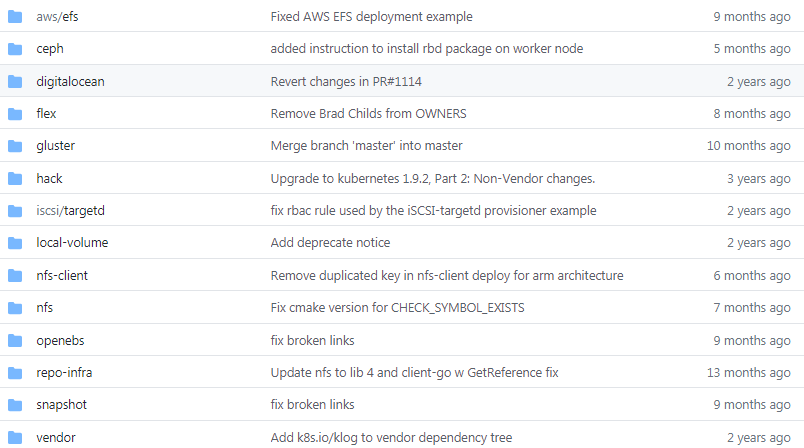

GitHub: https://github.com/kubernetes-retired/external-storage

Example: using an NFS service

    # Install and start the NFS service
    # (nfs-common is the Debian/Ubuntu package name; with yum, nfs-utils is the package to install)
    yum -y install nfs-utils rpcbind
    systemctl start nfs && systemctl enable nfs
    systemctl start rpcbind && systemctl enable rpcbind
    # Create a shared directory
    mkdir -p /data/nfsprovisioner
    chmod 777 /data/nfsprovisioner
    chown nfsnobody /data/nfsprovisioner

    # Export the shared directory
    cat >> /etc/exports <<EOF
    /data/nfsprovisioner *(rw,no_root_squash,no_all_squash,sync)
    EOF

    systemctl restart nfs && systemctl restart rpcbind
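One thing to watch with `cat >> /etc/exports` is that running the setup twice appends the same export twice. The following is a small sketch of an idempotent alternative; the function name `add_export` is hypothetical, and the directory and options are the ones used above.

```shell
# Append an export entry to an exports file only if that exact line is absent.
# Arguments: <exports-file> <directory> <client-spec-with-options>
add_export() {
  exports_file=$1
  export_line="$2 $3"
  # -x matches the whole line, -F treats the entry as a fixed string, not a regex
  if grep -qxF -- "$export_line" "$exports_file" 2>/dev/null; then
    return 0
  fi
  printf '%s\n' "$export_line" >> "$exports_file"
}
```

Usage would look like `add_export /etc/exports /data/nfsprovisioner '*(rw,no_root_squash,no_all_squash,sync)'`, followed by `exportfs -ra` or the service restart shown above so that the change takes effect.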

Deploy nfs-client-provisioner

Note: change the NFS server address and directory to match your environment.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "false"
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              # image: quay.io/external_storage/nfs-client-provisioner:latest
              image: registry.cn-shanghai.aliyuncs.com/leozhanggg/storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                - name: NFS_SERVER
                  value: 10.88.88.108
                - name: NFS_PATH
                  value: /data/nfsprovisioner
          volumes:
            - name: nfs-client-root
              nfs:
                server: 10.88.88.108
                path: /data/nfsprovisioner
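The NFS address and path each appear twice in the manifest (once in the container's env and once in the volume definition), so it is easy to change one and miss the other. As a rough sketch, a helper like the one below rewrites all occurrences in one pass. The function name `set_nfs_target` and the replacement values in the usage note are hypothetical, and the helper assumes the new values contain no `|`, `&`, or `\` characters, since they are pasted into a sed replacement.

```shell
# Print the manifest with the sample NFS server and path replaced everywhere.
# Arguments: <manifest-file> <nfs-server> <nfs-path>
# Assumption: the new values contain no '|', '&' or '\' characters.
set_nfs_target() {
  # Dots in the sample IP are escaped so they only match literal dots.
  sed -e "s|10\.88\.88\.108|$2|g" \
      -e "s|/data/nfsprovisioner|$3|g" "$1"
}
```

For instance, `set_nfs_target nfs-client-provisioner.yaml 192.168.1.10 /srv/nfs/k8s > my-provisioner.yaml` writes an adjusted copy and leaves the original file untouched.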

    [root@ymt108 ~]# kubectl apply -f nfs-client-provisioner.yaml
    storageclass.storage.k8s.io/managed-nfs-storage created
    serviceaccount/nfs-client-provisioner created
    clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
    clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
    role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    deployment.apps/nfs-client-provisioner created
    [root@ymt108 ~]# kubectl get pod |grep nfs-client-provisioner
    nfs-client-provisioner-6d7b4c474c-8xftt   1/1   Running   0   39m
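When scripting this step, the readiness check done by eye above can be automated. The sketch below reads `kubectl get pod` output on stdin and succeeds only if a pod whose name starts with the given prefix is fully ready and Running. The function name `pod_is_ready` is hypothetical, and it assumes the default column layout (NAME, READY, STATUS, ...).

```shell
# Read `kubectl get pod` lines on stdin; succeed if some line whose name
# starts with <prefix> shows READY n/n (n > 0) and STATUS Running.
# Arguments: <pod-name-prefix>
pod_is_ready() {
  awk -v prefix="$1" '
    index($1, prefix) == 1 {
      split($2, ready, "/")
      if (ready[1] == ready[2] && ready[1] > 0 && $3 == "Running") found = 1
    }
    END { exit(found ? 0 : 1) }
  '
}
```

A call such as `kubectl get pod | pod_is_ready nfs-client-provisioner && echo ready` fits into a setup script. `kubectl rollout status deployment/nfs-client-provisioner` is a built-in alternative that waits for the Deployment to finish rolling out.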

Deploy a test Pod

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: test-claim
      annotations:
        # Older annotation form; spec.storageClassName is the current way to select a class
        volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Mi
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: test-pod
    spec:
      containers:
        - name: test-pod
          image: busybox
          command:
            - "/bin/sh"
          args:
            - "-c"
            - "touch /mnt/SUCCESS && exit 0 || exit 1"
          volumeMounts:
            - name: nfs-pvc
              mountPath: "/mnt"
      restartPolicy: "Never"
      volumes:
        - name: nfs-pvc
          persistentVolumeClaim:
            claimName: test-claim

    [root@ymt108 ~]# kubectl apply -f test-pod.yaml
    persistentvolumeclaim/test-claim created
    pod/test-pod created
    [root@ymt108 ~]# cd /data/nfsprovisioner/
    [root@ymt108 nfsprovisioner]# ls
    default-test-claim-pvc-5763d9c1-2860-4aee-a408-c10ef94d252d
    [root@ymt108 nfsprovisioner]#
    [root@ymt108 nfsprovisioner]# kubectl delete -f /root/test-pod.yaml
    persistentvolumeclaim "test-claim" deleted
    pod "test-pod" deleted
    [root@ymt108 nfsprovisioner]# ls
    [root@ymt108 nfsprovisioner]#
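The directory that appeared under the share is named after the claim: namespace, PVC name, and PV name joined with hyphens (`default`, `test-claim`, `pvc-5763d9c1-...` in the listing above). It disappeared again after the delete because the StorageClass sets `archiveOnDelete: "false"`. A tiny sketch for computing the expected name, with the hypothetical function name `provisioned_dir`:

```shell
# Print the directory name the provisioner is expected to create on the share.
# Arguments: <namespace> <pvc-name> <pv-name>
provisioned_dir() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}
```

The PV name bound to a claim can be read with `kubectl get pvc test-claim -o jsonpath='{.spec.volumeName}'`, so `ls "/data/nfsprovisioner/$(provisioned_dir default test-claim "$pv")"` would locate the data for that claim while it exists.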

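Author: Leozhanggg

Source: https://www.cnblogs.com/leozhanggg/p/13611982.html

Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be kept and a link to the original must be shown prominently on the article page; otherwise the author reserves the right to pursue legal liability.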

Zhejiang Public Network Security Registration No. 33010602011771
