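Python Web Scraping: Scraping Tencent Careers with Selenium

Target: the Tencent Careers site

Analysis

The content to scrape is in the div elements nested directly under the outer div with class="recruit-wrap recruit-margin".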

XPath locator:
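As a rough illustration of that locator, the sketch below runs the same path against a small, made-up HTML fragment using only the standard library. The fragment and the posting names are hypothetical, not taken from the real page. ElementTree needs the path to start with `.//`, whereas in the browser or in Selenium it would start with `//`.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for the real page structure.
SAMPLE = """
<html><body>
  <div class="recruit-wrap recruit-margin">
    <div>Posting A</div>
    <div>Posting B</div>
  </div>
  <div class="other"><div>Not a posting</div></div>
</body></html>
""".strip()

root = ET.fromstring(SAMPLE)

# Select the child divs of the outer div whose class attribute matches exactly.
matches = root.findall(".//div[@class='recruit-wrap recruit-margin']/div")
titles = [node.text for node in matches]
print(titles)
```

The class comparison is an exact string match, so the locator stops matching if the site adds or reorders class names on that div.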

Getting started

Import the libraries

Open the URL in an automated browser

Locate the content
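The three steps above (import, open, locate) might look roughly like the sketch below. Several things here are assumptions rather than the original code: the search-page URL, the use of Chrome, the helper name open_and_locate, and the explicit wait, which is added because the listing is presumably rendered by JavaScript after the page loads.

```python
# Assumed search-page URL; the post does not spell it out.
START_URL = "https://careers.tencent.com/search.html"

# Child divs of the outer container described in the analysis.
POSTINGS_XPATH = '//div[@class="recruit-wrap recruit-margin"]/div'


def open_and_locate(url=START_URL, xpath=POSTINGS_XPATH, timeout=10):
    """Open the page in Chrome and return (driver, matching elements)."""
    # Imported inside the function so the constants above can be reused
    # without Selenium installed; top-of-file imports work just as well.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait

    driver = webdriver.Chrome()  # assumes Chrome is installed locally
    driver.get(url)

    # Poll until find_elements returns a non-empty list, or time out.
    elements = WebDriverWait(driver, timeout).until(
        lambda d: d.find_elements(By.XPATH, xpath)
    )
    return driver, elements


if __name__ == "__main__":
    driver, elements = open_and_locate()
    try:
        print(len(elements), "posting blocks found")
    finally:
        driver.quit()
```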

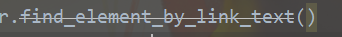

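A note on why the lookup is written this way: why use find_elements() when find_element_by_xpath() looks so much more convenient? Because find_element_by_xpath() is no longer usable. Selenium 4 deprecated the find_element_by_* helpers, so calling one produces a warning telling you to use find_element() or find_elements() instead, and from Selenium 4.3 on the helpers are removed and the call fails outright. Your editor will also strike through the old method names as you type them, as the screenshot shows. find_elements() has the further advantage of returning every match as a list, which is what a page of postings needs.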

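That is why the imports above need one extra line, the one that brings in By from selenium.webdriver.common.by. It stands in for the find_element_by_xpath() family: to get the behaviour of a given helper, pass the matching By constant as the first argument. For example, to do what find_element_by_xpath() used to do, call find_elements() with By.XPATH followed by the XPath string.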
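The correspondence between the old helpers and the By constants is mechanical: the suffix of the old method name, upper-cased, is the constant name. The sketch below spells that out as a small lookup. The helper names by_constant_for and modern_call are hypothetical and exist only for illustration; the block prints the replacement call as text and does not need Selenium to run.

```python
# The eight locator strategies exposed as constants on Selenium's By class.
BY_CONSTANTS = {
    "ID", "NAME", "XPATH", "LINK_TEXT", "PARTIAL_LINK_TEXT",
    "TAG_NAME", "CLASS_NAME", "CSS_SELECTOR",
}


def by_constant_for(legacy_name):
    """Map e.g. 'find_element_by_xpath' to the By constant name 'XPATH'."""
    for prefix in ("find_elements_by_", "find_element_by_"):
        if legacy_name.startswith(prefix):
            constant = legacy_name[len(prefix):].upper()
            if constant in BY_CONSTANTS:
                return constant
    raise ValueError(f"not a legacy locator helper: {legacy_name}")


def modern_call(legacy_name, locator):
    """Return the Selenium 4 style call, as source text, for a legacy helper."""
    plural = legacy_name.startswith("find_elements_by_")
    method = "find_elements" if plural else "find_element"
    return f"driver.{method}(By.{by_constant_for(legacy_name)}, {locator!r})"


print(modern_call("find_element_by_xpath", "//div"))
print(modern_call("find_elements_by_class_name", "recruit-wrap"))
```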

Extract the content
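Extraction then comes down to reading the text attribute of each located element. This is a minimal sketch, assuming the input is the list returned by find_elements(); the helper name extract_texts is hypothetical and not from the original post.

```python
def extract_texts(elements):
    """Return the visible text of each element, skipping empty ones."""
    texts = []
    for element in elements:
        # WebElement.text holds the rendered text of the element and its children.
        text = element.text.strip()
        if text:
            texts.append(text)
    return texts
```

Because the function only reads the text attribute, it works on anything shaped like a WebElement, which makes it easy to try out without a browser.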

Full code

Next, scrape every page

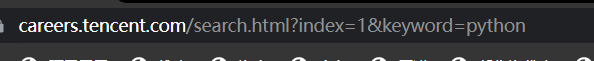

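Analysis

As you can see, the only part of the URL that changes from page to page is the value after index=, so in Selenium it is enough to loop over that value and load each resulting URL.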
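A paging loop along those lines could be sketched as follows. The base URL is an assumption (the post only shows that the value after index= changes), and page_url, visit_pages and the handle_page callback are hypothetical names introduced for illustration.

```python
# Assumed listing URL; only the index= query value is taken from the post.
BASE_URL = "https://careers.tencent.com/search.html"


def page_url(index):
    """Build the URL for one results page."""
    return f"{BASE_URL}?index={index}"


def visit_pages(driver, first_page, last_page, handle_page):
    """Load each page in turn and collect whatever handle_page returns."""
    results = []
    for index in range(first_page, last_page + 1):
        driver.get(page_url(index))
        # handle_page receives the driver once the page has been requested,
        # e.g. a function that locates the postings and extracts their text.
        results.append(handle_page(driver))
    return results
```

Passing the per-page work in as a callback keeps the loop itself down to the single driver.get() call the analysis describes.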
