Kubernetes from Zero to One (Part 3): Installing Addons

Metricbeat (cluster metrics collection)

https://www.elastic.co/guide/en/beats/metricbeat/current/running-on-kubernetes.html

metrics-server (data source for the kubectl top command)

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

ingress(nginx)

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.1/deploy/static/provider/cloud/deploy.yaml

externalTrafficPolicy: Local

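The ingress-nginx manifest enables this option by default so that nginx receives the client's real IP. The side effect is that on a node where no ingress controller pod is scheduled, the forwarding chain for traffic sent directly to that host's IP is empty, so connections stay stuck in the SYN_SENT state.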
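To see whether a node is affected, and to switch the policy if that trade-off is acceptable, something along these lines may help. This is a minimal sketch: the function names `etp_patch` and `set_ingress_etp` are made up for illustration, and the namespace `ingress-nginx` and Service name `ingress-nginx-controller` are assumed to match what the manifest above creates.

```shell
#!/usr/bin/env bash
# Build the JSON patch body for a Service's externalTrafficPolicy.
# Accepts only the two values Kubernetes defines: Local or Cluster.
etp_patch() {
  case "$1" in
    Local|Cluster) printf '{"spec":{"externalTrafficPolicy":"%s"}}\n' "$1" ;;
    *) echo "usage: etp_patch Local|Cluster" >&2; return 1 ;;
  esac
}

# List the controller pods with their nodes, then apply the chosen policy.
# Assumed names: namespace ingress-nginx, Service ingress-nginx-controller.
set_ingress_etp() {
  local patch
  patch="$(etp_patch "$1")" || return 1
  kubectl -n ingress-nginx get pods -o wide
  kubectl -n ingress-nginx patch svc ingress-nginx-controller -p "$patch"
}
```

Calling `set_ingress_etp Cluster` makes every node forward traffic to a controller pod, at the cost of nginx no longer seeing the client's source IP. Keeping `Local` and running a controller pod on every node that receives traffic is the alternative.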

ip-masq-agent (manages outbound NAT rules)

kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/ip-masq-agent/master/ip-masq-agent.yaml

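If your network design already advertises the pod CIDR to hosts outside the cluster, either through BGP or through routes on the switches, you can turn off NAT masquerading for that range on the host network as follows: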

    root@us-test00:~# cat ip-masq-agent-config
    nonMasqueradeCIDRs:
      - 10.160.105.0/24
    resyncInterval: 60s
    root@us-test00:~# kubectl create configmap ip-masq-agent --from-file=ip-masq-agent-config --namespace=kube-system

iptables -t nat -nvL |grep -i agent

2157 136K IP-MASQ-AGENT all -- * * 0.0.0.0/0 0.0.0.0/0 /* ip-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom IP-MASQ-AGENT chain */ ADDRTYPE match dst-type !LOCAL

Chain IP-MASQ-AGENT (1 references)

0 0 RETURN all -- * * 0.0.0.0/0 169.254.0.0/16 /* ip-masq-agent: cluster-local traffic should not be subject to MASQUERADE */ ADDRTYPE match dst-type !LOCAL

3 230 RETURN all -- * * 0.0.0.0/0 10.160.105.0/24 /* ip-masq-agent: cluster-local traffic should not be subject to MASQUERADE */ ADDRTYPE match dst-type !LOCAL

2 120 MASQUERADE all -- * * 0.0.0.0/0 0.0.0.0/0 /* ip-masq-agent: outbound traffic should be subject to MASQUERADE (this match must come after cluster-local CIDR matches) */ ADDRTYPE match dst-type !LOCAL

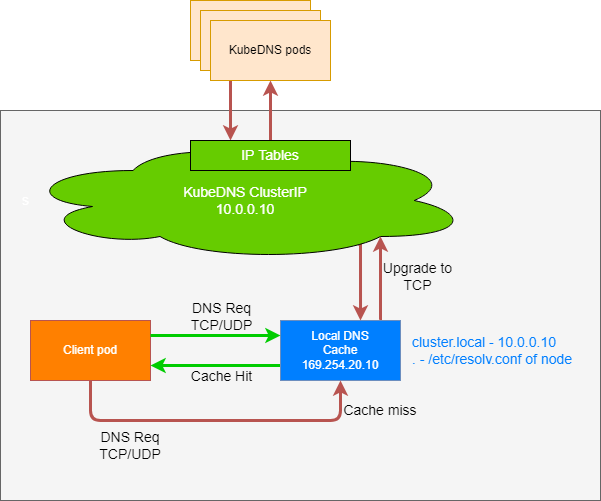
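The iptables listing above can also be checked mechanically. The helper below is a rough sketch (the name `masq_exempt_cidrs` is hypothetical) that reads `iptables -t nat -nvL` style output on stdin and prints the destination of every RETURN rule, that is, the ranges exempt from masquerading. It assumes the column layout shown above, where the target is the third field and the destination the ninth.

```shell
#!/usr/bin/env bash
# Print the destination CIDR of each RETURN rule found in
# `iptables -t nat -nvL` output read from stdin.
# Column layout assumed: pkts bytes target prot opt in out source destination
masq_exempt_cidrs() {
  awk '$3 == "RETURN" { print $9 }'
}
```

A possible invocation on a node is `iptables -t nat -nvL IP-MASQ-AGENT | masq_exempt_cidrs`. The CIDR from nonMasqueradeCIDRs should appear in the result once the agent has resynced.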

nodelocal dns

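Installing this DNS caching proxy layer addresses two common pod name-resolution problems:

1. The conntrack NAT table filling up because of the high volume of DNS requests.

2. Resolution failures caused by location mismatch. For example, CoreDNS runs in CN while the pod may run in the US, so some of the A records it resolves are unusable from the pod.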

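Installation:

1. Download the YAML file: https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dns/nodelocaldns/nodelocaldns.yaml

2. Edit the YAML file and choose a suitable local address to listen on.

Note: the local listen IP address for NodeLocal DNSCache can be any address that does not collide with an IP address already in use in your cluster. A locally scoped address is recommended, for example one from the IPv4 link-local range 169.254.0.0/16 or from the IPv6 unique local address range fd00::/8. 169.254.100.100 is used here.

    kubedns=$(kubectl get svc kube-dns -n kube-system -o jsonpath='{.spec.clusterIP}')
    domain=xlqforever.com
    localdns=169.254.100.100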

The placeholder substitutions differ between IPVS mode and iptables mode; IPVS mode is used here.

sed -i "s/__PILLAR__LOCAL__DNS__/$localdns/g; s/__PILLAR__DNS__DOMAIN__/$domain/g; s/,__PILLAR__DNS__SERVER__//g; s/__PILLAR__CLUSTER__DNS__/$kubedns/g" nodelocaldns.yaml

If kube-proxy runs in IPVS mode, the kubelet's --cluster-dns flag must be changed to the <node-local-address> that NodeLocal DNSCache listens on. Otherwise there is no need to change --cluster-dns, because NodeLocal DNSCache listens on both the kube-dns Service IP and the <node-local-address>.
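When the kubelet is configured through a config file rather than flags, the equivalent of --cluster-dns is the `clusterDNS` list. The sketch below prints what a node currently has configured. The function name `kubelet_cluster_dns` is made up here, and it only understands the simple block-list form (`clusterDNS:` followed by `- <ip>` lines).

```shell
#!/usr/bin/env bash
# Print the DNS server addresses listed under clusterDNS in a kubelet config file.
# Handles only the block-list form: "clusterDNS:" followed by "- <ip>" lines.
kubelet_cluster_dns() {
  awk '
    /^clusterDNS:/              { in_list = 1; next }
    in_list && /^[ \t]*-[ \t]/  { sub(/^[ \t]*-[ \t]*/, ""); print; next }
    in_list                     { in_list = 0 }
  ' "$1"
}
```

For example, `kubelet_cluster_dns /var/lib/kubelet/config.yaml` (the path is an assumption; it is the usual location on kubeadm-provisioned nodes). In IPVS mode the output should be the node-local address, 169.254.100.100 in this article, and the kubelet needs a restart after the file is changed.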

Prometheus monitoring

https://github.com/prometheus-community/helm-charts/tree/main/charts/kube-prometheus-stack

prometheus-operator has been adopted by the prometheus-community project, and Helm is currently the recommended way to install and upgrade Prometheus.

    helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
    helm repo update

Keep the values file in a safe place, and adjust its PV and ingress settings to suit your environment.

    helm install prod-prom prometheus-community/kube-prometheus-stack -f promethues_value.yml -n prom
    helm upgrade prod-prom prometheus-community/kube-prometheus-stack -f promethues_value.yml -n prom
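As an illustration of the PV and ingress settings mentioned above, the function below writes a minimal values file. It is only a sketch: `write_prom_values` is a hypothetical helper, the storage class, size, and hostname are placeholders to replace, and a real values file will usually carry many more settings (Grafana, Alertmanager, retention, and so on).

```shell
#!/usr/bin/env bash
# Write a minimal kube-prometheus-stack values file that sets a persistent
# volume claim template and an ingress host for Prometheus.
# Usage: write_prom_values <file> <storage-class> <size> <prometheus-host>
write_prom_values() {
  cat > "$1" <<EOF
prometheus:
  ingress:
    enabled: true
    hosts:
      - $4
  prometheusSpec:
    storageSpec:
      volumeClaimTemplate:
        spec:
          storageClassName: $2
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: $3
EOF
}
```

For instance, `write_prom_values promethues_value.yml fast-ssd 50Gi prometheus.example.com`, where `fast-ssd` and the hostname are made-up values. If a single idempotent command is preferred over separate install and upgrade steps, `helm upgrade --install prod-prom prometheus-community/kube-prometheus-stack -f promethues_value.yml -n prom --create-namespace` covers both cases.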

Custom monitoring:

https://github.com/prometheus-operator/prometheus-operator/blob/main/Documentation/user-guides/getting-started.md

log-pilot

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        app: log-pilot
      name: log-pilot
      namespace: devops
    data:
      filebeat.tpl: |-
        {{range .configList}}
        {{ if .Stdout }}
        - type: container
        {{ else }}
        - type: log
        {{ end }}
          enabled: true
          paths:
              - {{ .HostDir }}/{{ .File }}
          scan_frequency: 1s
          fields_under_root: true
          #{{if .Stdout}}
          #docker-json: true
          #json.keys_under_root: true
          #{{end}}
          {{if eq .Format "json"}}
          json.keys_under_root: true
          {{end}}
          fields:
              {{range $key, $value := .Tags}}
              {{ $key }}: {{ $value }}
              {{end}}
              {{range $key, $value := $.container}}
              {{ $key }}: {{ $value }}
              {{end}}
          tail_files: false
          close_inactive: 1h
          ignore_older: 1h
          max_backoff: 1s
          backoff_factor: 1
          queue.mem:
            events: 20000
        {{end}}

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: log-pilot-configuration
      namespace: devops
    data:
      logging_output: "kafka"
      kafka_brokers: "10.116.193.139:9092"
      kafka_bulk_max_size: "10000"
      kafka_channel_buffer_size: "2560"
      kafka_keep_alive: "10s"
      kafka_worker: "3"
      # The topic is specified in the tags as topic=xxx

    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: log-pilot
      namespace: devops
      labels:
        k8s-app: log-pilot
    spec:
      selector:
        matchLabels:
          k8s-app: log-pilot
      updateStrategy:
        type: RollingUpdate
      template:
        metadata:
          labels:
            k8s-app: log-pilot
        spec:
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          containers:
          - name: filebeat-exporter
            image: int-bj-prod-registry-vpc.cn-beijing.cr.aliyuncs.com/devops/beat-exporter
          - name: log-pilot
            #image: registry.cn-hangzhou.aliyuncs.com/acs/log-pilot:0.9.7-filebeat
            #image: hub.pri.ibanyu.com/devops/log-pilot:0.9.7-filebeat-pprof
            image: int-bj-prod-registry-vpc.cn-beijing.cr.aliyuncs.com/devops/log-pilot:v7.3.3-filebeat
            resources:
              limits:
                cpu: "15"
                memory: 6Gi
              requests:
                cpu: 50m
                memory: 50Mi
            env:
            - name: "LOGGING_OUTPUT"
              valueFrom:
                configMapKeyRef:
                  name: log-pilot-configuration
                  key: logging_output
            - name: "KAFKA_BROKERS"
              valueFrom:
                configMapKeyRef:
                  name: log-pilot-configuration
                  key: kafka_brokers
            - name: "KAFKA_BULK_MAX_SIZE"
              valueFrom:
                configMapKeyRef:
                  name: log-pilot-configuration
                  key: kafka_bulk_max_size
            - name: "KAFKA_VERSION"
              value: "2.0.0"
            - name: "KAFKA_CHANNEL_BUFFER_SIZE"
              valueFrom:
                configMapKeyRef:
                  name: log-pilot-configuration
                  key: kafka_channel_buffer_size
            - name: "KAFKA_WORKER"
              valueFrom:
                configMapKeyRef:
                  name: log-pilot-configuration
                  key: kafka_worker
            - name: "FILEBEAT_METRICS_ENABLED"
              value: "true"
            - name: "FILEBEAT_HTTP_ENABLED"
              value: "true"
            - name: "FILEBEAT_HTTP_HOST"
              value: "0.0.0.0"
            - name: "NODE_NAME"
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            volumeMounts:
            - name: filebeat-tpl
              mountPath: /pilot/filebeat.tpl
              subPath: filebeat.tpl
            - name: sock
              mountPath: /var/run/docker.sock
            - name: logs
              mountPath: /var/log/filebeat
            - name: state
              mountPath: /var/lib/filebeat
            - name: root
              mountPath: /host
              readOnly: true
            - name: localtime
              mountPath: /etc/localtime
            # configure all valid topics in kafka
            # when disable auto-create topic
            securityContext:
              capabilities:
                add:
                - SYS_ADMIN
          terminationGracePeriodSeconds: 30
          volumes:
          - name: filebeat-tpl
            configMap:
              name: log-pilot
              items:
              - key: filebeat.tpl
                path: filebeat.tpl
          - name: sock
            hostPath:
              path: /var/run/docker.sock
              type: Socket
          - name: logs
            hostPath:
              path: /data/logs/log-pilot
              type: DirectoryOrCreate
          - name: state
            hostPath:
              path: /data/logs/log-pilot
              type: DirectoryOrCreate
          - name: root
            hostPath:
              path: /
              type: Directory
          - name: localtime
            hostPath:
              path: /etc/localtime
              type: File
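With the DaemonSet running, each workload still has to declare which logs to collect and which Kafka topic they go to. The sketch below assumes the upstream log-pilot convention of `aliyun_logs_<name>` and `aliyun_logs_<name>_tags` environment variables; the custom image used above may use a different prefix, so treat the variable names as an assumption. `log_pilot_env` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the environment variable assignments that mark a container's stdout
# for collection and tag it with a Kafka topic.
# Usage: log_pilot_env <log-name> <topic>
log_pilot_env() {
  printf 'aliyun_logs_%s=stdout\n' "$1"
  printf 'aliyun_logs_%s_tags=topic=%s\n' "$1" "$2"
}
```

One way to apply it is `kubectl -n <namespace> set env deployment/<name> $(log_pilot_env app app-logs)`, where `app` and `app-logs` are example values. If automatic topic creation is disabled on the brokers, the topic has to exist in Kafka beforehand.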

This article is from cnblogs (博客园), author: 萱乐庆foreverlove. When reposting, please cite the original link: https://www.cnblogs.com/leleyao/p/15980611.html

