model merge

1 introduction

Repository:

https://github.com/arcee-ai/mergekit

mergekit merges two (or more) models into a single model. The source models need to have the same architecture and the same tokenizer.

2 merge llama model

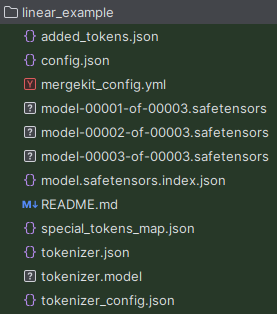

mergekit-yaml examples/linear.yml ./merged/linear_example
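A linear merge is, roughly, a weighted average of the matching tensors of each source model. The sketch below illustrates that idea on toy data. Plain Python lists stand in for tensors, the function name `linear_merge` and the numbers are made up for illustration, and normalizing the weights so they sum to 1 is an assumption here. It is not mergekit's own code.

```python
def linear_merge(state_dicts, weights, normalize=True):
    """Weighted average of same-shaped parameter vectors, tensor by tensor."""
    if len(state_dicts) != len(weights):
        raise ValueError("need exactly one weight per model")
    if normalize:
        total = sum(weights)
        if total == 0:
            raise ValueError("weights must not sum to zero")
        weights = [w / total for w in weights]

    merged = {}
    for name in state_dicts[0]:
        # Group the i-th element of this tensor across all models.
        columns = zip(*(sd[name] for sd in state_dicts))
        merged[name] = [
            sum(w * x for w, x in zip(weights, column)) for column in columns
        ]
    return merged


# Toy "models": one tensor each, same name and shape (illustrative values).
model_a = {"layer.weight": [1.0, 2.0, 3.0]}
model_b = {"layer.weight": [3.0, 2.0, 1.0]}

merged = linear_merge([model_a, model_b], weights=[1.0, 3.0])
print(merged)
```

Because every tensor is averaged element by element, the models must have identical tensor names and shapes, which is why the same architecture is required.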

3 merge mistral model

3.1 tourist800/mistral_2X7b

This is not an MoE model. Despite the name, the two source models are merged into a single dense model.

link: https://huggingface.co/tourist800/mistral_2X7b

config.yaml

    slices:
      - sources:
          - model: mistralai/Mistral-7B-Instruct-v0.2
            layer_range: [0, 32]
          - model: mistralai/Mistral-7B-v0.1
            layer_range: [0, 32]
    merge_method: slerp
    base_model: mistralai/Mistral-7B-Instruct-v0.2
    parameters:
      t:
        - filter: self_attn
          value: [0, 0.5, 0.3, 0.7, 1]
        - filter: mlp
          value: [1, 0.5, 0.7, 0.3, 0]
        - value: 0.5
    dtype: bfloat16
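In this config, `t` is the interpolation factor: at `t = 0` the result is the base model, at `t = 1` it is the other model. A list such as `[0, 0.5, 0.3, 0.7, 1]` is a gradient that is interpolated across the layers, and the final `value: 0.5` applies to tensors that match neither filter. The sketch below shows one plausible way such a list can be expanded to a per-layer value. It assumes layer `i` of `N` sits at position `i / (N - 1)` along the list and that neighbouring anchors are blended linearly; the function name `gradient_value` is hypothetical.

```python
def gradient_value(anchors, layer_idx, num_layers):
    """Linearly interpolate a list of anchor values across the layer stack."""
    if len(anchors) == 1 or num_layers == 1:
        return float(anchors[0])
    # Position of this layer along the anchor list, in [0, len(anchors) - 1].
    pos = layer_idx / (num_layers - 1) * (len(anchors) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(anchors) - 1)
    frac = pos - lo
    return (1 - frac) * anchors[lo] + frac * anchors[hi]


self_attn_t = [0, 0.5, 0.3, 0.7, 1]   # from the config above
mlp_t = [1, 0.5, 0.7, 0.3, 0]         # from the config above
num_layers = 32                        # layer_range: [0, 32]

for layer in (0, 8, 16, 24, 31):
    attn = gradient_value(self_attn_t, layer, num_layers)
    mlp = gradient_value(mlp_t, layer, num_layers)
    print(f"layer {layer:2d}: self_attn t={attn:.3f}  mlp t={mlp:.3f}")
```

Read this way, the two lists mirror each other: early layers take attention weights mostly from the base model and MLP weights mostly from the other model, and the balance reverses toward the last layers.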

run command (in ~/Docker/Llama/mergkit_moe):

mergekit-yaml config.yaml merged --copy-tokenizer --allow-crimes --out-shard-size 1B --lazy-unpickle
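For each pair of matching tensors, a slerp merge interpolates along the arc between the two weight vectors instead of along the straight line between them. The following is a minimal sketch of spherical linear interpolation on flat Python lists. The fallback to plain linear interpolation for nearly parallel vectors and the threshold `0.9995` are assumptions of this sketch, not values taken from this post.

```python
import math


def lerp(t, v0, v1):
    """Plain linear interpolation, used as a fallback."""
    return [(1 - t) * a + t * b for a, b in zip(v0, v1)]


def slerp(t, v0, v1, dot_threshold=0.9995, eps=1e-8):
    """Spherical linear interpolation between two vectors of equal length."""
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    if norm0 < eps or norm1 < eps:
        return lerp(t, v0, v1)

    # Cosine of the angle between the two vectors, clamped for acos.
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    dot = max(-1.0, min(1.0, dot))
    if abs(dot) > dot_threshold:
        # Nearly parallel: the arc is almost a straight line.
        return lerp(t, v0, v1)

    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    s0 = math.sin((1 - t) * theta) / sin_theta
    s1 = math.sin(t * theta) / sin_theta
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


# t = 0 returns the first vector, t = 1 the second, 0.5 the arc midpoint.
print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))
```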

3.2 sam-ezai/Breezeblossom-v4-mistral-2x7B

link: https://huggingface.co/sam-ezai/Breezeblossom-v4-mistral-2x7B

config.yaml

    base_model: MediaTek-Research/Breeze-7B-Instruct-v0_1
    gate_mode: hidden
    dtype: float16
    experts:
      - source_model: MediaTek-Research/Breeze-7B-Instruct-v0_1
        positive_prompts: [ "<s>You are a helpful AI assistant built by MediaTek Research. The user you are helping speaks Traditional Chinese and comes from Taiwan. [INST] 你好,請問你可以完成什麼任務? [/INST] "]
      - source_model: Azure99/blossom-v4-mistral-7b
        positive_prompts: ["A chat between a human and an artificial intelligence bot. The bot gives helpful, detailed, and polite answers to the human's questions. \n|Human|: hello\n|Bot|: "]
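With `gate_mode: hidden`, the router gates of the MoE are initialized from the hidden-state representations of each expert's `positive_prompts`. The toy sketch below illustrates the idea only: each expert gets a gate vector (here the mean of some invented 3-dimensional "hidden states"), and a token is routed to the experts whose gate vectors score highest against it. All vectors, names, and the softmax-over-top-k routing are assumptions for illustration; this is not mergekit's implementation.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def route(token_hidden, gate_rows, top_k=2):
    """Return {expert_index: weight} for the top_k best-scoring experts."""
    logits = [
        sum(h * g for h, g in zip(token_hidden, row)) for row in gate_rows
    ]
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Softmax restricted to the selected experts.
    peak = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Invented hidden states for the positive prompts of two experts.
prompt_hidden = {
    "expert_0": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "expert_1": [[0.1, 0.9, 0.2], [0.0, 0.8, 0.1]],
}
gate_rows = [mean_vector(vectors) for vectors in prompt_hidden.values()]

token = [0.7, 0.2, 0.0]  # a token that resembles expert_0's prompts
print(route(token, gate_rows, top_k=2))
```

The practical consequence is that the positive prompts should look like the inputs each expert is supposed to handle, since they determine where the router sends tokens.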

run command (in ~/Docker/Llama/mergkit_moe):

mergekit-moe config.yaml merged/mistral_moe --copy-tokenizer --allow-crimes --out-shard-size 1B --lazy-unpickle

3.3 mlabonne/Beyonder-4x7B-v2

link: https://huggingface.co/mlabonne/Beyonder-4x7B-v2

config.yaml

    base_model: mlabonne/Marcoro14-7B-slerp
    experts:
      - source_model: openchat/openchat-3.5-1210
        positive_prompts:
          - "chat"
          - "assistant"
          - "tell me"
          - "explain"
      - source_model: beowolx/CodeNinja-1.0-OpenChat-7B
        positive_prompts:
          - "code"
          - "python"
          - "javascript"
          - "programming"
          - "algorithm"
      - source_model: maywell/PiVoT-0.1-Starling-LM-RP
        positive_prompts:
          - "storywriting"
          - "write"
          - "scene"
          - "story"
          - "character"
      - source_model: WizardLM/WizardMath-7B-V1.1
        positive_prompts:
          - "reason"
          - "math"
          - "mathematics"
          - "solve"
          - "count"
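A config with several experts is easy to get subtly wrong, for example by leaving an expert without prompts or by giving the same keyword to two experts. The sketch below builds the same structure as a Python dictionary and runs two sanity checks. The helper names `build_moe_config` and `shared_prompts` are hypothetical, and treating shared keywords as a problem is this sketch's own heuristic, not a mergekit rule.

```python
import json


def build_moe_config(base_model, expert_prompts):
    """Build a dict shaped like the config above from {model: [prompts]}."""
    experts = []
    for source_model, prompts in expert_prompts.items():
        if not prompts:
            raise ValueError(f"{source_model}: needs at least one positive prompt")
        experts.append(
            {"source_model": source_model, "positive_prompts": list(prompts)}
        )
    if len(experts) < 2:
        raise ValueError("an MoE merge needs at least two experts")
    return {"base_model": base_model, "experts": experts}


def shared_prompts(config):
    """Return {prompt: [models]} for prompts used by more than one expert."""
    seen = {}
    for expert in config["experts"]:
        for prompt in expert["positive_prompts"]:
            seen.setdefault(prompt, []).append(expert["source_model"])
    return {p: models for p, models in seen.items() if len(models) > 1}


config = build_moe_config(
    "mlabonne/Marcoro14-7B-slerp",
    {
        "openchat/openchat-3.5-1210": ["chat", "assistant", "tell me", "explain"],
        "beowolx/CodeNinja-1.0-OpenChat-7B": [
            "code", "python", "javascript", "programming", "algorithm",
        ],
        "maywell/PiVoT-0.1-Starling-LM-RP": [
            "storywriting", "write", "scene", "story", "character",
        ],
        "WizardLM/WizardMath-7B-V1.1": [
            "reason", "math", "mathematics", "solve", "count",
        ],
    },
)

print(json.dumps(config, indent=2))
print("shared prompts:", shared_prompts(config))
```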

run command:

mergekit-moe config.yaml Beyonder-4x7B-v2 --copy-tokenizer --allow-crimes --out-shard-size 1B --lazy-unpickle
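The same set of flags is used for every run in this post, so the command can also be assembled in a script. A small sketch, assuming only the flags shown above; the helper name `mergekit_command` is hypothetical, and the call that would actually start the merge is left as a comment.

```python
import shlex


def mergekit_command(tool, config, out_path, shard_size="1B"):
    """Assemble the argument list used for the merges in this post."""
    return [
        tool,
        config,
        out_path,
        "--copy-tokenizer",
        "--allow-crimes",
        "--out-shard-size",
        shard_size,
        "--lazy-unpickle",
    ]


argv = mergekit_command("mergekit-moe", "config.yaml", "Beyonder-4x7B-v2")
print(shlex.join(argv))

# To actually run the merge (requires mergekit to be installed):
#   import subprocess
#   subprocess.run(argv, check=True)
```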

help command

    (mergekit) ludaze@cehsc-app-001:~/Docker/Llama/mergekit$ mergekit-yaml --help
    Usage: mergekit-yaml [OPTIONS] CONFIG_FILE OUT_PATH

    Options:
      -v, --verbose                   Verbose logging
      --allow-crimes / --no-allow-crimes
                                      Allow mixing architectures  [default: no-
                                      allow-crimes]
      --transformers-cache TEXT       Override storage path for downloaded models
      --lora-merge-cache TEXT         Path to store merged LORA models
      --cuda / --no-cuda              Perform matrix arithmetic on GPU  [default:
                                      no-cuda]
      --low-cpu-memory / --no-low-cpu-memory
                                      Store results and intermediate values on
                                      GPU. Useful if VRAM > RAM  [default: no-low-
                                      cpu-memory]
      --out-shard-size SIZE           Number of parameters per output shard
                                      [default: 5B]
      --copy-tokenizer / --no-copy-tokenizer
                                      Copy a tokenizer to the output  [default:
                                      copy-tokenizer]
      --clone-tensors / --no-clone-tensors
                                      Clone tensors before saving, to allow
                                      multiple occurrences of the same layer
                                      [default: no-clone-tensors]
      --trust-remote-code / --no-trust-remote-code
                                      Trust remote code from huggingface repos
                                      (danger)  [default: no-trust-remote-code]
      --random-seed INTEGER           Seed for reproducible use of randomized
                                      merge methods
      --lazy-unpickle / --no-lazy-unpickle
                                      Experimental lazy unpickler for lower memory
                                      usage  [default: no-lazy-unpickle]
      --write-model-card / --no-write-model-card
                                      Output README.md containing details of the
                                      merge  [default: write-model-card]
      --safe-serialization / --no-safe-serialization
                                      Save output in safetensors. Do this, don't
                                      poison the world with more pickled models.
                                      [default: safe-serialization]
      --help                          Show this message and exit.
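`--out-shard-size` is given as a number of parameters per output shard, so the runs above with `1B` produce more, smaller files than the default `5B`. The sketch below gives a rough estimate of the shard count. It assumes `SIZE` is a number with an optional `K`, `M` or `B` suffix, and the parameter count used for a 7B-class model is an approximate, illustrative figure. Real shard counts can differ because tensors are not split across files.

```python
def parse_size(text):
    """Parse strings such as '1B', '500M' or '250000' into a parameter count."""
    units = {"K": 10**3, "M": 10**6, "B": 10**9}
    text = text.strip().upper()
    if text and text[-1] in units:
        return int(float(text[:-1]) * units[text[-1]])
    return int(text)


def estimate_shards(total_params, shard_size):
    """Ceiling division: minimum number of shards of the given size."""
    per_shard = parse_size(shard_size)
    return -(-total_params // per_shard)


approx_7b_params = 7_240_000_000  # illustrative figure, not measured

for size in ("1B", "5B"):
    print(size, "->", estimate_shards(approx_7b_params, size), "shards (estimate)")
```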

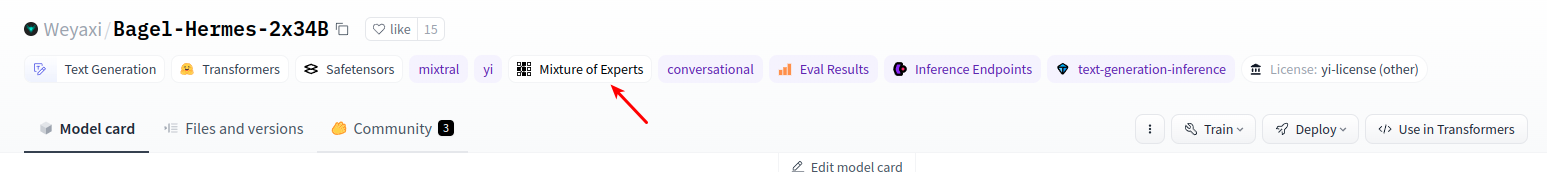

4 other examples

On Hugging Face, click a tag on a model page to list other models with that tag and find the corresponding examples.
