llama-recipes fine-tuning 3

multiple GPUs in single node

click to view the code

torchrun --nnodes 1 --nproc_per_node 2 examples/finetuning.py --enable_fsdp --use_peft --peft_method lora --dataset medcqa_dataset --model_name meta-llama/Llama-2-7b-hf --fsdp_config.pure_bf16 --output_dir ./FINE/llama2-7b-medcqa-modify-prompt-modi-Q-T-O-multiple-gpus

please note single GPU couldn't hold even llama7B model, and 4xA6000 GPUs couldn't hold llama2 13B too.

export the model

colck to view the code

from transformers import AutoModelForCausalLM

from peft import PeftModel

base_model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-2-7b-hf")

peft_model_id = "/home/ludaze/Docker/Llama/llama-recipes/FINE/llama2-7b-medcqa-modify-prompt-modi-Q-T-O-multiple-gpus"

model = PeftModel.from_pretrained(base_model, peft_model_id)

merged_model = model.merge_and_unload()

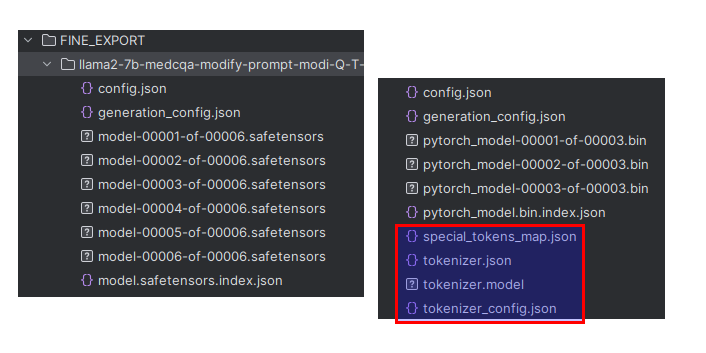

merged_model.save_pretrained("/home/ludaze/Docker/Llama/llama-recipes/FINE_EXPORT/llama2-7b-medcqa-modify-prompt-modi-Q-T-O-multiple-gpus-export")

then we need to copy the token and tokenizer file into the goal directory

浙公网安备 33010602011771号

浙公网安备 33010602011771号