102302149 赖翊煊 Comprehensive Practice

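| Course this project belongs to | 2025 Data Acquisition and Fusion Technology |
|---|---|
| Team name and project summary | Team name: 好运来. Requirements: a smart exercise-assistance application that analyzes user-uploaded exercise videos (centered on the pull-up) and addresses the subjectivity and delayed feedback of traditional movement assessment by providing objective analysis and improvement advice. Goal: analyze and score uploaded exercise videos, give personalized improvement advice, and provide a complete user progress record and feedback system that helps users improve in a scientific way. Technical approach: a decoupled front-end/back-end architecture built on Vue3 + Python + openGauss. The front end uses Vue3 for the user interface and visualization, the Python back end integrates MediaPipe for pose analysis, and openGauss stores user data and exercise records. |
| Team member student IDs | 102302148 (谢文杰), 102302149 (赖翊煊), 102302150 (蔡骏), 102302151 (薛雨晨), 102302108 (赵雅萱), 102302111 (海米沙), 102302139 (尚子骐), 022304105 (叶骋恺) |
| Project goal | From uploaded exercise videos, apply human pose estimation with a dual-view analysis (front view: grip-width symmetry and body stability; side view: movement completeness and torso angle) to detect body keypoints automatically, split the movement into cycles, identify improper compensation, and generate quantitative scores, visual reports, and personalized improvement advice. In addition, build a user progress record and feedback system that stores user data and exercise records. The end result is a low-cost, high-accuracy automated assessment tool that supports scientific training in personal training and physical education, helping to avoid injuries and improve training results. |
| Other references | [1] ZHOU P, CAO J J, ZHANG X Y, et al. Learning to Score Figure Skating Sport Videos [J]. IEEE Transactions on Circuits and Systems for Video Technology, 2019. arXiv:1802.02774. [2] Toshev, A., & Szegedy, C. (2014). DeepPose: Human Pose Estimation via Deep Neural Networks. |
| Gitee link (code is consolidated; team members' code is not published separately) | Front-end and back-end code: https://gitee.com/wsxxs233/SoftWare |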

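I. Project Background

As national fitness campaigns deepen and fitness culture spreads, bodyweight training, with the pull-up as its best-known example, has become an important indicator of basic strength and physical fitness thanks to its convenience and efficiency. It is widely used in school fitness tests, military training, and general fitness. Traditional movement assessment, however, relies heavily on a coach's visual observation and subjective experience, which leads to inconsistent standards, delayed feedback, and results that are hard to quantify. Without professional guidance, trainees often fail to notice subtle deviations in their own movement, such as using momentum, swinging, or an insufficient range of motion. This reduces training effectiveness and, over time, may cause injury. Turning artificial intelligence and computer vision into an "AI coach" within everyone's reach, one that gives objective, immediate, and accurate feedback, has therefore become a pressing need for more scientific training.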

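II. Project Overview

This project develops a computer-vision-based system for analyzing and assessing pull-ups. From a video uploaded by the trainee, the system applies a human pose estimation algorithm to detect and track body keypoints automatically. Because the pull-up is a complex movement, we built a dual-view analysis framework. The front view analyzes grip-width symmetry, body stability, and left-right balance to check that the basic form is correct. The side view evaluates movement completeness, torso angle, and force-generation pattern to judge range of motion and efficiency. Using these multi-dimensional quantitative metrics, the system splits the movement into cycles, identifies improper compensation, and produces a visual report with improvement advice. The aim is a low-cost, high-accuracy automated assessment tool that gives individual trainees, physical education, and professional institutions a data-driven aid for scientific training.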

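III. Division of Work

蔡骏: builds the front-end features needed for the user interface.

赵雅萱: builds the administrator system.

薛雨晨: deploys the features to the server, and writes and revises the front-end/back-end interfaces.

海米沙: prototype design in Modao (墨刀), recording market research results and reporting the requirements analysis, designing the project logo and product name, and software testing.

谢文杰: defines the front-view scoring criteria and builds the knowledge base.

赖翊煊: defines the side-view scoring criteria and connects the AI through the API.

叶骋恺: database creation and design.

尚子骐: crawls relevant videos with a web scraper and performs software testing.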

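IV. Personal Contribution

Responsible for defining the side-view scoring criteria and for training the AI knowledge base.

4.1 Side-View Code

My main work was processing the side-view video data and defining the scoring criteria.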


import cv2
import mediapipe as mp
import pandas as pd
import numpy as np
import os
import json
from scipy import signal
from scipy.interpolate import interp1d
from tqdm import tqdm
import matplotlib.pyplot as plt


class AdvancedPullUpBenchmark:
    def __init__(self):
        # Initialize MediaPipe Pose
        self.mp_pose = mp.solutions.pose
        self.mp_drawing = mp.solutions.drawing_utils
        self.pose = self.mp_pose.Pose(
            static_image_mode=False,
            model_complexity=1,
            smooth_landmarks=True,
            min_detection_confidence=0.5,
            min_tracking_confidence=0.5
        )

        # Landmark indices used by the analysis
        self.LANDMARK_INDICES = {
            'LEFT_SHOULDER': 11, 'RIGHT_SHOULDER': 12,
            'LEFT_ELBOW': 13, 'RIGHT_ELBOW': 14,
            'LEFT_WRIST': 15, 'RIGHT_WRIST': 16,
            'LEFT_HIP': 23, 'RIGHT_HIP': 24,
            'LEFT_KNEE': 25, 'RIGHT_KNEE': 26,
            'LEFT_ANKLE': 27, 'RIGHT_ANKLE': 28
        }
        self.BENCHMARK_POINTS = [0, 25, 50, 75, 100]

    def extract_comprehensive_landmarks(self, video_path, output_video_path=None):
        """Extract landmark data and optionally write an annotated video."""
        cap = cv2.VideoCapture(video_path)
        if not cap.isOpened():
            print(f"Cannot open video file: {video_path}")
            return None

        fps = cap.get(cv2.CAP_PROP_FPS)
        width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
        total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        print(f"Video info: {width}x{height}, FPS: {fps}, total frames: {total_frames}")

        # Video writer
        out = None
        if output_video_path:
            fourcc = cv2.VideoWriter_fourcc(*'mp4v')
            out = cv2.VideoWriter(output_video_path, fourcc, fps, (width, height))
            print(f"Writing annotated video to: {output_video_path}")

        # Custom skeleton connections (left side of the body)
        TORSO_CONNECTIONS = [
            (15, 13),  # wrist - elbow
            (13, 11),  # elbow - shoulder
            (11, 23),  # shoulder - hip
            (23, 25),  # hip - knee
            (25, 27)   # knee - ankle
        ]

        landmarks_data = []
        with tqdm(total=total_frames, desc="Extracting landmarks and writing video") as pbar:
            for frame_count in range(total_frames):
                success, frame = cap.read()
                if not success:
                    break

                display_frame = frame.copy()
                frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                results = self.pose.process(frame_rgb)

                frame_data = {
                    'frame': frame_count,
                    'timestamp': frame_count / fps if fps > 0 else frame_count
                }

                if results.pose_landmarks:
                    # Draw the skeleton on the output frame
                    self._draw_custom_skeleton(display_frame, results.pose_landmarks, TORSO_CONNECTIONS, width, height)
                    # Store the raw landmark data for later analysis
                    for name, idx in self.LANDMARK_INDICES.items():
                        landmark = results.pose_landmarks.landmark[idx]
                        frame_data[f'{name}_X'] = landmark.x
                        frame_data[f'{name}_Y'] = landmark.y
                        frame_data[f'{name}_Z'] = landmark.z
                        frame_data[f'{name}_VIS'] = landmark.visibility
                    # Per-frame angle and height metrics
                    frame_data.update(self._calculate_upper_stability(results.pose_landmarks))
                    frame_data.update(self._calculate_low_stability(results.pose_landmarks))
                    frame_data.update(self._calculate_height_metrics(results.pose_landmarks))
                else:
                    # No pose detected: mark landmarks and metrics as missing
                    for name in self.LANDMARK_INDICES.keys():
                        frame_data[f'{name}_X'] = np.nan
                        frame_data[f'{name}_Y'] = np.nan
                        frame_data[f'{name}_Z'] = np.nan
                        frame_data[f'{name}_VIS'] = np.nan
                    frame_data.update(self._get_nan_metrics())

                # Write the frame (annotated, or the original if no pose was found)
                if out:
                    out.write(display_frame)
                landmarks_data.append(frame_data)
                pbar.update(1)

        cap.release()
        if out:
            out.release()
        cv2.destroyAllWindows()

        if output_video_path:
            print(f"Annotated video saved: {output_video_path}")
        return pd.DataFrame(landmarks_data)

    def _draw_custom_skeleton(self, frame, landmarks, connections, width, height):
        """Draw a custom skeleton on the frame."""
        # 1. Draw the connection lines first
        for start_idx, end_idx in connections:
            start_landmark = landmarks.landmark[start_idx]
            end_landmark = landmarks.landmark[end_idx]
            # Only connect landmarks that are both visible
            if start_landmark.visibility > 0.5 and end_landmark.visibility > 0.5:
                start_x = int(start_landmark.x * width)
                start_y = int(start_landmark.y * height)
                end_x = int(end_landmark.x * width)
                end_y = int(end_landmark.y * height)
                # Connection line (yellow in BGR, thickness 2)
                cv2.line(frame, (start_x, start_y), (end_x, end_y), (0, 255, 255), 2)

        # 2. Draw the landmarks that appear in any connection
        connected_points = set()
        for connection in connections:
            connected_points.add(connection[0])
            connected_points.add(connection[1])

        for point_idx in connected_points:
            landmark = landmarks.landmark[point_idx]
            if landmark.visibility > 0.5:  # only draw visible landmarks
                x = int(landmark.x * width)
                y = int(landmark.y * height)
                # Landmark (filled green circle, radius 5)
                cv2.circle(frame, (x, y), 5, (0, 255, 0), -1)
                # White outline
                cv2.circle(frame, (x, y), 6, (255, 255, 255), 1)

    def _get_landmark_point(self, landmarks, idx, width, height):
        """Return a landmark's pixel coordinates."""
        landmark = landmarks.landmark[idx]
        return (int(landmark.x * width), int(landmark.y * height))

    def _calculate_upper_stability(self, landmarks):
        """Angle between the torso (hip to shoulder) and the vertical axis."""
        metrics = {}
        try:
            left_shoulder = np.array([landmarks.landmark[11].x, landmarks.landmark[11].y])
            left_hip = np.array([landmarks.landmark[23].x, landmarks.landmark[23].y])
            # Torso vector (hip to shoulder)
            dx = left_shoulder[0] - left_hip[0]  # horizontal component
            dy = left_shoulder[1] - left_hip[1]  # vertical component
            # Angle relative to the vertical axis. Image y grows downward, so an
            # upright torso has dy < 0 and the angle is close to +/-180 degrees.
            angle = np.degrees(np.arctan2(dx, dy))
            metrics['TORSO_ANGLE_side'] = angle
            metrics['TORSO_ANGLE_ABS_side'] = abs(angle)  # magnitude only
        except Exception as e:
            # Use the same keys as the success path so the columns stay consistent
            metrics['TORSO_ANGLE_side'] = np.nan
            metrics['TORSO_ANGLE_ABS_side'] = np.nan
        return metrics

    def _calculate_low_stability(self, landmarks):
        """Angle between the thigh (knee to hip) and the vertical axis."""
        metrics = {}
        try:
            left_knee = np.array([landmarks.landmark[25].x, landmarks.landmark[25].y])
            left_hip = np.array([landmarks.landmark[23].x, landmarks.landmark[23].y])
            # Thigh vector (knee to hip)
            dx = left_hip[0] - left_knee[0]  # horizontal component
            dy = left_hip[1] - left_knee[1]  # vertical component
            # Angle relative to the vertical axis (same convention as the torso angle)
            angle = np.degrees(np.arctan2(dx, dy))
            metrics['LOWER_ANGLE_side'] = angle
            metrics['LOWER_ANGLE_ABS_side'] = abs(angle)  # magnitude only
        except Exception as e:
            metrics['LOWER_ANGLE_side'] = np.nan
            metrics['LOWER_ANGLE_ABS_side'] = np.nan
        return metrics

    def _calculate_height_metrics(self, landmarks):
        """Compute height-related metrics."""
        metrics = {}
        try:
            # Normalized coordinates (0-1 range)
            left_wrist_y = landmarks.landmark[15].y
            left_shoulder_y = landmarks.landmark[11].y
            metrics['LEFT_WRIST_Y'] = left_wrist_y
            metrics['LEFT_SHOULDER_Y'] = left_shoulder_y
        except Exception as e:
            metrics.update({key: np.nan for key in [
                'LEFT_WRIST_Y', 'LEFT_SHOULDER_Y',
            ]})
        return metrics

    def detect_rep_cycles_by_shoulder_height(self, df):
        """Detect pull-up cycles from the shoulder height signal."""
        print("Detecting pull-up cycles from shoulder height...")
        # Shoulder height is the main signal
        shoulder_heights = df['LEFT_SHOULDER_Y'].values

        # Clean the data and interpolate missing frames
        shoulder_series = pd.Series(shoulder_heights)
        shoulder_interp = shoulder_series.interpolate(method='linear', limit_direction='both')
        if len(shoulder_interp) < 20:
            print("Too little data to detect cycles")
            return []

        # Smooth the signal (the window length is always odd)
        window_size = min(11, len(shoulder_interp) // 10 * 2 + 1)
        if window_size < 3:
            window_size = 3
        try:
            smoothed = signal.savgol_filter(shoulder_interp, window_length=window_size, polyorder=2)
        except Exception as e:
            print(f"Signal smoothing failed: {e}")
            smoothed = shoulder_interp.values

        # Find the cycles
        rep_cycles = self._find_cycles_by_shoulder_height(smoothed)
        print(f"Detected {len(rep_cycles)} pull-up cycles")
        return rep_cycles

    def _find_cycles_by_shoulder_height(self, shoulder_heights):
        """Find cycles in the shoulder height signal."""
        rep_cycles = []
        try:
            min_distance = max(15, len(shoulder_heights) // 20)
            # Valleys of y: shoulder at its highest point (image y grows downward)
            valleys, _ = signal.find_peaks(-shoulder_heights, distance=min_distance, prominence=0.02)
            # Peaks of y: shoulder at its lowest point
            peaks, _ = signal.find_peaks(shoulder_heights, distance=min_distance, prominence=0.02)
            print(f"Shoulder height: {len(peaks)} peaks (arms extended), {len(valleys)} valleys (chin over bar)")

            # Build cycles: peak -> valley -> next peak
            if len(peaks) >= 2 and len(valleys) >= 1:
                for i in range(len(peaks) - 1):
                    start_peak = peaks[i]
                    end_peak = peaks[i + 1]
                    # Look for a valley between the two peaks
                    valleys_between = [v for v in valleys if start_peak < v < end_peak]
                    if valleys_between:
                        valley = valleys_between[0]
                        if self._validate_rep_cycle(shoulder_heights, start_peak, valley, end_peak):
                            rep_cycles.append({
                                'start_frame': int(start_peak),
                                # Valley of y, i.e. the top of the pull
                                'bottom_frame': int(valley),
                                'end_frame': int(end_peak),
                                'duration': int(end_peak - start_peak),
                                'amplitude': float(shoulder_heights[start_peak] - shoulder_heights[valley])
                            })
        except Exception as e:
            print(f"Shoulder height cycle detection error: {e}")
        return rep_cycles

    def _validate_rep_cycle(self, signal_data, start, bottom, end):
        """Check that a cycle is valid."""
        try:
            if end <= start or bottom <= start or end <= bottom:
                return False
            duration = end - start
            amplitude = signal_data[start] - signal_data[bottom]
            # Lenient validation: 10-200 frames, amplitude of at least 0.02
            if duration < 10 or duration > 200 or amplitude < 0.02:
                return False
            return True
        except Exception as e:
            return False

    def create_biomechanical_benchmark(self, df, rep_cycles):
        """Build the biomechanical benchmark."""
        if not rep_cycles:
            print("No cycles detected, creating an empty benchmark")
            return self._create_empty_benchmark()

        # Analyze each cycle
        cycle_analyses = {}
        for i, cycle in enumerate(rep_cycles):
            cycle_name = f"cycle_{i + 1}"
            cycle_analysis = self._analyze_single_cycle(df, cycle, cycle_name)
            if cycle_analysis:
                cycle_analyses[cycle_name] = cycle_analysis

        if not cycle_analyses:
            return self._create_empty_benchmark()

        # Assemble the benchmark result
        benchmark = {
            'analysis_summary': {
                'total_cycles': len(cycle_analyses),
                'total_frames': len(df),
                'analysis_timestamp': pd.Timestamp.now().isoformat(),
                'status': 'success'
            },
            'cycles': cycle_analyses
        }
        return benchmark

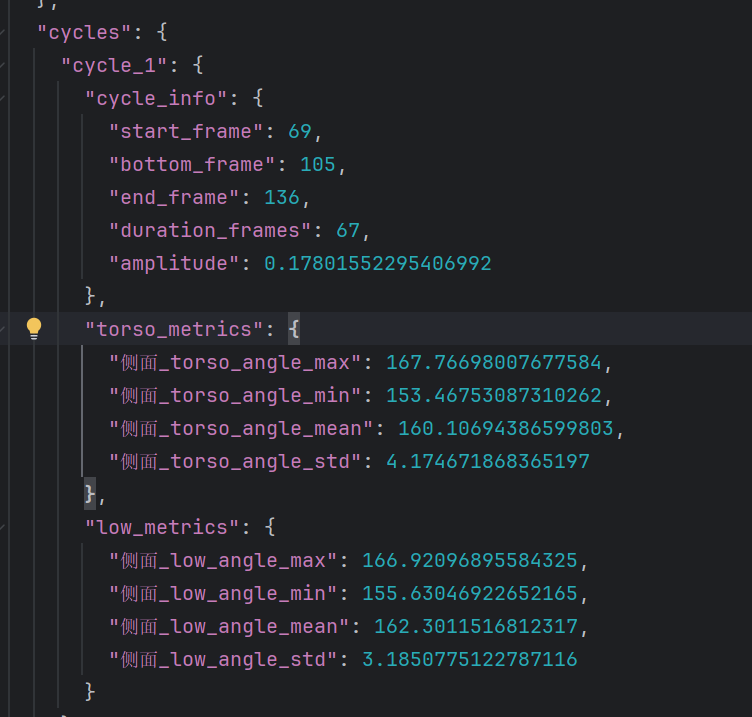

    def _analyze_single_cycle(self, df, cycle, cycle_name):
        """Analyze a single cycle."""
        try:
            start, bottom, end = cycle['start_frame'], cycle['bottom_frame'], cycle['end_frame']
            if end >= len(df):
                return None
            cycle_data = df.iloc[start:end].copy()

            # Torso angle statistics
            torso_angles = cycle_data['TORSO_ANGLE_ABS_side'].dropna()
            torso_stats = {
                '侧面_torso_angle_max': float(np.max(torso_angles)) if len(torso_angles) > 0 else np.nan,
                '侧面_torso_angle_min': float(np.min(torso_angles)) if len(torso_angles) > 0 else np.nan,
                '侧面_torso_angle_mean': float(np.mean(torso_angles)) if len(torso_angles) > 0 else np.nan,
                '侧面_torso_angle_std': float(np.std(torso_angles)) if len(torso_angles) > 0 else np.nan
            }

            # Lower-body (hip to knee) angle statistics
            low_angles = cycle_data['LOWER_ANGLE_ABS_side'].dropna()
            low_stats = {
                '侧面_low_angle_max': float(np.max(low_angles)) if len(low_angles) > 0 else np.nan,
                '侧面_low_angle_min': float(np.min(low_angles)) if len(low_angles) > 0 else np.nan,
                '侧面_low_angle_mean': float(np.mean(low_angles)) if len(low_angles) > 0 else np.nan,
                '侧面_low_angle_std': float(np.std(low_angles)) if len(low_angles) > 0 else np.nan
            }

            cycle_analysis = {
                'cycle_info': {
                    'start_frame': int(start),
                    'bottom_frame': int(bottom),
                    'end_frame': int(end),
                    'duration_frames': int(end - start),
                    'amplitude': float(cycle['amplitude'])
                },
                'torso_metrics': torso_stats,
                'low_metrics': low_stats
            }
            return cycle_analysis
        except Exception as e:
            print(f"Error analyzing cycle {cycle_name}: {e}")
            return None

    def _get_nan_metrics(self):
        """Return the metrics dictionary filled with NaN."""
        return {
            'LEFT_SHOULDER_Y': np.nan,
        }

    def _create_empty_benchmark(self):
        """Create an empty benchmark."""
        return {
            'analysis_summary': {
                'total_cycles': 0,
                'total_frames': 0,
                'analysis_timestamp': pd.Timestamp.now().isoformat(),
                'status': 'no_cycles_detected'
            },
            'cycles': {}
        }

if __name__ == "__main__":
    benchmark_system = AdvancedPullUpBenchmark()

    # Input video and annotated output video
    video_path = r"C:\Users\27387\Desktop\video\Video Project 3.mp4"
    output_path = r"C:\Users\lenovo\Desktop\video\3_pose_analysis.mp4"

    # Extract landmark data and write the annotated video
    df = benchmark_system.extract_comprehensive_landmarks(video_path, output_path)

    if df is not None:
        print(f"Data extraction finished, {len(df)} frames in total")

        # Detect cycles
        rep_cycles = benchmark_system.detect_rep_cycles_by_shoulder_height(df)
        print(f"Detected {len(rep_cycles)} cycles")

        # Build the benchmark
        benchmark = benchmark_system.create_biomechanical_benchmark(df, rep_cycles)

        # Save the result
        with open('侧身pullup_benchmark.json', 'w', encoding='utf-8') as f:
            json.dump(benchmark, f, indent=2, ensure_ascii=False)
        print("Benchmark analysis finished. Results saved to 侧身pullup_benchmark.json")

        # Print a summary
        if benchmark['analysis_summary']['status'] == 'success':
            print("\nAnalysis summary:")
            print(f"  Cycles: {benchmark['analysis_summary']['total_cycles']}")
            for cycle_name, cycle_data in benchmark['cycles'].items():
                print(f"\n{cycle_name}:")
                upper = cycle_data['torso_metrics']
                low = cycle_data['low_metrics']
                print(f"  Torso angle: max={upper['侧面_torso_angle_max']:.1f}°, "
                      f"min={upper['侧面_torso_angle_min']:.1f}°, mean={upper['侧面_torso_angle_mean']:.1f}°")
                print(f"  Lower-body angle: max={low['侧面_low_angle_max']:.1f}°, "
                      f"min={low['侧面_low_angle_min']:.1f}°, mean={low['侧面_low_angle_mean']:.1f}°")
        else:
            print("No valid pull-up cycles detected")
    else:
        print("Data extraction failed")

Code Explanation

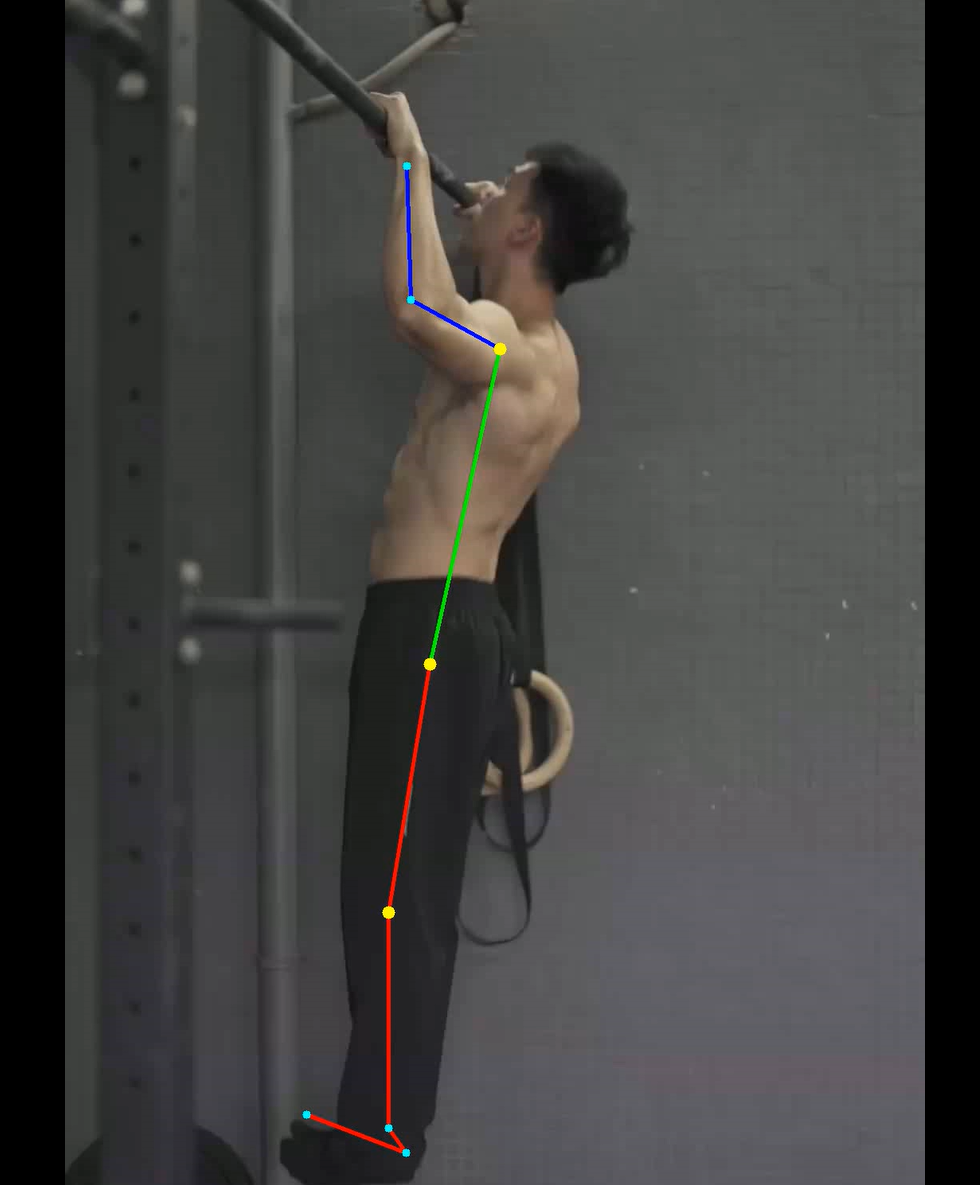

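1. extract_comprehensive_landmarks is the core processing function. It reads the video properties (resolution, frame rate, frame count), converts each frame to RGB, runs pose estimation on it, and extracts the keypoints.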

TORSO_CONNECTIONS = [
    (15, 13),  # wrist - elbow
    (13, 11),  # elbow - shoulder
    (11, 23),  # shoulder - hip
    (23, 25),  # hip - knee
    (25, 27)   # knee - ankle
]

landmarks_data = []
with tqdm(total=total_frames, desc="Extracting landmarks and writing video") as pbar:
    for frame_count in range(total_frames):
        success, frame = cap.read()
        if not success:
            break

        display_frame = frame.copy()
        frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        results = self.pose.process(frame_rgb)

        frame_data = {
            'frame': frame_count,
            'timestamp': frame_count / fps if fps > 0 else frame_count
        }

        if results.pose_landmarks:
            # Draw the skeleton on the output frame
            self._draw_custom_skeleton(display_frame, results.pose_landmarks, TORSO_CONNECTIONS, width, height)
            # Store the raw landmark data for later analysis
            for name, idx in self.LANDMARK_INDICES.items():
                landmark = results.pose_landmarks.landmark[idx]
                frame_data[f'{name}_X'] = landmark.x
                frame_data[f'{name}_Y'] = landmark.y
                frame_data[f'{name}_Z'] = landmark.z
                frame_data[f'{name}_VIS'] = landmark.visibility
        else:
            # No pose detected: mark the landmark data as missing
            for name in self.LANDMARK_INDICES.keys():
                frame_data[f'{name}_X'] = np.nan
                frame_data[f'{name}_Y'] = np.nan
                frame_data[f'{name}_Z'] = np.nan
                frame_data[f'{name}_VIS'] = np.nan

2. _draw_custom_skeleton visualizes the human skeleton: it draws the connection lines and the keypoints whose visibility is above 0.5.
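MediaPipe Pose reports landmark x and y normalized by the frame width and height, so they must be converted to pixels before drawing. The sketch below illustrates that conversion together with the visibility filter. The `Landmark` tuple and the `to_pixel` helper are hypothetical names introduced for illustration; they are not part of MediaPipe or of the project code.

```python
from typing import NamedTuple, Optional, Tuple


class Landmark(NamedTuple):
    """Hypothetical stand-in for a MediaPipe landmark (normalized coordinates)."""
    x: float
    y: float
    visibility: float


def to_pixel(lm: Landmark, width: int, height: int,
             min_visibility: float = 0.5) -> Optional[Tuple[int, int]]:
    """Return pixel coordinates, or None if the landmark is not visible enough."""
    if lm.visibility <= min_visibility:
        return None
    return int(lm.x * width), int(lm.y * height)


shoulder = Landmark(x=0.5, y=0.25, visibility=0.9)
hidden = Landmark(x=0.1, y=0.1, visibility=0.2)
print(to_pixel(shoulder, 1280, 720))  # (640, 180)
print(to_pixel(hidden, 1280, 720))    # None
```

Returning `None` for low-visibility landmarks lets the caller skip both the point and any line attached to it, which mirrors the `visibility > 0.5` checks in the drawing method.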

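3. _calculate_upper_stability computes the angle between the torso (shoulder to hip) and the vertical axis to assess upper-body stability.

_calculate_low_stability computes the angle between the lower body (hip to knee) and the vertical axis to assess lower-body stability.

These two results are the main side-view criteria and are used to judge core stability over the whole movement.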

def _calculate_upper_stability(self, landmarks):
    metrics = {}
    try:
        left_shoulder = np.array([landmarks.landmark[11].x, landmarks.landmark[11].y])
        left_hip = np.array([landmarks.landmark[23].x, landmarks.landmark[23].y])
        # Torso vector (hip to shoulder)
        dx = left_shoulder[0] - left_hip[0]  # horizontal component
        dy = left_shoulder[1] - left_hip[1]  # vertical component
        # Angle relative to the vertical axis
        angle = np.degrees(np.arctan2(dx, dy))
        metrics['TORSO_ANGLE_side'] = angle
        metrics['TORSO_ANGLE_ABS_side'] = abs(angle)  # magnitude only
    except Exception as e:
        # Use the same keys as the success path so the columns stay consistent
        metrics['TORSO_ANGLE_side'] = np.nan
        metrics['TORSO_ANGLE_ABS_side'] = np.nan
    return metrics

def _calculate_low_stability(self, landmarks):
    metrics = {}
    try:
        left_knee = np.array([landmarks.landmark[25].x, landmarks.landmark[25].y])
        left_hip = np.array([landmarks.landmark[23].x, landmarks.landmark[23].y])
        # Thigh vector (knee to hip)
        dx = left_hip[0] - left_knee[0]  # horizontal component
        dy = left_hip[1] - left_knee[1]  # vertical component
        # Angle relative to the vertical axis
        angle = np.degrees(np.arctan2(dx, dy))
        metrics['LOWER_ANGLE_side'] = angle
        metrics['LOWER_ANGLE_ABS_side'] = abs(angle)  # magnitude only
    except Exception as e:
        metrics['LOWER_ANGLE_side'] = np.nan
        metrics['LOWER_ANGLE_ABS_side'] = np.nan
    return metrics
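To make the angle convention concrete, here is a small worked example using only the standard library. `angle_to_vertical` reproduces the `arctan2(dx, dy)` formula from the two methods; `tilt_from_vertical` is a hypothetical helper, not part of the project, that folds the result into a 0 to 90 degree deviation. Because image y grows downward, an upright segment yields about 180° under the original formula, so a reading near 180° suggests a stable, vertical body line.

```python
import math


def angle_to_vertical(upper, lower):
    """Signed angle from atan2(dx, dy) of the lower-to-upper vector, in degrees."""
    dx = upper[0] - lower[0]
    dy = upper[1] - lower[1]
    return math.degrees(math.atan2(dx, dy))


def tilt_from_vertical(upper, lower):
    """Hypothetical helper: deviation from the vertical axis, folded into [0, 90]."""
    a = abs(angle_to_vertical(upper, lower))
    return min(a, 180.0 - a)


# Normalized image coordinates: y grows downward, so the shoulder has the smaller y.
shoulder, hip = (0.50, 0.30), (0.50, 0.60)   # perfectly upright torso
leaning_shoulder = (0.60, 0.30)              # shoulder shifted sideways

print(angle_to_vertical(shoulder, hip))                      # 180.0
print(round(tilt_from_vertical(shoulder, hip), 1))           # 0.0
print(round(tilt_from_vertical(leaning_shoulder, hip), 1))   # 18.4
```

Whether to score on the raw value or on the folded tilt is a design choice; the folded form is only shown here because it reads directly as "degrees away from vertical".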

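4. detect_rep_cycles_by_shoulder_height detects pull-up cycles from changes in shoulder height. It looks for peaks of the shoulder y signal (shoulder at its lowest point, arms extended) and valleys (shoulder at its highest point, chin over the bar) and pairs them into cycles. Each cycle is then validated: cycles shorter than 10 frames, longer than 200 frames, or with an amplitude below 0.02 are discarded so that only plausible repetitions remain.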

def detect_rep_cycles_by_shoulder_height(self, df):
    # Shoulder height is the main signal
    shoulder_heights = df['LEFT_SHOULDER_Y'].values

    # Clean the data and interpolate missing frames
    shoulder_series = pd.Series(shoulder_heights)
    shoulder_interp = shoulder_series.interpolate(method='linear', limit_direction='both')
    if len(shoulder_interp) < 20:
        print("Too little data to detect cycles")
        return []

    # Smooth the signal (the window length is always odd)
    window_size = min(11, len(shoulder_interp) // 10 * 2 + 1)
    if window_size < 3:
        window_size = 3
    try:
        smoothed = signal.savgol_filter(shoulder_interp, window_length=window_size, polyorder=2)
    except Exception as e:
        print(f"Signal smoothing failed: {e}")
        smoothed = shoulder_interp.values

    # Find the cycles
    rep_cycles = self._find_cycles_by_shoulder_height(smoothed)
    print(f"Detected {len(rep_cycles)} pull-up cycles")
    return rep_cycles

def _validate_rep_cycle(self, signal_data, start, bottom, end):
    """Check that a cycle is valid."""
    try:
        if end <= start or bottom <= start or end <= bottom:
            return False
        duration = end - start
        amplitude = signal_data[start] - signal_data[bottom]
        # Lenient validation: 10-200 frames, amplitude of at least 0.02
        if duration < 10 or duration > 200 or amplitude < 0.02:
            return False
        return True
    except Exception as e:
        return False
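The pairing and validation logic can be tried on a synthetic signal without any dependencies. The sketch below is an illustration under stated assumptions, not the project's implementation: `local_extrema` is a naive scan that stands in for `scipy.signal.find_peaks` (it has no prominence test and resolves close extrema differently), and `pair_cycles` is a hypothetical helper that reuses the thresholds from the code above (10 to 200 frames, amplitude of at least 0.02, minimum spacing of 15 frames).

```python
import math


def local_extrema(values, min_distance=15, find_max=True):
    """Naive local-extremum scan; a simplified stand-in for scipy.signal.find_peaks."""
    sign = 1.0 if find_max else -1.0
    found = []
    for i in range(1, len(values) - 1):
        v = sign * values[i]
        if v > sign * values[i - 1] and v >= sign * values[i + 1]:
            if not found or i - found[-1] >= min_distance:
                found.append(i)
    return found


def pair_cycles(values, peaks, valleys, min_len=10, max_len=200, min_amp=0.02):
    """Build (start, top, end) cycles: peak -> valley -> next peak, then validate."""
    cycles = []
    for start, end in zip(peaks, peaks[1:]):
        between = [v for v in valleys if start < v < end]
        if not between:
            continue
        top = between[0]                      # smallest y = highest shoulder position
        duration = end - start
        amplitude = values[start] - values[top]
        if min_len <= duration <= max_len and amplitude >= min_amp:
            cycles.append((start, top, end))
    return cycles


# Synthetic shoulder y: one repetition every 60 frames, 300 frames in total.
y = [0.5 + 0.1 * math.cos(2 * math.pi * t / 60) for t in range(300)]
peaks = local_extrema(y, find_max=True)       # arms extended (largest y)
valleys = local_extrema(y, find_max=False)    # chin over bar (smallest y)
cycles = pair_cycles(y, peaks, valleys)
print(cycles)  # [(60, 90, 120), (120, 150, 180), (180, 210, 240)]
```

The first and last partial repetitions are dropped because a cycle needs a peak on both sides, which matches the peak-to-peak construction described above.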

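Landmark Annotation Results

The main program does roughly the following:

Initializes the analysis system

Reads the input video, extracts the keypoint data, and writes an output video annotated with the skeleton

Detects pull-up cycles from the extracted data

Runs a biomechanical analysis on each cycle

Saves the analysis results to a JSON file

Prints an analysis summary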
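The per-cycle biomechanical analysis step boils down to summary statistics over the angles of one cycle, with missing frames ignored. A minimal standard-library sketch of that idea follows; `summarize_angles` and the sample values are hypothetical and only illustrate the max/min/mean/std computation, with `statistics.pstdev` chosen because it is the population standard deviation, like the default of `np.std`.

```python
import math
import statistics


def summarize_angles(angles):
    """Max/min/mean/std of one cycle's angles, ignoring NaN frames."""
    valid = [a for a in angles if not math.isnan(a)]
    if not valid:
        return {"max": math.nan, "min": math.nan, "mean": math.nan, "std": math.nan}
    return {
        "max": max(valid),
        "min": min(valid),
        "mean": statistics.fmean(valid),
        "std": statistics.pstdev(valid),  # population std, like np.std's default
    }


# Hypothetical absolute torso angles for one cycle; one frame had no detection.
cycle_angles = [170.0, 172.0, float("nan"), 174.0, 176.0]
stats = summarize_angles(cycle_angles)
print(stats)
```

A small standard deviation within a cycle suggests the body line stayed steady, while a large one points to swinging during the repetition.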

Output Results

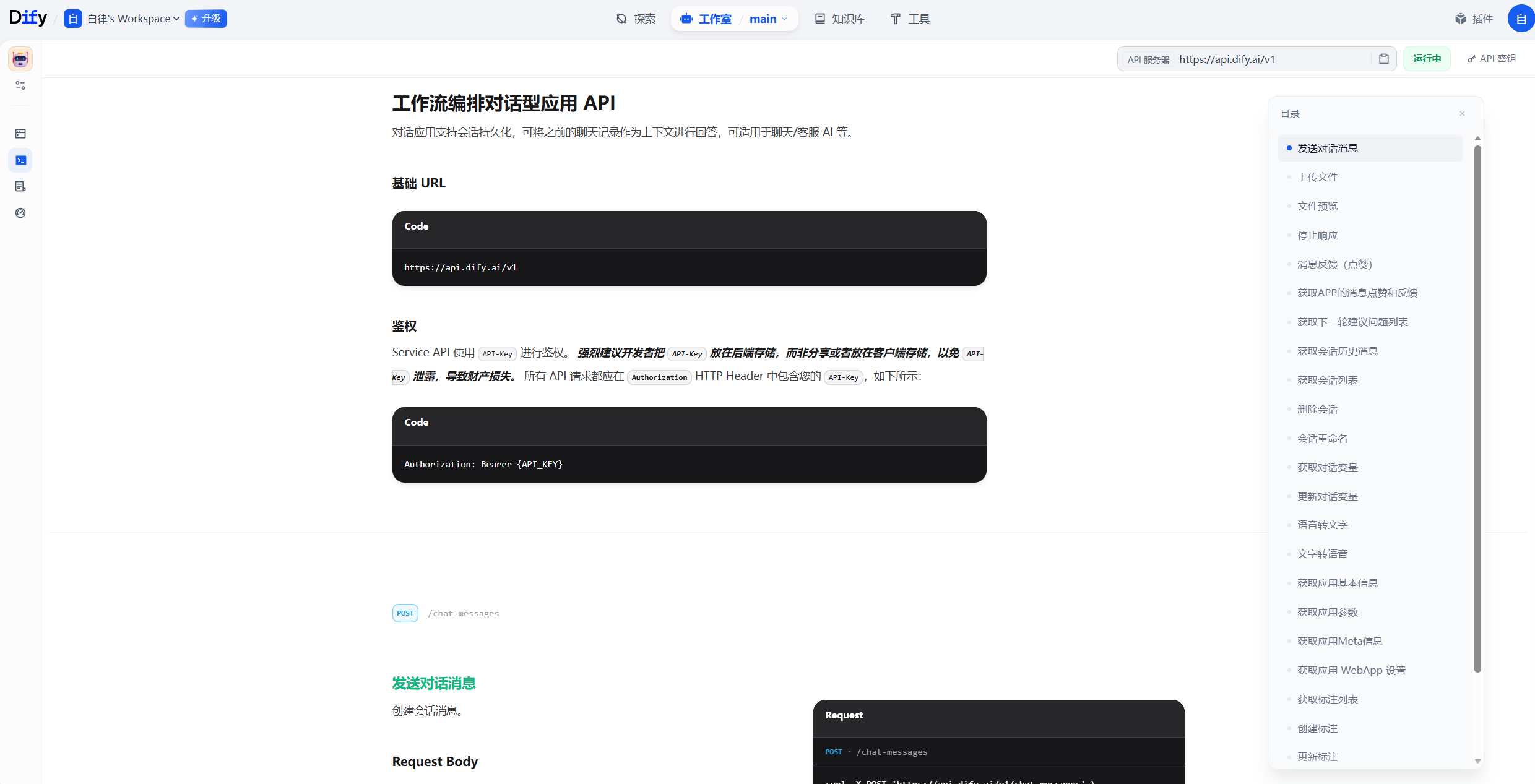

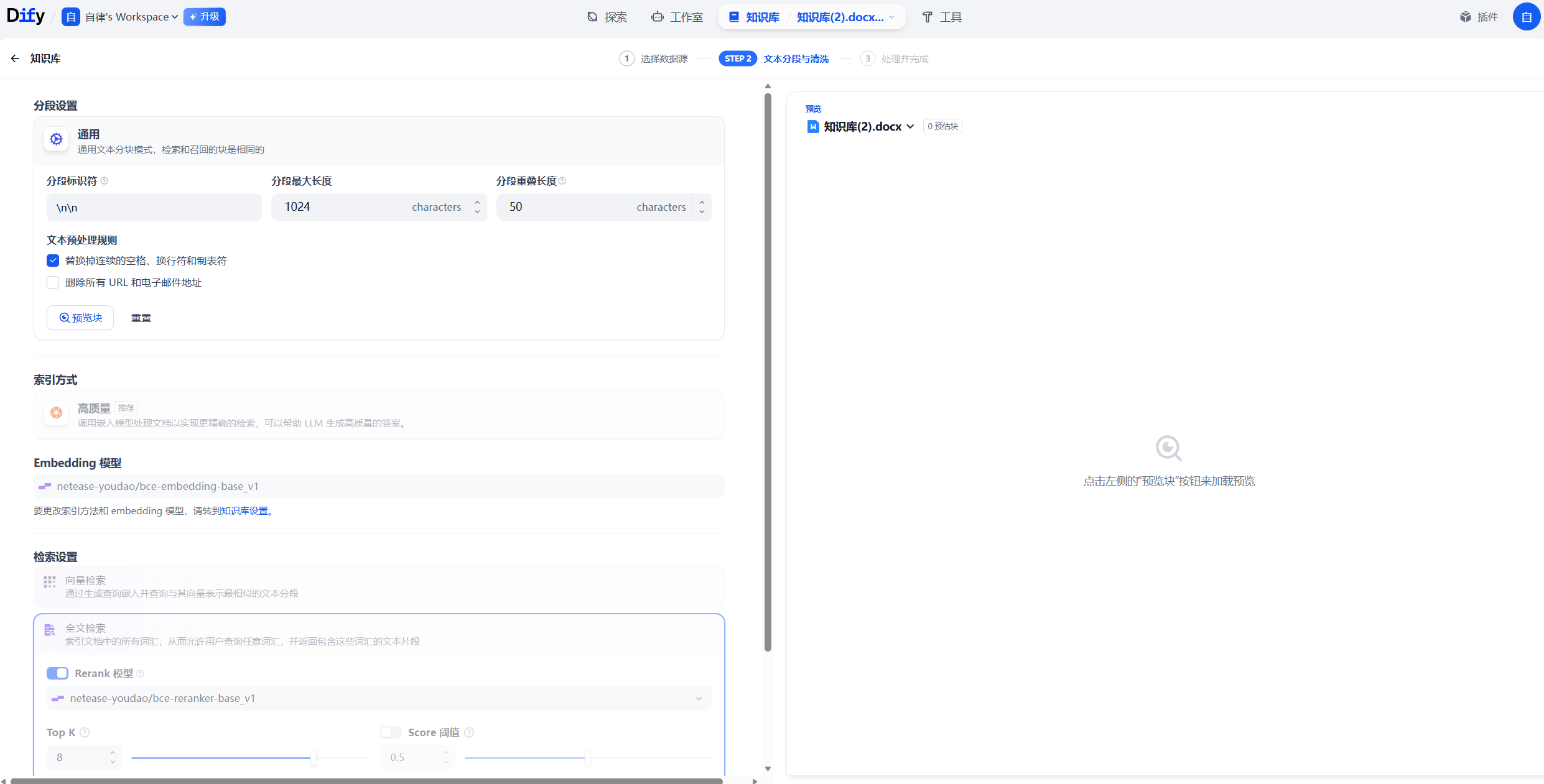

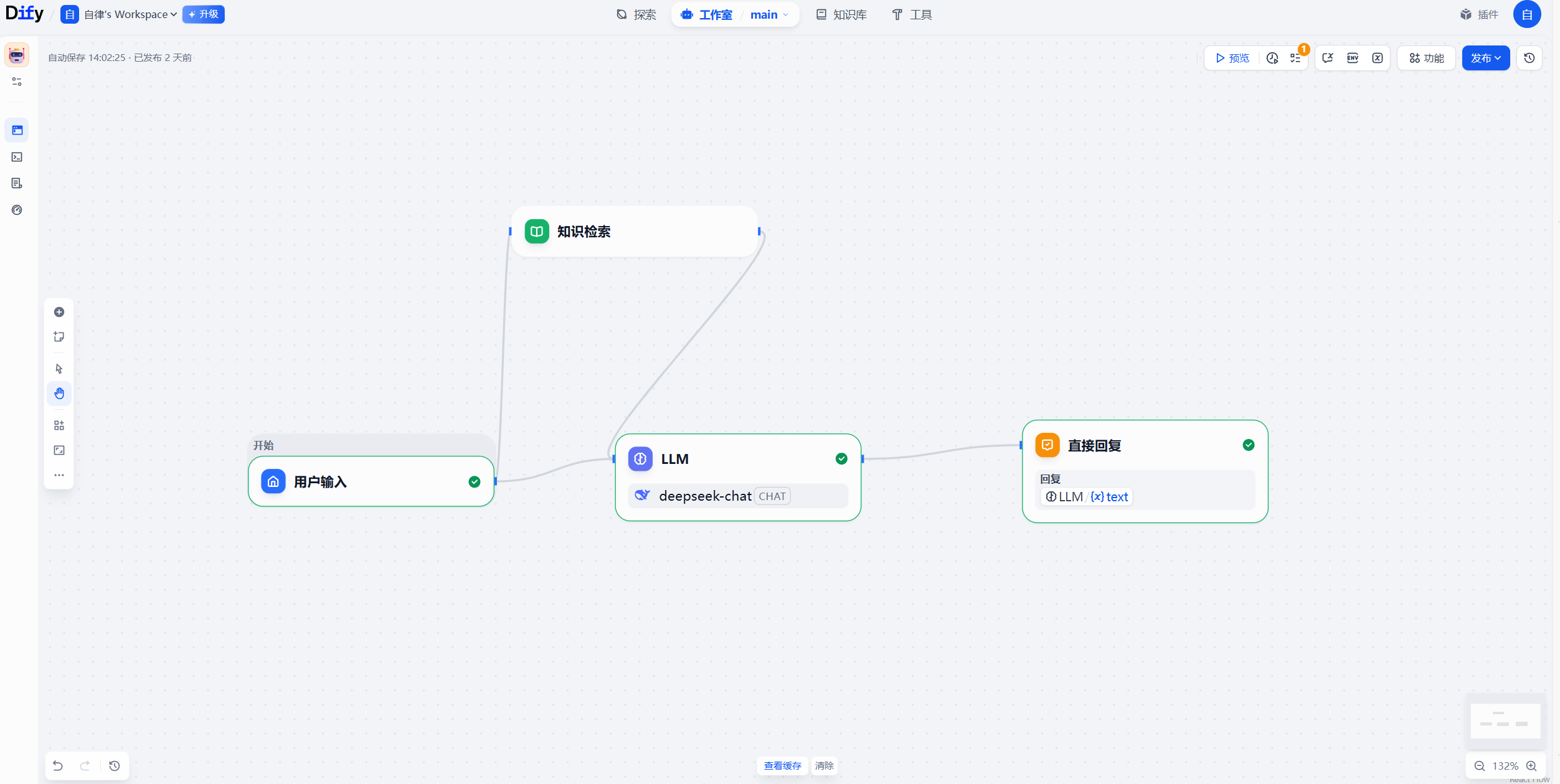

4.2 AI Knowledge Base Training

API interface configuration

LLM node configuration

Knowledge base processing

Workflow orchestration

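V. Reflections

Working through the code of this pull-up analysis system showed me how well computer vision and sports biomechanics fit together. Using MediaPipe pose detection, the system extracts key skeletal points from video, then applies data processing and analysis to turn an abstract movement into quantifiable biomechanical metrics, which gives movement quality assessment a scientific basis.

The code also deepened my understanding of modular programming. Initialization, keypoint extraction, skeleton drawing, metric calculation, and cycle detection each live in their own method. The structure is clear and the logic is easy to follow, which makes the code easier to understand and maintain and leaves room for later extension. In the data processing stage in particular, the code interpolates missing values, smooths the signal to reduce noise, and detects peaks and valleys. These steps make the analysis more reliable and showed me how much detail matters in data analysis.

The practicality of the system impressed me as well. It produces a video annotated with the skeleton, which shows the posture directly, and it quantifies the stability and correctness of the movement through statistics on the torso and lower-body angles, which gives targeted guidance for training. This way of applying technology to sports science showed me how broadly computing can be used in sports training and fitness coaching.

Finally, I learned that a useful tool has to balance technical depth with user needs. The detection confidence thresholds and the cycle validation conditions in the code are both trade-offs between technical accuracy and real usage scenarios. This reminds me to combine theory with practice in future study and work, so that the technology actually serves real needs.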

