102302139 尚子骐 数据采集与融合作业4

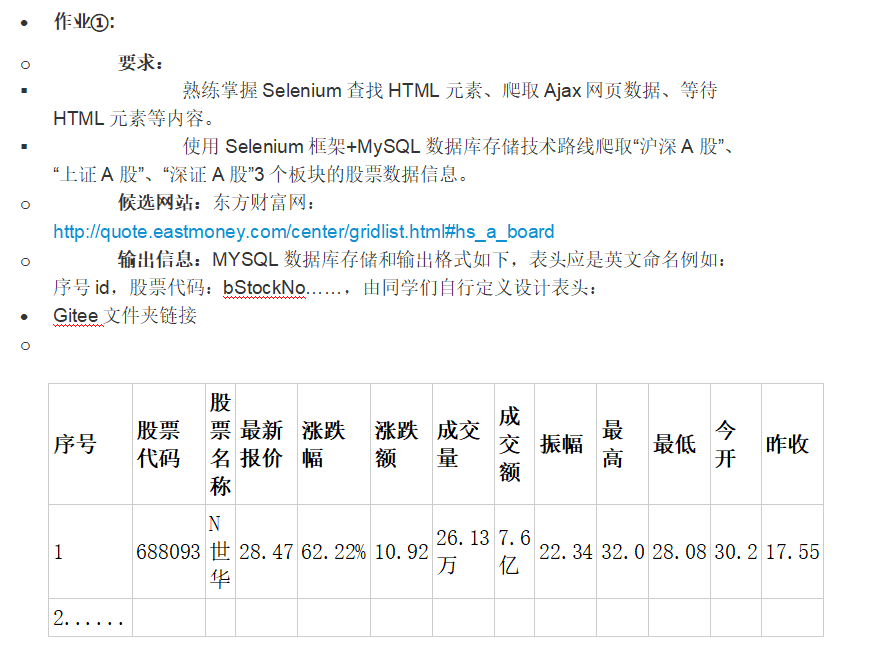

- 作业一

![image]()

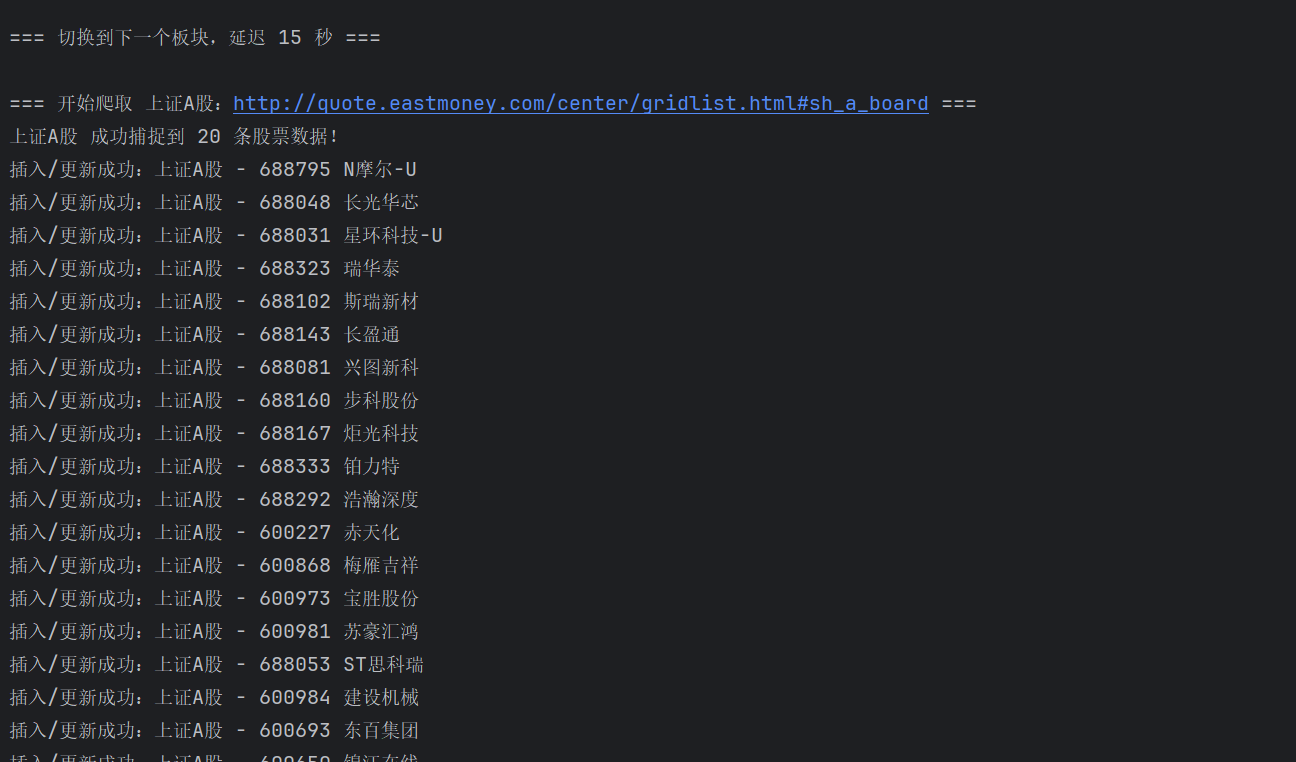

1. 完整代码及运行结果

点击查看代码

from selenium import webdriver

from selenium.webdriver.edge.service import Service

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.common.action_chains import ActionChains

import pymysql

import time

import random

# 数据库配置

MYSQL_CONFIG = {

"host": "localhost",

"user": "账户",

"password": "密码",

"database": "spider_homework",

"port": 3306,

"charset": "utf8mb4"

}

# 3个板块的URL配置(作业要求)

STOCK_BOARDS = {

"沪深A股": "http://quote.eastmoney.com/center/gridlist.html#hs_a_board",

"上证A股": "http://quote.eastmoney.com/center/gridlist.html#sh_a_board",

"深证A股": "http://quote.eastmoney.com/center/gridlist.html#sz_a_board"

}

# 等待时间配置(适配多板块爬取)

WAIT_TIME = 50

PAGE_DELAY = random.randint(10, 15)

SCROLL_DELAY = random.uniform(1.5, 2.5)

BOARD_DELAY = random.randint(12, 18)

# 数据库工具类

class MySQLHelper:

def __init__(self):

self.conn = None

self.cursor = None

self.connect()

self.create_table()

def connect(self):

try:

self.conn = pymysql.connect(**MYSQL_CONFIG)

self.cursor = self.conn.cursor()

print("MySQL数据库连接成功!")

except Exception as e:

print(f"MySQL连接失败:{str(e)}")

raise

def create_table(self):

create_sql = """

CREATE TABLE IF NOT EXISTS stock_data (

id INT AUTO_INCREMENT PRIMARY KEY,

bStockNo VARCHAR(20) NOT NULL COMMENT '股票代码',

bStockName VARCHAR(50) COMMENT '股票名称',

latestPrice DECIMAL(10,2) COMMENT '最新报价',

priceChangeRate VARCHAR(10) COMMENT '涨跌幅',

priceChange DECIMAL(10,2) COMMENT '涨跌额',

volume VARCHAR(20) COMMENT '成交量',

turnover VARCHAR(20) COMMENT '成交额',

amplitude VARCHAR(10) COMMENT '振幅',

highest DECIMAL(10,2) COMMENT '最高',

lowest DECIMAL(10,2) COMMENT '最低',

todayOpen DECIMAL(10,2) COMMENT '今开',

yesterdayClose DECIMAL(10,2) COMMENT '昨收',

board VARCHAR(20) NOT NULL COMMENT '所属板块(沪深A股/上证A股/深证A股)',

UNIQUE KEY uk_stock_board (bStockNo, board)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='东方财富网3个A股板块股票数据';

"""

try:

self.cursor.execute(create_sql)

self.conn.commit()

print("股票数据表创建/验证成功!")

except Exception as e:

self.conn.rollback()

print(f"创建数据表失败:{str(e)}")

raise

def insert_data(self, data):

insert_sql = """

INSERT INTO stock_data (

bStockNo, bStockName, latestPrice, priceChangeRate, priceChange,

volume, turnover, amplitude, highest, lowest, todayOpen, yesterdayClose, board

) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)

ON DUPLICATE KEY UPDATE

latestPrice=%s, priceChangeRate=%s, priceChange=%s, volume=%s, turnover=%s,

amplitude=%s, highest=%s, lowest=%s, todayOpen=%s, yesterdayClose=%s;

"""

try:

params = (

data["bStockNo"], data["bStockName"], data["latestPrice"], data["priceChangeRate"],

data["priceChange"], data["volume"], data["turnover"], data["amplitude"],

data["highest"], data["lowest"], data["todayOpen"], data["yesterdayClose"],

data["board"],

data["latestPrice"], data["priceChangeRate"], data["priceChange"],

data["volume"], data["turnover"], data["amplitude"], data["highest"],

data["lowest"], data["todayOpen"], data["yesterdayClose"]

)

self.cursor.execute(insert_sql, params)

self.conn.commit()

print(f"插入/更新成功:{data['board']} - {data['bStockNo']} {data['bStockName']}")

except Exception as e:

self.conn.rollback()

print(f"插入数据失败:{str(e)} - 数据:{data}")

def close(self):

if self.cursor:

self.cursor.close()

if self.conn:

self.conn.close()

print("MySQL数据库连接已关闭!")

# 爬虫核心类(3个板块自动爬取)

class StockSpider:

def __init__(self):

# 浏览器配置

edge_options = webdriver.EdgeOptions()

edge_options.add_experimental_option("excludeSwitches", ["enable-automation", "enable-logging"])

edge_options.add_experimental_option("useAutomationExtension", False)

edge_options.add_argument("start-maximized")

edge_options.add_argument(

"user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/142.0.0.0 Safari/537.36 Edg/142.0.3595.94")

edge_options.add_argument("--blink-settings=imagesEnabled=false")

# 驱动路径

driver_path = r"C:\Users\kuku\PycharmProjects\PythonProject6\msedgedriver.exe"

self.driver = webdriver.Edge(

service=Service(driver_path),

options=edge_options

)

# 隐藏webdriver

self.driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {

"source": "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"

})

self.mysql_helper = MySQLHelper()

def simulate_user_behavior(self):

"""模拟用户操作,降低反爬风险"""

try:

# 滚动页面

self.driver.execute_script("window.scrollBy(0, 400)")

time.sleep(SCROLL_DELAY)

self.driver.execute_script("window.scrollBy(0, 600)")

time.sleep(SCROLL_DELAY)

self.driver.execute_script("window.scrollTo(0, 200)")

time.sleep(SCROLL_DELAY)

# hover导航栏元素

nav_elements = self.driver.find_elements(By.XPATH, '//div[@class="nav"]//a')[:4]

for elem in nav_elements:

ActionChains(self.driver).move_to_element(elem).perform()

time.sleep(random.uniform(0.6, 1.2))

except Exception as e:

print(f"模拟用户行为失败:{e}")

pass

def handle_slider_verify(self, board_name):

"""处理滑块验证(仅针对沪深A股)"""

if board_name != "沪深A股":

return True # 其他板块不处理

print(f"\n{board_name} 触发滑块验证,请手动完成验证后按回车键继续...")

# 等待用户手动完成滑块验证

input("请完成滑块验证,然后按回车键继续爬取...")

# 验证后刷新页面,确保数据加载

self.driver.refresh()

time.sleep(PAGE_DELAY)

return True

def parse_single_board(self, board_name, board_url):

"""爬取单个板块数据(增加滑块验证处理)"""

print(f"\n=== 开始爬取 {board_name}:{board_url} ===")

self.driver.get(board_url)

time.sleep(PAGE_DELAY)

# 先处理滑块验证(仅沪深A股)

self.handle_slider_verify(board_name)

try:

self.simulate_user_behavior()

# 定位股票数据行

stock_rows = WebDriverWait(self.driver, WAIT_TIME).until(

EC.presence_of_all_elements_located((By.XPATH,

'//tr[count(td)>=13 and (starts-with(td[2], "0") or starts-with(td[2], "3") or starts-with(td[2], "6"))]'

))

)

print(f"{board_name} 成功捕捉到 {len(stock_rows)} 条股票数据!")

# 每个板块爬前100条

for row in stock_rows[:100]:

try:

cols = row.find_elements(By.XPATH, './td')

stock_data = {

"bStockNo": cols[1].text.strip(),

"bStockName": cols[2].text.strip(),

"latestPrice": self.safe_float(cols[3].text.strip()),

"priceChangeRate": cols[4].text.strip(),

"priceChange": self.safe_float(cols[5].text.strip()),

"volume": cols[6].text.strip(),

"turnover": cols[7].text.strip(),

"amplitude": cols[8].text.strip(),

"highest": self.safe_float(cols[9].text.strip()),

"lowest": self.safe_float(cols[10].text.strip()),

"todayOpen": self.safe_float(cols[11].text.strip()),

"yesterdayClose": self.safe_float(cols[12].text.strip()),

"board": board_name

}

# 过滤无效数据

if stock_data["bStockNo"] and len(stock_data["bStockNo"]) == 6:

self.mysql_helper.insert_data(stock_data)

time.sleep(random.uniform(0.5, 1.0))

except Exception as e:

print(f"{board_name} 解析单条数据失败:{str(e)}")

continue

except Exception as e:

print(f"{board_name} 爬取失败:{str(e)}")

# 兜底方案

all_codes = self.driver.find_elements(By.XPATH,

'//td[(starts-with(text(), "0") or starts-with(text(), "3") or starts-with(text(), "6")) and string-length(text())=6]'

)

print(f"{board_name} 兜底提取到 {len(all_codes)} 个股票代码")

def safe_float(self, text):

"""安全转换浮点数"""

try:

cleaned_text = text.replace(',', '').strip()

if cleaned_text in ['', '-', '--']:

return 0.0

return float(cleaned_text)

except:

return 0.0

def run(self):

"""按顺序爬取3个板块"""

try:

for board_name, board_url in STOCK_BOARDS.items():

self.parse_single_board(board_name, board_url)

# 板块之间延迟

if board_name != list(STOCK_BOARDS.keys())[-1]:

print(f"\n=== 切换到下一个板块,延迟 {BOARD_DELAY} 秒 ===")

time.sleep(BOARD_DELAY)

print("\n所有板块爬取完成!")

print("数据已存入 MySQL 的 stock_data 表,board 字段标记板块名称")

finally:

self.driver.quit()

self.mysql_helper.close()

if __name__ == "__main__":

print("=== 开始执行 A 股 3 个板块爬取任务 ===")

print("爬取板块:沪深A股、上证A股、深证A股")

print("存储位置:MySQL -> spider_homework -> stock_data")

print("任务开始,请勿关闭程序...\n")

spider = StockSpider()

spider.run()

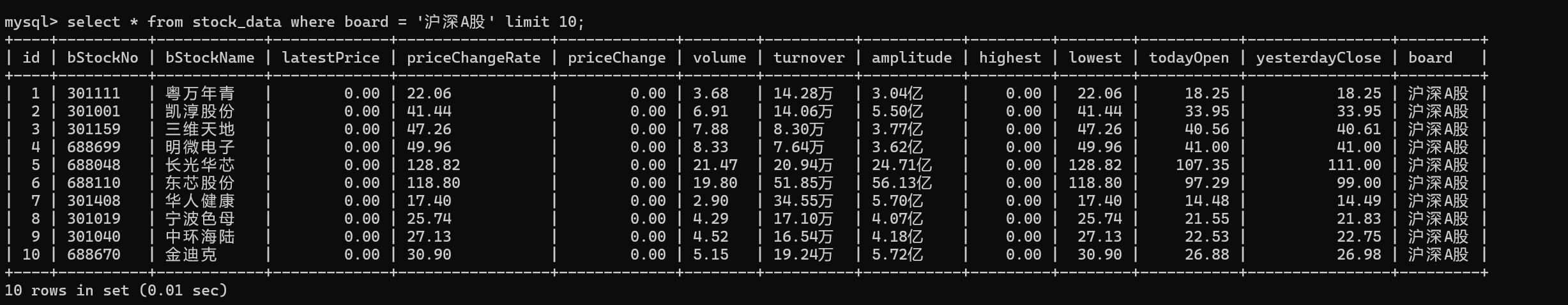

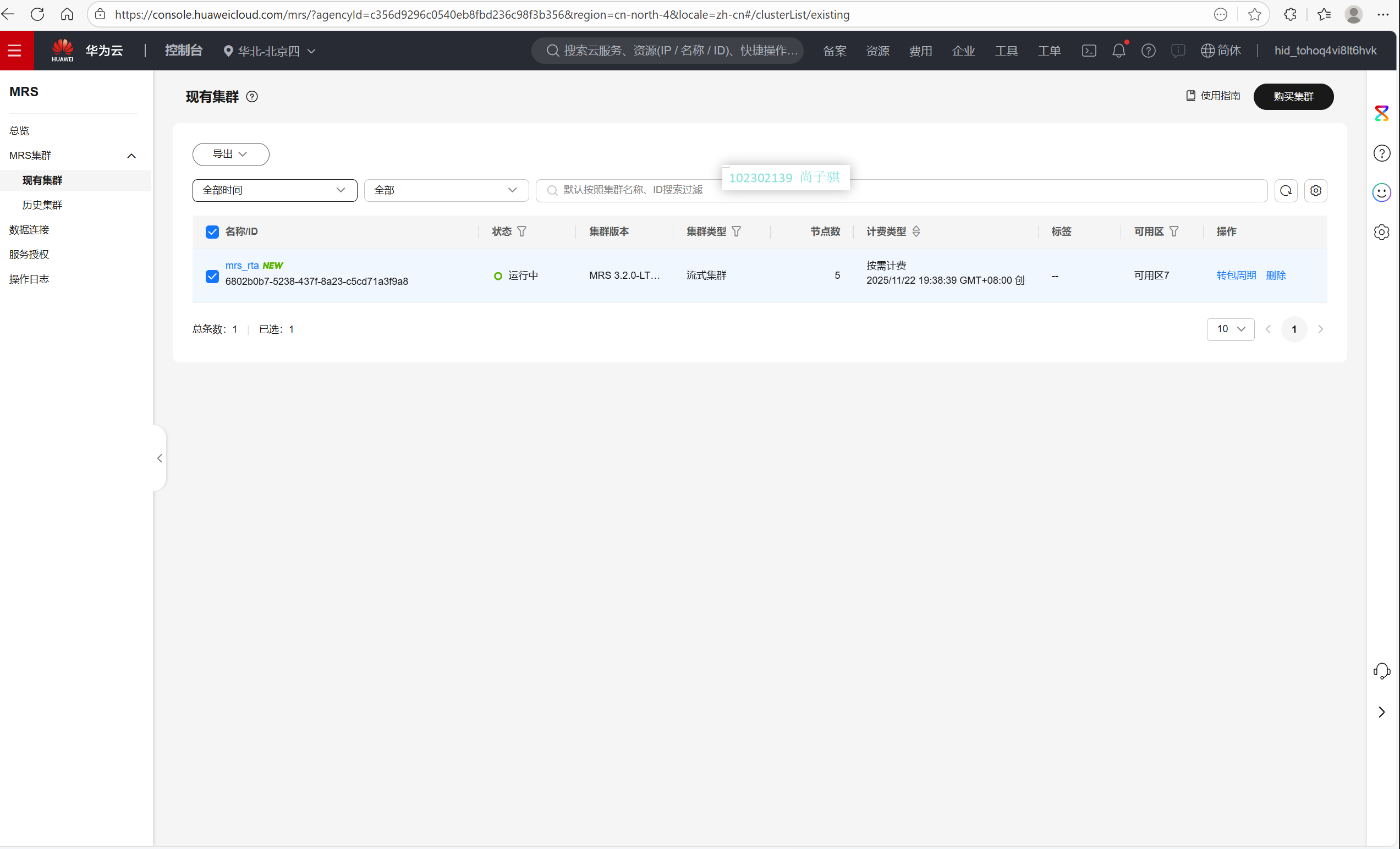

数据库验证

查看沪深A股前十数据

2. 心得体会

配置数据库以及URL

点击查看代码

# 数据库配置(root/123456)

MYSQL_CONFIG = {

"host": "localhost",

"user": "账户",

"password": "密码",

"database": "spider_homework",

"port": 3306,

"charset": "utf8mb4"

}

# 3个板块的URL配置(作业要求)

STOCK_BOARDS = {

"沪深A股": "http://quote.eastmoney.com/center/gridlist.html#hs_a_board",

"上证A股": "http://quote.eastmoney.com/center/gridlist.html#sh_a_board",

"深证A股": "http://quote.eastmoney.com/center/gridlist.html#sz_a_board"

}

# 等待时间配置(适配多板块爬取)

WAIT_TIME = 50

PAGE_DELAY = random.randint(10, 15)

SCROLL_DELAY = random.uniform(1.5, 2.5)

BOARD_DELAY = random.randint(12, 18)

在爬虫的过程中,由于切换界面过快会导致爬取失败,然后在进入页面会有广告验证,我设置了等待时间,由我手动操作刷新页面的操作,完成操作后回到终端回车继续爬虫,还怕网站反爬所以有弄自动滑动页面,模仿真人浏览网页

模仿用户

def simulate_user_behavior(self): # 模拟滚动页面(上下滚动,模拟用户浏览) self.driver.execute_script("window.scrollBy(0, 400)") time.sleep(SCROLL_DELAY) # 模拟hover导航栏(模拟用户交互) nav_elements = self.driver.find_elements(By.XPATH, '//div[@class="nav"]//a')[:4] for elem in nav_elements: ActionChains(self.driver).move_to_element(elem).perform() time.sleep(random.uniform(0.6, 1.2))

验证活动处理

def handle_slider_verify(self, board_name): if board_name != "沪深A股": return True print(f"\n{board_name} 触发滑块验证,请手动完成验证后按回车键继续...") input("请完成滑块验证,然后按回车键继续爬取...") self.driver.refresh() # 验证后刷新页面确保数据加载 time.sleep(PAGE_DELAY) return True

核心爬虫逻辑

浏览器初始化Selenium 配置 反爬策略实现,用户行为模拟 滑块验证处理针对沪深 A 股 3 个板块数据爬取与解析 数据传递给 MySQLHelper 存储

点击查看代码

def parse_single_board(self, board_name, board_url):

self.driver.get(board_url) # 访问板块URL

time.sleep(PAGE_DELAY)

self.handle_slider_verify(board_name) # 处理滑块验证

self.simulate_user_behavior() # 模拟用户行为

# 关键:Selenium显式等待(等待Ajax动态加载完成)

stock_rows = WebDriverWait(self.driver, WAIT_TIME).until(

EC.presence_of_all_elements_located((By.XPATH,

'//tr[count(td)>=13 and (starts-with(td[2], "0") or starts-with(td[2], "3") or starts-with(td[2], "6"))]'

))

)

print(f"{board_name} 成功捕捉到 {len(stock_rows)} 条股票数据!")

# 解析单条股票数据(按题目要求提取13个字段)

for row in stock_rows[:100]: # 每个板块爬前100条

cols = row.find_elements(By.XPATH, './td')

stock_data = {

"bStockNo": cols[1].text.strip(), # 股票代码(第2列)

"bStockName": cols[2].text.strip(), # 股票名称(第3列)

"latestPrice": self.safe_float(cols[3].text.strip()), # 最新报价(第4列)

# ... 其余字段按列索引提取(严格对应表格结构)

"board": board_name # 标记所属板块

}

# 过滤无效数据(股票代码为6位)

if stock_data["bStockNo"] and len(stock_data["bStockNo"]) == 6:

self.mysql_helper.insert_data(stock_data) # 存入数据库

time.sleep(random.uniform(0.5, 1.0)) # 单条数据延迟(反爬)

整体思路

1)通过STOCK_BOARDS 配置 3 个板块 URL,run方法遍历爬取3 个板块沪深 A 股、上证 A 股、深证 A 股

2)全程使用 Selenium 控制浏览器,处理 Ajax 动态加载

3)使用 XPATH 定位(By.XPATH),精准匹配股票数据行和字段,查找 HTML 元素

4)在面对第一页爬取有活动滑块验证,采取采用WebDriverWait显式等待,确保 Ajax 加载完成后解析

5)使用MySQLHelper类封装建表、插入、更新逻辑

6)输出id、股票代码、最新报价等爬取到的数据,在本机数据库验证数据内容

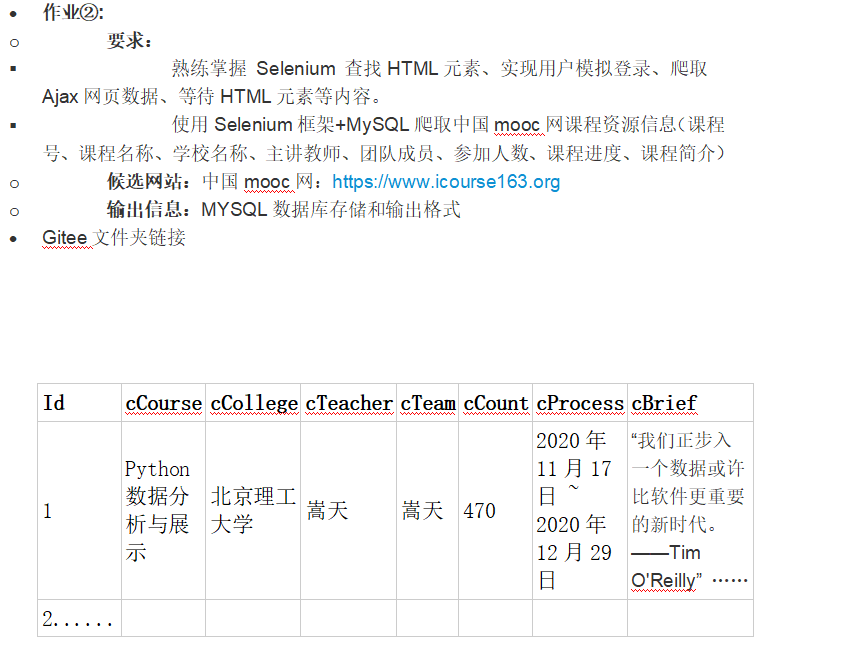

- 作业二

![image]()

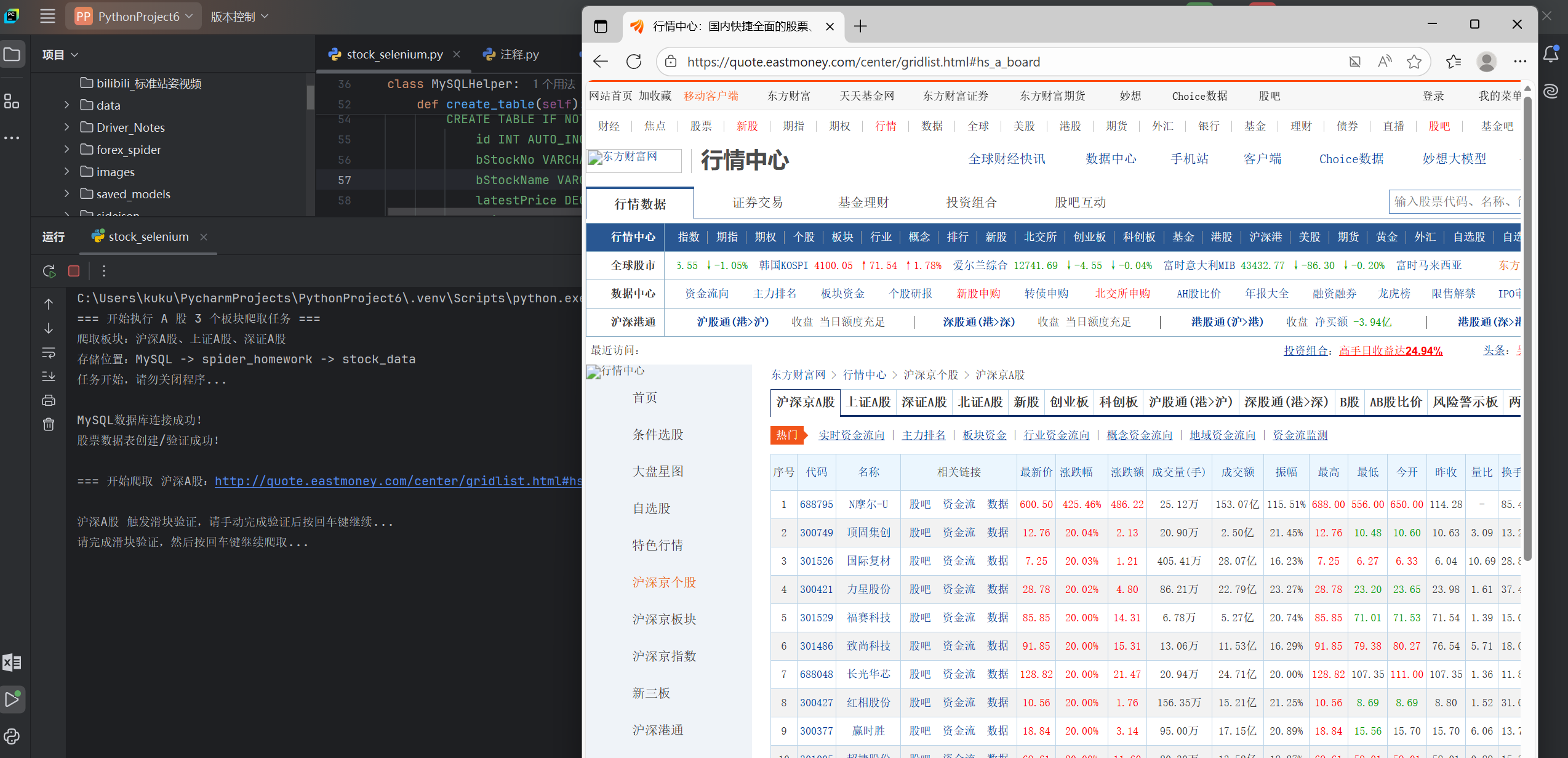

1. 完整代码及运行结果

点击查看代码

plaintext

import time

import pymysql

from selenium import webdriver

from selenium.webdriver.edge.options import Options

from selenium.webdriver.edge.service import Service

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

import re

# 核心配置

DB_CONFIG = {

'host': 'localhost', 'port': 3306, 'user': '用户',

'password': '密码', 'charset': 'utf8mb4'

}

EDGE_DRIVER_PATH = r"C:\Users\kuku\PycharmProjects\PythonProject6\msedgedriver.exe"

FZU_COURSE_URL = "https://www.icourse163.org/university/FZU#/c"

crawled_course_ids = set()

# 浏览器配置

edge_options = Options()

edge_options.add_argument('--start-maximized')

edge_options.add_experimental_option("excludeSwitches", ["enable-automation", "enable-logging"])

edge_options.add_experimental_option('useAutomationExtension', False)

driver = webdriver.Edge(service=Service(EDGE_DRIVER_PATH), options=edge_options)

wait = WebDriverWait(driver, 30)

def setup_database():

"""初始化数据库(移除cBrief字段)"""

conn = pymysql.connect(**DB_CONFIG)

cursor = conn.cursor()

cursor.execute("CREATE DATABASE IF NOT EXISTS mooc_courses")

cursor.execute("USE mooc_courses")

cursor.execute("DROP TABLE IF EXISTS course_info")

cursor.execute('''

CREATE TABLE IF NOT EXISTS course_info (

id INT AUTO_INCREMENT PRIMARY KEY,

cCourse VARCHAR(255) NOT NULL,

cCollege VARCHAR(100) NOT NULL,

cTeacher VARCHAR(100) NOT NULL DEFAULT '未公开',

cTeam TEXT NOT NULL,

cCount INT NOT NULL DEFAULT 0,

cProcess VARCHAR(100) NOT NULL DEFAULT '未公开',

course_id VARCHAR(50) UNIQUE NOT NULL,

course_url VARCHAR(255) UNIQUE NOT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

''')

conn.commit()

print("数据库初始化完成")

return conn, cursor

def manual_login_prompt():

"""手动登录提示(无自动检测,完全手动控制)"""

print("=" * 60)

print("请按以下步骤操作:")

print("1. 浏览器已打开MOOC登录页,请手动输入账号和密码登录")

print("2. 登录成功后,手动访问福州大学课程页:")

print(f" {FZU_COURSE_URL}")

print("3. 确保成功进入课程列表页(能看到课程卡片)")

print("4. 回到PyCharm控制台,输入 'y' 并回车,程序开始爬取!")

print("=" * 60)

# 打开登录页

driver.get("https://www.icourse163.org/login.htm")

# 等待用户输入确认(无限等待,直到用户手动触发)

while True:

user_input = input("已完成登录并进入课程页?请输入 'y' 开始爬取:")

if user_input.strip().lower() == 'y':

print("开始爬取流程...")

break

else:

print("输入错误,请输入 'y' 继续,或完成登录和页面跳转后再操作!")

def extract_course_urls():

"""提取课程URL"""

print("\n开始提取课程URL(滚动加载5次)...")

# 确保当前在课程页

driver.get(FZU_COURSE_URL)

time.sleep(5)

# 滚动加载

for i in range(5):

print(f"第{i + 1}次滚动加载...")

driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

time.sleep(4)

# 提取URL

course_pattern = re.compile(r'https://www\.icourse163\.org/course/(FZU-\d+)')

all_links = driver.find_elements(By.TAG_NAME, "a")

course_urls = []

for link in all_links:

try:

url = link.get_attribute("href")

if url and course_pattern.match(url):

course_id = course_pattern.search(url).group(1)

if course_id not in crawled_course_ids:

course_urls.append((course_id, url))

crawled_course_ids.add(course_id)

except:

continue

course_urls = list(set(course_urls))

print(f"成功提取{len(course_urls)}门课程URL!")

return course_urls

def get_course_detail(course_id, course_url):

"""爬取7个字段(移除课程简介)"""

print(f"\n爬取课程:{course_url}")

info = {

"course_id": course_id, "cCourse": "未知课程", "cCollege": "福州大学",

"cTeacher": "未公开", "cTeam": "无", "cCount": 0,

"cProcess": "未公开", "course_url": course_url

}

try:

driver.get(course_url)

time.sleep(6)

driver.execute_script("window.scrollTo(0, 1200);")

time.sleep(3)

page_source = driver.page_source

# 1. 课程名称

try:

info["cCourse"] = wait.until(

EC.visibility_of_element_located((By.CSS_SELECTOR, ".course-title"))).text.strip()

except:

info["cCourse"] = driver.find_element(By.TAG_NAME, "h1").text.strip()

# 2. 主讲教师+团队(去重)

try:

teachers = [t.text.strip() for t in

driver.find_element(By.CSS_SELECTOR, ".m-teachers_teacher-list").find_elements(By.CSS_SELECTOR,

"h3") if

t.text.strip()]

teachers = list(set(teachers))

if teachers:

info["cTeacher"] = teachers[0]

info["cTeam"] = "、".join(teachers[1:]) if len(teachers) > 1 else "无"

except:

teachers = re.findall(r'<h3 class="[^"]*">([\u4e00-\u9fa5]{2,4})</h3>', page_source)

teachers = list(set(teachers))

if teachers:

info["cTeacher"] = teachers[0]

info["cTeam"] = "、".join(teachers[1:]) if len(teachers) > 1 else "无"

# 3. 参加人数(支持万单位)

try:

count_text = driver.find_element(By.CSS_SELECTOR,

".enroll-count, .u-count, .course-enroll-info .count").text.strip()

if "万" in count_text:

num = float(re.search(r"\d+(\.\d+)?", count_text).group())

info["cCount"] = int(num * 10000)

else:

count_match = re.search(r'(\d+)', count_text)

info["cCount"] = int(count_match.group(1)) if count_match else 0

except:

count_match = re.search(r'(\d+)人已参加|(\d+)人报名|(\d+)人学习', page_source)

info["cCount"] = int(

count_match.group(1) or count_match.group(2) or count_match.group(3)) if count_match else 0

# 4. 课程进度(开课时间范围)

try:

process_text = driver.find_element(By.XPATH,

"//div[contains(text(), '开课时间')]/following-sibling::div").text.strip()

if "~" not in process_text:

try:

end_time = driver.find_element(By.XPATH,

"//div[contains(text(), '结束时间')]/following-sibling::div").text.strip()

process_text = f"{process_text} ~ {end_time}"

except:

pass

info["cProcess"] = process_text

except:

process_match = re.search(

r'(\d{4}年\d{2}月\d{2}日)\s*-\s*(\d{4}年\d{2}月\d{2}日)|(\d{4}年\d{2}月\d{2}日)\s*~\s*(\d{4}年\d{2}月\d{2}日)',

page_source)

if process_match:

info[

"cProcess"] = f"{process_match.group(1) or process_match.group(3)} ~ {process_match.group(2) or process_match.group(4)}"

print(f"爬取成功:{info['cCourse']} | 主讲:{info['cTeacher']} | 人数:{info['cCount']} | 时间:{info['cProcess']}")

return info

except Exception as e:

print(f"爬取失败:{str(e)[:50]}...")

return None

def save_course_to_db(conn, cursor, info):

"""保存数据库(移除cBrief字段)"""

if not info:

return

try:

cursor.execute('''

INSERT INTO course_info (cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, course_id, course_url)

VALUES (%s, %s, %s, %s, %s, %s, %s, %s)

''', (info["cCourse"], info["cCollege"], info["cTeacher"], info["cTeam"],

info["cCount"], info["cProcess"], info["course_id"], info["course_url"]))

conn.commit()

except Exception as e:

print(f"保存失败:{e}")

conn.rollback()

def display_result(cursor):

"""规整表格输出(无课程简介,整齐排列)"""

cursor.execute("SELECT id, cCourse, cCollege, cTeacher, cTeam, cCount, cProcess FROM course_info")

records = cursor.fetchall()

# 定义固定字段宽度,确保对齐

column_widths = {

'id': 4,

'cCourse': 30,

'cCollege': 12,

'cTeacher': 10,

'cTeam': 18,

'cCount': 8,

'cProcess': 28

}

# 计算总宽度

total_width = sum(column_widths.values()) + 6 # 6个字段间隔

print(f"\n" + "-" * total_width)

print(f"MOOC课程爬取结果(共{len(records)}门)".center(total_width))

print("-" * total_width)

# 表头(中文表头,左对齐)

header = (

f"{'序号':<{column_widths['id']}} "

f"{'课程名称':<{column_widths['cCourse']}} "

f"{'学校':<{column_widths['cCollege']}} "

f"{'主讲教师':<{column_widths['cTeacher']}} "

f"{'团队成员':<{column_widths['cTeam']}} "

f"{'参加人数':<{column_widths['cCount']}} "

f"{'课程时间':<{column_widths['cProcess']}}"

)

print(header)

print("-" * total_width)

# 数据行(严格按宽度截断/填充,确保整齐)

for r in records:

# 课程名称

cCourse = r[1][:column_widths['cCourse'] - 3] + "..." if len(r[1]) > column_widths['cCourse'] else r[1].ljust(

column_widths['cCourse'])

# 学校

cCollege = r[2].ljust(column_widths['cCollege'])

# 主讲教师

cTeacher = r[3].ljust(column_widths['cTeacher'])

# 团队成员

cTeam = r[4][:column_widths['cTeam'] - 3] + "..." if len(r[4]) > column_widths['cTeam'] else r[4].ljust(

column_widths['cTeam'])

# 参加人数(右对齐更美观)

cCount = str(r[5]).rjust(column_widths['cCount'])

# 课程时间

cProcess = r[6][:column_widths['cProcess'] - 3] + "..." if len(r[6]) > column_widths['cProcess'] else r[

6].ljust(column_widths['cProcess'])

# 输出行

print(

f"{r[0]:<{column_widths['id']}} "

f"{cCourse} "

f"{cCollege} "

f"{cTeacher} "

f"{cTeam} "

f"{cCount} "

f"{cProcess}"

)

print("-" * total_width)

print(f"统计:共{len(records)}门课程 | 累计参加人数{sum([r[5] for r in records])}人".center(total_width))

print("-" * total_width)

# 主逻辑

if __name__ == "__main__":

print("=" * 50)

print("中国大学MOOC爬虫")

print("=" * 50)

# 1. 初始化数据库

conn, cursor = setup_database()

# 2. 手动登录+页面跳转

manual_login_prompt()

# 3. 提取课程URL

course_list = extract_course_urls()

# 4. 爬取并保存

if course_list:

print(f"\n开始爬取{len(course_list)}门课程...")

for cid, url in course_list:

save_course_to_db(conn, cursor, get_course_detail(cid, url))

time.sleep(3)

# 5. 规整格式输出结果

display_result(cursor)

# 6. 关闭资源

driver.quit()

cursor.close()

conn.close()

print(f"\n所有操作完成!数据库存储{len(course_list)}门课程")

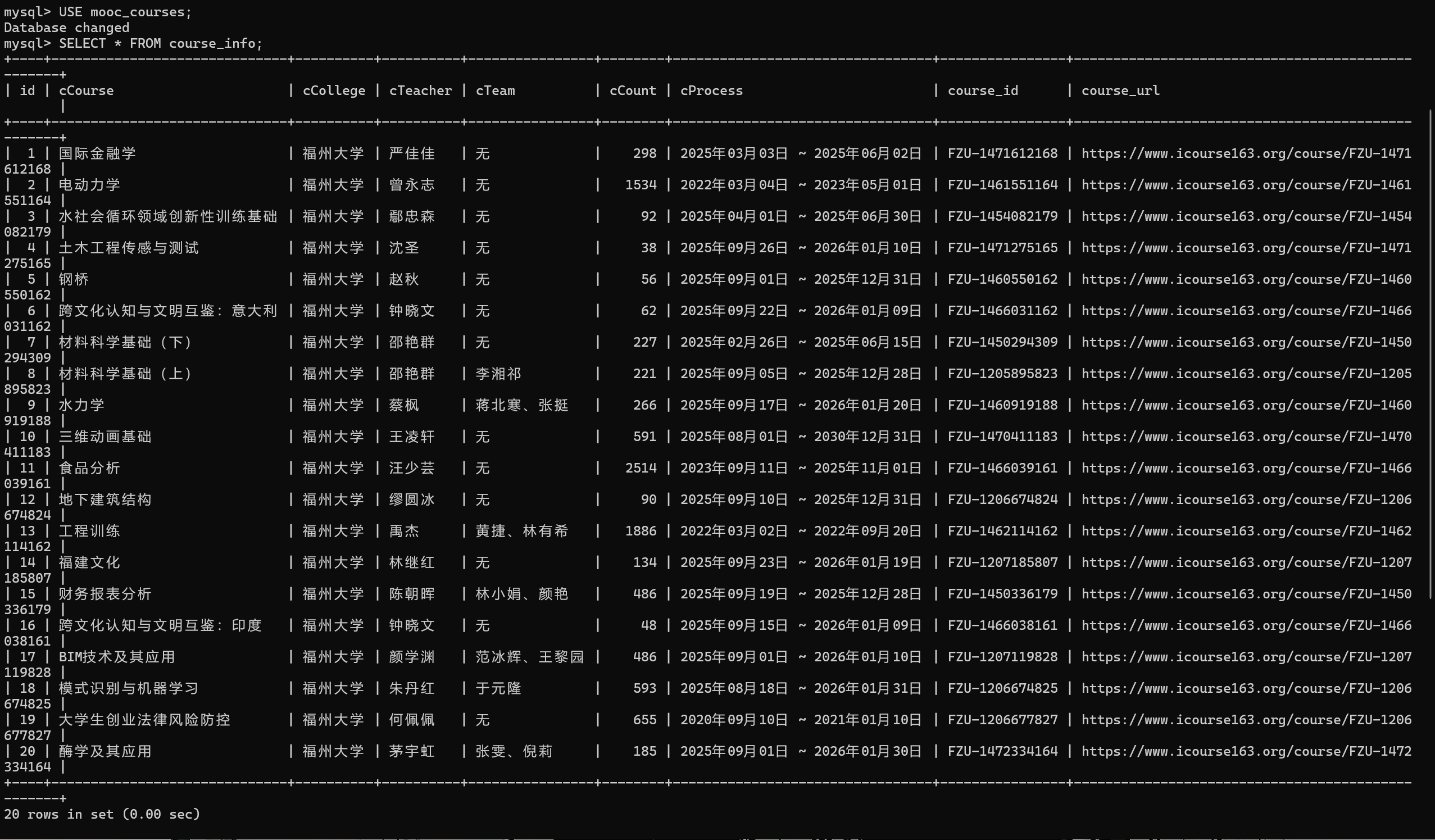

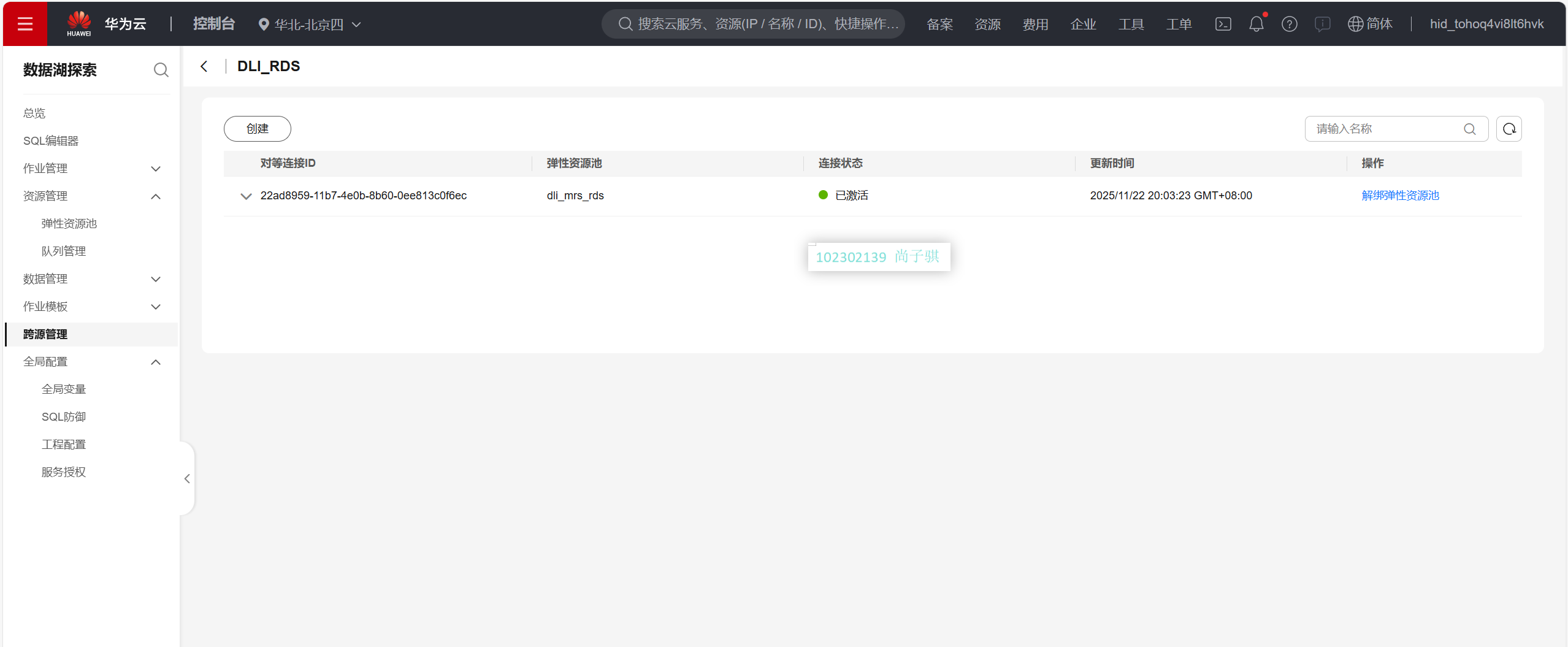

数据库验证

2. 心得体会

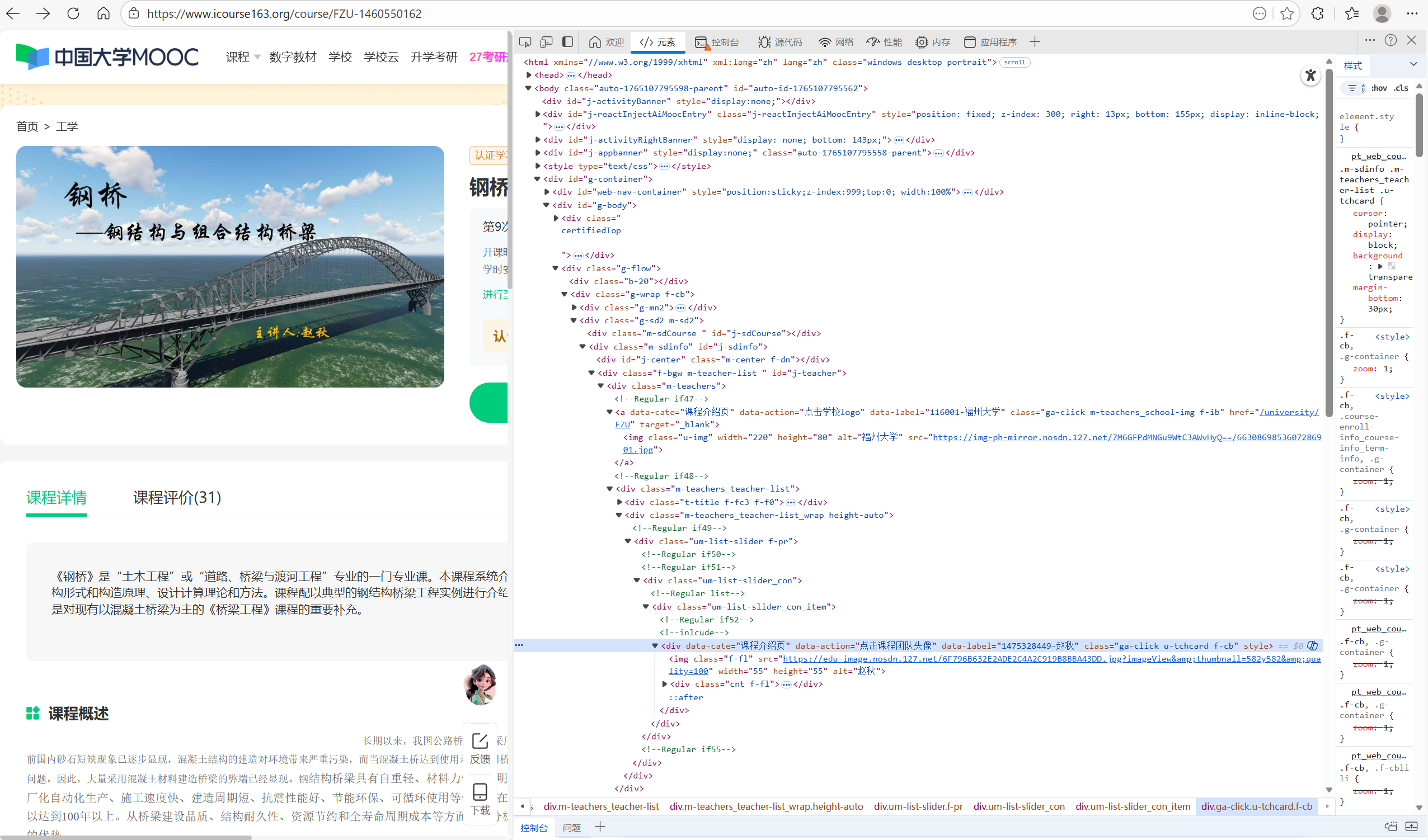

用F12调用查找教师元素精确页面源码,教师姓名在 h3.cnf-f3.fc3 这个元素中

但是爬取后爬不到教师信息,上网查询资料知道:中国大学 MOOC 会检测 Selenium 爬虫,动态内容教师以及开课时间被隐藏,导致精准选择器失效,选择器过于严格,用了太精确的 h3.cnf-f3.fc3,页面结构稍有变化就匹配失败,然后采用CSS 选择器直接定位 + 正则解析页面源码,而且mooc反爬更严格,我采用了和第一道题同一种方法,登录后再再返回自行确认运行代码,并且自动滑动网页模拟用户行为

CSS 选择器直接定位

try: # CSS选择器优先提取(多选择器兼容不同页面) count_text = driver.find_element(By.CSS_SELECTOR, ".enroll-count, .u-count, .course-enroll-info .count").text.strip() if "万" in count_text: num = float(re.search(r"\d+(\.\d+)?", count_text).group()) info["cCount"] = int(num * 10000) else: count_match = re.search(r'(\d+)', count_text) info["cCount"] = int(count_match.group(1)) if count_match else 0 except: # 正则兜底(从源码提取) count_match = re.search(r'(\d+)人已参加|(\d+)人报名|(\d+)人学习', page_source) info["cCount"] = int(count_match.group(1) or count_match.group(2) or count_match.group(3)) if count_match else 0

正则解析页面源码

try: # CSS+XPath优先提取 process_text = driver.find_element(By.XPATH, "//div[contains(text(), '开课时间')]/following-sibling::div").text.strip() if "~" not in process_text: end_time = driver.find_element(By.XPATH, "//div[contains(text(), '结束时间')]/following-sibling::div").text.strip() process_text = f"{process_text} ~ {end_time}" info["cProcess"] = process_text except: # 正则兜底(匹配源码中的时间格式) process_match = re.search(r'(\d{4}年\d{2}月\d{2}日)\s*-\s*(\d{4}年\d{2}月\d{2}日)|(\d{4}年\d{2}月\d{2}日)\s*~\s*(\d{4}年\d{2}月\d{2}日)', page_source) if process_match: info["cProcess"] = f"{process_match.group(1) or process_match.group(3)} ~ {process_match.group(2) or process_match.group(4)}"

整体思路

1)通过配置固定课程页 URL(福州大学 MOOC 课程页),手动登录后进入目标页面,滚动加载完成 Ajax 动态数据的获取

2) 全程使用 Selenium 控制浏览器,处理课程列表的 Ajax 滚动加载,确保获取完整课程链接

3) 采用「CSS 选择器优先定位 + 正则解析源码兜底」的方式,精准匹配课程名称、教师、人数等字段,兼容不同页面结构

4) 对登录、元素加载等环节采用显式等待(WebDriverWait),避免因页面加载延迟导致的元素定位失败

5) 封装数据库操作逻辑,自动完成建库、建表、数据插入,确保爬取结果持久化存储

6) 输出序号、课程名称、参加人数等核心字段,在本地数据库验证爬取数据的完整性

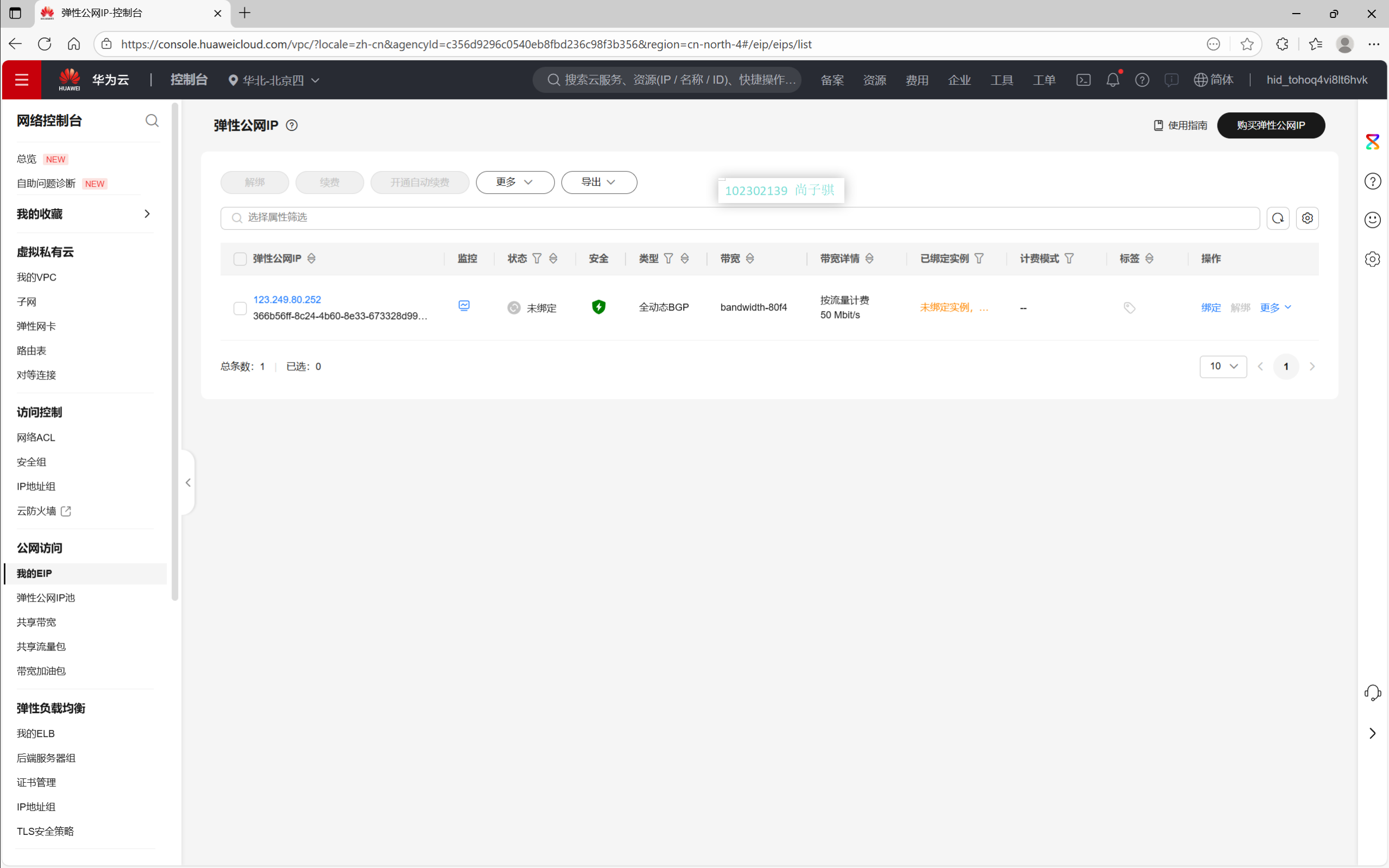

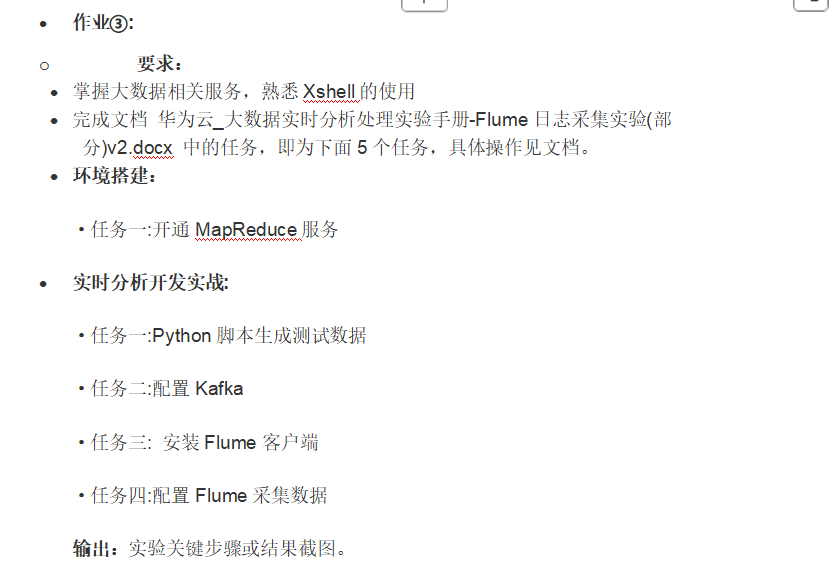

- 作业三

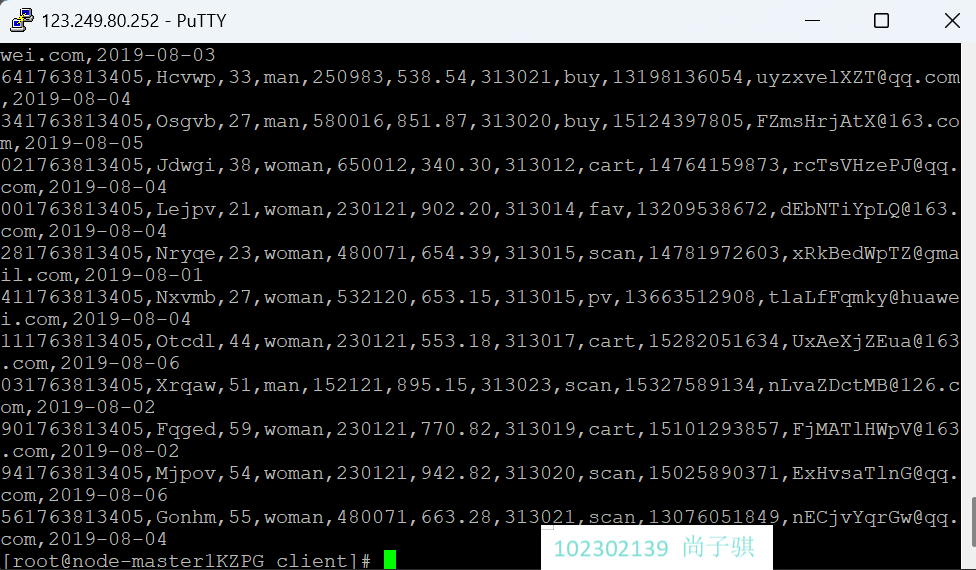

![image]()

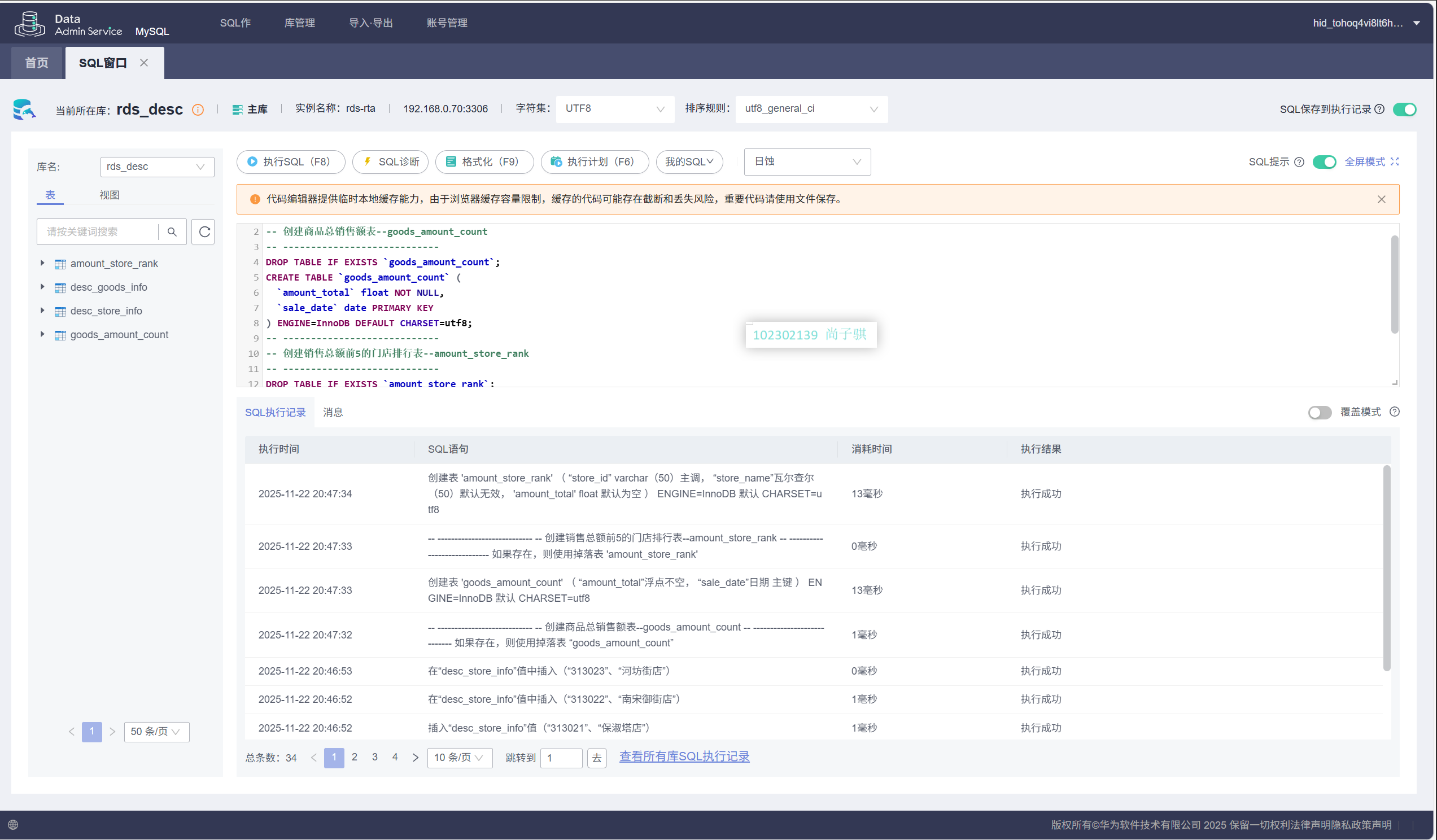

环境搭建:

任务一:开通MapReduce服务

实时分析开发实战:

任务一:Python脚本生成测试数据

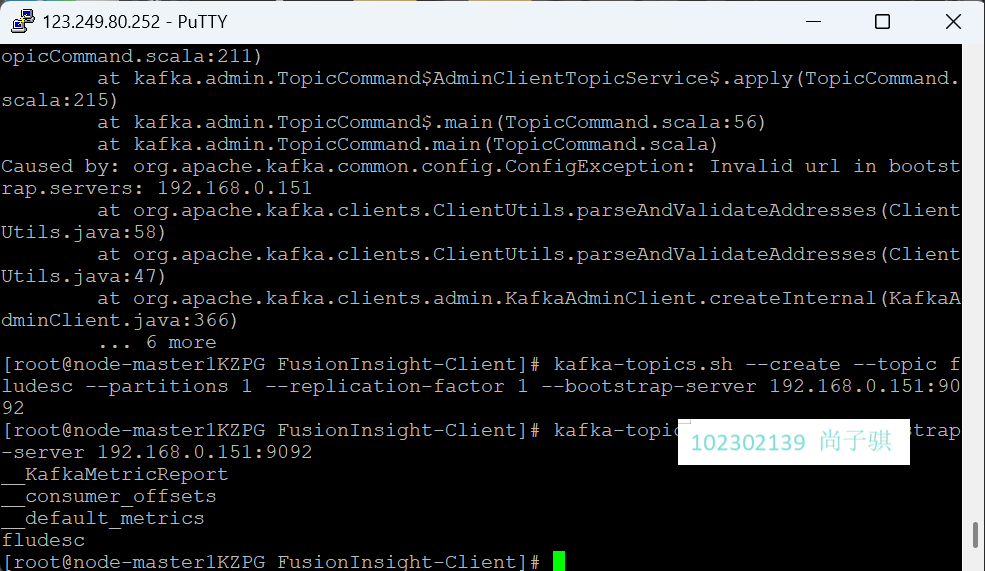

任务二:配置Kafka

任务三: 安装Flume客户端

任务四:配置Flume采集数据

创建数据库

验证数据

实验心得

本次大数据实时分析处理实验,让我系统掌握了华为云MRS、Kafka、Flume、DLI Flink、RDS、DLV等组件构成的实时数据处理全链路架构,熟练完成了从云服务开通配置、数据模拟采集、流处理分析到可视化展示的全流程实操。实验中,我不仅深入理解了各组件的协同逻辑与技术细节,还通过排查网络连通性、配置匹配、权限设置等问题,提升了问题解决能力,同时深刻体会到云原生技术在降低开发成本、提升处理效率上的优势,以及规范操作和资源管理的重要性。这次经历既巩固了技术基础,也让我对大数据实时场景的业务价值有了更直观的认知,为后续相关实践打下了坚实基础。

Gitee文件夹及代码链接:https://gitee.com/sike-0420/kuku/tree/master/%E4%BD%9C%E4%B8%9A4

浙公网安备 33010602011771号

浙公网安备 33010602011771号