Deploying Kafka and kafka-console-ui on K8S 1.24.1 with Helm, plus KRaft configuration and external cluster access

Background

| IP | Role | Middleware |
|---|---|---|
| 172.16.16.108 | k8s-master-1 | kafka, zookeeper |
| 172.16.16.109 | k8s-node-1 | kafka, zookeeper |
| 172.16.16.110 | k8s-node-2 | kafka, zookeeper |

Deploy Kafka

```shell
mkdir -p /data/yaml/klvchen/kafka && cd /data/yaml/klvchen/kafka

# Add the bitnami charts repository
helm repo add bitnami https://charts.bitnami.com/bitnami

# List the configured repositories
helm repo list

# Search for the kafka chart
helm search repo kafka

# Download and unpack chart version 20.0.2
wget https://charts.bitnami.com/bitnami/kafka-20.0.2.tgz
tar zxvf kafka-20.0.2.tgz

# Create the namespace
kubectl create ns klvchen

# Confirm which storageClass is available
kubectl get sc
NAME         PROVISIONER                                   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-client   k8s-sigs.io/nfs-subdir-external-provisioner   Delete          Immediate           true                   16d
```

```shell
# Adjust the configuration to your own requirements
cat my-values.yaml
```

```yaml
replicaCount: 3                   # number of Kafka replicas
global:
  storageClass: nfs-client        # storage used by both Kafka and ZooKeeper
heapOpts: "-Xmx1024m -Xms1024m"   # JVM options Kafka starts with
persistence:                      # storage size of each Kafka replica
  size: 10Gi
resources:
  limits:
    cpu: 1000m
    memory: 2Gi
  requests:
    cpu: 100m
    memory: 100Mi
zookeeper:
  replicaCount: 3                 # number of ZooKeeper replicas
  persistence:
    size: 10Gi                    # storage size of each ZooKeeper replica
  resources:
    limits:
      cpu: 2000m
      memory: 2Gi
externalAccess:
  enabled: true                   # enable external access
  autoDiscovery:
    enabled: true                 # auto-detect external ports; needs rbac.create=true
  service:
    type: NodePort                # expose the brokers via NodePort
    ports:
      external: 9094
    nodePorts:                    # one NodePort per Kafka replica
      - 30001
      - 30002
      - 30003
```
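The `nodePorts` list needs exactly one port per Kafka replica. A small POSIX shell sketch that checks this before `helm install`, assuming the Kubernetes default NodePort range (30000-32767) and that you copy `replicaCount` and the ports from `my-values.yaml` by hand; `check_nodeports` is a hypothetical helper, not part of the chart:

```shell
# Hypothetical helper: check_nodeports <replicaCount> <port>...
# Assumes the default Kubernetes NodePort range 30000-32767.
check_nodeports() {
  replicas=$1
  shift
  # one NodePort per Kafka replica
  if [ "$#" -ne "$replicas" ]; then
    echo "expected $replicas nodePorts, got $#" >&2
    return 1
  fi
  for port in "$@"; do
    case $port in
      ''|*[!0-9]*) echo "not a number: $port" >&2; return 1 ;;
    esac
    if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
      echo "outside the default NodePort range: $port" >&2
      return 1
    fi
  done
  # each external Service needs its own NodePort
  if [ -n "$(printf '%s\n' "$@" | sort | uniq -d)" ]; then
    echo "duplicate nodePorts" >&2
    return 1
  fi
}

check_nodeports 3 30001 30002 30003 && echo "nodePorts OK"
```

If your cluster was started with a custom `--service-node-port-range`, adjust the two bounds accordingly.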

```shell
# Install
helm install --namespace klvchen kafka -f my-values.yaml --set rbac.create=true ./kafka

# Check
helm -n klvchen ls
kubectl -n klvchen get pod
```
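Instead of eyeballing the pod list, the check can be scripted. A sketch, assuming kubectl's default column layout (`NAME READY STATUS RESTARTS AGE`); `pods_ready` is a hypothetical helper that reads that output on stdin:

```shell
# Hypothetical helper: succeeds only if every listed pod is Running
# and all of its containers are ready (READY column "n/n").
pods_ready() {
  awk '
    NR == 1 { next }                 # skip the header line
    {
      split($2, r, "/")              # READY column, e.g. "1/1"
      if ($3 != "Running" || r[1] != r[2]) bad = 1
      n++
    }
    END { if (n > 0 && !bad) exit 0; exit 1 }   # empty list counts as not ready
  '
}

# Usage against the live cluster:
# kubectl -n klvchen get pod | pods_ready && echo "all Kafka pods are ready"
```

`kubectl -n klvchen wait --for=condition=Ready pod --all --timeout=300s` performs a similar wait natively and does not depend on the column layout.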

Deploy kafka-console-ui

```shell
mkdir -p /data/yaml/klvchen/kafka-console-ui && cd /data/yaml/klvchen/kafka-console-ui

# ">" overwrites the file, so re-running does not append duplicate manifests
cat > deployment.yaml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka-console-ui
  namespace: klvchen
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka-console-ui
  template:
    metadata:
      labels:
        app: kafka-console-ui
    spec:
      containers:
        - name: kafka-console-ui
          resources:
            limits:
              cpu: 1000m
              memory: 1Gi
            requests:
              cpu: 10m
              memory: 10Mi
          image: wdkang/kafka-console-ui:latest
          volumeMounts:
            # mount the host's Asia/Shanghai zoneinfo so the container uses that timezone
            - mountPath: /etc/localtime
              readOnly: true
              name: time-data
      volumes:
        - name: time-data
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
EOF

cat > svc.yaml << EOF
kind: Service
apiVersion: v1
metadata:
  labels:
    app: kafka-console-ui
  name: kafka-console-ui
  namespace: klvchen
spec:
  ports:
    - port: 7766
      targetPort: 7766
      nodePort: 30088
  selector:
    app: kafka-console-ui
  type: NodePort
EOF

kubectl apply -f deployment.yaml -f svc.yaml
```

Open http://172.16.16.108:30088/ and create a new cluster named `kafka` with the address:

172.16.16.108:30001,172.16.16.108:30002,172.16.16.108:30003
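The address entered in kafka-console-ui is the node IP joined with each NodePort. A sketch of building that string; `bootstrap_list` is a hypothetical helper, and the IP and ports are the values used above:

```shell
# Hypothetical helper: bootstrap_list <nodeIP> <nodePort>...
# Prints "<ip>:<port>" pairs joined by commas.
bootstrap_list() {
  ip=$1
  shift
  out=""
  for port in "$@"; do
    out="${out:+$out,}$ip:$port"   # add a comma only after the first entry
  done
  printf '%s\n' "$out"
}

bootstrap_list 172.16.16.108 30001 30002 30003
# 172.16.16.108:30001,172.16.16.108:30002,172.16.16.108:30003
```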

Reference:

https://artifacthub.io/packages/helm/bitnami/kafka

Using KRaft (no ZooKeeper required)

Note that KRaft mode requires a relatively recent chart version.

```shell
# Switch to chart version 22.1.3
helm pull bitnami/kafka --version 22.1.3
tar zxvf kafka-22.1.3.tgz
```

```shell
cat my-values.yaml
```

```yaml
replicaCount: 3
global:
  storageClass: nfs-client
heapOpts: "-Xmx2048m -Xms2048m"
persistence:
  size: 30Gi
resources:
  limits:
    cpu: 2000m
    memory: 3Gi
  requests:
    cpu: 100m
    memory: 1536Mi
kraft:
  clusterId: M2VhY2Q3NGQ0NGYzNDg2YW
```
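`kraft.clusterId` is expected to be a Kafka-style UUID string: 16 bytes, base64url-encoded without padding, which is why the example value is 22 characters long. Kafka ships `kafka-storage.sh random-uuid` for generating one; if that script is not at hand, the following sketch produces a string of the same shape from `/dev/urandom`. `kraft_cluster_id` is a hypothetical helper and assumes `head -c` and `base64` are available (coreutils or busybox):

```shell
# Hypothetical helper: 16 random bytes -> base64url without "=" padding (22 chars).
kraft_cluster_id() {
  while :; do
    id=$(head -c 16 /dev/urandom | base64 | tr '+/' '-_' | tr -d '=\n')
    case $id in
      -*) continue ;;   # avoid an ID that a CLI could mistake for an option
    esac
    printf '%s\n' "$id"
    return 0
  done
}

kraft_cluster_id
```

Generate the ID once and keep it in `my-values.yaml`: the brokers' data directories are formatted with it, so it should not change on later `helm upgrade`s.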

```shell
# Install
helm install --namespace klvchen kafka -f my-values.yaml --set rbac.create=true ./kafka
```

External access to the cluster

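```shell
cat my-values.yaml
```

```yaml
replicaCount: 3
global:
  storageClass: nfs-client
heapOpts: "-Xmx1024m -Xms1024m"
persistence:
  size: 10Gi
resources:
  limits:
    cpu: 2000m
    memory: 3Gi
  requests:
    cpu: 100m
    memory: 1536Mi
externalAccess:
  enabled: true
  service:
    useHostIPs: true   # use the node (host) IP in the external listener
    type: NodePort
    nodePorts:         # one NodePort per Kafka replica
      - 31050
      - 31051
      - 31052
kraft:
  clusterId: M2VhY2Q3NGQ0NGYzNDg2YW
```

```shell
# No --namespace here, so the release is installed into the default namespace
helm install kafka -f my-values.yaml ./kafka
```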

```shell
[root@k8s-master-1 kafka]# kubectl get svc
NAME               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
kafka              ClusterIP   10.101.20.228    <none>        9092/TCP,9095/TCP            45m
kafka-0-external   NodePort    10.100.61.43     <none>        9094:31050/TCP               45m
kafka-1-external   NodePort    10.109.70.160    <none>        9094:31051/TCP               45m
kafka-2-external   NodePort    10.109.235.205   <none>        9094:31052/TCP               45m
kafka-headless     ClusterIP   None             <none>        9092/TCP,9094/TCP,9093/TCP   45m
```
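The NodePort of each broker can also be read back from this listing instead of from `my-values.yaml`. A sketch that turns the `*-external` rows into `IP:NodePort` pairs; `external_addresses` is a hypothetical helper, and it assumes the default `kubectl get svc` columns shown above, with `PORT(S)` in the form `9094:31050/TCP`:

```shell
# Hypothetical helper: external_addresses <nodeIP>, reads `kubectl get svc` output on stdin.
external_addresses() {
  node_ip=$1
  awk -v ip="$node_ip" '
    $1 ~ /-external$/ && $2 == "NodePort" {
      split($5, p, "[:/]")   # PORT(S) column: port:nodePort/protocol
      print ip ":" p[2]
    }
  '
}

# Usage against the live cluster:
# kubectl get svc | external_addresses 172.16.16.108
```

For a single service, `kubectl get svc kafka-0-external -o jsonpath='{.spec.ports[0].nodePort}'` returns the port directly without text parsing.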

Inside the cluster, access Kafka on port 9092:

```
Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

    kafka.default.svc.cluster.local

Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:

    kafka-0.kafka-headless.default.svc.cluster.local:9092
    kafka-1.kafka-headless.default.svc.cluster.local:9092
    kafka-2.kafka-headless.default.svc.cluster.local:9092
```
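The per-broker names follow the pattern `<release>-<index>.<release>-headless.<namespace>.svc.cluster.local:9092`. A sketch that generates the comma-separated list, for example as a `--bootstrap-server` value; `broker_dns` is hypothetical and assumes the default `cluster.local` cluster domain and the `<release>-headless` service naming seen above:

```shell
# Hypothetical helper: broker_dns <release> <namespace> <replicaCount>
# Assumes the default cluster domain "cluster.local".
broker_dns() {
  release=$1 namespace=$2 replicas=$3
  i=0
  out=""
  while [ "$i" -lt "$replicas" ]; do
    out="${out:+$out,}$release-$i.$release-headless.$namespace.svc.cluster.local:9092"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}

broker_dns kafka default 3
```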

Outside the cluster, access Kafka via node IP + NodePort:

```
172.16.16.108:31050
172.16.16.108:31051
172.16.16.108:31052
```

A `kafka-client` pod can be used for testing:

```shell
kubectl run kafka-client --restart='Never' --image docker.io/bitnami/kafka:3.4.0-debian-11-r33 --namespace default --command -- sleep infinity
```

Example:

```shell
# Open a shell inside the client pod
kubectl exec --tty -i kafka-client --namespace default -- bash

# PRODUCER:
kafka-console-producer.sh \
  --bootstrap-server kafka.default.svc.cluster.local:9092 \
  --topic test

# CONSUMER:
kafka-console-consumer.sh \
  --bootstrap-server kafka.default.svc.cluster.local:9092 \
  --topic test \
  --from-beginning
```

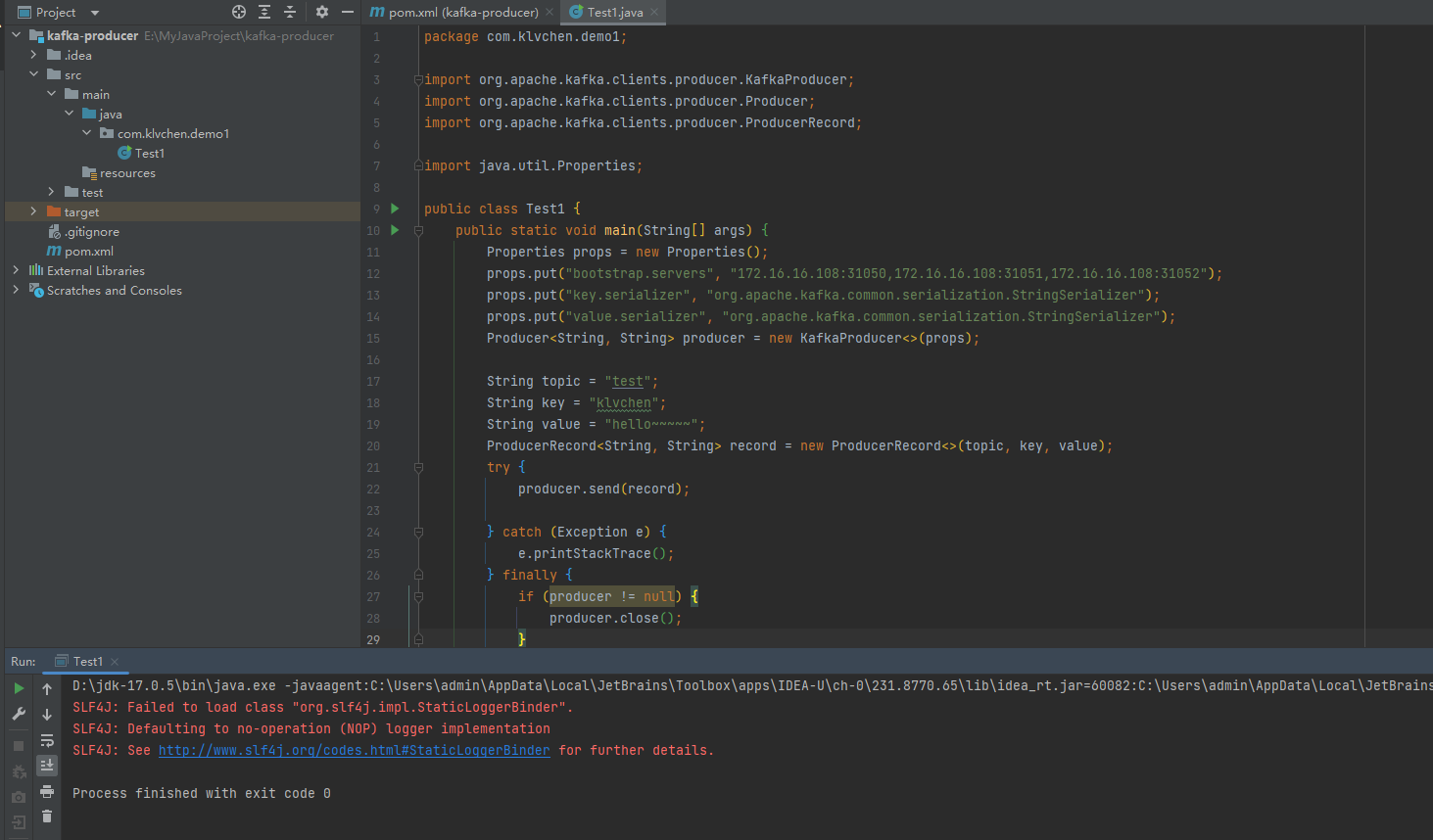

Sending messages with code from outside the cluster:

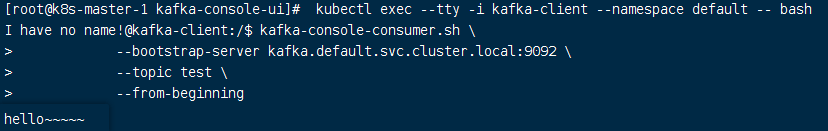

The messages were consumed successfully.

Zhejiang Public Network Security Record No. 33010602011771
