Connecting K8S 1.29 to Ceph Reef

Prerequisites

Deploying a K8S v1.29.15 cluster on Kylin V10 -> https://www.cnblogs.com/klvchen/p/19273665

Installing a Ceph Reef cluster on Kylin V10 -> https://www.cnblogs.com/klvchen/articles/19268588

Create the Ceph pool and user (run on ceph-01)

cephadm shell

# Get the cluster ID

ceph -s

# Example output:

# cluster:

# id: cf7316be-c918-11f0-ba15-5a74cd4cc82a
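When the ID is needed in a script, it can be extracted from the `ceph -s` output instead of being copied by hand. The sketch below assumes the `id:` line format shown above. `extract_cluster_id` is a hypothetical helper name, not part of Ceph.

```shell
# Hypothetical helper: read `ceph -s` output on stdin and print the cluster ID.
# Assumes the "id: <uuid>" line format shown in the example output.
extract_cluster_id() {
  awk '$1 == "id:" { print $2; exit }'
}

# Usage (inside `cephadm shell`):
#   ceph -s | extract_cluster_id
#   ceph fsid        # prints the same ID directly
```

For a one-off lookup, `ceph fsid` is the shorter route. The helper is only useful when the status output has already been captured.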

ceph osd pool create k8s-rbd 32 32
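The Ceph documentation normally has a newly created pool initialized for RBD before its first use. The original steps do not show this, so the following is a sketch of that extra step rather than part of the author's procedure. `init_rbd_pool` is a hypothetical helper name, and the sketch assumes it runs inside `cephadm shell`.

```shell
# Hypothetical helper: initialize a freshly created pool for RBD use,
# then print the application tag that is now set on the pool.
# Assumes `rbd` and `ceph` are on PATH (true inside `cephadm shell`).
init_rbd_pool() {
  pool="$1"
  rbd pool init "$pool" &&
    ceph osd pool application get "$pool"
}

# Usage:
#   init_rbd_pool k8s-rbd
```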

ceph auth get-or-create client.k8s mon 'profile rbd' osd 'profile rbd pool=k8s-rbd' mgr 'profile rbd pool=k8s-rbd'

# Record the user's key

ceph auth get-key client.k8s

# Example output:

# AQBADiVpFvACNRAAq4ys0KnA+hd39F+CUf/vlg==

Deploy ceph-csi-rbd (run on the K8S master)

mkdir -p /data/yaml/default/ceph-csi-rbd

cd /data/yaml/default/ceph-csi-rbd

# Deploy with Helm

helm repo add ceph-csi https://ceph.github.io/csi-charts

helm repo ls

helm pull ceph-csi/ceph-csi-rbd --version 3.14.0

tar zxvf ceph-csi-rbd-3.14.0.tgz

# The five images used by ceph-csi-rbd (listed below) often cannot be pulled from within mainland China; they can be downloaded from https://docker.aityp.com/ instead

# registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.13.0

# registry.k8s.io/sig-storage/csi-provisioner:v5.1.0

# registry.k8s.io/sig-storage/csi-attacher:v4.8.0

# registry.k8s.io/sig-storage/csi-resizer:v1.13.1

# registry.k8s.io/sig-storage/csi-snapshotter:v8.2.0
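Once the images are available locally, they have to be retagged and pushed to a private registry. The sketch below only prints the commands for review. It assumes Docker is the client, that each image is already loaded under its upstream name, and that the registry keeps the `sig-storage/<name>:<tag>` path. `mirror_target` and `print_mirror_commands` are hypothetical helper names.

```shell
# Hypothetical helper: map an upstream image reference to its name in a
# private registry, keeping the "sig-storage/<name>:<tag>" part unchanged.
mirror_target() {
  src="$1"        # e.g. registry.k8s.io/sig-storage/csi-attacher:v4.8.0
  registry="$2"   # e.g. devharbor.junengcloud.com
  echo "${registry}/${src#*/}"
}

# Print the tag/push commands instead of running them (dry run).
print_mirror_commands() {
  registry="$1"
  shift
  for image in "$@"; do
    dst=$(mirror_target "$image" "$registry")
    echo "docker tag $image $dst"
    echo "docker push $dst"
  done
}

# Usage:
#   print_mirror_commands devharbor.junengcloud.com \
#     registry.k8s.io/sig-storage/csi-provisioner:v5.1.0 \
#     registry.k8s.io/sig-storage/csi-attacher:v4.8.0
```

Piping the printed commands to `sh` runs them once they look right.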

# Create a custom values file

vi my-values.yaml

# clusterID is the id reported by ceph -s above; monitors lists the IP addresses of the three Ceph hosts, which can be looked up with ceph orch host ls

csiConfig:
  - clusterID: "cf7316be-c918-11f0-ba15-5a74cd4cc82a"
    monitors:
      - "192.168.10.151:6789"
      - "192.168.10.152:6789"
      - "192.168.10.153:6789"

# StorageClass settings
storageClass:
  create: true
  name: ceph-rbd
  clusterID: "cf7316be-c918-11f0-ba15-5a74cd4cc82a"
  pool: "k8s-rbd"
  imageFeatures: "layering"
  fstype: ext4
  reclaimPolicy: Delete
  allowVolumeExpansion: true
  mountOptions:
    - discard
  provisionerSecret: "csi-rbd-secret"
  provisionerSecretNamespace: "default"
  controllerExpandSecret: "csi-rbd-secret"
  controllerExpandSecretNamespace: "default"
  nodeStageSecret: "csi-rbd-secret"
  nodeStageSecretNamespace: "default"

# Secret settings; userKey is the value returned by ceph auth get-key client.k8s in the cephadm shell above
secret:
  create: true
  name: csi-rbd-secret
  userID: "k8s"
  userKey: "AQBADiVpFvACNRAAq4ys0KnA+hd39F+CUf/vlg=="

nodeplugin:
  registrar:
    image:
      repository: devharbor.junengcloud.com/sig-storage/csi-node-driver-registrar

provisioner:
  provisioner:
    image:
      repository: devharbor.junengcloud.com/sig-storage/csi-provisioner
  resizer:
    image:
      repository: devharbor.junengcloud.com/sig-storage/csi-resizer
  attacher:
    image:
      repository: devharbor.junengcloud.com/sig-storage/csi-attacher
  snapshotter:
    image:
      repository: devharbor.junengcloud.com/sig-storage/csi-snapshotter
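The monitor addresses used in csiConfig can also be read from the monitor map, which includes the port. The sketch below assumes `ceph mon dump` prints address vectors of the form `[v2:IP:3300/0,v1:IP:6789/0]`; check the actual output on your cluster before relying on it. `extract_mon_v1_addrs` is a hypothetical helper name.

```shell
# Hypothetical helper: read `ceph mon dump` output on stdin and print each
# monitor's v1 address as "ip:port", one per line.
# Assumes IPv4 address vectors such as [v2:IP:3300/0,v1:IP:6789/0].
extract_mon_v1_addrs() {
  grep -o 'v1:[0-9.]*:[0-9]*' | sed 's/^v1://'
}

# Usage (inside `cephadm shell` on ceph-01):
#   ceph mon dump | extract_mon_v1_addrs
```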

# Deploy

helm install ceph-csi-rbd -f my-values.yaml ./ceph-csi-rbd

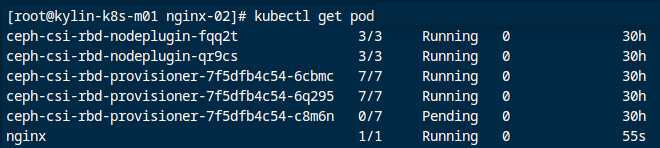

# Check Pod status

kubectl get pods -l app=ceph-csi-rbd

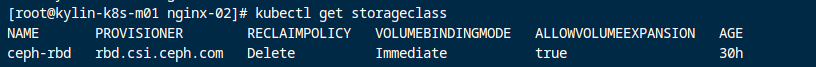
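Instead of polling the Pod list by eye, the rollout can be waited on. The sketch below assumes the Helm release is named `ceph-csi-rbd` as above, so that the chart's workloads are called `ceph-csi-rbd-provisioner` and `ceph-csi-rbd-nodeplugin`. Those names are an assumption and can be confirmed with `kubectl get deploy,ds`. `wait_for_csi` is a hypothetical helper name.

```shell
# Hypothetical helper: block until the ceph-csi-rbd workloads are rolled out.
# Resource names are assumed from a release called "ceph-csi-rbd";
# adjust them if `kubectl get deploy,ds` shows different names.
wait_for_csi() {
  ns="${1:-default}"
  kubectl -n "$ns" rollout status deployment/ceph-csi-rbd-provisioner --timeout=300s &&
    kubectl -n "$ns" rollout status daemonset/ceph-csi-rbd-nodeplugin --timeout=300s
}

# Usage:
#   wait_for_csi default
```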

# Check the StorageClass

kubectl get storageclass

Test

mkdir /data/yaml/default/nginx

cd /data/yaml/default/nginx

cat <<EOF > pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: ceph-rbd
EOF

kubectl apply -f pvc.yaml
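Before creating the Pod, it helps to confirm that the claim was actually provisioned. A minimal sketch, assuming kubectl 1.23 or newer (for `--for=jsonpath`); `wait_for_pvc_bound` is a hypothetical helper name.

```shell
# Hypothetical helper: wait until a PVC reports phase "Bound".
# Requires a kubectl version that supports `wait --for=jsonpath=...`.
wait_for_pvc_bound() {
  pvc="$1"
  timeout="${2:-60s}"
  kubectl wait --for=jsonpath='{.status.phase}'=Bound "pvc/$pvc" --timeout="$timeout"
}

# Usage:
#   wait_for_pvc_bound rbd-pvc
#   kubectl describe pvc rbd-pvc   # shows provisioning events if the wait times out
```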

cat <<EOF > pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
    - name: web-server
      image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/library/nginx:1.29.0-alpine
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false
EOF

kubectl apply -f pod.yaml
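To confirm that the RBD volume is mounted and writable, the Pod can be probed once it is Ready. The sketch below is one way to do that. `verify_rbd_mount` and the file name `probe.txt` are hypothetical and chosen only for illustration.

```shell
# Hypothetical helper: wait for the Pod, show the mounted filesystem,
# then write and read back a small probe file on the volume.
verify_rbd_mount() {
  pod="$1"
  path="$2"
  kubectl wait --for=condition=Ready "pod/$pod" --timeout=120s &&
    kubectl exec "$pod" -- df -h "$path" &&
    kubectl exec "$pod" -- sh -c "echo ok > '$path/probe.txt' && cat '$path/probe.txt'"
}

# Usage:
#   verify_rbd_mount nginx /var/lib/www/html
#   rbd ls k8s-rbd     # on ceph-01 inside `cephadm shell`, lists the backing image
```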

