[PyTorch] Using tensorboardX with PyTorch (repost)

Source: https://zhuanlan.zhihu.com/p/35675109

https://www.aiuai.cn/aifarm646.html

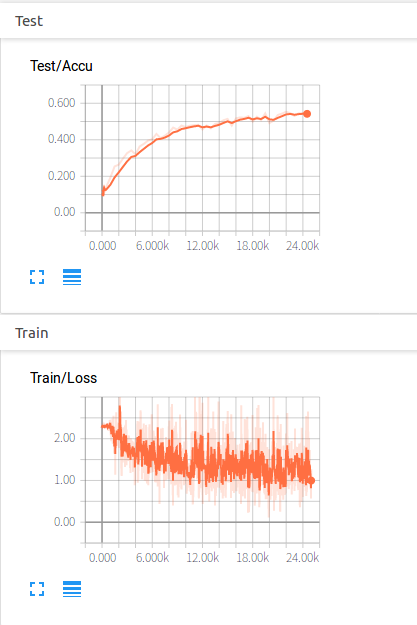

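When working with PyTorch I used to record values by hand and plot them myself, which always felt tedious. After learning tensorboardX I found the material online rather scattered, so here are the key points. In most cases you only want to look at curves such as loss, lr and accuracy, so this summary covers those first; images, audio and the rest can be covered later when needed.

1. Installation: there are several approaches, such as installing via docker or calling a logger.py script, but none of them feels concise. The current tensorboardX is already in good shape and has no real pitfalls, so just install it with pip from the command line: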

pip install tensorboardX

2. Usage

from tensorboardX import SummaryWriter

writer = SummaryWriter('log')

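The writer acts like a log that stores everything you want to plot. The second statement creates a folder named log under your project directory to hold the files used for plotting. It is empty at first.

In the training loop, write the chart name, the loss value and n_iteration each time:

writer.add_scalar('Train/Loss', loss.item(), niter)

In the validation loop, just write the prediction accuracy: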

writer.add_scalar('Test/Accu', correct/total, niter)

To make this easier to follow, here is the whole train_eval written out in one piece:

def train_eval(epoch):
    running_loss = 0.0
    for i, data in enumerate(trainloader, 0):
        inputs, labels = data
        optimizer.zero_grad()
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()

        # every 2000 batches, print the current loss and accu
        if i % 2000 == 1999:
            print('[epoch: %d, batch: %5d] loss: %.3f' %
                  (epoch + 1, i + 1, running_loss / 2000))
            running_loss = 0.0
            print('[epoch: %d, batch: %5d] Accu: %.3f' % (epoch + 1, i + 1, correct / total))

        # every 10 batches, add one point to the loss curve
        if i % 10 == 0:
            niter = epoch * len(trainloader) + i
            writer.add_scalar('Train/Loss', loss.item(), niter)

        # every 500 batches, evaluate on the whole test set and add one point to the Accu curve
        if i % 500 == 0:
            correct = 0
            total = 0
            for data in testloader:
                images, target = data
                res = net(images)
                _, predicted = torch.max(res.data, 1)
                total += target.size(0)
                correct += (predicted == target).sum().item()
            writer.add_scalar('Test/Accu', correct / total, niter)
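The logging schedule in train_eval can be checked on its own. The sketch below is only an illustration: RecordingWriter is a hypothetical stand-in that mimics the add_scalar(tag, value, step) call so the snippet runs without tensorboardX, and the loss values are made up.

```python
class RecordingWriter:
    """Hypothetical stand-in for SummaryWriter; it only records calls."""

    def __init__(self):
        self.records = []

    def add_scalar(self, tag, value, global_step):
        self.records.append((tag, value, global_step))


def global_step(epoch, batches_per_epoch, batch_index):
    # Same formula as in train_eval: niter = epoch * len(trainloader) + i
    return epoch * batches_per_epoch + batch_index


def log_epoch(writer, epoch, batches_per_epoch, log_every=10):
    for i in range(batches_per_epoch):
        step = global_step(epoch, batches_per_epoch, i)
        fake_loss = 1.0 / (1 + step)  # made-up value, stands in for loss.item()
        if i % log_every == 0:
            writer.add_scalar('Train/Loss', fake_loss, step)


writer = RecordingWriter()
for epoch in range(2):
    log_epoch(writer, epoch, batches_per_epoch=25)

steps = [step for _, _, step in writer.records]
print(steps)  # [0, 10, 20, 25, 35, 45]
```

Because the step keeps counting across epochs, the points of the second epoch continue the same curve instead of overwriting the first one.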

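3. Viewing the results

The log folder now contains files. Run the following on the command line to load the files that were just written (give the full path for ./log):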

tensorboard --logdir=./log

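Then open the address that TensorBoard prints in the terminal in your browser, and you will see the two charts we just produced.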

tensorboardX is a visualization library for PyTorch (and also Chainer, MXNet, NumPy, etc.).

It is similar to the tensorboard module of TensorFlow.

tensorboardX writes TensorBoard events through simple function calls.

- Supports scalar, image, figure, histogram, audio, text, graph, onnx_graph, embedding, pr_curve and video summaries. demo_graph.py requires tensorboardX>=1.2 and pytorch>=0.4.

Installation:

sudo pip install tensorboardX

# or

sudo pip install git+https://github.com/lanpa/tensorboardX

1. TensorBoardX usage demo

# demo.py

import torch

import torchvision.utils as vutils

import numpy as np

import torchvision.models as models

from torchvision import datasets

from tensorboardX import SummaryWriter

resnet18 = models.resnet18(False)

writer = SummaryWriter()

sample_rate = 44100

freqs = [262, 294, 330, 349, 392, 440, 440, 440, 440, 440, 440]

for n_iter in range(100):
    dummy_s1 = torch.rand(1)
    dummy_s2 = torch.rand(1)
    # data grouping by `slash`
    writer.add_scalar('data/scalar1', dummy_s1[0], n_iter)
    writer.add_scalar('data/scalar2', dummy_s2[0], n_iter)

    writer.add_scalars('data/scalar_group', {'xsinx': n_iter * np.sin(n_iter),
                                             'xcosx': n_iter * np.cos(n_iter),
                                             'arctanx': np.arctan(n_iter)}, n_iter)

    dummy_img = torch.rand(32, 3, 64, 64)  # output from network
    if n_iter % 10 == 0:
        x = vutils.make_grid(dummy_img, normalize=True, scale_each=True)
        writer.add_image('Image', x, n_iter)

        dummy_audio = torch.zeros(sample_rate * 2)
        for i in range(x.size(0)):
            # amplitude of sound should in [-1, 1]
            dummy_audio[i] = np.cos(freqs[n_iter // 10] * np.pi * float(i) / float(sample_rate))
        writer.add_audio('myAudio', dummy_audio, n_iter, sample_rate=sample_rate)

        writer.add_text('Text', 'text logged at step:' + str(n_iter), n_iter)

        for name, param in resnet18.named_parameters():
            writer.add_histogram(name, param.clone().cpu().data.numpy(), n_iter)

        # needs tensorboard 0.4RC or later
        writer.add_pr_curve('xoxo', np.random.randint(2, size=100), np.random.rand(100), n_iter)

dataset = datasets.MNIST('mnist', train=False, download=True)

images = dataset.test_data[:100].float()

label = dataset.test_labels[:100]

features = images.view(100, 784)

writer.add_embedding(features, metadata=label, label_img=images.unsqueeze(1))

# export scalar data to JSON for external processing

writer.export_scalars_to_json("./all_scalars.json")

writer.close()

Run the demo.py code above:

python demo.py

Then visualize the result with TensorBoard (TensorFlow needs to be installed first):

tensorboard --logdir ./runs

demo.py mainly logs the following kinds of information:

SCALARS: data/scalar1, data/scalar2 and data/scalar_group

writer.add_scalar('data/scalar1', dummy_s1[0], n_iter)

writer.add_scalar('data/scalar2', dummy_s2[0], n_iter)

writer.add_scalars('data/scalar_group',

{'xsinx': n_iter * np.sin(n_iter),

'xcosx': n_iter * np.cos(n_iter),

'arctanx': np.arctan(n_iter)}, n_iter)
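The demo comment "data grouping by slash" refers to how tags are arranged: the part of a tag before the first slash acts as a section name. As a rough model of that behaviour (group_tags is a hypothetical helper written for this note, not part of tensorboardX), the tags used in this article would be grouped like this:

```python
from collections import defaultdict


def group_tags(tags):
    """Group tags by the text before the first slash."""
    groups = defaultdict(list)
    for tag in tags:
        prefix = tag.split('/', 1)[0]
        groups[prefix].append(tag)
    return dict(groups)


tags = ['data/scalar1', 'data/scalar2', 'Train/Loss', 'Test/Accu']
grouped = group_tags(tags)
print(grouped)
```

Choosing a common prefix such as Train/ or Test/ is therefore a cheap way to keep related curves next to each other.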

IMAGES:

writer.add_image('Image', x, n_iter)

AUDIO:

writer.add_audio('myAudio', dummy_audio, n_iter, sample_rate=sample_rate)
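The demo notes that the amplitude of the sound should stay in [-1, 1]. A minimal pure-Python sketch of the same cosine expression shows why that holds; make_tone and the chosen number of samples are assumptions for illustration, and no audio is actually written here.

```python
import math


def make_tone(freq, sample_rate, num_samples):
    # Same expression as in demo.py: cos(freq * pi * i / sample_rate)
    return [math.cos(freq * math.pi * i / sample_rate) for i in range(num_samples)]


tone = make_tone(freq=440, sample_rate=44100, num_samples=441)
peak = max(abs(s) for s in tone)
print(len(tone), peak)
```

Since a cosine never leaves [-1, 1], the samples can be passed on without rescaling; a signal built some other way would need to be normalized first.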

DISTRIBUTIONS and HISTOGRAMS:

for name, param in resnet18.named_parameters():
    writer.add_histogram(name, param.clone().cpu().data.numpy(), n_iter)

TEXT:

writer.add_text('Text', 'text logged at step:' + str(n_iter), n_iter)

PR CURVES:

# needs tensorboard 0.4RC or later

writer.add_pr_curve('xoxo', np.random.randint(2, size=100), np.random.rand(100), n_iter)
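add_pr_curve receives binary labels and predicted scores, as in the call above. To make clear what a single point on such a curve means, here is a small sketch in plain Python. The labels, scores and thresholds are made up, and precision_recall is a hypothetical helper; how TensorBoard itself picks thresholds is not covered here.

```python
def precision_recall(labels, scores, threshold):
    """Precision and recall when scores >= threshold count as positive."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if s >= threshold and y == 1)
    fp = sum(1 for y, s in pairs if s >= threshold and y == 0)
    fn = sum(1 for y, s in pairs if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


labels = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
curve = [(t,) + precision_recall(labels, scores, t) for t in (0.25, 0.5, 0.75)]
for t, p, r in curve:
    print(t, round(p, 3), round(r, 3))
```

Raising the threshold trades recall for precision, which is the trade-off the PR CURVES tab lets you inspect.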

PROJECTOR:

dataset = datasets.MNIST('mnist', train=False, download=False)

images = dataset.test_data[:100].float()

label = dataset.test_labels[:100]

features = images.view(100, 784)

writer.add_embedding(features, metadata=label, label_img=images.unsqueeze(1))

(It kept computing PCA ...)

2. TensorBoardX - Graph visualization

demo_graph.py

import torch

import torch.nn as nn

import torch.nn.functional as F

import torchvision

from torch.autograd import Variable

from tensorboardX import SummaryWriter

class Net1(nn.Module):
    def __init__(self):
        super(Net1, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)
        self.bn = nn.BatchNorm2d(20)

    def forward(self, x):
        x = F.max_pool2d(self.conv1(x), 2)
        x = F.relu(x) + F.relu(-x)
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = self.bn(x)
        x = x.view(-1, 320)
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        x = F.softmax(x, dim=1)
        return x


class Net2(nn.Module):
    def __init__(self):
        super(Net2, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = x.view(-1, 320)
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        x = F.log_softmax(x, dim=1)
        return x

dummy_input = Variable(torch.rand(13, 1, 28, 28))

model = Net1()

with SummaryWriter(comment='Net1') as w:
    w.add_graph(model, (dummy_input, ))

model = Net2()
with SummaryWriter(comment='Net2') as w:
    w.add_graph(model, (dummy_input, ))

dummy_input = torch.Tensor(1, 3, 224, 224)

with SummaryWriter(comment='alexnet') as w:
    model = torchvision.models.alexnet()
    w.add_graph(model, (dummy_input, ))

with SummaryWriter(comment='vgg19') as w:
    model = torchvision.models.vgg19()
    w.add_graph(model, (dummy_input, ))

with SummaryWriter(comment='densenet121') as w:
    model = torchvision.models.densenet121()
    w.add_graph(model, (dummy_input, ))

with SummaryWriter(comment='resnet18') as w:
    model = torchvision.models.resnet18()
    w.add_graph(model, (dummy_input, ))


class SimpleModel(nn.Module):
    def __init__(self):
        super(SimpleModel, self).__init__()

    def forward(self, x):
        return x * 2


model = SimpleModel()
dummy_input = (torch.zeros(1, 2, 3),)

with SummaryWriter(comment='constantModel') as w:
    w.add_graph(model, dummy_input)

def conv3x3(in_planes, out_planes, stride=1):
    """3x3 convolution with padding"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=1, bias=False)


class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = nn.BatchNorm2d(planes)
        # self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = nn.BatchNorm2d(planes)
        self.stride = stride

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = F.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        out += residual
        out = F.relu(out)
        return out


dummy_input = torch.rand(1, 3, 224, 224)

with SummaryWriter(comment='basicblock') as w:
    model = BasicBlock(3, 3)
    w.add_graph(model, (dummy_input, ))  # , verbose=True)

class RNN(nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super(RNN, self).__init__()
        self.hidden_size = hidden_size
        self.i2h = nn.Linear(
            n_categories +
            input_size +
            hidden_size,
            hidden_size)
        self.i2o = nn.Linear(
            n_categories +
            input_size +
            hidden_size,
            output_size)
        self.o2o = nn.Linear(hidden_size + output_size, output_size)
        self.dropout = nn.Dropout(0.1)
        self.softmax = nn.LogSoftmax(dim=1)

    def forward(self, category, input, hidden):
        input_combined = torch.cat((category, input, hidden), 1)
        hidden = self.i2h(input_combined)
        output = self.i2o(input_combined)
        output_combined = torch.cat((hidden, output), 1)
        output = self.o2o(output_combined)
        output = self.dropout(output)
        output = self.softmax(output)
        return output, hidden

    def initHidden(self):
        return torch.zeros(1, self.hidden_size)

n_letters = 100

n_hidden = 128

n_categories = 10

rnn = RNN(n_letters, n_hidden, n_categories)

cat = torch.Tensor(1, n_categories)

dummy_input = torch.Tensor(1, n_letters)

hidden = torch.Tensor(1, n_hidden)

out, hidden = rnn(cat, dummy_input, hidden)

with SummaryWriter(comment='RNN') as w:
    w.add_graph(rnn, (cat, dummy_input, hidden), verbose=False)

import pytest

print('expect error here:')
with pytest.raises(Exception) as e_info:
    dummy_input = torch.rand(1, 1, 224, 224)
    with SummaryWriter(comment='basicblock_error') as w:
        w.add_graph(model, (dummy_input, ))  # error

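This demo shows graph examples for several networks: the custom networks Net1 and Net2, AlexNet, VGG19, DenseNet121, ResNet18, constantModel, basicblock and RNN.

Running tensorboard --logdir=./runs/ gives the following visualization, taking AlexNet as an example:

Double-click AlexNet in the Main Graph to inspect the individual layers of the network graph. The graph can also be downloaded as a PNG, for example: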
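One detail of demo_graph.py that is easy to miss is where the 320 in x.view(-1, 320) and nn.Linear(320, 50) comes from. The arithmetic can be traced without PyTorch; conv_out and pool_out below are hypothetical helpers that assume no padding and the default pooling stride (equal to the kernel size).

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Output length of a convolution along one spatial dimension.
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    # max_pool2d with kernel k and its default stride k, no padding.
    return size // kernel


side = 28                               # dummy_input is 13 x 1 x 28 x 28
side = pool_out(conv_out(side, 5), 2)   # conv1 (5x5) then 2x2 pooling: 28 -> 24 -> 12
side = pool_out(conv_out(side, 5), 2)   # conv2 (5x5) then 2x2 pooling: 12 -> 8 -> 4
flat_features = 20 * side * side        # conv2 has 20 output channels
print(side, flat_features)  # 4 320
```

If the input size or a kernel size changes, this number changes too, and the first fully connected layer has to be adjusted to match.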

3. TensorBoardX - matplotlib visualization

[demo_matplotlib.py]

import matplotlib.pyplot as plt

plt.switch_backend('agg')

fig = plt.figure()

c1 = plt.Circle((0.2, 0.5), 0.2, color='r')

c2 = plt.Circle((0.8, 0.5), 0.2, color='r')

ax = plt.gca()

ax.add_patch(c1)

ax.add_patch(c2)

plt.axis('scaled')

from tensorboardX import SummaryWriter

writer = SummaryWriter()

writer.add_figure('matplotlib', fig)

writer.close()

4. TensorBoardX - nvidia-smi visualization

demo_nvidia_smi.py

"""

write gpu and (gpu) memory usage of nvidia cards as scalar

"""

from tensorboardX import SummaryWriter

import time

import torch

try:
    import nvidia_smi
    nvidia_smi.nvmlInit()
    handle = nvidia_smi.nvmlDeviceGetHandleByIndex(0)  # gpu0
except ImportError:
    print('This demo needs nvidia-ml-py or nvidia-ml-py3')
    exit()

with SummaryWriter() as writer:
    x = []
    for n_iter in range(50):
        x.append(torch.Tensor(1000, 1000).cuda())
        res = nvidia_smi.nvmlDeviceGetUtilizationRates(handle)
        writer.add_scalar('nv/gpu', res.gpu, n_iter)
        res = nvidia_smi.nvmlDeviceGetMemoryInfo(handle)
        writer.add_scalar('nv/gpu_mem', res.used, n_iter)
        time.sleep(0.1)
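NVML reports memory in bytes, so the nv/gpu_mem curve above is plotted in bytes. If a more readable axis is preferred, the value can be converted before logging. The helper name and the sample reading below are assumptions for illustration, not something the demo does.

```python
def bytes_to_mib(num_bytes):
    # 1 MiB = 1024 * 1024 bytes
    return num_bytes / (1024 ** 2)


used_bytes = 1536 * 1024 ** 2   # made-up reading: 1536 MiB expressed in bytes
used_mib = bytes_to_mib(used_bytes)
print(used_mib)  # 1536.0
```

In the demo loop this would amount to passing bytes_to_mib(res.used) instead of res.used to add_scalar.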

5. TensorBoardX - embedding visualization

demo_embedding.py

import torch

import torch.nn as nn

import torch.nn.functional as F

import os

from torch.autograd.variable import Variable

from tensorboardX import SummaryWriter

from torch.utils.data import TensorDataset, DataLoader

# EMBEDDING VISUALIZATION FOR A TWO-CLASSES PROBLEM

# just a bunch of layers

class M(nn.Module):
    def __init__(self):
        super(M, self).__init__()
        self.cn1 = nn.Conv2d(in_channels=1, out_channels=64, kernel_size=3)
        self.cn2 = nn.Conv2d(in_channels=64, out_channels=32, kernel_size=3)
        self.fc1 = nn.Linear(in_features=128, out_features=2)

    def forward(self, i):
        i = self.cn1(i)
        i = F.relu(i)
        i = F.max_pool2d(i, 2)
        i = self.cn2(i)
        i = F.relu(i)
        i = F.max_pool2d(i, 2)
        i = i.view(len(i), -1)
        i = self.fc1(i)
        i = F.log_softmax(i, dim=1)
        return i


# generate some random data and add noise

def get_data(value, shape):
    data = torch.ones(shape) * value
    # add some noise
    data += torch.randn(shape)**2
    return data

# dataset

# cat some data with different values

data = torch.cat((get_data(0, (100, 1, 14, 14)),

get_data(0.5, (100, 1, 14, 14))), 0)

# labels

labels = torch.cat((torch.zeros(100), torch.ones(100)), 0)

# generator

gen = DataLoader(TensorDataset(data, labels), batch_size=25, shuffle=True)

# network

m = M()

#loss and optim

loss = nn.NLLLoss()

optimizer = torch.optim.Adam(params=m.parameters())

# settings for train and log

num_epochs = 20

embedding_log = 5

writer = SummaryWriter(comment='mnist_embedding_training')

# TRAIN

for epoch in range(num_epochs):
    for j, sample in enumerate(gen):
        n_iter = (epoch * len(gen)) + j
        # reset grad
        m.zero_grad()
        optimizer.zero_grad()
        # get batch data
        data_batch = Variable(sample[0], requires_grad=True).float()
        label_batch = Variable(sample[1], requires_grad=False).long()
        # FORWARD
        out = m(data_batch)
        loss_value = loss(out, label_batch)
        # BACKWARD
        loss_value.backward()
        optimizer.step()
        # LOGGING
        writer.add_scalar('loss', loss_value.data.item(), n_iter)

        if j % embedding_log == 0:
            print("loss_value:{}".format(loss_value.data.item()))
            # we need 3 dimension for tensor to visualize it!
            out = torch.cat((out.data, torch.ones(len(out), 1)), 1)
            writer.add_embedding(out,
                                 metadata=label_batch.data,
                                 label_img=data_batch.data,
                                 global_step=n_iter)

writer.close()

t-SNE:

PCA:
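The comment "we need 3 dimension for tensor to visualize it" in demo_embedding.py refers to the network output having only two values per sample, so the demo appends a constant third column before calling add_embedding. The same step in plain Python looks like this; pad_to_3d is a hypothetical helper and the numbers are made up.

```python
def pad_to_3d(rows):
    """Append a constant 1.0 to each 2-value row, mirroring
    torch.cat((out.data, torch.ones(len(out), 1)), 1) in the demo."""
    return [list(row) + [1.0] for row in rows]


outputs = [[-0.1, -2.3], [-1.6, -0.2]]   # made-up log-probabilities for 2 classes
padded = pad_to_3d(outputs)
print(padded)
```

The added column carries no information; it only gives every point a third coordinate.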

6. TensorBoardX - multiple-embedding visualization

demo_multiple_embedding.py

import math

import numpy as np

from tensorboardX import SummaryWriter

def main():
    degrees = np.linspace(0, 3600 * math.pi / 180.0, 3600)
    degrees = degrees.reshape(3600, 1)
    labels = ["%d" % (i) for i in range(0, 3600)]

    with SummaryWriter() as writer:
        # Maybe make a bunch of data that's always shifted in some
        # way, and that will be hard for PCA to turn into a sphere?

        for epoch in range(0, 16):
            shift = epoch * 2 * math.pi / 16.0
            mat = np.concatenate([
                np.sin(shift + degrees * 2 * math.pi / 180.0),
                np.sin(shift + degrees * 3 * math.pi / 180.0),
                np.sin(shift + degrees * 5 * math.pi / 180.0),
                np.sin(shift + degrees * 7 * math.pi / 180.0),
                np.sin(shift + degrees * 11 * math.pi / 180.0)
            ], axis=1)
            writer.add_embedding(
                mat=mat,
                metadata=labels,
                tag="sin",
                global_step=epoch)

            mat = np.concatenate([
                np.cos(shift + degrees * 2 * math.pi / 180.0),
                np.cos(shift + degrees * 3 * math.pi / 180.0),
                np.cos(shift + degrees * 5 * math.pi / 180.0),
                np.cos(shift + degrees * 7 * math.pi / 180.0),
                np.cos(shift + degrees * 11 * math.pi / 180.0)
            ], axis=1)
            writer.add_embedding(
                mat=mat,
                metadata=labels,
                tag="cos",
                global_step=epoch)

            mat = np.concatenate([
                np.tan(shift + degrees * 2 * math.pi / 180.0),
                np.tan(shift + degrees * 3 * math.pi / 180.0),
                np.tan(shift + degrees * 5 * math.pi / 180.0),
                np.tan(shift + degrees * 7 * math.pi / 180.0),
                np.tan(shift + degrees * 11 * math.pi / 180.0)
            ], axis=1)
            writer.add_embedding(
                mat=mat,
                metadata=labels,
                tag="tan",
                global_step=epoch)


if __name__ == "__main__":
    main()
