Keras regression and curve fitting: a collection of examples

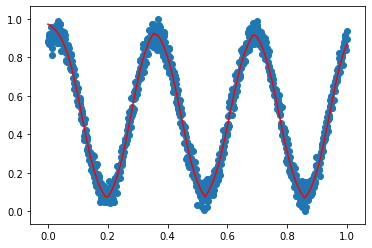

Example 1

    from keras.models import Sequential
    from keras.layers import Dense
    import numpy as np
    import matplotlib.pyplot as plt

    # Build the model: 1 input -> 100 -> 50 -> 1 output
    models = Sequential()
    models.add(Dense(100, kernel_initializer='random_uniform', activation='relu', input_dim=1))
    models.add(Dense(50, activation='relu'))
    models.add(Dense(1, activation='tanh'))
    # The original also created adam(lr=0.001, beta_1=0.9, beta_2=0.999, decay=0.00001)
    # but never used it; the model is compiled with RMSprop.
    # 'accuracy' is a classification metric, so mean absolute error is tracked instead.
    models.compile(optimizer='rmsprop', loss='mse', metrics=['mae'])

    # Training data: y = sin(x) plus uniform noise in [0, 0.1)
    dataX = np.linspace(-2 * np.pi, 2 * np.pi, 1000).reshape(-1, 1)
    noise = np.random.rand(len(dataX), 1) * 0.1
    dataY = np.sin(dataX) + noise

    models.fit(dataX, dataY, epochs=100, batch_size=10, shuffle=True, verbose=1)
    predictY = models.predict(dataX, batch_size=1)
    score = models.evaluate(dataX, dataY, batch_size=10)

    print(score)  # [loss, mae]

    # Plot the noisy data (blue) against the predictions (red)
    fig, ax = plt.subplots()
    ax.plot(dataX, dataY, 'b-')
    ax.plot(dataX, predictY, 'r.')

    ax.set(xlabel="x", ylabel="y=f(x)", title="y = sin(x), red: predicted data, blue: true data")
    ax.grid(True)

    plt.show()
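With `loss='mse'`, the loss value that `evaluate` reports is the mean squared error between targets and predictions. As a minimal sketch of what that number means, the NumPy snippet below computes MSE (and MAE for comparison) by hand. The helper names `mse` and `mae`, the fixed seed, and the stand-in prediction (the noise-free sine) are illustrative assumptions, not part of the original example.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error, the quantity minimized when loss='mse'."""
    return float(np.mean((y_true - y_pred) ** 2))

def mae(y_true, y_pred):
    """Mean absolute error, often easier to read in the units of y."""
    return float(np.mean(np.abs(y_true - y_pred)))

rng = np.random.default_rng(0)  # seed chosen only for reproducibility
x = np.linspace(-2 * np.pi, 2 * np.pi, 1000).reshape(-1, 1)
y_true = np.sin(x) + rng.random((1000, 1)) * 0.1  # same recipe as Example 1
y_pred = np.sin(x)  # hypothetical stand-in for model.predict(x)

print("mse:", mse(y_true, y_pred))
print("mae:", mae(y_true, y_pred))
```

Because the noise is drawn from [0, 0.1), its mean is about 0.05 rather than 0, so even the noise-free sine is off by roughly 0.05 on average.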

Example 2

    import numpy as np
    from sklearn.preprocessing import MinMaxScaler
    import matplotlib.pyplot as plt
    from keras.models import Sequential
    from keras.layers import Dense, Activation
    from keras.optimizers import Adam, SGD

    X = np.linspace(1, 20, 1000)
    X = X[:, np.newaxis]
    y = np.sin(X) + np.random.normal(0, 0.08, (1000, 1))
    # Use one scaler per array so each keeps its own min/max
    x_scaler = MinMaxScaler((0, 1))
    y_scaler = MinMaxScaler((0, 1))
    x_train = x_scaler.fit_transform(X)
    y_train = y_scaler.fit_transform(y)

    model1 = Sequential()
    model1.add(Dense(1000, input_dim=1))
    model1.add(Activation('relu'))
    model1.add(Dense(1))
    model1.add(Activation('sigmoid'))  # output in (0, 1), matching the scaled targets
    adam = Adam(learning_rate=0.001)
    # Unused alternative optimizer. The original passed decay=12-5, which
    # evaluates to 7 and was probably meant to be 1e-5.
    sgd = SGD(learning_rate=0.1, momentum=0.9)
    model1.compile(optimizer=adam, loss='mse')
    print('-------------training--------------')
    model1.fit(x_train, y_train, batch_size=12, epochs=500, shuffle=True)
    Y_train_pred = model1.predict(x_train)
    plt.scatter(x_train, y_train)
    plt.plot(x_train, Y_train_pred, 'r-')
    plt.show()

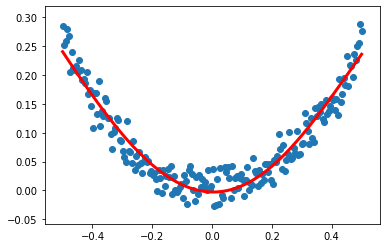
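Example 2 scales the targets to [0, 1] because the sigmoid output layer can only produce values in that range, so predictions come out in scaled units as well. As a rough sketch of what the scaling does and how to undo it, the snippet below implements min-max scaling in plain NumPy. The helper names `minmax_fit`, `minmax_transform`, and `minmax_inverse` and the fixed seed are hypothetical; scikit-learn's `MinMaxScaler` provides the same round trip through `fit_transform` and `inverse_transform`.

```python
import numpy as np

def minmax_fit(a):
    """Return the per-column minimum and maximum used for scaling."""
    return a.min(axis=0), a.max(axis=0)

def minmax_transform(a, lo, hi):
    """Map values from [lo, hi] to [0, 1]."""
    return (a - lo) / (hi - lo)

def minmax_inverse(a_scaled, lo, hi):
    """Map values from [0, 1] back to the original range."""
    return a_scaled * (hi - lo) + lo

rng = np.random.default_rng(0)  # seed chosen only for reproducibility
X = np.linspace(1, 20, 1000)[:, np.newaxis]
y = np.sin(X) + rng.normal(0, 0.08, (1000, 1))  # same recipe as Example 2

y_lo, y_hi = minmax_fit(y)
y_scaled = minmax_transform(y, y_lo, y_hi)
y_restored = minmax_inverse(y_scaled, y_lo, y_hi)

print(y_scaled.min(), y_scaled.max())
print(np.allclose(y_restored, y))
```

Applying `minmax_inverse` with the target's own `y_lo` and `y_hi` to the model's predictions would put them back in the units of the original sine curve, which is why the inputs and targets need separate scaling parameters.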

Example 3

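    # Way 2 of adding an activation: pass it to the layer,
    # e.g. model.add(Dense(units=10, input_dim=1, activation='...'))
    import numpy as np
    import matplotlib.pyplot as plt
    from keras.models import Sequential
    from keras.optimizers import SGD
    from keras.layers import Dense, Activation

    # x_data and y_data were not defined in the original snippet;
    # the data from Example 4 is assumed here.
    np.random.seed(0)
    x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
    noise = np.random.normal(0, 0.02, x_data.shape)
    y_data = np.square(x_data) + noise

    # Build a sequential model
    model = Sequential()

    # Add fully connected layers.
    # units is the output dimension, input_dim is the input dimension
    # (Shift+Tab twice shows a function's parameters in a notebook).
    # 1 input neuron, 10 hidden neurons, 1 output neuron
    model.add(Dense(units=10, input_dim=1, activation='relu'))  # nonlinear activation
    model.add(Dense(units=1, activation='relu'))  # input size (10) is inferred from the previous layer

    # Define the optimizer (with a custom learning rate)
    defsgd = SGD(learning_rate=0.3)

    # Compile the model: optimizer sets the optimizer, loss sets the objective
    model.compile(optimizer=defsgd, loss='mse')

    # Train the model
    for step in range(3001):
        # Train on one batch (the full data set) per step
        cost = model.train_on_batch(x_data, y_data)
        # Print the cost every 500 batches
        if step % 500 == 0:
            print('cost:', cost)

    # Print the weights and biases of the first Dense layer
    W, b = model.layers[0].get_weights()
    print('W:', W, 'b:', b)

    # Feed x_data through the network to get the predictions y_pred
    y_pred = model.predict(x_data)

    plt.scatter(x_data, y_data)

    plt.plot(x_data, y_pred, 'r-', lw=3)
    plt.show()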

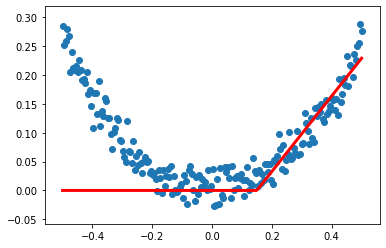

Example 4

    # Way 1 of adding an activation: model.add(Activation('...'))
    import numpy as np
    import matplotlib.pyplot as plt
    from keras.models import Sequential
    from keras.optimizers import SGD
    from keras.layers import Dense, Activation

    np.random.seed(0)
    x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
    noise = np.random.normal(0, 0.02, x_data.shape)
    y_data = np.square(x_data) + noise

    # Build a sequential model
    model = Sequential()

    # Add fully connected layers.
    # units is the output dimension, input_dim is the input dimension
    # (Shift+Tab twice shows a function's parameters in a notebook).
    # 1 input neuron, 10 hidden neurons, 1 output neuron
    model.add(Dense(units=10, input_dim=1))
    model.add(Activation('tanh'))  # nonlinear activation
    model.add(Dense(units=1))  # input size (10) is inferred from the previous layer
    model.add(Activation('tanh'))

    # Define the optimizer (with a custom learning rate)
    defsgd = SGD(learning_rate=0.3)

    # Compile the model: optimizer sets the optimizer, loss sets the objective
    model.compile(optimizer=defsgd, loss='mse')

    # Train the model
    for step in range(3001):
        # Train on one batch (the full data set) per step
        cost = model.train_on_batch(x_data, y_data)
        # Print the cost every 500 batches
        if step % 500 == 0:
            print('cost:', cost)

    # Print the weights and biases of the first Dense layer
    W, b = model.layers[0].get_weights()
    print('W:', W, 'b:', b)

    # Feed x_data through the network to get the predictions y_pred
    y_pred = model.predict(x_data)

    plt.scatter(x_data, y_data)

    plt.plot(x_data, y_pred, 'r-', lw=3)
    plt.show()
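The weights `W` and biases `b` printed above fully determine what the network computes. As a rough illustration, the forward pass of a 1-10-1 network with `tanh` activations can be written in a few lines of NumPy. The weights below are random placeholders with the shapes a `Dense` layer uses (kernel of shape `(input_dim, units)`, bias of shape `(units,)`), not values from a trained model, and the `forward` helper is a hypothetical name.

```python
import numpy as np

rng = np.random.default_rng(0)  # seed chosen only for reproducibility

# Placeholder parameters with the shapes of a 1-10-1 network
W1 = rng.normal(size=(1, 10))  # first Dense layer kernel
b1 = np.zeros(10)              # first Dense layer bias
W2 = rng.normal(size=(10, 1))  # second Dense layer kernel
b2 = np.zeros(1)               # second Dense layer bias

def forward(x, W1, b1, W2, b2):
    """Compute tanh(tanh(x W1 + b1) W2 + b2) for inputs of shape (n, 1)."""
    hidden = np.tanh(x @ W1 + b1)      # shape (n, 10)
    return np.tanh(hidden @ W2 + b2)   # shape (n, 1)

x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
y_pred = forward(x_data, W1, b1, W2, b2)
print(y_pred.shape)
```

Substituting the arrays returned by `get_weights()` on each `Dense` layer for the placeholders should reproduce what `model.predict` returns, up to floating-point precision.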

Example 5

    import numpy as np
    import matplotlib.pyplot as plt
    from keras.models import Sequential
    from keras.layers import Dense
    from keras.optimizers import Adam

    np.random.seed(0)
    points = 500
    # Shape (points, 1) so each sample is a one-feature row
    X = np.linspace(-3, 3, points)[:, np.newaxis]
    y = np.sin(X) + np.random.uniform(-0.5, 0.5, X.shape)

    model = Sequential()
    model.add(Dense(50, activation='sigmoid', input_dim=1))
    model.add(Dense(30, activation='sigmoid'))
    model.add(Dense(1))  # linear output for regression
    adam = Adam(learning_rate=0.01)
    model.compile(loss='mse', optimizer=adam)
    model.fit(X, y, epochs=50)

    predictions = model.predict(X)
    plt.scatter(X, y)
    plt.plot(X, predictions, 'ro')
    plt.show()

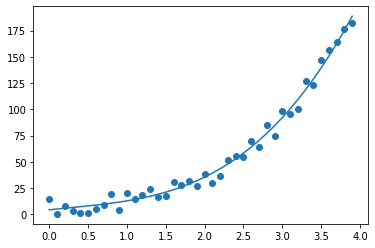

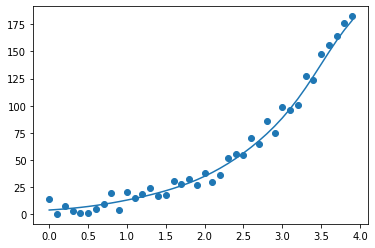

Example 6

    %matplotlib inline
    import matplotlib.pyplot as plt
    import numpy as np

    x = list(np.arange(0, 4, 0.1))
    # Add noise to a cubic polynomial
    y = list(map(lambda val: val**3 * 3 + np.random.random() * 20, x))
    plt.scatter(x, y)
    # Fit with a degree-3 polynomial
    w = np.polyfit(x, y, 3)
    fn = np.poly1d(w)
    # Print the fitted coefficients and the resulting polynomial
    print(w)
    print(fn)
    plt.plot(x, fn(x))
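One way to judge the polynomial fit numerically, rather than only by eye, is to look at the residuals and the coefficient of determination R². The sketch below repeats the recipe from Example 6 with a fixed seed (an assumption added for reproducibility) and computes both. `np.polyfit` returns the coefficients from the highest degree down.

```python
import numpy as np

rng = np.random.default_rng(0)  # seed chosen only for reproducibility
x = np.arange(0, 4, 0.1)
y = 3 * x**3 + rng.random(x.shape) * 20  # cubic plus uniform noise in [0, 20)

w = np.polyfit(x, y, 3)  # coefficients, highest degree first
fn = np.poly1d(w)

residuals = y - fn(x)
ss_res = np.sum(residuals**2)          # unexplained variation
ss_tot = np.sum((y - y.mean())**2)     # total variation
r2 = 1 - ss_res / ss_tot

print("coefficients:", w)
print("R^2:", r2)
```

An R² close to 1 indicates that the cubic explains most of the variation in `y`; what remains is roughly the spread of the added noise.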

Example 7

    %matplotlib inline
    import numpy as np
    import matplotlib.pyplot as plt
    from keras.models import Sequential
    from keras.layers import Dense, Activation, LeakyReLU

    x = np.arange(0, 4, 0.1)
    # Add noise to a cubic polynomial
    y = x**3 * 3 + np.random.random(x.shape) * 20
    # fn came from Example 6 in the original; it is refit here so the block runs on its own
    fn = np.poly1d(np.polyfit(x, y, 3))

    model = Sequential()
    # More neurons tend to fit better and converge faster;
    # with too few, the network struggles to converge to the target curve
    model.add(Dense(100, input_shape=(1,)))

    # ReLU: the result was a horizontal line
    # Tanh: slightly better than ReLU, but still underfits
    # Sigmoid: with enough neurons (50+) and more than 1000 epochs, the fit is reasonably good
    model.add(Activation('sigmoid'))
    #model.add(LeakyReLU(alpha=0.01))
    #model.add(Dense(3))

    model.add(Dense(1))
    model.compile(optimizer="sgd", loss="mse")
    # Keras expects one row per sample, so reshape x to (n, 1)
    model.fit(x.reshape(-1, 1), y, epochs=2000, verbose=0)

    # Values of the polynomial fit, for comparison
    print(type(fn(3)))
    print(fn(1))
    print(fn(3))

    plt.scatter(x, y)
    plt.plot(x, model.predict(x.reshape(-1, 1)))
