NLP FROM SCRATCH: CLASSIFYING NAMES WITH A CHARACTER-LEVEL RNN

翻译总结于 pytorch官网 Author: Sean Robertson

构建一个基础的character-level RNN来分类单词。本文和接下来的两篇文章将会从头(数据处理)开始构建NLP模型。特别的没有利用各种已有的操作(torchtext),所以可以从底层来学习NLP模型的处理过程。

character-level RNN按照序列读取单词,每步输出一个预测和“隐藏态”(hidden state),将前一个隐藏态输入到下一步。利用最终的预测作为输出,即预测的类别。

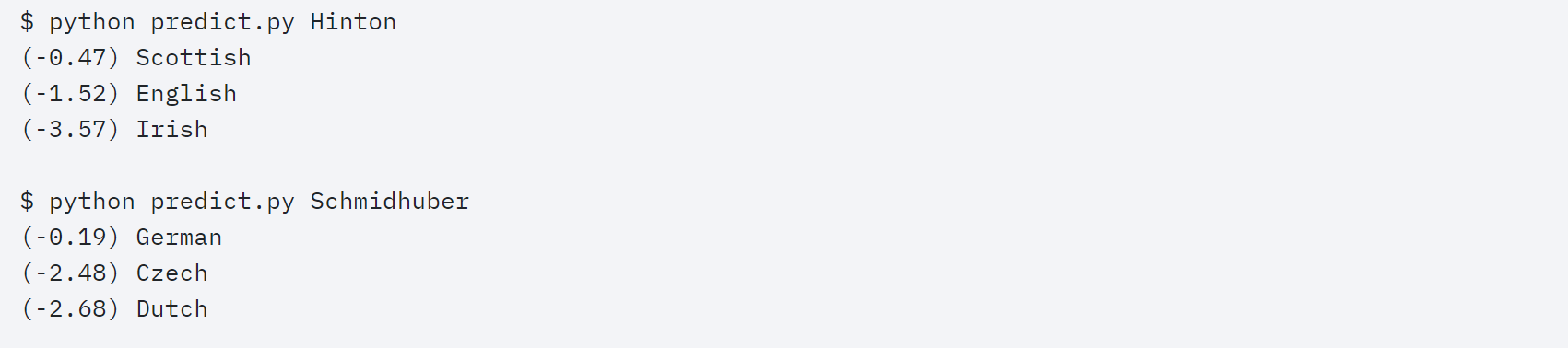

特别的,将对来自18种语言的几千个姓氏进行训练,并根据拼写来预测该姓氏来自哪种语言。例如:

推荐阅读:

I assume you have at least installed PyTorch, know Python, and understand Tensors:

- https://pytorch.org/ For installation instructions

- Deep Learning with PyTorch: A 60 Minute Blitz to get started with PyTorch in general

- Learning PyTorch with Examples for a wide and deep overview

- PyTorch for Former Torch Users if you are former Lua Torch user

It would also be useful to know about RNNs and how they work:

- The Unreasonable Effectiveness of Recurrent Neural Networks shows a bunch of real life examples

- Understanding LSTM Networks is about LSTMs specifically but also informative about RNNs in general

数据准备

数据下载:Download the data from here and extract it to the current directory.

在data/names文件下有18个txt文件,以[language].txt命名。每个文件包含了一些名字,一行一个名字(需要将其从unicode转为ascii)。

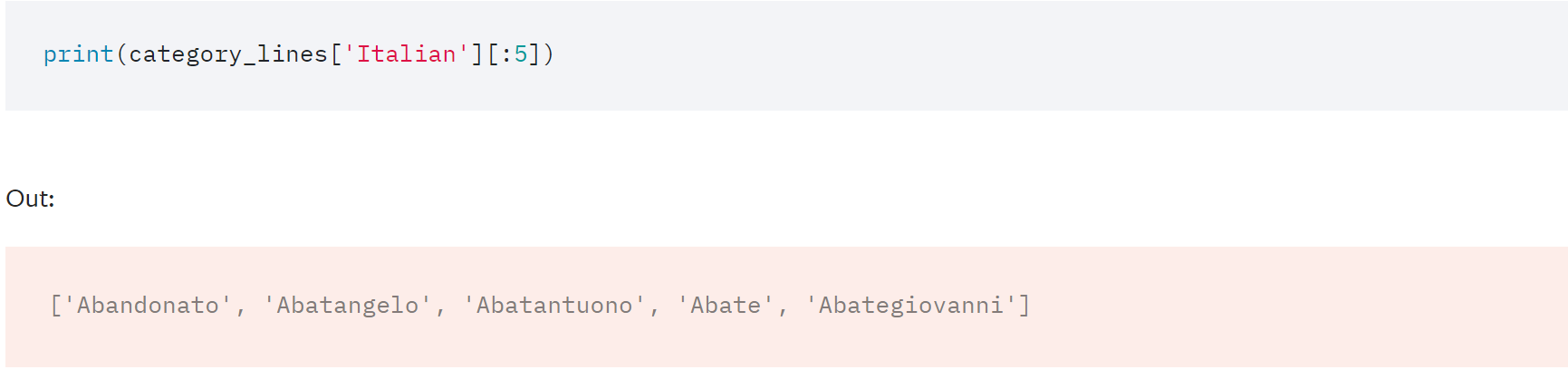

最后生成字典列表,每个字典元素都是:{language:[names...]}。字典格式:变量“category”和“line”(表示语言和名字)在后面会用到。

1 # -*- coding: utf-8 -*-

2 """

3 Created on Tue Apr 14 11:12:13 2020

4

5 @author: lenovo

6 """

7

8 from __future__ import unicode_literals, print_function, division

9 from io import open

10 import glob

11 import os

12

13 def findFiles(path): return glob.glob(path)

14

15 print(findFiles('data/names/*.txt'))

16

17 import unicodedata

18 import string

19

20 all_letters = string.ascii_letters + " .,;'"

21 n_letters = len(all_letters) # 57

22

23 # Turn a Unicode string to plain ASCII, thanks to https://stackoverflow.com/a/518232/2809427

24 def unicodeToAscii(s):

25 return ''.join(

26 c for c in unicodedata.normalize('NFD', s)

27 if unicodedata.category(c) != 'Mn'

28 and c in all_letters

29 )

30

31 print(unicodeToAscii('Ślusàrski'))

32

33 # Build the category_lines dictionary, a list of names per language

34 category_lines = {}

35 all_categories = []

36

37 # Read a file and split into lines

38 def readLines(filename):

39 lines = open(filename, encoding='utf-8').read().strip().split('\n')

40 return [unicodeToAscii(line) for line in lines]

41

42 for filename in findFiles('data/names/*.txt'):

43 category = os.path.splitext(os.path.basename(filename))[0]

44 all_categories.append(category)

45 lines = readLines(filename)

46 category_lines[category] = lines # 字典 语言到名字

47

48 n_categories = len(all_categories) # 字典长度 18

category_lines变量里面就是一个字典,包括从category(不同语言)到名字names(列表)的映射。同时all_categories就是所有语言的list,而n_categories是list长度,即语言数。

将名字转为Tensor

为利用数据,需要将name转为tensor。利用独热编码实现编码字母,例如b就是<0 1 0 0 0 ...>。所以一个名字就是2维的矩阵(由字母组成)。在批量这个维度上有维度1(pytorch语法格式)。

1 import torch

2

3 # Find letter index from all_letters, e.g. "a" = 0

4 def letterToIndex(letter):

5 return all_letters.find(letter)

6

7 # Just for demonstration, turn a letter into a <1 x n_letters> Tensor

8 def letterToTensor(letter):

9 tensor = torch.zeros(1, n_letters)

10 tensor[0][letterToIndex(letter)] = 1

11 return tensor

12

13 # Turn a line into a <line_length x 1 x n_letters>,

14 # or an array of one-hot letter vectors

15 def lineToTensor(line):

16 tensor = torch.zeros(len(line), 1, n_letters)

17 for li, letter in enumerate(line):

18 tensor[li][0][letterToIndex(letter)] = 1

19 return tensor

20

21 print(letterToTensor('J'))

22

23 print(lineToTensor('Jones').size())

建立网络

在自动求导前,toch里构建循环神经网络包括在几个时间步骤中复制网络层参数。该网络层包括隐藏状态和梯度。因为是自动求导,所以可以构建一个非常纯的RNN实现。本文的RNN模型主要copy自the PyTorch for Torch users tutorial,仅仅有两层组成。包括输入、隐藏态、logsoftmax层。

1 class RNN(nn.Module):

2 def __init__(self, input_size, hidden_size, output_size):

3 super(RNN, self).__init__()

4

5 self.hidden_size = hidden_size

6

7 self.i2h = nn.Linear(input_size + hidden_size, hidden_size)

8 self.i2o = nn.Linear(input_size + hidden_size, output_size)

9 self.softmax = nn.LogSoftmax(dim=1)

10

11 def forward(self, input, hidden):

12 combined = torch.cat((input, hidden), 1)

13 hidden = self.i2h(combined)

14 output = self.i2o(combined)

15 output = self.softmax(output)

16 return output, hidden

17

18 def initHidden(self):

19 return torch.zeros(1, self.hidden_size)

20

21 n_hidden = 128

22 rnn = RNN(n_letters, n_hidden, n_categories)

这步需要给一个输入(当前字母的tensor)和之前的隐藏态(最初初始化为全零)。得到的是输出(每个语言的概率)和下一个隐藏态(存起来为了下一步)。

1 input = letterToTensor('A')

2 hidden =torch.zeros(1, n_hidden)

3

4 output, next_hidden = rnn(input, hidden)

为了效率,不想每一步都创一个新的tensor,利用lineToTensor而不是letterToTensor,并利用切片。预处理批量tensors时可以进一步优化。

1 input = lineToTensor('Albert')

2 hidden = torch.zeros(1, n_hidden)

3

4 output, next_hidden = rnn(input[0], hidden)

5 print(output)

这个就是对所有类别的预测,给定一个名字,哪个概率最高,就属于哪类。

训练(准备工作)

正式训练之前需要一些帮助函数。首先是网络的输出,即类别概率,利用Tensor.topk来得到最大值的索引。

1 def categoryFromOutput(output):

2 top_n, top_i = output.topk(1)

3 category_i = top_i[0].item()

4 return all_categories[category_i], category_i

5

6 print(categoryFromOutput(output))

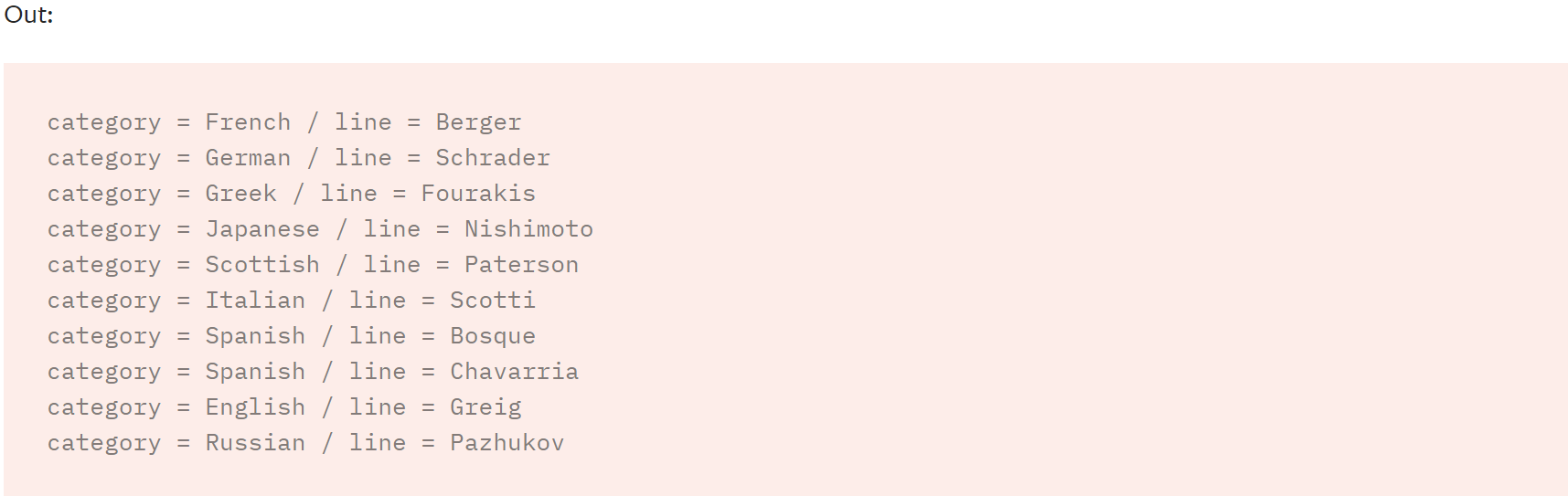

以下是一个随即整的训练样本:

1 import random

2

3 def randomChoice(l):

4 return l[random.randint(0, len(l) - 1)]

5

6 def randomTrainingExample():

7 category = randomChoice(all_categories)

8 line = randomChoice(category_lines[category])

9 category_tensor = torch.tensor([all_categories.index(category)], dtype=torch.long)

10 line_tensor = lineToTensor(line)

11 return category, line, category_tensor, line_tensor

12

13 for i in range(10):

14 category, line, category_tensor, line_tensor = randomTrainingExample()

15 print('category =', category, '/ line =', line)

训练网络

对于损失函数,可以利用nn.NLLLoss,RNN的最后一层为nn.LogSoftmax

criterion = nn.NLLLoss()

每个循环过程分别执行:

- 创建输入和目标tensor

- 创建初始的隐藏态为全零

- 读取每个字母并为下个字母保持隐藏态

- 对比输出和目标

- 反向传播

- 输出loss

1 learning_rate = 0.005 # If you set this too high, it might explode. If too low, it might not learn

2

3 def train(category_tensor, line_tensor):

4 hidden = rnn.initHidden()

5

6 rnn.zero_grad()

7

8 for i in range(line_tensor.size()[0]):

9 output, hidden = rnn(line_tensor[i], hidden)

10

11 loss = criterion(output, category_tensor)

12 loss.backward()

13

14 # Add parameters' gradients to their values, multiplied by learning rate

15 for p in rnn.parameters():

16 p.data.add_(-learning_rate, p.grad.data)

17

18 return output, loss.item()

现在可以跑了。train函数返回输出和损失,可以打印一波看看。因为有1000多个样本,仅仅打印一部分看看。

1 import time 2 import math 3 4 n_iters = 100000 5 print_every = 5000 6 plot_every = 1000 7 8 9 10 # Keep track of losses for plotting 11 current_loss = 0 12 all_losses = [] 13 14 def timeSince(since): 15 now = time.time() 16 s = now - since 17 m = math.floor(s / 60) 18 s -= m * 60 19 return '%dm %ds' % (m, s) 20 21 start = time.time() 22 23 for iter in range(1, n_iters + 1): 24 category, line, category_tensor, line_tensor = randomTrainingExample() 25 output, loss = train(category_tensor, line_tensor) 26 current_loss += loss 27 28 # Print iter number, loss, name and guess 29 if iter % print_every == 0: 30 guess, guess_i = categoryFromOutput(output) 31 correct = '✓' if guess == category else '✗ (%s)' % category 32 print('%d %d%% (%s) %.4f %s / %s %s' % (iter, iter / n_iters * 100, timeSince(start), loss, line, guess, correct)) 33 34 # Add current loss avg to list of losses 35 if iter % plot_every == 0: 36 all_losses.append(current_loss / plot_every) 37 current_loss = 0

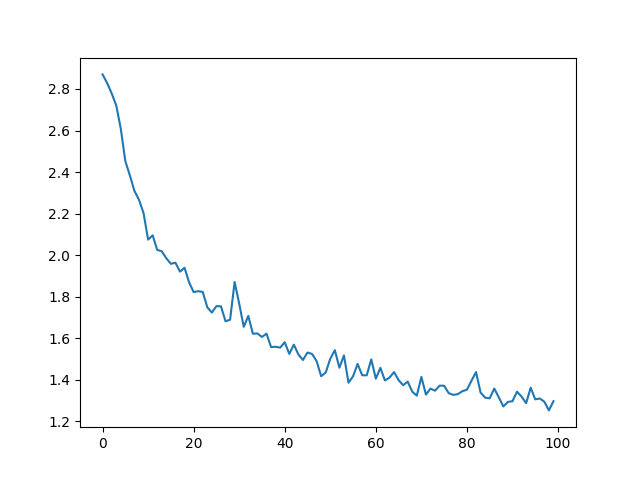

画出结果图

画出累计的损失值

import matplotlib.pyplot as plt import matplotlib.ticker as ticker plt.figure() plt.plot(all_losses)

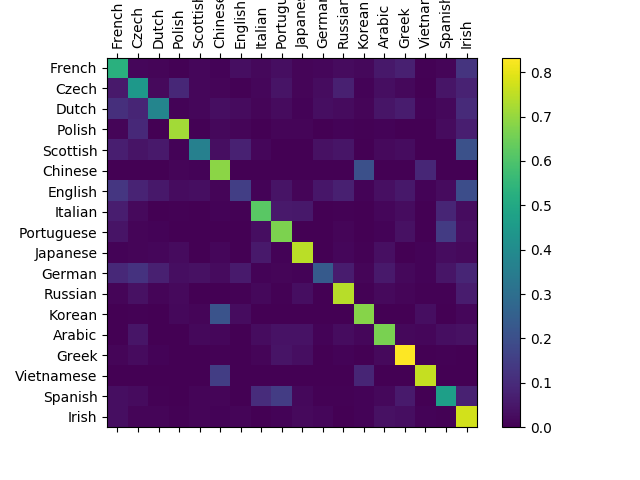

结果评估

为了对比在不同类别的表现,构建一个混淆矩阵,每行表示一个语言,列为各个语言的预测。为了计算混合矩阵,使用evaluate()在网络中运行一组样本,与train()中除去backprop相同。

1 # Keep track of correct guesses in a confusion matrix 2 confusion = torch.zeros(n_categories, n_categories) 3 n_confusion = 10000 4 5 # Just return an output given a line 6 def evaluate(line_tensor): 7 hidden = rnn.initHidden() 8 9 for i in range(line_tensor.size()[0]): 10 output, hidden = rnn(line_tensor[i], hidden) 11 12 return output 13 14 # Go through a bunch of examples and record which are correctly guessed 15 for i in range(n_confusion): 16 category, line, category_tensor, line_tensor = randomTrainingExample() 17 output = evaluate(line_tensor) 18 guess, guess_i = categoryFromOutput(output) 19 category_i = all_categories.index(category) 20 confusion[category_i][guess_i] += 1 21 22 # Normalize by dividing every row by its sum 23 for i in range(n_categories): 24 confusion[i] = confusion[i] / confusion[i].sum() 25 26 # Set up plot 27 fig = plt.figure() 28 ax = fig.add_subplot(111) 29 cax = ax.matshow(confusion.numpy()) 30 fig.colorbar(cax) 31 32 # Set up axes 33 ax.set_xticklabels([''] + all_categories, rotation=90) 34 ax.set_yticklabels([''] + all_categories) 35 36 # Force label at every tick 37 ax.xaxis.set_major_locator(ticker.MultipleLocator(1)) 38 ax.yaxis.set_major_locator(ticker.MultipleLocator(1)) 39 40 # sphinx_gallery_thumbnail_number = 2 41 plt.show()

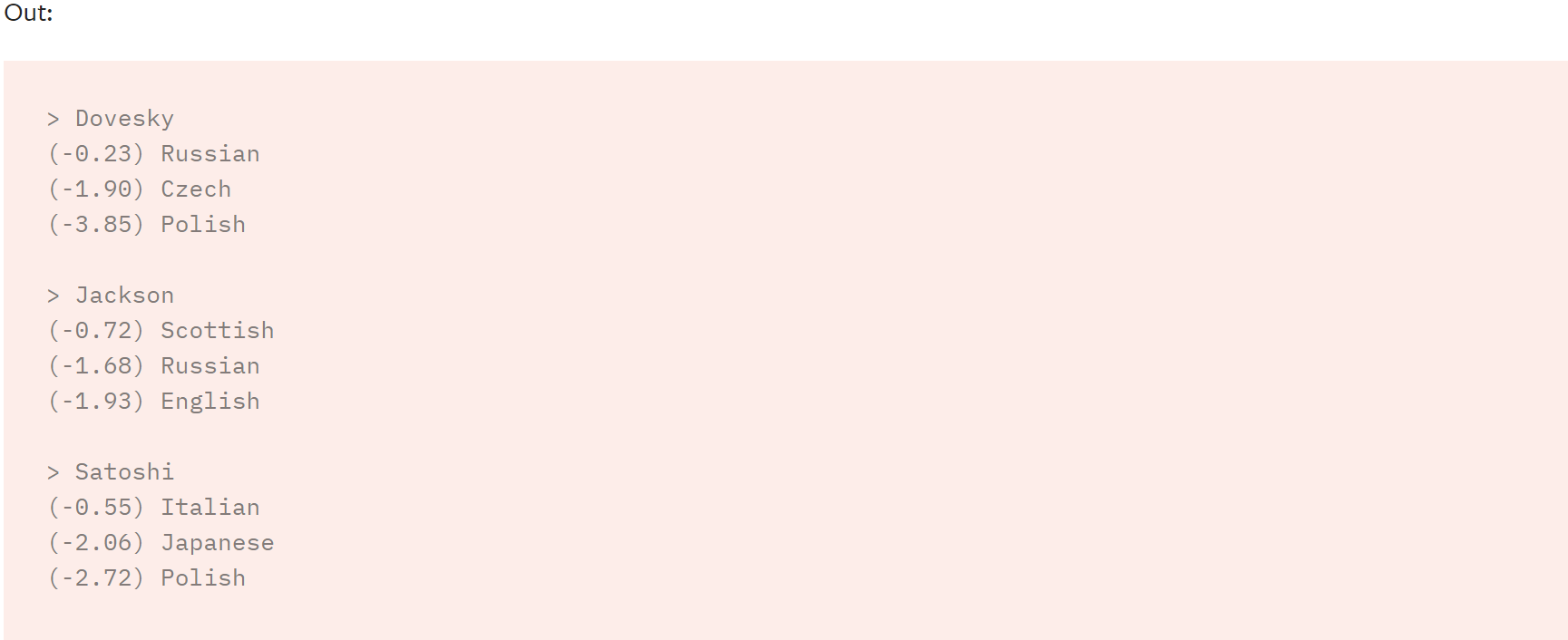

用户预测

1 def predict(input_line, n_predictions=3): 2 print('\n> %s' % input_line) 3 with torch.no_grad(): 4 output = evaluate(lineToTensor(input_line)) 5 6 # Get top N categories 7 topv, topi = output.topk(n_predictions, 1, True) 8 predictions = [] 9 10 for i in range(n_predictions): 11 value = topv[0][i].item() 12 category_index = topi[0][i].item() 13 print('(%.2f) %s' % (value, all_categories[category_index])) 14 predictions.append([value, all_categories[category_index]]) 15 16 predict('Dovesky') 17 predict('Jackson') 18 predict('Satoshi')

The final versions of the scripts in the Practical PyTorch repo split the above code into a few files:

data.py(loads files)model.py(defines the RNN)train.py(runs training)predict.py(runspredict()with command line arguments)server.py(serve prediction as a JSON API with bottle.py)

Run train.py to train and save the network.

Run predict.py with a name to view predictions:

Run server.py and visit http://localhost:5533/Yourname to get JSON output of predictions.

Exercises

- Try with a different dataset of line -> category, for example:

- Any word -> language

- First name -> gender

- Character name -> writer

- Page title -> blog or subreddit

- Get better results with a bigger and/or better shaped network

- Add more linear layers

- Try the

nn.LSTMandnn.GRUlayers - Combine multiple of these RNNs as a higher level network

Total running time of the script: ( 3 minutes 6.446 seconds)

整体代码:

1 # -*- coding: utf-8 -*- 2 """ 3 Created on Tue Apr 14 11:12:13 2020 4 5 @author: lenovo 6 """ 7 8 from __future__ import unicode_literals, print_function, division 9 from io import open 10 import glob 11 import os 12 13 def findFiles(path): return glob.glob(path) 14 15 print(findFiles('data/names/*.txt')) 16 17 import unicodedata 18 import string 19 20 all_letters = string.ascii_letters + " .,;'" 21 n_letters = len(all_letters) 22 23 # Turn a Unicode string to plain ASCII, thanks to https://stackoverflow.com/a/518232/2809427 24 def unicodeToAscii(s): 25 return ''.join( 26 c for c in unicodedata.normalize('NFD', s) 27 if unicodedata.category(c) != 'Mn' 28 and c in all_letters 29 ) 30 31 print(unicodeToAscii('Ślusàrski')) 32 33 # Build the category_lines dictionary, a list of names per language 34 category_lines = {} 35 all_categories = [] 36 37 # Read a file and split into lines 38 def readLines(filename): 39 lines = open(filename, encoding='utf-8').read().strip().split('\n') 40 return [unicodeToAscii(line) for line in lines] 41 42 for filename in findFiles('data/names/*.txt'): 43 category = os.path.splitext(os.path.basename(filename))[0] 44 all_categories.append(category) 45 lines = readLines(filename) 46 category_lines[category] = lines 47 48 n_categories = len(all_categories) 49 50 51 52 import torch 53 54 # Find letter index from all_letters, e.g. "a" = 0 55 def letterToIndex(letter): 56 return all_letters.find(letter) 57 58 # Just for demonstration, turn a letter into a <1 x n_letters> Tensor 59 def letterToTensor(letter): 60 tensor = torch.zeros(1, n_letters) 61 tensor[0][letterToIndex(letter)] = 1 62 return tensor 63 64 # Turn a line into a <line_length x 1 x n_letters>, 65 # or an array of one-hot letter vectors 66 def lineToTensor(line): 67 tensor = torch.zeros(len(line), 1, n_letters) 68 for li, letter in enumerate(line): 69 tensor[li][0][letterToIndex(letter)] = 1 70 return tensor 71 72 print(letterToTensor('J')) 73 74 print(lineToTensor('Jones').size()) 75 76 77 import torch.nn as nn 78 79 class RNN(nn.Module): 80 def __init__(self, input_size, hidden_size, output_size): 81 super(RNN, self).__init__() 82 83 self.hidden_size = hidden_size 84 85 self.i2h = nn.Linear(input_size + hidden_size, hidden_size) 86 self.i2o = nn.Linear(input_size + hidden_size, output_size) 87 self.softmax = nn.LogSoftmax(dim=1) 88 89 def forward(self, input, hidden): 90 combined = torch.cat((input, hidden), 1) 91 hidden = self.i2h(combined) 92 output = self.i2o(combined) 93 output = self.softmax(output) 94 return output, hidden 95 96 def initHidden(self): 97 return torch.zeros(1, self.hidden_size) 98 99 n_hidden = 128 100 rnn = RNN(n_letters, n_hidden, n_categories) 101 102 input = letterToTensor('A') 103 hidden =torch.zeros(1, n_hidden) 104 105 output, next_hidden = rnn(input, hidden) 106 107 def categoryFromOutput(output): 108 top_n, top_i = output.topk(1) 109 category_i = top_i[0].item() 110 return all_categories[category_i], category_i 111 112 print(categoryFromOutput(output)) 113 114 import random 115 116 def randomChoice(l): 117 return l[random.randint(0, len(l) - 1)] 118 119 def randomTrainingExample(): 120 category = randomChoice(all_categories) 121 line = randomChoice(category_lines[category]) 122 category_tensor = torch.tensor([all_categories.index(category)], dtype=torch.long) 123 line_tensor = lineToTensor(line) 124 return category, line, category_tensor, line_tensor 125 126 for i in range(10): 127 category, line, category_tensor, line_tensor = randomTrainingExample() 128 print('category =', category, '/ line =', line) 129 130 criterion = nn.NLLLoss() 131 132 learning_rate = 0.005 # If you set this too high, it might explode. If too low, it might not learn 133 134 def train(category_tensor, line_tensor): 135 hidden = rnn.initHidden() 136 137 rnn.zero_grad() 138 139 for i in range(line_tensor.size()[0]): 140 output, hidden = rnn(line_tensor[i], hidden) 141 142 loss = criterion(output, category_tensor) 143 loss.backward() 144 145 # Add parameters' gradients to their values, multiplied by learning rate 146 for p in rnn.parameters(): 147 p.data.add_(-learning_rate, p.grad.data) 148 149 return output, loss.item() 150 151 152 import time 153 import math 154 155 n_iters = 100000 156 print_every = 5000 157 plot_every = 1000 158 159 160 161 # Keep track of losses for plotting 162 current_loss = 0 163 all_losses = [] 164 165 def timeSince(since): 166 now = time.time() 167 s = now - since 168 m = math.floor(s / 60) 169 s -= m * 60 170 return '%dm %ds' % (m, s) 171 172 start = time.time() 173 174 for iter in range(1, n_iters + 1): 175 category, line, category_tensor, line_tensor = randomTrainingExample() 176 output, loss = train(category_tensor, line_tensor) 177 current_loss += loss 178 179 # Print iter number, loss, name and guess 180 if iter % print_every == 0: 181 guess, guess_i = categoryFromOutput(output) 182 correct = '✓' if guess == category else '✗ (%s)' % category 183 print('%d %d%% (%s) %.4f %s / %s %s' % (iter, iter / n_iters * 100, timeSince(start), loss, line, guess, correct)) 184 185 # Add current loss avg to list of losses 186 if iter % plot_every == 0: 187 all_losses.append(current_loss / plot_every) 188 current_loss = 0 189 190 import time 191 import math 192 193 n_iters = 100000 194 print_every = 5000 195 plot_every = 1000 196 197 198 199 # Keep track of losses for plotting 200 current_loss = 0 201 all_losses = [] 202 203 def timeSince(since): 204 now = time.time() 205 s = now - since 206 m = math.floor(s / 60) 207 s -= m * 60 208 return '%dm %ds' % (m, s) 209 210 start = time.time() 211 212 for iter in range(1, n_iters + 1): 213 category, line, category_tensor, line_tensor = randomTrainingExample() 214 output, loss = train(category_tensor, line_tensor) 215 current_loss += loss 216 217 # Print iter number, loss, name and guess 218 if iter % print_every == 0: 219 guess, guess_i = categoryFromOutput(output) 220 correct = '✓' if guess == category else '✗ (%s)' % category 221 print('%d %d%% (%s) %.4f %s / %s %s' % (iter, iter / n_iters * 100, timeSince(start), loss, line, guess, correct)) 222 223 # Add current loss avg to list of losses 224 if iter % plot_every == 0: 225 all_losses.append(current_loss / plot_every) 226 current_loss = 0 227 228 import matplotlib.pyplot as plt 229 import matplotlib.ticker as ticker 230 231 plt.figure() 232 plt.plot(all_losses)

浙公网安备 33010602011771号

浙公网安备 33010602011771号