kubernetes ---- Collecting K8s Logs with Loki (File-Based Logs)

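Our company's K8s cluster is not very large, so we collect logs with Loki + Grafana. Loki is installed with Helm. Before starting it, we edit the rendered manifests and the configuration file to apply a custom configuration, so that Loki can collect our logs, including logs written to files.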

1. Deploying Loki

- Install Helm:

https://www.yuque.com/kkkfree/ilecfh/tf6dai

- Pull the loki-stack chart with Helm:

```bash
# Add the chart repository
~] helm repo add grafana https://grafana.github.io/helm-charts
~] helm repo update

# Pull loki-stack 2.0.0: our existing Grafana is version 5.0, which is not
# compatible with the latest Loki, so we use an older release
~] helm fetch grafana/loki-stack --version v2.0.0 --untar --untardir .
~] cd loki-stack

# Render the chart into Kubernetes manifests
loki-stack ] helm template loki . > loki.yaml
```

- Customize the deployment manifest

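```bash
loki-stack ] mkdir conf
loki-stack ] cp loki.yaml{,.bak}
# helm template renders the resources into the default namespace by default; move them to the
# logs namespace used in the rest of this post (create it first: kubectl create namespace logs)
loki-stack ] sed -i 's/namespace: default/namespace: logs/g' loki.yaml
loki-stack ] vim loki.yaml
```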

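```yaml
# ...
apiVersion: v1
kind: Service
metadata:
  name: loki
  namespace: logs
  labels:
    app: loki
    chart: loki-2.0.0
    release: loki
    heritage: Helm
  annotations:
    {}
spec:
  # Change the type to NodePort to expose port 3100 on the nodes. 3100 is outside the
  # default NodePort range (30000-32767), so the API server's --service-node-port-range
  # must include it. We proxy through Nginx, so leaving the type unchanged also works.
  type: NodePort
  ports:
    - port: 3100
      protocol: TCP
      name: http-metrics
      targetPort: http-metrics
      nodePort: 3100
  selector:
    app: loki
    release: loki
```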

```yaml
# Search for "storage" and replace its emptyDir with the PVC, so the log data is persisted
volumes:
  - name: config
    secret:
      secretName: loki
  - name: storage
    persistentVolumeClaim:
      claimName: logs-pvc
```

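```yaml
# Search for "hostPath" to find the volumes defined in the promtail DaemonSet and add this
# entry under volumes: it is the node directory that holds the file-based logs
- hostPath:
    path: /gensee/nginx
    type: ""
  name: nginx

# Add the matching entry under the container's volumeMounts as well
- mountPath: /gensee/nginx
  name: nginx
```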

```yaml
# Search for kubernetes-pods-static. In that ConfigMap, add the following custom jobs for
# file-based logs after the kubernetes-pods-static job (indent them like the existing entries).
- job_name: kubernetes-pods-name-nginx
  pipeline_stages:
    - docker: {}
  kubernetes_sd_configs:
    - role: pod
  relabel_configs:
    - source_labels:
        - __meta_kubernetes_pod_label_name
      target_label: __service__
    - source_labels:
        - __meta_kubernetes_pod_node_name
      target_label: __host__
    - action: drop
      regex: ''
      source_labels:
        - __service__
    - action: labelmap
      regex: __meta_kubernetes_pod_label_(.+)
    - action: replace
      replacement: $1
      separator: /
      source_labels:
        - __meta_kubernetes_namespace
        - __service__
      target_label: job
    - action: replace
      source_labels:
        - __meta_kubernetes_namespace
      target_label: namespace
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: pod
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_container_name
      target_label: container
    # Tail /gensee/nginx/<pod name>/*.log on the node
    - replacement: /gensee/nginx/$1/*.log
      separator: /
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: __path__
```
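To make the relabel rules easier to follow, here is a minimal Python sketch of what an `action: replace` rule does (replace is also the default action, which is why the `__path__` rule omits it): the values of `source_labels` are joined with `separator`, matched against an anchored `regex` (default `(.*)`), and `replacement` is written to `target_label`. This is a simplified model, not Promtail's code; the `relabel_replace` helper and the sample pod are hypothetical.

```python
import re
from typing import Dict, List


def relabel_replace(
    labels: Dict[str, str],
    source_labels: List[str],
    target_label: str,
    separator: str = ";",
    regex: str = "(.*)",
    replacement: str = "$1",
) -> Dict[str, str]:
    """Simplified model of a Promtail/Prometheus `action: replace` relabel rule."""
    # Join the source label values; labels that are absent count as "".
    value = separator.join(labels.get(name, "") for name in source_labels)
    # Relabel regexes are anchored, so the whole joined value must match.
    match = re.fullmatch(regex, value)
    if match is None:
        return labels  # no match: the rule leaves the label set untouched
    # Turn Prometheus-style $1, $2 ... into Python's \g<1>, \g<2> ...
    template = re.sub(r"\$(\d+)", r"\\g<\1>", replacement)
    result = dict(labels)
    result[target_label] = match.expand(template)
    return result


# Hypothetical discovered labels for one Nginx pod.
pod = {
    "__meta_kubernetes_namespace": "default",
    "__meta_kubernetes_pod_name": "nginx-7d9c5f8b6c-x2k4q",
    "__service__": "nginx",
}
# job = <namespace>/<__service__>, as in the "target_label: job" rule
pod = relabel_replace(pod, ["__meta_kubernetes_namespace", "__service__"], "job", separator="/")
# __path__ = the files Promtail will tail for this pod
pod = relabel_replace(
    pod, ["__meta_kubernetes_pod_name"], "__path__",
    separator="/", replacement="/gensee/nginx/$1/*.log",
)
print(pod["job"])       # default/nginx
print(pod["__path__"])  # /gensee/nginx/nginx-7d9c5f8b6c-x2k4q/*.log
```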

```yaml
- job_name: kubernetes-pods-app-eschol-nginx
  pipeline_stages:
    - docker: {}
  kubernetes_sd_configs:
    - role: pod
  relabel_configs:
    - action: drop
      regex: .+
      source_labels:
        - __meta_kubernetes_pod_label_name
    - source_labels:
        - __meta_kubernetes_pod_label_app
      target_label: __service__
    - source_labels:
        - __meta_kubernetes_pod_node_name
      target_label: __host__
    - action: drop
      regex: ''
      source_labels:
        - __service__
    - action: labelmap
      regex: __meta_kubernetes_pod_label_(.+)
    - action: replace
      replacement: $1
      separator: /
      source_labels:
        - __meta_kubernetes_namespace
        - __service__
      target_label: job
    - action: replace
      source_labels:
        - __meta_kubernetes_namespace
      target_label: namespace
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: pod
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_container_name
      target_label: container
    - replacement: /gensee/nginx/$1/*.log
      separator: /
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: __path__
```

```yaml
- job_name: kubernetes-pods-direct-controllers-nginx
  pipeline_stages:
    - docker: {}
  kubernetes_sd_configs:
    - role: pod
  relabel_configs:
    - action: drop
      regex: .+
      separator: ''
      source_labels:
        - __meta_kubernetes_pod_label_name
        - __meta_kubernetes_pod_label_app
    - action: drop
      regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
      source_labels:
        - __meta_kubernetes_pod_controller_name
    - source_labels:
        - __meta_kubernetes_pod_controller_name
      target_label: __service__
    - source_labels:
        - __meta_kubernetes_pod_node_name
      target_label: __host__
    - action: drop
      regex: ''
      source_labels:
        - __service__
    - action: labelmap
      regex: __meta_kubernetes_pod_label_(.+)
    - action: replace
      replacement: $1
      separator: /
      source_labels:
        - __meta_kubernetes_namespace
        - __service__
      target_label: job
    - action: replace
      source_labels:
        - __meta_kubernetes_namespace
      target_label: namespace
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: pod
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_container_name
      target_label: container
    - replacement: /gensee/nginx/$1/*.log
      separator: /
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: __path__
```

```yaml
- job_name: kubernetes-pods-indirect-controller-nginx
  pipeline_stages:
    - docker: {}
  kubernetes_sd_configs:
    - role: pod
  relabel_configs:
    - action: drop
      regex: .+
      separator: ''
      source_labels:
        - __meta_kubernetes_pod_label_name
        - __meta_kubernetes_pod_label_app
    - action: keep
      regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
      source_labels:
        - __meta_kubernetes_pod_controller_name
    - action: replace
      regex: '([0-9a-z-.]+)-[0-9a-f]{8,10}'
      source_labels:
        - __meta_kubernetes_pod_controller_name
      target_label: __service__
    - source_labels:
        - __meta_kubernetes_pod_node_name
      target_label: __host__
    - action: drop
      regex: ''
      source_labels:
        - __service__
    - action: labelmap
      regex: __meta_kubernetes_pod_label_(.+)
    - action: replace
      replacement: $1
      separator: /
      source_labels:
        - __meta_kubernetes_namespace
        - __service__
      target_label: job
    - action: replace
      source_labels:
        - __meta_kubernetes_namespace
      target_label: namespace
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: pod
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_container_name
      target_label: container
    - replacement: /gensee/nginx/$1/*.log
      separator: /
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: __path__
```
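For Deployment-managed pods the controller is a ReplicaSet named `<deployment>-<pod-template-hash>`, and the indirect-controller job strips the hash so that `__service__` stays stable across rollouts. A small Python check of that capture group, using the same regex with full-string matching (relabel regexes are anchored); the controller names are made-up examples and `service_from_controller` is a hypothetical helper:

```python
import re
from typing import Optional

# Same capture group as the indirect-controller job's "target_label: __service__" rule.
SERVICE_FROM_RS = re.compile(r"([0-9a-z-.]+)-[0-9a-f]{8,10}")


def service_from_controller(controller_name: str) -> Optional[str]:
    """Deployment name derived from a ReplicaSet name, or None if it does not match."""
    match = SERVICE_FROM_RS.fullmatch(controller_name)
    return match.group(1) if match else None


print(service_from_controller("nginx-7d9c5f8b6c"))     # nginx
print(service_from_controller("my-app-v2-5f8b6c4d9"))  # my-app-v2
print(service_from_controller("loki"))                 # None
```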

```yaml
- job_name: kubernetes-pods-static-nginx
  pipeline_stages:
    - docker: {}
  kubernetes_sd_configs:
    - role: pod
  relabel_configs:
    - action: drop
      regex: ''
      source_labels:
        - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_label_component
      target_label: __service__
    - source_labels:
        - __meta_kubernetes_pod_node_name
      target_label: __host__
    - action: drop
      regex: ''
      source_labels:
        - __service__
    - action: labelmap
      regex: __meta_kubernetes_pod_label_(.+)
    - action: replace
      replacement: $1
      separator: /
      source_labels:
        - __meta_kubernetes_namespace
        - __service__
      target_label: job
    - action: replace
      source_labels:
        - __meta_kubernetes_namespace
      target_label: namespace
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: pod
    - action: replace
      source_labels:
        - __meta_kubernetes_pod_container_name
      target_label: container
    - replacement: /gensee/nginx/$1/*.log
      separator: /
      source_labels:
        - __meta_kubernetes_pod_name
      target_label: __path__
# ...
```
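Because each job drops the pods another job is meant to handle, a pod is kept by at most one of the first four jobs, while the static-pod job is independent of them. The sketch below models that routing in Python using only the drop/keep rules in the jobs above; the flattened pod dictionary (keys `name`, `app`, `component`, `controller`, `mirror`) and `matching_jobs` are hypothetical simplifications of the `__meta_kubernetes_*` labels.

```python
import re
from typing import Dict, List

# Regex from the direct/indirect controller jobs: a ReplicaSet-style name,
# "<deployment>-<8 to 10 hex chars>". Relabel regexes are anchored, hence fullmatch.
RS_NAME = r"[0-9a-z-.]+-[0-9a-f]{8,10}"


def matching_jobs(pod: Dict[str, str]) -> List[str]:
    """Return the custom jobs whose drop/keep rules would keep this pod.

    Hypothetical flattened keys: 'name', 'app', 'component' (pod labels),
    'controller' (controller name) and 'mirror' (config.mirror annotation).
    """
    name = pod.get("name", "")
    app = pod.get("app", "")
    controller = pod.get("controller", "")
    jobs: List[str] = []
    # __service__ = label "name"; dropped when empty
    if name:
        jobs.append("kubernetes-pods-name-nginx")
    # dropped if "name" is set; __service__ = label "app"; dropped when empty
    if not name and app:
        jobs.append("kubernetes-pods-app-eschol-nginx")
    # separator '' joins name and app, so regex '.+' drops pods that have either label
    if not name + app:
        is_rs = re.fullmatch(RS_NAME, controller) is not None
        if controller and not is_rs:
            jobs.append("kubernetes-pods-direct-controllers-nginx")
        if is_rs:
            jobs.append("kubernetes-pods-indirect-controller-nginx")
    # only mirror (static) pods are kept; __service__ = label "component"
    if pod.get("mirror") and pod.get("component"):
        jobs.append("kubernetes-pods-static-nginx")
    return jobs


print(matching_jobs({"app": "nginx", "controller": "nginx-7d9c5f8b6c"}))  # ['kubernetes-pods-app-eschol-nginx']
print(matching_jobs({"controller": "nginx-7d9c5f8b6c"}))  # ['kubernetes-pods-indirect-controller-nginx']
print(matching_jobs({"controller": "redis"}))  # ['kubernetes-pods-direct-controllers-nginx']
```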

```bash
loki-stack ] mkdir pvc && cd pvc
pvc ] vim log-pvc.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: logs-pvc
  namespace: logs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 200Gi
```

```bash
pvc ] kubectl apply -f log-pvc.yaml
```

- Customize the Loki configuration file

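```bash
# Extract Loki's configuration file from its Secret (the loki Secret must already exist
# in the logs namespace, i.e. loki.yaml has been applied)
~] kubectl get secret loki -o json -n logs | jq -r '.data."loki.yaml"' | base64 -d > /root/loki-stack/conf/loki.yaml
```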
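Secret values are stored base64-encoded under `.data`, which is why the command pipes the `jq` output through `base64 -d`. The same decoding in Python, run against a hypothetical, trimmed-down `kubectl get secret -o json` output (`secret_value` is an illustrative helper, not part of any tool):

```python
import base64
import json


def secret_value(secret_json: str, key: str) -> str:
    """Decode one entry of a Kubernetes Secret's .data map (values are base64)."""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"][key]).decode("utf-8")


# Hypothetical, trimmed-down output of `kubectl get secret loki -o json -n logs`.
sample = json.dumps({
    "apiVersion": "v1",
    "kind": "Secret",
    "data": {"loki.yaml": base64.b64encode(b"auth_enabled: false\n").decode("ascii")},
})
print(secret_value(sample, "loki.yaml"), end="")  # auth_enabled: false
```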

```bash
~] vim /root/loki-stack/conf/loki.yaml
```

```yaml
auth_enabled: false
chunk_store_config:
  max_look_back_period: 0s
compactor:
  shared_store: filesystem
  working_directory: /data/loki/boltdb-shipper-compactor
ingester:
  chunk_block_size: 262144
  chunk_idle_period: 3m
  chunk_retain_period: 1m
  lifecycler:
    ring:
      kvstore:
        store: inmemory
      replication_factor: 1
  max_transfer_retries: 0
limits_config:
  enforce_metric_name: false
  # Whether to reject old log entries
  reject_old_samples: true
  # Entries older than this are rejected
  reject_old_samples_max_age: 72h
  # Once promtail is set up and starts shipping logs to Loki, you may well hit HTTP 429
  # errors: you are collecting more logs than Loki's limits allow. To raise the limits,
  # add the following to limits_config.
  # Per-tenant ingestion rate limit, in MB per second
  ingestion_rate_mb: 100
  # Per-tenant allowed ingestion burst size, in MB.
  # The burst size applies to each distributor's local rate limiter, even with the
  # "global" strategy, and should be set to at least the maximum log size expected
  # in a single push request.
  ingestion_burst_size_mb: 200
schema_config:
  configs:
    - from: "2020-10-24"
      index:
        period: 24h
        prefix: index_
      object_store: filesystem
      schema: v11
      store: boltdb-shipper
server:
  http_listen_port: 3100
  grpc_server_max_recv_msg_size: 10388608
storage_config:
  boltdb_shipper:
    active_index_directory: /data/loki/boltdb-shipper-active
    cache_location: /data/loki/boltdb-shipper-cache
    cache_ttl: 24h
    shared_store: filesystem
  filesystem:
    directory: /data/loki/chunks
table_manager:
  retention_deletes_enabled: false
  retention_period: 0s
```
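The 429 errors mentioned in `limits_config` come from per-tenant rate limiting: `ingestion_rate_mb` is the sustained rate and `ingestion_burst_size_mb` caps how much can be accepted at once. The token bucket below is a simplified Python model of that behaviour, assuming push requests are limited by their size in token-bucket fashion; it is not Loki's implementation, and the `TokenBucket` class is hypothetical. It also shows why the burst should be at least the largest single push: a request bigger than the burst can never pass.

```python
from dataclasses import dataclass, field

MB = 1024 * 1024  # assumption: the *_mb limits are counted in MiB of log data


@dataclass
class TokenBucket:
    """Simplified per-tenant limiter: refills `rate` bytes/s, holds at most `burst` bytes."""

    rate: float
    burst: float
    tokens: float = field(init=False)
    last: float = field(default=0.0, init=False)

    def __post_init__(self) -> None:
        self.tokens = self.burst  # the bucket starts full

    def allow(self, now: float, size: float) -> bool:
        """Try to accept a push of `size` bytes at time `now` (seconds)."""
        # Refill for the time elapsed since the last call, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size > self.tokens:
            return False  # in this model the push is rejected (Loki answers 429)
        self.tokens -= size
        return True


# Values from limits_config above: ingestion_rate_mb: 100, ingestion_burst_size_mb: 200
bucket = TokenBucket(rate=100 * MB, burst=200 * MB)
print(bucket.allow(0.0, 150 * MB))   # True: fits in the initial 200 MB burst
print(bucket.allow(0.0, 100 * MB))   # False: only 50 MB left right now
print(bucket.allow(1.0, 100 * MB))   # True: one second refilled 100 MB
print(bucket.allow(10.0, 250 * MB))  # False: bigger than the burst, can never pass
```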

- Deploy loki-stack

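```bash
# Deploy (to change the default configuration, you must edit the YAML first)
~] kubectl apply -f loki.yaml

# Switch Loki to the custom configuration file: replace the Secret, then restart Loki,
# which only reads its configuration at startup
~] kubectl delete secret loki -n logs
~] kubectl create secret generic loki --from-file=loki.yaml=/root/loki-stack/conf/loki.yaml -n logs
~] kubectl rollout restart statefulset loki -n logs
```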

- Customize the log output path in the application Pod

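```yaml
# ... (container spec)
# subPathExpr expands $(POD_NAME) from the container's environment, so the container
# must define it, e.g. through the Downward API:
env:
  - name: POD_NAME
    valueFrom:
      fieldRef:
        fieldPath: metadata.name
volumeMounts:
  - name: nginx-log
    mountPath: /gensee/openresty/nginx/logs/
    # Each Pod writes to its own node directory, /gensee/nginx/<pod name>
    subPathExpr: $(POD_NAME)
# ... (Pod spec)
volumes:
  - name: nginx-log
    hostPath:
      path: /gensee/nginx
      type: DirectoryOrCreate
# ...
```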

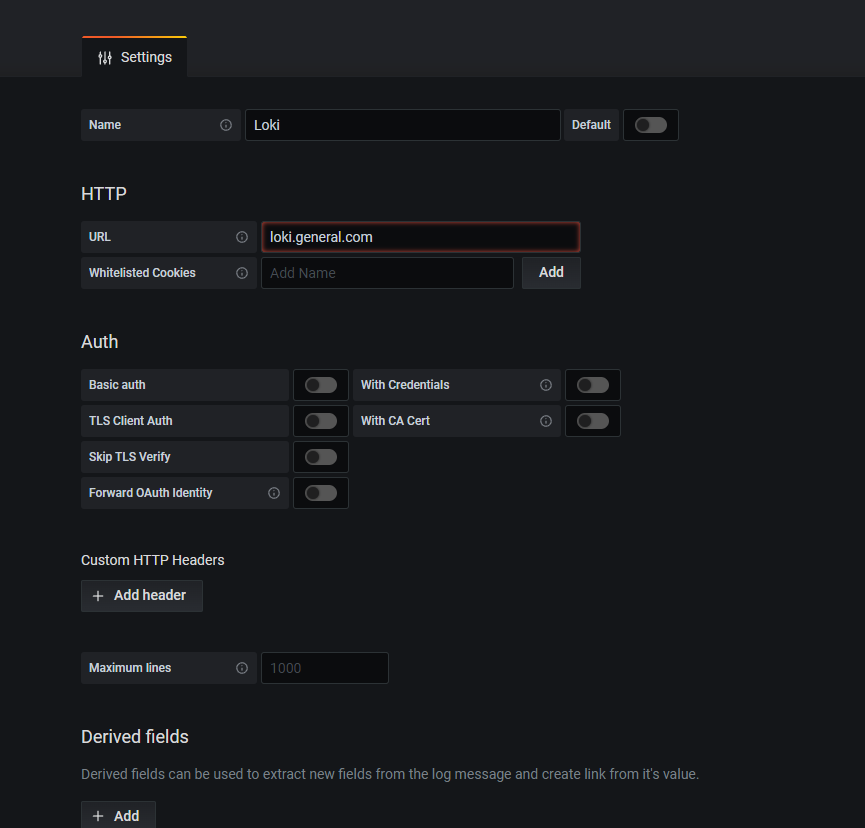
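Putting the Pod volume and the Promtail `__path__` rule together: a file the container writes under `mountPath` lands on the node in `/gensee/nginx/<pod name>/`, which is exactly the directory the scrape jobs tail. A Python sketch of that mapping, with a hypothetical pod name and illustrative helper names; note that `fnmatch`'s `*` also matches `/`, unlike Promtail's glob, which makes no difference for this flat directory.

```python
import fnmatch
import posixpath

CONTAINER_LOG_DIR = "/gensee/openresty/nginx/logs/"  # volumeMounts[].mountPath
HOST_LOG_DIR = "/gensee/nginx"                        # volumes[].hostPath.path


def host_path(pod_name: str, container_file: str) -> str:
    """Node path of a file written in the container: subPathExpr $(POD_NAME)
    mounts <hostPath>/<pod name> at mountPath."""
    relative = posixpath.relpath(container_file, CONTAINER_LOG_DIR)
    return posixpath.join(HOST_LOG_DIR, pod_name, relative)


def promtail_glob(pod_name: str) -> str:
    """The __path__ value the scrape jobs build for this pod."""
    return f"/gensee/nginx/{pod_name}/*.log"


pod = "nginx-7d9c5f8b6c-x2k4q"  # hypothetical pod name
on_node = host_path(pod, "/gensee/openresty/nginx/logs/access.log")
print(on_node)  # /gensee/nginx/nginx-7d9c5f8b6c-x2k4q/access.log
print(fnmatch.fnmatchcase(on_node, promtail_glob(pod)))  # True
```

Rotated files such as `access.log.1` do not match `*.log`, so only the active log files are tailed.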

- Reverse-proxy Loki so that an external Grafana can connect to it

```bash
~] vim nginx/conf/conf.d/loki.conf
```

```nginx
server {
    listen 80;
    server_name loki.general.com;  # replace with your own domain
    access_log logs/loki.log;

    location / {
        proxy_pass http://loki.logs.svc.cluster.local:3100;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        # WebSocket upgrades (used for live tailing) require HTTP/1.1 to the upstream
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
    }
}
```

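```bash
# Recreate the nginx-confd ConfigMap. It will then contain only loki.conf; if it held other
# files, pass the whole nginx/conf/conf.d directory to --from-file instead
~] kubectl delete cm nginx-confd -n nginx
~] kubectl create cm nginx-confd --from-file=nginx/conf/conf.d/loki.conf -n nginx
```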
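Before wiring up Grafana, you can check the proxy by querying Loki's HTTP API directly. A Python sketch that builds a `/loki/api/v1/query_range` URL for a LogQL stream selector on the `namespace` and `pod` labels set by the relabel rules; the pod name and time range are placeholders, `query_range_url` is a hypothetical helper, and the resulting URL can be opened with `curl` or a browser:

```python
from urllib.parse import urlencode


def query_range_url(base: str, logql: str, start_ns: int, end_ns: int, limit: int = 100) -> str:
    """Build a Loki /loki/api/v1/query_range URL; start/end are Unix epoch nanoseconds."""
    params = {"query": logql, "start": start_ns, "end": end_ns, "limit": limit}
    return f"{base.rstrip('/')}/loki/api/v1/query_range?{urlencode(params)}"


url = query_range_url(
    "http://loki.general.com",  # server_name from the nginx config above
    '{namespace="default", pod="nginx-7d9c5f8b6c-x2k4q"}',  # hypothetical pod
    start_ns=1_700_000_000_000_000_000,
    end_ns=1_700_000_360_000_000_000,
)
print(url)
```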

2. Configuring Grafana

- Add a data source:

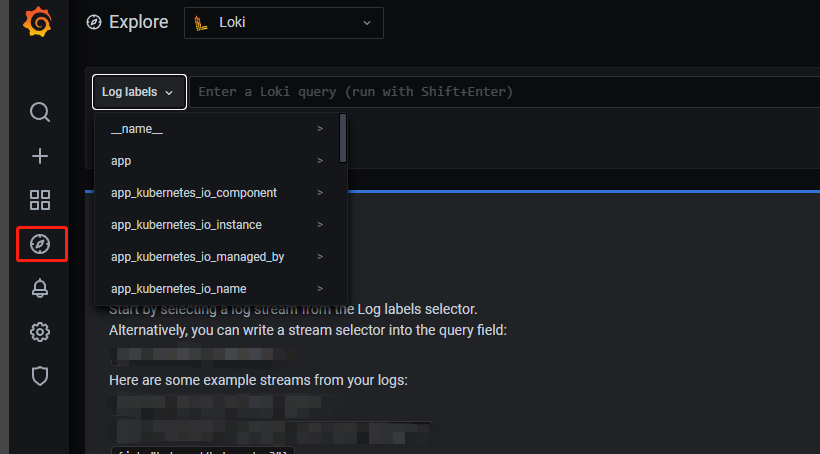

- Once the connection test passes, query the logs in Grafana and filter by label to view them.
