Elasticsearch Analyzers: Introduction and Usage


What is an analyzer?

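An analyzer is a tool that breaks a piece of text, such as user input, into individual terms according to a set of rules. Elasticsearch applies a text field's analyzer in two places: when indexing documents, and when analyzing full-text queries against that field.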
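Internally, an Elasticsearch analyzer is a pipeline with three stages: zero or more character filters, exactly one tokenizer, and zero or more token filters. The Python sketch below models only that composition. The component functions (`strip_tags`, `split_words`, `lowercase`) are hypothetical stand-ins, loosely inspired by the `html_strip` character filter, a word tokenizer and the `lowercase` token filter. They are not Elasticsearch code.

```python
import re
from typing import Callable, List, Sequence

CharFilter = Callable[[str], str]
Tokenizer = Callable[[str], List[str]]
TokenFilter = Callable[[List[str]], List[str]]


def make_analyzer(
    tokenizer: Tokenizer,
    char_filters: Sequence[CharFilter] = (),
    token_filters: Sequence[TokenFilter] = (),
) -> Callable[[str], List[str]]:
    """Compose an analyzer: char filters, then one tokenizer, then token filters."""

    def analyze(text: str) -> List[str]:
        for char_filter in char_filters:  # 1. rewrite the raw character stream
            text = char_filter(text)
        tokens = tokenizer(text)  # 2. split the text into tokens
        for token_filter in token_filters:  # 3. modify, add or drop tokens
            tokens = token_filter(tokens)
        return tokens

    return analyze


# Hypothetical components, for illustration only.
def strip_tags(text: str) -> str:
    """Replace anything that looks like an HTML tag with a space."""
    return re.sub(r"<[^>]+>", " ", text)


def split_words(text: str) -> List[str]:
    """Split on runs of word characters (a crude word tokenizer)."""
    return re.findall(r"\w+", text)


def lowercase(tokens: List[str]) -> List[str]:
    """Lowercase every token."""
    return [token.lower() for token in tokens]


demo_analyzer = make_analyzer(split_words, [strip_tags], [lowercase])
print(demo_analyzer("<b>The best</b> 3-points shooter"))
```

Swapping any one stage changes the output. This is exactly how the built-in analyzers that follow differ from one another.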

Common built-in analyzers

standard analyzer, simple analyzer, whitespace analyzer, stop analyzer, language analyzer, pattern analyzer

standard analyzer

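The standard analyzer is the default analyzer; it is used whenever no other analyzer is specified. It splits text on word boundaries (as defined by the Unicode Text Segmentation algorithm) and lowercases every term. By default it removes no stop words, which is why "the" and "is" survive in the response below.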

POST localhost:9200/_analyze

Request body:

{

"analyzer":"standard",

"text":"The best 3-points shooter is Curry!"

}

Response:

{

"tokens": [

{

"token": "the",

"start_offset": 0,

"end_offset": 3,

"type": "<ALPHANUM>",

"position": 0

},

{

"token": "best",

"start_offset": 4,

"end_offset": 8,

"type": "<ALPHANUM>",

"position": 1

},

{

"token": "3",

"start_offset": 9,

"end_offset": 10,

"type": "<NUM>",

"position": 2

},

{

"token": "points",

"start_offset": 11,

"end_offset": 17,

"type": "<ALPHANUM>",

"position": 3

},

{

"token": "shooter",

"start_offset": 18,

"end_offset": 25,

"type": "<ALPHANUM>",

"position": 4

},

{

"token": "is",

"start_offset": 26,

"end_offset": 28,

"type": "<ALPHANUM>",

"position": 5

},

{

"token": "curry",

"start_offset": 29,

"end_offset": 34,

"type": "<ALPHANUM>",

"position": 6

}

]

}
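You can also send the same `_analyze` request from code instead of a REST client. Here is a minimal sketch that uses only Python's standard library. It assumes an unsecured cluster at http://localhost:9200, as in the examples here. The helper names `build_analyze_request` and `analyze` are our own; they are not part of any Elasticsearch client.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: an unsecured cluster listening on localhost:9200, as in the examples.
ES_URL = "http://localhost:9200"


def build_analyze_request(analyzer: str, text: str, base_url: str = ES_URL) -> urllib.request.Request:
    """Build, but do not send, a POST /_analyze request with a JSON body."""
    body = json.dumps({"analyzer": analyzer, "text": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/_analyze",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def analyze(analyzer: str, text: str) -> list[str]:
    """Send the request and return only the token strings (requires a running cluster)."""
    with urllib.request.urlopen(build_analyze_request(analyzer, text)) as response:
        result = json.load(response)
    return [token["token"] for token in result["tokens"]]


if __name__ == "__main__":
    request = build_analyze_request("standard", "The best 3-points shooter is Curry!")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Against a running cluster, `analyze("standard", "The best 3-points shooter is Curry!")` should return the tokens listed in the response above. Change the first argument to try the other analyzers.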

simple analyzer

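The simple analyzer splits text into terms at every character that is not a letter, and lowercases all terms. Digits are not letters, so the 3 in 3-points is discarded.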

POST localhost:9200/_analyze

Request body:

{

"analyzer":"simple",

"text":"The best 3-points shooter is Curry!"

}

Response:

{

"tokens": [

{

"token": "the",

"start_offset": 0,

"end_offset": 3,

"type": "word",

"position": 0

},

{

"token": "best",

"start_offset": 4,

"end_offset": 8,

"type": "word",

"position": 1

},

{

"token": "points",

"start_offset": 11,

"end_offset": 17,

"type": "word",

"position": 2

},

{

"token": "shooter",

"start_offset": 18,

"end_offset": 25,

"type": "word",

"position": 3

},

{

"token": "is",

"start_offset": 26,

"end_offset": 28,

"type": "word",

"position": 4

},

{

"token": "curry",

"start_offset": 29,

"end_offset": 34,

"type": "word",

"position": 5

}

]

}
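Here is a rough Python model of the simple analyzer, useful for reasoning about its output offline. It is our approximation, not Lucene's code. It treats `[^\W\d_]` (word characters minus digits and underscore) as "letters". That agrees with the real analyzer for ordinary text but may differ on unusual Unicode characters.

```python
import re

# "Letters" approximated as word characters minus digits and underscore.
LETTER_RUN = re.compile(r"[^\W\d_]+")


def simple_analyze(text: str) -> list:
    """Approximate the simple analyzer: split at non-letters, lowercase each term."""
    return [
        {
            "token": match.group().lower(),
            "start_offset": match.start(),
            "end_offset": match.end(),
            "type": "word",
            "position": position,
        }
        for position, match in enumerate(LETTER_RUN.finditer(text))
    ]


for token in simple_analyze("The best 3-points shooter is Curry!"):
    print(token)
```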

whitespace analyzer

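The whitespace analyzer splits text into terms only at whitespace characters. It neither lowercases nor strips punctuation, so The and Curry! are kept as-is.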

POST localhost:9200/_analyze

Request body:

{

"analyzer":"whitespace",

"text":"The best 3-points shooter is Curry!"

}

Response:

{

"tokens": [

{

"token": "The",

"start_offset": 0,

"end_offset": 3,

"type": "word",

"position": 0

},

{

"token": "best",

"start_offset": 4,

"end_offset": 8,

"type": "word",

"position": 1

},

{

"token": "3-points",

"start_offset": 9,

"end_offset": 17,

"type": "word",

"position": 2

},

{

"token": "shooter",

"start_offset": 18,

"end_offset": 25,

"type": "word",

"position": 3

},

{

"token": "is",

"start_offset": 26,

"end_offset": 28,

"type": "word",

"position": 4

},

{

"token": "Curry!",

"start_offset": 29,

"end_offset": 35,

"type": "word",

"position": 5

}

]

}
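Here is a Python model of the whitespace analyzer, again our own approximation. Python's `\s` and Lucene's notion of whitespace may disagree on a few exotic characters, such as the no-break space. They agree on ordinary spaces, tabs and newlines.

```python
import re

NON_WHITESPACE_RUN = re.compile(r"\S+")


def whitespace_analyze(text: str) -> list:
    """Approximate the whitespace analyzer: split at whitespace, keep case and punctuation."""
    return [
        {
            "token": match.group(),
            "start_offset": match.start(),
            "end_offset": match.end(),
            "type": "word",
            "position": position,
        }
        for position, match in enumerate(NON_WHITESPACE_RUN.finditer(text))
    ]


for token in whitespace_analyze("The best 3-points shooter is Curry!"):
    print(token)
```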

stop analyzer

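The stop analyzer works like the simple analyzer. The only difference is that it also removes stop words, using the English stop word list (_english_) by default.

stopwords: the predefined stop word list to use, for example the, a, an, this, of, at. A removed stop word still occupies a position, so in the response below best is at position 1 and curry at position 5.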

POST localhost:9200/_analyze

Request body:

{

"analyzer":"stop",

"text":"The best 3-points shooter is Curry!"

}

Response:

{

"tokens": [

{

"token": "best",

"start_offset": 4,

"end_offset": 8,

"type": "word",

"position": 1

},

{

"token": "points",

"start_offset": 11,

"end_offset": 17,

"type": "word",

"position": 2

},

{

"token": "shooter",

"start_offset": 18,

"end_offset": 25,

"type": "word",

"position": 3

},

{

"token": "curry",

"start_offset": 29,

"end_offset": 34,

"type": "word",

"position": 5

}

]

}
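The stop analyzer can be modelled as the simple analyzer followed by stop word removal. In the Python sketch below, the word set is, to the best of our knowledge, Lucene's default English list (what Elasticsearch calls _english_). Treat it as an assumption and compare it with the documentation for your version. Dropped words still consume a position, which reproduces the gap between shooter (3) and curry (5) in the response above.

```python
import re

# To our knowledge, Lucene's default English stop words (Elasticsearch's _english_ list).
ENGLISH_STOP_WORDS = frozenset({
    "a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if",
    "in", "into", "is", "it", "no", "not", "of", "on", "or", "such", "that",
    "the", "their", "then", "there", "these", "they", "this", "to", "was",
    "will", "with",
})

# Same "letters only" approximation as a simple-analyzer model.
LETTER_RUN = re.compile(r"[^\W\d_]+")


def stop_analyze(text: str, stopwords=ENGLISH_STOP_WORDS) -> list:
    """Approximate the stop analyzer: letter runs, lowercased, stop words removed."""
    tokens = []
    for position, match in enumerate(LETTER_RUN.finditer(text)):
        term = match.group().lower()
        if term in stopwords:
            continue  # the stop word is dropped, but its position number is still used up
        tokens.append({
            "token": term,
            "start_offset": match.start(),
            "end_offset": match.end(),
            "type": "word",
            "position": position,
        })
    return tokens


for token in stop_analyze("The best 3-points shooter is Curry!"):
    print(token)
```

Passing a different `stopwords` set mirrors what the analyzer's `stopwords` parameter does.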

language analyzer

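A language analyzer is tuned for a specific language, for example english. Built-in languages: arabic, armenian, basque, bengali, brazilian, bulgarian, catalan, cjk, czech, danish, dutch, english, finnish, french, galician, german, greek, hindi, hungarian, indonesian, irish, italian, latvian, lithuanian, norwegian, persian, portuguese, romanian, russian, sorani, spanish, swedish, turkish, thai. The english analyzer removes English stop words and also stems terms, which is why points becomes point and Curry becomes curri in the response below.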

POST localhost:9200/_analyze

Request body:

{

"analyzer":"english",

"text":"The best 3-points shooter is Curry!"

}

Response:

{

"tokens": [

{

"token": "best",

"start_offset": 4,

"end_offset": 8,

"type": "<ALPHANUM>",

"position": 1

},

{

"token": "3",

"start_offset": 9,

"end_offset": 10,

"type": "<NUM>",

"position": 2

},

{

"token": "point",

"start_offset": 11,

"end_offset": 17,

"type": "<ALPHANUM>",

"position": 3

},

{

"token": "shooter",

"start_offset": 18,

"end_offset": 25,

"type": "<ALPHANUM>",

"position": 4

},

{

"token": "curri",

"start_offset": 29,

"end_offset": 34,

"type": "<ALPHANUM>",

"position": 6

}

]

}

pattern analyzer

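The pattern analyzer uses a regular expression to split text into terms. The default pattern is \W+ (one or more non-word characters), and terms are lowercased by default.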

POST localhost:9200/_analyze

Request body:

{

"analyzer":"pattern",

"text":"The best 3-points shooter is Curry!"

}

Response:

{

"tokens": [

{

"token": "the",

"start_offset": 0,

"end_offset": 3,

"type": "word",

"position": 0

},

{

"token": "best",

"start_offset": 4,

"end_offset": 8,

"type": "word",

"position": 1

},

{

"token": "3",

"start_offset": 9,

"end_offset": 10,

"type": "word",

"position": 2

},

{

"token": "points",

"start_offset": 11,

"end_offset": 17,

"type": "word",

"position": 3

},

{

"token": "shooter",

"start_offset": 18,

"end_offset": 25,

"type": "word",

"position": 4

},

{

"token": "is",

"start_offset": 26,

"end_offset": 28,

"type": "word",

"position": 5

},

{

"token": "curry",

"start_offset": 29,

"end_offset": 34,

"type": "word",

"position": 6

}

]

}
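Here is a rough Python model of the pattern analyzer. Note that the regular expression describes the separators between terms, not the terms themselves. We pass `re.ASCII` because, as far as we know, Java regular expressions (which Elasticsearch uses) treat `\W` as ASCII-only by default. That flag choice and the helper name `pattern_analyze` are our assumptions.

```python
import re


def pattern_analyze(text: str, pattern: str = r"\W+", lowercase: bool = True) -> list:
    """Approximate the pattern analyzer: the regex matches the separators between terms."""
    separator = re.compile(pattern, re.ASCII)  # mimic Java's ASCII-only \W
    spans, start = [], 0
    for match in separator.finditer(text):
        spans.append((start, match.start()))
        start = match.end()
    spans.append((start, len(text)))  # whatever follows the last separator

    tokens = []
    for begin, end in spans:
        if begin == end:
            continue  # empty piece, e.g. before a leading or after a trailing separator
        term = text[begin:end]
        tokens.append({
            "token": term.lower() if lowercase else term,
            "start_offset": begin,
            "end_offset": end,
            "type": "word",
            "position": len(tokens),
        })
    return tokens


for token in pattern_analyze("The best 3-points shooter is Curry!"):
    print(token)
```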

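Usage example

Create an index that defines a custom analyzer named my_analyzer (of type whitespace) and uses it for the title field: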

PUT localhost:9200/my_index

Request body:

{

"settings":{

"analysis":{

"analyzer":{

"my_analyzer":{

"type":"whitespace"

}

}

}

},

"mappings":{

"properties":{

"name":{

"type":"text"

},

"team_name":{

"type":"text"

},

"position":{

"type":"text"

},

"play_year":{

"type":"long"

},

"jerse_no":{

"type":"keyword"

},

"title":{

"type":"text",

"analyzer":"my_analyzer"

}

}

}

}

Response:

{

"acknowledged": true,

"shards_acknowledged": true,

"index": "my_index"

}

Index a document:

PUT localhost:9200/my_index/_doc/1

Request body:

{

"name":"库里",

"team_name":"勇士",

"position":"控球后卫",

"play_year":10,

"jerse_no":"30",

"title":"The best 3-points shooter is Curry!"

}

Response:

{

"_index": "my_index",

"_type": "_doc",

"_id": "1",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"_seq_no": 0,

"_primary_term": 1

}

Search the title field with a match query:

POST localhost:9200/my_index/_search

Request body:

{

"query":{

"match":{

"title":"Curry!"

}

}

}

Response:

{

"took": 2,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"max_score": 0.2876821,

"hits": [

{

"_index": "my_index",

"_type": "_doc",

"_id": "1",

"_score": 0.2876821,

"_source": {

"name": "库里",

"team_name": "勇士",

"position": "控球后卫",

"play_year": 10,

"jerse_no": "30",

"title": "The best 3-points shooter is Curry!"

}

}

]

}

}
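Why does "Curry!" match? The match query analyzes the query string with the field's analyzer, here my_analyzer (whitespace). It therefore looks for the exact term Curry!, which is among the indexed terms of title. By the same reasoning, a query for Curry or curry! would not match this document. The Python sketch below is a toy model of that term lookup only, assuming the default OR operator and ignoring scoring. It is not how Elasticsearch executes queries.

```python
def whitespace_terms(text: str) -> list:
    """Analyze like my_analyzer (type whitespace): split at whitespace, keep everything else."""
    return text.split()


# Terms the inverted index holds for the "title" field of document 1.
INDEXED_TITLE_TERMS = set(whitespace_terms("The best 3-points shooter is Curry!"))


def title_matches(query: str) -> bool:
    """Toy match query with the default OR operator: any shared term is a hit."""
    return any(term in INDEXED_TITLE_TERMS for term in whitespace_terms(query))


for query in ["Curry!", "Curry", "curry!", "best shooter"]:
    print(f"{query!r} -> {title_matches(query)}")
```

With the standard analyzer on title instead, both sides would be lowercased and stripped of punctuation, so Curry, curry! and Curry! would all match.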

Using common Chinese analyzers

If you analyze Chinese text with the default standard analyzer, the text is split into single characters:

POST localhost:9200/_analyze

Request body:

{ "analyzer": "standard", "text": "火箭明年总冠军" }

Response:

{
"tokens": [
{ "token": "火", "start_offset": 0, "end_offset": 1, "type": "<IDEOGRAPHIC>", "position": 0 },
{ "token": "箭", "start_offset": 1, "end_offset": 2, "type": "<IDEOGRAPHIC>", "position": 1 },
{ "token": "明", "start_offset": 2, "end_offset": 3, "type": "<IDEOGRAPHIC>", "position": 2 },
{ "token": "年", "start_offset": 3, "end_offset": 4, "type": "<IDEOGRAPHIC>", "position": 3 },
{ "token": "总", "start_offset": 4, "end_offset": 5, "type": "<IDEOGRAPHIC>", "position": 4 },
{ "token": "冠", "start_offset": 5, "end_offset": 6, "type": "<IDEOGRAPHIC>", "position": 5 },
{ "token": "军", "start_offset": 6, "end_offset": 7, "type": "<IDEOGRAPHIC>", "position": 6 }
]
}
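This behaviour comes from the standard tokenizer emitting each CJK ideograph as its own token. Below is a minimal Python model of just that rule. It assumes the input consists only of Chinese characters; anything else is simply skipped here, unlike in the real tokenizer.

```python
import unicodedata


def split_ideographs(text: str) -> list:
    """Rough model: one <IDEOGRAPHIC> token per CJK unified ideograph; other characters skipped."""
    tokens = []
    for offset, char in enumerate(text):
        if unicodedata.name(char, "").startswith("CJK UNIFIED IDEOGRAPH"):
            tokens.append({
                "token": char,
                "start_offset": offset,  # equals Elasticsearch's UTF-16 offset for BMP characters only
                "end_offset": offset + 1,
                "type": "<IDEOGRAPHIC>",
                "position": len(tokens),
            })
    return tokens


for token in split_ideographs("火箭明年总冠军"):
    print(token)
```

Single characters like 冠 and 军 rarely carry the meaning users search for, which motivates the dictionary-based analyzers below.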

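Common Chinese analyzers

smartcn analyzer: an official Elasticsearch plugin that provides a simple analyzer for Chinese or mixed Chinese-English text

IK analyzer: a third-party Chinese analyzer that offers finer-grained segmentation and supports custom dictionaries

smartcn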

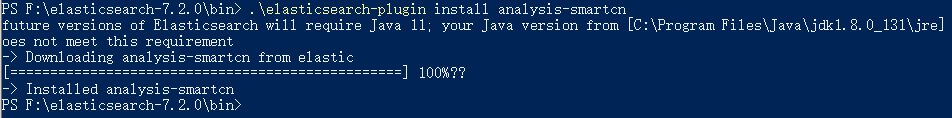

To install it, go to the Elasticsearch bin directory and run:

- Linux: sh elasticsearch-plugin install analysis-smartcn

- Windows: .\elasticsearch-plugin install analysis-smartcn

Restart Elasticsearch after installing the plugin.

POST localhost:9200/_analyze

Request body:

{ "analyzer": "smartcn", "text": "火箭明年总冠军" }

Response:

{
"tokens": [
{ "token": "火箭", "start_offset": 0, "end_offset": 2, "type": "word", "position": 0 },
{ "token": "明年", "start_offset": 2, "end_offset": 4, "type": "word", "position": 1 },
{ "token": "总", "start_offset": 4, "end_offset": 5, "type": "word", "position": 2 },
{ "token": "冠军", "start_offset": 5, "end_offset": 7, "type": "word", "position": 3 }
]
}

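IK analyzer

Download: https://github.com/medcl/elasticsearch-analysis-ik/releases (pick the release that matches your Elasticsearch version exactly)

Install: unzip the release into a new subdirectory of the Elasticsearch plugins directory

Restart Elasticsearch after installing the plugin.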

POST localhost:9200/_analyze

Request body:

{ "analyzer": "ik_max_word", "text": "火箭明年总冠军" }

Response:

{
"tokens": [
{ "token": "火箭", "start_offset": 0, "end_offset": 2, "type": "CN_WORD", "position": 0 },
{ "token": "明年", "start_offset": 2, "end_offset": 4, "type": "CN_WORD", "position": 1 },
{ "token": "总冠军", "start_offset": 4, "end_offset": 7, "type": "CN_WORD", "position": 2 },
{ "token": "冠军", "start_offset": 5, "end_offset": 7, "type": "CN_WORD", "position": 3 }
]
}
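As the response shows, ik_max_word splits text at a fine granularity and emits overlapping words: 总冠军 and also 冠军. The toy Python sketch below reproduces that response using a hypothetical four-word dictionary. It only illustrates the idea of exhaustive dictionary matching. It is not IK's actual algorithm, which is considerably more elaborate and ships with much larger dictionaries.

```python
# Hypothetical mini-dictionary for illustration; IK ships its own, much larger dictionaries.
DICTIONARY = frozenset({"火箭", "明年", "冠军", "总冠军"})


def max_word_segment(text: str, dictionary=DICTIONARY) -> list:
    """Toy fine-grained segmentation: emit every dictionary word in the text, overlaps included."""
    longest = max(len(word) for word in dictionary)
    tokens = []
    for start in range(len(text)):
        # Try every candidate word that begins at `start`, shortest first.
        for end in range(start + 1, min(len(text), start + longest) + 1):
            word = text[start:end]
            if word in dictionary:
                tokens.append({
                    "token": word,
                    "start_offset": start,
                    "end_offset": end,
                    "position": len(tokens),
                })
    return tokens


for token in max_word_segment("火箭明年总冠军"):
    print(token)
```

Because every dictionary hit is kept, both a search for 总冠军 and a search for 冠军 can find this text. That is the usual reason to index with a fine-grained mode.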

浙公网安备 33010602011771号
