19. Notes on 薪享宏福's Bilibili course: Chapter 4, k8s Resource Manifests

4 k8s Resource Manifests

4.1 What Is a Resource

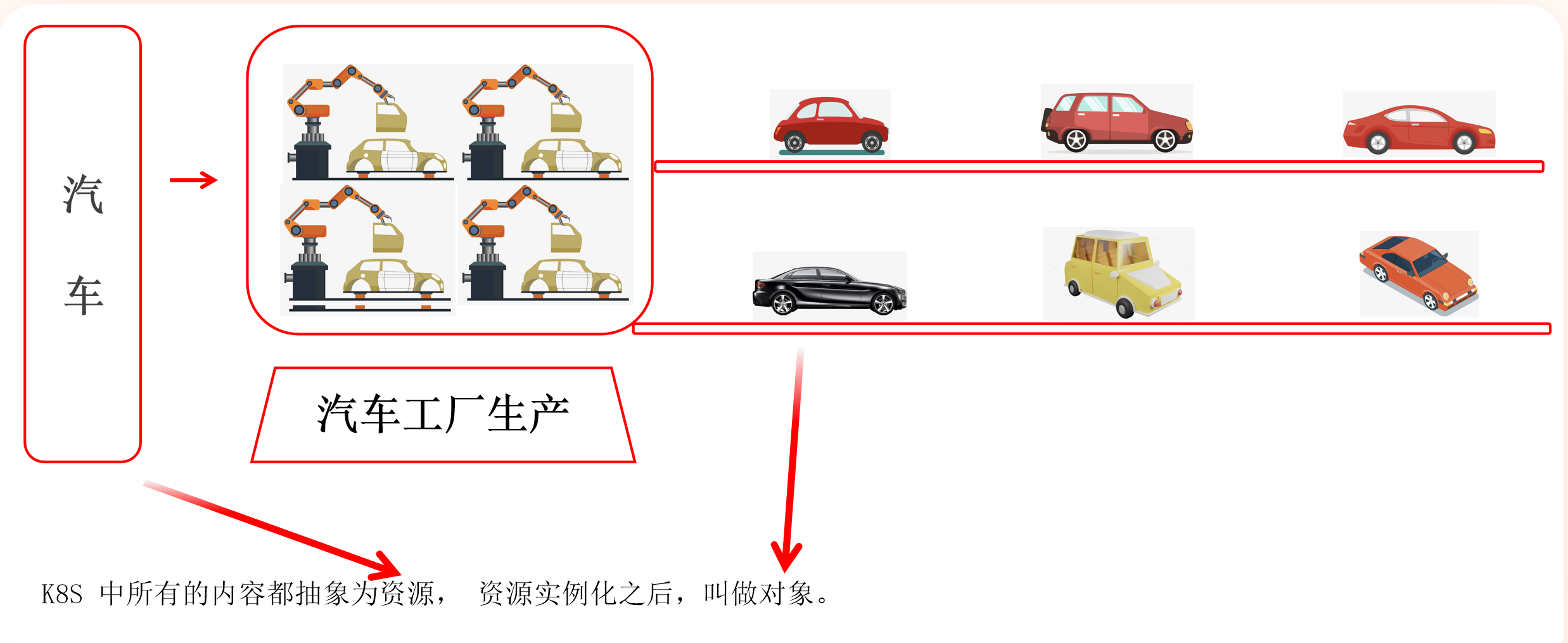

Resources are everything in Kubernetes: everything is a resource.

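4.1.1 Overview

1. Car: a type, a category of things. In the cluster: a resource.

2. Car factory: given different materials and parameters, it produces different cars. In the cluster: a controller.

3. A car: one concrete vehicle, which can differ in color, shape, material, and so on. In the cluster: an object.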

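4.1.2 Resource Categories

School, department, class (a way of grouping resources that makes it easier to create different services in the cluster and to partition them cleanly).

Cluster-level resources (school)

Namespace, Node, ClusterRole, ClusterRoleBinding

Namespace-level resources (department)

Workload resources: Pod, ReplicaSet, Deployment...

Service discovery and load-balancing resources: Service, Ingress...

Configuration and storage resources: Volume, CSI...

Special volume types: ConfigMap, Secret...

Metadata resources (class) (they cannot exist on their own and must be attached to another resource object)

HPA, PodTemplate, LimitRange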

4.2 Writing Resource Manifests

Structure, definition, and writing.

4.2.1 Manifest Structure

(1) Example

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-1
    image: myapp:v1.0
  - name: busybox-1
    image: busybox:v1.0
    command:
    - "/bin/sh"
    - "-c"
    - "sleep 3600"
status:
  conditions:
  - lastProbeTime: null
    status: "True"
    type: Initialized
  - lastProbeTime: null
```

apiVersion, kind, metadata, spec: required, written by the user

status: state information generated and maintained by the k8s cluster

(2) apiVersion: API group/version

```yaml
# Core group, version v1. It is often called core/v1, but in a manifest it is written simply as v1.
# The general form is group/version (API group/version).
apiVersion: v1
```

```shell
# Analogy: so that several people can keep working, a new version is added next to the existing code.
# Users can keep using classroom/v1/index.php while developers continue in classroom/v2beta1/index.php.
[root@k8s-master01 home]# tree
.
├── classroom
│   ├── v1
│   │   └── index.php
│   └── v2beta1
│       └── index.php
└── teacher
    ├── v1
    │   └── index.php
    ├── v2
    │   └── index.php
    └── v3
        └── index.php

# kubectl api-versions lists every API group/version served by the current cluster.
# flowcontrol.apiserver.k8s.io is a case like the one above: v1 and v1beta3 are served side by side.
[root@k8s-master01 home]# kubectl api-versions
admissionregistration.k8s.io/v1
apiextensions.k8s.io/v1
apiregistration.k8s.io/v1
apps/v1
authentication.k8s.io/v1
authorization.k8s.io/v1
autoscaling/v1
autoscaling/v2
batch/v1
certificates.k8s.io/v1
coordination.k8s.io/v1
crd.projectcalico.org/v1
discovery.k8s.io/v1
events.k8s.io/v1
flowcontrol.apiserver.k8s.io/v1
flowcontrol.apiserver.k8s.io/v1beta3
networking.k8s.io/v1
node.k8s.io/v1
policy/v1
rbac.authorization.k8s.io/v1
scheduling.k8s.io/v1
storage.k8s.io/v1
v1

# kubectl explain shows a resource's group/version and its fields, including the required ones.
# The group/version of a resource can differ between Kubernetes releases, so check it before writing a manifest.
[root@k8s-master01 home]# kubectl explain pod
KIND:       Pod
VERSION:    v1

DESCRIPTION:
    Pod is a collection of containers that can run on a host. This resource is created by clients and scheduled onto hosts.

FIELDS:
  apiVersion	<string>
    APIVersion defines the versioned schema of this representation of an object. Servers should convert recognized schemas to the latest internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

  kind	<string>
    Kind is a string value representing the REST resource this object represents. Servers may infer this from the endpoint the client submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

  metadata	<ObjectMeta>
    Standard object's metadata. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

  spec	<PodSpec>
    Specification of the desired behavior of the pod. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

  status	<PodStatus>
    Most recently observed status of the pod. This data may not be up to date. Populated by the system. Read-only. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status
```
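To illustrate the group/version convention, the Go sketch below splits an apiVersion string into group and version. A value without a slash (such as v1) is treated as the core group, whose group name is empty. splitAPIVersion is a hypothetical helper written for this note; client-go users would normally rely on the apimachinery schema package (ParseGroupVersion) instead.

```go
package main

import (
	"fmt"
	"strings"
)

// splitAPIVersion splits an apiVersion value into its API group and version.
// A value without "/" (such as "v1") belongs to the core group, whose name is "".
func splitAPIVersion(apiVersion string) (group, version string) {
	if i := strings.Index(apiVersion, "/"); i >= 0 {
		return apiVersion[:i], apiVersion[i+1:]
	}
	return "", apiVersion
}

func main() {
	for _, av := range []string{"v1", "apps/v1", "flowcontrol.apiserver.k8s.io/v1beta3"} {
		group, version := splitAPIVersion(av)
		if group == "" {
			group = "(core)"
		}
		fmt.Printf("%-38s group=%-30s version=%s\n", av, group, version)
	}
}
```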

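(3) kind: the type

What you want to define: if you need a car or a plane, you declare a car or a plane. Examples: Pod, Deployment...

(4) metadata

Descriptive information about the object, like a vehicle's model name or number (su7, 007, 001). A Pod is a namespace-level resource, so you state which namespace it belongs to; the default is default. Typical entries are the Pod name, its namespace, and Pod labels (used for grouping).

(5) spec: the desired state

Declarative: you tell the cluster what you want.

The final state you want to reach (not guaranteed, but the cluster tries its best to reach it).

(6) status

The state k8s has actually reached relative to the spec. It is not defined by the user; the cluster fills it in dynamically based on how the object is running.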

```shell
# When unsure about a field, drill down level by level with dots (level1.level2.level3); the output also links to documentation.
[root@k8s-master01 home]# kubectl explain pod.spec.containers.image
KIND:       Pod
VERSION:    v1

FIELD: image <string>

DESCRIPTION:
    Container image name. More info: https://kubernetes.io/docs/concepts/containers/images This field is optional to allow higher level config management to default or override container images in workload controllers like Deployments and StatefulSets.
```

(7) Define the first Pod

```shell
[root@k8s-master01 ~]# cat 1.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-1
    image: myapp:v1.0
  - name: busybox-1
    image: busybox
    command:
    - "/bin/sh"
    - "-c"
    - "sleep 3600"
```

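1. API group/version: core group, v1

2. Kind: Pod

3. Metadata: the Pod name, the namespace the Pod lives in, and the Pod labels

4. Spec: the container list. Start a container named myapp-1 from the myapp:v1.0 image and a container named busybox-1 from the busybox image; once busybox-1 starts, it runs `sleep 3600` through sh.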

(8) Import the required images

```shell
# Pull the application image
[root@k8s-master01 home]# docker pull swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/wangyanglinux/myapp:v1
v1: Pulling from ddn-k8s/docker.io/wangyanglinux/myapp
550fe1bea624: Pull complete
af3988949040: Pull complete
d6642feac728: Pull complete
c20f0a205eaa: Pull complete
438668b6babd: Pull complete
bf778e8612d0: Pull complete
Digest: sha256:9eeca44ba2d410e54fccc54cbe9c021802aa8b9836a0bcf3d3229354e4c8870e
Status: Downloaded newer image for swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/wangyanglinux/myapp:v1
swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/wangyanglinux/myapp:v1
# Re-tag the image
[root@k8s-master01 home]# docker tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/wangyanglinux/myapp:v1 myapp:v1.0
[root@k8s-master01 home]# docker images
REPOSITORY                                                               TAG        IMAGE ID       CREATED         SIZE
nginx                                                                    latest     be69f2940aaf   6 weeks ago     192MB
busybox                                                                  latest     6d3e4188a38a   8 months ago    4.28MB
registry.aliyuncs.com/google_containers/kube-apiserver                   v1.29.2    8a9000f98a52   15 months ago   127MB
registry.aliyuncs.com/google_containers/kube-controller-manager          v1.29.2    138fb5a3a2e3   15 months ago   122MB
registry.aliyuncs.com/google_containers/kube-proxy                       v1.29.2    9344fce2372f   15 months ago   82.3MB
registry.aliyuncs.com/google_containers/kube-scheduler                   v1.29.2    6fc5e6b7218c   15 months ago   59.5MB
registry.aliyuncs.com/google_containers/etcd                             3.5.10-0   a0eed15eed44   19 months ago   148MB
calico/typha                                                             v3.26.3    5993c7d25ac5   19 months ago   67.4MB
calico/kube-controllers                                                  v3.26.3    08c1b67c88ce   19 months ago   74.3MB
calico/cni                                                               v3.26.3    fb04b19c1058   19 months ago   209MB
calico/node                                                              v3.26.3    17e960f4e39c   20 months ago   247MB
registry.aliyuncs.com/google_containers/coredns                          v1.11.1    cbb01a7bd410   21 months ago   59.8MB
registry.aliyuncs.com/google_containers/pause                            3.9        e6f181688397   2 years ago     744kB
ghost                                                                    4.48.2     1ae6b8d721a2   2 years ago     480MB
registry.aliyuncs.com/google_containers/pause                            3.8        4873874c08ef   2 years ago     711kB
swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/wangyanglinux/myapp   v1         d4a5e0eaa84f   7 years ago     15.5MB
myapp                                                                    v1.0       d4a5e0eaa84f   7 years ago     15.5MB
pause                                                                    3.1        da86e6ba6ca1   7 years ago     742kB
# Export the image and copy it to node01 and node02
[root@k8s-master01 ~]# docker save myapp:v1.0 -o myapp.tar.gz
[root@k8s-master01 ~]# scp myapp.tar.gz root@n1:/root
root@n1's password:
myapp.tar.gz                100%   15MB  84.4MB/s   00:00
[root@k8s-master01 ~]# scp myapp.tar.gz root@n2:/root
root@n2's password:
myapp.tar.gz                100%   15MB  79.8MB/s   00:00
[root@k8s-node01 ~]# docker load -i myapp.tar.gz
..........
[root@k8s-node02 ~]# docker load -i myapp.tar.gz
..........
```

(9) Create the first Pod with k8s

```shell
[root@k8s-master01 4]# pwd
/root/4
[root@k8s-master01 4]# ls -ltr
total 4
-rw-r--r-- 1 root root 257 May 28 23:35 1.pod.yaml
# Create the resources defined in the given manifest file
[root@k8s-master01 4]# kubectl create -f 1.pod.yaml
pod/pod-demo created
# Without -n, the default namespace is used
[root@k8s-master01 4]# kubectl get po
NAME       READY   STATUS    RESTARTS   AGE
pod-demo   2/2     Running   0          75s
[root@k8s-master01 4]# kubectl get po -n default
NAME       READY   STATUS    RESTARTS   AGE
pod-demo   2/2     Running   0          94s
```

(10) Verification

```shell
[root@k8s-master01 4]# kubectl get pod -o wide
NAME       READY   STATUS    RESTARTS        AGE   IP              NODE         NOMINATED NODE   READINESS GATES
pod-demo   2/2     Running   1 (9m46s ago)   70m   10.244.58.196   k8s-node02   <none>           <none>
# On node02 the logical Pod is made of real containers (pause, myapp, busybox), recognizable by their names.
# When busybox's sleep ends, the container exits and a new busybox container is started in the same Pod.
[root@k8s-node02 ~]# docker ps -a
CONTAINER ID   IMAGE                                               COMMAND                  CREATED             STATUS                      PORTS     NAMES
8aeaf8dbd3f9   busybox                                             "/bin/sh -c 'sleep 3…"   10 minutes ago      Up 10 minutes                         k8s_busybox-1_pod-demo_default_cd8836a4-a7d8-44c7-a3e6-079f0dbb04ea_1
8a14e0336370   busybox                                             "/bin/sh -c 'sleep 3…"   About an hour ago   Exited (0) 11 minutes ago             k8s_busybox-1_pod-demo_default_cd8836a4-a7d8-44c7-a3e6-079f0dbb04ea_0
98e2ac37b627   d4a5e0eaa84f                                        "nginx -g 'daemon of…"   About an hour ago   Up About an hour                      k8s_myapp-1_pod-demo_default_cd8836a4-a7d8-44c7-a3e6-079f0dbb04ea_0
8975e45498db   registry.aliyuncs.com/google_containers/pause:3.8   "/pause"                 About an hour ago   Up About an hour                      k8s_POD_pod-demo_default_cd8836a4-a7d8-44c7-a3e6-079f0dbb04ea_0
# The Pod IP is reachable from master01
[root@k8s-master01 4]# curl 10.244.58.196
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
# On node02, enter the container with docker, append a line, and request the page again: the Pod is simply a group of containers
[root@k8s-node02 ~]# docker exec -it k8s_myapp-1_pod-demo_default_cd8836a4-a7d8-44c7-a3e6-079f0dbb04ea_0 sh
/ # echo '20250529' >> /usr/share/nginx/html/index.html
/ # exit
[root@k8s-master01 4]# curl 10.244.58.196
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
20250529
# On the master, enter the Pod's container with kubectl and append another line
[root@k8s-master01 4]# kubectl exec -it pod-demo -c myapp-1 -- /bin/sh
/ # echo '11:46:23' >> /usr/share/nginx/html/index.html
/ # exit
[root@k8s-master01 4]# curl 10.244.58.196
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
20250529
11:46:23
```

(11) Two myapp containers in the same Pod

```shell
# Build a new image, myapp:v2.0, from the running container
[root@k8s-node02 ~]# docker exec -it k8s_myapp-1_pod-demo_default_cd8836a4-a7d8-44c7-a3e6-079f0dbb04ea_0 sh
/ # cd /usr/share/nginx/html/
/usr/share/nginx/html # cat index.html
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
/usr/share/nginx/html # exit
[root@k8s-node02 ~]# docker container commit k8s_myapp-1_pod-demo_default_cd8836a4-a7d8-44c7-a3e6-079f0dbb04ea_0 myapp:v2.0
sha256:93ddbdcd983ae95c9eb4ee5c0b5acc505027e4e687342c8dd490abf20d67485d
[root@k8s-node02 ~]# docker images
REPOSITORY   TAG    IMAGE ID       CREATED         SIZE
myapp        v2.0   93ddbdcd983a   4 seconds ago   15.5MB
# Copy the image to the other two nodes, which then load it into their local image store
[root@k8s-node02 ~]# docker save myapp:v2.0 -o myapp_v2.0.tar.gz
[root@k8s-node02 ~]# scp myapp_v2.0.tar.gz root@m1:/root
[root@k8s-node02 ~]# scp myapp_v2.0.tar.gz root@n1:/root
[root@k8s-master01 ~]# docker load -i myapp_v2.0.tar.gz
[root@k8s-node01 ~]# docker load -i myapp_v2.0.tar.gz
# Run two myapp containers in one Pod
[root@k8s-master01 4]# cat 2.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-demo-1
  namespace: default
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-1
    image: myapp:v1.0
  - name: myapp-2
    image: myapp:v2.0
[root@k8s-master01 4]# kubectl create -f 2.pod.yaml
pod/pod-demo-1 created
# The Pod never starts completely
[root@k8s-master01 4]# kubectl get po
NAME         READY   STATUS             RESTARTS      AGE
pod-demo     2/2     Running            3 (57m ago)   3h59m
pod-demo-1   1/2     CrashLoopBackOff   5 (14s ago)   3m28s
# describe shows that one container starts while the other keeps failing
[root@k8s-master01 4]# kubectl describe po pod-demo-1
.........
Events:
  Type     Reason     Age                 From               Message
  ----     ------     ----                ----               -------
  Normal   Scheduled  104s                default-scheduler  Successfully assigned default/pod-demo-1 to k8s-node01
  Normal   Pulled     103s                kubelet            Container image "myapp:v1.0" already present on machine
  Normal   Created    103s                kubelet            Created container myapp-1
  Normal   Started    103s                kubelet            Started container myapp-1
  Normal   Pulled     8s (x5 over 103s)   kubelet            Container image "myapp:v2.0" already present on machine
  Normal   Created    8s (x5 over 103s)   kubelet            Created container myapp-2
  Normal   Started    8s (x5 over 103s)   kubelet            Started container myapp-2
  Warning  BackOff    5s (x7 over 97s)    kubelet            Back-off restarting failed container myapp-2 in pod pod-demo-1_default(a79e14af-66a1-4be0-95fb-2e2f557719dc)
# The logs explain why: containers in a Pod share one network namespace, so once myapp-1 has bound port 80, myapp-2 cannot bind it
[root@k8s-master01 4]# kubectl logs pod-demo-1 myapp-1
[root@k8s-master01 4]# kubectl logs pod-demo-1 myapp-2
2025/05/29 06:15:01 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
2025/05/29 06:15:01 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
2025/05/29 06:15:01 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
2025/05/29 06:15:01 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
2025/05/29 06:15:01 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
2025/05/29 06:15:01 [emerg] 1#1: still could not bind()
nginx: [emerg] still could not bind()
```
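Because all containers in a Pod share one network namespace, the failure above is the ordinary "address already in use" error you get when two processes on one host bind the same port. A minimal Go sketch reproduces it with two listeners. It binds 127.0.0.1 with an OS-assigned port rather than port 80 so that it runs without root, and bindConflict is a hypothetical name.

```go
package main

import (
	"fmt"
	"net"
)

// bindConflict binds addr, then binds the same concrete address again while the
// first listener is still open (like myapp-1 and myapp-2 in one Pod).
// It returns the error from the second bind; nil would mean no conflict.
func bindConflict(addr string) error {
	first, err := net.Listen("tcp", addr)
	if err != nil {
		return fmt.Errorf("first bind failed: %w", err)
	}
	defer first.Close()

	// Reuse the exact address the first listener received (port 0 = "pick a free port").
	second, err := net.Listen("tcp", first.Addr().String())
	if err != nil {
		return err // expected: "bind: address already in use"
	}
	second.Close()
	return nil
}

func main() {
	if err := bindConflict("127.0.0.1:0"); err != nil {
		fmt.Println("second bind failed:", err)
	} else {
		fmt.Println("no conflict")
	}
}
```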

(12) Command summary for this section

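```shell
# Get resources, e.g. Pods
kubectl get pod
    -A, --all-namespaces   show resources in all namespaces
    -n                     specify the namespace (default: default); kube-system holds the cluster component resources
    --show-labels          show each resource's labels
    -l                     filter by label: key or key=value
    -o wide                more detail, including IP and assigned node
# Run a command in a container of a Pod
kubectl exec -it <pod-name> -c <container-name> -- <command>
    -c can be omitted; kubectl then uses the Pod's default container (the only one, or the first one)
# Show documentation for a resource's fields
kubectl explain pod.spec
# Show the logs of a container in a Pod
kubectl logs <pod-name> -c <container-name>
# Show a detailed description of a resource object
kubectl describe pod <pod-name>
```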

4.3 Pod Lifecycle

4.3.1 Pod lifecycle flowchart

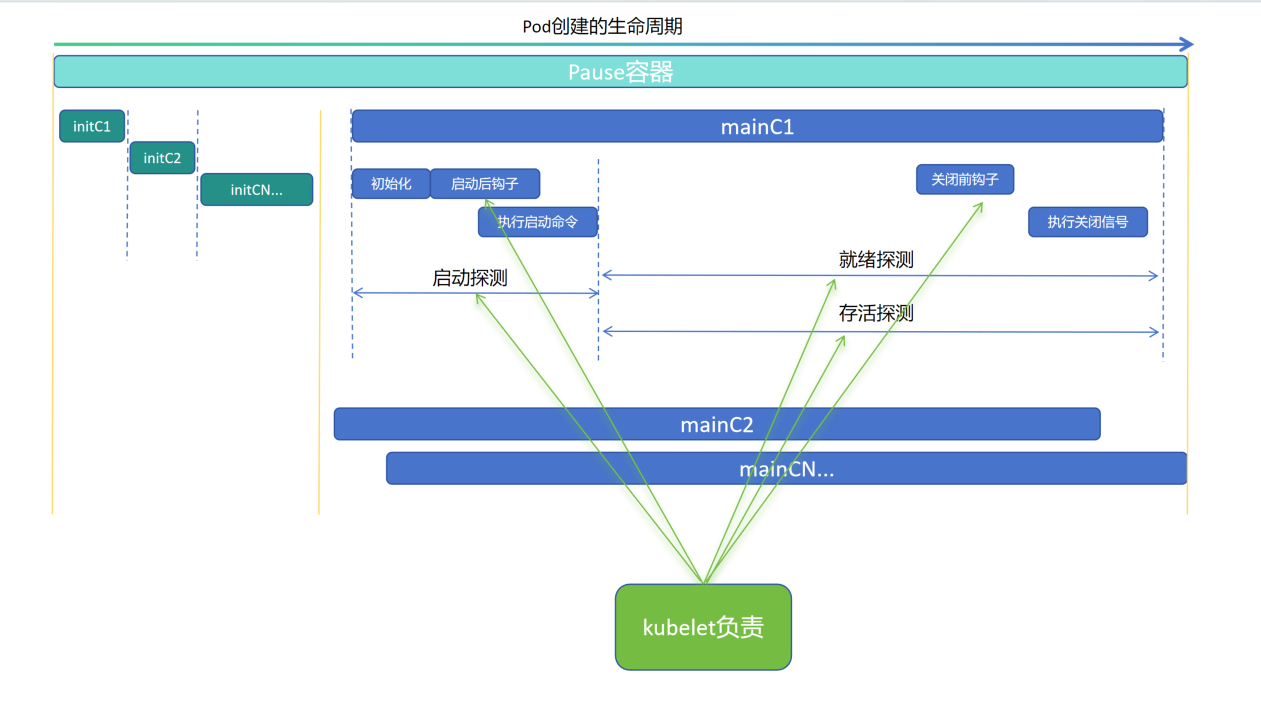

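1. The pause container starts first. It holds the namespaces the Pod's containers share (network and IPC, plus PID when process-namespace sharing is enabled) and reaps zombie processes.

2. The init containers (initC) start next. They do not live for the whole Pod lifecycle: they run only at the beginning, perform initialization, and take no part afterwards.

3. Several init containers can be defined, and they run strictly one after another: initC2 starts only after initC1 has finished its task and exited with code 0. A failed init container is restarted until it succeeds. (According to the current Kubernetes documentation, the kubelet restarts only the init container that failed; the whole sequence re-runs from initC1 only when the Pod itself is restarted, for example when its sandbox is recreated.) Only after every init container has completed with exit code 0 does the main container (mainC) start. (Startup is sequential, and one failure holds up everything after it.)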

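Why it is designed this way: (1) Security. Sensitive operations can be placed in an init container: because it only runs for a limited time, this is preferable to doing them in the main container (leaving your front door permanently open is unwise and dangerous).

(2) Blocking. If an init container does not succeed, the main container never starts, so startup-order requirements can be enforced with checks in initC. (For example, in an nginx+php Pod, an init container checks whether the backend MySQL service is reachable; only after it confirms that MySQL is serving does it exit normally, and only then does mainC start.)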
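The init-container ordering rule can be sketched as a small Go simulation. Each hypothetical initStep returns an exit code; the runner executes the steps strictly in order, retries only the step that failed (matching the current Kubernetes documentation for restartPolicy Always/OnFailure), and reports whether the main containers may start. The maxAttempts cap is an assumption added so the demo terminates; the kubelet itself keeps retrying with back-off.

```go
package main

import "fmt"

// initStep is a hypothetical init container: run returns its exit code.
type initStep struct {
	name string
	run  func(attempt int) int
}

// runInitContainers runs steps strictly in order. A step that exits non-zero is
// retried (only that step) until it exits 0 or maxAttempts is reached.
// It reports whether the main containers may start, plus an attempt log.
func runInitContainers(steps []initStep, maxAttempts int) (bool, []string) {
	var log []string
	for _, s := range steps {
		ok := false
		for attempt := 1; attempt <= maxAttempts; attempt++ {
			code := s.run(attempt)
			log = append(log, fmt.Sprintf("%s attempt %d exit %d", s.name, attempt, code))
			if code == 0 {
				ok = true
				break
			}
		}
		if !ok {
			return false, log // later init steps and mainC never start
		}
	}
	return true, log
}

func main() {
	steps := []initStep{
		{"init-myservice", func(int) int { return 0 }},
		// Fails twice (dependency not reachable yet), then succeeds.
		{"init-mydb", func(a int) int {
			if a < 3 {
				return 1
			}
			return 0
		}},
	}
	ok, log := runInitContainers(steps, 5)
	for _, line := range log {
		fmt.Println(line)
	}
	fmt.Println("start mainC:", ok)
}
```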

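4. Then the main containers (mainC) start. Several mainC can be created and can run at the same time, and they are created concurrently; however, image pulls happen one after another, so mainC startup can look sequential. Each mainC first performs its own initialization and then runs its start command.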

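Hooks: run by the kubelet on the node where the Pod is scheduled (as the cluster grows, the apiserver gets busier and busier, whereas the number of kubelets grows with the number of nodes, so this work scales).

(1) postStart hook: an action executed right after the main container starts. There is no guarantee that it has finished before the container's start command runs; the two may overlap. If you must use postStart to prepare something the main container depends on (for example, compiling), make the start command wait, e.g. have it check that the hook has completed successfully before proceeding.

(2) preStop hook: when a container is stopped, it is normally sent signal 15 (SIGTERM), and if it still has not exited when the grace period (terminationGracePeriodSeconds, 30 s by default) runs out, it is killed with signal 9 (SIGKILL). A preStop hook runs before SIGTERM is sent: only after its tasks finish is SIGTERM delivered. This makes it useful for backing up or saving data before the Pod shuts down. ("I know you're in a hurry, but hold on a moment.") Note that the time spent in preStop counts against the same grace period.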

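Probes: also run by the kubelet on the Pod's node, for the same scalability reason.

(1) Readiness probe (readinessProbe): runs throughout the Pod's life and checks whether the containers' services are ready to accept traffic.

(2) Liveness probe (livenessProbe): runs throughout the Pod's life and checks whether the container is alive; if it is dead or hung, the kubelet kills the container and recreates it.

(3) Startup probe (startupProbe): liveness and readiness probes run on short periods, so a container whose start command has not finished (its service is not up yet) could be judged dead by the liveness probe, killed, and recreated. The startup probe prevents this: liveness and readiness probes only begin after the startup probe has succeeded, and the startup probe runs only while the container is starting, not afterwards.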

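4.3.2 Init containers (initC)

(1) Characteristics of init containers

Init containers are very much like regular containers, except for two points:

1. Init containers always run to completion.

2. Each init container must complete successfully before the next one starts.

If a Pod's init container fails, the kubelet keeps restarting it until it succeeds. However, if the Pod's restartPolicy is Never, it is not restarted and the Pod is treated as failed.

InitC can use a different image from the app containers, so tools that would be risky to keep in the app image can live in an initC instead.

Multiple initC start sequentially, so they can be used for delay-type operations such as waiting for a dependency.

A regular initC does not support readinessProbe (nor livenessProbe, startupProbe, or lifecycle hooks); otherwise it is defined just like an app container.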

(2) Verifying the blocking behavior

```shell
[root@k8s-master01 4]# cat 3.init.yaml
apiVersion: v1
kind: Pod
metadata:
  name: initc-1
  labels:
    app: initc
    key: myservice
spec:
  containers:
  - name: myapp-container
    image: busybox:1.28
    command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
  - name: init-myservice
    image: busybox:1.28
    command: ['sh', '-c', 'until nslookup myservice; do echo waiting for myservice; sleep 2; done;']
  - name: init-mydb
    image: busybox:1.28
    command: ['sh', '-c', 'until nslookup mydb; do echo waiting for mydb; sleep 2; done;']
```

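1. Core group, v1 API

2. Kind: Pod

3. Metadata: Pod name, Pod labels

4. Spec: the container list with container name, image and tag, and command. Init container 1: name, image and tag, and a loop that keeps trying to resolve myservice (printing a message and sleeping while resolution fails) and exits once resolution succeeds. Init container 2: name, image and tag, and the same loop for mydb.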

```shell
# The Pod stays in initialization: the first init container cannot resolve myservice
[root@k8s-master01 4]# kubectl create -f 3.init.yaml
pod/initc-1 created
[root@k8s-master01 4]# kubectl get po
NAME      READY   STATUS     RESTARTS   AGE
initc-1   0/1     Init:0/2   0          3s
[root@k8s-master01 4]# kubectl describe po initc-1
..........
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  15s   default-scheduler  Successfully assigned default/initc-1 to k8s-node02
  Normal  Pulled     14s   kubelet            Container image "busybox:1.28" already present on machine
  Normal  Created    14s   kubelet            Created container init-myservice
  Normal  Started    14s   kubelet            Started container init-myservice
# The logs show that no matching Service can be resolved
[root@k8s-master01 4]# kubectl logs initc-1 -c init-myservice
nslookup: can't resolve 'myservice'
Server:    10.0.0.10
Address 1: 10.0.0.10 kube-dns.kube-system.svc.cluster.local
waiting for myservice
Server:    10.0.0.10
Address 1: 10.0.0.10 kube-dns.kube-system.svc.cluster.local
# Create the matching Service, myservice
[root@k8s-master01 4]# kubectl create svc clusterip myservice --tcp=80:80
service/myservice created
[root@k8s-master01 4]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.0.0.1       <none>        443/TCP   4d23h
myservice    ClusterIP   10.4.249.169   <none>        80/TCP    5s
# The first init container has now completed, but the second one has not
[root@k8s-master01 4]# kubectl get pod
NAME      READY   STATUS     RESTARTS   AGE
initc-1   0/1     Init:1/2   0          3m20s
[root@k8s-master01 4]# kubectl logs initc-1 -c init-myservice|tail -2
Name:      myservice
Address 1: 10.4.249.169 myservice.default.svc.cluster.local
[root@k8s-master01 4]# kubectl describe pod initc
........
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  7m4s   default-scheduler  Successfully assigned default/initc-1 to k8s-node02
  Normal  Pulled     7m3s   kubelet            Container image "busybox:1.28" already present on machine
  Normal  Created    7m3s   kubelet            Created container init-myservice
  Normal  Started    7m3s   kubelet            Started container init-myservice
  Normal  Pulled     3m47s  kubelet            Container image "busybox:1.28" already present on machine
  Normal  Created    3m46s  kubelet            Created container init-mydb
  Normal  Started    3m46s  kubelet            Started container init-mydb
[root@k8s-master01 4]# kubectl logs initc-1 -c init-mydb
nslookup: can't resolve 'mydb'
Server:    10.0.0.10
Address 1: 10.0.0.10 kube-dns.kube-system.svc.cluster.local
waiting for mydb
Server:    10.0.0.10
Address 1: 10.0.0.10 kube-dns.kube-system.svc.cluster.local
# Create the second Service, mydb
[root@k8s-master01 4]# kubectl create svc clusterip mydb --tcp=80:80
service/mydb created
[root@k8s-master01 4]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.0.0.1       <none>        443/TCP   4d23h
mydb         ClusterIP   10.4.196.135   <none>        80/TCP    5s
myservice    ClusterIP   10.4.249.169   <none>        80/TCP    7m41s
[root@k8s-master01 4]# kubectl get pod
NAME      READY   STATUS    RESTARTS   AGE
initc-1   1/1     Running   0          10m
[root@k8s-master01 4]# kubectl logs initc-1 -c init-mydb|tail -2
Name:      mydb
Address 1: 10.4.196.135 mydb.default.svc.cluster.local
# The Pod has started and is running. This shows that init containers block: until init-myservice succeeds, init-mydb is not created,
# and until init-mydb succeeds, mainC is not created. (The course adds that if mydb takes long enough to appear,
# the init-myservice container in initc-1 may be seen to have been recreated as well.)
[root@k8s-master01 4]# kubectl logs initc-1
Defaulted container "myapp-container" out of: myapp-container, init-myservice (init), init-mydb (init)
The app is running!
```

(3) Checking the initC exit code

```shell
# Use exit codes to verify that the main container starts only after the init container exits with 0
[root@k8s-master01 4]# yum -y install go
........
# Write a Go program whose exit code can be controlled
[root@k8s-master01 4]# cat randexit
package main

import (
	"flag"
	"fmt"
	"math/rand"
	"os"
	"time"
)

func main() {
	// Command-line flags
	sleepTime := flag.Int("sleeptime", 4, "sleep time (seconds)")
	exitCode := flag.Int("exitcode", 2, "exit code: 0 = success, 1 = failure, anything else = random")
	flag.Parse()

	// Keep the original sleep time for the output message
	sleepTimeOriginal := *sleepTime

	// Sleep one second at a time
	for *sleepTime > 0 {
		time.Sleep(time.Second * 1)
		*sleepTime--
	}

	// Pick the exit code from the exitcode flag.
	// The messages are in Chinese: "休眠 N 秒,返回码为 X!" means "slept N s, exit code X!".
	if *exitCode == 0 {
		fmt.Printf("休眠 %v 秒,返回码为 %v!\n", sleepTimeOriginal, 0)
		os.Exit(0)
	} else if *exitCode == 1 {
		fmt.Printf("休眠 %v 秒,返回码为 %v!\n", sleepTimeOriginal, 1)
		os.Exit(1)
	} else {
		// Random: a number >= 50 exits with 1, a number < 50 exits with 0
		rand.Seed(time.Now().UnixNano())
		num := rand.Intn(100)
		if num >= 50 {
			fmt.Printf("休眠 %v 秒,产生的随机数为 %v,大于等于 50,返回码为 %v!\n", sleepTimeOriginal, num, 1)
			os.Exit(1)
		} else {
			fmt.Printf("休眠 %v 秒,产生的随机数为 %v,小于 50,返回码为 %v!\n", sleepTimeOriginal, num, 0)
			os.Exit(0)
		}
	}
}
[root@k8s-master01 4]# mv randexit randexit.go
[root@k8s-master01 4]# rm -f randexit
[root@k8s-master01 4]# go build -o randexit randexit.go
# The compiled binary runs ("slept 4 s, random number 32, less than 50, exit code 0")
[root@k8s-master01 4]# ./randexit
休眠 4 秒,产生的随机数为 32,小于 50,返回码为 0!
# Build the image
[root@k8s-master01 4]# cat Dockerfile
FROM alpine
ADD ./randexit /root
RUN chmod +x /root/randexit
CMD ["--sleeptime=5"]
ENTRYPOINT ["/root/randexit"]
[root@k8s-master01 4]# docker build -t randexitv1 .
[root@k8s-master01 4]# docker images
REPOSITORY   TAG      IMAGE ID       CREATED         SIZE
randexitv1   latest   5fbaac0ba1e4   2 minutes ago   12.9MB
# Export the image and copy it to node01 and node02
[root@k8s-master01 4]# docker save randexitv1:latest -o randexitv1.tar.gz
[root@k8s-master01 4]# scp randexitv1.tar.gz root@n1:/root/
root@n1's password:
randexitv1.tar.gz           100%   13MB  83.9MB/s   00:00
[root@k8s-master01 4]# scp randexitv1.tar.gz root@n2:/root/
root@n2's password:
randexitv1.tar.gz           100%   13MB  84.7MB/s   00:00
[root@k8s-node01 ~]# docker load -i randexitv1.tar.gz
[root@k8s-node02 ~]# docker load -i randexitv1.tar.gz
```

```shell
[root@k8s-master01 4]# cat 4.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp
    image: myapp:v1.0
  initContainers:
  - name: randexit
    image: randexitv1:latest
    imagePullPolicy: IfNotPresent
    args: ["--exitcode=1"]
```

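1. API group/version: core group, v1

2. Kind: Pod

3. Metadata: Pod name, Pod labels

4. Spec: the container list has a container named myapp started from the myapp:v1.0 image; the init container is named randexit and started from the randexitv1:latest image (IfNotPresent: use the local image if it exists, otherwise pull it from the registry), run with exit code 1.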

```shell
[root@k8s-master01 4]# kubectl create -f 4.pod.yaml
pod/myapp-pod created
[root@k8s-master01 4]# kubectl get po
NAME        READY   STATUS     RESTARTS   AGE
myapp-pod   0/1     Init:0/1   0          3s
[root@k8s-master01 4]# kubectl describe po myapp-pod
........
Events:
  Type     Reason     Age               From               Message
  ----     ------     ----              ----               -------
  Normal   Scheduled  15s               default-scheduler  Successfully assigned default/myapp-pod to k8s-node02
  Normal   Pulled     9s (x2 over 14s)  kubelet            Container image "randexitv1:latest" already present on machine
  Normal   Created    9s (x2 over 14s)  kubelet            Created container randexit
  Normal   Started    9s (x2 over 14s)  kubelet            Started container randexit
  Warning  BackOff    4s                kubelet            Back-off restarting failed container randexit in pod myapp-pod_default(9c42b6bc-59f0-4fb6-9301-5473012fc2f4)
# Output: "slept 4 s, exit code 1!"
[root@k8s-master01 4]# kubectl logs myapp-pod -c randexit
休眠 4 秒,返回码为 1!
# Create a Pod named myapp-pod-0 whose init container exits with 0
[root@k8s-master01 4]# cat 5.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod-0
  labels:
    app: myapp
spec:
  containers:
  - name: myapp
    image: myapp:v1.0
  initContainers:
  - name: randexit
    image: randexitv1:latest
    imagePullPolicy: IfNotPresent
    args: ["--exitcode=0"]
[root@k8s-master01 4]# kubectl create -f 5.pod.yaml
pod/myapp-pod-0 created
# Same manifest except for the Pod name (names must be unique within a namespace) and the exit code; with exit code 0 the Pod starts
[root@k8s-master01 4]# kubectl get po
NAME          READY   STATUS                  RESTARTS       AGE
myapp-pod     0/1     Init:CrashLoopBackOff   5 (109s ago)   5m8s
myapp-pod-0   1/1     Running                 0              9s
[root@k8s-master01 4]# kubectl logs myapp-pod -c randexit
休眠 4 秒,返回码为 1!
# Output: "slept 4 s, exit code 0!"
[root@k8s-master01 4]# kubectl logs myapp-pod-0 -c randexit
休眠 4 秒,返回码为 0!
```

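4.3.3 Probes

(1) Probes and probe mechanisms

a. Introduction

A probe is a periodic diagnostic performed on a container by the kubelet on the node where the Pod runs. To perform a diagnostic, the kubelet calls a Handler implemented by the container. There are three types of handlers:

ExecAction: executes a specified command inside the container. The diagnostic is considered successful if the command exits with code 0.

TCPSocketAction: performs a TCP check against the container's IP address on a specified port. The diagnostic is considered successful if the port is open.

HTTPGetAction: performs an HTTP GET request against the container's IP address on a specified port and path. The diagnostic is considered successful if the response status code is greater than or equal to 200 and less than 400.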

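Each probe has one of three results:

Success: the container passed the diagnostic.

Failure: the container failed the diagnostic.

Unknown: the diagnostic itself failed, so no action is taken.

startupProbe: has it started yet?

livenessProbe: is it still alive?

readinessProbe: is it ready to serve?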

b.生产图

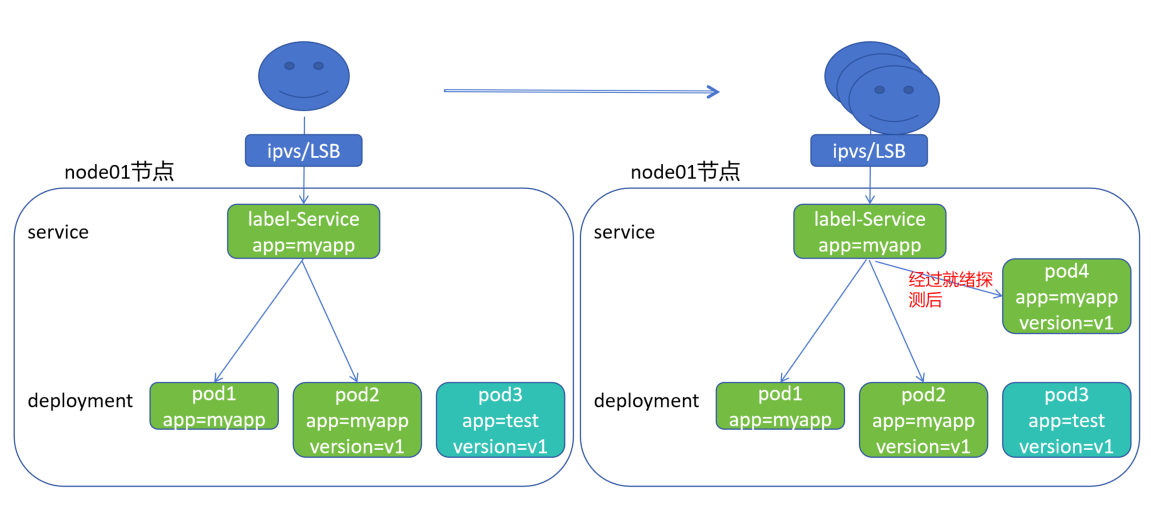

1.左图当访问量在可控范围内,svc 会负载到带有app=myapp的pod上,以此达到服务的可用。(deployment管理app=myapp的pod,svc负载app=myapp的pod,都是子集运算。)

2.右图,当用户访问增加,现有pod不足以抗住访问时,需要新增pod,但是svc会负载到带有app=myapp标签的pod上,而如果pod4还没有启动就被负载,是不能提供访问的,所以添加就绪探针,只有当pod4达到启动状态,才会将pod4加入到svc的负载中。
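The subset matching that both the Deployment and the Service rely on can be stated in a few lines of Go: a Pod matches when every key/value pair of the selector is present in the Pod's labels, and extra Pod labels are ignored. matchesSelector is a hypothetical helper; set-based selectors (In, NotIn, Exists) are not covered.

```go
package main

import "fmt"

// matchesSelector reports whether labels contain every key=value pair in selector.
func matchesSelector(selector, labels map[string]string) bool {
	for k, v := range selector {
		if got, ok := labels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	selector := map[string]string{"app": "myapp"} // the Service's selector
	pods := map[string]map[string]string{
		"pod-1": {"app": "myapp"},
		"pod-2": {"app": "myapp", "version": "v1"},
		"pod-3": {"app": "test", "version": "v1"},
	}
	for _, name := range []string{"pod-1", "pod-2", "pod-3"} {
		fmt.Println(name, matchesSelector(selector, pods[name]))
	}
}
```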

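(2) readinessProbe (readiness probe)

Introduction: k8s uses readiness probes to make sure the services users reach are actually available, especially during scale-out.

Options:

initialDelaySeconds: number of seconds after the container starts before the readiness probe begins. Default 0, minimum 0.

periodSeconds: how often to probe, in seconds. Default 10, minimum 1.

timeoutSeconds: how long to wait for a response after sending a probe request, in seconds. Default 1, minimum 1.

successThreshold: minimum number of consecutive successes for the probe to be considered successful after having failed. Default 1, minimum 1 (it must be 1 for liveness and startup probes).

failureThreshold: number of consecutive failures after which the probe is considered failed. Default 3, minimum 1.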

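Summary: 1. If a container in the Pod has no readiness probe, it is considered ready by default.

2. If a readiness probe is defined, the container is marked ready only after the probe passes. The Pod is marked ready only when all of its containers are ready.

Results:

Success: the container is marked ready.

Failure: silent (nothing is restarted; the container is simply not ready).

Unknown: silent.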

```shell
[root@k8s-master01 4.3]# cat 1.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-1
  namespace: default
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-1
    image: myapp:v1.0
[root@k8s-master01 4.3]# cat 2.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-2
  namespace: default
  labels:
    app: myapp
    version: v1
spec:
  containers:
  - name: myapp-1
    image: myapp:v1.0
[root@k8s-master01 4.3]# kubectl create -f 1.pod.yaml
pod/pod-1 created
[root@k8s-master01 4.3]# kubectl create -f 2.pod.yaml
pod/pod-2 created
[root@k8s-master01 4.3]# kubectl get pod --show-labels
NAME    READY   STATUS    RESTARTS   AGE     LABELS
pod-1   1/1     Running   0          3m57s   app=myapp
pod-2   1/1     Running   0          2m57s   app=myapp,version=v1
# Create a Service for load balancing. `kubectl create svc clusterip myapp` sets the selector app=myapp,
# so the Service automatically balances across Pods carrying that label; the backends are reached through the Service IP
[root@k8s-master01 4.3]# kubectl create svc clusterip myapp --tcp=80:80
service/myapp created
[root@k8s-master01 4.3]# kubectl get svc
NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.0.0.1      <none>        443/TCP   6d14h
myapp        ClusterIP   10.5.145.49   <none>        80/TCP    5s
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
pod-1
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
pod-2
# Create pod-3: it is running, but it is not added to the Service's backends because its labels do not match the selector
[root@k8s-master01 4.3]# cat 3.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-3
  namespace: default
  labels:
    app: test
    version: v1
spec:
  containers:
  - name: myapp-1
    image: myapp:v1.0
[root@k8s-master01 4.3]# kubectl create -f 3.pod.yaml
pod/pod-3 created
[root@k8s-master01 4.3]# kubectl get pod --show-labels
NAME    READY   STATUS    RESTARTS   AGE     LABELS
pod-1   1/1     Running   0          8m41s   app=myapp
pod-2   1/1     Running   0          7m41s   app=myapp,version=v1
pod-3   1/1     Running   0          24s     app=test,version=v1
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
pod-1
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
pod-2
```

```shell
[root@k8s-master01 4.3]# cat 4.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness-httpget-pod
  namespace: default
  labels:
    app: myapp
    env: test
spec:
  containers:
  - name: readiness-httpget-container
    image: myapp:v1.0
    imagePullPolicy: IfNotPresent
    readinessProbe:
      httpGet:
        port: 80
        path: /index1.html
      initialDelaySeconds: 1
      periodSeconds: 3
```

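1. Core group, v1

2. Kind: Pod

3. Metadata: Pod name, namespace, and the labels app=myapp and env=test

4. Spec: the container list has a container name, image, image pull policy, and a readiness probe that sends an HTTP GET for /index1.html on port 80, starting 1 s after the container starts and repeating every 3 s.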

```shell
# Create the 4th Pod. Its labels match the Service, yet it still receives no traffic:
# pod-3 is ready but does not match, while readiness-httpget-pod matches but is not ready
[root@k8s-master01 4.3]# kubectl create -f 4.pod.yaml
pod/readiness-httpget-pod created
[root@k8s-master01 4.3]# kubectl get pod --show-labels
NAME                    READY   STATUS    RESTARTS      AGE     LABELS
pod-1                   1/1     Running   1 (21m ago)   3h48m   app=myapp
pod-2                   1/1     Running   1 (21m ago)   3h47m   app=myapp,version=v1
pod-3                   1/1     Running   1 (21m ago)   3h40m   app=test,version=v1
readiness-httpget-pod   0/1     Running   0             21s     app=myapp,env=test
[root@k8s-master01 4.3]# kubectl get svc
NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.0.0.1      <none>        443/TCP   6d18h
myapp        ClusterIP   10.5.145.49   <none>        80/TCP    3h44m
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
pod-2
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
pod-1
# The readiness probe inside the Pod fails
[root@k8s-master01 4.3]# kubectl describe po readiness-httpget-pod
..........
Events:
  Type     Reason     Age               From               Message
  ----     ------     ----              ----               -------
  Normal   Scheduled  14s               default-scheduler  Successfully assigned default/readiness-httpget-pod to k8s-node01
  Normal   Pulled     13s               kubelet            Container image "myapp:v1.0" already present on machine
  Normal   Created    13s               kubelet            Created container readiness-httpget-container
  Normal   Started    12s               kubelet            Started container readiness-httpget-container
  Warning  Unhealthy  1s (x7 over 12s)  kubelet            Readiness probe failed: HTTP probe failed with statuscode: 404
# Enter the Pod and create the probed path
[root@k8s-master01 4.3]# kubectl exec -it readiness-httpget-pod -c readiness-httpget-container -- /bin/sh
/ # cd /usr/share/nginx/html/
/usr/share/nginx/html # ls
50x.html    index.html
/usr/share/nginx/html # touch index1.html
/usr/share/nginx/html # ls
50x.html     index.html   index1.html
/usr/share/nginx/html # exit
# The Pod is now ready, and requests to the Service are balanced onto it as well
[root@k8s-master01 4.3]# kubectl get pod --show-labels
NAME                    READY   STATUS    RESTARTS      AGE     LABELS
pod-1                   1/1     Running   1 (35m ago)   4h3m    app=myapp
pod-2                   1/1     Running   1 (35m ago)   4h2m    app=myapp,version=v1
pod-3                   1/1     Running   1 (35m ago)   3h54m   app=test,version=v1
readiness-httpget-pod   1/1     Running   0             5m      app=myapp,env=test
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
pod-1
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
readiness-httpget-pod
[root@k8s-master01 4.3]# curl 10.5.145.49/hostname.html
pod-2
```

```shell
[root@k8s-master01 4.3]# cat 5.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness-exec-pod
  namespace: default
spec:
  containers:
  - name: readiness-exec-container
    image: busybox:1.28.1
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","touch /tmp/live ; sleep 60; rm -rf /tmp/live; sleep 3600"]
    readinessProbe:
      exec:
        command: ["test","-e","/tmp/live"]
      initialDelaySeconds: 1
      periodSeconds: 3
```

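4. Spec: the container list has a container name, image, image pull policy, and a command that creates /tmp/live, sleeps 60 s, deletes it, then sleeps 3600 s; plus a readiness probe that runs `test -e /tmp/live`, starting after 1 s and repeating every 3 s.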

```shell
# Create a Pod with an exec readiness probe. It starts out ready, but after roughly 60 s it shows READY 0/1
# (still Running: the container is alive but no longer ready). This confirms that (1) the exec probe works, and
# (2) the readiness probe keeps running for the container's whole lifetime, not just until it first passes
# (the course notes that older versions stopped probing after the first success).
[root@k8s-master01 4.3]# kubectl create -f 5.pod.yaml
pod/readiness-exec-pod created
[root@k8s-master01 4.3]# kubectl get po --show-labels
NAME                    READY   STATUS    RESTARTS      AGE     LABELS
pod-1                   1/1     Running   1 (61m ago)   4h28m   app=myapp
pod-2                   1/1     Running   1 (61m ago)   4h27m   app=myapp,version=v1
pod-3                   1/1     Running   1 (61m ago)   4h20m   app=test,version=v1
readiness-exec-pod      1/1     Running   0             10s     <none>
readiness-httpget-pod   1/1     Running   0             30m     app=myapp,env=test
[root@k8s-master01 4.3]# kubectl get po --show-labels
NAME                    READY   STATUS    RESTARTS      AGE     LABELS
pod-1                   1/1     Running   1 (62m ago)   4h29m   app=myapp
pod-2                   1/1     Running   1 (62m ago)   4h28m   app=myapp,version=v1
pod-3                   1/1     Running   1 (62m ago)   4h21m   app=test,version=v1
readiness-exec-pod      0/1     Running   0             80s     <none>
readiness-httpget-pod   1/1     Running   0             31m     app=myapp,env=test
```

```shell
[root@k8s-master01 4.3]# cat 6.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness-tcp-pod
spec:
  containers:
  - name: readiness-exec-container
    image: myapp:v1.0
    readinessProbe:
      initialDelaySeconds: 5
      timeoutSeconds: 1
      tcpSocket:
        port: 80
```

4. Spec: the container list has a container name, image, and a readiness probe that starts after 5 s, times out after 1 s, and checks TCP port 80.

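Note: this is not demonstrated here, because killing the process that owns the port would make the container exit and be restarted anyway. (An open port does not guarantee the service works, but a closed port guarantees it does not.) TCP checks are mainly used to detect applications whose port has stopped responding.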

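(3) livenessProbe (liveness probe)

Introduction: k8s uses liveness probes to deal with containers that are still running but effectively dead.

Options:

initialDelaySeconds: number of seconds after the container starts before the liveness probe begins. Default 0, minimum 0.

periodSeconds: how often to probe, in seconds. Default 10, minimum 1.

timeoutSeconds: how long to wait for a response after sending a probe request, in seconds. Default 1, minimum 1.

successThreshold: minimum number of consecutive successes for the probe to be considered successful after having failed. Default 1; for liveness probes it must be 1.

failureThreshold: number of consecutive failures after which the probe is considered failed. Default 3, minimum 1.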

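Summary:

Without a liveness probe, a container may keep running while being unable to serve requests.

Results:

Success: silent

Failure: the kubelet kills the container, which is then handled according to the Pod's restartPolicy (Always, OnFailure, or Never; the default is Always), i.e. recreated from its image when the policy calls for it.

Unknown: silent (no action)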
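What "according to the restart policy" means can be written as a small decision function. In this simplified sketch, a container killed after a failed liveness probe is treated as a failure regardless of its exit code, which matches our understanding of the kubelet's behavior (restart unless the policy is Never). shouldRestart is a hypothetical helper.

```go
package main

import "fmt"

// shouldRestart decides whether a stopped container is started again.
// killedByLiveness marks a container the kubelet killed after a failed
// liveness probe; that counts as a failure regardless of its exit code.
func shouldRestart(policy string, exitCode int, killedByLiveness bool) bool {
	failed := exitCode != 0 || killedByLiveness
	switch policy {
	case "Always", "": // an empty policy is defaulted to Always by the API server
		return true
	case "OnFailure":
		return failed
	default: // "Never" (any other value would be rejected by the API server)
		return false
	}
}

func main() {
	for _, policy := range []string{"Always", "OnFailure", "Never"} {
		fmt.Printf("%-9s exit 0: %-5v exit 1: %-5v liveness kill: %v\n", policy,
			shouldRestart(policy, 0, false), shouldRestart(policy, 1, false), shouldRestart(policy, 0, true))
	}
}
```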

```shell
[root@k8s-master01 4.3]# cat 7.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec-pod
  namespace: default
spec:
  containers:
  - name: liveness-exec-container
    image: busybox:1.28.1
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","touch /tmp/live ; sleep 60; rm -rf /tmp/live; sleep 3600"]
    livenessProbe:
      exec:
        command: ["test","-e","/tmp/live"]
      initialDelaySeconds: 1
      periodSeconds: 3
```

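4. Spec: the container list has a container name, image, image pull policy, and a command that creates /tmp/live, sleeps 60 s, deletes /tmp/live, then sleeps 3600 s; plus a liveness probe that runs `test -e /tmp/live`, starting after 1 s and repeating every 3 s.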

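```shell
# The Pod starts and runs. After 60 s /tmp/live is deleted, the liveness probe fails, and the container is restarted under
# the default restartPolicy Always. The new container recreates the file, so the probe passes again until the file is deleted
# 60 s later. The cycle is: start, run for a while, restart, start, run for a while, restart (simulating a file corrupted in production).
# Unlike a readiness failure, which only removes the Pod from load balancing, a liveness failure triggers the restart policy (default Always).
[root@k8s-master01 4.3]# kubectl create -f 7.pod.yaml
pod/liveness-exec-pod created
[root@k8s-master01 4.3]# kubectl get po
NAME                READY   STATUS    RESTARTS   AGE
liveness-exec-pod   1/1     Running   0          4s
[root@k8s-master01 4.3]# kubectl get po
NAME                READY   STATUS             RESTARTS       AGE
liveness-exec-pod   0/1     CrashLoopBackOff   11 (62s ago)   34m
```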

```shell
[root@k8s-master01 4.3]# cat 8.pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-httpget-pod
  namespace: default
spec:
  containers:
  - name: liveness-httpget-container
    image: myapp:v1.0
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      httpGet:
        port: 80
        path: /index.html
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 3
```

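4. Spec: the container list has a container name, image, image pull policy, container port 80 named http, and a liveness probe that requests /index.html on port 80, starting after 1 s, repeating every 3 s, with a 3 s timeout.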

    # After the pod is created it runs normally. Exec into it and delete the file the probe checks. The liveness probe fails, the container is restarted, and the shell session is killed (exit code 137). Exec in again and a fresh index.html is back. On the node running the pod (k8s-node02), docker shows two app containers, one exited and one new, plus the pause container
    [root@k8s-master01 4.3]# kubectl create -f 8.pod.yaml
    pod/liveness-httpget-pod created
    [root@k8s-master01 4.3]# kubectl get po
    NAME                   READY   STATUS    RESTARTS   AGE
    liveness-httpget-pod   1/1     Running   0          4s
    [root@k8s-master01 4.3]# kubectl exec -it liveness-httpget-pod -- /bin/sh
    / # cd /usr/share/nginx/html/
    /usr/share/nginx/html # ls
    50x.html    index.html
    /usr/share/nginx/html # rm index.html
    /usr/share/nginx/html # command terminated with exit code 137
    [root@k8s-master01 4.3]# kubectl get po -o wide
    NAME                   READY   STATUS    RESTARTS       AGE    IP              NODE         NOMINATED NODE   READINESS GATES
    liveness-httpget-pod   1/1     Running   1 (4m9s ago)   5m7s   10.244.58.222   k8s-node02   <none>           <none>
    [root@k8s-master01 4.3]# kubectl exec -it liveness-httpget-pod -- /bin/sh
    / # ls /usr/share/nginx/html/
    50x.html    index.html
    / # exit
    [root@k8s-node02 ~]# docker ps -a|grep liveness-httpget-pod
    d7b2e3730868   d4a5e0eaa84f   "nginx -g 'daemon of…"   4 minutes ago   Up 4 minutes               k8s_liveness-httpget-container_liveness-httpget-pod_default_92fdaa94-e8eb-4fcf-b8d6-1b5de83a4272_1
    8a8267185c65   d4a5e0eaa84f   "nginx -g 'daemon of…"   5 minutes ago   Exited (0) 4 minutes ago   k8s_liveness-httpget-container_liveness-httpget-pod_default_92fdaa94-e8eb-4fcf-b8d6-1b5de83a4272_0
    47cfce963a09   registry.aliyuncs.com/google_containers/pause:3.8   "/pause"   5 minutes ago   Up 5 minutes   k8s_POD_liveness-httpget-pod_default_92fdaa94-e8eb-4fcf-b8d6-1b5de83a4272_0

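    k8s_liveness-httpget-container_liveness-httpget-pod_default_92fdaa94-e8eb-4fcf-b8d6-1b5de83a4272_1

    # k8s prefix_container name_pod name_namespace_pod UID_restart attempt (0 for the first container, +1 per restart; the UID belongs to the Pod, which is why the pause container k8s_POD_... carries the same value)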

    [root@k8s-master01 4.3]# cat 9.pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: liveness-tcp-pod
    spec:
      containers:
      - name: liveness-tcp-container
        image: myapp:v1.0
        livenessProbe:
          initialDelaySeconds: 5
          timeoutSeconds: 1
          tcpSocket:
            port: 80

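4. spec (desired state): a container list giving the container name and image version. The liveness probe opens a TCP connection to port 80, starting after 5 seconds, with a 1-second timeout. periodSeconds is left at its default of 10 seconds.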

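Note: no demo here. Killing the process behind the port would restart the container anyway. An open port does not guarantee the service works, but a closed port guarantees it does not. The TCP check is mainly used to detect an application whose port has hung.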

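(4) startupProbe (startup probe)

Purpose: Kubernetes added the startupProbe in version 1.16 (alpha at first, stable since 1.20). It targets complex applications for which readinessProbe and livenessProbe cannot reliably tell whether the program has finished starting or is still alive. The main case is a slow-starting program that the liveness probe would otherwise kill and restart over and over.

Options:

initialDelaySeconds: how many seconds to wait after the container starts before the startup probe begins, in seconds; default 0, minimum 0

periodSeconds: interval between probes, in seconds; default 10, minimum 1

timeoutSeconds: how long to wait for a response after the probe sends its check, in seconds; default 1, minimum 1

successThreshold: minimum number of consecutive successes for the probe to count as successful again after a failure; default 1, minimum 1. It must be 1 for liveness and startup probes

failureThreshold: number of consecutive failures after which the probe is considered failed; default 3, minimum 1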

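Summary:

The startup probe keeps the liveness probe's timing settings from causing endless restarts or forcing very long delays.

Results:

Success: the liveness and readiness probes are allowed to start

Failure: a single failed check is retried at the next period. Once failureThreshold consecutive checks fail, the kubelet kills the container and handles it according to the restart policy

Unknown: no action; wait for the next startup check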
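A common way to apply this is to give the startup probe and the liveness probe the same check. The startup probe allows a generous startup window, and the liveness probe stays strict afterwards. The minimal sketch below assumes the `myapp:v1.0` image used in this section (nginx serving `/index.html` on port 80). The pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: startup-liveness-demo       # hypothetical name
spec:
  containers:
  - name: myapp-container
    image: myapp:v1.0
    ports:
    - name: http
      containerPort: 80
    startupProbe:                   # runs first; liveness/readiness wait until it succeeds
      httpGet:
        path: /index.html
        port: http                  # refers to the named port above
      failureThreshold: 30          # up to 30 × 10 s = 300 s to finish starting
      periodSeconds: 10
    livenessProbe:                  # only starts after the startup probe has succeeded
      httpGet:
        path: /index.html
        port: http
      periodSeconds: 10
      failureThreshold: 3           # after startup, about 30 s of failures triggers a restart
```

Without the startup probe, the liveness probe alone would need an `initialDelaySeconds` long enough for the slowest start, which also delays detection of real failures.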

    [root@k8s-master01 4.3]# cat 10.pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: startupprobe-1
      namespace: default
    spec:
      containers:
      - name: myapp-container
        image: myapp:v1.0
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        readinessProbe:
          httpGet:
            port: 80
            path: /index2.html
          initialDelaySeconds: 1
          periodSeconds: 3
        startupProbe:
          httpGet:
            path: /index1.html
            port: 80
          failureThreshold: 30
          periodSeconds: 10

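4. spec (desired state): a container list giving the container name, image and version, image pull policy, and a named container port (http, 80). The readiness probe sends an HTTP GET for /index2.html on port 80, starting after 1 second and repeating every 3 seconds. The startup probe sends an HTTP GET for /index1.html on port 80 every 10 seconds and allows up to 30 failures.

Note: the application gets at most failureThreshold × periodSeconds = 30 × 10 = 300 s (5 minutes) to finish starting.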

    # Install the vim editor
    [root@k8s-master01 4.3]# yum -y install vim
    # Paste mode is needed every time vim is used, so alias vim to always start in paste mode
    [root@k8s-master01 ~]# echo "alias vim='vim -c \"set paste\"'" >> /root/.bashrc
    [root@k8s-master01 4.3]# kubectl create -f 10.pod.yaml
    pod/startupprobe-1 created
    # The pod is not ready and keeps restarting: index1.html is missing, so the startup probe fails 30 times in a row and the container is restarted. Creating the readiness file (index2.html) still does not make it ready. Only after the startup file (index1.html) is created does the pod become ready. This shows the startup probe acts first, and liveness and readiness checks begin only after it succeeds
    [root@k8s-master01 4.3]# kubectl get po
    NAME             READY   STATUS    RESTARTS      AGE
    startupprobe-1   0/1     Running   5 (11s ago)   25m
    [root@k8s-master01 4.3]# kubectl exec -it startupprobe-1 -c myapp-container -- /bin/sh
    / # cd /usr/share/nginx/html/
    /usr/share/nginx/html # echo "到此一游 666" >> index2.html
    /usr/share/nginx/html # exit
    [root@k8s-master01 4.3]# kubectl get po
    NAME             READY   STATUS    RESTARTS       AGE
    startupprobe-1   0/1     Running   5 (102s ago)   26m
    [root@k8s-master01 4.3]# kubectl exec -it startupprobe-1 -c myapp-container -- /bin/sh
    / # cd /usr/share/nginx/html/
    /usr/share/nginx/html # echo '启动探测' >> index1.html
    /usr/share/nginx/html # exit
    [root@k8s-master01 4.3]# kubectl get po
    NAME             READY   STATUS    RESTARTS        AGE
    startupprobe-1   1/1     Running   5 (3m12s ago)   28m

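(5) Hooks

A Pod hook is triggered by the kubelet on the node where the pod runs, and it is part of the container lifecycle. postStart runs immediately after a container is created, with no guarantee that it runs before the container's ENTRYPOINT. preStop runs immediately before a container is terminated. Hooks are configured per container, so every container in a Pod can have its own.

There are two kinds of hook handler:

1. exec: run a command inside the container

2. HTTP: send an HTTP GET request

Using exec: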

    [root@k8s-master01 4.3]# cat 11.pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: lifecycle-exec-pod
    spec:
      containers:
      - name: lifecycle-exec-container
        image: myapp:v1
        lifecycle:
          postStart:
            exec:
              command: ["/bin/sh", "-c", "echo postStart > /usr/share/message"]
          preStop:
            exec:
              command: ["/bin/sh", "-c", "echo preStop > /usr/share/message"]

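4. spec (desired state): a container list giving the container name and image version, plus lifecycle hooks. The postStart hook runs `echo postStart > /usr/share/message`, and the preStop hook runs `echo preStop > /usr/share/message`.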

    # After creating the pod, /usr/share/message contains postStart, showing the postStart hook ran. A while loop then prints the file continuously
    # In a second terminal run kubectl delete po lifecycle-exec-pod. Before the container dies, preStop is written to /usr/share/message. The container is then killed and the exec session is forcibly closed. This shows the preStop hook runs first: the kubelet executes the hook before sending the stop signal
    [root@k8s-master01 4.3]# kubectl create -f 11.pod.yaml
    pod/lifecycle-exec-pod created
    [root@k8s-master01 4.3]# kubectl exec -it lifecycle-exec-pod -c lifecycle-exec-container -- /bin/sh
    / # cat /usr/share/message
    postStart
    / # while true ;do cat /usr/share/message ;done
    postStart
    ...........
    preStop
    preStop
    command terminated with exit code 137
    [root@k8s-master01 4.3]#

Using HTTP GET:

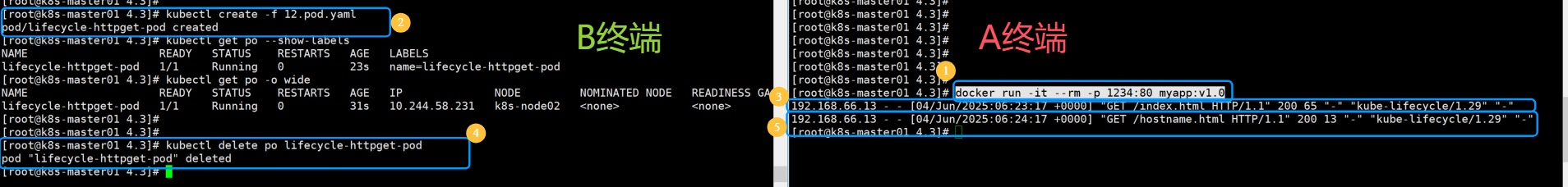

    [root@k8s-master01 4.3]# cat 12.pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: lifecycle-httpget-pod
      labels:
        name: lifecycle-httpget-pod
    spec:
      containers:
      - name: lifecycle-httpget-container
        image: myapp:v1.0
        ports:
        - containerPort: 80
        lifecycle:
          postStart:
            httpGet:
              host: 192.168.66.11
              path: index.html
              port: 1234
          preStop:
            httpGet:
              host: 192.168.66.11
              path: hostname.html
              port: 1234

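4. spec (desired state): a container list giving the container name, image and version, and container port 80, plus lifecycle hooks. The postStart hook sends an HTTP GET for index.html to port 1234 on host 192.168.66.11. The preStop hook sends an HTTP GET for hostname.html to the same host and port.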

    # First start a listener on port 1234 in terminal A
    [root@k8s-master01 4.3]# docker run -it --rm -p 1234:80 myapp:v1.0
    # Open a second terminal B and create the pod
    [root@k8s-master01 4.3]# kubectl create -f 12.pod.yaml
    pod/lifecycle-httpget-pod created
    [root@k8s-master01 4.3]# kubectl get po -o wide
    NAME                    READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    lifecycle-httpget-pod   1/1     Running   0          31s   10.244.58.231   k8s-node02   <none>           <none>
    # Terminal A logs a request for index.html (the postStart hook ran)
    [root@k8s-master01 4.3]# docker run -it --rm -p 1234:80 myapp:v1.0
    192.168.66.13 - - [04/Jun/2025:06:23:17 +0000] "GET /index.html HTTP/1.1" 200 65 "-" "kube-lifecycle/1.29" "-"
    # Delete the pod in terminal B
    [root@k8s-master01 4.3]# kubectl delete po lifecycle-httpget-pod
    pod "lifecycle-httpget-pod" deleted
    # Terminal A logs a request for hostname.html (the preStop hook ran)
    [root@k8s-master01 4.3]# docker run -it --rm -p 1234:80 myapp:v1.0
    192.168.66.13 - - [04/Jun/2025:06:23:17 +0000] "GET /index.html HTTP/1.1" 200 65 "-" "kube-lifecycle/1.29" "-"
    192.168.66.13 - - [04/Jun/2025:06:24:17 +0000] "GET /hostname.html HTTP/1.1" 200 13 "-" "kube-lifecycle/1.29" "-"

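(6) More on preStop

In Kubernetes the ideal is for a pod to shut down gracefully, but not every Pod manages it:

The Pod is stuck and cannot handle the graceful-shutdown command or action

The graceful-shutdown logic has a bug and falls into an infinite loop

A code problem means the command has no effect

To cover these cases, the Pod termination flow has a maximum tolerated time called the grace period. It is set in pod.spec.terminationGracePeriodSeconds and defaults to 30 seconds. When running kubectl delete, you can also pass --grace-period to set the graceful-shutdown time explicitly, overriding the Pod's configuration. Once the grace period is exceeded, Kubernetes has no choice but to kill the Pod forcibly. Note that the grace-period countdown starts before the preStop hook runs and keeps running through the hook and the SIGTERM that follows. Kubernetes will not wait for the preStop hook beyond the grace period. If your application finishes shutting down and exits before terminationGracePeriodSeconds ends, Kubernetes moves on to the next step immediately. As a consequence, if graceful shutdown hangs until the grace period runs out, the Pod is killed and the preStop hook may not have finished.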
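The sketch below shows the two settings discussed above. It assumes the `myapp:v1.0` image from this section, which has `/bin/sh`. The pod name and the 60 s / 10 s values are hypothetical choices. The preStop hook pauses briefly before SIGTERM is sent, and the grace period is raised so that the hook plus the application's own shutdown fit inside it.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: graceful-demo                   # hypothetical name
spec:
  terminationGracePeriodSeconds: 60     # default is 30; the countdown covers preStop + SIGTERM handling
  containers:
  - name: myapp-container
    image: myapp:v1.0
    lifecycle:
      preStop:
        exec:
          # hypothetical delay: give in-flight requests about 10 s before SIGTERM is sent
          command: ["/bin/sh", "-c", "sleep 10"]
```

Running `kubectl delete pod graceful-demo --grace-period=5` would override the 60 s for that deletion. The 10 s preStop sleep would no longer fit, so the container would be killed before the hook finishes, which is exactly the situation described above.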

(7) Finally

The init containers (initC), startupProbe, livenessProbe, readinessProbe and hooks of the Pod lifecycle can all coexist in one Pod. You can use all of them, some of them, or none.

    [root@k8s-master01 4.3]# cat 13.pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: lifecycle-pod
      labels:
        app: lifecycle-pod
    spec:
      containers:
      - name: busybox-container
        image: busybox:1.28.1
        command: ["/bin/sh","-c","touch /tmp/live ; sleep 600; rm -rf /tmp/live; sleep 3600"]
        livenessProbe:
          exec:
            command: ["test","-e","/tmp/live"]
          initialDelaySeconds: 1
          periodSeconds: 3
        lifecycle:
          postStart:
            httpGet:
              host: 192.168.66.11
              path: index.html
              port: 1234
          preStop:
            httpGet:
              host: 192.168.66.11
              path: hostname.html
              port: 1234
      - name: myapp-container
        image: myapp:v1.0
        livenessProbe:
          httpGet:
            port: 80
            path: /index.html
          initialDelaySeconds: 1
          periodSeconds: 3
          timeoutSeconds: 3
        readinessProbe:
          httpGet:
            port: 80
            path: /index1.html
          initialDelaySeconds: 1
          periodSeconds: 3
      initContainers:
      - name: init-myservice
        image: busybox:1.28.1
        command: ['sh', '-c', 'until nslookup myservice; do echo waiting for myservice; sleep 2; done;']
      - name: init-mydb
        image: busybox:1.28.1
        command: ['sh', '-c', 'until nslookup mydb; do echo waiting for mydb; sleep 2; done;']

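4. spec (desired state): a container list:

(1) busybox-container: container name and image version. It runs `touch /tmp/live ; sleep 600; rm -rf /tmp/live; sleep 3600`. Its liveness probe runs `test -e /tmp/live`, starting after 1 second and repeating every 3 seconds. Its lifecycle hooks are a postStart HTTP GET for index.html and a preStop HTTP GET for hostname.html, both sent to the given IP and port.

(2) myapp-container: container name and image version. Its liveness probe sends an HTTP GET for /index.html on port 80, with a 1-second initial delay, a 3-second interval and a 3-second timeout. Its readiness probe sends an HTTP GET for /index1.html on port 80, with a 1-second initial delay and a 3-second interval.

(3) Init containers:

(4) init-myservice: container name and image version; loops until `nslookup myservice` succeeds.

(5) init-mydb: container name and image version; loops until `nslookup mydb` succeeds.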

    [root@k8s-master01 4.3]# kubectl create -f 13.pod.yaml
    pod/lifecycle-pod created
    # Two init containers, each waiting for its Service to exist. They run one after another, and each blocks until it finishes
    [root@k8s-master01 4.3]# kubectl get po
    NAME            READY   STATUS     RESTARTS   AGE
    lifecycle-pod   0/2     Init:0/2   0          5s
    [root@k8s-master01 4.3]# kubectl create svc clusterip myservice --tcp=80:80
    service/myservice created
    [root@k8s-master01 4.3]# kubectl get po
    NAME            READY   STATUS     RESTARTS   AGE
    lifecycle-pod   0/2     Init:1/2   0          63s
    [root@k8s-master01 4.3]# kubectl create svc clusterip mydb --tcp=80:80
    service/mydb created
    [root@k8s-master01 4.3]# kubectl get svc
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.0.0.1        <none>        443/TCP   7d12h
    mydb         ClusterIP   10.10.118.243   <none>        80/TCP    68s
    myservice    ClusterIP   10.7.38.32      <none>        80/TCP    99s
    [root@k8s-master01 4.3]# kubectl get po
    NAME            READY   STATUS               RESTARTS   AGE
    lifecycle-pod   0/2     PostStartHookError   0          2m20s
    # In a new terminal, start the listener the postStart hook calls. The container can only start once the hook's request succeeds
    [root@k8s-master01 4.3]# docker run -it --rm -p 1234:80 myapp:v1.0
    192.168.66.13 - - [04/Jun/2025:07:21:42 +0000] "GET /index.html HTTP/1.1" 200 65 "-" "kube-lifecycle/1.29" "-"
    # Back in the original terminal: index1.html does not exist, so myapp-container fails its readiness probe and the pod shows 1/2
    [root@k8s-master01 4.3]# kubectl get po
    NAME            READY   STATUS    RESTARTS      AGE
    lifecycle-pod   1/2     Running   4 (93s ago)   5m56s
    [root@k8s-master01 4.3]# kubectl exec -it lifecycle-pod -c myapp-container -- /bin/sh
    / # cd /usr/share/nginx/html/
    /usr/share/nginx/html # echo "ccc666..." > index1.html
    /usr/share/nginx/html # exit
    # Once the file exists the pod becomes fully ready
    [root@k8s-master01 4.3]# kubectl get po
    NAME            READY   STATUS    RESTARTS        AGE
    lifecycle-pod   2/2     Running   4 (4m24s ago)   8m47s
    # Rename index.html: the liveness probe fails and myapp-container is restarted. The restarted container no longer has the manually added index1.html, so READY drops back to 1/2
    [root@k8s-master01 4.3]# kubectl exec -it lifecycle-pod -c myapp-container -- /bin/sh
    / # cd /usr/share/nginx/html/
    /usr/share/nginx/html # ls
    50x.html     index.html   index1.html
    /usr/share/nginx/html # mv index.html index.html.bak
    /usr/share/nginx/html # command terminated with exit code 137
    [root@k8s-master01 4.3]# kubectl get po
    NAME            READY   STATUS    RESTARTS     AGE
    lifecycle-pod   1/2     Running   5 (7s ago)   10m

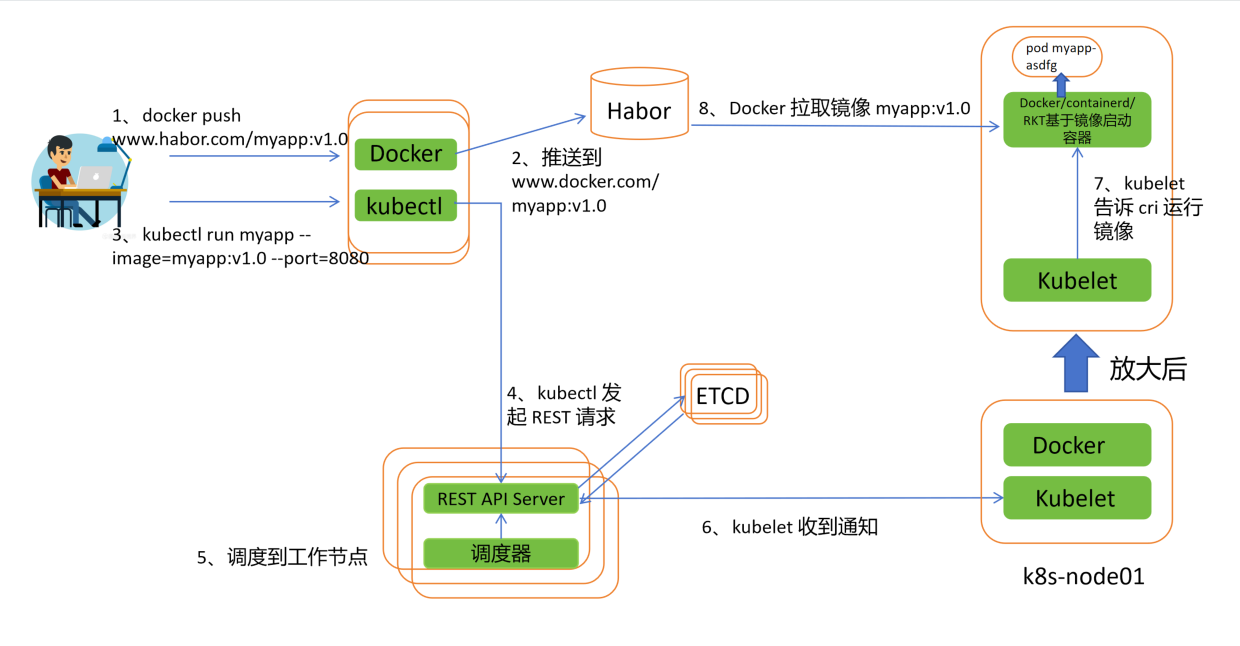

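4.4 How is a Pod scheduled and run?

1. Push the image: the local docker client pushes it to an image registry

2. The image goes from local storage to a remote registry (Docker Hub, a registry in China, or a private registry such as Harbor)

3. The Kubernetes client, kubectl, sends the command to run the pod

4. kubectl sends it to the API Server as a REST request. Once the API Server has validated it, the API Server persists the object in etcd

5. The scheduler (kube-scheduler) watches the API Server and works out which node the pod should run on. It records this binding through the API Server, which writes it to etcd

6. The kubelet on every node watches the API Server to see whether a pod bound to its own node needs to be created

7. When a pod needs to be created, the kubelet calls the container runtime through the CRI (Container Runtime Interface). Examples are Docker via cri-dockerd and containerd; rkt has since been discontinued

8. The image is pulled from the registry and the containers are created. Grouped together as the pod definition requires (sharing the pause container's namespaces), they make up the pod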
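In step 5 the pod is assigned a node. As a sketch of what the scheduler sends, it POSTs a Binding object to the pod's `binding` subresource, and the API Server then sets `spec.nodeName` on the pod. The pod and node names below come from the liveness-httpget-pod demo in this section and are illustrative only.

```yaml
# Roughly what kube-scheduler submits to
# POST /api/v1/namespaces/default/pods/liveness-httpget-pod/binding
apiVersion: v1
kind: Binding
metadata:
  name: liveness-httpget-pod   # must match the pod being bound
  namespace: default
target:
  apiVersion: v1
  kind: Node
  name: k8s-node02             # the node chosen by the scheduler
```

After the binding is stored, `kubectl get po -o wide` shows that node in the NODE column, and the kubelet on k8s-node02 picks the pod up in step 6.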

———————————————————————————————————————————————————————————————————————————

无敌小马爱学习

Zhejiang Public Security Network Filing No. 33010602011771
