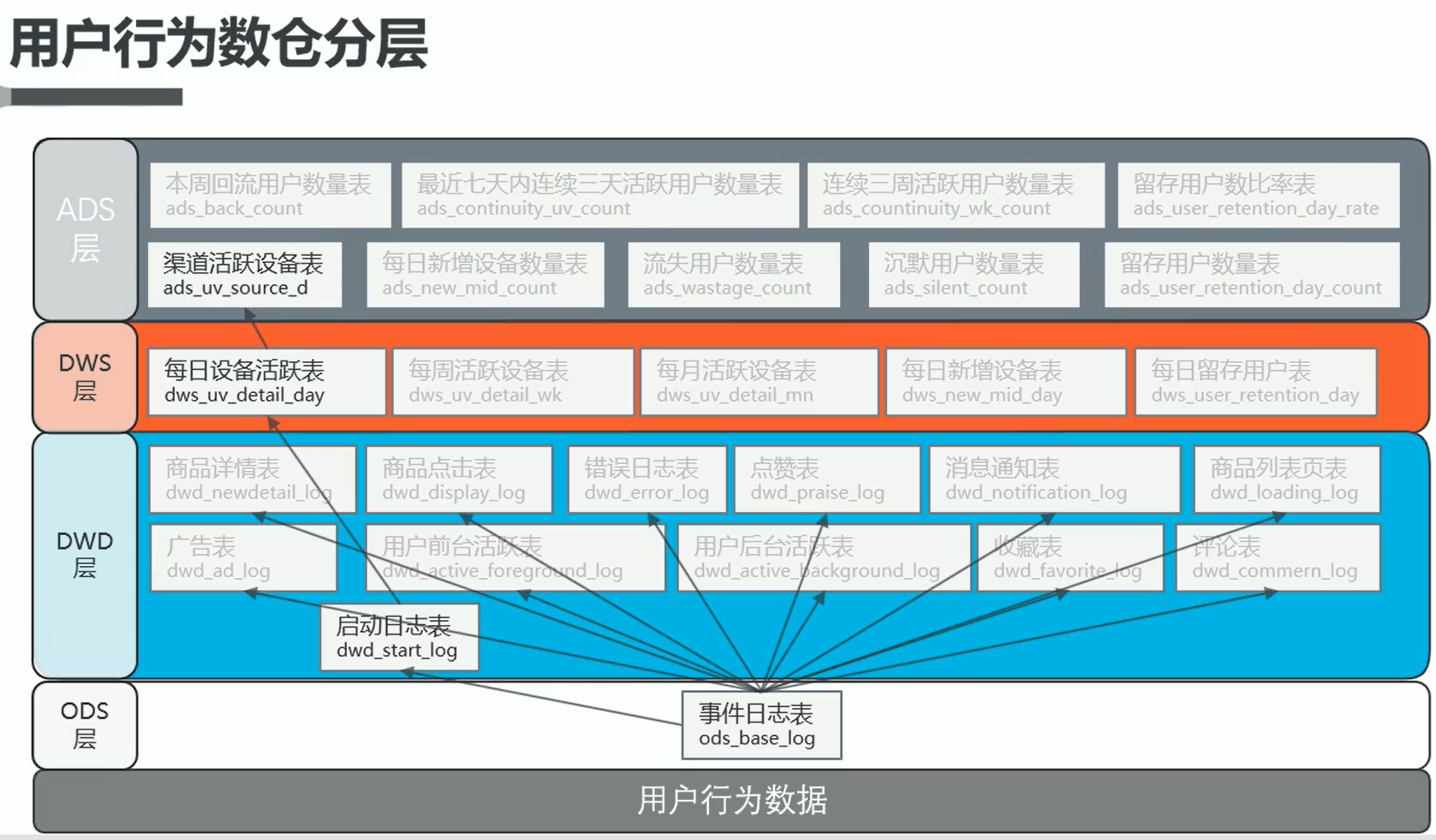

4. 数仓分层 && 用户行为数仓搭建

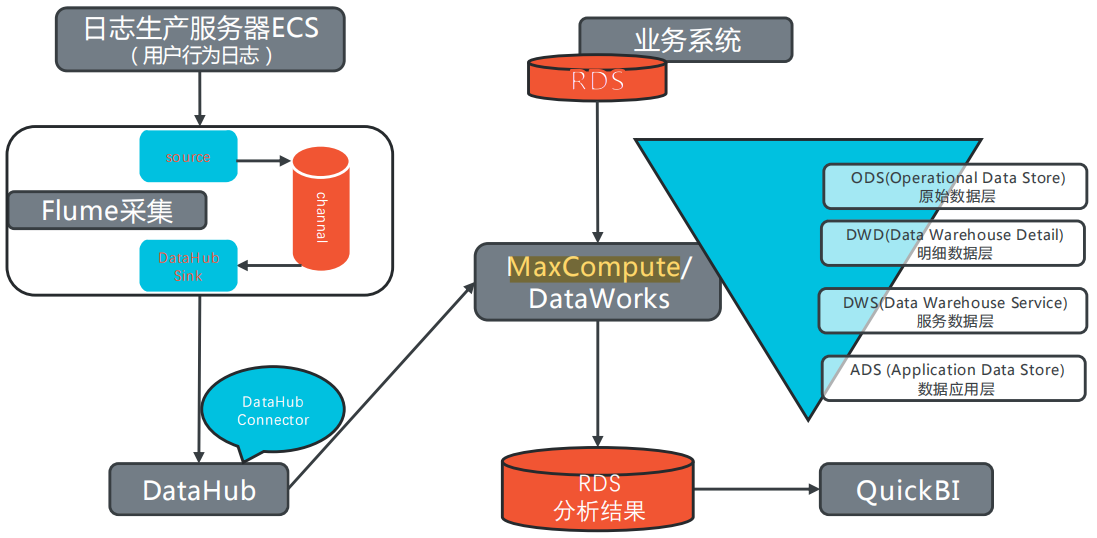

整体架构

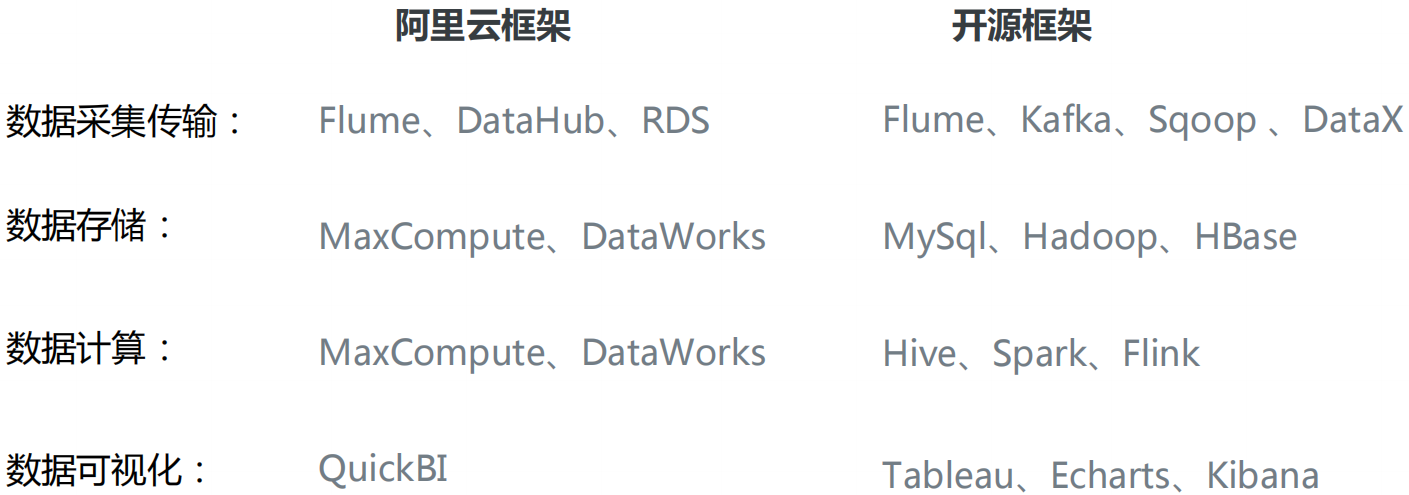

技术框架

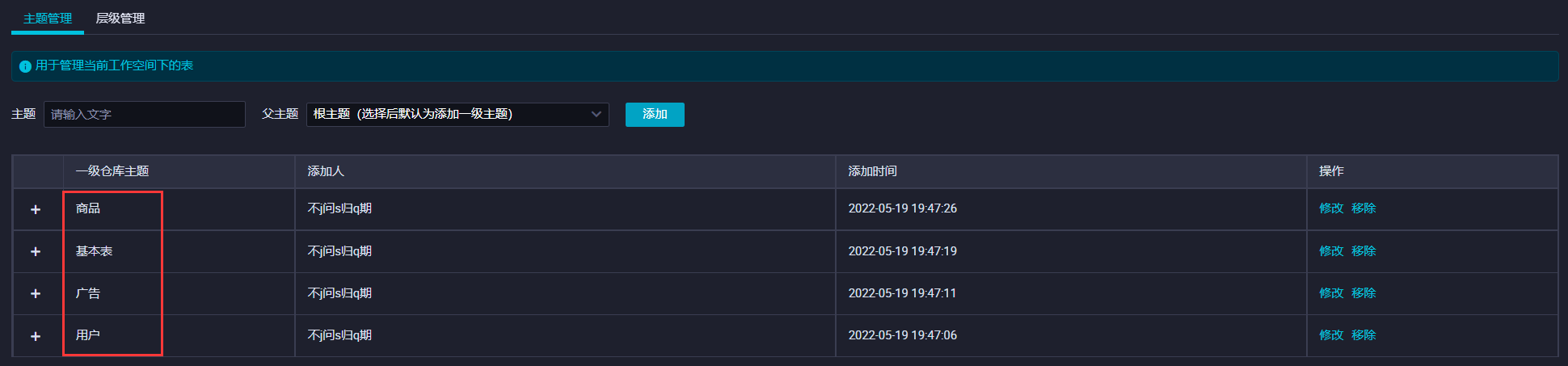

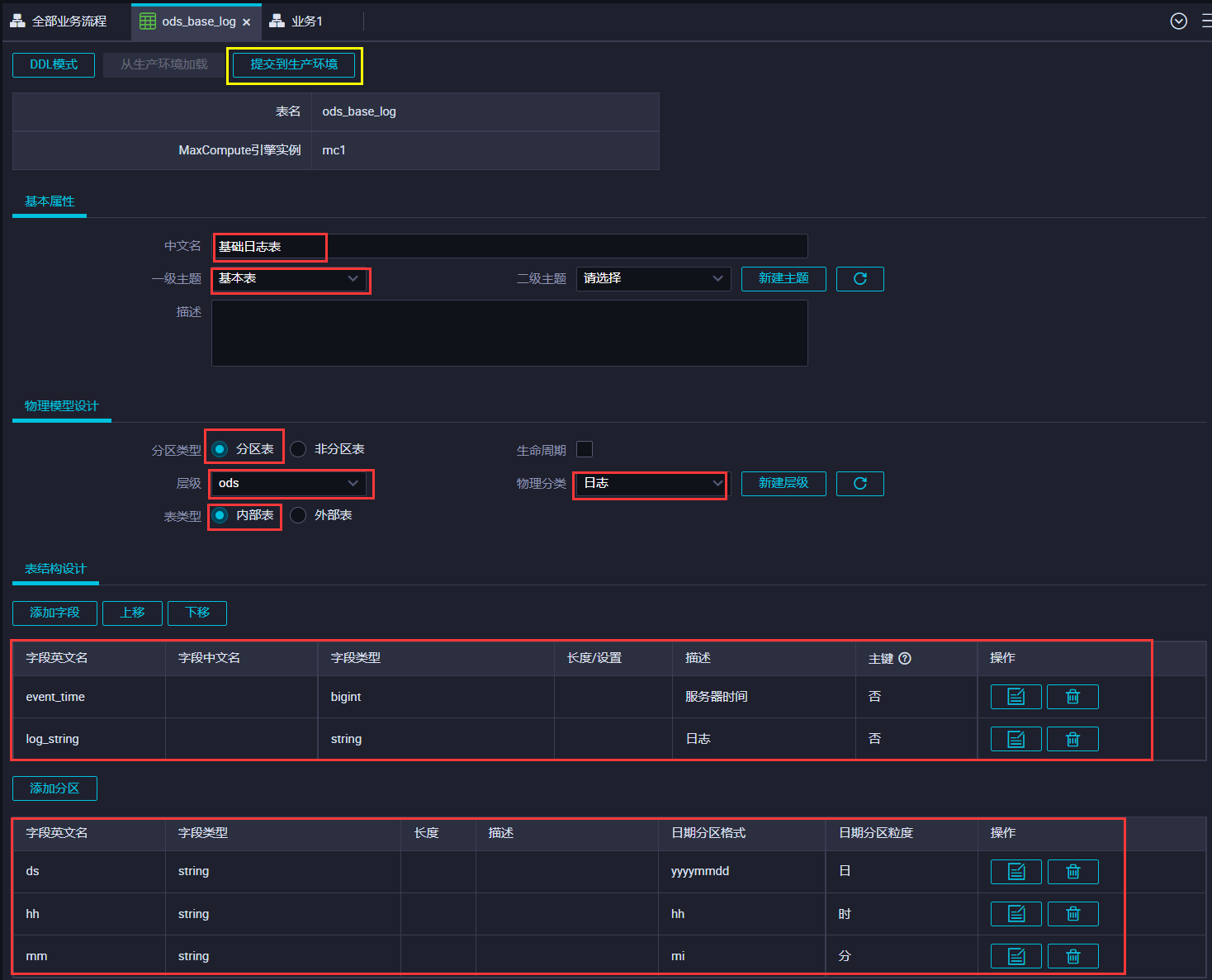

1. 配置表主题

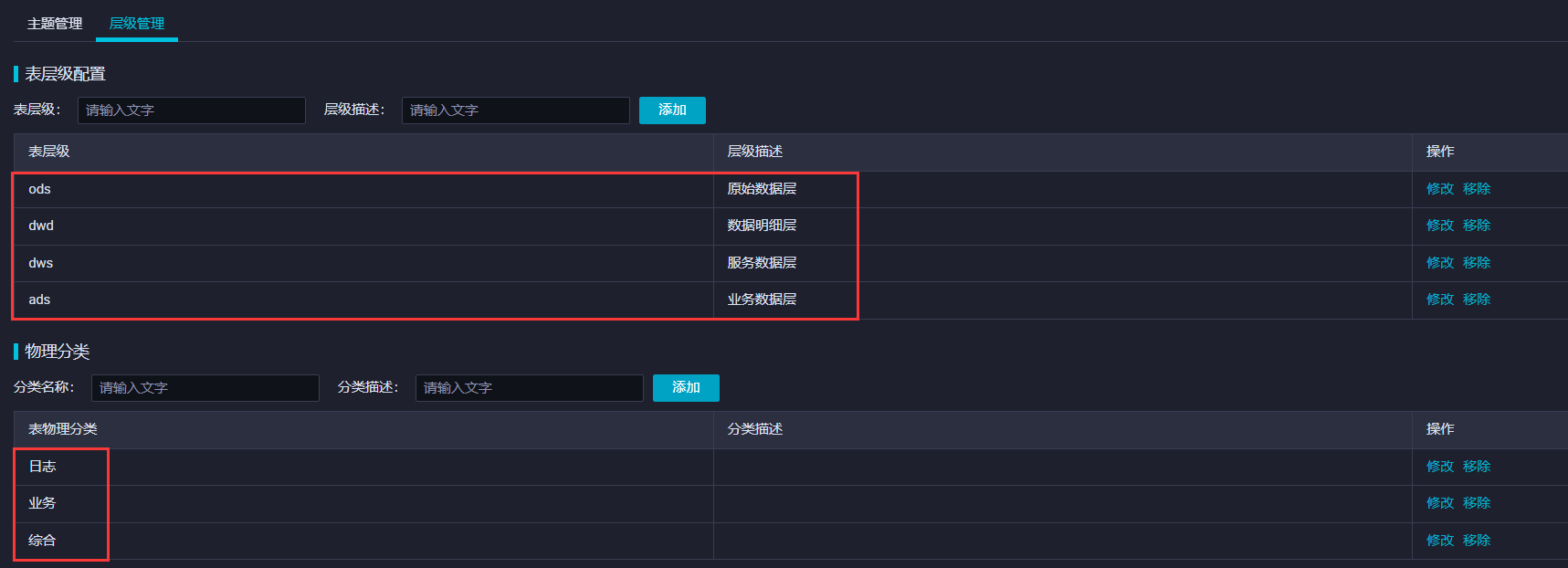

2. 配置表层级

3. 数据仓库分层

1. 原始数据层ODS层搭建

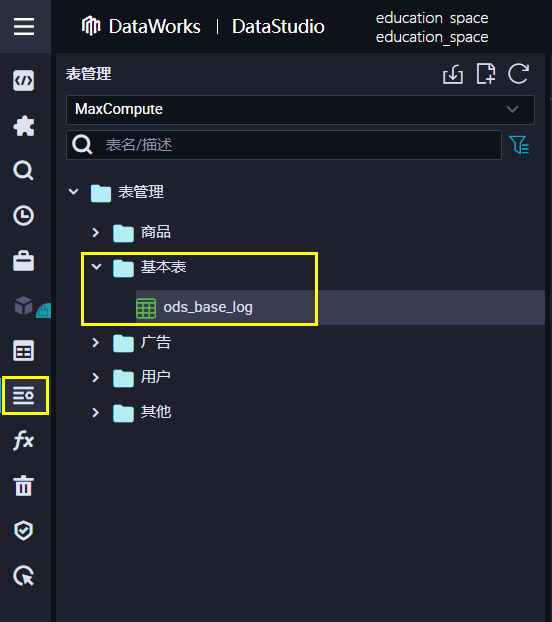

表的位置

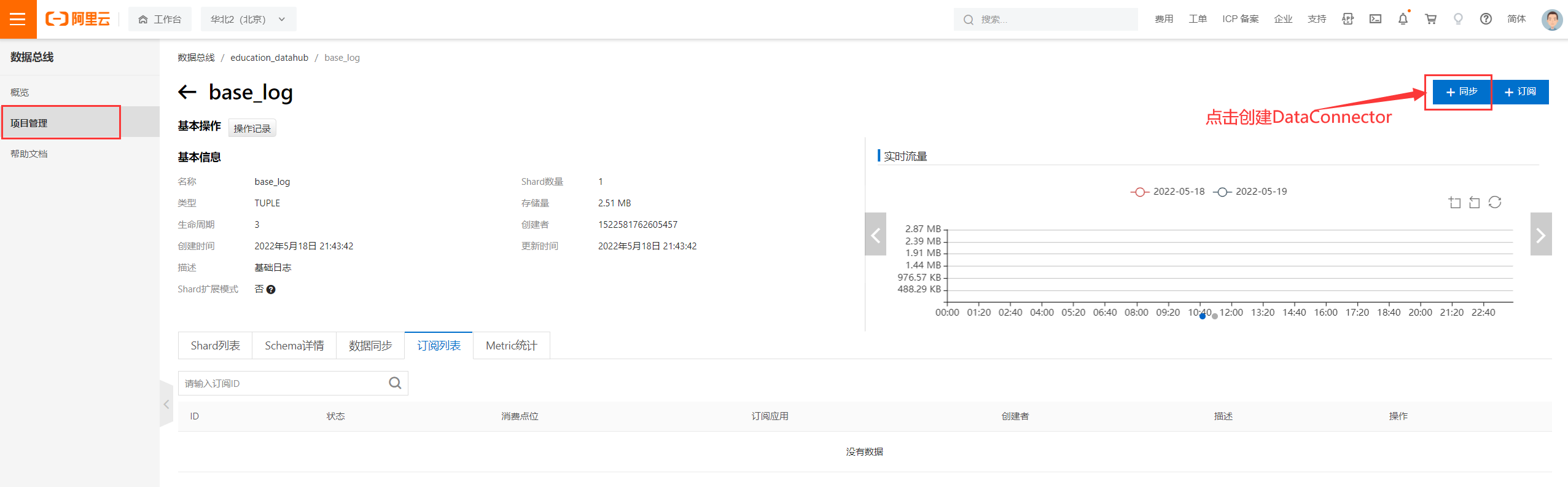

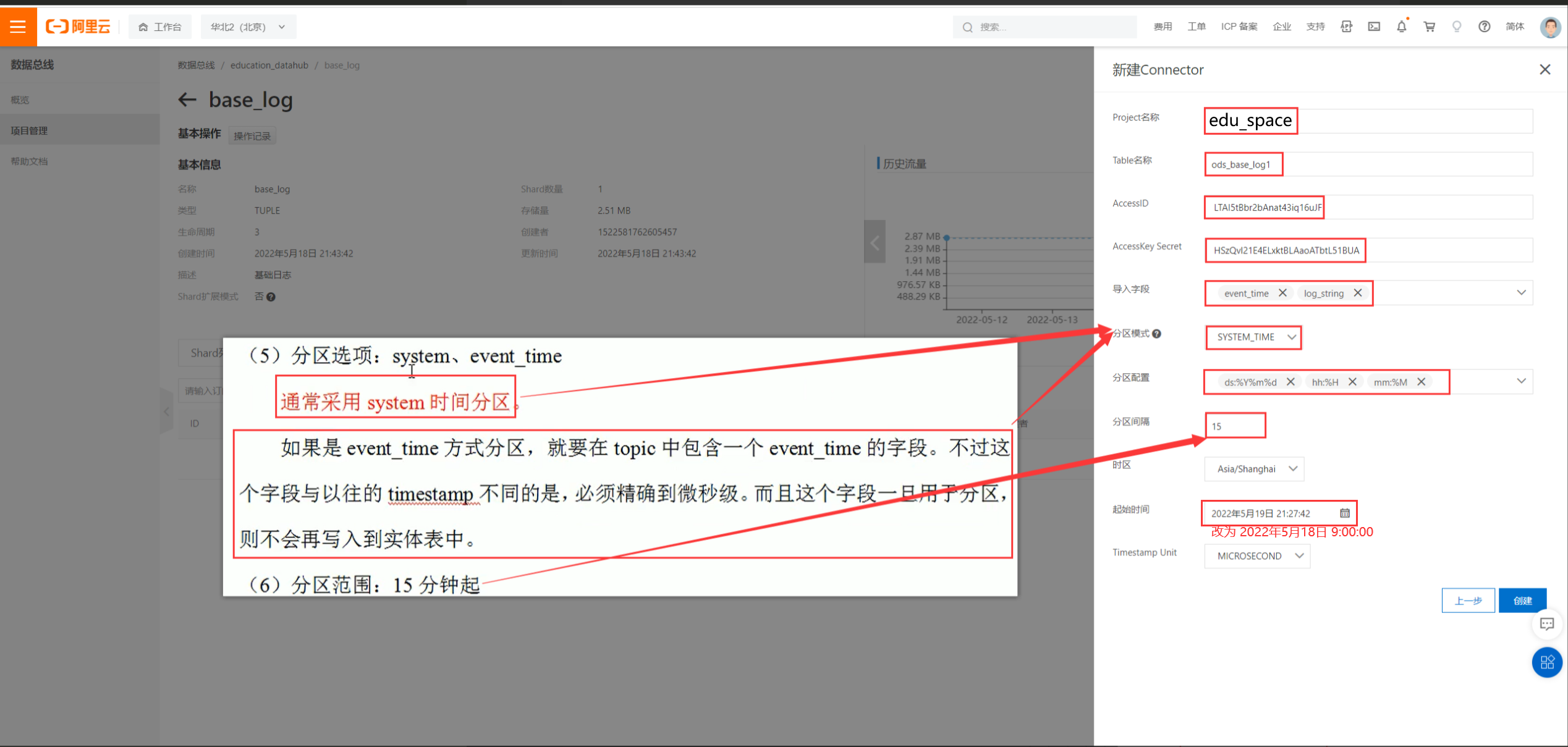

使用DataHub Connector将DataHub中的数据推送到MaxCompute中

创建DataConnector

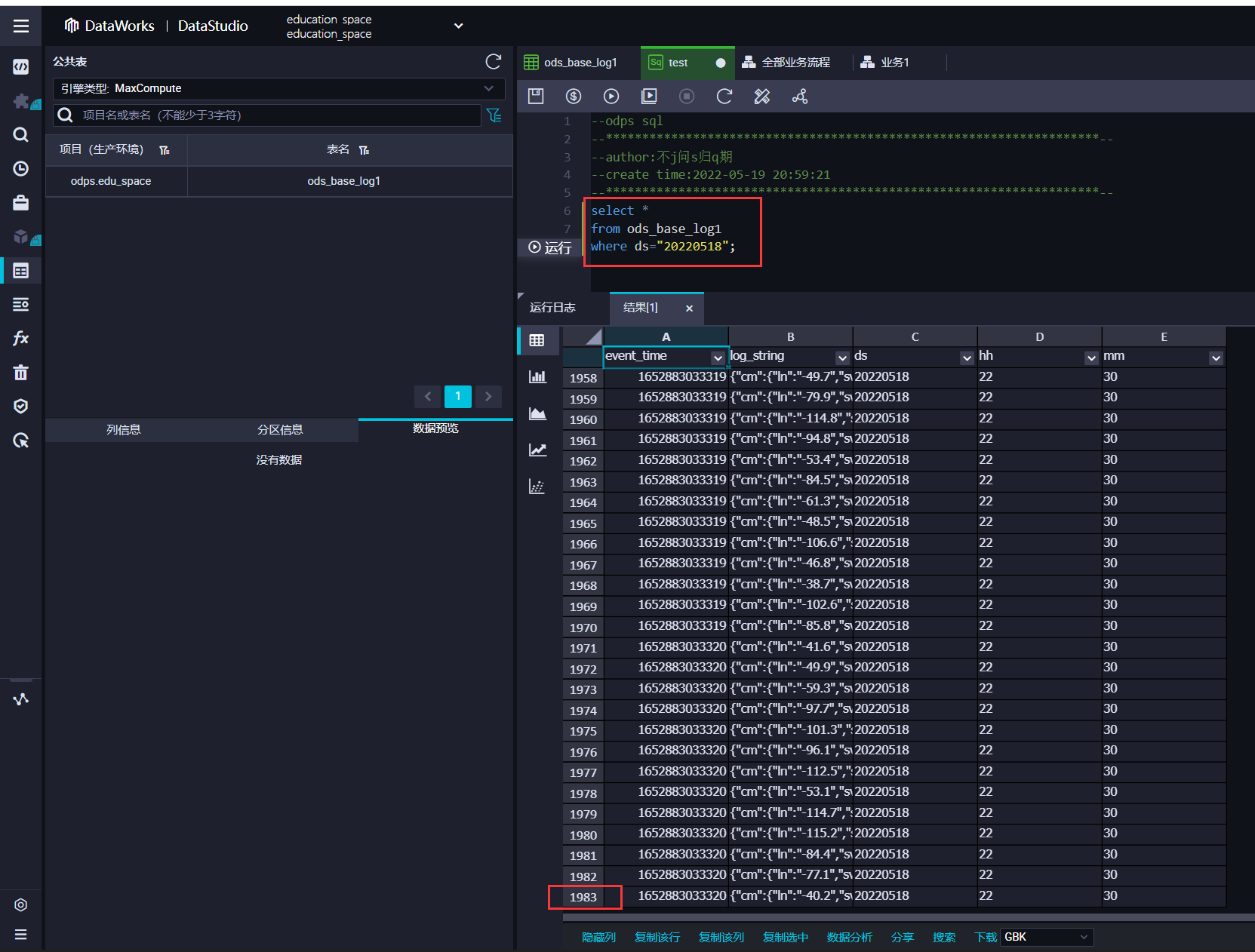

数据成功加载

2. DWD层搭建

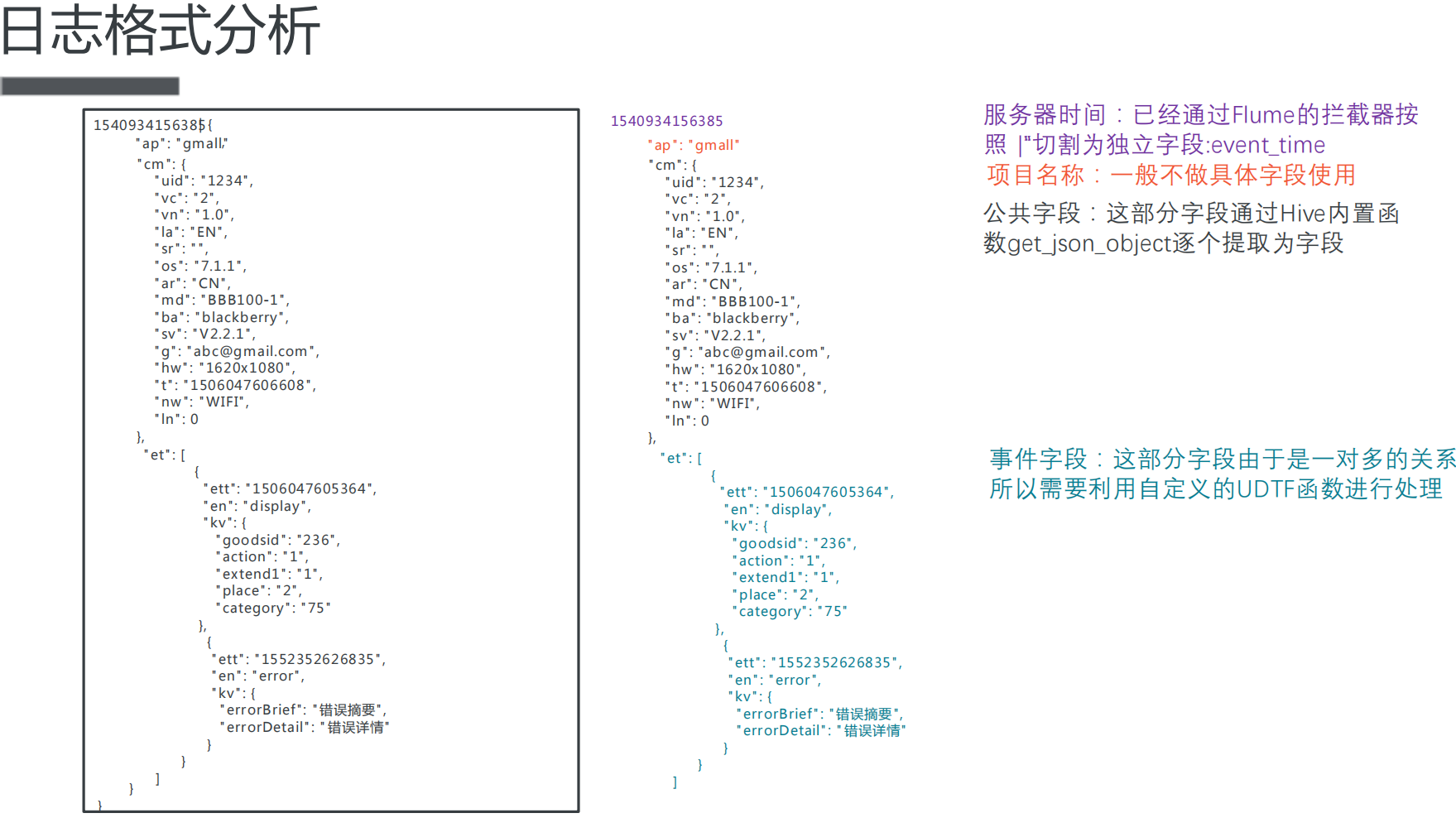

日志格式分析

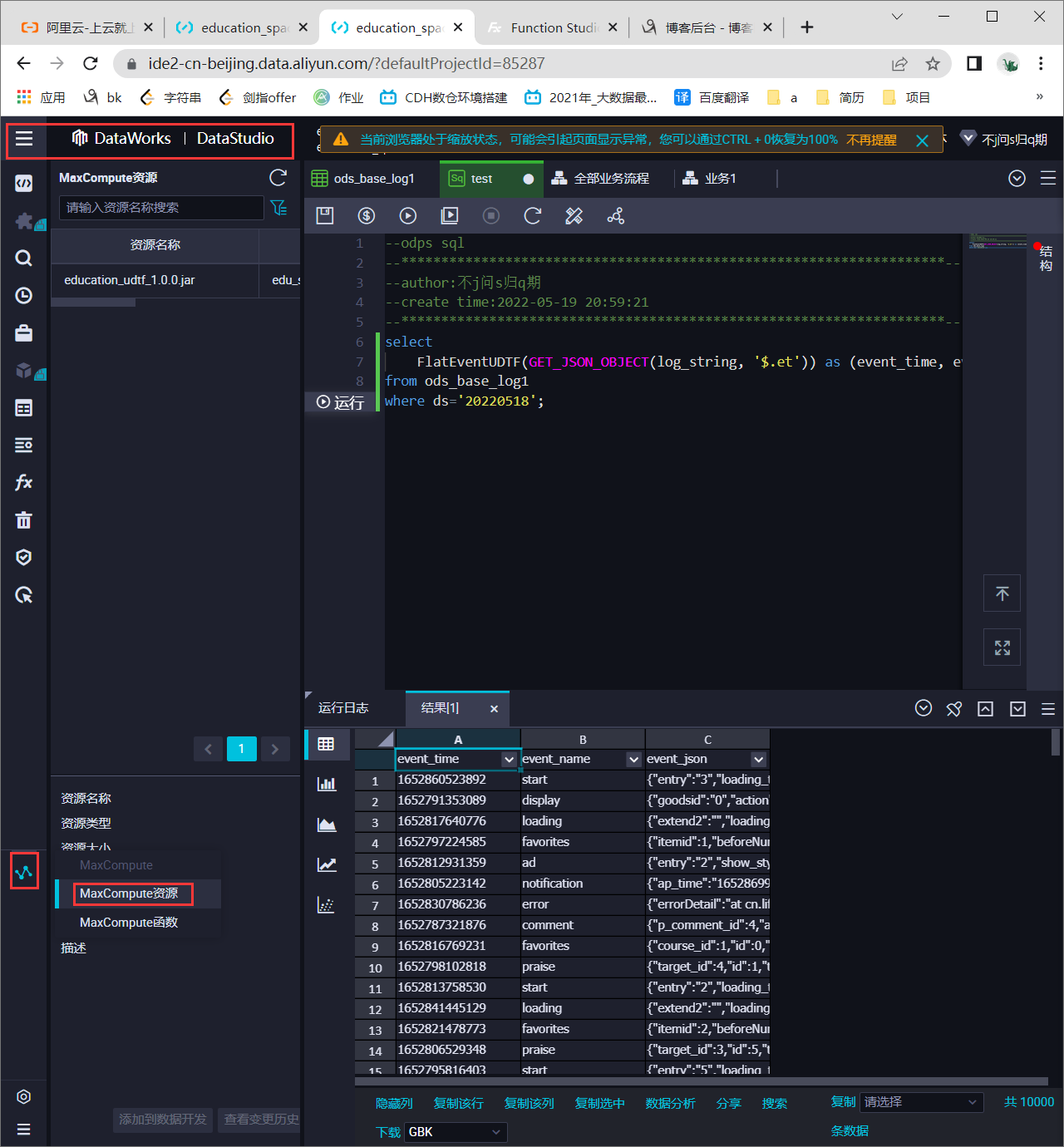

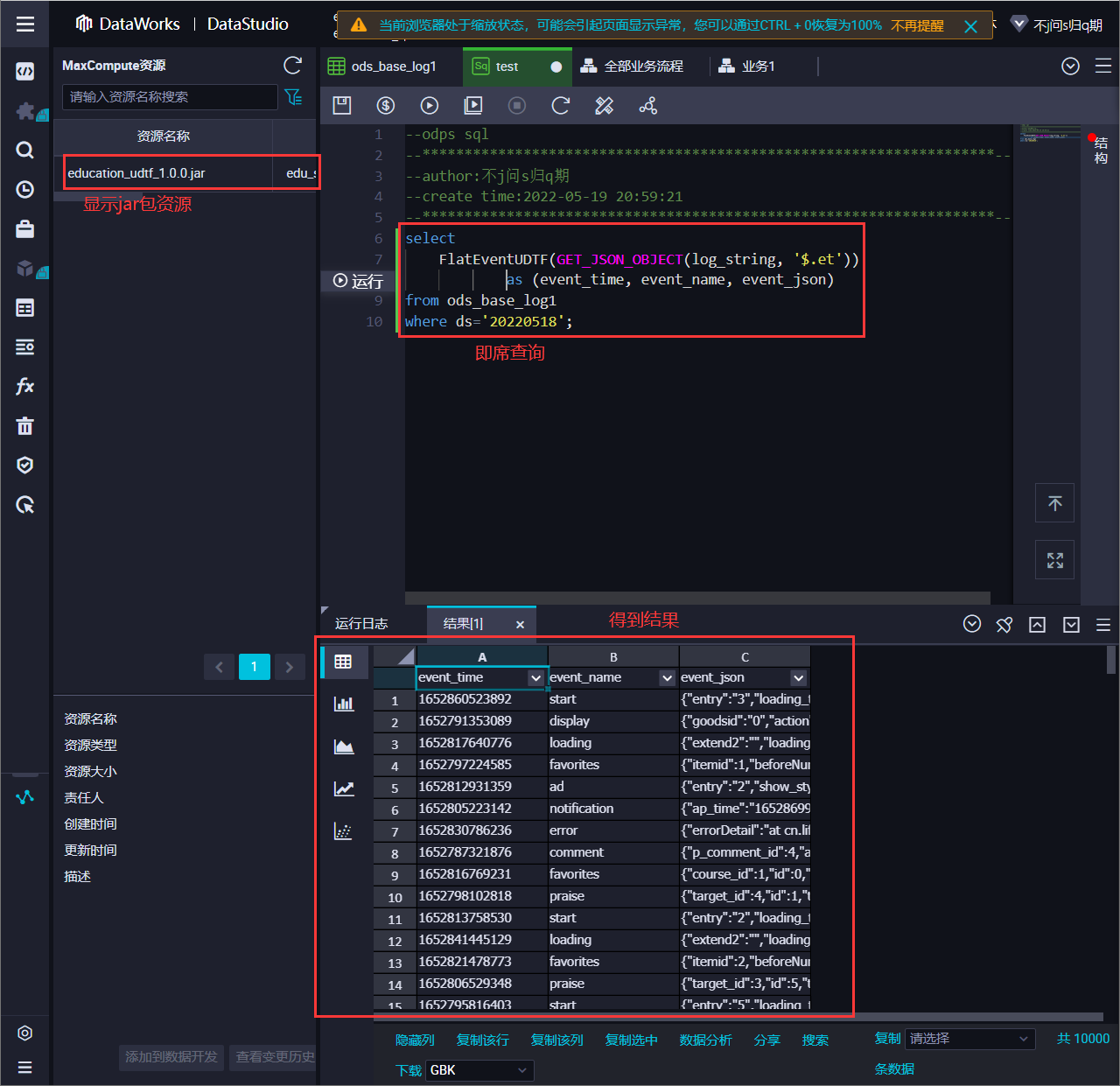

自定义UDTF(解析具体事件字段)

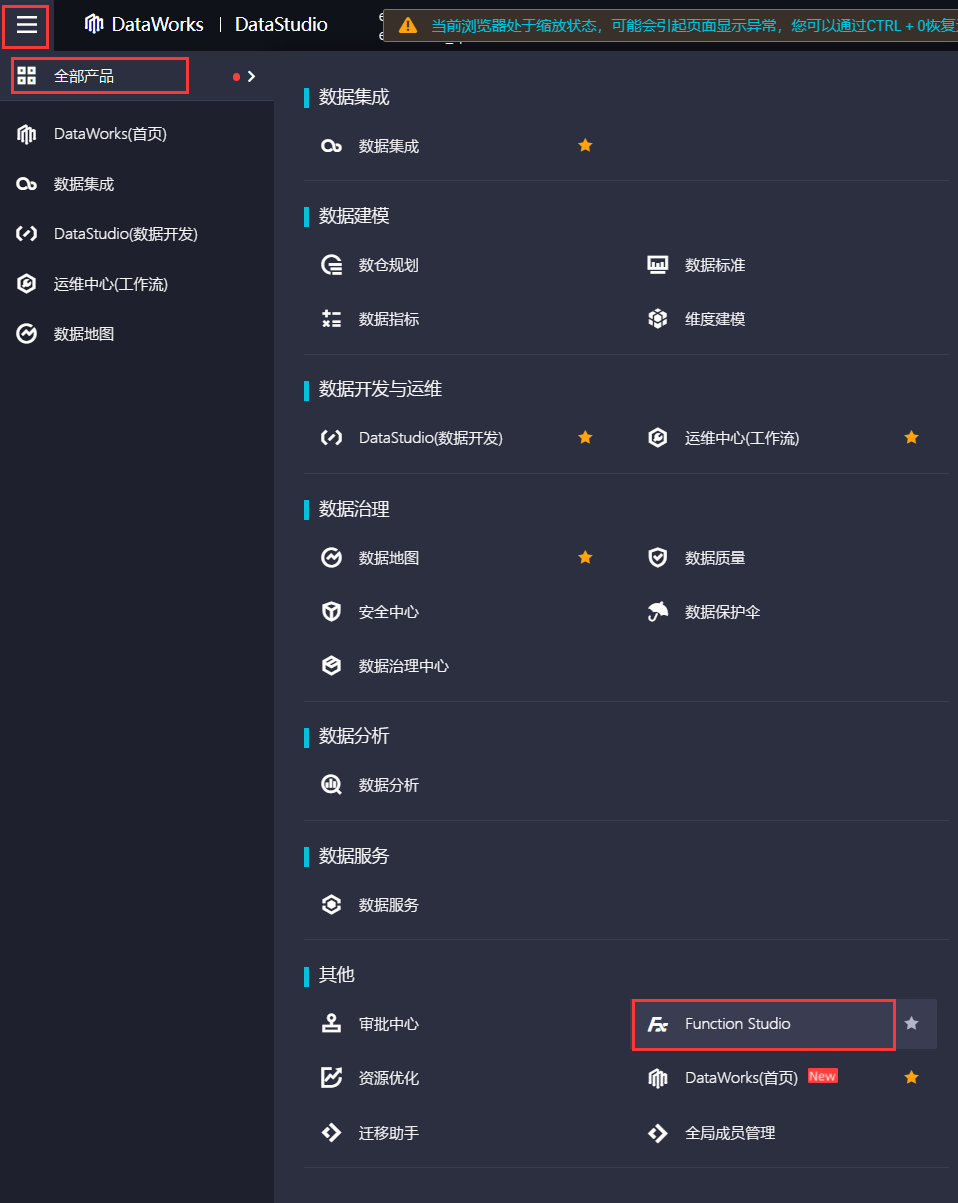

直接在FunctionStudio中开发

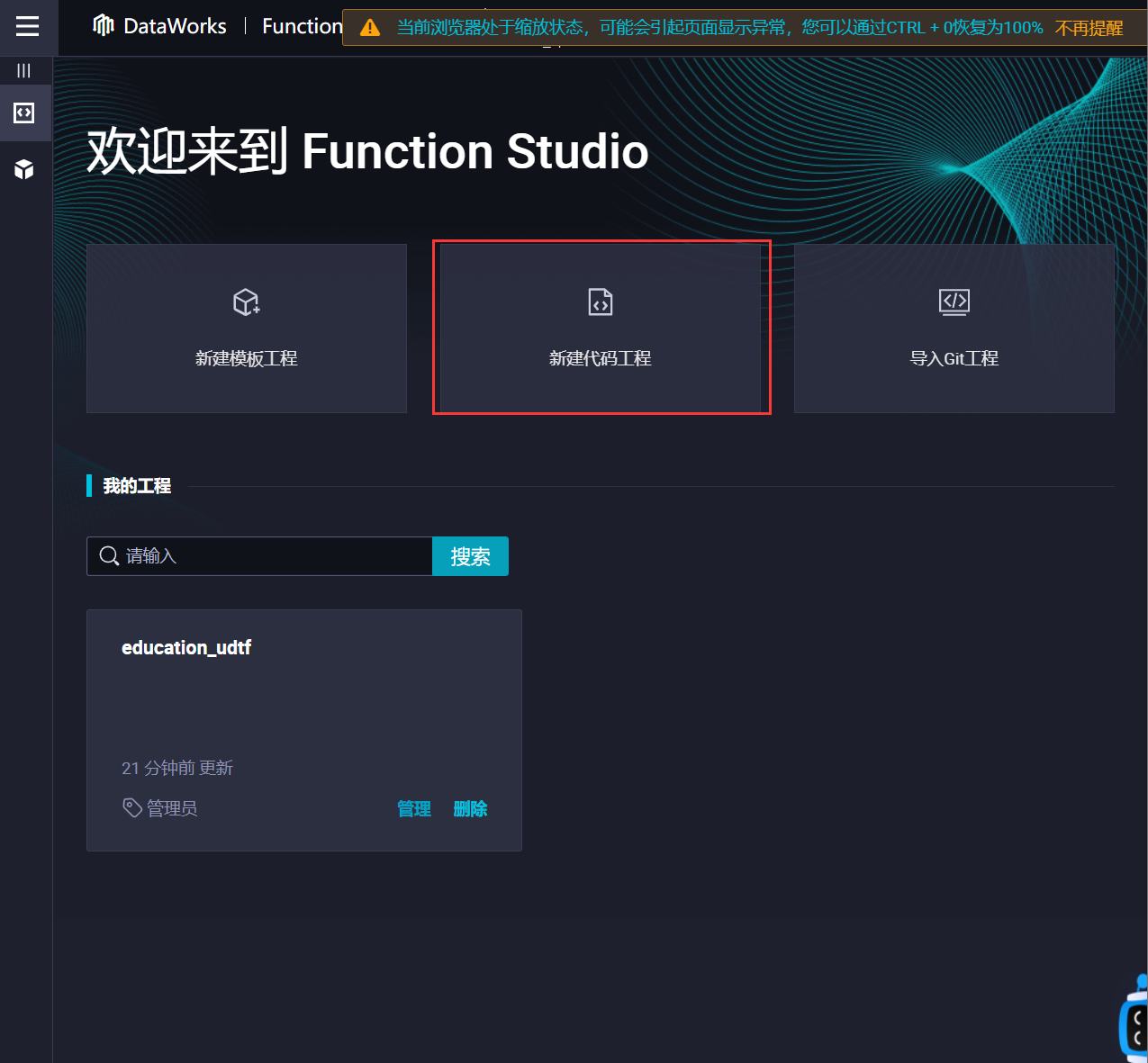

1.打开FunctionStudio界面

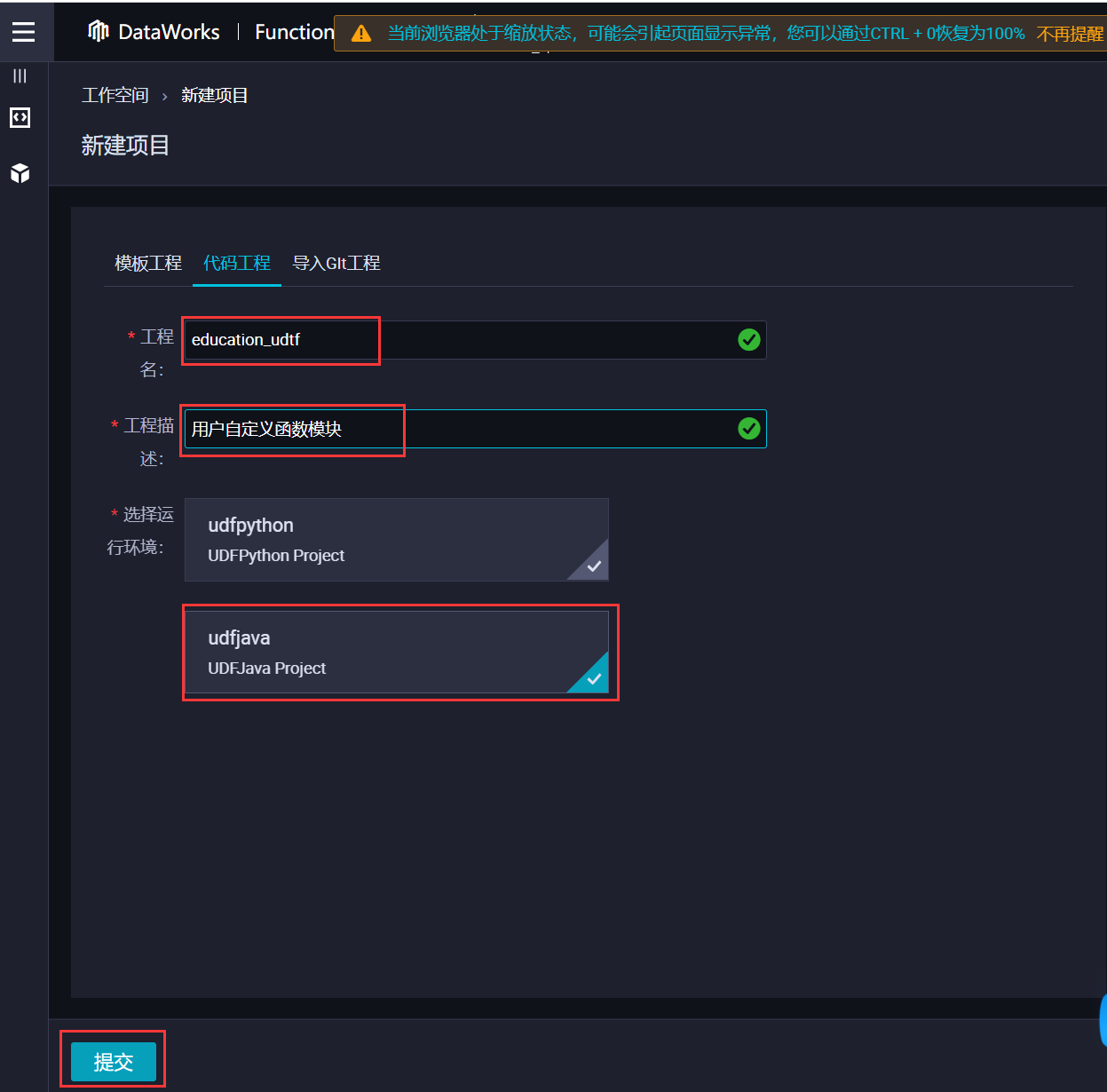

2.新建工程

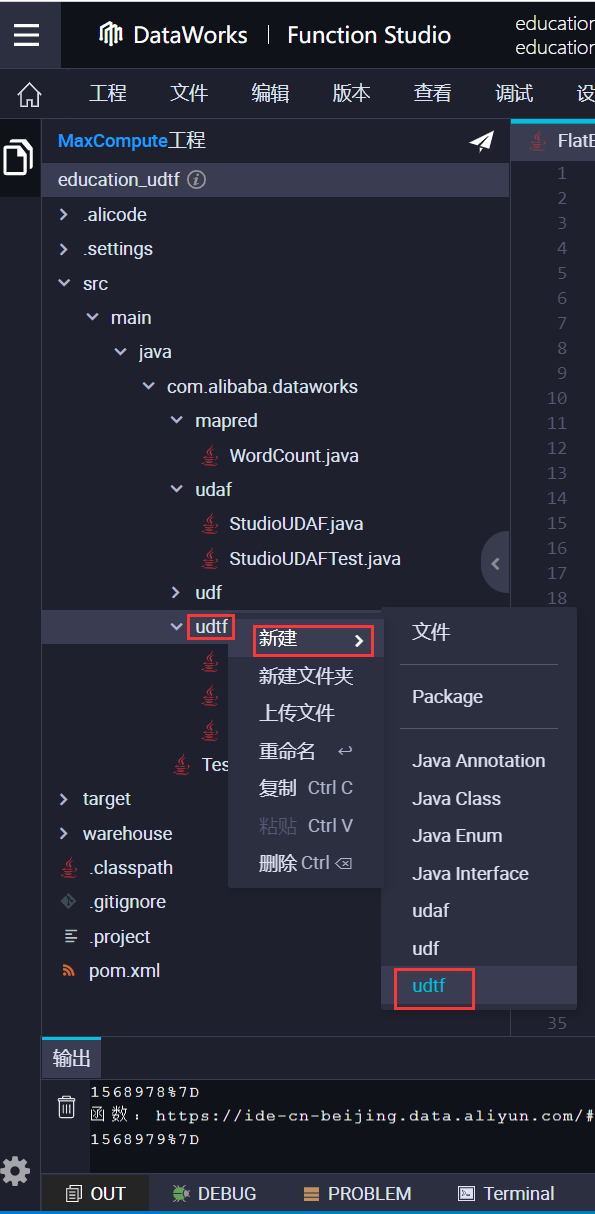

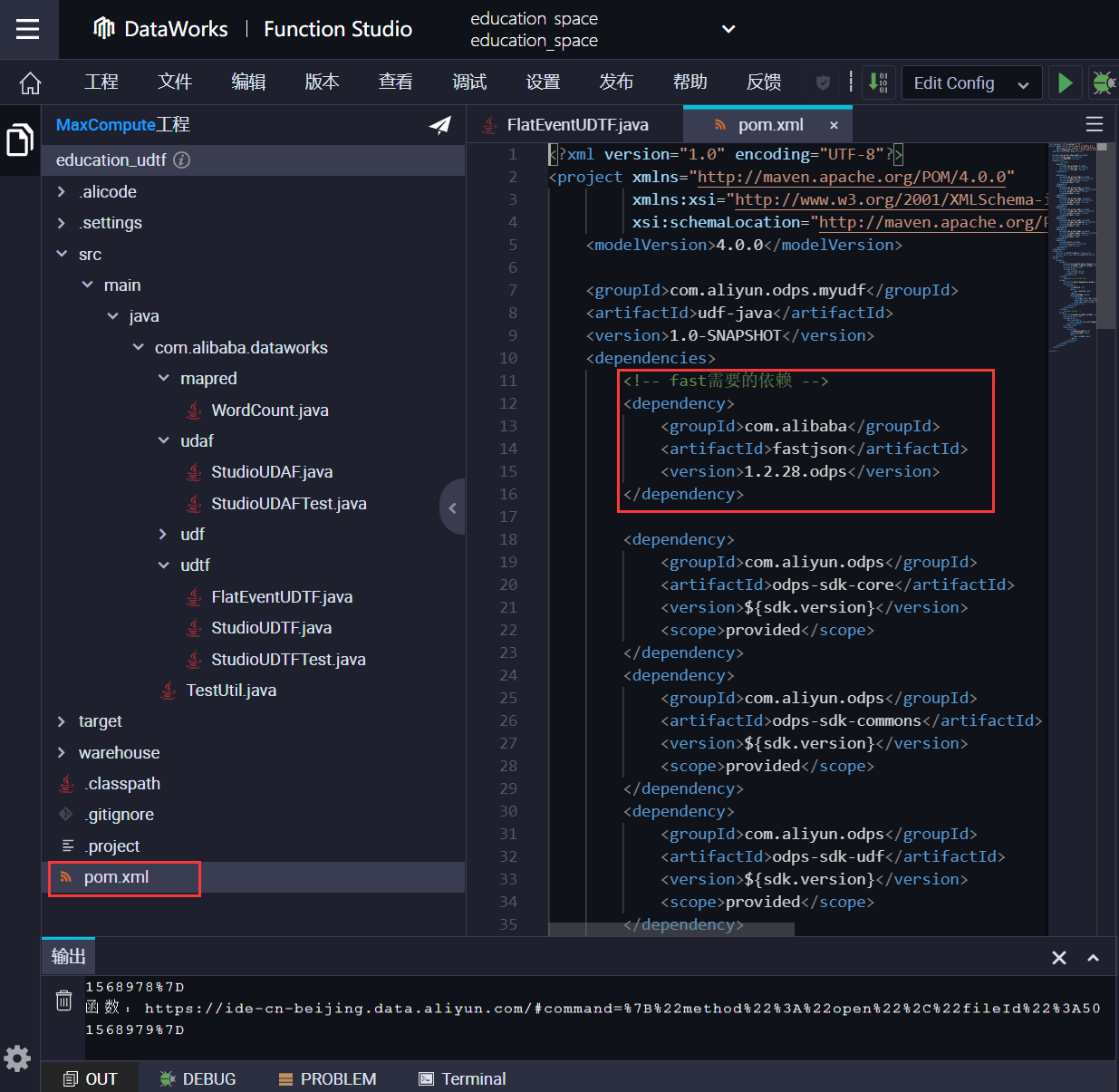

3. 分析日志格式,导入依赖,编写自定义UDTF代码

导入依赖

// 导入依赖

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.28.odps</version>

</dependency>

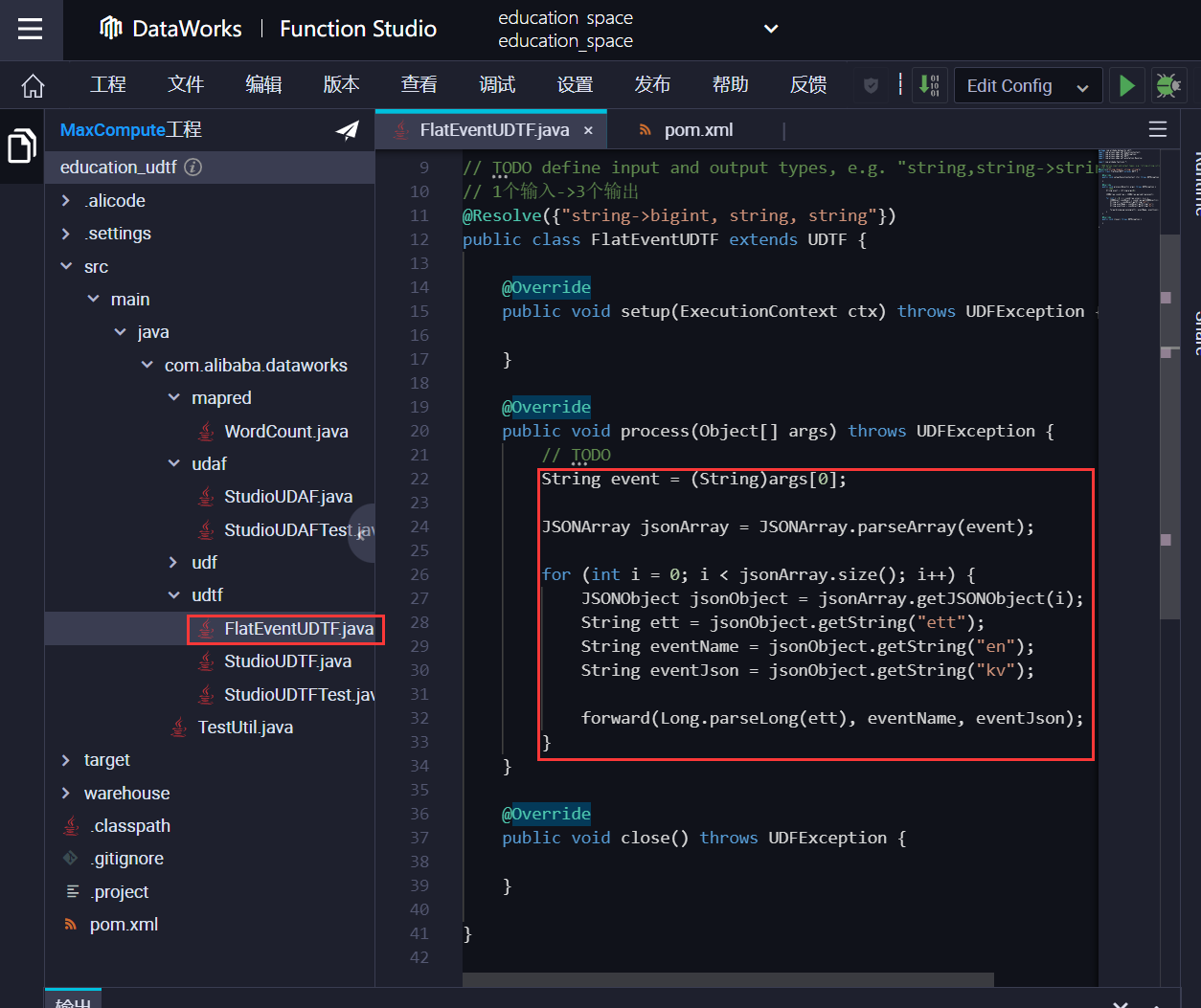

编写UDTF代码

// 编写自定义UDTF代码

String event = (String)args[0];

JSONArray jsonArray = JSONArray.parseArray(event);

for (int i = 0; i < jsonArray.size(); i++) {

JSONObject jsonObject = jsonArray.getJSONObject(i);

String ett = jsonObject.getString("ett");

String eventName = jsonObject.getString("en");

String eventJson = jsonObject.getString("kv");

forward(Long.parseLong(ett), eventName, eventJson);

}

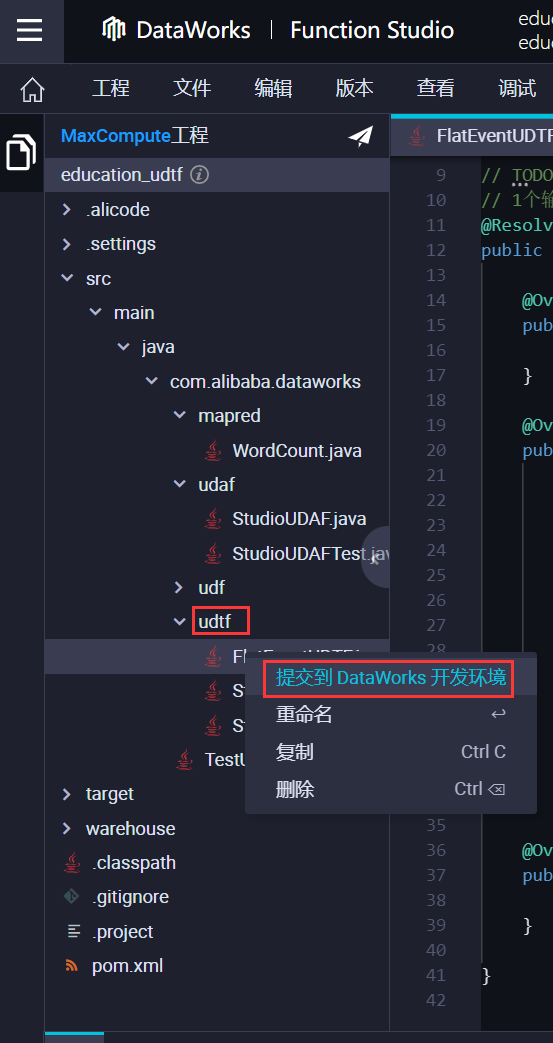

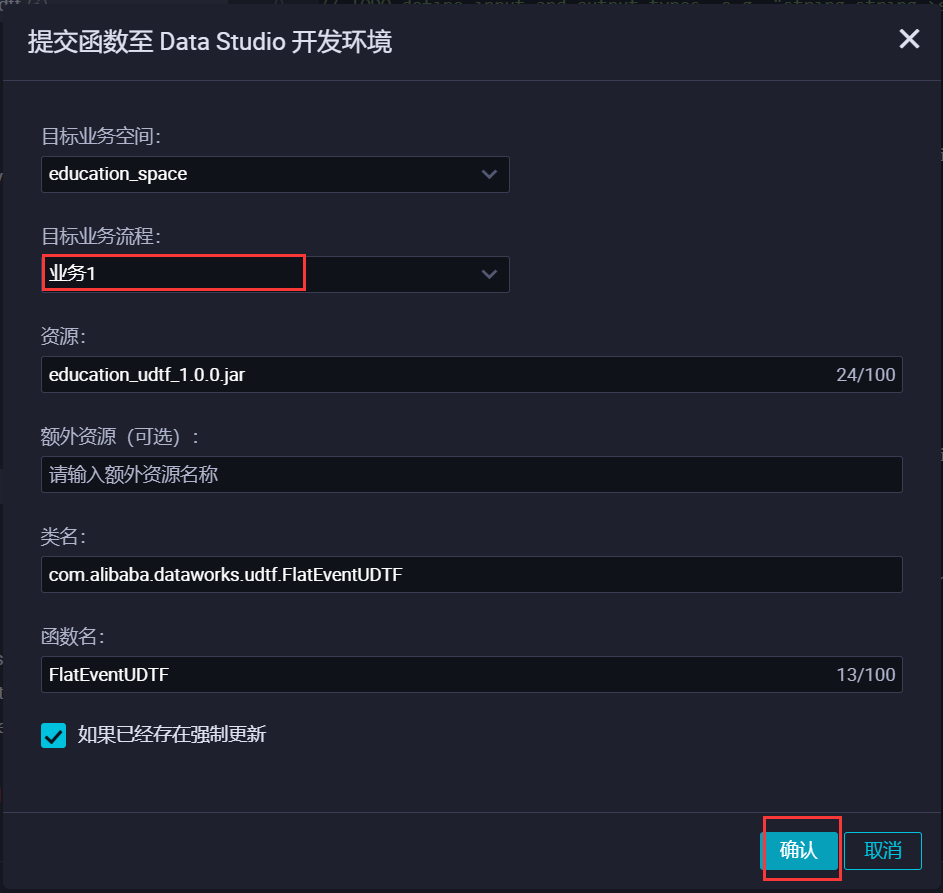

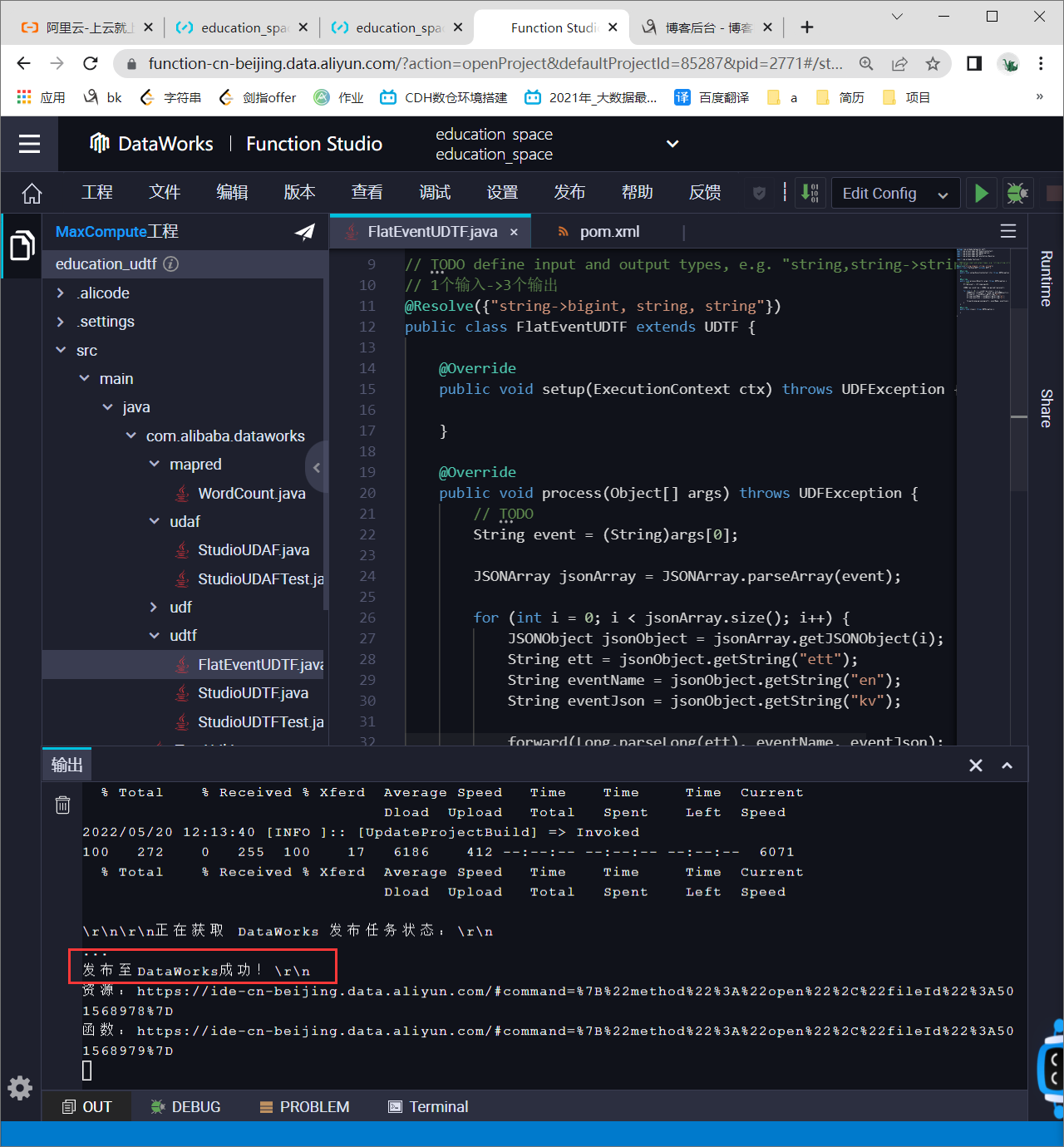

打包部署

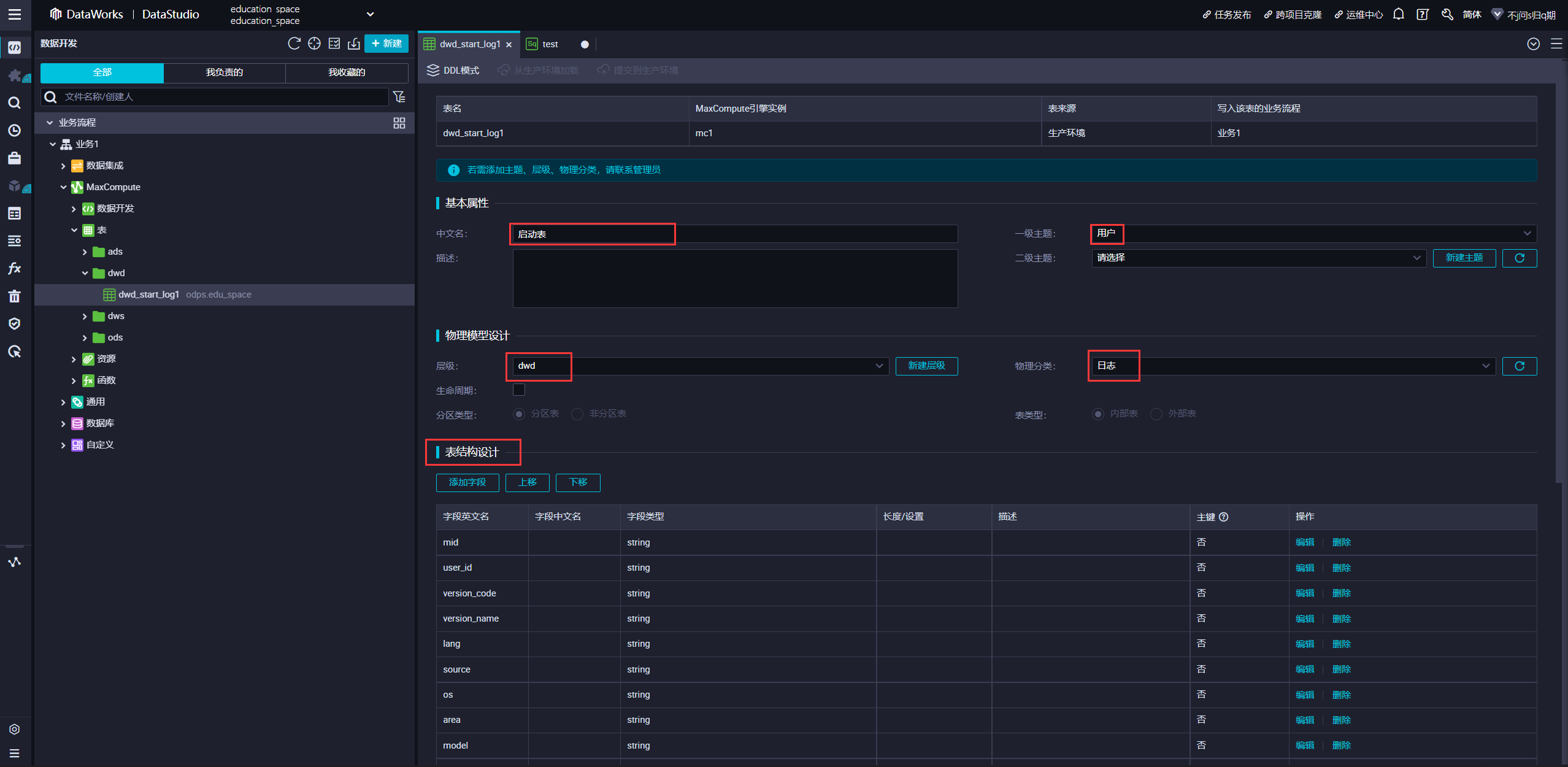

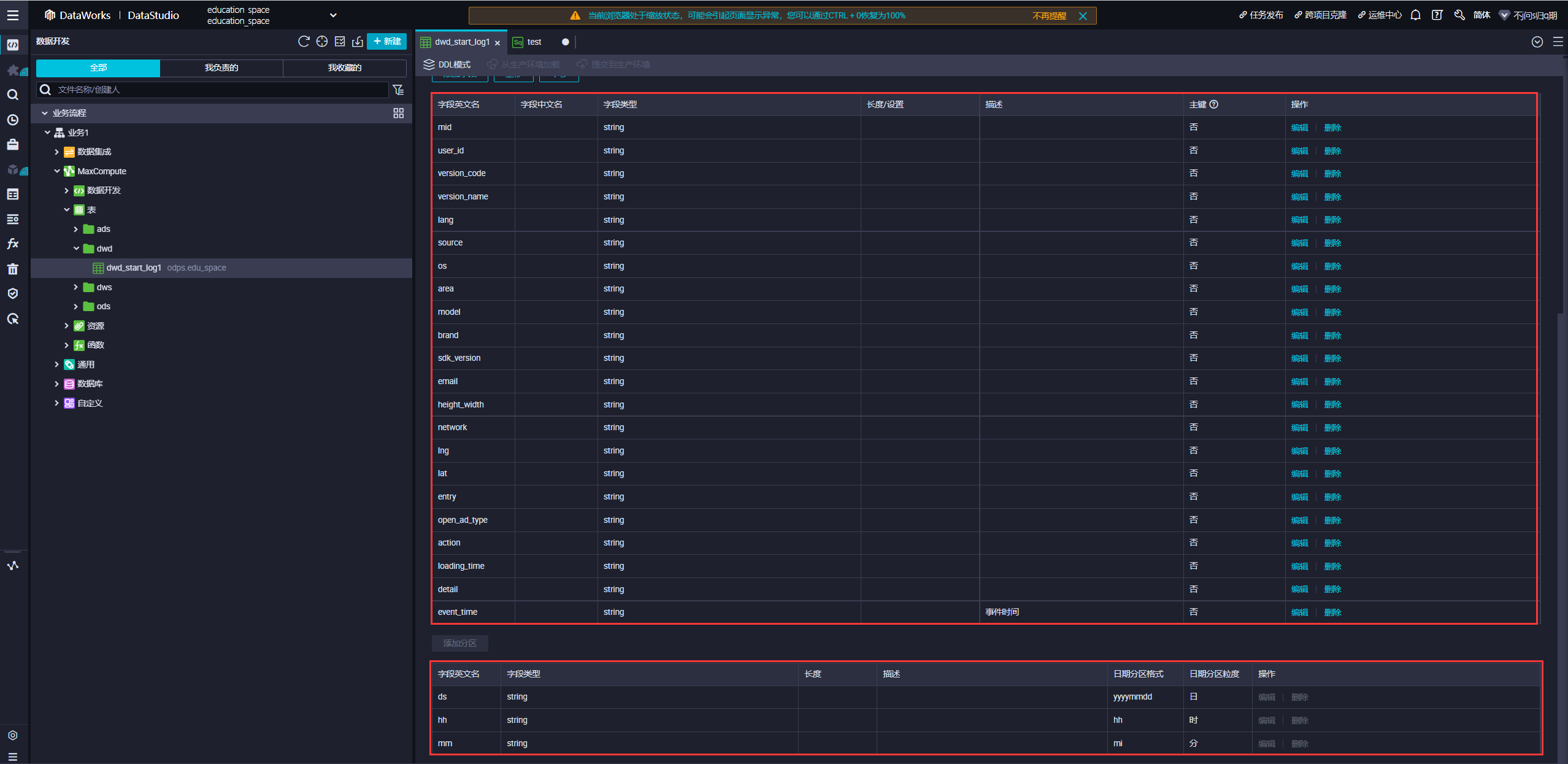

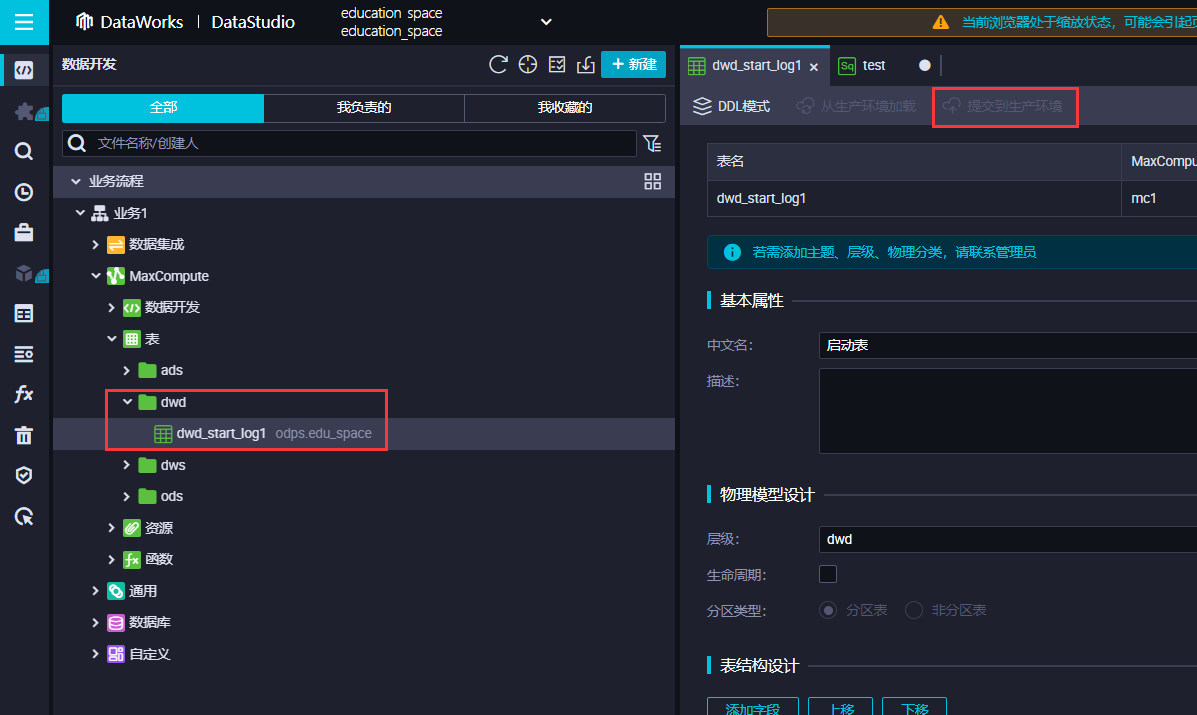

3.DWD层建表,手动提交到生产环境,放到dwd这个文件夹中

1. 手动将 ODS 层数据导入 DWD 层

insert overwrite table dwd_start_log1 PARTITION (ds, hh, mm)

SELECT

-- 公共字段

-- 通过Hive内置函数get_json_object逐个提取为字段

GET_JSON_OBJECT(log_string, '$.cm.mid') mid,

GET_JSON_OBJECT(log_string, '$.cm.uid') user_id,

GET_JSON_OBJECT(log_string,'$.cm.vc') version_code,

GET_JSON_OBJECT(log_string,'$.cm.vn') version_name,

GET_JSON_OBJECT(log_string,'$.cm.l') lang,

GET_JSON_OBJECT(log_string,'$.cm.sr') source,

GET_JSON_OBJECT(log_string,'$.cm.os') os,

GET_JSON_OBJECT(log_string,'$.cm.ar') area,

GET_JSON_OBJECT(log_string,'$.cm.md') model,

GET_JSON_OBJECT(log_string,'$.cm.ba') brand,

GET_JSON_OBJECT(log_string,'$.cm.sv') sdk_version,

GET_JSON_OBJECT(log_string,'$.cm.hw') height_width,

GET_JSON_OBJECT(log_string,'$.cm.g') email,

GET_JSON_OBJECT(log_string,'$.cm.hw') sv,

GET_JSON_OBJECT(log_string,'$.cm.ln') ln,

GET_JSON_OBJECT(log_string,'$.cm.la') la,

-- 事件字段

GET_JSON_OBJECT(event_view.event_json, '$.entry') entry,

GET_JSON_OBJECT(event_view.event_json, '$.loading_time') loading_time,

GET_JSON_OBJECT(event_view.event_json, '$.action') action,

GET_JSON_OBJECT(event_view.event_json, '$.open_ad_type') open_ad_type,

GET_JSON_OBJECT(event_view.event_json, '$.detail') detail,

-- 分区时间

event_view.event_time,

-- 分区值

ds,

hh,

mm

FROM ods_base_log1

LATERAL VIEW FlatEventUDTF(GET_JSON_OBJECT(log_string, '$.et')) event_view as event_time, event_name, event_json

where ds = '20220518' and event_view.event_name='start';

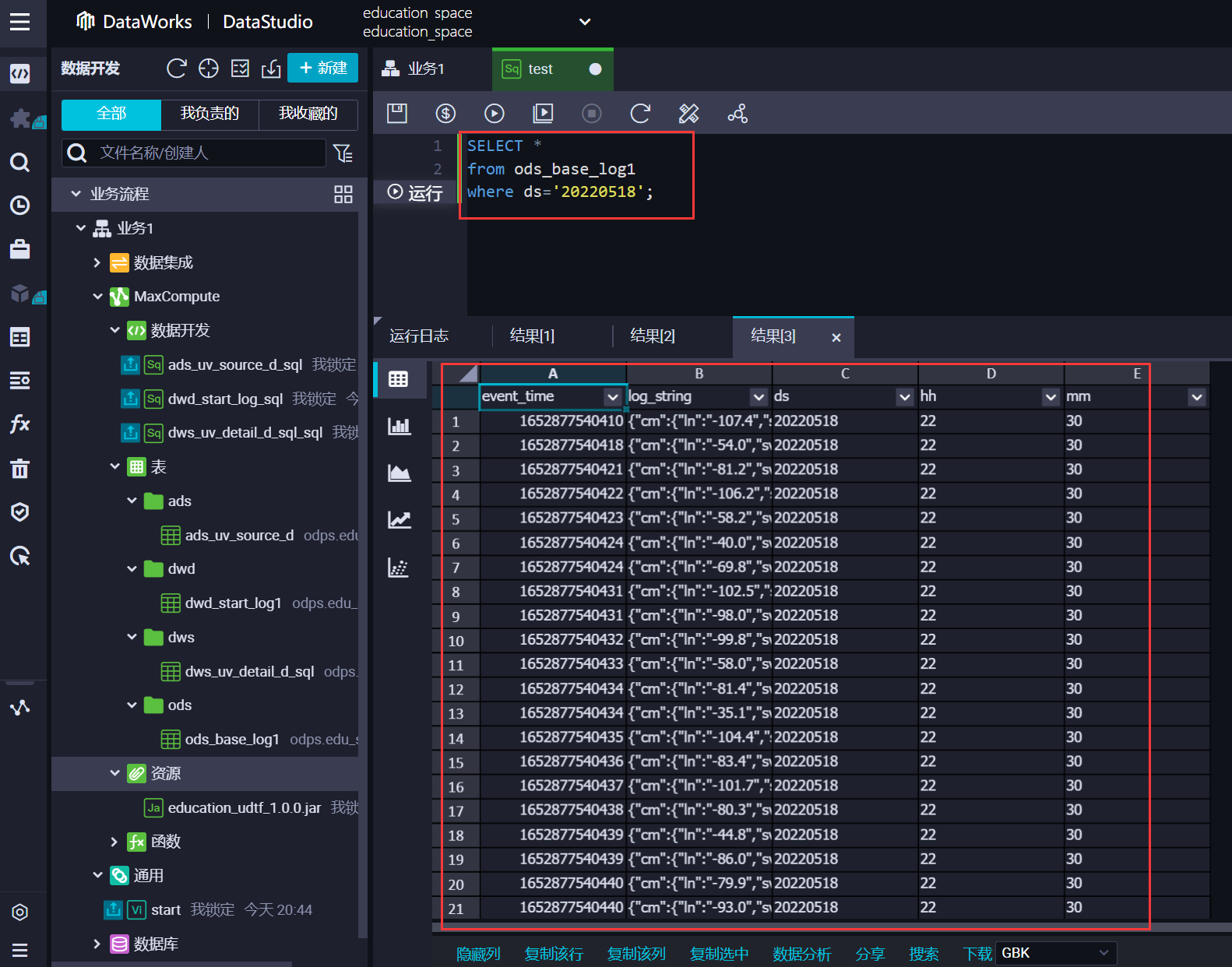

2. 查询导入的数据

select * from dwd_start_log1 where ds='20220518';

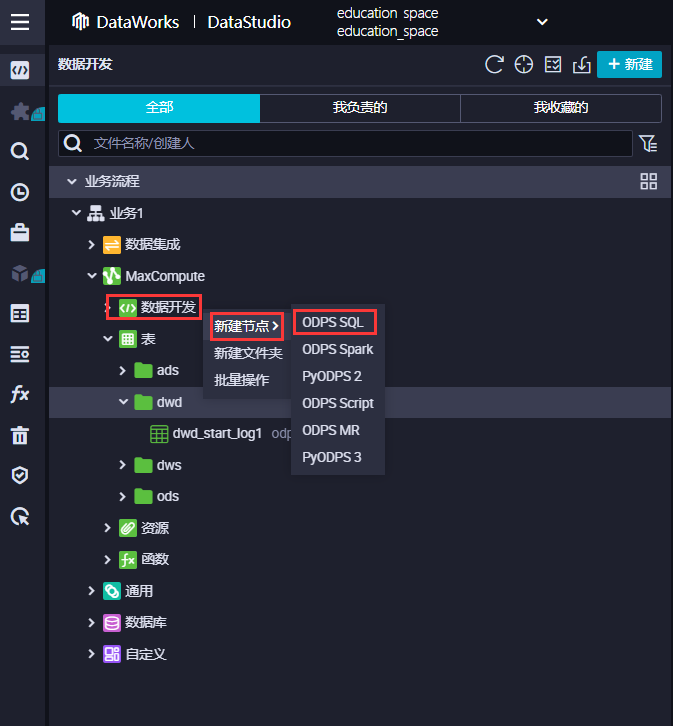

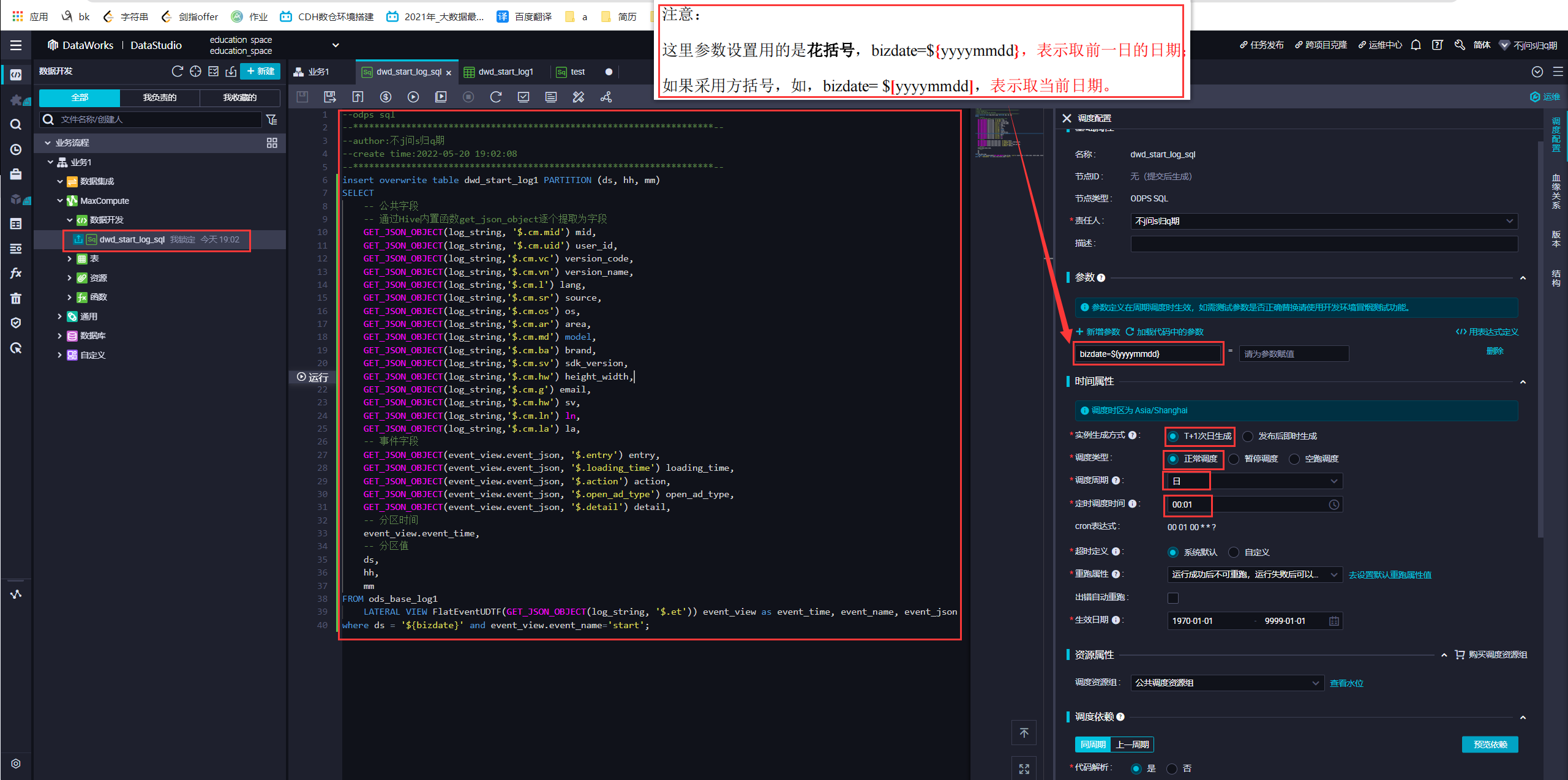

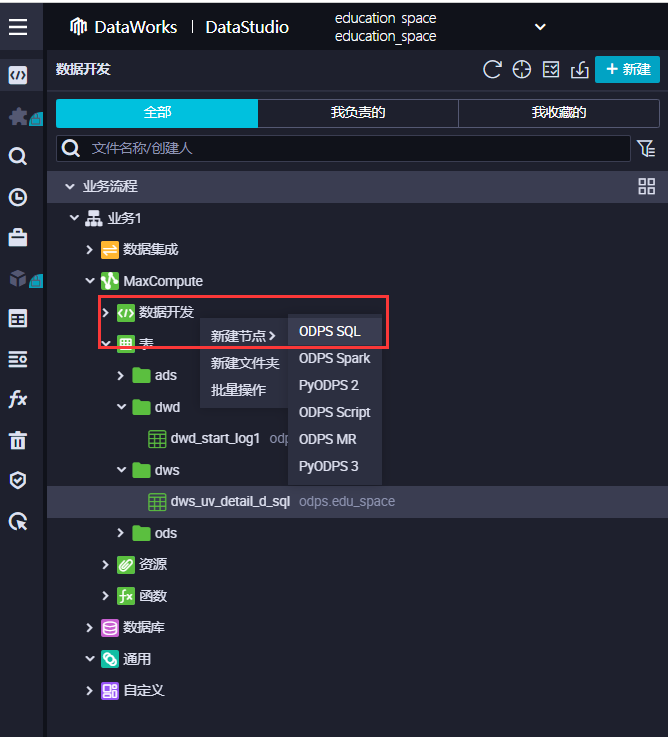

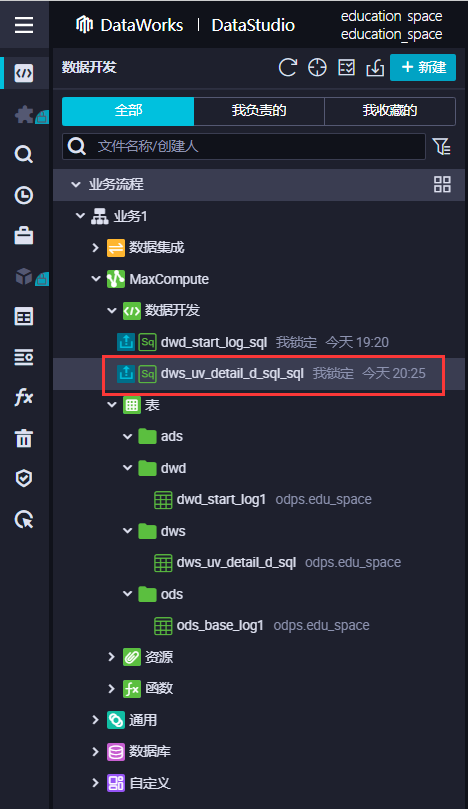

3. ods层到dwd层写一个定时任务

1. 创建一个ODPS节点

insert overwrite table dwd_start_log1 PARTITION (ds, hh, mm)

SELECT

-- 公共字段

-- 通过Hive内置函数get_json_object逐个提取为字段

GET_JSON_OBJECT(log_string, '$.cm.mid') mid,

GET_JSON_OBJECT(log_string, '$.cm.uid') user_id,

GET_JSON_OBJECT(log_string,'$.cm.vc') version_code,

GET_JSON_OBJECT(log_string,'$.cm.vn') version_name,

GET_JSON_OBJECT(log_string,'$.cm.l') lang,

GET_JSON_OBJECT(log_string,'$.cm.sr') source,

GET_JSON_OBJECT(log_string,'$.cm.os') os,

GET_JSON_OBJECT(log_string,'$.cm.ar') area,

GET_JSON_OBJECT(log_string,'$.cm.md') model,

GET_JSON_OBJECT(log_string,'$.cm.ba') brand,

GET_JSON_OBJECT(log_string,'$.cm.sv') sdk_version,

GET_JSON_OBJECT(log_string,'$.cm.hw') height_width,

GET_JSON_OBJECT(log_string,'$.cm.g') email,

GET_JSON_OBJECT(log_string,'$.cm.hw') sv,

GET_JSON_OBJECT(log_string,'$.cm.ln') ln,

GET_JSON_OBJECT(log_string,'$.cm.la') la,

-- 事件字段

GET_JSON_OBJECT(event_view.event_json, '$.entry') entry,

GET_JSON_OBJECT(event_view.event_json, '$.loading_time') loading_time,

GET_JSON_OBJECT(event_view.event_json, '$.action') action,

GET_JSON_OBJECT(event_view.event_json, '$.open_ad_type') open_ad_type,

GET_JSON_OBJECT(event_view.event_json, '$.detail') detail,

-- 分区时间

event_view.event_time,

-- 分区值

ds,

hh,

mm

FROM ods_base_log1

LATERAL VIEW FlatEventUDTF(GET_JSON_OBJECT(log_string, '$.et')) event_view as event_time, event_name, event_json

where ds = '${bizdate}' and event_view.event_name='start';

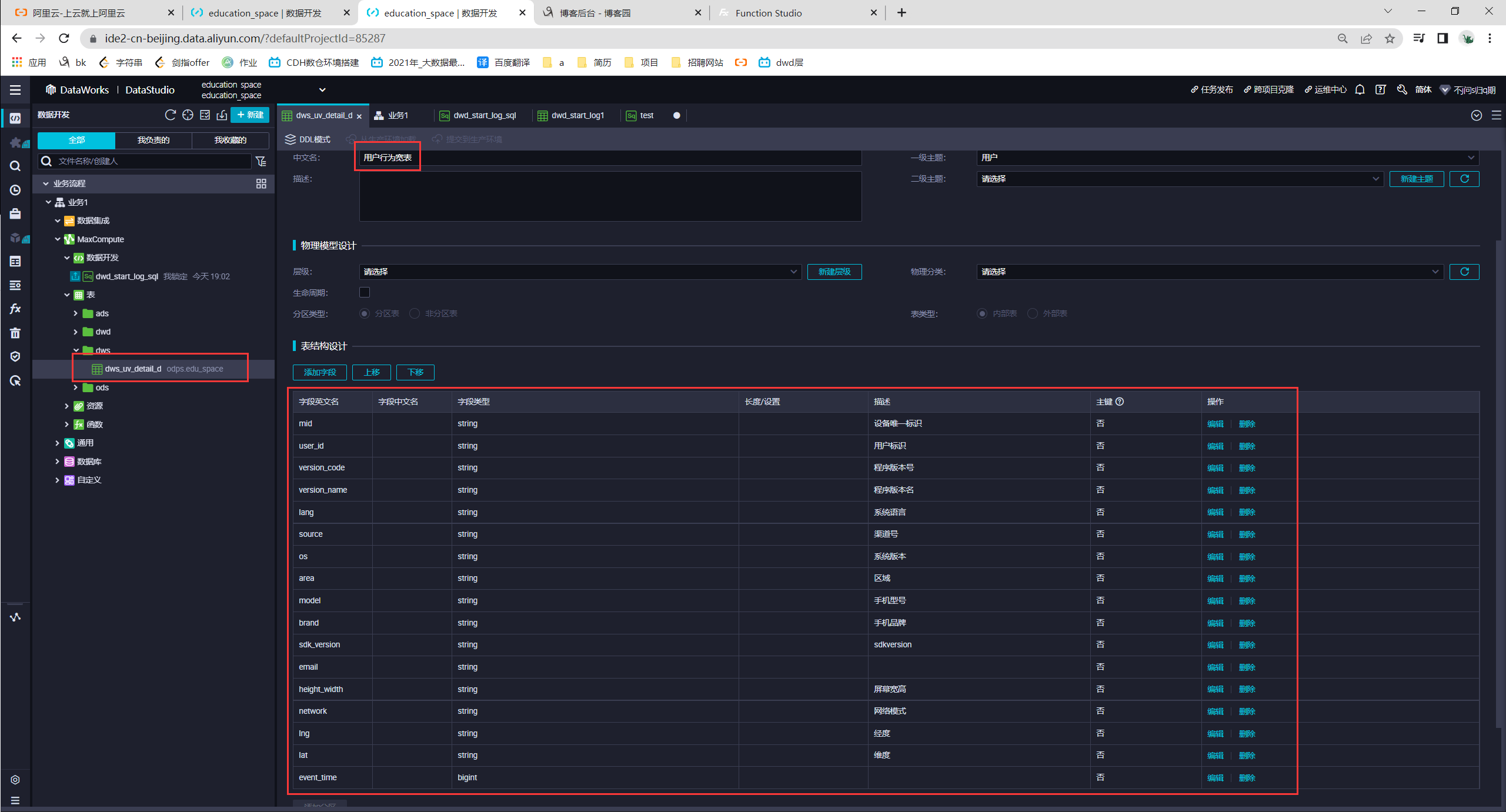

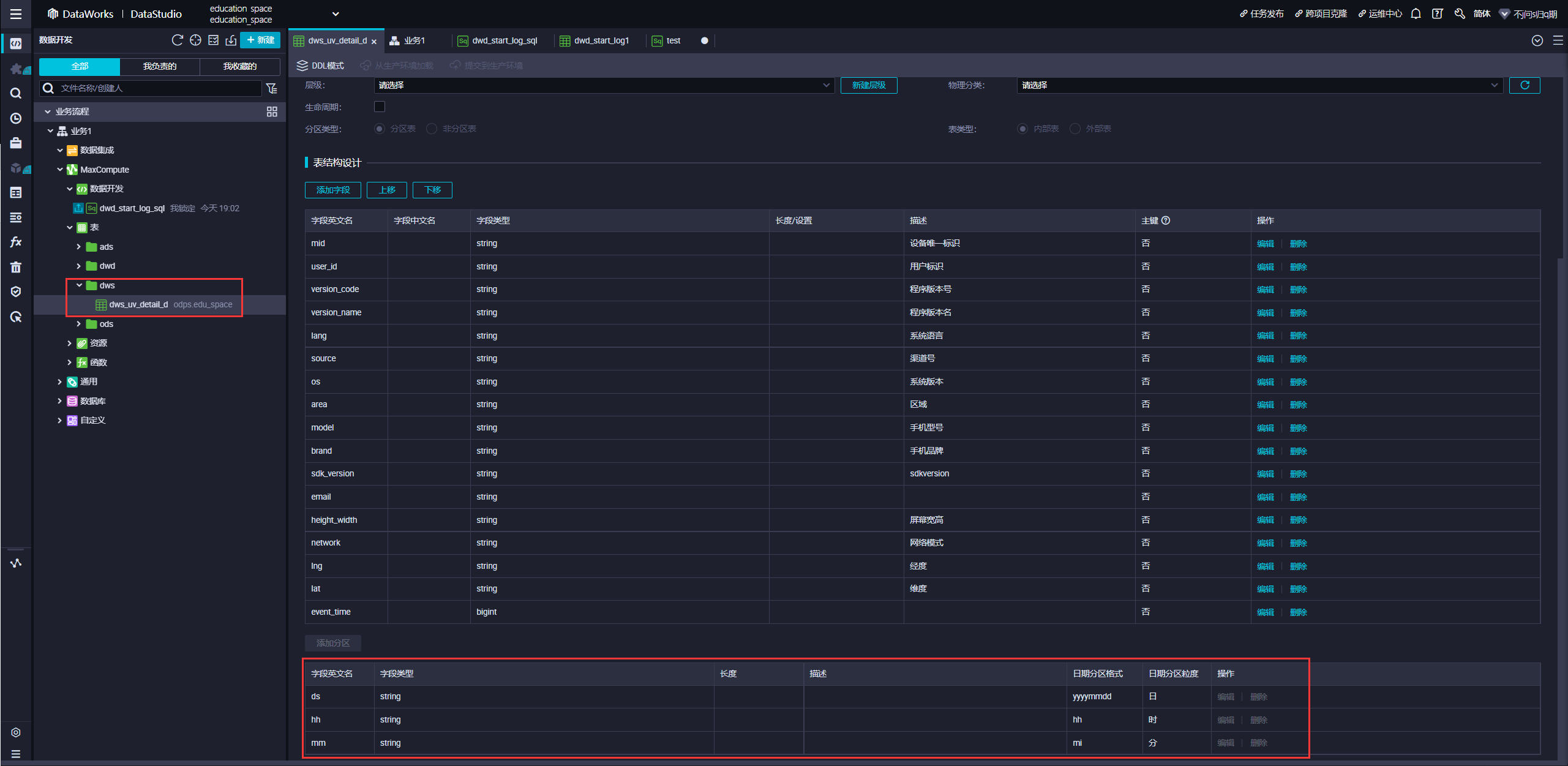

4. DWS层

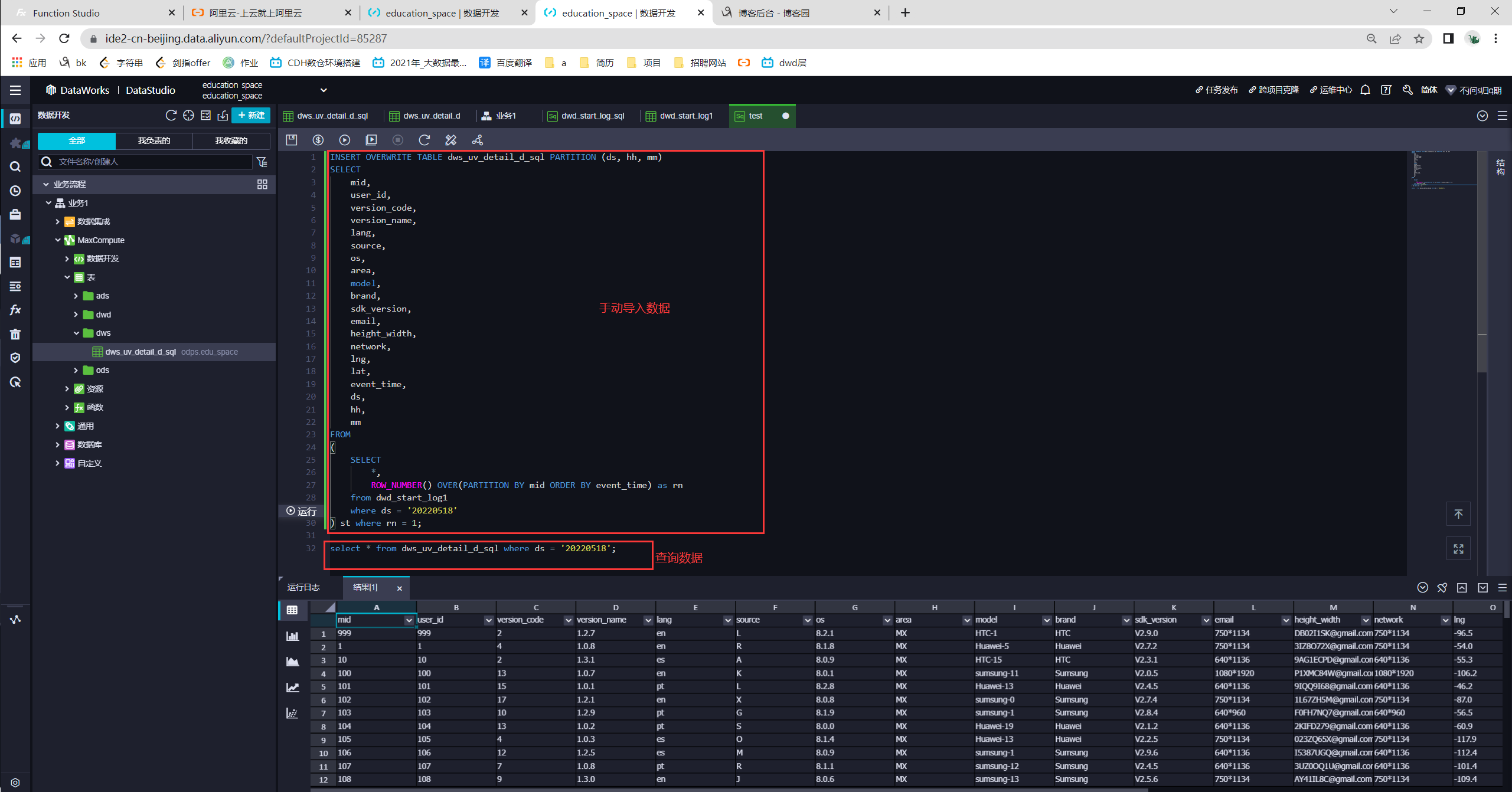

1. 手动将DWD层数据导入DWS层

新建表

导入数据

// 导入数据

INSERT OVERWRITE TABLE dws_uv_detail_d_sql PARTITION (ds, hh, mm)

SELECT

mid,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

email,

height_width,

network,

lng,

lat,

event_time,

ds,

hh,

mm

FROM

(

SELECT

*,

ROW_NUMBER() OVER(PARTITION BY mid ORDER BY event_time) as rn

from dwd_start_log1

where ds = '20220518'

) st where rn = 1;

// 查询数据

select * from dws_uv_detail_d_sql where ds = '20220518';

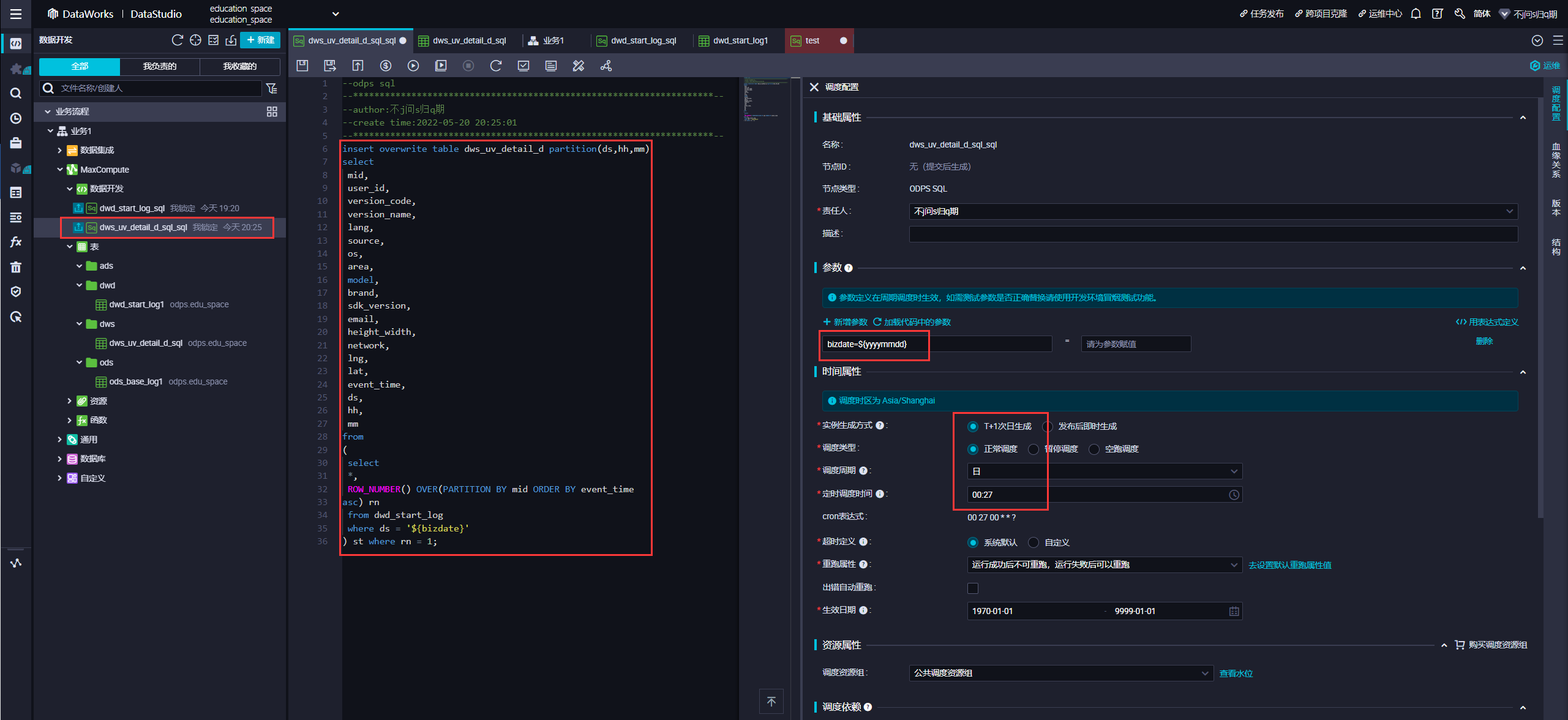

2. ods层到dwd层写一个定时任务

insert overwrite table dws_uv_detail_d partition(ds,hh,mm)

select

mid,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

email,

height_width,

network,

lng,

lat,

event_time,

ds,

hh,

mm

from

(

select

*,

ROW_NUMBER() OVER(PARTITION BY mid ORDER BY event_time

asc) rn

from dwd_start_log

where ds = '${bizdate}'

) st where rn = 1;

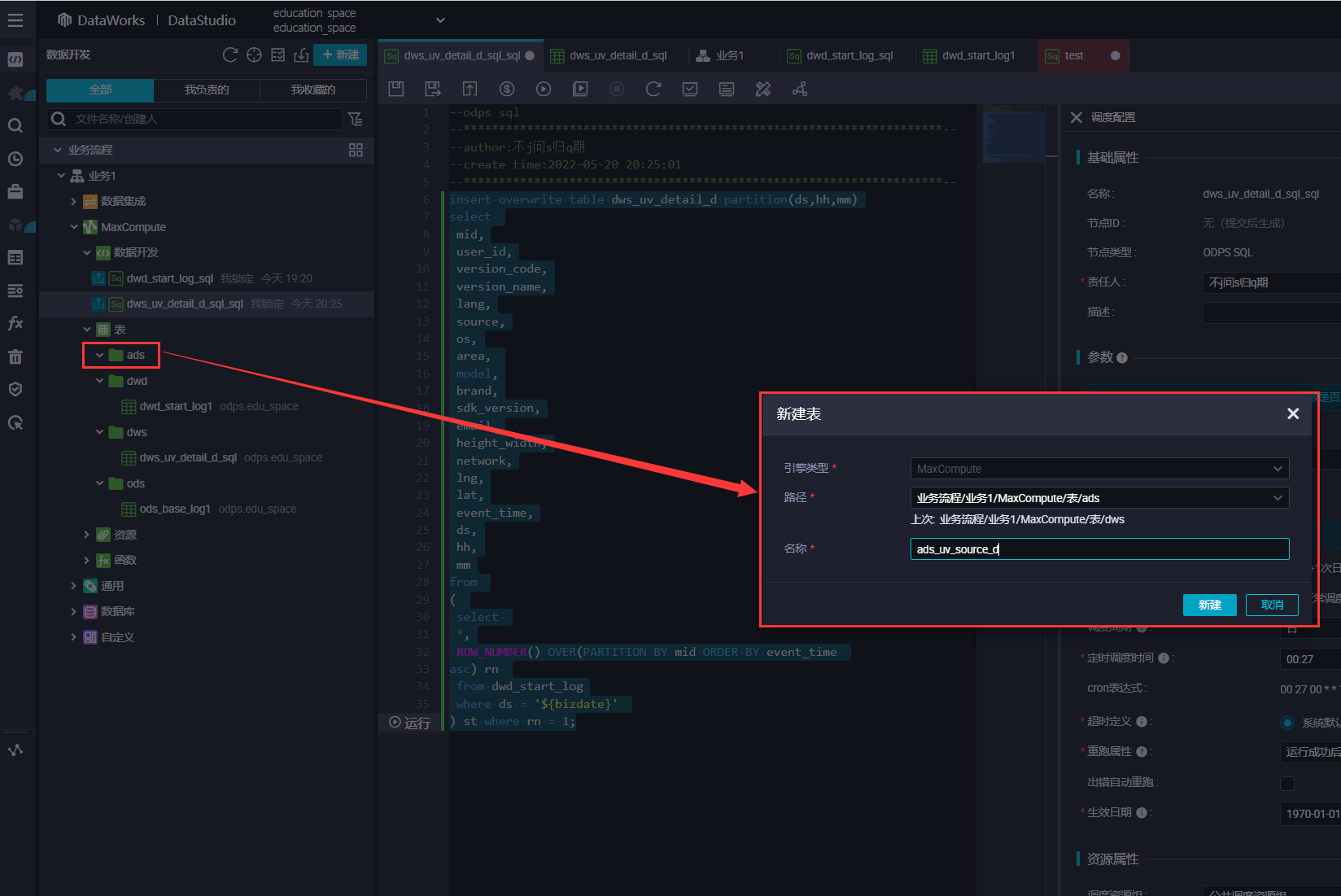

5. ADS层分析业务的指标

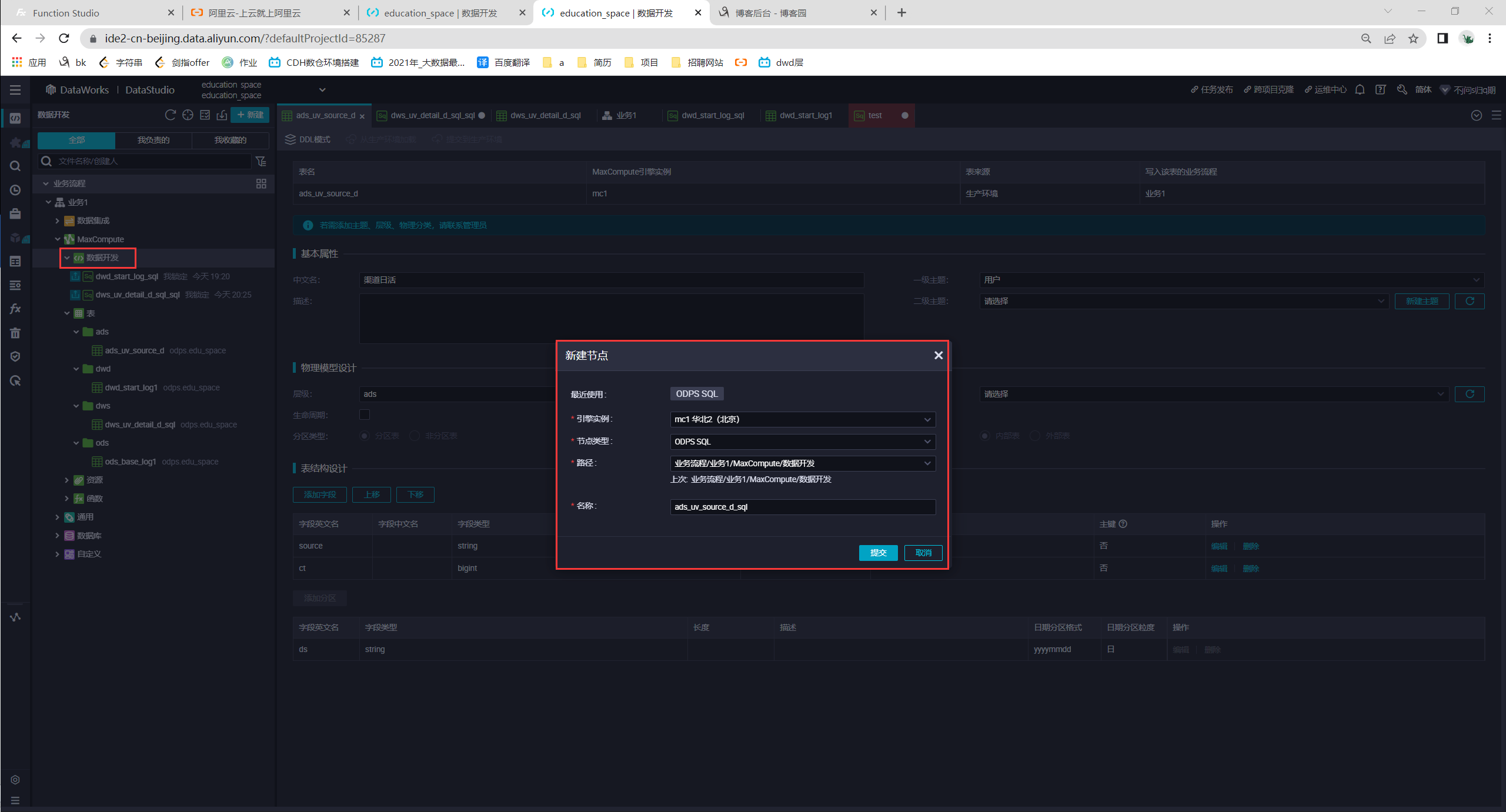

创建表

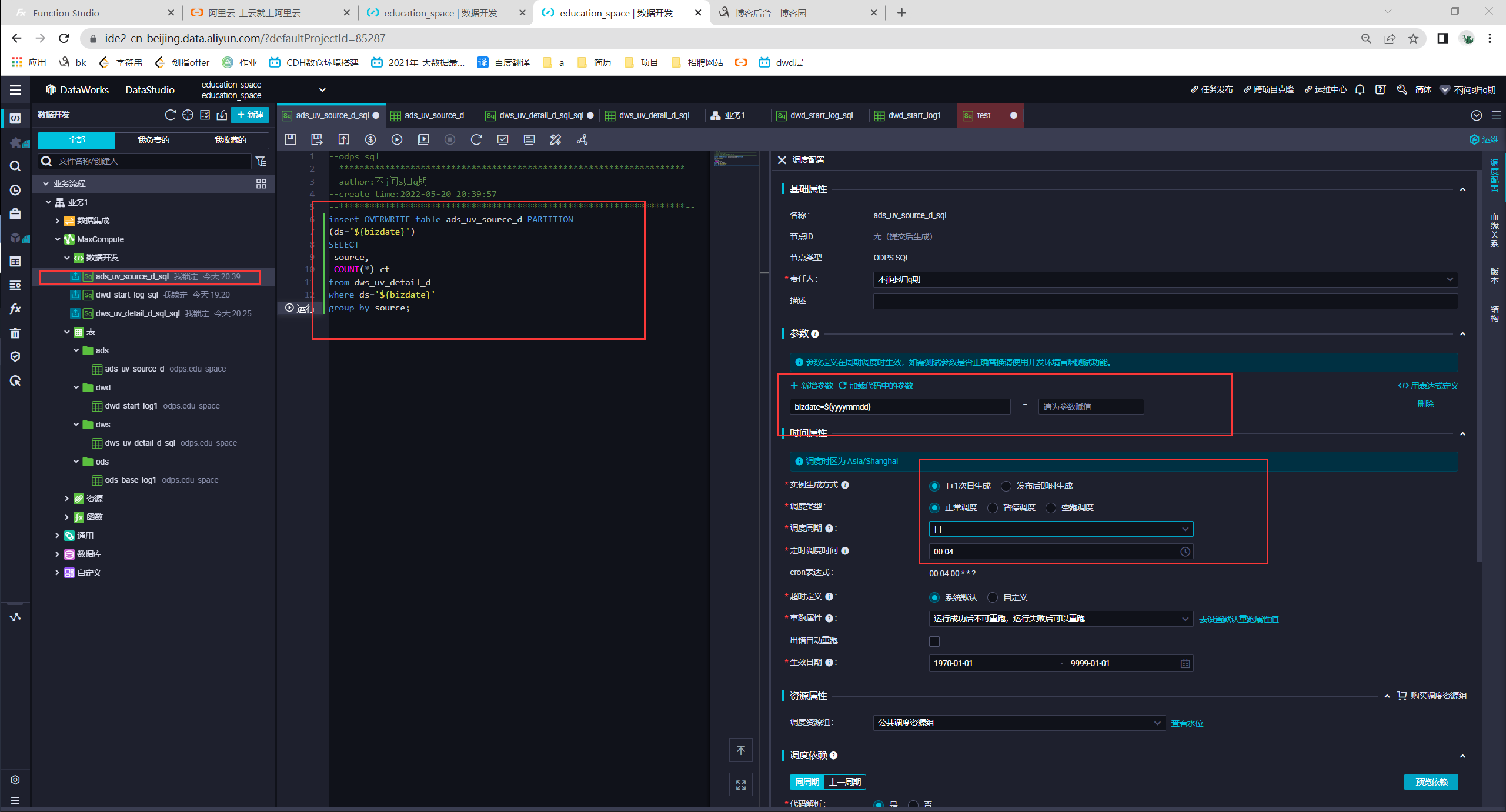

脚本编写

insert OVERWRITE table ads_uv_source_d PARTITION

(ds='${bizdate}')

SELECT

source,

COUNT(*) ct

from dws_uv_detail_d

where ds='${bizdate}'

group by source;

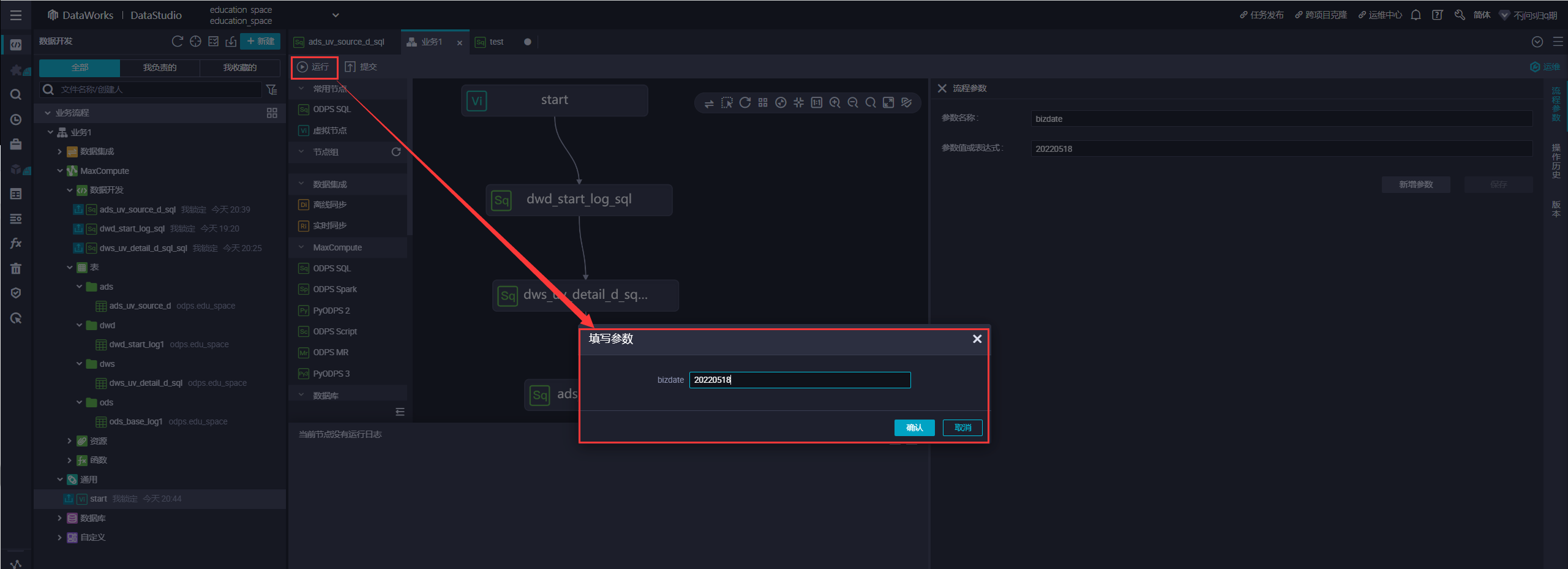

创建虚拟节点,开始执行

运行

成功将数据导入ads层

本文来自博客园,作者:jsqup,转载请注明原文链接:https://www.cnblogs.com/jsqup/p/16290508.html

浙公网安备 33010602011771号

浙公网安备 33010602011771号