Ceph Cluster Installation

1. Prepare the nodes:

| Role | IP | System disk | Data disk |
|---|---|---|---|
| master | 192.168.77.10 | /dev/sda | /dev/sdb |
| node1 | 192.168.77.11 | /dev/sda | /dev/sdb |
| node2 | 192.168.77.12 | /dev/sda | /dev/sdb |

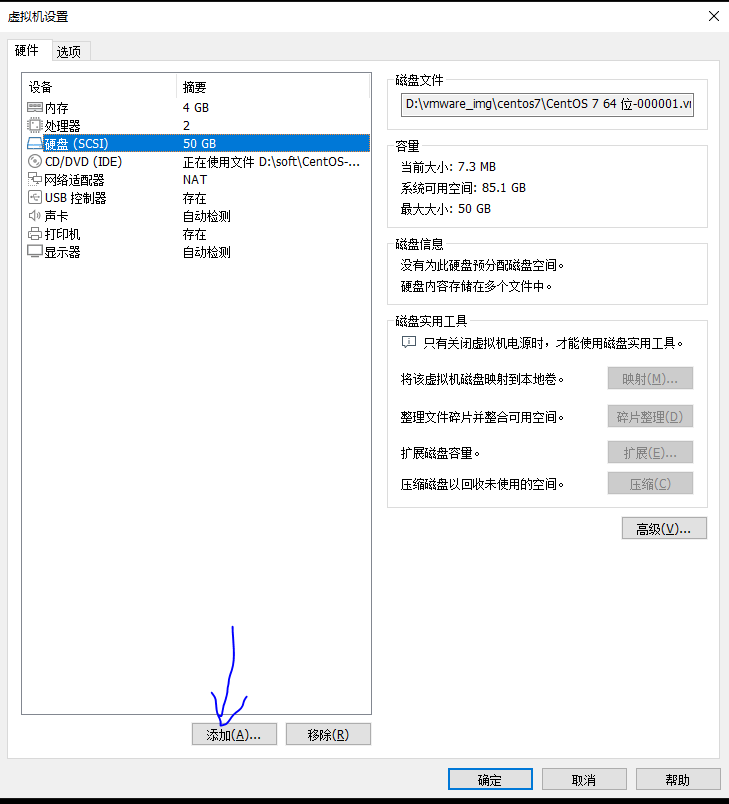

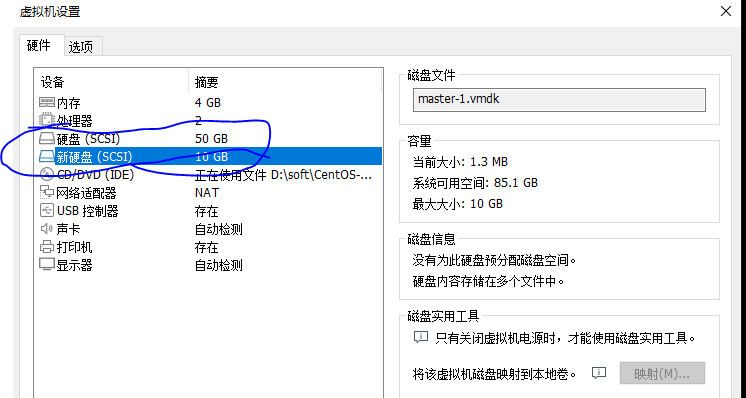

2. Add a data disk to each virtual machine:

Apply and save the change, repeat it on the other two virtual machines, then reboot all three (reboot):

After the reboot, check for the data disk:

    [root@master ~]# ansible k8s -m shell -a "lsblk|grep sdb"
    192.168.77.10 | CHANGED | rc=0 >>
    sdb 8:16 0 10G 0 disk
    192.168.77.11 | CHANGED | rc=0 >>
    sdb 8:16 0 10G 0 disk
    192.168.77.12 | CHANGED | rc=0 >>
    sdb 8:16 0 10G 0 disk

The data disks have been added.

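3. Initialize the environment:

Set the hostnames, populate the hosts file, and configure passwordless SSH between the nodes. Ansible is already configured in this environment, so all of this is already in place:

    [root@master ~]# ansible k8s -m shell -a "hostname"
    192.168.77.10 | CHANGED | rc=0 >>
    master
    192.168.77.11 | CHANGED | rc=0 >>
    node1
    192.168.77.12 | CHANGED | rc=0 >>
    node2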
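For readers who have not prepared the hosts file yet, the following is a minimal sketch of that step. The function names `hosts_entries` and `add_missing_hosts` are hypothetical helpers introduced here for illustration; the IP addresses and hostnames are the ones from the node table in section 1.

```bash
# Print the hosts entries for the three nodes from section 1.
hosts_entries() {
    cat <<'EOF'
192.168.77.10 master
192.168.77.11 node1
192.168.77.12 node2
EOF
}

# Append only the entries that are missing from the target file
# (defaults to /etc/hosts), so the function can be run repeatedly.
add_missing_hosts() {
    target="${1:-/etc/hosts}"
    hosts_entries | while read -r ip name; do
        # Note: the dots in the IP are treated as regex wildcards, which is
        # loose but sufficient for this sketch.
        grep -qE "^${ip}[[:space:]]+${name}([[:space:]]|\$)" "$target" 2>/dev/null \
            || printf '%s %s\n' "$ip" "$name" >> "$target"
    done
}
```

Running `add_missing_hosts` as root on each node (or pushing the resulting file with the Ansible copy module) should leave every node able to resolve master, node1, and node2 by name, which `ceph-deploy` relies on later.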

Disable the firewall and SELinux:

    [root@master ~]# ansible k8s -m shell -a "systemctl stop firewalld && systemctl disable firewalld && systemctl status firewalld"

    [root@master ~]# ansible k8s -m shell -a "sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config"
    [root@master ~]# ansible k8s -m shell -a "setenforce 0"

Note: the clocks on all three nodes must be kept in sync.
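Ceph monitors raise a clock skew warning when their clocks drift apart by more than `mon_clock_drift_allowed`, which defaults to 0.05 seconds. The sketch below checks a measured offset against that limit. `clock_offset_ok` is a hypothetical helper, and the assumption that the offset comes from something like `chronyc tracking` is mine; the original text does not say which time service is in use.

```bash
# Return success (0) if the absolute clock offset, in seconds, is within
# the allowed limit. The limit defaults to 0.05 s, matching Ceph's default
# mon_clock_drift_allowed.
clock_offset_ok() {
    awk -v off="$1" -v max="${2:-0.05}" 'BEGIN {
        if (off < 0) off = -off      # compare the magnitude only
        exit !(off <= max)           # exit status 0 means "within limit"
    }'
}

# Example (assumes chrony is the time service on the nodes):
#   ansible k8s -m shell -a "chronyc tracking | grep 'System time'"
# then feed the reported offset to the check:
#   clock_offset_ok 0.000012 && echo "clock is close enough"
```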

4. Configure the Ceph repository:

    [root@master ~]# vim /etc/yum.repos.d/cephcentos7.repo
    [root@master ~]# ansible k8s -m copy -a "src=/etc/yum.repos.d/cephcentos7.repo dest=/etc/yum.repos.d/cephcentos7.repo"

    [root@master cephmgrdashboard]# cat /etc/yum.repos.d/cephcentos7.repo
    [Ceph]
    name=Ceph packages for $basearch
    baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/$basearch
    enabled=1
    gpgcheck=0
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
    priority=1
    [Ceph-noarch]
    name=Ceph noarch packages
    baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch
    enabled=1
    gpgcheck=0
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
    priority=1
    [ceph-source]
    name=Ceph source packages
    baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/SRPMS
    enabled=1
    gpgcheck=0
    type=rpm-md
    gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
    priority=1
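Instead of editing the repo file by hand in vim, it could be generated from a script. The sketch below is one possible way to do that; `write_ceph_repo` is a hypothetical helper, and its `name=` lines are simplified compared with the file shown above. The mirror URL, sections, and options are taken from that file.

```bash
# Write a Ceph yum repo file for the given release name (e.g. nautilus)
# to the given path. Mirrors the three sections used in this guide.
write_ceph_repo() {
    release="$1"; out="$2"
    base="http://mirrors.aliyun.com/ceph/rpm-${release}/el7"
    : > "$out"                                   # start from an empty file
    # Each entry is "<section id>:<path under the release directory>".
    # $basearch stays literal so that yum expands it, not the shell.
    for entry in 'Ceph:$basearch' 'Ceph-noarch:noarch' 'ceph-source:SRPMS'; do
        id="${entry%%:*}"; path="${entry#*:}"
        cat >> "$out" <<EOF
[${id}]
name=Ceph ${path} packages
baseurl=${base}/${path}
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
priority=1

EOF
    done
}

# Example: write_ceph_repo nautilus /etc/yum.repos.d/cephcentos7.repo
```

Parameterizing the release name keeps the three `baseurl` lines consistent with each other, which is the easiest thing to get wrong when the file is edited manually.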

5. Deploy the Ceph cluster. The RPMs are first downloaded to a local directory and then installed from there, so they can be reused later for offline installs:

    [root@master ~]# mkdir /root/ceph_deploy/
    [root@master ~]# yum -y install --downloadonly --downloaddir=/root/ceph_deploy/ ceph-deploy python-pip
    [root@master ~]# cd ceph_deploy/
    [root@master ceph_deploy]# ll
    total 2000
    -rw-r--r--. 1 root root 292428 Apr 10 2020 ceph-deploy-2.0.1-0.noarch.rpm
    -rw-r--r--. 1 root root 1752855 Sep 3 2020 python2-pip-8.1.2-14.el7.noarch.rpm
    [root@master ceph_deploy]# yum localinstall *.rpm -y

Install ceph and ceph-radosgw:

    [root@master ceph_deploy]# ansible k8s -m shell -a "mkdir -p /root/ceph_deploy/pkg"
    [root@master ceph_deploy]# ansible k8s -m shell -a "yum -y install --downloadonly --downloaddir=/root/ceph_deploy/pkg ceph ceph-radosgw"
    [root@master pkg]# ansible k8s -m shell -a "cd /root/ceph_deploy/pkg;yum localinstall *.rpm -y"

Create the Ceph cluster:

    [root@master pkg]# mkdir /root/ceph_deploy/cephcluster
    [root@master pkg]# cd /root/ceph_deploy/cephcluster

    [root@master cephcluster]# ceph-deploy new master node1 node2
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy new master node1 node2
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] func : <function new at 0x7f6904d2ade8>
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f6904d27f80>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] ssh_copykey : True
    [ceph_deploy.cli][INFO ] mon : ['master', 'node1', 'node2']
    [ceph_deploy.cli][INFO ] public_network : None
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] cluster_network : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] fsid : None
    [ceph_deploy.new][DEBUG ] Creating new cluster named ceph
    [ceph_deploy.new][INFO ] making sure passwordless SSH succeeds
    [master][DEBUG ] connected to host: master
    [master][DEBUG ] detect platform information from remote host
    [master][DEBUG ] detect machine type
    [master][DEBUG ] find the location of an executable
    [master][INFO ] Running command: /usr/sbin/ip link show
    Exception KeyError: (7,) in <function remove at 0x7f690419d7d0> ignored
    [master][INFO ] Running command: /usr/sbin/ip addr show
    [master][DEBUG ] IP addresses found: [u'192.168.77.10']
    [ceph_deploy.new][DEBUG ] Resolving host master
    [ceph_deploy.new][DEBUG ] Monitor master at 192.168.77.10
    [ceph_deploy.new][INFO ] making sure passwordless SSH succeeds
    [node1][DEBUG ] connected to host: master
    [node1][INFO ] Running command: ssh -CT -o BatchMode=yes node1
    [node1][DEBUG ] connected to host: node1
    [node1][DEBUG ] detect platform information from remote host
    [node1][DEBUG ] detect machine type
    [node1][DEBUG ] find the location of an executable
    [node1][INFO ] Running command: /usr/sbin/ip link show
    [node1][INFO ] Running command: /usr/sbin/ip addr show
    [node1][DEBUG ] IP addresses found: [u'192.168.77.11']
    [ceph_deploy.new][DEBUG ] Resolving host node1
    [ceph_deploy.new][DEBUG ] Monitor node1 at 192.168.77.11
    [ceph_deploy.new][INFO ] making sure passwordless SSH succeeds
    [node2][DEBUG ] connected to host: master
    [node2][INFO ] Running command: ssh -CT -o BatchMode=yes node2
    [node2][DEBUG ] connected to host: node2
    [node2][DEBUG ] detect platform information from remote host
    [node2][DEBUG ] detect machine type
    [node2][DEBUG ] find the location of an executable
    [node2][INFO ] Running command: /usr/sbin/ip link show
    [node2][INFO ] Running command: /usr/sbin/ip addr show
    [node2][DEBUG ] IP addresses found: [u'192.168.77.12']
    [ceph_deploy.new][DEBUG ] Resolving host node2
    [ceph_deploy.new][DEBUG ] Monitor node2 at 192.168.77.12
    [ceph_deploy.new][DEBUG ] Monitor initial members are ['master', 'node1', 'node2']
    [ceph_deploy.new][DEBUG ] Monitor addrs are ['192.168.77.10', '192.168.77.11', '192.168.77.12']
    [ceph_deploy.new][DEBUG ] Creating a random mon key...
    [ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...
    [ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

    [root@master cephcluster]# ll
    total 16
    -rw-r--r--. 1 root root 239 Oct 24 05:42 ceph.conf
    -rw-r--r--. 1 root root 4876 Oct 24 05:42 ceph-deploy-ceph.log
    -rw-------. 1 root root 73 Oct 24 05:42 ceph.mon.keyring

View the generated configuration file:

    [root@master cephcluster]# cat ceph.conf
    [global]
    fsid = 4d95bda1-1894-4b87-be64-ca65a66376c8
    mon_initial_members = master, node1, node2
    mon_host = 192.168.77.10,192.168.77.11,192.168.77.12
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

Edit ceph.conf to add the network settings (the last two lines below):

    [root@master cephcluster]# cat ceph.conf
    [global]
    fsid = 4d95bda1-1894-4b87-be64-ca65a66376c8
    mon_initial_members = master, node1, node2
    mon_host = 192.168.77.10,192.168.77.11,192.168.77.12
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    public network = 192.168.77.0/24
    cluster network = 192.168.77.0/24
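Every address in `mon_host` has to fall inside the `public network` range, otherwise the monitors will not bind where clients expect them. As a quick sanity check before running the next step, a sketch along the following lines could be used. `ip_to_int` and `ip_in_cidr` are hypothetical helpers written for this guide (IPv4 only), not Ceph tools.

```bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    IFS=. read -r a b c d <<EOF
$1
EOF
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return success (0) if address $1 lies inside CIDR range $2.
ip_in_cidr() {
    net="${2%/*}"; bits="${2#*/}"
    # Build the netmask from the prefix length, e.g. /24 -> 0xFFFFFF00.
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Check the three monitor addresses from ceph.conf against the public network.
for ip in 192.168.77.10 192.168.77.11 192.168.77.12; do
    ip_in_cidr "$ip" 192.168.77.0/24 || echo "WARNING: $ip is outside the public network"
done
```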

Initialize the cluster configuration, generate all keys, and start the monitor daemons:

    [root@master cephcluster]# ceph-deploy mon create-initial

Output:

    [master][DEBUG ] connected to host: master
    [master][DEBUG ] detect platform information from remote host
    [master][DEBUG ] detect machine type
    [master][DEBUG ] get remote short hostname
    [master][DEBUG ] fetch remote file
    [master][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.master.asok mon_status
    [master][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-master/keyring auth get client.admin
    [master][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-master/keyring auth get client.bootstrap-mds
    [master][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-master/keyring auth get client.bootstrap-mgr
    [master][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-master/keyring auth get client.bootstrap-osd
    [master][INFO ] Running command: /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-master/keyring auth get client.bootstrap-rgw
    [ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring
    [ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring
    [ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring
    [ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists
    [ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring
    [ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring
    [ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmpJXF3xy

The generated key files:

    [root@master cephcluster]# ls -l
    total 72
    -rw-------. 1 root root 113 Oct 24 05:47 ceph.bootstrap-mds.keyring
    -rw-------. 1 root root 113 Oct 24 05:47 ceph.bootstrap-mgr.keyring
    -rw-------. 1 root root 113 Oct 24 05:47 ceph.bootstrap-osd.keyring
    -rw-------. 1 root root 113 Oct 24 05:47 ceph.bootstrap-rgw.keyring
    -rw-------. 1 root root 151 Oct 24 05:47 ceph.client.admin.keyring
    -rw-r--r--. 1 root root 305 Oct 24 05:45 ceph.conf
    -rw-r--r--. 1 root root 42977 Oct 24 05:47 ceph-deploy-ceph.log
    -rw-------. 1 root root 73 Oct 24 05:42 ceph.mon.keyring

Check the ceph-mon service that was set up on each node (run each command on the node named in it):

    systemctl status ceph-mon@master
    systemctl status ceph-mon@node1
    systemctl status ceph-mon@node2

Check each monitor's election state (the admin socket is local, so again run each command on its own node):

    ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.master.asok mon_status|grep state
    ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node1.asok mon_status|grep state
    ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node2.asok mon_status|grep state

The roles are as follows:

    [root@master cephcluster]# ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.master.asok mon_status|grep state
    "state": "leader",
    [root@node1 pkg]# ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node1.asok mon_status|grep state
    "state": "peon",
    [root@node2 ~]# ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node2.asok mon_status|grep state
    "state": "peon",
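One leader and two peons is the expected result: the monitors elect a single leader, and the cluster stays available as long as a majority of monitors can talk to each other. The sketch below shows how the `grep state` output could be reduced to the bare state name, and how the majority size is computed. `mon_state` and `quorum_majority` are hypothetical helper names introduced for illustration.

```bash
# Extract the value of the first "state" field from mon_status JSON on stdin.
mon_state() {
    sed -n 's/.*"state": *"\([^"]*\)".*/\1/p' | head -n 1
}

# Smallest number of monitors that forms a majority out of n monitors.
quorum_majority() {
    echo $(( $1 / 2 + 1 ))
}

# Example, on the master node:
#   ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.master.asok mon_status | mon_state
# With 3 monitors the majority is 2, so one monitor can fail:
#   quorum_majority 3
```

This is also why an odd monitor count is the usual choice: going from 3 to 4 monitors raises the majority from 2 to 3 without tolerating any additional failures.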

Once that checks out, push the cluster configuration file ceph.conf and the admin keyring to the three nodes:

    [root@master cephcluster]# ceph-deploy admin master node1 node2
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin master node1 node2
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fc78aa97830>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] client : ['master', 'node1', 'node2']
    [ceph_deploy.cli][INFO ] func : <function admin at 0x7fc78b7ab230>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to master
    [master][DEBUG ] connected to host: master
    [master][DEBUG ] detect platform information from remote host
    [master][DEBUG ] detect machine type
    [master][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node1
    [node1][DEBUG ] connected to host: node1
    [node1][DEBUG ] detect platform information from remote host
    [node1][DEBUG ] detect machine type
    [node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node2
    [node2][DEBUG ] connected to host: node2
    [node2][DEBUG ] detect platform information from remote host
    [node2][DEBUG ] detect machine type
    [node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [root@master cephcluster]# ll /etc/ceph/
    total 12
    -rw-------. 1 root root 151 Oct 24 05:53 ceph.client.admin.keyring
    -rw-r--r--. 1 root root 305 Oct 24 05:53 ceph.conf
    -rw-r--r--. 1 root root 92 Jun 29 18:36 rbdmap
    -rw-------. 1 root root 0 Oct 24 05:46 tmpnL8vKD

With the steps above completed, check the cluster status:

    [root@master cephcluster]# ceph -s|grep -i health
    health: HEALTH_WARN

The cluster is in HEALTH_WARN, with the message: mons are allowing insecure global_id reclaim

Fix: disable insecure global_id reclaim:

    [root@master cephcluster]# ceph config set mon auth_allow_insecure_global_id_reclaim false
    [root@master cephcluster]# ceph -s
      cluster:
        id: 4d95bda1-1894-4b87-be64-ca65a66376c8
        health: HEALTH_OK
      services:
        mon: 3 daemons, quorum master,node1,node2 (age 8m)
        mgr: no daemons active
        osd: 0 osds: 0 up, 0 in
      data:
        pools: 0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage: 0 B used, 0 B / 0 B avail
        pgs:
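On a fresh cluster with no clients, disabling the setting right away is safe. On a cluster that already serves clients, it is worth checking first whether any of them still rely on the insecure reclaim, because they would be locked out once it is disabled. Ceph reports the two situations under the health codes `AUTH_INSECURE_GLOBAL_ID_RECLAIM` (clients are using it) and `AUTH_INSECURE_GLOBAL_ID_RECLAIM_ALLOWED` (monitors still permit it). The following sketch classifies `ceph health detail` output accordingly; `reclaim_action` and the three result strings are hypothetical names chosen for this example.

```bash
# Read `ceph health detail` output on stdin and suggest what to do about
# insecure global_id reclaim.
reclaim_action() {
    detail="$(cat)"
    # -w matches whole words only; "_" counts as a word character, so the
    # first pattern does not match the longer ..._ALLOWED code.
    if printf '%s\n' "$detail" | grep -qw 'AUTH_INSECURE_GLOBAL_ID_RECLAIM'; then
        echo "upgrade-clients-first"
    elif printf '%s\n' "$detail" | grep -qw 'AUTH_INSECURE_GLOBAL_ID_RECLAIM_ALLOWED'; then
        echo "safe-to-disable"
    else
        echo "nothing-to-do"
    fi
}

# Example:
#   ceph health detail | reclaim_action
```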

At this point the Ceph cluster is up and its status is healthy.

6. Configure the OSDs. The data disks were attached to the three nodes earlier but have not been initialized yet:

    [root@master cephcluster]# ansible k8s -m shell -a "lsblk|grep sdb"
    192.168.77.10 | CHANGED | rc=0 >>
    sdb 8:16 0 10G 0 disk
    192.168.77.12 | CHANGED | rc=0 >>
    sdb 8:16 0 10G 0 disk
    192.168.77.11 | CHANGED | rc=0 >>
    sdb 8:16 0 10G 0 disk

The data disk on each of the three nodes is 10G. Now turn each one into an OSD and add it to the Ceph cluster:

    ceph-deploy disk zap master /dev/sdb
    ceph-deploy osd create master --data /dev/sdb
    ceph-deploy disk zap node1 /dev/sdb
    ceph-deploy osd create node1 --data /dev/sdb
    ceph-deploy disk zap node2 /dev/sdb
    ceph-deploy osd create node2 --data /dev/sdb
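The six commands follow one pattern per host: zap the disk, then create the OSD on it. For a larger number of hosts, the list could be generated instead of typed. The sketch below only prints the commands so they can be reviewed before anything is wiped; `osd_commands` is a hypothetical helper, while the `ceph-deploy` invocations it emits are exactly the ones listed above.

```bash
# Print the ceph-deploy commands that zap a device and create an OSD on it
# for every host given. Usage: osd_commands <device> <host>...
osd_commands() {
    dev="$1"; shift
    for host in "$@"; do
        echo "ceph-deploy disk zap ${host} ${dev}"
        echo "ceph-deploy osd create ${host} --data ${dev}"
    done
}

# Review the generated commands first:
osd_commands /dev/sdb master node1 node2
```

After checking the output, the same line can be piped to `sh -e` from the cephcluster directory to execute the commands in order and stop at the first failure. Keep in mind that `disk zap` destroys whatever is on the device.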

The full output of each command is shown below:

    [root@master cephcluster]# ceph-deploy disk zap master /dev/sdb
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap master /dev/sdb
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] debug : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : zap
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f37573fc6c8>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] host : master
    [ceph_deploy.cli][INFO ] func : <function disk at 0x7f3757435938>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] disk : ['/dev/sdb']
    [ceph_deploy.osd][DEBUG ] zapping /dev/sdb on master
    [master][DEBUG ] connected to host: master
    [master][DEBUG ] detect platform information from remote host
    [master][DEBUG ] detect machine type
    [master][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core
    [master][DEBUG ] zeroing last few blocks of device
    [master][DEBUG ] find the location of an executable
    [master][INFO ] Running command: /usr/sbin/ceph-volume lvm zap /dev/sdb
    [master][WARNIN] --> Zapping: /dev/sdb
    [master][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
    [master][WARNIN] Running command: /usr/bin/dd if=/dev/zero of=/dev/sdb bs=1M count=10 conv=fsync
    [master][WARNIN] stderr: 10+0 records in
    [master][WARNIN] 10+0 records out
    [master][WARNIN] 10485760 bytes (10 MB) copied
    [master][WARNIN] stderr: , 0.0272198 s, 385 MB/s
    [master][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdb>
    [root@master cephcluster]# ceph-deploy osd create master --data /dev/sdb
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create master --data /dev/sdb
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] bluestore : None
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f15e47b47e8>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] fs_type : xfs
    [ceph_deploy.cli][INFO ] block_wal : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] journal : None
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] host : master
    [ceph_deploy.cli][INFO ] filestore : None
    [ceph_deploy.cli][INFO ] func : <function osd at 0x7f15e47e78c0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] zap_disk : False
    [ceph_deploy.cli][INFO ] data : /dev/sdb
    [ceph_deploy.cli][INFO ] block_db : None
    [ceph_deploy.cli][INFO ] dmcrypt : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] debug : False
    [ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdb
    [master][DEBUG ] connected to host: master
    [master][DEBUG ] detect platform information from remote host
    [master][DEBUG ] detect machine type
    [master][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core
    [ceph_deploy.osd][DEBUG ] Deploying osd to master
    [master][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [master][WARNIN] osd keyring does not exist yet, creating one
    [master][DEBUG ] create a keyring file
    [master][DEBUG ] find the location of an executable
    [master][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdb
    [master][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key
    [master][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 155a0972-b125-4cf9-9324-a6852e4ec426
    [master][WARNIN] Running command: /usr/sbin/vgcreate --force --yes ceph-38fea9c0-9680-453d-9af4-8d3dbfb1ed07 /dev/sdb
    [master][WARNIN] stdout: Physical volume "/dev/sdb" successfully created.
    [master][WARNIN] stdout: Volume group "ceph-38fea9c0-9680-453d-9af4-8d3dbfb1ed07" successfully created
    [master][WARNIN] Running command: /usr/sbin/lvcreate --yes -l 2559 -n osd-block-155a0972-b125-4cf9-9324-a6852e4ec426 ceph-38fea9c0-9680-453d-9af4-8d3dbfb1ed07
    [master][WARNIN] stdout: Logical volume "osd-block-155a0972-b125-4cf9-9324-a6852e4ec426" created.
    [master][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key
    [master][WARNIN] Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0
    [master][WARNIN] Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-0
    [master][WARNIN] Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-38fea9c0-9680-453d-9af4-8d3dbfb1ed07/osd-block-155a0972-b125-4cf9-9324-a6852e4ec426
    [master][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /dev/dm-2
    [master][WARNIN] Running command: /usr/bin/ln -s /dev/ceph-38fea9c0-9680-453d-9af4-8d3dbfb1ed07/osd-block-155a0972-b125-4cf9-9324-a6852e4ec426 /var/lib/ceph/osd/ceph-0/block
    [master][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap
    [master][WARNIN] stderr: 2021-10-24 05:58:25.289 7f6aca610700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
    [master][WARNIN] 2021-10-24 05:58:25.289 7f6aca610700 -1 AuthRegistry(0x7f6ac40662f8) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx
    [master][WARNIN] stderr: got monmap epoch 2
    [master][WARNIN] Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQBALnVhzwPSEBAANhdbMRBKBY9aLA2sstsFLw==
    [master][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-0/keyring
    [master][WARNIN] added entity osd.0 auth(key=AQBALnVhzwPSEBAANhdbMRBKBY9aLA2sstsFLw==)
    [master][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring
    [master][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/
    [master][WARNIN] Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid 155a0972-b125-4cf9-9324-a6852e4ec426 --setuser ceph --setgroup ceph
    [master][WARNIN] stderr: 2021-10-24 05:58:25.818 7fb9720daa80 -1 bluestore(/var/lib/ceph/osd/ceph-0/) _read_fsid unparsable uuid
    [master][WARNIN] --> ceph-volume lvm prepare successful for: /dev/sdb
    [master][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
    [master][WARNIN] Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-38fea9c0-9680-453d-9af4-8d3dbfb1ed07/osd-block-155a0972-b125-4cf9-9324-a6852e4ec426 --path /var/lib/ceph/osd/ceph-0 --no-mon-config
    [master][WARNIN] Running command: /usr/bin/ln -snf /dev/ceph-38fea9c0-9680-453d-9af4-8d3dbfb1ed07/osd-block-155a0972-b125-4cf9-9324-a6852e4ec426 /var/lib/ceph/osd/ceph-0/block
    [master][WARNIN] Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block
    [master][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /dev/dm-2
    [master][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
    [master][WARNIN] Running command: /usr/bin/systemctl enable ceph-volume@lvm-0-155a0972-b125-4cf9-9324-a6852e4ec426
    [master][WARNIN] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-155a0972-b125-4cf9-9324-a6852e4ec426.service to /usr/lib/systemd/system/ceph-volume@.service.
    [master][WARNIN] Running command: /usr/bin/systemctl enable --runtime ceph-osd@0
    [master][WARNIN] stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service to /usr/lib/systemd/system/ceph-osd@.service.
    [master][WARNIN] Running command: /usr/bin/systemctl start ceph-osd@0
    [master][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0
    [master][WARNIN] --> ceph-volume lvm create successful for: /dev/sdb
    [master][INFO ] checking OSD status...
    [master][DEBUG ] find the location of an executable
    [master][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json
    [ceph_deploy.osd][DEBUG ] Host master is now ready for osd use.
    [root@master cephcluster]# ceph-deploy disk zap node1 /dev/sdb
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap node1 /dev/sdb
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] debug : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : zap
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fb0905006c8>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] host : node1
    [ceph_deploy.cli][INFO ] func : <function disk at 0x7fb090539938>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] disk : ['/dev/sdb']
    [ceph_deploy.osd][DEBUG ] zapping /dev/sdb on node1
    [node1][DEBUG ] connected to host: node1
    [node1][DEBUG ] detect platform information from remote host
    [node1][DEBUG ] detect machine type
    [node1][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core
    [node1][DEBUG ] zeroing last few blocks of device
    [node1][DEBUG ] find the location of an executable
    [node1][INFO ] Running command: /usr/sbin/ceph-volume lvm zap /dev/sdb
    [node1][WARNIN] --> Zapping: /dev/sdb
    [node1][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
    [node1][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdb bs=1M count=10 conv=fsync
    [node1][WARNIN] stderr: 10+0 records in
    [node1][WARNIN] 10+0 records out
    [node1][WARNIN] 10485760 bytes (10 MB) copied
    [node1][WARNIN] stderr: , 0.0342769 s, 306 MB/s
    [node1][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdb>
    [root@master cephcluster]# ceph-deploy osd create node1 --data /dev/sdb
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create node1 --data /dev/sdb
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] bluestore : None
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f4fb87c37e8>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] fs_type : xfs
    [ceph_deploy.cli][INFO ] block_wal : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] journal : None
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] host : node1
    [ceph_deploy.cli][INFO ] filestore : None
    [ceph_deploy.cli][INFO ] func : <function osd at 0x7f4fb87f68c0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] zap_disk : False
    [ceph_deploy.cli][INFO ] data : /dev/sdb
    [ceph_deploy.cli][INFO ] block_db : None
    [ceph_deploy.cli][INFO ] dmcrypt : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] debug : False
    [ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdb
    [node1][DEBUG ] connected to host: node1
    [node1][DEBUG ] detect platform information from remote host
    [node1][DEBUG ] detect machine type
    [node1][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core
    [ceph_deploy.osd][DEBUG ] Deploying osd to node1
    [node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [node1][WARNIN] osd keyring does not exist yet, creating one
    [node1][DEBUG ] create a keyring file
    [node1][DEBUG ] find the location of an executable
    [node1][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdb
    [node1][WARNIN] Running command: /bin/ceph-authtool --gen-print-key
    [node1][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 4044bc47-2945-4748-8464-a76ca6d8c3c1
    [node1][WARNIN] Running command: /usr/sbin/vgcreate --force --yes ceph-7306e20f-d3f1-4fe6-9a02-c65b9f4f543d /dev/sdb
    [node1][WARNIN] stdout: Physical volume "/dev/sdb" successfully created.
    [node1][WARNIN] stdout: Volume group "ceph-7306e20f-d3f1-4fe6-9a02-c65b9f4f543d" successfully created
    [node1][WARNIN] Running command: /usr/sbin/lvcreate --yes -l 2559 -n osd-block-4044bc47-2945-4748-8464-a76ca6d8c3c1 ceph-7306e20f-d3f1-4fe6-9a02-c65b9f4f543d
    [node1][WARNIN] stdout: Logical volume "osd-block-4044bc47-2945-4748-8464-a76ca6d8c3c1" created.
    [node1][WARNIN] Running command: /bin/ceph-authtool --gen-print-key
    [node1][WARNIN] Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-1
    [node1][WARNIN] Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-1
    [node1][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/ceph-7306e20f-d3f1-4fe6-9a02-c65b9f4f543d/osd-block-4044bc47-2945-4748-8464-a76ca6d8c3c1
    [node1][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-2
    [node1][WARNIN] Running command: /bin/ln -s /dev/ceph-7306e20f-d3f1-4fe6-9a02-c65b9f4f543d/osd-block-4044bc47-2945-4748-8464-a76ca6d8c3c1 /var/lib/ceph/osd/ceph-1/block
    [node1][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-1/activate.monmap
    [node1][WARNIN] stderr: 2021-10-24 05:58:37.698 7f9bfb6f0700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
    [node1][WARNIN] 2021-10-24 05:58:37.698 7f9bfb6f0700 -1 AuthRegistry(0x7f9bf40662f8) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx
    [node1][WARNIN] stderr: got monmap epoch 2
    [node1][WARNIN] Running command: /bin/ceph-authtool /var/lib/ceph/osd/ceph-1/keyring --create-keyring --name osd.1 --add-key AQBMLnVhEGs5KRAA3tzQQNeZYJL07OaYV+Yagw==
    [node1][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-1/keyring
    [node1][WARNIN] added entity osd.1 auth(key=AQBMLnVhEGs5KRAA3tzQQNeZYJL07OaYV+Yagw==)
    [node1][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/keyring
    [node1][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/
    [node1][WARNIN] Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 1 --monmap /var/lib/ceph/osd/ceph-1/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-1/ --osd-uuid 4044bc47-2945-4748-8464-a76ca6d8c3c1 --setuser ceph --setgroup ceph
    [node1][WARNIN] stderr: 2021-10-24 05:58:38.230 7fb8c1628a80 -1 bluestore(/var/lib/ceph/osd/ceph-1/) _read_fsid unparsable uuid
    [node1][WARNIN] --> ceph-volume lvm prepare successful for: /dev/sdb
    [node1][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1
    [node1][WARNIN] Running command: /bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-7306e20f-d3f1-4fe6-9a02-c65b9f4f543d/osd-block-4044bc47-2945-4748-8464-a76ca6d8c3c1 --path /var/lib/ceph/osd/ceph-1 --no-mon-config
    [node1][WARNIN] Running command: /bin/ln -snf /dev/ceph-7306e20f-d3f1-4fe6-9a02-c65b9f4f543d/osd-block-4044bc47-2945-4748-8464-a76ca6d8c3c1 /var/lib/ceph/osd/ceph-1/block
    [node1][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-1/block
    [node1][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-2
    [node1][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1
    [node1][WARNIN] Running command: /bin/systemctl enable ceph-volume@lvm-1-4044bc47-2945-4748-8464-a76ca6d8c3c1
    [node1][WARNIN] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-1-4044bc47-2945-4748-8464-a76ca6d8c3c1.service to /usr/lib/systemd/system/ceph-volume@.service.
    [node1][WARNIN] Running command: /bin/systemctl enable --runtime ceph-osd@1
    [node1][WARNIN] stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@1.service to /usr/lib/systemd/system/ceph-osd@.service.
    [node1][WARNIN] Running command: /bin/systemctl start ceph-osd@1
    [node1][WARNIN] --> ceph-volume lvm activate successful for osd ID: 1
    [node1][WARNIN] --> ceph-volume lvm create successful for: /dev/sdb
    [node1][INFO ] checking OSD status...
    [node1][DEBUG ] find the location of an executable
    [node1][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json
    [ceph_deploy.osd][DEBUG ] Host node1 is now ready for osd use.
    [root@master cephcluster]# ceph-deploy disk zap node2 /dev/sdb
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap node2 /dev/sdb
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] debug : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : zap
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f065e4ff6c8>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] host : node2
    [ceph_deploy.cli][INFO ] func : <function disk at 0x7f065e538938>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] disk : ['/dev/sdb']
    [ceph_deploy.osd][DEBUG ] zapping /dev/sdb on node2
    [node2][DEBUG ] connected to host: node2
    [node2][DEBUG ] detect platform information from remote host
    [node2][DEBUG ] detect machine type
    [node2][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core
    [node2][DEBUG ] zeroing last few blocks of device
    [node2][DEBUG ] find the location of an executable
    [node2][INFO ] Running command: /usr/sbin/ceph-volume lvm zap /dev/sdb
    [node2][WARNIN] --> Zapping: /dev/sdb
    [node2][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
    [node2][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdb bs=1M count=10 conv=fsync
    [node2][WARNIN] stderr: 10+0 records in
    [node2][WARNIN] 10+0 records out
    [node2][WARNIN] 10485760 bytes (10 MB) copied
    [node2][WARNIN] stderr: , 0.0299158 s, 351 MB/s
    [node2][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdb>
    [root@master cephcluster]# ceph-deploy osd create node2 --data /dev/sdb
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create node2 --data /dev/sdb
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] bluestore : None
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f2c0fa327e8>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] fs_type : xfs
    [ceph_deploy.cli][INFO ] block_wal : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] journal : None
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] host : node2
    [ceph_deploy.cli][INFO ] filestore : None
    [ceph_deploy.cli][INFO ] func : <function osd at 0x7f2c0fa658c0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] zap_disk : False
    [ceph_deploy.cli][INFO ] data : /dev/sdb
    [ceph_deploy.cli][INFO ] block_db : None
    [ceph_deploy.cli][INFO ] dmcrypt : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] debug : False
    [ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdb
    [node2][DEBUG ] connected to host: node2
    [node2][DEBUG ] detect platform information from remote host
    [node2][DEBUG ] detect machine type
    [node2][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core
    [ceph_deploy.osd][DEBUG ] Deploying osd to node2
    [node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [node2][WARNIN] osd keyring does not exist yet, creating one
    [node2][DEBUG ] create a keyring file
    [node2][DEBUG ] find the location of an executable
    [node2][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdb
    [node2][WARNIN] Running command: /bin/ceph-authtool --gen-print-key
    [node2][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 496ca4ff-307e-419b-923c-dddf6304eefa
    [node2][WARNIN] Running command: /usr/sbin/vgcreate --force --yes ceph-bc7a7134-8143-4905-9f6c-bd24e0d1ce14 /dev/sdb
    [node2][WARNIN] stdout: Physical volume "/dev/sdb" successfully created.
    [node2][WARNIN] stdout: Volume group "ceph-bc7a7134-8143-4905-9f6c-bd24e0d1ce14" successfully created
    [node2][WARNIN] Running command: /usr/sbin/lvcreate --yes -l 2559 -n osd-block-496ca4ff-307e-419b-923c-dddf6304eefa ceph-bc7a7134-8143-4905-9f6c-bd24e0d1ce14
    [node2][WARNIN] stdout: Logical volume "osd-block-496ca4ff-307e-419b-923c-dddf6304eefa" created.
    [node2][WARNIN] Running command: /bin/ceph-authtool --gen-print-key
    [node2][WARNIN] Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-2
    [node2][WARNIN] Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-2
    [node2][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/ceph-bc7a7134-8143-4905-9f6c-bd24e0d1ce14/osd-block-496ca4ff-307e-419b-923c-dddf6304eefa
    [node2][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-2
    [node2][WARNIN] Running command: /bin/ln -s /dev/ceph-bc7a7134-8143-4905-9f6c-bd24e0d1ce14/osd-block-496ca4ff-307e-419b-923c-dddf6304eefa /var/lib/ceph/osd/ceph-2/block
    [node2][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-2/activate.monmap
    [node2][WARNIN] stderr: 2021-10-24 05:58:52.238 7f2b352f5700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
    [node2][WARNIN] 2021-10-24 05:58:52.238 7f2b352f5700 -1 
AuthRegistry(0x7f2b300662f8) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx [node2][WARNIN] stderr: got monmap epoch 2 [node2][WARNIN] Running command: /bin/ceph-authtool /var/lib/ceph/osd/ceph-2/keyring --create-keyring --name osd.2 --add-key AQBbLnVhcWMnDRAApxlMO02H7rIOcgEo3qYbIw== [node2][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-2/keyring [node2][WARNIN] added entity osd.2 auth(key=AQBbLnVhcWMnDRAApxlMO02H7rIOcgEo3qYbIw==) [node2][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/keyring [node2][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/ [node2][WARNIN] Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 2 --monmap /var/lib/ceph/osd/ceph-2/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-2/ --osd-uuid 496ca4ff-307e-419b-923c-dddf6304eefa --setuser ceph --setgroup ceph [node2][WARNIN] stderr: 2021-10-24 05:58:52.768 7f290d041a80 -1 bluestore(/var/lib/ceph/osd/ceph-2/) _read_fsid unparsable uuid [node2][WARNIN] --> ceph-volume lvm prepare successful for: /dev/sdb [node2][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2 [node2][WARNIN] Running command: /bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-bc7a7134-8143-4905-9f6c-bd24e0d1ce14/osd-block-496ca4ff-307e-419b-923c-dddf6304eefa --path /var/lib/ceph/osd/ceph-2 --no-mon-config [node2][WARNIN] Running command: /bin/ln -snf /dev/ceph-bc7a7134-8143-4905-9f6c-bd24e0d1ce14/osd-block-496ca4ff-307e-419b-923c-dddf6304eefa /var/lib/ceph/osd/ceph-2/block [node2][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-2/block [node2][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-2 [node2][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2 [node2][WARNIN] Running command: /bin/systemctl enable 
ceph-volume@lvm-2-496ca4ff-307e-419b-923c-dddf6304eefa [node2][WARNIN] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-2-496ca4ff-307e-419b-923c-dddf6304eefa.service to /usr/lib/systemd/system/ceph-volume@.service. [node2][WARNIN] Running command: /bin/systemctl enable --runtime ceph-osd@2 [node2][WARNIN] stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@2.service to /usr/lib/systemd/system/ceph-osd@.service. [node2][WARNIN] Running command: /bin/systemctl start ceph-osd@2 [node2][WARNIN] --> ceph-volume lvm activate successful for osd ID: 2 [node2][WARNIN] --> ceph-volume lvm create successful for: /dev/sdb [node2][INFO ] checking OSD status... [node2][DEBUG ] find the location of an executable [node2][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json [ceph_deploy.osd][DEBUG ] Host node2 is now ready for osd use.

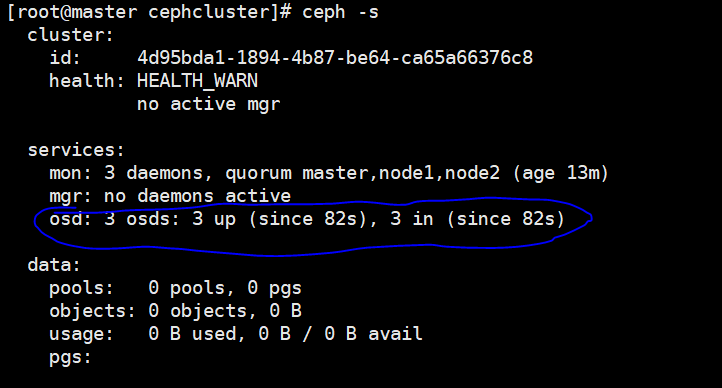

After the configuration succeeds, check whether the Ceph cluster now reports the OSDs:

There are three nodes here, each with one data disk, and all of them are configured correctly.
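The per-node zap and create steps from the log above can be sketched as a small loop. This is a dry run under stated assumptions: the helper name `osd_commands` is hypothetical, the host names and `/dev/sdb` come from the environment table at the top, and the function only prints the `ceph-deploy` commands rather than running them, because `disk zap` wipes the target disk.

```shell
#!/bin/sh
# Dry run: print the ceph-deploy commands for each node instead of running them.
# osd_commands is a hypothetical helper, not part of ceph-deploy.
# Usage: osd_commands DISK NODE...
osd_commands() {
    disk="$1"
    shift
    for node in "$@"; do
        # Wipe the data disk first, then create an OSD on it.
        echo "ceph-deploy disk zap $node $disk"
        echo "ceph-deploy osd create $node --data $disk"
    done
}

# One data disk per node, as in this walkthrough.
osd_commands /dev/sdb master node1 node2
```

Review the printed commands before running any of them from the `cephcluster` directory on the deploy node.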

7. Deploy mgr:

[root@master cephcluster]# ceph-deploy mgr create master node1 node2

The command output is as follows:

[root@master cephcluster]# ceph-deploy mgr create master node1 node2
[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mgr create master node1 node2
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] mgr : [('master', 'master'), ('node1', 'node1'), ('node2', 'node2')]
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] subcommand : create
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f3a32993a70>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] func : <function mgr at 0x7f3a33274140>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts master:master node1:node1 node2:node2
[master][DEBUG ] connected to host: master
[master][DEBUG ] detect platform information from remote host
[master][DEBUG ] detect machine type
[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core
[ceph_deploy.mgr][DEBUG ] remote host will use systemd
[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to master
[master][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[master][WARNIN] mgr keyring does not exist yet, creating one
[master][DEBUG ] create a keyring file
[master][DEBUG ] create path recursively if it doesn't exist
[master][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.master mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-master/keyring
[master][INFO ] Running command: systemctl enable ceph-mgr@master
[master][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@master.service to /usr/lib/systemd/system/ceph-mgr@.service.
[master][INFO ] Running command: systemctl start ceph-mgr@master
[master][INFO ] Running command: systemctl enable ceph.target
[node1][DEBUG ] connected to host: node1
[node1][DEBUG ] detect platform information from remote host
[node1][DEBUG ] detect machine type
[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core
[ceph_deploy.mgr][DEBUG ] remote host will use systemd
[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to node1
[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[node1][WARNIN] mgr keyring does not exist yet, creating one
[node1][DEBUG ] create a keyring file
[node1][DEBUG ] create path recursively if it doesn't exist
[node1][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.node1 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-node1/keyring
[node1][INFO ] Running command: systemctl enable ceph-mgr@node1
[node1][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@node1.service to /usr/lib/systemd/system/ceph-mgr@.service.
[node1][INFO ] Running command: systemctl start ceph-mgr@node1
[node1][INFO ] Running command: systemctl enable ceph.target
[node2][DEBUG ] connected to host: node2
[node2][DEBUG ] detect platform information from remote host
[node2][DEBUG ] detect machine type
[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core
[ceph_deploy.mgr][DEBUG ] remote host will use systemd
[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to node2
[node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[node2][WARNIN] mgr keyring does not exist yet, creating one
[node2][DEBUG ] create a keyring file
[node2][DEBUG ] create path recursively if it doesn't exist
[node2][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.node2 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-node2/keyring
[node2][INFO ] Running command: systemctl enable ceph-mgr@node2
[node2][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@node2.service to /usr/lib/systemd/system/ceph-mgr@.service.
[node2][INFO ] Running command: systemctl start ceph-mgr@node2
[node2][INFO ] Running command: systemctl enable ceph.target

mgr services (each unit runs on its own node):

systemctl status ceph-mgr@master
systemctl status ceph-mgr@node1
systemctl status ceph-mgr@node2
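Because each `ceph-mgr@<host>` unit exists only on the node it is named after, the three checks above have to run on three different machines. A minimal sketch of generating them from the deploy node, assuming the SSH trust set up in step 3 and host names equal to the unit instance names; `mgr_status_cmds` is a hypothetical helper, and it uses `systemctl is-active` for a one-word answer instead of the full `status` output.

```shell
#!/bin/sh
# Print one check per host; each ceph-mgr@<host> unit only exists on its own node.
# mgr_status_cmds is a hypothetical helper name used for illustration.
mgr_status_cmds() {
    for host in "$@"; do
        printf 'ssh %s systemctl is-active ceph-mgr@%s\n' "$host" "$host"
    done
}

mgr_status_cmds master node1 node2
```

Each printed line can be run as-is from the master node; a healthy unit answers `active`.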

Check the mgr status of the Ceph cluster:

At this point the monitor, OSD, and mgr daemons have all been installed successfully. Next, deploy mgr-dashboard:

[root@master cephmgrdashboard]# ansible k8s -m shell -a "mkdir -p /root/ceph_deploy/pkg/cephmgrdashboard"

Download the RPM packages, then install them:

[root@master cephmgrdashboard]# ansible k8s -m shell -a "yum -y install --downloadonly --downloaddir=/root/ceph_deploy/pkg/cephmgrdashboard ceph-mgr-dashboard"
[root@master cephmgrdashboard]# ansible k8s -m shell -a "cd /root/ceph_deploy/pkg/cephmgrdashboard;yum localinstall *.rpm -y"

Enable the dashboard module in the Ceph cluster's mgr service:

[root@master cephmgrdashboard]# ceph mgr module enable dashboard
[root@master cephmgrdashboard]# ceph dashboard create-self-signed-cert
Self-signed certificate created
[root@master cephmgrdashboard]# echo "admin" > password
[root@master cephmgrdashboard]# ceph dashboard set-login-credentials admin -i password
******************************************************************
*** WARNING: this command is deprecated. ***
*** Please use the ac-user-* related commands to manage users. ***
******************************************************************
Username and password updated
[root@master cephmgrdashboard]# ceph mgr services
{ "dashboard": "https://master:8443/" }

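The -i password option above means the password must be supplied in a file (here, a file named password). After that, open the dashboard in a browser.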
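Since the password lives in a plain file, it may be worth keeping that file private and short-lived. The following is only a sketch under assumptions: `write_password_file` is a hypothetical helper, `change-me` is a placeholder password, and the `ceph` call is left commented out so the snippet runs without a cluster.

```shell
#!/bin/sh
# Write a dashboard password file that only its owner can read.
# write_password_file is a hypothetical helper, not a ceph command.
# Usage: write_password_file PATH PASSWORD
write_password_file() {
    (
        umask 077                  # files created in this subshell get mode 600
        printf '%s\n' "$2" > "$1"
    )
}

workdir="$(mktemp -d)"             # private scratch directory
write_password_file "$workdir/password" 'change-me'   # placeholder password (assumption)
# Same command as in the walkthrough, pointed at the private file:
# ceph dashboard set-login-credentials admin -i "$workdir/password"
rm -rf "$workdir"                  # do not leave the password on disk
```

The walkthrough uses `admin` as both user name and password, which is convenient for a lab setup but should probably be replaced with something stronger anywhere else.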

At this point the Ceph cluster installation is complete.
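As a final check from the command line, the dashboard URL can be pulled out of the `ceph mgr services` JSON and probed. A minimal sketch: `dashboard_url` is a hypothetical helper that uses `sed` rather than a JSON parser, and the sample input is the output shown earlier in this walkthrough.

```shell
#!/bin/sh
# Extract the dashboard URL from the JSON printed by `ceph mgr services`.
# dashboard_url is a hypothetical helper; it reads the JSON on stdin.
dashboard_url() {
    sed -n 's/.*"dashboard": *"\([^"]*\)".*/\1/p'
}

# Sample input copied from the output shown earlier; on a live cluster use:
#   ceph mgr services | dashboard_url
echo '{ "dashboard": "https://master:8443/" }' | dashboard_url
```

On a cluster node, something like `curl -k -I "$(ceph mgr services | dashboard_url)"` should then return HTTP headers; `-k` is needed because the certificate is self-signed.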
