[Converge] Loss and Loss Functions

Loss functions fall into the following categories.

Ref: How to Choose Loss Functions When Training Deep Learning Neural Networks

This tutorial is divided into three parts; they are:

- Regression Loss Functions

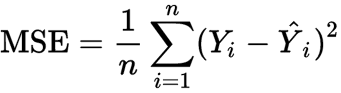

- Mean Squared Error Loss

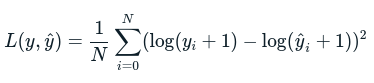

- Mean Squared Logarithmic Error Loss

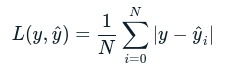

- Mean Absolute Error Loss

- Binary Classification Loss Functions

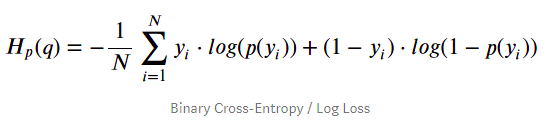

- Binary Cross-Entropy

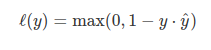

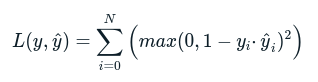

- Hinge Loss

- Squared Hinge Loss

- Multi-Class Classification Loss Functions

- Multi-Class Cross-Entropy Loss

- Sparse Multiclass Cross-Entropy Loss

- Kullback Leibler Divergence Loss
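For the multi-class entries that close the outline above, here is a minimal NumPy sketch of how the three losses relate. The function names, the `EPS` clipping constant, and the toy data are assumptions made for illustration; they are not taken from the referenced tutorial.

```python
import numpy as np

EPS = 1e-12  # assumed clipping constant to avoid log(0)


def categorical_cross_entropy(y_onehot, probs):
    """Mean cross-entropy for one-hot targets and predicted class probabilities."""
    probs = np.clip(probs, EPS, 1.0)
    return float(np.mean(-np.sum(y_onehot * np.log(probs), axis=1)))


def sparse_categorical_cross_entropy(labels, probs):
    """Same loss, but targets are integer class indices instead of one-hot rows."""
    probs = np.clip(probs, EPS, 1.0)
    picked = probs[np.arange(len(labels)), labels]  # probability of the true class
    return float(np.mean(-np.log(picked)))


def kl_divergence(p, q):
    """Mean KL(p || q) over rows, where each row of p and q is a distribution."""
    p = np.clip(p, EPS, 1.0)
    q = np.clip(q, EPS, 1.0)
    return float(np.mean(np.sum(p * np.log(p / q), axis=1)))


# Toy data (hypothetical): 3 samples, 3 classes.
labels = np.array([0, 2, 1])
onehot = np.eye(3)[labels]
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6],
                  [0.2, 0.5, 0.3]])

cce = categorical_cross_entropy(onehot, probs)
sparse_cce = sparse_categorical_cross_entropy(labels, probs)
kl = kl_divergence(onehot, probs)
print(cce, sparse_cce, kl)
```

With one-hot targets the target distribution has zero entropy, so the KL divergence and both cross-entropy variants agree up to the clipping error. The sparse variant only changes how the labels are encoded.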

Regression Loss Functions
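As a rough illustration of the three regression losses named in the outline, here is a minimal NumPy sketch. The function names and sample values are hypothetical, and the MSLE shown uses the common `log(1 + x)` convention, which is an assumption rather than something stated in these notes.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error: penalizes large errors quadratically."""
    return float(np.mean((y_true - y_pred) ** 2))


def msle(y_true, y_pred):
    """Mean squared logarithmic error, assuming the log(1 + x) convention.

    Inputs must be greater than -1; it measures relative rather than
    absolute differences.
    """
    return float(np.mean((np.log1p(y_true) - np.log1p(y_pred)) ** 2))


def mae(y_true, y_pred):
    """Mean absolute error: less sensitive to outliers than MSE."""
    return float(np.mean(np.abs(y_true - y_pred)))


# Hypothetical targets and predictions.
y_true = np.array([1.0, 2.0, 3.0, 4.0])
y_pred = np.array([1.5, 2.0, 2.0, 6.0])

print(mse(y_true, y_pred), msle(y_true, y_pred), mae(y_true, y_pred))
```

On this toy data the single large error (4 vs 6) dominates MSE much more than MAE, which is the usual reason to prefer MAE when the targets contain outliers.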

Binary Classification Loss Functions
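The binary classification losses from the outline can be sketched in NumPy as follows. This is only an illustrative sketch: the function names, the `eps` clipping value, and the sample data are assumptions. Note that cross-entropy expects labels in {0, 1} with predicted probabilities, while the hinge variants expect labels in {-1, +1} with raw scores.

```python
import numpy as np


def binary_cross_entropy(y01, p_pred, eps=1e-12):
    """Mean binary cross-entropy; y01 in {0, 1}, p_pred are probabilities."""
    p = np.clip(p_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-(y01 * np.log(p) + (1 - y01) * np.log(1 - p))))


def hinge(y_signed, scores):
    """Mean hinge loss; y_signed in {-1, +1}, scores are raw model outputs."""
    return float(np.mean(np.maximum(0.0, 1.0 - y_signed * scores)))


def squared_hinge(y_signed, scores):
    """Mean squared hinge loss: smoother, penalizes large violations more."""
    return float(np.mean(np.maximum(0.0, 1.0 - y_signed * scores) ** 2))


# Hypothetical labels, probabilities, and scores.
y01 = np.array([1, 0, 1, 0])
p_pred = np.array([0.9, 0.2, 0.6, 0.5])
y_signed = 2 * y01 - 1                      # map {0, 1} to {-1, +1}
scores = np.array([2.0, -0.5, 0.3, 0.1])

bce = binary_cross_entropy(y01, p_pred)
h = hinge(y_signed, scores)
sh = squared_hinge(y_signed, scores)
print(bce, h, sh)
```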

Do Deep Learning Loss Functions Use Regularization?

Ref: [Scikit-learn] 1.1 Generalized Linear Models - from Linear Regression to L1&L2

Ref: [Scikit-learn] 1.1 Generalized Linear Models - Logistic regression & Softmax

Ref: [Scikit-learn] 1.5 Generalized Linear Models - SGD for Classification

Ref: Loss function and regulation summary - 1

Ref: Loss function and regulation summary - 2

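PS: Note that "regulation" (regularization in the broad sense) and a "regularization term" differ in scope: regulation covers the whole family of regularization methods, and adding a regularization term is one of them.

1. Regularization term

- A brief distinction between the L1 and L2 regularization terms:

L1 and L2 regularization can be viewed as penalty terms added to the loss function.

Here "penalty" means placing constraints on some of the parameters in the loss function. For linear regression, a model that uses L1 regularization is called Lasso regression, and a model that uses L2 regularization is called Ridge regression.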
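To make the penalty idea concrete, here is a minimal NumPy sketch of a linear regression loss with an L1 (Lasso-style) or L2 (Ridge-style) term added. The names `penalized_mse`, `alpha`, and `kind` are hypothetical, and the scaling of the data term differs between libraries, so treat this as an illustration of the structure rather than any library's exact objective.

```python
import numpy as np


def l1_penalty(w, alpha):
    """L1 term: alpha * sum(|w_i|); tends to push some weights to exactly zero."""
    return alpha * float(np.sum(np.abs(w)))


def l2_penalty(w, alpha):
    """L2 term: alpha * sum(w_i^2); shrinks all weights smoothly toward zero."""
    return alpha * float(np.sum(w ** 2))


def penalized_mse(X, y, w, alpha, kind="l2"):
    """Data loss (MSE) plus the chosen penalty on the weights."""
    data_loss = float(np.mean((X @ w - y) ** 2))
    penalty = l1_penalty(w, alpha) if kind == "l1" else l2_penalty(w, alpha)
    return data_loss + penalty


# Hypothetical setup in which the weights fit the data perfectly,
# so the total loss consists only of the penalty term.
X = np.eye(2)
w = np.array([3.0, -4.0])
y = X @ w

lasso_style = penalized_mse(X, y, w, alpha=0.1, kind="l1")
ridge_style = penalized_mse(X, y, w, alpha=0.1, kind="l2")
print(lasso_style, ridge_style)
```

Because the data loss is zero here, the two results show only the penalties: the L1 term grows with the sum of absolute weights, the L2 term with the sum of squared weights.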

2. Common regularization methods

- Dropout

- Batch Normalization

- Data Augmentation

- DropConnect

- Fractional Max Pooling

- Stochastic Depth (ResNet shortcut)
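As one concrete example from the list above, Dropout can be sketched in a few lines of NumPy. This is the commonly used "inverted dropout" formulation, chosen here as an assumption; the function name, signature, and toy input are hypothetical and do not mirror any particular framework's API.

```python
import numpy as np


def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` during training.

    Surviving activations are scaled by 1 / (1 - rate) so the expected value
    is unchanged, which lets inference use the input as-is.
    """
    if not training or rate == 0.0:
        return x
    keep_prob = 1.0 - rate
    mask = rng.random(x.shape) < keep_prob  # True where the unit is kept
    return x * mask / keep_prob


rng = np.random.default_rng(0)
x = np.ones((1000, 10))

train_out = dropout(x, rate=0.5, rng=rng, training=True)
eval_out = dropout(x, rate=0.5, rng=rng, training=False)
print(train_out.mean(), eval_out.mean())
```

Unlike an L1 or L2 term, nothing is added to the loss here: the regularization effect comes from randomly perturbing the network during training.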

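3. Custom loss function + L2

Beyond the regularization methods above, the other option is to define a custom loss function that includes an L1 or L2 term. The following is one reference.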

Goto: Implementing L2-constrained Softmax Loss Function on a Convolutional Neural Network using TensorFlow
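For orientation, a custom loss with an L2 term can be sketched as softmax cross-entropy plus a weight penalty. This is a plain NumPy illustration of the general idea described in this section, not the method of the linked article, which may differ (for example by constraining feature norms rather than penalizing weights). The names `softmax_loss_with_l2` and `lam` and the toy data are assumptions.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def softmax_loss_with_l2(X, labels, W, b, lam):
    """Mean softmax cross-entropy plus lam * ||W||^2 (bias is not penalized)."""
    probs = softmax(X @ W + b)
    nll = -np.log(probs[np.arange(len(labels)), labels])
    return float(nll.mean() + lam * np.sum(W ** 2))


# Hypothetical data: 4 samples, 5 features, 3 classes.
X = np.random.default_rng(0).normal(size=(4, 5))
labels = np.array([0, 1, 2, 1])
b = np.zeros(3)

loss_zero_w = softmax_loss_with_l2(X, labels, np.zeros((5, 3)), b, lam=0.01)
loss_ones_w = softmax_loss_with_l2(X, labels, np.ones((5, 3)), b, lam=0.01)
print(loss_zero_w, loss_ones_w)
```

In both calls the logits are identical across classes, so the cross-entropy part equals log(3) and the difference between the two losses is exactly the L2 penalty on the all-ones weight matrix.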

End.

浙公网安备 33010602011771号
