[Hadoop] Phoenix : SQL on HBASE

开门见山

SQL

一,基础

Ref: [MySQL] 02- Optimisation solutions

--------------------------------------------------

--------------------------------------------------

Hive是一个工具,用于分析.

Hive has been known to be the component of Big data ecosystem where legacy mappers and reducers are needed to process data from HDFS.

--------------------------------------------------

Ref: [Hadoop] HBase

-

- 优化思路(遗留问题)

- pyspark接口读写HBase

- pyspark的几个例子,与HBase关系不大.

二,参考阅读

Ref: 从mysql向HBase+Phoenix迁移数据的心得总结

Hbase核心原理

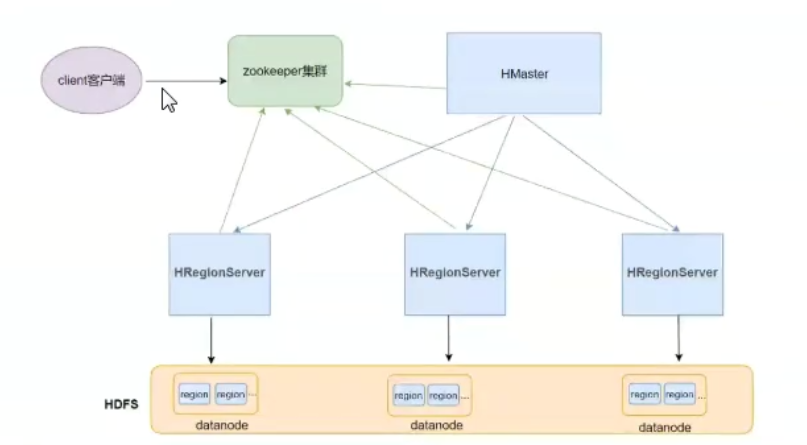

一,整体架构

Ref: HBase与Zookeeper的关系

Ref: [Hadoop] Zookeeper

- 在Hbase中插入或读取数据

当在Hbase中插入或读取数据时流程如下:

1. 在Client中写一个Java类运行,客户端只需要连接zookeeper,客户端会从zookeeper中得到Regionserver的映射信息,之后客户端会直接连接到Region Server。

2. RegionServer在启动之后会向zookeeper汇报信息(通过心跳RPC):本身有多少Region,有哪些数据,当前机器的运行状况等等。

3. master 启动后也会向zookeeper汇报信息,并且从zookeeper中得到Region Server的一些信息。例如当一台Region Server当掉之后,zookeeper会得知,之后Master也会通过zookeeper得到该Region Server当掉的信息。

4. 当客户端Client在做DDL(创建,修改,删除表)时,会通过zookeeper获取到Master的地址,而Master中保存了表的元数据信息,之后Client就可以直接与Master进行通信,进行表的DDL操作。

5. 当Region中数据不断增大,Master会向Region Serve发送指令,讲分割出来的Region进行转移。

- Zookeeper

1. 保证任何时候,集群中只有一个活跃的master,因为为保证安全性会启动多个Master

2. 存储所有Region的寻址入口。知道那个Region在哪台机器上。

3. 实时监控Region Server的状态,将Region Server的上下线的信息汇报给HMaster。(因为每间隔一段时间,RegionServer与Master都会zookeeper发送心跳信息),Region Server不直接向Master发送信息的原因是为了减少Master的压力因为只有一个活跃的Master,所有的RegionServer同时向他汇报信息,压力太大。而若有100台RegionServer时,Region Server可以分每10台向一个zookeeper汇报信息,实现zookeeper的负载均衡。

4. 存储Hbase的元数据(Schema)包括,知道整个Hbase集群中有哪些Table,每个 Table 有哪些column family(列族)

- Client

Client包含了访问Hbase的接口,Client维护这些Cache来加快对Hbase的访问,比如Region的位置信息. Zookeeper保证了任何时候群众只有一个Master存储所有的Region中的寻址入口还有实时监控RegionServer上的状态,将RegionServer的上线和下线信息实时通知给Master,存储hbase 的Schema,包括有哪些table,每个Table有哪些Column Family

- Master

Master有以下特点:

1、为RegionServer分配Region

2、负责RegionServer的负载均衡

3、发现失效的RegionServer并重新分配其上的Region

4、HDFS上的垃圾文件回收

5、处理Schema更新请求

- RegionServer

1、RegionServer维护Master分配给他的 Region,处理对这些Region的IO请求

2、RegionServer负责切分在运行过程中变得过大的Region,

二、跟踪数据流

- Flume数据源的记录

8f20c9188561b796ef8e26196de30be4, 39a096b71376b82f35732eff6d95779b, 1477969411, 104.10220, 30.71675 8f20c9188561b796ef8e26196de30be4, 39a096b71376b82f35732eff6d95779b, 1477969414, 104.10249, 30.71668

- Kafka的记录

Received message: (null, 8f20c9188561b796ef8e26196de30be4,39a096b71376b82f35732eff6d95779b,1477969411,104.10220,30.71675) at offset 6583,count:1Received message: (null, 8f20c9188561b796ef8e26196de30be4,39a096b71376b82f35732eff6d95779b,1477969414,104.10249,30.71668) at offset 6584,count:2

- Hbase的记录

hbase(main):005:0* list TABLE HTAB_GPS SYSTEM.CATALOG SYSTEM.FUNCTION SYSTEM.LOG SYSTEM.MUTEX SYSTEM.SEQUENCE SYSTEM.STATS VIRTUAL_STATIONS student 9 row(s) in 0.1950 seconds => ["HTAB_GPS", "SYSTEM.CATALOG", "SYSTEM.FUNCTION", "SYSTEM.LOG", "SYSTEM.MUTEX", "SYSTEM.SEQUENCE", "SYSTEM.STATS", "VIRTUAL_STATIONS", "student"] hbase(main):006:0> scan HTAB_GPS NameError: uninitialized constant HTAB_GPS

hbase(main):007:0> scan "HTAB_GPS" ROW COLUMN+CELL 39a096b71376b82f35732eff6d95779b_1477969411 column=f1:CITYCODE, timestamp=1578310195867, value=510100 39a096b71376b82f35732eff6d95779b_1477969411 column=f1:DRIVERID, timestamp=1578310195867, value=8f20c9188561b796ef8e26196de30be4 39a096b71376b82f35732eff6d95779b_1477969411 column=f1:LAT, timestamp=1578310195867, value=30.71675 39a096b71376b82f35732eff6d95779b_1477969411 column=f1:LNG, timestamp=1578310195867, value=104.10220 39a096b71376b82f35732eff6d95779b_1477969411 column=f1:ORDERID, timestamp=1578310195867, value=39a096b71376b82f35732eff6d95779b 39a096b71376b82f35732eff6d95779b_1477969411 column=f1:TIME, timestamp=1578310195867, value=2016-11-01 14:03:31 39a096b71376b82f35732eff6d95779b_1477969411 column=f1:TIMESTAMP, timestamp=1578310195867, value=1477969411

39a096b71376b82f35732eff6d95779b_1477969414 column=f1:CITYCODE, timestamp=1578310195867, value=510100 39a096b71376b82f35732eff6d95779b_1477969414 column=f1:DRIVERID, timestamp=1578310195867, value=8f20c9188561b796ef8e26196de30be4 39a096b71376b82f35732eff6d95779b_1477969414 column=f1:LAT, timestamp=1578310195867, value=30.71668 39a096b71376b82f35732eff6d95779b_1477969414 column=f1:LNG, timestamp=1578310195867, value=104.10249 39a096b71376b82f35732eff6d95779b_1477969414 column=f1:ORDERID, timestamp=1578310195867, value=39a096b71376b82f35732eff6d95779b 39a096b71376b82f35732eff6d95779b_1477969414 column=f1:TIME, timestamp=1578310195867, value=2016-11-01 14:03:34 39a096b71376b82f35732eff6d95779b_1477969414 column=f1:TIMESTAMP, timestamp=1578310195867, value=1477969414

三、代码操作

- Kafka -->Hbase

Kafka输出数据到Redis,以及Hbase。

public class GpsConsumer implements Runnable {

private static Logger log = Logger.getLogger(GpsConsumer.class); private final KafkaConsumer<String, String> consumer; private final String topic;

//计数消费到的消息条数 private static int count = 0; private FileOutputStream file = null; private BufferedOutputStream out = null; private PrintWriter printWriter = null; private String lineSeparator = null; private int batchNum = 0; JedisUtil instance = null; Jedis jedis = null; private String cityCode = ""; private Map<String, String> gpsMap = new HashMap<String, String>(); SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

public GpsConsumer(String topic, String groupId) {

if (topic.equalsIgnoreCase(TopicName.CHENG_DU_GPS_TOPIC.getTopicName())) { cityCode = Constants.CITY_CODE_CHENG_DU; } else if (topic.equalsIgnoreCase(TopicName.XI_AN_GPS_TOPIC.getTopicName())) { cityCode = Constants.CITY_CODE_XI_AN; } else if (topic.equalsIgnoreCase(TopicName.HAI_KOU_ORDER_TOPIC.getTopicName())) { cityCode = Constants.CITY_CODE_HAI_KOU; }else{ throw new IllegalArgumentException(topic+",主题名称不合法!"); }

Properties props = new Properties();//pro-cdh props.put("bootstrap.servers", Constants.KAFKA_BOOTSTRAP_SERVERS); // 设置好kafka集群 props.put("group.id", groupId); props.put("enable.auto.commit", "true"); props.put("auto.offset.reset", "earliest"); props.put("session.timeout.ms", "30000"); props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); consumer = new KafkaConsumer<String,String>(props); # 第一步,构造好了kafka consumer this.topic = topic; }

//----------------------------------------------------------------------------------------------

@Override public void run() { while (true) { try { doWork(); } catch (Exception e) { e.printStackTrace(); } } } public void doWork() throws Exception { batchNum++; consumer.subscribe(Collections.singletonList(this.topic)); ConsumerRecords<String, String> records = consumer.poll(1000);

System.out.println("第" + batchNum + "批次," + records.count());

//司机ID String driverId = ""; //订单ID String orderId = ""; //经度 String lng = ""; //维度 String lat = ""; //时间戳 String timestamp = "";

Order order = null; Order startEndTimeOrder = null; Object tmpOrderObj = null;

/**

* Jeff: 如果有数据,则记录下来

*/ if (records.count() > 0) {

// (1) hbase就绪

Table table = HBaseUtil.getTable(Constants.HTAB_GPS);

// (2) Redis就绪 JedisUtil instance = JedisUtil.getInstance(); jedis = instance.getJedis();

/////////////////////////////////////////////////////

List<Put> puts = new ArrayList<>(); String rowkey = ""; if (gpsMap.size() > 0) { gpsMap.clear(); } //表不存在时创建表 if (!HBaseUtil.tableExists(Constants.HTAB_GPS)) { HBaseUtil.createTable(HBaseUtil.getConnection(), Constants.HTAB_GPS, Constants.DEFAULT_FAMILY); } for (ConsumerRecord<String, String> record : records) { count++; log.warn("Received message: (" + record.key() + ", " + record.value() + ") at offset " + record.offset() + ",count:" + count);

String value = record.value(); if (value.contains(",")) { order = new Order(); String[] split = value.split(","); driverId = split[0]; orderId = split[1]; timestamp = split[2]; lng = split[3]; lat = split[4]; rowkey = orderId + "_" + timestamp; gpsMap.put("CITYCODE", cityCode); gpsMap.put("DRIVERID", driverId); gpsMap.put("ORDERID", orderId); gpsMap.put("TIMESTAMP", timestamp + ""); gpsMap.put("TIME", sdf.format(new Date(Long.parseLong(timestamp+"000")))); gpsMap.put("LNG", lng); gpsMap.put("LAT", lat); order.setOrderId(orderId); puts.add(HBaseUtil.createPut(rowkey, Constants.DEFAULT_FAMILY.getBytes(), gpsMap));

////////////

// hbase //

//////////////////////////////////////////////////////////////////////////////////////////////

// redis //

///////////

//1.存入实时订单单号 jedis.sadd(Constants.REALTIME_ORDERS, cityCode + "_" + orderId);

//2.存入实时订单的经纬度信息 jedis.lpush(cityCode + "_" + orderId, lng + "," + lat);

//3.存入订单的开始结束时间信息 byte[] orderBytes = jedis.hget(Constants.ORDER_START_ENT_TIME.getBytes(), orderId.getBytes()); if (orderBytes != null) { tmpOrderObj = ObjUtil.deserialize(orderBytes); } if (null != tmpOrderObj) { startEndTimeOrder = (Order) tmpOrderObj; startEndTimeOrder.setEndTime(Long.parseLong(timestamp+"000")); jedis.hset(Constants.ORDER_START_ENT_TIME.getBytes(), orderId.getBytes(), ObjUtil.serialize(startEndTimeOrder)); } else { //第一次写入订单的开始时间,开始时间和结束时间一样 order.setStartTime(Long.parseLong(timestamp)); order.setEndTime(Long.parseLong(timestamp)); jedis.hset(Constants.ORDER_START_ENT_TIME.getBytes(), orderId.getBytes(), ObjUtil.serialize(order)); }

hourOrderInfoGather(jedis, gpsMap);

} else if (value.contains("end")) { jedis.lpush(cityCode + "_" + orderId, value); } }

table.put(puts); instance.returnJedis(jedis); } log.warn("正常结束..."); }

/** * 统计城市的每小时的订单信息和订单数 * @throws Exception */ public void hourOrderInfoGather(Jedis jedis, Map<String, String> gpsMap) throws Exception{

String time = gpsMap.get("TIME"); String orderId = gpsMap.get("ORDERID"); String day = time.substring(0,time.indexOf(" ")); String hour = time.split(" ")[1].substring(0,2);

//redis表名,小时订单统计 String hourOrderCountTab = cityCode + "_" + day + "_hour_order_count"; //redis表名,小时订单ID String hourOrderField = cityCode + "_" + day + "_" + hour; String hourOrder = cityCode + "_order"; int hourOrderCount = 0;

//redis set集合中存放每小时内的所有订单id if(!jedis.sismember(hourOrder,orderId)){ //使用set存储小时订单id jedis.sadd(hourOrder,orderId); String hourOrdernum = jedis.hget(hourOrderCountTab, hourOrderField); if(StringUtils.isEmpty(hourOrdernum)){ hourOrderCount = 1; }else{ hourOrderCount = Integer.parseInt(hourOrdernum) + 1; } //HashMap 存储每个小时的订单总数 jedis.hset(hourOrderCountTab, hourOrderField, hourOrderCount+""); } } public static void main(String[] args) { Logger.getLogger("org.apache.kafka").setLevel(Level.INFO); //kafka主题 String topic = "cheng_du_gps_topic"; //消费组id String groupId = "cheng_du_gps_consumer_01"; GpsConsumer gpsConsumer = new GpsConsumer(topic, groupId); Thread start = new Thread(gpsConsumer); start.start(); } }

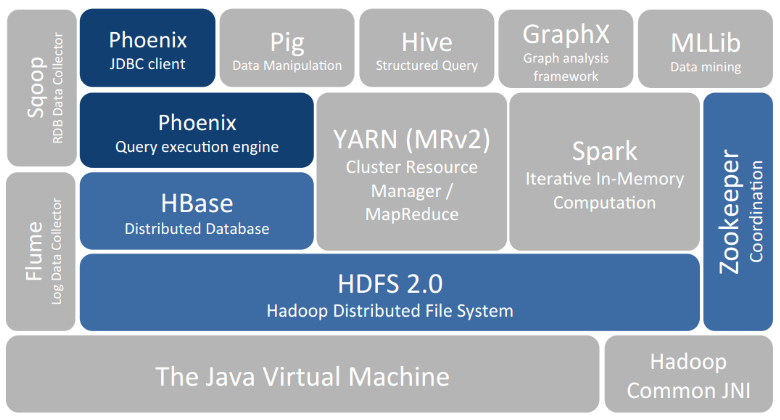

Phoenix

一、安装

- 使用的必要性

Ref: Phoenix三贴之二:Phoenix二级索引系统

Ref: Phoenix三贴之三:Phoenix和hive的整合

Phoenix 是 HBase 的开源 SQL 中间层,它允许你使用标准 JDBC 的方式来操作 HBase 上的数据。在 Phoenix 之前,如果你要访问 HBase,只能调用它的 Java API,但相比于使用一行 SQL 就能实现数据查询,HBase 的 API 还是过于复杂。Phoenix 的理念是 we put sql SQL back in NOSQL,即你可以使用标准的 SQL 就能完成对 HBase 上数据的操作。同时这也意味着你可以通过集成 Spring Data JPA 或 Mybatis 等常用的持久层框架来操作 HBase。

其次 Phoenix 的性能表现也非常优异,Phoenix 查询引擎会将 SQL 查询转换为一个或多个 HBase Scan,通过并行执行来生成标准的 JDBC 结果集。它通过直接使用 HBase API 以及协处理器和自定义过滤器,可以为小型数据查询提供毫秒级的性能,为千万行数据的查询提供秒级的性能。同时 Phoenix 还拥有二级索引等 HBase 不具备的特性,因为以上的优点,所以 Phoenix 成为了 HBase 最优秀的 SQL 中间层。

Apache Phoenix是构建在HBase之上的关系型数据库层,作为内嵌的客户端JDBC驱动用以对HBase中的数据进行低延迟访问。Apache Phoenix会将用户编写的sql查询编译为一系列的scan操作,最终产生通用的JDBC结果集返回给客户端。数据表的元数据存储在HBase的表中被会标记版本号,所以进行查询的时候会自动选择正确的schema。直接使用HBase的API,结合协处理器(coprocessor)和自定义的过滤器的话,小范围的查询在毫秒级响应,千万数据的话响应速度为秒级。

- 打开命令交互界面

[root@node01 opt]# python2 ./apache-phoenix-4.14.0-cdh5.14.2-bin/bin/sqlline.py

Setting property: [incremental, false] Setting property: [isolation, TRANSACTION_READ_COMMITTED] issuing: !connect jdbc:phoenix: none none org.apache.phoenix.jdbc.PhoenixDriver Connecting to jdbc:phoenix: SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/opt/apache-phoenix-4.14.0-cdh5.14.2-bin/phoenix-4.14.0-cdh5.14.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/jars/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 20/01/06 23:26:15 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable Connected to: Phoenix (version 4.14) Driver: PhoenixEmbeddedDriver (version 4.14) Autocommit status: true Transaction isolation: TRANSACTION_READ_COMMITTED Building list of tables and columns for tab-completion (set fastconnect to true to skip)... 164/164 (100%) Done Done sqlline version 1.2.0

0: jdbc:phoenix:>

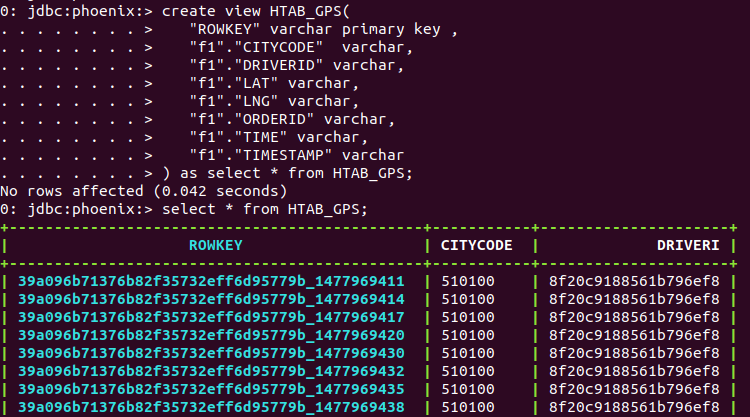

二、映射

对hbase表中的chengdu_gpu表在phoenix中做映射,就是在phoenix中创建hbase表的视图,创建视图的脚本如下:

三、操作

- Phoenix的记录

0: jdbc:phoenix:> select CITYCODE,DRIVERID,LAT,LNG,ORDERID from HTAB_GPS where LAT='30.71675'; +-----------+-----------------------------------+-----------+------------+-------+ | CITYCODE | DRIVERID | LAT | LNG | | +-----------+-----------------------------------+-----------+------------+-------+ | 510100 | 8f20c9188561b796ef8e26196de30be4 | 30.71675 | 104.10220 | 39a09 | +-----------+-----------------------------------+-----------+------------+-------+ 1 row selected (0.084 seconds)

0: jdbc:phoenix:> select CITYCODE,DRIVERID,LAT,LNG,ORDERID from HTAB_GPS where LAT='30.71668'; +-----------+-----------------------------------+-----------+------------+-------+ | CITYCODE | DRIVERID | LAT | LNG | | +-----------+-----------------------------------+-----------+------------+-------+ | 510100 | 8f20c9188561b796ef8e26196de30be4 | 30.71668 | 104.10249 | 39a09 | +-----------+-----------------------------------+-----------+------------+-------+

四、SQuirreL SQL

From: 配置使用Squirrel GUI连接Phoenix

Download: SQuirreL SQL

将phoenix-4.2.2-client.jar拷贝到squirrel安装目录的lib目录下。

高能预警:phoenix-4.2.2-client.jar可以从Phoenix的安装目录中找到,如果是其他版本的Phoenix则替换为相应的phoenix-[version]-client.jar

More details, please open the link.

End.

浙公网安备 33010602011771号

浙公网安备 33010602011771号