OpenSeek: Learning and Reproduction Notes

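OpenSeek aims to unite the global open-source community to drive collaborative innovation in algorithms, data, and systems, with the goal of developing next-generation models that surpass DeepSeek.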

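📌 Project Overview

OpenSeek is an open-source project initiated by the Beijing Academy of Artificial Intelligence (BAAI). It aims to unite the global open-source community to drive collaborative innovation in algorithms, data, and systems, and to develop next-generation models that surpass DeepSeek. The project draws inspiration from large-model initiatives such as BigScience and OPT and is committed to building an independent open-source ecosystem for algorithmic innovation. Since the DeepSeek models were open-sourced, academia has seen many algorithmic improvements and breakthroughs, but these innovations often lack complete code implementations, the necessary compute resources, and high-quality data support. By working with the open-source community, OpenSeek aims to explore mechanisms for building high-quality datasets, open-source the entire large-model training pipeline, build innovative training and inference code that supports AI chips beyond Nvidia's, and promote independent technological innovation and application development.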

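Objectives of OpenSeek:

- Advanced data technology: address the challenge of acquiring high-quality data.

- Support for multiple AI devices: reduce dependence on specific chips and improve the model's generality and adaptability.

- Standardized LLM training baseline: promote independent algorithmic innovation and technology sharing through open-source collaboration.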

Project: https://github.com/orgs/FlagAI-Open/projects/1


- datasets: https://huggingface.co/datasets/BAAI/OpenSeek-Pretrain-100B/

- framework: https://github.com/FlagAI-Open/OpenSeek

- training: https://github.com/FlagOpen/FlagScale

- other: https://github.com/FlagAI-Open/FlagAI

Environment Setup

Install the FlagScale environment (Docker is recommended here):

# Pull images

docker pull openseek2025/openseek:flagscale-20250527

# Clone the repository

git clone https://github.com/FlagOpen/FlagScale.git

Start a container from the Docker image for training:

docker_image=openseek2025/openseek:flagscale-20250527

docker run -it --gpus all --ipc=host --shm-size=8g -v /data2:/data2 $docker_image /bin/bash

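Running the Baseline

openseek-baseline serves as the baseline for the PAZHOU algorithm competition and is also used to evaluate PRs in OpenSeek. It is a standardized LLM training and evaluation pipeline consisting of a 100B dataset, training code, wandb logs, checkpoints, and evaluation results.

Data Preparation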

git lfs install

git clone https://huggingface.co/datasets/BAAI/OpenSeek-Pretrain-100B

If your network connection is unstable, consider using ModelScope instead:

pip install modelscope

modelscope download --dataset BAAI/OpenSeek-Pretrain-100B --local_dir ./dir

Once the download completes, the files total about 413.57 GB.

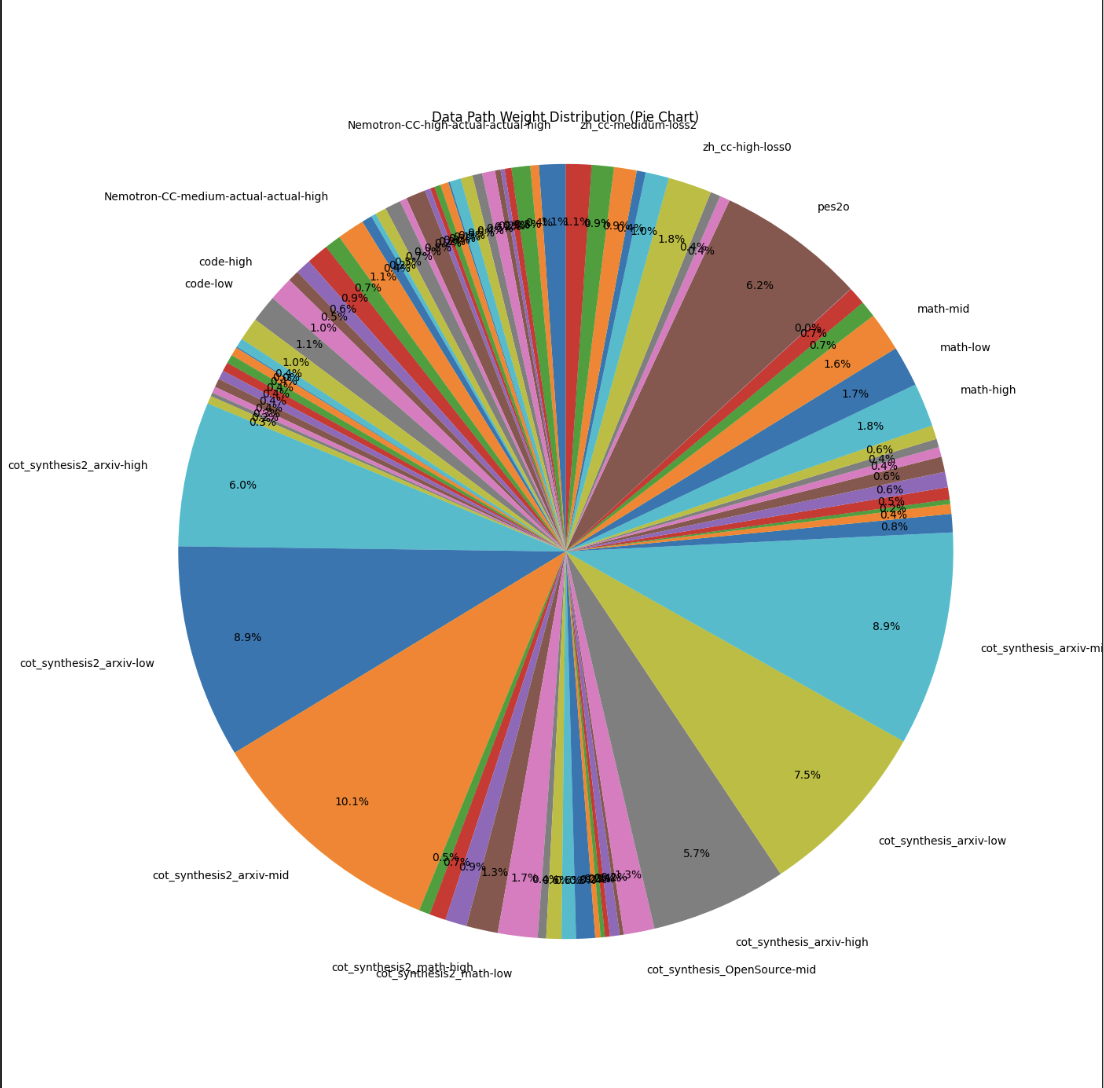

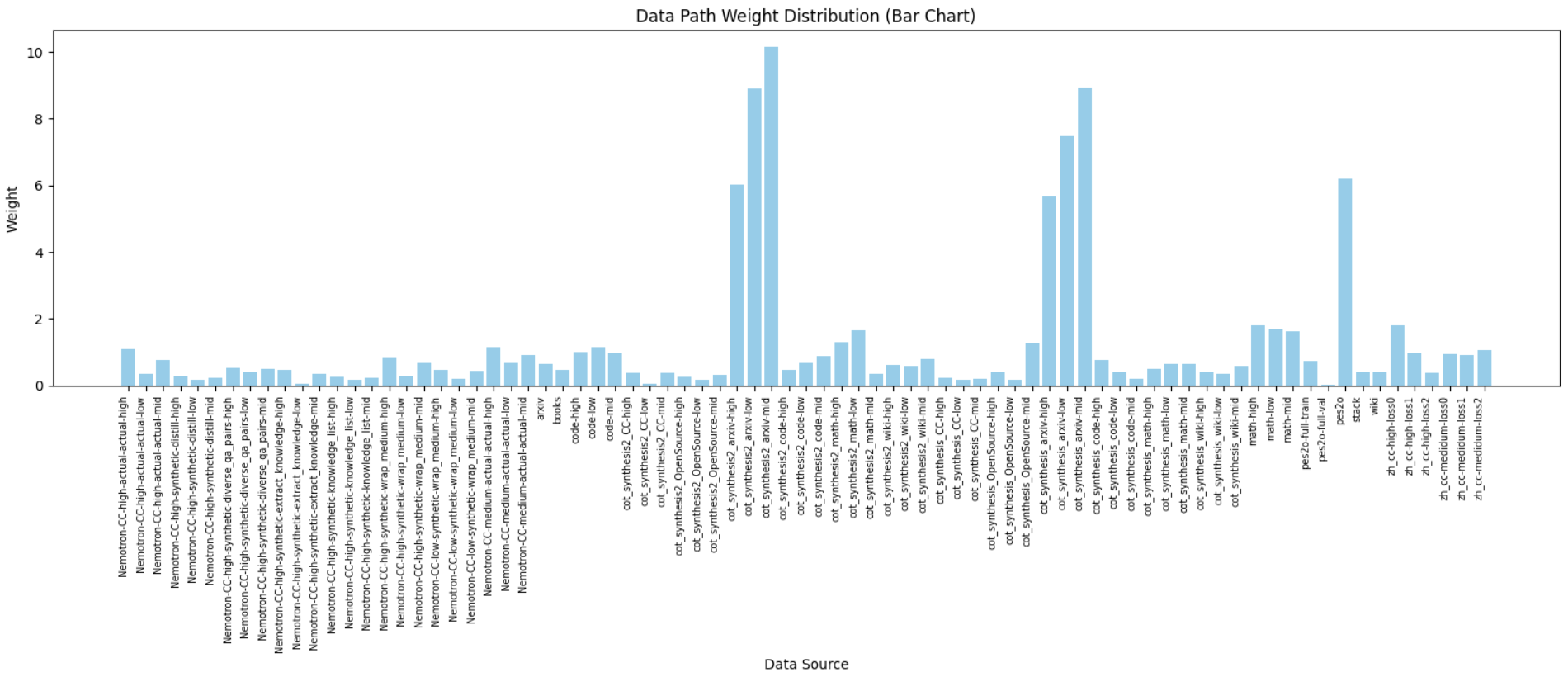
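To confirm that a download finished, one option is to total the file sizes on disk and compare the result with the figure quoted above. The following is a minimal sketch, not part of OpenSeek: the helper names `dir_size_bytes` and `to_gb` are hypothetical, the `./dir` default simply mirrors the `--local_dir` used in the ModelScope command, and it assumes the quoted size is in decimal gigabytes (10^9 bytes), which the source does not state. A `git clone` with LFS also stores objects under `.git`, so a cloned copy may report a larger total.

```python
from pathlib import Path


def dir_size_bytes(root: str) -> int:
    """Sum the sizes of all regular files under root, skipping symlinks."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and not path.is_symlink():
            total += path.stat().st_size
    return total


def to_gb(num_bytes: int) -> float:
    """Convert bytes to decimal gigabytes (assumption: 1 GB = 10**9 bytes)."""
    return num_bytes / 1e9


if __name__ == "__main__":
    import sys

    # "./dir" mirrors the --local_dir value used in the download command.
    root = sys.argv[1] if len(sys.argv) > 1 else "./dir"
    print(f"{to_gb(dir_size_bytes(root)):.2f} GB under {root}")
```

A total well below the expected size suggests an interrupted download that should be resumed.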

OpenSeek-Pretrain-100B data distribution:

| Name | Tokens | Tokens (B) |

|---|---|---|

| Nemotron-CC-high-actual-actual-high | 1140543860 | 1.14 |

| Nemotron-CC-high-actual-actual-low | 368646238 | 0.37 |

| Nemotron-CC-high-actual-actual-mid | 801213010 | 0.80 |

| Nemotron-CC-high-synthetic-distill-high | 294569308 | 0.29 |

| Nemotron-CC-high-synthetic-distill-low | 172342068 | 0.17 |

| Nemotron-CC-high-synthetic-distill-mid | 240998642 | 0.24 |

| Nemotron-CC-high-synthetic-diverse_qa_pairs-high | 556137649 | 0.56 |

| Nemotron-CC-high-synthetic-diverse_qa_pairs-low | 418742390 | 0.42 |

| Nemotron-CC-high-synthetic-diverse_qa_pairs-mid | 515733187 | 0.52 |

| Nemotron-CC-high-synthetic-extract_knowledge-high | 475714119 | 0.48 |

| Nemotron-CC-high-synthetic-extract_knowledge-low | 68996838 | 0.07 |

| Nemotron-CC-high-synthetic-extract_knowledge-mid | 353316407 | 0.35 |

| Nemotron-CC-high-synthetic-knowledge_list-high | 268953064 | 0.27 |

| Nemotron-CC-high-synthetic-knowledge_list-low | 187973360 | 0.19 |

| Nemotron-CC-high-synthetic-knowledge_list-mid | 238373108 | 0.24 |

| Nemotron-CC-high-synthetic-wrap_medium-high | 848837296 | 0.85 |

| Nemotron-CC-high-synthetic-wrap_medium-low | 295324405 | 0.30 |

| Nemotron-CC-high-synthetic-wrap_medium-mid | 687328353 | 0.69 |

| Nemotron-CC-low-synthetic-wrap_medium-high | 479896420 | 0.48 |

| Nemotron-CC-low-synthetic-wrap_medium-low | 206574167 | 0.21 |

| Nemotron-CC-low-synthetic-wrap_medium-mid | 444865784 | 0.44 |

| Nemotron-CC-medium-actual-actual-high | 1174405205 | 1.17 |

| Nemotron-CC-medium-actual-actual-low | 698884310 | 0.70 |

| Nemotron-CC-medium-actual-actual-mid | 945401567 | 0.95 |

| arxiv | 660912931 | 0.66 |

| books | 483917796 | 0.48 |

| code-high | 1040945650 | 1.04 |

| code-low | 1175000655 | 1.18 |

| code-mid | 996826302 | 1.00 |

| cot_synthesis2_CC-high | 386941302 | 0.39 |

| cot_synthesis2_CC-low | 51390680 | 0.05 |

| cot_synthesis2_CC-mid | 1885475230 | 1.89 |

| cot_synthesis2_OpenSource-high | 265167656 | 0.27 |

| cot_synthesis2_OpenSource-low | 168830028 | 0.17 |

| cot_synthesis2_OpenSource-mid | 334976884 | 0.33 |

| cot_synthesis2_arxiv-high | 12894983685 | 12.89 |

| cot_synthesis2_arxiv-low | 9177670132 | 9.18 |

| cot_synthesis2_arxiv-mid | 10446468216 | 10.45 |

| cot_synthesis2_code-high | 473767419 | 0.47 |

| cot_synthesis2_code-low | 706636812 | 0.71 |

| cot_synthesis2_code-mid | 926436168 | 0.93 |

| cot_synthesis2_math-high | 1353517224 | 1.35 |

| cot_synthesis2_math-low | 1703361358 | 1.70 |

| cot_synthesis2_math-mid | 364330324 | 0.36 |

| cot_synthesis2_wiki-high | 650684154 | 0.65 |

| cot_synthesis2_wiki-low | 615978070 | 0.62 |

| cot_synthesis2_wiki-mid | 814947142 | 0.81 |

| cot_synthesis_CC-high | 229324269 | 0.23 |

| cot_synthesis_CC-low | 185148748 | 0.19 |

| cot_synthesis_CC-mid | 210471356 | 0.21 |

| cot_synthesis_OpenSource-high | 420505110 | 0.42 |

| cot_synthesis_OpenSource-low | 170987708 | 0.17 |

| cot_synthesis_OpenSource-mid | 1321855051 | 1.32 |

| cot_synthesis_arxiv-high | 5853027309 | 5.85 |

| cot_synthesis_arxiv-low | 7718911399 | 7.72 |

| cot_synthesis_arxiv-mid | 9208148090 | 9.21 |

| cot_synthesis_code-high | 789672525 | 0.79 |

| cot_synthesis_code-low | 417526994 | 0.42 |

| cot_synthesis_code-mid | 197436971 | 0.20 |

| cot_synthesis_math-high | 522900778 | 0.52 |

| cot_synthesis_math-low | 663320643 | 0.66 |

| cot_synthesis_math-mid | 660137084 | 0.66 |

| cot_synthesis_wiki-high | 412152225 | 0.41 |

| cot_synthesis_wiki-low | 367306600 | 0.37 |

| cot_synthesis_wiki-mid | 594421619 | 0.59 |

| math-high | 1871864190 | 1.87 |

| math-low | 1745580082 | 1.75 |

| math-mid | 1680811027 | 1.68 |

| pes2o | 6386997158 | 6.39 |

| pes2o-full-train | 1469110938 | 1.47 |

| pes2o-full-val | 14693152 | 0.01 |

| stack | 435813429 | 0.44 |

| wiki | 433002447 | 0.43 |

| zh_cc-high-loss0 | 1872431176 | 1.87 |

| zh_cc-high-loss1 | 1007405788 | 1.01 |

| zh_cc-high-loss2 | 383830893 | 0.38 |

| zh_cc-medidum-loss0 | 978118384 | 0.98 |

| zh_cc-medidum-loss1 | 951741139 | 0.95 |

| zh_cc-medidum-loss2 | 1096769115 | 1.10 |

Visualize the distribution according to the ratio values in the YAML config.
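The ratio values from the YAML are not reproduced in these notes, so the sketch below uses raw token counts from a handful of table rows as a stand-in and renders their relative shares as a text bar chart. The names `TOKENS`, `shares`, and `text_bars` are hypothetical, and the percentages are shares within the selected subset only, not within the full dataset.

```python
# Token counts copied from five rows of the table above (a subset only).
TOKENS = {
    "cot_synthesis2_arxiv-high": 12894983685,
    "cot_synthesis2_arxiv-mid": 10446468216,
    "pes2o": 6386997158,
    "math-high": 1871864190,
    "wiki": 433002447,
}


def shares(tokens: dict) -> dict:
    """Normalize token counts so that the values sum to 1."""
    total = sum(tokens.values())
    return {name: count / total for name, count in tokens.items()}


def text_bars(tokens: dict, width: int = 40) -> list:
    """Return one line per subset, largest first, with a bar scaled to the largest share."""
    ranked = sorted(shares(tokens).items(), key=lambda kv: kv[1], reverse=True)
    largest = ranked[0][1]
    lines = []
    for name, share in ranked:
        bar = "#" * max(1, round(width * share / largest))
        lines.append(f"{name:<28} {share:6.1%} {bar}")
    return lines


if __name__ == "__main__":
    print("\n".join(text_bars(TOKENS)))
```

Swapping the token counts for the actual ratio values from the training YAML would show the sampling mix rather than the raw data volume.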

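Training

Make sure you have completed the environment installation and configuration described in the previous section. Your OpenSeek folder should look like this: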

OpenSeek

├── OpenSeek-Pretrain-100B (Dataset directory for downloaded datasets.)

├── FlagScale (FlagScale directory cloned from GitHub.)

├── OpenSeek-Small-v1-Baseline (Experiment directory will be created automatically and contains logs and model checkpoints etc.)

├── ...
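Before launching, it can help to check that the expected directories are in place. This is a minimal sketch under the layout shown above; `REQUIRED_DIRS` and `missing_dirs` are hypothetical names, and the experiment directory is deliberately left out because it is created automatically on the first run.

```python
from pathlib import Path

# Directories that should exist before the first run, per the tree above.
# OpenSeek-Small-v1-Baseline is omitted because it is created automatically.
REQUIRED_DIRS = ("OpenSeek-Pretrain-100B", "FlagScale")


def missing_dirs(root: str, required=REQUIRED_DIRS) -> list:
    """Return the names of required directories that are absent under root."""
    base = Path(root)
    return [name for name in required if not (base / name).is_dir()]


if __name__ == "__main__":
    # Run from the OpenSeek root directory.
    missing = missing_dirs(".")
    print("layout OK" if not missing else "missing: " + ", ".join(missing))
```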

Next, you can run the baseline with a single command:

bash openseek/baseline/run_exp.sh start

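This command mainly generates the corresponding training command script from the model configuration and the data configuration.

The model configuration is as follows:

- Note that envs defaults to single-GPU training. To train on multiple GPUs, change nnodes, nproc_per_node, VISIBLE_DEVICES, and DEVICE_MAX_CONNECTIONS as in the configuration below.

- For the training cmds, if you are using the Docker image, set before_start to source /root/miniconda3/bin/activate flagscale-train. This setting controls which conda installation is used and which conda environment is activated.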

# DeepSeek 1_4B, 0_4A Model

defaults:

  - _self_

  - train: train_deepseek_v3_1_4b.yaml

experiment:

  exp_name: OpenSeek-Small-v1-Baseline

  dataset_base_dir: ../OpenSeek-Pretrain-100B

  seed: 42

  save_steps: 600

  load: null

  exp_dir: ${experiment.exp_name}

  ckpt_format: torch

  task:

    type: train

    backend: megatron

    entrypoint: flagscale/train/train_gpt.py

  runner:

    no_shared_fs: false

    backend: torchrun

    rdzv_backend: static

    ssh_port: 22

    # nnodes: 1

    nnodes: 8

    # nproc_per_node: 1

    nproc_per_node: 8

    hostfile: null

  cmds:

    # before_start: "ulimit -n 1048576 && source /root/miniconda3/bin/activate flagscale"

    before_start: "ulimit -n 1048576 && source /root/miniconda3/bin/activate flagscale-train"

    # before_start: "ulimit -n 1048576 && source /data/anaconda3/bin/activate flagscale-train"

  envs:

    # VISIBLE_DEVICES: 0

    # DEVICE_MAX_CONNECTIONS: 1

    VISIBLE_DEVICES: 0,1,2,3,4,5,6,7

    DEVICE_MAX_CONNECTIONS: 8

action: run

hydra:

  run:

    dir: ${experiment.exp_dir}/hydra
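When switching between single-GPU and multi-GPU settings, the runner and envs values need to stay in step. The sketch below illustrates one way to sanity-check them: it computes the total process count as nnodes × nproc_per_node and compares the number of visible devices with nproc_per_node. The function names and the consistency rule are this note's assumptions, not checks that FlagScale itself performs, and the values are passed in as plain dictionaries rather than read from the YAML file.

```python
def parse_devices(visible_devices) -> list:
    """Parse '0,1,2' into [0, 1, 2]; a bare integer such as 0 is also accepted."""
    return [int(part) for part in str(visible_devices).split(",") if part.strip()]


def world_size(nnodes: int, nproc_per_node: int) -> int:
    """Total number of training processes across all nodes."""
    return nnodes * nproc_per_node


def check_runner(runner: dict, envs: dict) -> list:
    """Return a list of human-readable problems (empty if none were found).

    Assumed rule: each process on a node uses exactly one visible device.
    """
    problems = []
    devices = parse_devices(envs["VISIBLE_DEVICES"])
    if len(set(devices)) != len(devices):
        problems.append("VISIBLE_DEVICES lists a device more than once")
    if len(devices) != runner["nproc_per_node"]:
        problems.append(
            f"nproc_per_node={runner['nproc_per_node']} but "
            f"{len(devices)} device(s) are visible"
        )
    return problems


if __name__ == "__main__":
    # Values copied from the configuration above.
    runner = {"nnodes": 8, "nproc_per_node": 8}
    envs = {"VISIBLE_DEVICES": "0,1,2,3,4,5,6,7"}
    print("world size:", world_size(runner["nnodes"], runner["nproc_per_node"]))
    print("problems:", check_runner(runner, envs))
```

With the multi-GPU values shown in the configuration this reports 64 processes and no mismatch. Changing only nproc_per_node while leaving VISIBLE_DEVICES at 0 would be flagged.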

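How to Verify That the Program Is Running Correctly

After running bash openseek/baseline/run_exp.sh start, you can follow the steps below to confirm that your program is running as expected.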

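- Navigate to the OpenSeek root directory. You will notice that a new folder named OpenSeek-Small-v1-Baseline has been created in this directory. This is the log directory.

- You can use a text editor (such as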