FFmpeg, ZLMediaKit, C++, OpenCV, Win10

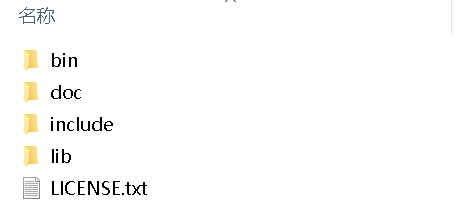

1. On Windows 10, download the FFmpeg libraries and ZLMediaKit. Once the download finishes, the FFmpeg folder looks as shown below.


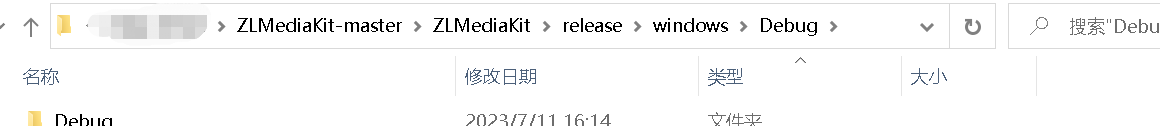

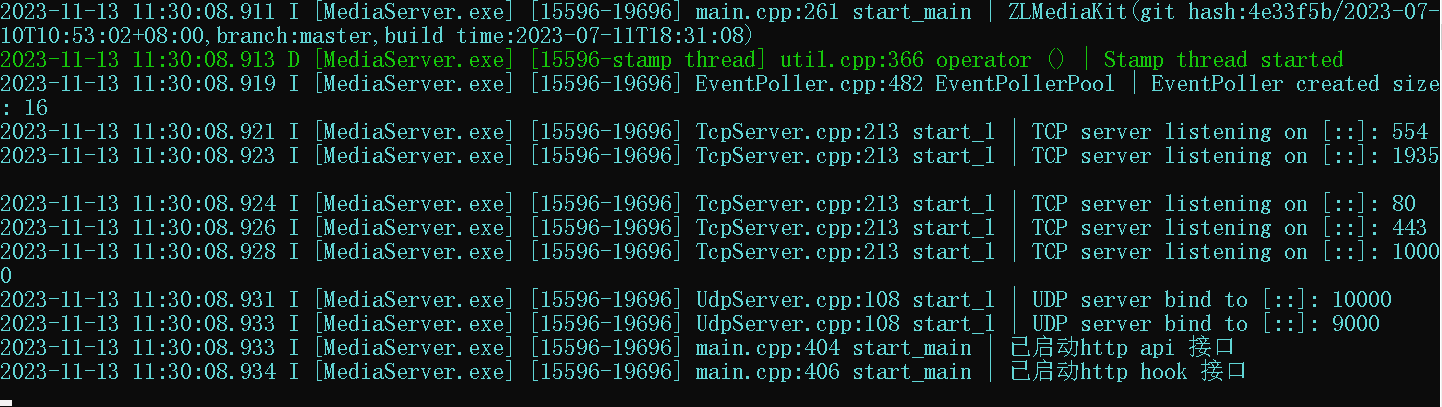

2. Go into the downloaded ZLMediaKit folder, at the path shown in the figure, and start the MediaServer server.


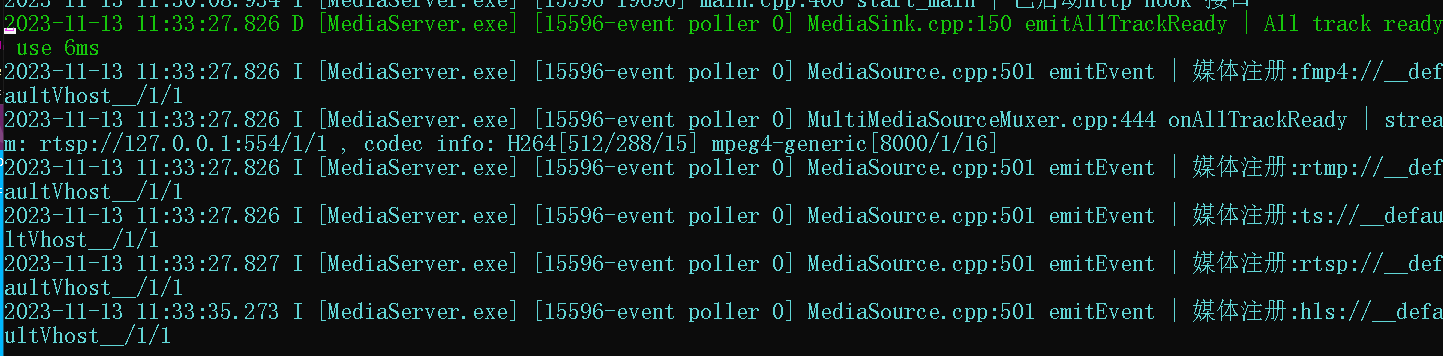

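3. Open a command prompt and use ffmpeg to push a stream to the server. Here cuc.h264 is a video file on the D: drive. Once the push succeeds, the output changes as shown below.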

ffmpeg -re -i D:\edge_down\cuc.h264 -c copy -rtsp_transport tcp -f rtsp rtsp://127.0.0.1/1/1


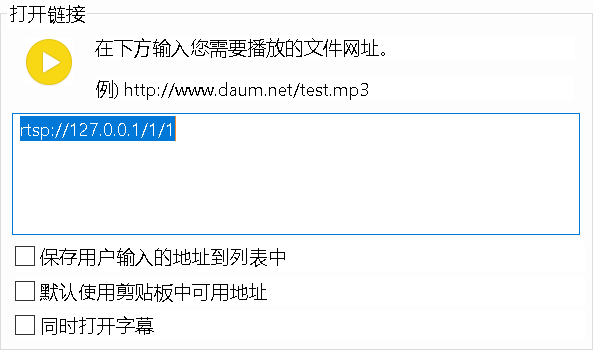
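If the push command has to be generated for different files or stream paths, it can be assembled in code. The sketch below is only an illustration: `build_push_command` is a hypothetical helper, not part of FFmpeg or ZLMediaKit, and it does nothing more than reproduce the flags of the command shown above.

```cpp
#include <string>

// Hypothetical helper: assembles the same ffmpeg push command used above.
// The input path is wrapped in double quotes so that paths with spaces still work.
std::string build_push_command(const std::string& input_file,
                               const std::string& rtsp_url)
{
    std::string cmd = "ffmpeg -re -i \"";
    cmd += input_file;
    cmd += "\" -c copy -rtsp_transport tcp -f rtsp ";
    cmd += rtsp_url;
    return cmd;
}
```

The resulting string could then be passed to `std::system` or printed for copy and paste; which of the two is appropriate depends on the setup.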

4. Use PotPlayer to pull the stream from that address and play the video.
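The push command uses `rtsp://127.0.0.1/1/1`, while the C++ code below opens `rtsp://127.0.0.1:554/1/1`. Both name the same stream, because 554 is the default RTSP port. A minimal sketch of building such an address follows. `make_rtsp_url` is a hypothetical helper, and treating the two path segments as an application name and a stream id is an assumption based on how the URL is laid out here.

```cpp
#include <string>

// Default port assigned to RTSP.
constexpr int kDefaultRtspPort = 554;

// Hypothetical helper: builds rtsp://host[:port]/app/stream.
// The port is left out when it equals the RTSP default.
std::string make_rtsp_url(const std::string& host, int port,
                          const std::string& app, const std::string& stream)
{
    std::string url = "rtsp://" + host;
    if (port != kDefaultRtspPort) {
        url += ":" + std::to_string(port);
    }
    return url + "/" + app + "/" + stream;
}
```

For example, `make_rtsp_url("127.0.0.1", 554, "1", "1")` yields the address used in the push command, which is also the address to enter in PotPlayer.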

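5. Write FFmpeg-based C++ code that pulls the video stream, so that each frame can later be processed further. The project's include settings must point to the FFmpeg libraries downloaded earlier.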

#include <iostream>
#include <string>
#include <windows.h>
#include <opencv2/opencv.hpp>

extern "C" {
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libavutil/avutil.h"
#include "libswscale/swscale.h"
#include "libavutil/imgutils.h"
#include "libavutil/frame.h"
}

// Initialize the fixed parameters.
// t1: address of the input stream.
// Returns nullptr on failure and the opened format context on success.
void* init(std::string t1, AVDictionary* options, AVFormatContext* pFormatCtx, int &videoindex, AVPacket* av_packet, AVFrame* frame,
    AVFrame* framergb, SwsContext* pSwsCtx, AVCodecContext* avc_cxt, uint8_t* buf)
{
    std::string videourl = t1;

    // Perform global initialization of the network libraries.
    // This function only exists to work around thread-safety issues in older GnuTLS or OpenSSL libraries.
    // Once support for those older libraries is removed, it will be deprecated and will serve no purpose.
    avformat_network_init();

    av_dict_set(&options, "buffer_size", "4096", 0);    // buffer size
    av_dict_set(&options, "rtsp_transport", "tcp", 0);  // open the stream over TCP
    av_dict_set(&options, "stimeout", "5000000", 0);    // connection timeout in microseconds (5 s)
    av_dict_set(&options, "max_delay", "500000", 0);    // maximum delay

    // pFormatCtx is allocated in main() with avformat_alloc_context().
    // Open the network stream or file.
    int open_ret = avformat_open_input(&pFormatCtx, videourl.c_str(), NULL, &options);
    av_dict_free(&options); // free the options that were not consumed
    if (open_ret != 0)
    {
        std::cout << "Couldn't open input stream." << std::endl;
        return nullptr;
    }

    // Read the stream information.
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0)
    {
        std::cout << "Couldn't find stream information." << std::endl;
        return nullptr;
    }

    // Print the container metadata.
    std::cout << "av_dict_get:" << std::endl;
    AVDictionaryEntry* tag = NULL;
    // av_dict_set(&pFormatCtx->metadata, "rotate", "0", 0); // properties can be set here
    while ((tag = av_dict_get(pFormatCtx->metadata, "", tag, AV_DICT_IGNORE_SUFFIX)))
    {
        std::string key = tag->key;
        std::string value = tag->value;
        std::cout << "av_dict_get:" << key << ":" << value << std::endl;
    }

    // Look for a video stream in the input.
    unsigned i = 0;
    for (i = 0; i < pFormatCtx->nb_streams; i++)
    {
        if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            videoindex = i;
            break;
        }
    }
    if (videoindex == -1)
    {
        std::cout << "Didn't find a video stream." << std::endl;
        return nullptr;
    }

    // avc_cxt is allocated in main() with avcodec_alloc_context3(NULL).
    if (avcodec_parameters_to_context(avc_cxt, pFormatCtx->streams[videoindex]->codecpar) < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "Could not copy the codec parameters\n");
        return nullptr;
    }

    enum AVCodecID codecId = avc_cxt->codec_id;
    const AVCodec* codec = avcodec_find_decoder(codecId);
    if (!codec) {
        av_log(NULL, AV_LOG_ERROR, "Decoder not found\n");
        return nullptr;
    }

    int ret = avcodec_open2(avc_cxt, codec, NULL);
    if (ret < 0) {
        av_log(NULL, AV_LOG_ERROR, "Could not open the decoder\n");
        return nullptr;
    }

    // av_packet, frame, framergb, buf and pSwsCtx are allocated in main().
    // Size in bytes of one BGR24 frame; buf must be at least this large.
    int PictureSize = av_image_get_buffer_size(AV_PIX_FMT_BGR24, avc_cxt->width,
        avc_cxt->height, 1);

    // Point framergb's data and linesize arrays at buf.
    av_image_fill_arrays(framergb->data, framergb->linesize,
        buf, AV_PIX_FMT_BGR24, avc_cxt->width,
        avc_cxt->height, 1);

    return pFormatCtx;
}

// Read the video stream and convert each frame to cv::Mat.
void read_frame(AVFormatContext* pFormatCtx, int& videoindex, AVPacket* av_packet, AVFrame* frame,
    AVFrame* framergb, SwsContext* pSwsCtx, AVCodecContext* avc_cxt, cv::Mat& mRGB)
{
    unsigned int k = 0;
    while (av_read_frame(pFormatCtx, av_packet) >= 0)
    {
        // Stop after a fixed number of packets.
        if (k > 100000)
            break;
        k++;

        if (av_packet && av_packet->stream_index == videoindex)
        {
            std::cout << "\ndata size is:" << av_packet->size;

            int t1 = avcodec_send_packet(avc_cxt, av_packet);
            int t2 = avcodec_receive_frame(avc_cxt, frame);
            if (t1 >= 0 && t2 >= 0)
            {
                // Convert the decoded YUV420P image to BGR24.
                sws_scale(pSwsCtx, (const uint8_t* const*)frame->data,
                    frame->linesize, 0, avc_cxt->height, framergb->data,
                    framergb->linesize);

                // Let the Mat (created in main() as CV_8UC3) view the converted pixels.
                mRGB.data = (uchar*)framergb->data[0];
                cv::imshow("t", mRGB);
                cv::waitKey(20);
            }
        }
        av_packet_unref(av_packet);
    }
}

// Release the allocated resources.
void release(AVFormatContext* pFormatCtx, AVPacket* av_packet, AVFrame* frame,
    AVFrame* framergb, SwsContext* pSwsCtx, AVCodecContext* avc_cxt, uint8_t* buf)
{
    sws_freeContext(pSwsCtx);
    av_free(buf);
    avcodec_free_context(&avc_cxt);    // frees the codec context together with its internal data
    av_frame_free(&framergb);
    av_frame_free(&frame);
    av_packet_free(&av_packet);        // unreferences the packet before freeing it
    avformat_close_input(&pFormatCtx);
}
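A function like `release` has to be called by hand on every exit path. One alternative is to give each object an owner that frees it automatically, for example `std::unique_ptr` with a custom deleter. The sketch below demonstrates the pattern with a stand-in type so that it compiles without FFmpeg: `FakeFrame`, `fake_frame_alloc` and `fake_frame_free` are hypothetical and only mimic the shape of `av_frame_alloc` and `av_frame_free`.

```cpp
#include <memory>

// Stand-in for an FFmpeg object, so the sketch compiles without FFmpeg.
struct FakeFrame {
    int width = 0;
    int height = 0;
};

int g_live_frames = 0;  // number of frames allocated and not yet freed

// Same shape as av_frame_alloc(): returns an owning raw pointer.
FakeFrame* fake_frame_alloc()
{
    ++g_live_frames;
    return new FakeFrame();
}

// Same shape as av_frame_free(): takes a pointer to the pointer and nulls it.
void fake_frame_free(FakeFrame** f)
{
    if (f && *f) {
        delete *f;
        *f = nullptr;
        --g_live_frames;
    }
}

// Deleter that adapts the pointer-to-pointer free function to unique_ptr.
struct FakeFrameDeleter {
    void operator()(FakeFrame* f) const { fake_frame_free(&f); }
};

using FramePtr = std::unique_ptr<FakeFrame, FakeFrameDeleter>;

// Allocates a frame that frees itself when the FramePtr goes out of scope.
FramePtr make_frame()
{
    return FramePtr(fake_frame_alloc());
}
```

The same adapter shape should carry over to the real types, with the deleter calling `av_frame_free(&f)` or `av_packet_free(&p)`, though that variant is not shown or tested here.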

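int main()
{
    // Holds a single frame in BGR format.
    cv::Mat mRGB;

    AVFormatContext* pFormatCtx = NULL;
    AVPacket* av_packet = NULL; // AVPacket holds the compressed data before decoding
    int videoindex = -1;
    AVCodecContext* avc_cxt{};
    // Decoded frame in the stream's original pixel format.
    AVFrame* frame{};
    // Frame converted to BGR24.
    AVFrame* framergb{};
    uint8_t* buf{};
    SwsContext* pSwsCtx{};
    AVDictionary* options = NULL;

    // Allocate an AVFormatContext and initialize it with default parameters.
    pFormatCtx = avformat_alloc_context();
    avc_cxt = avcodec_alloc_context3(NULL);
    av_packet = av_packet_alloc();
    framergb = av_frame_alloc();
    // This example assumes a 1920x1080 YUV420P stream.
    // 6220800 = 1920 * 1080 * 3 bytes, one BGR24 frame.
    buf = (uint8_t*)av_malloc(6220800);
    frame = av_frame_alloc();
    pSwsCtx = sws_getContext(1920, 1080,
        AV_PIX_FMT_YUV420P,
        1920, 1080, AV_PIX_FMT_BGR24, SWS_BICUBIC, NULL, NULL, NULL);
    mRGB = cv::Mat(cv::Size(1920, 1080), CV_8UC3);

    // "rtsp://192.168.1.120:554/live/1_0"
    if (init("rtsp://127.0.0.1:554/1/1", options, pFormatCtx, videoindex, av_packet,
        frame, framergb, pSwsCtx, avc_cxt, buf) == nullptr)
    {
        // init() has already reported the error. If avformat_open_input() failed,
        // it has freed pFormatCtx, so the pointer is not used again here.
        return -1;
    }

    read_frame(pFormatCtx, videoindex, av_packet, frame, framergb, pSwsCtx, avc_cxt, mRGB);

    release(pFormatCtx, av_packet, frame, framergb, pSwsCtx, avc_cxt, buf);
    return 0;
}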
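The literal 6220800 passed to `av_malloc` in `main` is the size of one 1920x1080 BGR24 frame at three bytes per pixel: 1920 × 1080 × 3 = 6220800. A small sketch that derives the value from the dimensions instead of hard-coding it is given below. `bgr24_frame_bytes` is a hypothetical helper, and it assumes unpadded rows, which matches the alignment of 1 passed to `av_image_fill_arrays` in `init`.

```cpp
#include <cstddef>

// Hypothetical helper: bytes needed for one packed BGR24 frame
// with unpadded rows (3 bytes per pixel).
constexpr std::size_t bgr24_frame_bytes(std::size_t width, std::size_t height)
{
    return width * height * 3;
}

// The value used in main() for a 1920x1080 stream.
static_assert(bgr24_frame_bytes(1920, 1080) == 6220800,
              "1920 * 1080 * 3 should equal 6220800");
```

For streams of another resolution, the buffer size, the `sws_getContext` dimensions and the `cv::Mat` size in `main` would all need to change together.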
