使用requests库爬取豆瓣电影Top250相关数据

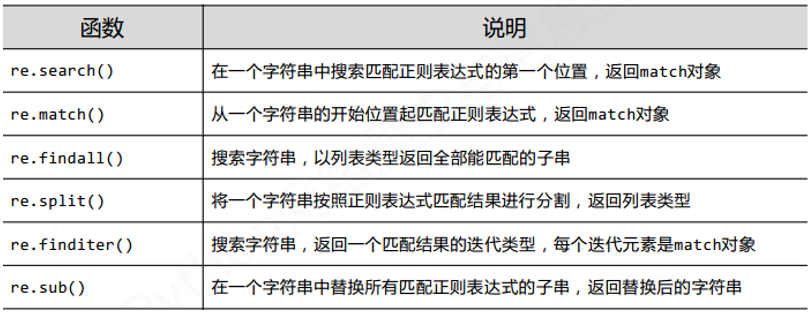

re库

re库的基本使用:https://www.cnblogs.com/ikventure/p/14853758.html

菜鸟教程:https://www.runoob.com/python3/python3-reg-expressions.html

-

findall() 以列表形式返回所有匹配的子串

-

finditer() 以迭代器形式返回所有匹配的子串

-

search() 搜索第一个匹配的子串

-

match() 只在开始位置匹配

-

compile() 可以对正则进行预加载

pat = re.compile(r'\d{3}') res = pat.search('leooooo223') print(res.group()) #223 -

单独提取正则中的内容(分组)

import re

s = """

<div class='messi'><span id='10'>梅西</span></div>

<div class='neymar'><span id='11'>内马尔</span></div>

<div class='kun'><span id='19'>阿圭罗</span></div>

<div class='suarez'><span id='9'>苏亚雷斯</span></div>

<div class='rakitic'><span id='4'>拉基蒂奇</span></div>

"""

# 需求匹配到 messi 10 梅西

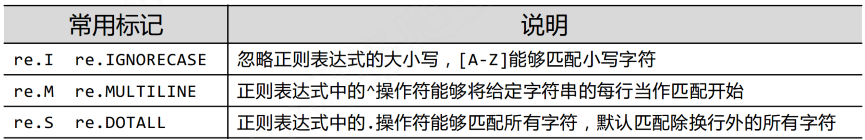

# re.S 让.能匹配换行符

obj1 = re.compile(r"<div class='.*?'><span id='\d+'>.*?</span></div>", re.S)

result1 = obj1.finditer(s)

for i in result1:

print(i.group())

# 在待匹配字符前加上 ?P<分类名称>,并用()括起来,如 <span id='(?P<number>\d+)'>

obj2 = re.compile(r"<div class='.*?'><span id='(?P<number>\d+)'>(?P<ChineseName>.*?)</span></div>", re.S)

result2 = obj2.finditer(s)

for j in result2:

print(j.group("number"), j.group('ChineseName'))

豆瓣电影top250

网址:https://movie.douban.com/top250

每页25条数据,第二页:https://movie.douban.com/top250?start=25&filter=

修改’start=‘后的数字即可获取完整数据。

- 判断网页为服务器渲染还是客户端渲染(查看页面源代码) --服务器

- 获取页面源代码 requests.get()

- 解析数据 re.compile() 通过正则表达式分组匹配

- 匹配数据 finditer

- 展示数据 fstring填充 中文空格 chr(12288)

- 保存数据 csv

import requests

import re

import csv

url = "https://movie.douban.com/top250"

ua = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "

"AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.131 Safari/537.36"

}

page = requests.get(url, headers=ua)

page_source = page.text

pat = re.compile(r'<div class="item">.*?<span class="title">(?P<name>.*?)</span>'

r'.*?<p class="">.*?<br>(?P<year>.*?) '

r'.*?<span class="rating_num" property="v:average">(?P<rate>.*?)</span>'

r'.*?<span>(?P<number>.*?)人评价</span>', re.S)

result = pat.finditer(page_source)

f = open('dbtop250.csv', mode='w', encoding='utf-8', newline='')

csv_writer = csv.writer(f)

for item in result:

"""

显示测试 (电影名称中英文混合时依然会对不齐)

name = item.group("name")

year = item.group("year").strip()

rate = item.group("rate")

number = item.group("number")

print(f"{name:{chr(12288)}<15}{year:<10}{rate:<10}{number:>10}")

"""

dic = item.groupdict()

dic['year'] = dic['year'].strip()

csv_writer.writerow(dic.values())

f.close()

page.close()

print("over!")

优化代码结构,获取完整数据

"""

modified douban movie top250

"""

import requests

import re

import csv

# url = "https://movie.douban.com/top250"

ua = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "

"AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.131 Safari/537.36"

}

def get_page(url):

page = requests.get(url, headers=ua)

page_source = page.text

pat = re.compile(r'<div class="item">.*?<span class="title">(?P<name>.*?)</span>'

r'.*?<p class="">.*?<br>(?P<year>.*?) '

r'.*?<span class="rating_num" property="v:average">(?P<rate>.*?)</span>'

r'.*?<span>(?P<number>.*?)人评价</span>', re.S)

res = pat.finditer(page_source)

page.close()

return res

with open('db250.csv', 'w', encoding='utf-8', newline='') as f:

csv_writer = csv.writer(f)

for start in range(0, 250, 25):

douban_url = f'https://movie.douban.com/top250?start={start}&filter='

result = get_page(douban_url)

for item in result:

dic = item.groupdict()

dic['year'] = dic['year'].strip()

csv_writer.writerow(dic.values())

print("over!")

电影天堂

- 在主页面选择需要获取的版块

- 找到该板块下各电影的链接

- 获取该链接的页面源代码,找到下载地址。

import requests

import re

domain = "https://dytt89.com/"

page = requests.get(domain)

page.encoding = 'gb2312' # page中可以查看charset=gb2312

page_source = page.text

# 定位‘2021必看热片’

pat1 = re.compile(r"2021必看热片.*?<ul>(?P<ul>.*?)</ul>", re.S)

# 定位 超链接 <a href=''>xx</a>

pat2 = re.compile(r"<a href='(?P<link>.*?)'", re.S)

# 定位 片名

pat3 = re.compile(r"◎片 名(?P<name>.*?)<br />", re.S)

# 定位 下载地址

pat4 = re.compile(r'<td style="WORD-WRAP: break-word" bgcolor="#fdfddf"><a href="(?P<download>.*?)">')

res1 = pat1.search(page_source)

ul = res1.group("ul")

res2 = pat2.finditer(ul)

child_url_list = [] # 子页面链接列表

for item2 in res2:

child_link = item2.group('link')

child_url = domain + child_link

child_url_list.append(child_url)

# 提取子页面内容

for url in child_url_list:

child_page = requests.get(url)

child_page.encoding = 'gb2312'

child_page_source = child_page.text

# 部分页面没有片名,换成标题名称

try:

res3 = pat3.search(child_page_source).group("name").strip()

except AttributeError:

pat_back = re.compile(r'<div class="title_all"><h1>(?P<title>.*?)</h1>')

res3 = pat_back.search(child_page_source).group("title").strip()

print(res3)

res4 = pat4.search(child_page_source).group("download")

print(res4)

page.close()

浙公网安备 33010602011771号

浙公网安备 33010602011771号