一个完整的大作业

1.选一个自己感兴趣的主题。

选择广州商学院网页,网址为http://news.gzcc.cn/html/xiaoyuanxinwen/

2.网络上爬取相关的数据。

import requests import re from bs4 import BeautifulSoup url='http://news.gzcc.cn/html/xiaoyuanxinwen/' res=requests.get(url) res.encoding='utf-8' soup=BeautifulSoup(res.text,'html.parser') #获取点击次数 def getclick(newurl): id=re.search('_(.*).html',newurl).group(1).split('/')[1] clickurl='http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(id) click=int(requests.get(clickurl).text.split(".")[-1].lstrip("html('").rstrip("');")) return click #获取内容 def getonpages(listurl): res=requests.get(listurl) res.encoding='utf-8' soup=BeautifulSoup(res.text,'html.parser') for news in soup.select('li'): if len(news.select('.news-list-title'))>0: title=news.select('.news-list-title')[0].text #标题 time=news.select('.news-list-info')[0].contents[0].text#时间 url1=news.select('a')[0]['href'] #url bumen=news.select('.news-list-info')[0].contents[1].text#部门 description=news.select('.news-list-description')[0].text #描述 resd=requests.get(url1) resd.encoding='utf-8' soupd=BeautifulSoup(resd.text,'html.parser') detail=soupd.select('.show-content')[0].text click=getclick(url1) #调用点击次数 print(title,click) count=int(soup.select('.a1')[0].text.rstrip("条")) pages=count//10+1 for i in range(2,4): pagesurl="http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html".format(i) getonpages(pagesurl)

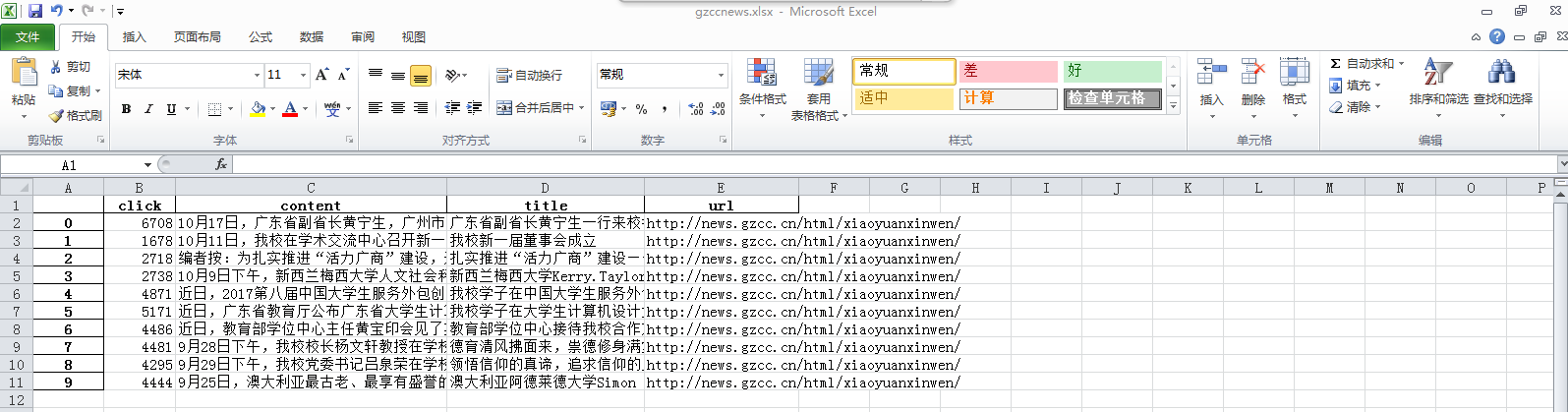

获得数据

import requests import re import pandas from bs4 import BeautifulSoup url = 'http://news.gzcc.cn/html/xiaoyuanxinwen/' res = requests.get(url) res.encoding = 'utf-8' soup = BeautifulSoup(res.text, 'html.parser') # 获取点击次数 def getclick(newurl): id = re.search('_(.*).html', newurl).group(1).split('/')[1] clickurl = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(id) click = int(requests.get(clickurl).text.split(".")[-1].lstrip("html('").rstrip("');")) return click # 给定单挑新闻链接,返回新闻细节的字典 def getdetail(listurl): res = requests.get(listurl) res.encoding = 'utf-8' soup = BeautifulSoup(res.text, 'html.parser') news={} news['url']=url news['title']=soup.select('.show-title')[0].text info = soup.select('.show-info')[0].text #news['dt']=datetime.strptime(info.lstrip('发布时间')[0:19],'%Y-%m-%d %H:%M:') #news['source']=re.search('来源:(.*)点击',info).group(1).strip() news['content']=soup.select('.show-content')[0].text.strip() news['click']=getclick(listurl) return (news) #print(getonpages('http://news.gzcc.cn/html/2017/xiaoyuanxinwen_1017/8338.html')) #给定新闻列表页的链接,返回所有新闻细节字典 def onepage(pageurl): res = requests.get(pageurl) res.encoding = 'utf-8' soup = BeautifulSoup(res.text, 'html.parser') newsls=[] for news in soup.select('li'): if len(news.select('.news-list-title')) > 0: newsls.append(getdetail(news.select('a')[0]['href'])) return(newsls) #print(onepage('http://news.gzcc.cn/html/xiaoyuanxinwen/')) newstotal=[] for i in range(2,3): listurl='http://news.gzcc.cn/html/xiaoyuanxinwen/' newstotal.extend(onepage(listurl)) #print(len(newstotal)) df =pandas.DataFrame(newstotal) #print(df.head()) #print(df['title']) df.to_excel('gzccnews.xlsx') #with aqlite3.connect('gzccnewsdb2.sqlite') as db: #df.to_sql('gzccnewdb2',con=db)

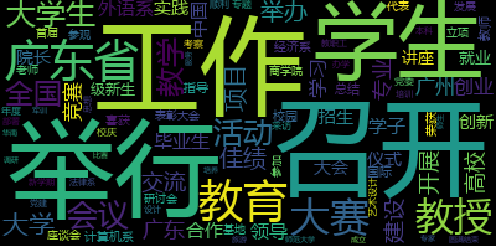

3.进行文本分析,生成词云。

import jieba output = open('D:\\dd.txt', 'a',encoding='utf-8') txt = open('D:\\bb.txt',"r",encoding='utf-8').read() #去除一些出现频率较高且意义不大的词 ex = {'我院','我校','学院','一行'} ls = [] words = jieba.lcut(txt) counts = {} for word in words: ls.append(word) if len(word) == 1 or word in ex: continue else: counts[word] = counts.get(word,0)+1 #for word in ex: # del(word) items = list(counts.items()) items.sort(key = lambda x:x[1], reverse = True) for i in range(100): word , count = items[i] print ("{:<10}{:>5}".format(word,count)) output.write("{:<10}{:>5}".format(word,count) + '\n') output.close()

#coding:utf-8 import jieba from wordcloud import WordCloud import matplotlib.pyplot as plt text =open("D:\\cc.txt",'r',encoding='utf-8').read() print(text) wordlist = jieba.cut(text,cut_all=True) wl_split = "/".join(wordlist) mywc = WordCloud().generate(text) plt.imshow(mywc) plt.axis("off") plt.show()

4.对文本分析结果解释说明。

根据词云分析可知学校的网址以工作与活动为主。

5.写一篇完整的博客,附上源代码、数据爬取及分析结果,形成一个可展示的成果。

浙公网安备 33010602011771号

浙公网安备 33010602011771号