import tensorflow as tf

from tensorflow import keras

import numpy as np

print(tf.__version__)

2.5.0

# The argument num_words=10000 keeps the 10,000 most frequent words in the training data. Rare words are discarded to keep the data size manageable.

imdb=keras.datasets.imdb

(train_data, train_labels), (test_data, test_labels) = imdb.load_data(num_words=10000)

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/imdb.npz

17465344/17464789 [==============================] - 0s 0us/step

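`num_words` works as a simple cutoff because the dataset's word indices are already ordered by frequency. By default `load_data` replaces the dropped words with the out-of-vocabulary index `oov_char=2`. The toy sketch below shows the same idea on a three-sentence corpus; `build_vocab`, `encode` and the reserved indices are hypothetical helpers for illustration, not the Keras code.

```python
from collections import Counter

def build_vocab(texts, num_words, first_index=2):
    """Keep only the `num_words` most frequent words.

    The most frequent word gets `first_index`, the next one `first_index + 1`,
    and so on; lower indices stay free for special tokens.
    """
    counts = Counter(word for text in texts for word in text.split())
    return {
        word: index
        for index, (word, _) in enumerate(counts.most_common(num_words), start=first_index)
    }

PAD_INDEX, OOV_INDEX = 0, 1  # hypothetical reserved indices for this sketch

def encode(text, vocab):
    """Map each word to its index; rare (dropped) words collapse to OOV_INDEX."""
    return [vocab.get(word, OOV_INDEX) for word in text.split()]

corpus = ["the movie was great", "the plot was thin", "the acting was great"]
vocab = build_vocab(corpus, num_words=3)
print(vocab)                                # {'the': 2, 'was': 3, 'great': 4}
print(encode("the movie was thin", vocab))  # [2, 1, 3, 1]
```

Every word outside the top 3 ("movie", "thin") ends up as the same unknown index, which is the information loss `num_words` trades for a smaller embedding table.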

train_data.shape

(25000,)

# Print the length (number of words) of the first 10 reviews
for review in train_data[:10]:
    print(len(review))

218

189

141

550

147

43

123

562

233

130

# Convert integers back to words

# A dictionary mapping words to integer indices

word_index = imdb.get_word_index()

word_index

{'fawn': 34701,

'tsukino': 52006,

'nunnery': 52007,

'sonja': 16816,

'vani': 63951,

'woods': 1408,

'spiders': 16115,

'hanging': 2345,

'woody': 2289,

'trawling': 52008,

"hold's": 52009,

'comically': 11307,

'localized': 40830,

'disobeying': 30568,

"'royale": 52010,

"harpo's": 40831,

'canet': 52011,

'aileen': 19313,

'acurately': 52012,

"diplomat's": 52013,

'rickman': 25242,

'arranged': 6746,

'rumbustious': 52014,

'familiarness': 52015,

"spider'": 52016,

'hahahah': 68804,

"wood'": 52017,

'transvestism': 40833,

"hangin'": 34702,

'bringing': 2338,

'seamier': 40834,

'wooded': 34703,

'bravora': 52018,

'grueling': 16817,

'wooden': 1636,

'wednesday': 16818,

"'prix": 52019,

'altagracia': 34704,

'circuitry': 52020,

'crotch': 11585,

'busybody': 57766,

"tart'n'tangy": 52021,

'burgade': 14129,

'thrace': 52023,

"tom's": 11038,

'snuggles': 52025,

'francesco': 29114,

'complainers': 52027,

'templarios': 52125,

'272': 40835,

'273': 52028,

'zaniacs': 52130,

'275': 34706,

'consenting': 27631,

'snuggled': 40836,

'inanimate': 15492,

'uality': 52030,

'bronte': 11926,

'errors': 4010,

'dialogs': 3230,

"yomada's": 52031,

"madman's": 34707,

'dialoge': 30585,

'usenet': 52033,

'videodrome': 40837,

"kid'": 26338,

'pawed': 52034,

"'girlfriend'": 30569,

"'pleasure": 52035,

"'reloaded'": 52036,

"kazakos'": 40839,

'rocque': 52037,

'mailings': 52038,

'brainwashed': 11927,

'mcanally': 16819,

"tom''": 52039,

'kurupt': 25243,

'affiliated': 21905,

'babaganoosh': 52040,

"noe's": 40840,

'quart': 40841,

'kids': 359,

'uplifting': 5034,

'controversy': 7093,

'kida': 21906,

'kidd': 23379,

"error'": 52041,

'neurologist': 52042,

'spotty': 18510,

'cobblers': 30570,

'projection': 9878,

'fastforwarding': 40842,

'sters': 52043,

"eggar's": 52044,

'etherything': 52045,

'gateshead': 40843,

'airball': 34708,

'unsinkable': 25244,

'stern': 7180,

"cervi's": 52046,

'dnd': 40844,

'dna': 11586,

'insecurity': 20598,

"'reboot'": 52047,

'trelkovsky': 11037,

'jaekel': 52048,

'sidebars': 52049,

"sforza's": 52050,

'distortions': 17633,

'mutinies': 52051,

'sermons': 30602,

'7ft': 40846,

'boobage': 52052,

"o'bannon's": 52053,

'populations': 23380,

'chulak': 52054,

'mesmerize': 27633,

'quinnell': 52055,

'yahoo': 10307,

'meteorologist': 52057,

'beswick': 42577,

'boorman': 15493,

'voicework': 40847,

"ster'": 52058,

'blustering': 22922,

'hj': 52059,

'intake': 27634,

'morally': 5621,

'jumbling': 40849,

'bowersock': 52060,

"'porky's'": 52061,

'gershon': 16821,

'ludicrosity': 40850,

'coprophilia': 52062,

'expressively': 40851,

"india's": 19500,

"post's": 34710,

'wana': 52063,

'wang': 5283,

'wand': 30571,

'wane': 25245,

'edgeways': 52321,

'titanium': 34711,

'pinta': 40852,

'want': 178,

'pinto': 30572,

'whoopdedoodles': 52065,

'tchaikovsky': 21908,

'travel': 2103,

"'victory'": 52066,

'copious': 11928,

'gouge': 22433,

"chapters'": 52067,

'barbra': 6702,

'uselessness': 30573,

"wan'": 52068,

'assimilated': 27635,

'petiot': 16116,

'most\x85and': 52069,

'dinosaurs': 3930,

'wrong': 352,

'seda': 52070,

'stollen': 52071,

'sentencing': 34712,

'ouroboros': 40853,

'assimilates': 40854,

'colorfully': 40855,

'glenne': 27636,

'dongen': 52072,

'subplots': 4760,

'kiloton': 52073,

'chandon': 23381,

"effect'": 34713,

'snugly': 27637,

'kuei': 40856,

'welcomed': 9092,

'dishonor': 30071,

'concurrence': 52075,

'stoicism': 23382,

"guys'": 14896,

"beroemd'": 52077,

'butcher': 6703,

"melfi's": 40857,

'aargh': 30623,

'playhouse': 20599,

'wickedly': 11308,

'fit': 1180,

'labratory': 52078,

'lifeline': 40859,

'screaming': 1927,

'fix': 4287,

'cineliterate': 52079,

'fic': 52080,

'fia': 52081,

'fig': 34714,

'fmvs': 52082,

'fie': 52083,

'reentered': 52084,

'fin': 30574,

'doctresses': 52085,

'fil': 52086,

'zucker': 12606,

'ached': 31931,

'counsil': 52088,

'paterfamilias': 52089,

'songwriter': 13885,

'shivam': 34715,

'hurting': 9654,

'effects': 299,

'slauther': 52090,

"'flame'": 52091,

'sommerset': 52092,

'interwhined': 52093,

'whacking': 27638,

'bartok': 52094,

'barton': 8775,

'frewer': 21909,

"fi'": 52095,

'ingrid': 6192,

'stribor': 30575,

'approporiately': 52096,

'wobblyhand': 52097,

'tantalisingly': 52098,

'ankylosaurus': 52099,

'parasites': 17634,

'childen': 52100,

"jenkins'": 52101,

'metafiction': 52102,

'golem': 17635,

'indiscretion': 40860,

"reeves'": 23383,

"inamorata's": 57781,

'brittannica': 52104,

'adapt': 7916,

"russo's": 30576,

'guitarists': 48246,

'abbott': 10553,

'abbots': 40861,

'lanisha': 17649,

'magickal': 40863,

'mattter': 52105,

"'willy": 52106,

'pumpkins': 34716,

'stuntpeople': 52107,

'estimate': 30577,

'ugghhh': 40864,

'gameplay': 11309,

"wern't": 52108,

"n'sync": 40865,

'sickeningly': 16117,

'chiara': 40866,

'disturbed': 4011,

'portmanteau': 40867,

'ineffectively': 52109,

"duchonvey's": 82143,

"nasty'": 37519,

'purpose': 1285,

'lazers': 52112,

'lightened': 28105,

'kaliganj': 52113,

'popularism': 52114,

"damme's": 18511,

'stylistics': 30578,

'mindgaming': 52115,

'spoilerish': 46449,

"'corny'": 52117,

'boerner': 34718,

'olds': 6792,

'bakelite': 52118,

'renovated': 27639,

'forrester': 27640,

"lumiere's": 52119,

'gaskets': 52024,

'needed': 884,

'smight': 34719,

'master': 1297,

"edie's": 25905,

'seeber': 40868,

'hiya': 52120,

'fuzziness': 52121,

'genesis': 14897,

'rewards': 12607,

'enthrall': 30579,

"'about": 40869,

"recollection's": 52122,

'mutilated': 11039,

'fatherlands': 52123,

"fischer's": 52124,

'positively': 5399,

'270': 34705,

'ahmed': 34720,

'zatoichi': 9836,

'bannister': 13886,

'anniversaries': 52127,

"helm's": 30580,

"'work'": 52128,

'exclaimed': 34721,

"'unfunny'": 52129,

'274': 52029,

'feeling': 544,

"wanda's": 52131,

'dolan': 33266,

'278': 52133,

'peacoat': 52134,

'brawny': 40870,

'mishra': 40871,

'worlders': 40872,

'protags': 52135,

'skullcap': 52136,

'dastagir': 57596,

'affairs': 5622,

'wholesome': 7799,

'hymen': 52137,

'paramedics': 25246,

'unpersons': 52138,

'heavyarms': 52139,

'affaire': 52140,

'coulisses': 52141,

'hymer': 40873,

'kremlin': 52142,

'shipments': 30581,

'pixilated': 52143,

"'00s": 30582,

'diminishing': 18512,

'cinematic': 1357,

'resonates': 14898,

'simplify': 40874,

"nature'": 40875,

'temptresses': 40876,

'reverence': 16822,

'resonated': 19502,

'dailey': 34722,

'2\x85': 52144,

'treize': 27641,

'majo': 52145,

'kiya': 21910,

'woolnough': 52146,

'thanatos': 39797,

'sandoval': 35731,

'dorama': 40879,

"o'shaughnessy": 52147,

'tech': 4988,

'fugitives': 32018,

'teck': 30583,

"'e'": 76125,

'doesn’t': 40881,

'purged': 52149,

'saying': 657,

"martians'": 41095,

'norliss': 23418,

'dickey': 27642,

'dicker': 52152,

"'sependipity": 52153,

'padded': 8422,

'ordell': 57792,

"sturges'": 40882,

'independentcritics': 52154,

'tempted': 5745,

"atkinson's": 34724,

'hounded': 25247,

'apace': 52155,

'clicked': 15494,

"'humor'": 30584,

"martino's": 17177,

"'supporting": 52156,

'warmongering': 52032,

"zemeckis's": 34725,

'lube': 21911,

'shocky': 52157,

'plate': 7476,

'plata': 40883,

'sturgess': 40884,

"nerds'": 40885,

'plato': 20600,

'plath': 34726,

'platt': 40886,

'mcnab': 52159,

'clumsiness': 27643,

'altogether': 3899,

'massacring': 42584,

'bicenntinial': 52160,

'skaal': 40887,

'droning': 14360,

'lds': 8776,

'jaguar': 21912,

"cale's": 34727,

'nicely': 1777,

'mummy': 4588,

"lot's": 18513,

'patch': 10086,

'kerkhof': 50202,

"leader's": 52161,

"'movie": 27644,

'uncomfirmed': 52162,

'heirloom': 40888,

'wrangle': 47360,

'emotion\x85': 52163,

"'stargate'": 52164,

'pinoy': 40889,

'conchatta': 40890,

'broeke': 41128,

'advisedly': 40891,

"barker's": 17636,

'descours': 52166,

'lots': 772,

'lotr': 9259,

'irs': 9879,

'lott': 52167,

'xvi': 40892,

'irk': 34728,

'irl': 52168,

'ira': 6887,

'belzer': 21913,

'irc': 52169,

'ire': 27645,

'requisites': 40893,

'discipline': 7693,

'lyoko': 52961,

'extend': 11310,

'nature': 873,

"'dickie'": 52170,

'optimist': 40894,

'lapping': 30586,

'superficial': 3900,

'vestment': 52171,

'extent': 2823,

'tendons': 52172,

"heller's": 52173,

'quagmires': 52174,

'miyako': 52175,

'moocow': 20601,

"coles'": 52176,

'lookit': 40895,

'ravenously': 52177,

'levitating': 40896,

'perfunctorily': 52178,

'lookin': 30587,

"lot'": 40898,

'lookie': 52179,

'fearlessly': 34870,

'libyan': 52181,

'fondles': 40899,

'gopher': 35714,

'wearying': 40901,

"nz's": 52182,

'minuses': 27646,

'puposelessly': 52183,

'shandling': 52184,

'decapitates': 31268,

'humming': 11929,

"'nother": 40902,

'smackdown': 21914,

'underdone': 30588,

'frf': 40903,

'triviality': 52185,

'fro': 25248,

'bothers': 8777,

"'kensington": 52186,

'much': 73,

'muco': 34730,

'wiseguy': 22615,

"richie's": 27648,

'tonino': 40904,

'unleavened': 52187,

'fry': 11587,

"'tv'": 40905,

'toning': 40906,

'obese': 14361,

'sensationalized': 30589,

'spiv': 40907,

'spit': 6259,

'arkin': 7364,

'charleton': 21915,

'jeon': 16823,

'boardroom': 21916,

'doubts': 4989,

'spin': 3084,

'hepo': 53083,

'wildcat': 27649,

'venoms': 10584,

'misconstrues': 52191,

'mesmerising': 18514,

'misconstrued': 40908,

'rescinds': 52192,

'prostrate': 52193,

'majid': 40909,

'climbed': 16479,

'canoeing': 34731,

'majin': 52195,

'animie': 57804,

'sylke': 40910,

'conditioned': 14899,

'waddell': 40911,

'3\x85': 52196,

'hyperdrive': 41188,

'conditioner': 34732,

'bricklayer': 53153,

'hong': 2576,

'memoriam': 52198,

'inventively': 30592,

"levant's": 25249,

'portobello': 20638,

'remand': 52200,

'mummified': 19504,

'honk': 27650,

'spews': 19505,

'visitations': 40912,

'mummifies': 52201,

'cavanaugh': 25250,

'zeon': 23385,

"jungle's": 40913,

'viertel': 34733,

'frenchmen': 27651,

'torpedoes': 52202,

'schlessinger': 52203,

'torpedoed': 34734,

'blister': 69876,

'cinefest': 52204,

'furlough': 34735,

'mainsequence': 52205,

'mentors': 40914,

'academic': 9094,

'stillness': 20602,

'academia': 40915,

'lonelier': 52206,

'nibby': 52207,

"losers'": 52208,

'cineastes': 40916,

'corporate': 4449,

'massaging': 40917,

'bellow': 30593,

'absurdities': 19506,

'expetations': 53241,

'nyfiken': 40918,

'mehras': 75638,

'lasse': 52209,

'visability': 52210,

'militarily': 33946,

"elder'": 52211,

'gainsbourg': 19023,

'hah': 20603,

'hai': 13420,

'haj': 34736,

'hak': 25251,

'hal': 4311,

'ham': 4892,

'duffer': 53259,

'haa': 52213,

'had': 66,

'advancement': 11930,

'hag': 16825,

"hand'": 25252,

'hay': 13421,

'mcnamara': 20604,

"mozart's": 52214,

'duffel': 30731,

'haq': 30594,

'har': 13887,

'has': 44,

'hat': 2401,

'hav': 40919,

'haw': 30595,

'figtings': 52215,

'elders': 15495,

'underpanted': 52216,

'pninson': 52217,

'unequivocally': 27652,

"barbara's": 23673,

"bello'": 52219,

'indicative': 12997,

'yawnfest': 40920,

'hexploitation': 52220,

"loder's": 52221,

'sleuthing': 27653,

"justin's": 32622,

"'ball": 52222,

"'summer": 52223,

"'demons'": 34935,

"mormon's": 52225,

"laughton's": 34737,

'debell': 52226,

'shipyard': 39724,

'unabashedly': 30597,

'disks': 40401,

'crowd': 2290,

'crowe': 10087,

"vancouver's": 56434,

'mosques': 34738,

'crown': 6627,

'culpas': 52227,

'crows': 27654,

'surrell': 53344,

'flowless': 52229,

'sheirk': 52230,

"'three": 40923,

"peterson'": 52231,

'ooverall': 52232,

'perchance': 40924,

'bottom': 1321,

'chabert': 53363,

'sneha': 52233,

'inhuman': 13888,

'ichii': 52234,

'ursla': 52235,

'completly': 30598,

'moviedom': 40925,

'raddick': 52236,

'brundage': 51995,

'brigades': 40926,

'starring': 1181,

"'goal'": 52237,

'caskets': 52238,

'willcock': 52239,

"threesome's": 52240,

"mosque'": 52241,

"cover's": 52242,

'spaceships': 17637,

'anomalous': 40927,

'ptsd': 27655,

'shirdan': 52243,

'obscenity': 21962,

'lemmings': 30599,

'duccio': 30600,

"levene's": 52244,

"'gorby'": 52245,

"teenager's": 25255,

'marshall': 5340,

'honeymoon': 9095,

'shoots': 3231,

'despised': 12258,

'okabasho': 52246,

'fabric': 8289,

'cannavale': 18515,

'raped': 3537,

"tutt's": 52247,

'grasping': 17638,

'despises': 18516,

"thief's": 40928,

'rapes': 8926,

'raper': 52248,

"eyre'": 27656,

'walchek': 52249,

"elmo's": 23386,

'perfumes': 40929,

'spurting': 21918,

"exposition'\x85": 52250,

'denoting': 52251,

'thesaurus': 34740,

"shoot'": 40930,

'bonejack': 49759,

'simpsonian': 52253,

'hebetude': 30601,

"hallow's": 34741,

'desperation\x85': 52254,

'incinerator': 34742,

'congratulations': 10308,

'humbled': 52255,

"else's": 5924,

'trelkovski': 40845,

"rape'": 52256,

"'chapters'": 59386,

'1600s': 52257,

'martian': 7253,

'nicest': 25256,

'eyred': 52259,

'passenger': 9457,

'disgrace': 6041,

'moderne': 52260,

'barrymore': 5120,

'yankovich': 52261,

'moderns': 40931,

'studliest': 52262,

'bedsheet': 52263,

'decapitation': 14900,

'slurring': 52264,

"'nunsploitation'": 52265,

"'character'": 34743,

'cambodia': 9880,

'rebelious': 52266,

'pasadena': 27657,

'crowne': 40932,

"'bedchamber": 52267,

'conjectural': 52268,

'appologize': 52269,

'halfassing': 52270,

'paycheque': 57816,

'palms': 20606,

"'islands": 52271,

'hawked': 40933,

'palme': 21919,

'conservatively': 40934,

'larp': 64007,

'palma': 5558,

'smelling': 21920,

'aragorn': 12998,

'hawker': 52272,

'hawkes': 52273,

'explosions': 3975,

'loren': 8059,

"pyle's": 52274,

'shootout': 6704,

"mike's": 18517,

"driscoll's": 52275,

'cogsworth': 40935,

"britian's": 52276,

'childs': 34744,

"portrait's": 52277,

'chain': 3626,

'whoever': 2497,

'puttered': 52278,

'childe': 52279,

'maywether': 52280,

'chair': 3036,

"rance's": 52281,

'machu': 34745,

'ballet': 4517,

'grapples': 34746,

'summerize': 76152,

'freelance': 30603,

"andrea's": 52283,

'\x91very': 52284,

'coolidge': 45879,

'mache': 18518,

'balled': 52285,

'grappled': 40937,

'macha': 18519,

'underlining': 21921,

'macho': 5623,

'oversight': 19507,

'machi': 25257,

'verbally': 11311,

'tenacious': 21922,

'windshields': 40938,

'paychecks': 18557,

'jerk': 3396,

"good'": 11931,

'prancer': 34748,

'prances': 21923,

'olympus': 52286,

'lark': 21924,

'embark': 10785,

'gloomy': 7365,

'jehaan': 52287,

'turaqui': 52288,

"child'": 20607,

'locked': 2894,

'pranced': 52289,

'exact': 2588,

'unattuned': 52290,

'minute': 783,

'skewed': 16118,

'hodgins': 40940,

'skewer': 34749,

'think\x85': 52291,

'rosenstein': 38765,

'helmit': 52292,

'wrestlemanias': 34750,

'hindered': 16826,

"martha's": 30604,

'cheree': 52293,

"pluckin'": 52294,

'ogles': 40941,

'heavyweight': 11932,

'aada': 82190,

'chopping': 11312,

'strongboy': 61534,

'hegemonic': 41342,

'adorns': 40942,

'xxth': 41346,

'nobuhiro': 34751,

'capitães': 52298,

'kavogianni': 52299,

'antwerp': 13422,

'celebrated': 6538,

'roarke': 52300,

'baggins': 40943,

'cheeseburgers': 31270,

'matras': 52301,

"nineties'": 52302,

"'craig'": 52303,

'celebrates': 12999,

'unintentionally': 3383,

'drafted': 14362,

'climby': 52304,

'303': 52305,

'oldies': 18520,

'climbs': 9096,

'honour': 9655,

'plucking': 34752,

'305': 30074,

'address': 5514,

'menjou': 40944,

"'freak'": 42592,

'dwindling': 19508,

'benson': 9458,

'white’s': 52307,

'shamelessness': 40945,

'impacted': 21925,

'upatz': 52308,

'cusack': 3840,

"flavia's": 37567,

'effette': 52309,

'influx': 34753,

'boooooooo': 52310,

'dimitrova': 52311,

'houseman': 13423,

'bigas': 25259,

'boylen': 52312,

'phillipenes': 52313,

'fakery': 40946,

"grandpa's": 27658,

'darnell': 27659,

'undergone': 19509,

'handbags': 52315,

'perished': 21926,

'pooped': 37778,

'vigour': 27660,

'opposed': 3627,

'etude': 52316,

"caine's": 11799,

'doozers': 52317,

'photojournals': 34754,

'perishes': 52318,

'constrains': 34755,

'migenes': 40948,

'consoled': 30605,

'alastair': 16827,

'wvs': 52319,

'ooooooh': 52320,

'approving': 34756,

'consoles': 40949,

'disparagement': 52064,

'futureistic': 52322,

'rebounding': 52323,

"'date": 52324,

'gregoire': 52325,

'rutherford': 21927,

'americanised': 34757,

'novikov': 82196,

'following': 1042,

'munroe': 34758,

"morita'": 52326,

'christenssen': 52327,

'oatmeal': 23106,

'fossey': 25260,

'livered': 40950,

'listens': 13000,

"'marci": 76164,

"otis's": 52330,

'thanking': 23387,

'maude': 16019,

'extensions': 34759,

'ameteurish': 52332,

"commender's": 52333,

'agricultural': 27661,

'convincingly': 4518,

'fueled': 17639,

'mahattan': 54014,

"paris's": 40952,

'vulkan': 52336,

'stapes': 52337,

'odysessy': 52338,

'harmon': 12259,

'surfing': 4252,

'halloran': 23494,

'unbelieveably': 49580,

"'offed'": 52339,

'quadrant': 30607,

'inhabiting': 19510,

'nebbish': 34760,

'forebears': 40953,

'skirmish': 34761,

'ocassionally': 52340,

"'resist": 52341,

'impactful': 21928,

'spicier': 52342,

'touristy': 40954,

"'football'": 52343,

'webpage': 40955,

'exurbia': 52345,

'jucier': 52346,

'professors': 14901,

'structuring': 34762,

'jig': 30608,

'overlord': 40956,

'disconnect': 25261,

'sniffle': 82201,

'slimeball': 40957,

'jia': 40958,

'milked': 16828,

'banjoes': 40959,

'jim': 1237,

'workforces': 52348,

'jip': 52349,

'rotweiller': 52350,

'mundaneness': 34763,

"'ninja'": 52351,

"dead'": 11040,

"cipriani's": 40960,

'modestly': 20608,

"professor'": 52352,

'shacked': 40961,

'bashful': 34764,

'sorter': 23388,

'overpowering': 16120,

'workmanlike': 18521,

'henpecked': 27662,

'sorted': 18522,

"jōb's": 52354,

"'always": 52355,

"'baptists": 34765,

'dreamcatchers': 52356,

"'silence'": 52357,

'hickory': 21929,

'fun\x97yet': 52358,

'breakumentary': 52359,

'didn': 15496,

'didi': 52360,

'pealing': 52361,

'dispite': 40962,

"italy's": 25262,

'instability': 21930,

'quarter': 6539,

'quartet': 12608,

'padmé': 52362,

"'bleedmedry": 52363,

'pahalniuk': 52364,

'honduras': 52365,

'bursting': 10786,

"pablo's": 41465,

'irremediably': 52367,

'presages': 40963,

'bowlegged': 57832,

'dalip': 65183,

'entering': 6260,

'newsradio': 76172,

'presaged': 54150,

"giallo's": 27663,

'bouyant': 40964,

'amerterish': 52368,

'rajni': 18523,

'leeves': 30610,

'macauley': 34767,

'seriously': 612,

'sugercoma': 52369,

'grimstead': 52370,

"'fairy'": 52371,

'zenda': 30611,

"'twins'": 52372,

'realisation': 17640,

'highsmith': 27664,

'raunchy': 7817,

'incentives': 40965,

'flatson': 52374,

'snooker': 35097,

'crazies': 16829,

'crazier': 14902,

'grandma': 7094,

'napunsaktha': 52375,

'workmanship': 30612,

'reisner': 52376,

"sanford's": 61306,

'\x91doña': 52377,

'modest': 6108,

"everything's": 19153,

'hamer': 40966,

"couldn't'": 52379,

'quibble': 13001,

'socking': 52380,

'tingler': 21931,

'gutman': 52381,

'lachlan': 40967,

'tableaus': 52382,

'headbanger': 52383,

'spoken': 2847,

'cerebrally': 34768,

"'road": 23490,

'tableaux': 21932,

"proust's": 40968,

'periodical': 40969,

"shoveller's": 52385,

'tamara': 25263,

'affords': 17641,

'concert': 3249,

"yara's": 87955,

'someome': 52386,

'lingering': 8424,

"abraham's": 41511,

'beesley': 34769,

'cherbourg': 34770,

'kagan': 28624,

'snatch': 9097,

"miyazaki's": 9260,

'absorbs': 25264,

"koltai's": 40970,

'tingled': 64027,

'crossroads': 19511,

'rehab': 16121,

'falworth': 52389,

'sequals': 52390,

...}

word_index = {k:(v+3) for k,v in word_index.items()}

word_index["<PAD>"] = 0

word_index["<START>"] = 1

word_index["<UNK>"] = 2 # unknown

word_index["<UNUSED>"] = 3

reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

# Decoding function: map each integer back to its word ('?' if the index is unknown)

def decode_review(text):
    return " ".join([reverse_word_index.get(i, '?') for i in text])

decode_review(train_data[0])

"<START> this film was just brilliant casting location scenery story direction everyone's really suited the part they played and you could just imagine being there robert <UNK> is an amazing actor and now the same being director <UNK> father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for <UNK> and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and this definitely was also <UNK> to the two little boy's that played the <UNK> of norman and paul they were just brilliant children are often left out of the <UNK> list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don't you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all"
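By default `imdb.load_data` uses `start_char=1`, `oov_char=2` and `index_from=3`, which is why every rank from `get_word_index()` is shifted by 3 before the special tokens are added. A round trip on a small hypothetical dictionary shows how encoding and decoding line up; the `toy_` names are illustrative only and chosen so they do not overwrite the notebook's `word_index` or `decode_review`.

```python
# Hypothetical raw index shaped like imdb.get_word_index(): word -> frequency rank (1 = most frequent)
toy_raw_index = {"the": 1, "and": 2, "film": 3, "great": 4}

# Shift every rank by 3 so indices 0-3 are free for the special tokens
toy_word_index = {word: rank + 3 for word, rank in toy_raw_index.items()}
toy_word_index.update({"<PAD>": 0, "<START>": 1, "<UNK>": 2, "<UNUSED>": 3})

# Invert the mapping: integer index -> word
toy_reverse_index = {index: word for word, index in toy_word_index.items()}

def toy_encode(words):
    """Prepend <START>; words missing from the dictionary become <UNK>."""
    unk = toy_word_index["<UNK>"]
    return [toy_word_index["<START>"]] + [toy_word_index.get(w, unk) for w in words]

def toy_decode(indices):
    """Same logic as decode_review: unknown indices are shown as '?'."""
    return " ".join(toy_reverse_index.get(i, "?") for i in indices)

encoded = toy_encode("the film was great".split())
print(encoded)              # [1, 4, 6, 2, 7]
print(toy_decode(encoded))  # <START> the film <UNK> great
```

This is also why the decoded review above contains `<UNK>` tokens: those words fell outside the 10,000-word vocabulary.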

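# All reviews must have the same length before they can be batched into a tensor, so we use pad_sequences to standardize their lengths:

# Long sequences are truncated, short ones are padded with 0

# sequences: a list of lists, each inner list a sequence of integers or floats

# maxlen: None or int, the maximum sequence length. Longer sequences are truncated and shorter ones are padded (at the start by default, or at the end with padding="post").

# dtype: data type of the returned NumPy array.

# padding: "pre" or "post", whether to pad at the start or at the end of each sequence.

# truncating: "pre" or "post", whether to remove values from the start or from the end of sequences longer than maxlen.

# value: float or string, the padding value (default 0).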

train_data = tf.keras.preprocessing.sequence.pad_sequences(
    train_data,
    value=word_index["<PAD>"],
    padding="post",
    maxlen=256,
)

test_data = tf.keras.preprocessing.sequence.pad_sequences(
    test_data,
    value=word_index["<PAD>"],
    padding="post",
    maxlen=256,
)
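To make the `padding` and `truncating` options concrete, here is a simplified NumPy re-implementation using the Keras defaults `padding="pre"`, `truncating="pre"`, `value=0`. `pad_sketch` is a hypothetical helper, not the Keras function; it ignores `maxlen=None` and non-integer data.

```python
import numpy as np

def pad_sketch(sequences, maxlen, padding="pre", truncating="pre", value=0):
    """Return an int32 array of shape (len(sequences), maxlen)."""
    out = np.full((len(sequences), maxlen), value, dtype="int32")
    for row, seq in enumerate(sequences):
        # "pre" drops values from the start (keeps the last maxlen), "post" from the end
        seq = seq[-maxlen:] if truncating == "pre" else seq[:maxlen]
        if len(seq) == 0:
            continue  # an empty sequence stays all padding
        if padding == "post":
            out[row, :len(seq)] = seq   # values first, padding after
        else:
            out[row, -len(seq):] = seq  # padding first, values after
    return out

seqs = [[1, 2, 3, 4, 5], [6, 7]]
print(pad_sketch(seqs, 4).tolist())                  # [[2, 3, 4, 5], [0, 0, 6, 7]]
print(pad_sketch(seqs, 4, padding="post").tolist())  # [[2, 3, 4, 5], [6, 7, 0, 0]]
print(pad_sketch(seqs, 4, padding="post", truncating="post").tolist())  # [[1, 2, 3, 4], [6, 7, 0, 0]]
```

With `padding="post"`, as used in the notebook, the review text comes first and `<PAD>` (index 0) fills the tail.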

decode_review(train_data[0])

"<START> this film was just brilliant casting location scenery story direction everyone's really suited the part they played and you could just imagine being there robert <UNK> is an amazing actor and now the same being director <UNK> father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for <UNK> and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and this definitely was also <UNK> to the two little boy's that played the <UNK> of norman and paul they were just brilliant children are often left out of the <UNK> list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don't you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD> <PAD>"

for i in range(10):
    print(len(train_data[i]))

256

256

256

256

256

256

256

256

256

256

# Build the model (a simple MLP)

vocab_size=10000

model=keras.Sequential()

# keras.layers.Embedding(input_dim, output_dim, embeddings_initializer='uniform', embeddings_regularizer=None, activity_regularizer=None, embeddings_constraint=None, mask_zero=False, input_length=None)

#https://keras.io/zh/layers/embeddings/

model.add(keras.layers.Embedding(vocab_size,16))

model.add(keras.layers.GlobalAveragePooling1D())

model.add(keras.layers.Dense(16,activation='relu'))

model.add(keras.layers.Dense(1,activation='sigmoid'))

model.summary()

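# The first layer is an Embedding layer. It takes the integer-encoded vocabulary and looks up the embedding vector for each word index. These vectors are learned as the model trains, and they add a dimension to the output array, giving the shape (batch, sequence, embedding).

# Next, GlobalAveragePooling1D returns a fixed-length output vector for each example by averaging over the sequence dimension. This lets the model handle variable-length input in the simplest way possible.

# This fixed-length output vector is passed through a fully connected (Dense) layer with 16 hidden units.

# The last layer is densely connected to a single output node. With the sigmoid activation, its output is a float between 0 and 1, representing a probability or confidence level.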

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding (Embedding)        (None, None, 16)          160000
_________________________________________________________________
global_average_pooling1d (Gl (None, 16)                0
_________________________________________________________________
dense (Dense)                (None, 16)                272
_________________________________________________________________
dense_1 (Dense)              (None, 1)                 17
=================================================================
Total params: 160,289
Trainable params: 160,289
Non-trainable params: 0
_________________________________________________________________
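The shape flow described above can be traced with plain NumPy. The sketch uses random weights (no training) and a hypothetical batch of 4 reviews; it mirrors only the layer arithmetic, not Keras internals such as initializers. Because this model's `Embedding` does not set `mask_zero=True`, the average is taken over all 256 positions, `<PAD>` included. The last lines reproduce the parameter counts from `model.summary()`.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, embed_dim, hidden, seq_len, batch = 10000, 16, 16, 256, 4

# Random weights standing in for the (untrained) layers
E = rng.normal(size=(vocab_size, embed_dim))                     # Embedding table
W1, b1 = rng.normal(size=(embed_dim, hidden)), np.zeros(hidden)  # Dense(16, relu)
W2, b2 = rng.normal(size=(hidden, 1)), np.zeros(1)               # Dense(1, sigmoid)

x = rng.integers(0, vocab_size, size=(batch, seq_len))  # integer-encoded reviews

emb = E[x]                           # embedding lookup -> (batch, sequence, embedding)
pooled = emb.mean(axis=1)            # GlobalAveragePooling1D -> (batch, embedding)
h = np.maximum(0, pooled @ W1 + b1)  # ReLU hidden layer -> (batch, 16)
logits = h @ W2 + b2                 # (batch, 1)
probs = 1 / (1 + np.exp(-logits))    # sigmoid -> values in (0, 1)

print(emb.shape, pooled.shape, h.shape, probs.shape)  # (4, 256, 16) (4, 16) (4, 16) (4, 1)

# Parameters per layer: weights + biases (the embedding has no bias)
params = [vocab_size * embed_dim, embed_dim * hidden + hidden, hidden * 1 + 1]
print(params, sum(params))  # [160000, 272, 17] 160289
```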

model.compile(optimizer='adam',

loss='binary_crossentropy',

metrics=['accuracy'])
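`binary_crossentropy` and `accuracy` compare the sigmoid output p with the 0/1 label y; per example the loss is -[y·log(p) + (1 - y)·log(1 - p)]. A minimal NumPy version of both (our own helper functions, with a small clipping epsilon similar in spirit to what Keras does to avoid log(0)):

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over all examples."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(y_pred, dtype=float), eps, 1 - eps)  # keep log() finite
    return float(np.mean(-(y * np.log(p) + (1 - y) * np.log(1 - p))))

def accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of examples whose thresholded prediction equals the label."""
    return float(np.mean((np.asarray(y_pred) > threshold) == np.asarray(y_true)))

labels = [1, 0, 1, 0]
predictions = [0.9, 0.1, 0.6, 0.4]
loss = binary_crossentropy(labels, predictions)
acc = accuracy(labels, predictions)
print(round(loss, 4), acc)  # 0.3081 1.0
```

Both predictions of 0.6 and 0.4 are "correct" for accuracy, yet they contribute far more loss than 0.9 and 0.1, which is why the loss keeps changing even when accuracy plateaus.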

batch_size=512

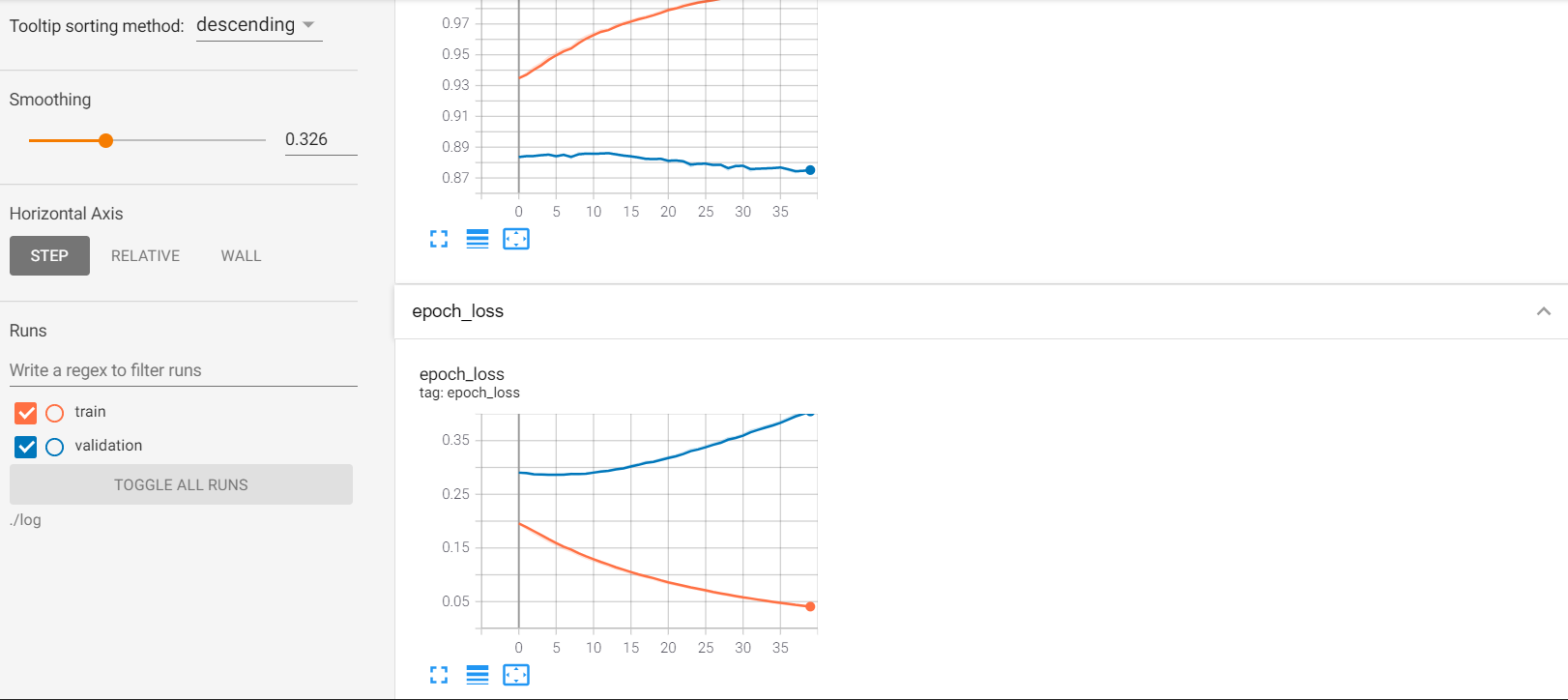

# Import TensorBoard

from tensorflow.keras.callbacks import TensorBoard

# write_grads and batch_size are ignored by the TF 2.x TensorBoard callback, so they are omitted
tbCallBack = TensorBoard(log_dir='./log', histogram_freq=1,
                         write_graph=True,
                         write_images=True)

x_val = train_data[:10000]

partial_x_train = train_data[10000:]

y_val = train_labels[:10000]

partial_y_train = train_labels[10000:]

# verbose: logging mode

# verbose = 0: silent, nothing is written to stdout

# verbose = 1: progress bar

# verbose = 2: one line per epoch

# Note: the default is 1

history = model.fit(partial_x_train,

partial_y_train,

epochs=40,

batch_size=512,

validation_data=(x_val, y_val),

callbacks=[tbCallBack]

)

Epoch 1/40

30/30 [==============================] - 1s 39ms/step - loss: 0.1956 - accuracy: 0.9347 - val_loss: 0.2903 - val_accuracy: 0.8836

Epoch 2/40

30/30 [==============================] - 1s 25ms/step - loss: 0.1869 - accuracy: 0.9377 - val_loss: 0.2892 - val_accuracy: 0.8843

Epoch 3/40

30/30 [==============================] - 1s 25ms/step - loss: 0.1782 - accuracy: 0.9416 - val_loss: 0.2866 - val_accuracy: 0.8843

Epoch 4/40

30/30 [==============================] - 1s 27ms/step - loss: 0.1705 - accuracy: 0.9445 - val_loss: 0.2866 - val_accuracy: 0.8850

Epoch 5/40

30/30 [==============================] - 1s 24ms/step - loss: 0.1628 - accuracy: 0.9484 - val_loss: 0.2860 - val_accuracy: 0.8853

Epoch 6/40

30/30 [==============================] - 1s 24ms/step - loss: 0.1561 - accuracy: 0.9510 - val_loss: 0.2863 - val_accuracy: 0.8837

Epoch 7/40

30/30 [==============================] - 1s 18ms/step - loss: 0.1493 - accuracy: 0.9535 - val_loss: 0.2869 - val_accuracy: 0.8854

Epoch 8/40

30/30 [==============================] - 1s 22ms/step - loss: 0.1442 - accuracy: 0.9549 - val_loss: 0.2881 - val_accuracy: 0.8831

Epoch 9/40

30/30 [==============================] - 1s 23ms/step - loss: 0.1370 - accuracy: 0.9591 - val_loss: 0.2877 - val_accuracy: 0.8861

Epoch 10/40

30/30 [==============================] - 1s 18ms/step - loss: 0.1314 - accuracy: 0.9619 - val_loss: 0.2887 - val_accuracy: 0.8860

Epoch 11/40

30/30 [==============================] - 1s 21ms/step - loss: 0.1264 - accuracy: 0.9638 - val_loss: 0.2913 - val_accuracy: 0.8857

Epoch 12/40

30/30 [==============================] - 1s 25ms/step - loss: 0.1213 - accuracy: 0.9659 - val_loss: 0.2932 - val_accuracy: 0.8859

Epoch 13/40

30/30 [==============================] - 1s 23ms/step - loss: 0.1167 - accuracy: 0.9665 - val_loss: 0.2942 - val_accuracy: 0.8862

Epoch 14/40

30/30 [==============================] - 1s 24ms/step - loss: 0.1116 - accuracy: 0.9693 - val_loss: 0.2977 - val_accuracy: 0.8850

Epoch 15/40

30/30 [==============================] - 1s 23ms/step - loss: 0.1074 - accuracy: 0.9709 - val_loss: 0.2993 - val_accuracy: 0.8842

Epoch 16/40

30/30 [==============================] - 1s 24ms/step - loss: 0.1030 - accuracy: 0.9722 - val_loss: 0.3036 - val_accuracy: 0.8838

Epoch 17/40

30/30 [==============================] - 1s 18ms/step - loss: 0.0990 - accuracy: 0.9737 - val_loss: 0.3062 - val_accuracy: 0.8830

Epoch 18/40

30/30 [==============================] - 1s 18ms/step - loss: 0.0957 - accuracy: 0.9748 - val_loss: 0.3105 - val_accuracy: 0.8820

Epoch 19/40

30/30 [==============================] - 1s 19ms/step - loss: 0.0923 - accuracy: 0.9763 - val_loss: 0.3114 - val_accuracy: 0.8822

Epoch 20/40

30/30 [==============================] - 1s 20ms/step - loss: 0.0878 - accuracy: 0.9779 - val_loss: 0.3157 - val_accuracy: 0.8825

Epoch 21/40

30/30 [==============================] - 1s 18ms/step - loss: 0.0844 - accuracy: 0.9798 - val_loss: 0.3192 - val_accuracy: 0.8806

Epoch 22/40

30/30 [==============================] - 1s 24ms/step - loss: 0.0813 - accuracy: 0.9805 - val_loss: 0.3223 - val_accuracy: 0.8815

Epoch 23/40

30/30 [==============================] - 1s 24ms/step - loss: 0.0781 - accuracy: 0.9823 - val_loss: 0.3270 - val_accuracy: 0.8806

Epoch 24/40

30/30 [==============================] - 1s 26ms/step - loss: 0.0750 - accuracy: 0.9833 - val_loss: 0.3331 - val_accuracy: 0.8777

Epoch 25/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0728 - accuracy: 0.9843 - val_loss: 0.3350 - val_accuracy: 0.8794

Epoch 26/40

30/30 [==============================] - 1s 23ms/step - loss: 0.0698 - accuracy: 0.9851 - val_loss: 0.3397 - val_accuracy: 0.8793

Epoch 27/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0666 - accuracy: 0.9857 - val_loss: 0.3442 - val_accuracy: 0.8781

Epoch 28/40

30/30 [==============================] - 1s 24ms/step - loss: 0.0642 - accuracy: 0.9869 - val_loss: 0.3477 - val_accuracy: 0.8786

Epoch 29/40

30/30 [==============================] - 1s 24ms/step - loss: 0.0617 - accuracy: 0.9879 - val_loss: 0.3546 - val_accuracy: 0.8754

Epoch 30/40

30/30 [==============================] - 1s 19ms/step - loss: 0.0594 - accuracy: 0.9883 - val_loss: 0.3566 - val_accuracy: 0.8783

Epoch 31/40

30/30 [==============================] - 1s 21ms/step - loss: 0.0569 - accuracy: 0.9893 - val_loss: 0.3613 - val_accuracy: 0.8780

Epoch 32/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0551 - accuracy: 0.9897 - val_loss: 0.3686 - val_accuracy: 0.8748

Epoch 33/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0527 - accuracy: 0.9907 - val_loss: 0.3721 - val_accuracy: 0.8761

Epoch 34/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0506 - accuracy: 0.9915 - val_loss: 0.3764 - val_accuracy: 0.8763

Epoch 35/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0487 - accuracy: 0.9923 - val_loss: 0.3805 - val_accuracy: 0.8765

Epoch 36/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0469 - accuracy: 0.9928 - val_loss: 0.3852 - val_accuracy: 0.8770

Epoch 37/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0451 - accuracy: 0.9928 - val_loss: 0.3918 - val_accuracy: 0.8752

Epoch 38/40

30/30 [==============================] - 1s 26ms/step - loss: 0.0431 - accuracy: 0.9931 - val_loss: 0.3979 - val_accuracy: 0.8738

Epoch 39/40

30/30 [==============================] - 1s 25ms/step - loss: 0.0417 - accuracy: 0.9934 - val_loss: 0.4017 - val_accuracy: 0.8747

Epoch 40/40

30/30 [==============================] - 1s 24ms/step - loss: 0.0399 - accuracy: 0.9938 - val_loss: 0.4068 - val_accuracy: 0.8754
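In the log above, training loss keeps falling until the last epoch. Validation loss climbs from about 0.31 to 0.41 over the same epochs, which is the usual sign of overfitting. The sketch below applies a patience rule to a recorded `val_loss` list in plain Python. The idea is similar to Keras's `keras.callbacks.EarlyStopping(monitor='val_loss', patience=...)`. The function name `early_stop_epoch`, the `first_epoch` parameter and `patience=3` are illustrative choices and do not come from the original notebook. The values are epochs 19 to 28 copied from the log. Earlier epochs are not shown in this section, so the true best epoch may come earlier.

```python
def early_stop_epoch(val_losses, patience=3, first_epoch=1):
    """Return (best_epoch, stop_epoch) for a patience-based early-stopping rule.

    best_epoch is the epoch with the lowest val_loss seen so far.
    stop_epoch is the epoch at which training would halt because val_loss
    failed to improve for `patience` consecutive epochs, or None if that
    never happens within the recorded history.
    """
    best_loss = float("inf")
    best_epoch = None
    wait = 0
    for epoch, loss in enumerate(val_losses, start=first_epoch):
        if loss < best_loss:
            # Improvement: remember it and reset the patience counter
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, None


# val_loss for epochs 19-28, copied from the training log (partial history only)
val_losses = [0.3114, 0.3157, 0.3192, 0.3223, 0.3270,
              0.3331, 0.3350, 0.3397, 0.3442, 0.3477]
best, stop = early_stop_epoch(val_losses, patience=3, first_epoch=19)
print(best, stop)
```

In a real run you would pass the Keras callback to `model.fit(..., callbacks=[...])`. Setting `restore_best_weights=True` keeps the weights from the best epoch rather than the last one.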

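# Load the TensorBoard notebook extension

%load_ext tensorboard

# Point TensorBoard at the directory it reads log files from;

# './log' is the directory where the TensorBoard files are stored

%tensorboard --logdir './log'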


results = model.evaluate(test_data, test_labels, verbose=2)

print(results)

782/782 - 1s - loss: 0.4329 - accuracy: 0.8610

[0.4328615665435791, 0.8610399961471558]
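`model.evaluate` returns a plain list whose order follows `model.metrics_names`. Here that is loss first, then the `accuracy` metric passed to `compile`. The sketch below pairs the printed values with their names. The name list is written out by hand as an assumption. In the notebook you would read `model.metrics_names` instead, or call `model.evaluate(..., return_dict=True)`, which is available in TF 2.x.

```python
# Values printed by model.evaluate above, in [loss, accuracy] order
results = [0.4328615665435791, 0.8610399961471558]

# Assumed metric order; model.metrics_names gives the real one
metrics_names = ["loss", "accuracy"]

scores = dict(zip(metrics_names, results))
print(f"test loss={scores['loss']:.4f}, test accuracy={scores['accuracy']:.2%}")
```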

model.

array([ 6, 176, 7, 5063, 88, 12, 2679, 23, 1310, 5, 109,

943, 4, 114, 9, 55, 606, 5, 111, 7, 4, 139,

193, 273, 23, 4, 172, 270, 11, 7216, 2, 4, 8463,

2801, 109, 1603, 21, 4, 22, 3861, 8, 6, 1193, 1330,

10, 10, 4, 105, 987, 35, 841, 2, 19, 861, 1074,

5, 1987, 2, 45, 55, 221, 15, 670, 5304, 526, 14,

1069, 4, 405, 5, 2438, 7, 27, 85, 108, 131, 4,

5045, 5304, 3884, 405, 9, 3523, 133, 5, 50, 13, 104,

51, 66, 166, 14, 22, 157, 9, 4, 530, 239, 34,

8463, 2801, 45, 407, 31, 7, 41, 3778, 105, 21, 59,

299, 12, 38, 950, 5, 4521, 15, 45, 629, 488, 2733,

127, 6, 52, 292, 17, 4, 6936, 185, 132, 1988, 5304,

1799, 488, 2693, 47, 6, 392, 173, 4, 2, 4378, 270,

2352, 4, 1500, 7, 4, 65, 55, 73, 11, 346, 14,

20, 9, 6, 976, 2078, 7, 5293, 861, 2, 5, 4182,

30, 3127, 2, 56, 4, 841, 5, 990, 692, 8, 4,

1669, 398, 229, 10, 10, 13, 2822, 670, 5304, 14, 9,

31, 7, 27, 111, 108, 15, 2033, 19, 7836, 1429, 875,

551, 14, 22, 9, 1193, 21, 45, 4829, 5, 45, 252,

8, 2, 6, 565, 921, 3639, 39, 4, 529, 48, 25,

181, 8, 67, 35, 1732, 22, 49, 238, 60, 135, 1162,

14, 9, 290, 4, 58, 10, 10, 472, 45, 55, 878,

8, 169, 11, 374, 5687, 25, 203, 28, 8, 818, 12,

125, 4, 3077], dtype=int32)
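The array above is a single review stored as 256 integer word indices. `imdb.load_data` uses the defaults `start_char=1`, `oov_char=2` and `index_from=3`. Under those defaults, every index in the data is the word's `get_word_index()` value plus 3, and indices 0 to 3 are reserved. The sketch below builds a reverse lookup under that assumption. It follows the approach of the official TensorFlow text-classification tutorial. The toy word index is hypothetical and stands in for the real `imdb.get_word_index()` dictionary.

```python
def build_reverse_index(word_index, index_from=3):
    """Map integer indices in imdb.load_data sequences back to words."""
    reverse = {idx + index_from: word for word, idx in word_index.items()}
    # Reserved indices under load_data's defaults
    reverse[0] = "<PAD>"
    reverse[1] = "<START>"
    reverse[2] = "<UNK>"
    reverse[3] = "<UNUSED>"
    return reverse


def decode_review(sequence, reverse_index):
    """Join the words for each index; unknown indices become '?'."""
    return " ".join(reverse_index.get(int(i), "?") for i in sequence)


# Hypothetical entries standing in for imdb.get_word_index()
toy_word_index = {"the": 1, "was": 13, "movie": 17, "great": 84}
rev = build_reverse_index(toy_word_index)
print(decode_review([1, 4, 20, 16, 87, 0, 0], rev))
```

With the real dictionary, `decode_review(test_data[1], build_reverse_index(word_index))` would print the padded review as text.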

# predict_classes() is deprecated; threshold the predicted probabilities at 0.5 instead (assumes a single sigmoid output unit)

a = (model.predict(test_data) > 0.5).astype("int32")
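With a single sigmoid output, `model.predict` returns probabilities of shape `(n, 1)`, and a class label is just a threshold at 0.5. Keras's binary accuracy metric uses the same `> 0.5` cut-off. Comparing the thresholded predictions with `test_labels` should therefore closely match the accuracy reported by `model.evaluate`. The sketch below shows this with NumPy only. The helper names and the probabilities are hypothetical stand-ins for `model.predict(test_data)`.

```python
import numpy as np


def to_classes(probs, threshold=0.5):
    """Turn sigmoid outputs of shape (n, 1) into 0/1 labels of the same shape."""
    return (np.asarray(probs) > threshold).astype("int32")


def binary_accuracy(labels, classes):
    """Fraction of predictions that match the 0/1 labels."""
    labels = np.asarray(labels).reshape(-1)
    return float(np.mean(labels == np.asarray(classes).reshape(-1)))


# Hypothetical probabilities standing in for model.predict(test_data)
probs = np.array([[0.91], [0.12], [0.50], [0.73]])
classes = to_classes(probs)
print(classes.ravel())
print(binary_accuracy([1, 0, 0, 0], classes))
```

A probability of exactly 0.5 maps to class 0 because the comparison is strict.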

test_data.shape

(25000, 256)
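`test_data` now has a fixed shape of `(25000, 256)`, although the raw reviews have different lengths. This means the sequences were padded or truncated to 256 indices before this point, presumably with `keras.preprocessing.sequence.pad_sequences` (that cell is not part of this section). The NumPy sketch below shows the idea. It pads with 0 at the end and keeps the first `maxlen` tokens, and both of those choices are assumptions. Keras's `pad_sequences` defaults to `padding='pre'` and `truncating='pre'`, so pass those arguments explicitly if you want the post behaviour. The helper name `pad_to_length` is hypothetical.

```python
import numpy as np


def pad_to_length(sequences, maxlen, value=0):
    """Post-pad and post-truncate integer sequences into an (n, maxlen) int32 array."""
    out = np.full((len(sequences), maxlen), value, dtype="int32")
    for row, seq in enumerate(sequences):
        trimmed = list(seq)[:maxlen]  # keep only the first maxlen tokens
        out[row, :len(trimmed)] = trimmed
    return out


batch = pad_to_length([[1, 14, 22], [1, 5, 6, 7, 8]], maxlen=4)
print(batch)
```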

a[1]

array([1], dtype=int32)

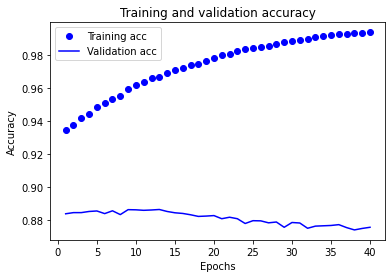

history_dict = history.history

history_dict.keys()

dict_keys(['loss', 'accuracy', 'val_loss', 'val_accuracy'])

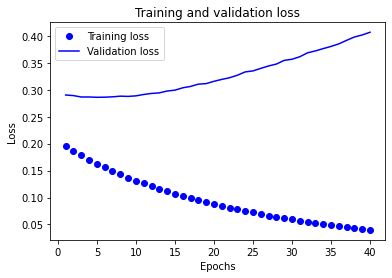

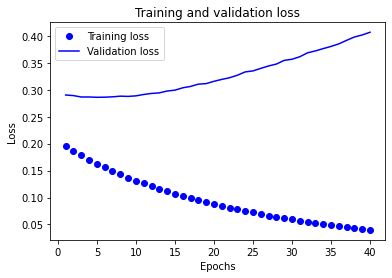

import matplotlib.pyplot as plt

acc = history_dict['accuracy']

val_acc = history_dict['val_accuracy']

loss = history_dict['loss']

val_loss = history_dict['val_loss']

epochs = range(1, len(acc) + 1)

# "bo" means "blue dots"

plt.plot(epochs, loss, 'bo', label='Training loss')

# "b" means "solid blue line"

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

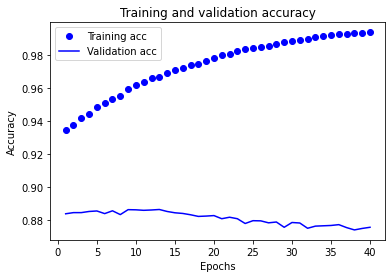

plt.clf() # clear the figure

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

```

浙公网安备 33010602011771号
