hj_20251215

Pay-as-you-go Tencent Cloud server: 2 vCPU / 8 GB RAM, OS: OpenCloudOS Server 9, 30 GB disk

Docker setup:

    #!/bin/bash
    set -e

    echo ">>> Step 0: Remove any leftover Docker installation"
    # WARNING: deleting /var/lib/docker also deletes all existing images, containers and volumes
    sudo systemctl stop docker || true
    sudo systemctl disable docker || true
    sudo dnf remove -y docker docker-client docker-client-latest docker-common docker-latest \
        docker-latest-logrotate docker-logrotate docker-engine docker-ce docker-ce-cli \
        containerd.io docker-buildx-plugin docker-compose-plugin || true
    sudo rm -rf /var/lib/docker /var/run/docker /etc/docker /usr/bin/docker /usr/bin/dockerd \
        /usr/lib/systemd/system/docker* /etc/systemd/system/docker* \
        /etc/systemd/system/multi-user.target.wants/docker*
    sudo systemctl daemon-reload

    echo ">>> Step 1: Install the dnf plugin manager"
    sudo dnf install -y dnf-plugins-core

    echo ">>> Step 2: Add the Aliyun Docker CE mirror repository"
    # OpenCloudOS 9 is RHEL 9 compatible, so the CentOS 9 x86_64 repository is used
    sudo tee /etc/yum.repos.d/docker-ce.repo <<'EOF'
    [docker-ce-stable]
    name=Docker CE Stable - aliyun
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/9/x86_64/stable/
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    EOF

    echo ">>> Step 3: Clean the cache and install Docker"
    sudo dnf clean all
    sudo dnf makecache
    sudo dnf install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

    echo ">>> Step 4: Configure the Tencent Cloud registry mirror"
    sudo mkdir -p /etc/docker
    sudo tee /etc/docker/daemon.json <<'EOF'
    {
      "registry-mirrors": ["https://mirror.ccs.tencentyun.com"],
      "exec-opts": ["native.cgroupdriver=systemd"],
      "storage-driver": "overlay2"
    }
    EOF

    echo ">>> Step 5: Make the systemd unit load daemon.json explicitly"
    # dockerd already reads /etc/docker/daemon.json by default; this override only makes it explicit.
    # Do not also set "hosts" in daemon.json, or it will conflict with -H fd://.
    sudo mkdir -p /etc/systemd/system/docker.service.d
    sudo tee /etc/systemd/system/docker.service.d/override.conf <<'EOF'
    [Service]
    ExecStart=
    ExecStart=/usr/bin/dockerd --config-file=/etc/docker/daemon.json -H fd:// --containerd=/run/containerd/containerd.sock
    EOF

    echo ">>> Step 6: Reload systemd and start Docker"
    sudo systemctl daemon-reload
    sudo systemctl enable --now docker

    echo ">>> Step 7: Verify Docker (sudo, because the socket is root-only until the user joins the docker group)"
    sudo docker version
    sudo docker info | grep -A 5 "Registry Mirrors"
    sudo docker run --rm mirror.ccs.tencentyun.com/library/hello-world
    echo ">>> ✅ Docker installed and the registry mirror is enabled!"
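A pull through the registry mirror can fail transiently. The original script uses `set -e`, so one such failure would abort the whole run. A minimal retry wrapper is sketched below. `retry` is a hypothetical helper and is not part of the original script.

```bash
#!/bin/bash
# retry ATTEMPTS DELAY_SECONDS CMD [ARGS...]
# Re-runs CMD until it succeeds or ATTEMPTS runs are used up (hypothetical helper).
retry() {
  local attempts="$1" delay="$2"
  shift 2
  local i
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    echo "attempt ${i}/${attempts} failed: $*" >&2
    # No sleep after the final failed attempt
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}

# Usage, e.g. in Step 7:
#   retry 3 5 sudo docker run --rm mirror.ccs.tencentyun.com/library/hello-world
```

The wrapper returns non-zero only after every attempt has failed. Under `set -e` the script therefore still stops on a persistent error.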

Verify: docker run --rm mirror.ccs.tencentyun.com/library/hello-world

Download the following files, upload them to the server, then install them with the script below:

JDK 17: https://www.azul.com/downloads/?package=jdk (the JDK 17, Linux x64, .tar.gz build)
Git 2.47.1 (source code): https://mirrors.edge.kernel.org/pub/software/scm/git/git-2.47.1.tar.gz
Maven 3.9.6: https://archive.apache.org/dist/maven/maven-3/3.9.6/binaries/apache-maven-3.9.6-bin.tar.gz
JDK 8 (Temurin 8u402): https://github.com/adoptium/temurin8-binaries/releases/download/jdk8u402-b06/OpenJDK8U-jdk_x64_linux_hotspot_8u402b06.tar.gz

The script expects the files in /home/install/packages, renamed to jdk17.tar.gz, jdk8.tar.gz, maven.tar.gz and git.tar.gz.

The Git archive is a source tarball, not a prebuilt binary, so it has to be compiled. The build needs a compiler toolchain and the usual development packages, typically gcc, make, and the zlib/openssl/curl/expat headers.

    #!/bin/bash
    # hj_install_env.sh
    # One-click install of JDK 8 / JDK 17 / Maven / Git (run as root)
    # Default JDK: jdk17
    # Install directory: /home/install
    # Offline package directory: /home/install/packages
    set -e

    INSTALL_DIR="/home/install"
    PACKAGE_DIR="/home/install/packages"

    echo "===================================="
    echo " 🚀 Offline install of JDK17 / JDK8 / Maven / Git"
    echo "===================================="
    echo "📁 Install directory: $INSTALL_DIR"
    echo "📦 Package directory: $PACKAGE_DIR"
    mkdir -p "$INSTALL_DIR"

    # Extract a binary tarball and rename its top-level directory to $INSTALL_DIR/<name>.
    # The top-level directory name is read from the archive itself, which is more reliable
    # than guessing it with "ls | grep" (a pattern such as 'jdk.*8' can match other directories).
    install_tarball() {
        local name="$1" tarball="$2" top
        echo "===================================="
        echo " 🔧 Installing $name"
        echo "===================================="
        if [ -d "$INSTALL_DIR/$name" ]; then
            echo "✔ $name already exists, skipping"
            return 0
        fi
        top=$(tar -tzf "$tarball" | head -1 | cut -d/ -f1)
        tar -xzf "$tarball" -C "$INSTALL_DIR"
        mv "$INSTALL_DIR/$top" "$INSTALL_DIR/$name"
        echo "$name installed to: $INSTALL_DIR/$name"
    }

    install_tarball jdk17 "$PACKAGE_DIR/jdk17.tar.gz"
    install_tarball jdk8  "$PACKAGE_DIR/jdk8.tar.gz"
    install_tarball maven "$PACKAGE_DIR/maven.tar.gz"

    # ----------------- Install Git -----------------
    # git-2.47.1.tar.gz contains source code and no bin/ directory, so it is built here
    # with prefix=$INSTALL_DIR/git (this produces $INSTALL_DIR/git/bin/git).
    echo "===================================="
    echo " 🔧 Installing Git (build from source)"
    echo "===================================="
    if [ ! -d "$INSTALL_DIR/git" ]; then
        BUILD_DIR=$(mktemp -d)
        tar -xzf "$PACKAGE_DIR/git.tar.gz" -C "$BUILD_DIR"
        cd "$BUILD_DIR"/git-*
        # NO_GETTEXT skips message translations, so gettext tools are not required
        make prefix="$INSTALL_DIR/git" NO_GETTEXT=YesPlease all
        make prefix="$INSTALL_DIR/git" NO_GETTEXT=YesPlease install
        cd - >/dev/null
        rm -rf "$BUILD_DIR"
        echo "Git installed to: $INSTALL_DIR/git"
    else
        echo "✔ Git already exists, skipping"
    fi

    # ----------------- Configure environment variables -----------------
    echo "===================================="
    echo " 📝 Configuring environment variables"
    echo "===================================="
    ENV_FILE="/etc/profile.d/hj_env.sh"
    cat > "$ENV_FILE" <<EOF
    # hj_install_env
    export JAVA_HOME=${INSTALL_DIR}/jdk17
    export PATH=\$JAVA_HOME/bin:${INSTALL_DIR}/maven/bin:${INSTALL_DIR}/git/bin:\$PATH
    EOF
    chmod +x "$ENV_FILE"

    # Export for this script only, so the checks below work.
    # A script cannot change the environment of the shell that started it.
    export JAVA_HOME=${INSTALL_DIR}/jdk17
    export PATH=$JAVA_HOME/bin:${INSTALL_DIR}/maven/bin:${INSTALL_DIR}/git/bin:$PATH

    # ----------------- Verify -----------------
    echo "===================================="
    echo " 🔍 Verifying the installation"
    echo "===================================="
    echo "➡ java -version"
    java -version || echo "❌ java is not available"
    echo "➡ mvn -v"
    mvn -v || echo "❌ Maven is not available"
    echo "➡ git --version"
    git --version || echo "❌ Git is not available"

    echo "===================================="
    echo " 🎉 Installation complete!"
    echo "===================================="

Afterwards, run `source /etc/profile.d/hj_env.sh` to load the environment variables into your current shell.
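The script installs both JDKs, but only JDK 17 goes on PATH. The sketch below switches between them in the current shell. `usejdk` is a hypothetical helper, and it must be sourced to take effect, for example by appending it to /etc/profile.d/hj_env.sh. It also assumes the `$INSTALL_DIR/jdk<version>` layout created above.

```bash
#!/bin/bash
# usejdk 8|17 : point JAVA_HOME/PATH at $INSTALL_DIR/jdk<version> (hypothetical helper).
INSTALL_DIR="${INSTALL_DIR:-/home/install}"

usejdk() {
  local home="$INSTALL_DIR/jdk$1"
  if [ ! -x "$home/bin/java" ]; then
    echo "JDK not found: $home" >&2
    return 1
  fi
  # Remove any JDK bin directory under $INSTALL_DIR from PATH, then prepend the chosen one
  local cleaned
  cleaned=$(printf '%s' "$PATH" | tr ':' '\n' | grep -v "^$INSTALL_DIR/jdk[0-9]*/bin$" | paste -sd: -)
  export JAVA_HOME="$home"
  export PATH="$JAVA_HOME/bin:$cleaned"
}

# Usage: usejdk 8 && java -version
```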

    #!/bin/bash
    # One-click install of MySQL 9.4 (Docker), default character set utf8mb4
    MYSQL_VERSION=9.4
    MYSQL_PORT=3311
    MYSQL_ROOT_PASSWORD="Jens20250826001hjIsOk"
    MYSQL_DATA_DIR="/home/jens/mysql"

    # Create the data directory
    mkdir -p ${MYSQL_DATA_DIR}
    chmod -R 777 ${MYSQL_DATA_DIR}

    # Stop and remove any existing container
    docker stop mysql9.4 >/dev/null 2>&1
    docker rm mysql9.4 >/dev/null 2>&1

    # Pull the image
    echo "Pulling MySQL ${MYSQL_VERSION} image..."
    docker pull mysql:${MYSQL_VERSION}

    # Start the container
    echo "Starting MySQL container..."
    docker run -d \
      --name mysql9.4 \
      -p ${MYSQL_PORT}:3306 \
      -e MYSQL_ROOT_PASSWORD="${MYSQL_ROOT_PASSWORD}" \
      -v ${MYSQL_DATA_DIR}:/var/lib/mysql \
      mysql:${MYSQL_VERSION} \
      --character-set-server=utf8mb4 \
      --collation-server=utf8mb4_general_ci

    # Check the status (a running container does not yet mean mysqld accepts connections)
    sleep 3
    docker ps | grep mysql9.4 && echo "✅ MySQL ${MYSQL_VERSION} started, port ${MYSQL_PORT}" || echo "❌ MySQL failed to start"
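`docker ps | grep` only shows that the container is running. On the first start, mysqld is still initializing after `sleep 3`. The sketch below polls a readiness command instead of sleeping for a fixed time. `wait_until` is a hypothetical helper. The usage comment assumes the image ships `mysqladmin`.

```bash
#!/bin/bash
# wait_until TIMEOUT_SECONDS CMD [ARGS...]
# Runs CMD once per second until it succeeds or the timeout expires (hypothetical helper).
wait_until() {
  local timeout="$1"
  shift
  local start=$SECONDS
  until "$@" >/dev/null 2>&1; do
    if (( SECONDS - start >= timeout )); then
      return 1
    fi
    sleep 1
  done
  return 0
}

# Usage after "docker run" (variables from the MySQL script above):
#   wait_until 90 docker exec mysql9.4 \
#     mysqladmin ping -h 127.0.0.1 -uroot -p"${MYSQL_ROOT_PASSWORD}" --silent \
#     && echo "MySQL is ready" || echo "MySQL not ready in time, check: docker logs mysql9.4"
```

Pinging over `127.0.0.1` uses TCP rather than the local socket. This check is meant to catch the temporary server the entrypoint may run during initialization, but that is an assumption about the image's behavior.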

    #!/bin/bash
    # One-click install of Redis 7.4.1 (Docker)
    # Port: 6380
    # Password: Jens20250826001hjIsOk
    # Data path: /home/jens/redis
    REDIS_VERSION="7.4.1"
    REDIS_PORT=6380
    REDIS_PASSWORD="Jens20250826001hjIsOk"
    REDIS_DATA_DIR="/home/jens/redis"
    REDIS_CONTAINER_NAME="redis7.4.1_6380"

    echo ">>> Step 1: Create data and log directories"
    mkdir -p ${REDIS_DATA_DIR}/data
    mkdir -p ${REDIS_DATA_DIR}/logs
    chmod -R 777 ${REDIS_DATA_DIR}

    echo ">>> Step 2: Create the Redis configuration file"
    cat > ${REDIS_DATA_DIR}/redis.conf <<EOF
    # Data directory
    dir /data
    # Password
    requirepass ${REDIS_PASSWORD}
    # Log file
    logfile /logs/redis.log
    # Protected mode off (development environment; access still requires the password)
    protected-mode no
    # RDB persistence: snapshot if at least 1 / 10 / 10000 writes happened within 900 / 300 / 60 seconds
    save 900 1
    save 300 10
    save 60 10000
    # Bind address and port (inside the container)
    bind 0.0.0.0
    port 6379
    EOF

    # Hand ownership to the default Redis UID of the official image (999)
    sudo chown -R 999:999 ${REDIS_DATA_DIR}/data
    sudo chown -R 999:999 ${REDIS_DATA_DIR}/logs
    sudo chown 999:999 ${REDIS_DATA_DIR}/redis.conf

    echo ">>> Step 3: Stop and remove any existing container"
    docker stop ${REDIS_CONTAINER_NAME} >/dev/null 2>&1
    docker rm ${REDIS_CONTAINER_NAME} >/dev/null 2>&1

    echo ">>> Step 4: Pull the Redis image"
    docker pull redis:${REDIS_VERSION}

    echo ">>> Step 5: Start the Redis container"
    docker run -d \
      --name ${REDIS_CONTAINER_NAME} \
      -p ${REDIS_PORT}:6379 \
      -v ${REDIS_DATA_DIR}/data:/data \
      -v ${REDIS_DATA_DIR}/logs:/logs \
      -v ${REDIS_DATA_DIR}/redis.conf:/usr/local/etc/redis/redis.conf \
      redis:${REDIS_VERSION} \
      redis-server /usr/local/etc/redis/redis.conf

    sleep 3
    echo ">>> Step 6: Check the status"
    docker ps | grep ${REDIS_CONTAINER_NAME} && echo "✅ Redis ${REDIS_VERSION} started, port ${REDIS_PORT}" || echo "❌ Redis failed to start"

    echo ">>> Step 7: Test Redis"
    echo "Test with:"
    echo "redis-cli -h 127.0.0.1 -p ${REDIS_PORT} -a ${REDIS_PASSWORD} ping"
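The three `save` lines are easy to misread as a sequence, but each one is an independent trigger. The sketch below is a simplified model of how they combine. A snapshot happens when any rule matches, meaning at least `<changes>` writes and more than `<seconds>` seconds since the last save. `should_snapshot` is hypothetical, and it ignores details such as the retry delay Redis applies after a failed background save.

```bash
#!/bin/bash
# Simplified model of "save <seconds> <changes>" from the redis.conf above (illustration only).
SAVE_RULES=("900 1" "300 10" "60 10000")

# should_snapshot SECONDS_SINCE_LAST_SAVE CHANGES_SINCE_LAST_SAVE
should_snapshot() {
  local elapsed="$1" changes="$2" rule secs min_changes
  for rule in "${SAVE_RULES[@]}"; do
    read -r secs min_changes <<<"$rule"
    # Any single satisfied rule is enough
    if (( changes >= min_changes && elapsed > secs )); then
      return 0
    fi
  done
  return 1
}
```

For example, 5 writes in 100 seconds trigger nothing. A single write triggers a snapshot once more than 900 seconds have passed.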

    #!/bin/bash
    # ===================================================
    # One-click install of Node Exporter (host metrics exporter)
    # For: OpenCloudOS / CentOS / TencentOS / other Linux
    # Port: 9100
    # Version: v1.8.2 (pinned instead of latest, for stability)
    # ===================================================
    NODE_EXPORTER_VERSION="v1.8.2"
    NODE_EXPORTER_NAME="node_exporter"
    NODE_EXPORTER_PORT=9100

    echo ">>> Stopping and removing the old container..."
    docker stop ${NODE_EXPORTER_NAME} >/dev/null 2>&1
    docker rm ${NODE_EXPORTER_NAME} >/dev/null 2>&1

    echo ">>> Pulling the Node Exporter image..."
    docker pull prom/node-exporter:${NODE_EXPORTER_VERSION}

    echo ">>> Starting the Node Exporter container..."
    # Host network and PID namespaces, as in the node_exporter README. On a bridge network the
    # exporter reports the container's own interfaces, so the ens5/eth0 traffic alerts would not
    # see the host's traffic. With --net=host, -p is not used; the exporter listens on host port 9100.
    docker run -d \
      --name ${NODE_EXPORTER_NAME} \
      --restart=always \
      --net=host \
      --pid=host \
      -v "/:/host:ro,rslave" \
      prom/node-exporter:${NODE_EXPORTER_VERSION} \
      --path.rootfs=/host \
      --web.listen-address=":${NODE_EXPORTER_PORT}"

    sleep 3
    echo ">>> Checking the container status:"
    docker ps | grep node_exporter && echo "✅ Node Exporter started! Visit: http://<server-IP>:9100/metrics" || echo "❌ Failed to start"
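Once the exporter is up, you can check a single value quickly without a browser. The sketch below reads the Prometheus text exposition format on stdin and prints the first sample of one metric. `metric_value` is a hypothetical helper, and it assumes label values contain no spaces.

```bash
#!/bin/bash
# metric_value NAME : print the value of the first sample named exactly NAME (labels optional).
metric_value() {
  awk -v name="$1" '
    /^#/ { next }                  # skip HELP/TYPE comment lines
    {
      metric = $1
      sub(/[{].*/, "", metric)     # drop the label set, if any
      if (metric == name) { print $2; exit }
    }'
}

# Usage against the exporter started above:
#   curl -s http://localhost:9100/metrics | metric_value node_load1
```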

1 Download the xxl-job source code, edit the configuration in the admin module's properties file, and build the jar. The xxl_job database must already exist in MySQL; the source tree includes an initialization SQL script for it.

2 Dockerfile

    # Base image: Java 17 (the official openjdk images are deprecated; eclipse-temurin:17-jre is a maintained alternative)
    FROM openjdk:17
    # Author (MAINTAINER is deprecated in favor of LABEL)
    LABEL maintainer="hj"
    # Declare a volume at /root/hj/container/xxl-job/tmp inside the container
    VOLUME /root/hj/container/xxl-job/tmp
    # Copy the jar into the image root as /xxlJob.jar
    COPY xxl-job-admin-3.2.1-SNAPSHOT.jar /xxlJob.jar
    # Copy the external configuration file into the image as /application.yml
    COPY application.yml /application.yml
    # Time zone
    RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    RUN echo 'Asia/Shanghai' > /etc/timezone
    # Exposed port
    EXPOSE 8989
    # Optional: set the jar's access/modification time to now
    RUN bash -c 'touch /xxlJob.jar'
    # Run the jar; JVM options go before -jar, and file: makes the config location explicit
    ENTRYPOINT ["java", "-Dspring.config.additional-location=file:/application.yml", "-jar", "/xxlJob.jar"]

3 xxl_job.sh

    #!/bin/bash
    app_name='xxl_job_admin'
    app_port='8989'

    # Stop the running container
    echo '......stop container xxl_job_admin......'
    docker stop ${app_name}
    # Remove the container
    echo '......rm container xxl_job_admin......'
    docker rm ${app_name}
    # Remove the old image named app_name (skipped when none exists)
    echo '......rmi image xxl_job_admin......'
    old_images=$(docker images -q ${app_name})
    [ -n "$old_images" ] && docker rmi $old_images
    # Build the image
    docker build -f Dockerfile -t ${app_name} .
    # Create and run the container, mounting the log directory to the host
    echo '......start container xxl_job_admin......'
    docker run -d \
      --name ${app_name} \
      -p ${app_port}:${app_port} \
      --restart=always \
      --privileged=true \
      -v /etc/localtime:/etc/localtime \
      -v /data/tmp:/data/tmp \
      -v /root/hj/xxl_job/logs:/logs \
      ${app_name}
    echo '......Success xxl_job_admin......'

4 application.yml

    server:
      port: 8989
    spring:
      datasource:
        url: jdbc:mysql://1.13.163.82:3311/xxl_job?useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&serverTimezone=Asia/Shanghai
        username: root
        password: Jens20250826001hjIsOk
      mail:
        host: smtp.qq.com
        port: 465
        username: 836403311@qq.com
        from: 836403311@qq.com
        password: vlawossizkmdbcgj
        properties:
          mail:
            smtp:
              auth: true
              ssl:
                enable: true
    xxl:
      job:
        accessToken: default_token

5 Run the .sh script to build the image and start the container in one step.
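Before starting the admin container, it helps to confirm that the database named in the JDBC URL is reachable and initialized. The sketch below splits the URL into host, port and database so they can feed a client check. `parse_jdbc_mysql` is hypothetical, and it assumes a single host with an explicit port.

```bash
#!/bin/bash
# parse_jdbc_mysql URL : print "host port db" for jdbc:mysql://host:port/db?params
parse_jdbc_mysql() {
  local re='^jdbc:mysql://([^:/]+):([0-9]+)/([^?]+)'
  if [[ "$1" =~ $re ]]; then
    echo "${BASH_REMATCH[1]} ${BASH_REMATCH[2]} ${BASH_REMATCH[3]}"
  else
    return 1
  fi
}

# Usage (assumes a mysql client on the host):
#   read -r host port db <<<"$(parse_jdbc_mysql "$URL")"
#   mysql -h "$host" -P "$port" -uroot -p -e "SHOW DATABASES LIKE '$db'"
```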

Add the dependency to pom.xml:

    <dependency>
        <groupId>com.xuxueli</groupId>
        <artifactId>xxl-job-core</artifactId>
        <version>3.2.0</version>
    </dependency>

application.yml configuration:

    xxl:
      job:
        enable: true
        admin-addresses: http://1.13.163.82:8989/xxl-job-admin
        username: admin
        password: Jens20250826001hjIsOk
        accessToken: default_token
        timeout: 3
        app-name: hj-server
        log-retention-days: 30
        port: 7707
        failRetryCount: 1

Configuration properties class XxlJobConfig:

    package com.hj.server.config.xxl;

    import lombok.Data;
    import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;
    import org.springframework.boot.context.properties.ConfigurationProperties;
    import org.springframework.context.annotation.Configuration;

    /**
     * Binds xxl.job.* (relaxed binding maps admin-addresses to adminAddresses, etc.).
     * username, password and failRetryCount are bound but not passed to the executor.
     */
    @Data
    @Configuration
    @ConfigurationProperties(prefix = "xxl.job")
    @ConditionalOnProperty(name = "xxl.job.enable", havingValue = "true")
    public class XxlJobConfig {
        private String adminAddresses;
        private String username;
        private String password;
        private String addresses;
        private String accessToken;
        private int timeout;
        private String appName;
        private String ip;
        private int port;
        private String logPath;
        private int logRetentionDays;
        private int failRetryCount;
    }

XxlJobInitConfig:

    package com.hj.server.config.xxl;

    import com.xxl.job.core.executor.impl.XxlJobSpringExecutor;
    import jakarta.annotation.Resource;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    @Slf4j
    @Configuration
    // Same condition as XxlJobConfig. @ConditionalOnBean is only reliable on auto-configuration
    // classes, because it depends on the order in which bean definitions are registered.
    @ConditionalOnProperty(name = "xxl.job.enable", havingValue = "true")
    public class XxlJobInitConfig {

        @Resource
        private XxlJobConfig xxlJobConfig;

        @Bean
        public XxlJobSpringExecutor xxlJobExecutor() {
            log.info(">>>>>>>>>>> xxl-job config init.");
            XxlJobSpringExecutor xxlJobSpringExecutor = new XxlJobSpringExecutor();
            xxlJobSpringExecutor.setAdminAddresses(xxlJobConfig.getAdminAddresses());
            xxlJobSpringExecutor.setAppname(xxlJobConfig.getAppName());
            // When address and ip are empty, the executor registers its auto-detected IP plus the port below
            xxlJobSpringExecutor.setAddress(xxlJobConfig.getAddresses());
            xxlJobSpringExecutor.setIp(xxlJobConfig.getIp());
            xxlJobSpringExecutor.setPort(xxlJobConfig.getPort());
            xxlJobSpringExecutor.setAccessToken(xxlJobConfig.getAccessToken());
            xxlJobSpringExecutor.setTimeout(xxlJobConfig.getTimeout());
            xxlJobSpringExecutor.setLogPath(xxlJobConfig.getLogPath());
            xxlJobSpringExecutor.setLogRetentionDays(xxlJobConfig.getLogRetentionDays());
            return xxlJobSpringExecutor;
        }
    }

Test class: annotate the job handler method with @XxlJob("testXxlJob").
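xxl-job-admin triggers jobs by calling the executor at its registered address, which here is port 7707. That port must therefore be reachable from the admin host, so check firewalls and security groups. The sketch below checks reachability with bash's `/dev/tcp` pseudo-device, so no nc or telnet is needed. `tcp_open` is a hypothetical helper.

```bash
#!/bin/bash
# tcp_open HOST PORT [TIMEOUT_SECONDS] : succeed if a TCP connection to HOST:PORT can be opened.
tcp_open() {
  local host="$1" port="$2" t="${3:-3}"
  # $0 and $1 inside the inner bash are HOST and PORT
  timeout "$t" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Usage on the admin host (the executor IP is a placeholder):
#   tcp_open <executor-ip> 7707 && echo "executor reachable" || echo "check firewall / security group"
```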

    #!/bin/bash
    # One-click install of Prometheus v3.1.0 (Docker)
    # Install directory: /home/jens/prometheus
    # Port: 7090
    PROMETHEUS_VERSION="v3.1.0"
    PROMETHEUS_PORT=7090
    PROMETHEUS_DIR="/home/jens/prometheus"

    echo "📦 Creating Prometheus directories..."
    mkdir -p ${PROMETHEUS_DIR}/data
    mkdir -p ${PROMETHEUS_DIR}/config

    echo "🧾 Generating the default prometheus.yml ..."
    cat > ${PROMETHEUS_DIR}/config/prometheus.yml <<EOF
    global:
      scrape_interval: 15s
      evaluation_interval: 15s

    scrape_configs:
      # Prometheus itself: inside the container it listens on 9090 (7090 is only the host port)
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']

      # Example: a Spring Boot application exposing the prometheus endpoint
      - job_name: 'hj-server'
        metrics_path: '/actuator/prometheus'
        static_configs:
          - targets: ['host.docker.internal:8081']   # replace with the real service address or IP
    EOF

    echo "🔐 Setting directory ownership (Prometheus runs as nobody, UID 65534)..."
    sudo chown -R 65534:65534 ${PROMETHEUS_DIR}

    echo "🛑 Stopping and removing the old container (if any)..."
    docker stop prometheus >/dev/null 2>&1
    docker rm prometheus >/dev/null 2>&1

    echo "⬇️ Pulling the Prometheus image (${PROMETHEUS_VERSION})..."
    docker pull prom/prometheus:${PROMETHEUS_VERSION}

    echo "🚀 Starting the Prometheus container..."
    # On Linux, host.docker.internal only resolves inside the container with --add-host
    docker run -d \
      --name prometheus \
      --add-host=host.docker.internal:host-gateway \
      -p ${PROMETHEUS_PORT}:9090 \
      --restart=always \
      -v ${PROMETHEUS_DIR}/config/prometheus.yml:/etc/prometheus/prometheus.yml \
      -v ${PROMETHEUS_DIR}/data:/prometheus \
      prom/prometheus:${PROMETHEUS_VERSION} \
      --config.file=/etc/prometheus/prometheus.yml \
      --storage.tsdb.path=/prometheus \
      --web.enable-lifecycle

    sleep 3
    docker ps | grep prometheus && echo "✅ Prometheus ${PROMETHEUS_VERSION} started, visit: http://<server-IP>:${PROMETHEUS_PORT}" || echo "❌ Prometheus failed to start"
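Because the container runs with `--web.enable-lifecycle`, you can reload an edited prometheus.yml without restarting it. The sketch below first validates the file with `promtool` inside the container and only then posts to `/-/reload`. `reload_prometheus` is hypothetical, and the container name and port follow the script above.

```bash
#!/bin/bash
# reload_prometheus : validate prometheus.yml, then ask Prometheus to reload it (hypothetical helper).
PROM_CONTAINER="${PROM_CONTAINER:-prometheus}"
PROM_URL="${PROM_URL:-http://localhost:7090}"

reload_prometheus() {
  if ! docker exec "$PROM_CONTAINER" promtool check config /etc/prometheus/prometheus.yml; then
    echo "prometheus.yml is invalid, not reloading" >&2
    return 1
  fi
  # Only available because the container was started with --web.enable-lifecycle
  curl -fsS -X POST "$PROM_URL/-/reload"
}
```

The file is bind-mounted on its own. Some editors save by replacing the file with a new one, and in that case the container can keep seeing the old content. Mounting the whole config directory avoids this.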

    #!/bin/bash
    # One-click install of Grafana (Docker), port 7300
    GRAFANA_VERSION="12.2.1"
    GRAFANA_PORT=7300
    GRAFANA_NAME="grafana"
    GRAFANA_DATA_DIR="/home/jens/grafana"

    # Create the data directory
    mkdir -p ${GRAFANA_DATA_DIR}
    chmod -R 777 ${GRAFANA_DATA_DIR}

    echo "🧹 Stopping and removing the old container..."
    docker stop ${GRAFANA_NAME} >/dev/null 2>&1
    docker rm ${GRAFANA_NAME} >/dev/null 2>&1

    echo "📦 Pulling the Grafana image (version ${GRAFANA_VERSION})..."
    docker pull grafana/grafana:${GRAFANA_VERSION}

    echo "🚀 Starting the Grafana container..."
    docker run -d \
      --name ${GRAFANA_NAME} \
      -p ${GRAFANA_PORT}:3000 \
      -v ${GRAFANA_DATA_DIR}:/var/lib/grafana \
      --restart=always \
      grafana/grafana:${GRAFANA_VERSION}

    sleep 3
    docker ps | grep ${GRAFANA_NAME} && echo "✅ Grafana started, URL: http://<server-IP>:${GRAFANA_PORT}" || echo "❌ Grafana failed to start"
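A fixed `sleep 3` only shows that the container exists. Grafana runs database migrations on its first start, so it may not be serving yet. The sketch below polls Grafana's `/api/health` endpoint until it reports the database as `ok`. `grafana_healthy` is a hypothetical helper.

```bash
#!/bin/bash
# grafana_healthy BASE_URL : succeed when /api/health reports "database": "ok" (hypothetical helper).
grafana_healthy() {
  local body
  body=$(curl -fsS --max-time 3 "$1/api/health") || return 1
  # Allow optional whitespace around the colon in the JSON
  grep -Eq '"database"[[:space:]]*:[[:space:]]*"ok"' <<<"$body"
}

# Usage: poll for up to about 60 s instead of a fixed sleep
#   for i in $(seq 1 60); do grafana_healthy http://localhost:7300 && break; sleep 1; done
```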

Open http://119.45.240.223:7300/ and log in with admin/admin. Change the password after the first login, then configure the Prometheus data source.

Application side, pom.xml:

    <!-- Spring Boot Actuator -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <!-- Micrometer Prometheus registry -->
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-prometheus</artifactId>
    </dependency>

application.yml:

    management:
      server:
        port: 7778   # optional: serve management endpoints on a port separate from the business port
      endpoints:
        web:
          exposure:
            # include: '*'
            include: health,info,prometheus,metrics   # order does not matter; listed for readability
      endpoint:
        prometheus:
          enabled: true
        health:
          show-details: always
      prometheus:
        metrics:
          export:
            enabled: true

Check from the command line:

    curl http://localhost:7778/actuator/prometheus

prometheus.yml:

    global:
      scrape_interval: 15s      # scrape every 15 seconds by default
      evaluation_interval: 15s

    scrape_configs:
      # Prometheus itself
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']

      # Spring Boot application (hj-server)
      - job_name: 'hj-server'
        metrics_path: '/actuator/prometheus'
        static_configs:
          - targets: ['119.45.240.223:7778']
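The configuration above also exposes `/actuator/health` on the management port. A deploy script can check that endpoint before declaring success. The sketch below extracts the top-level `status` field with `sed`, so jq is not needed. `app_status` is hypothetical, and it assumes `status` is the first key of the response, as in Spring Boot's health output.

```bash
#!/bin/bash
# app_status URL : print the top-level status of a Spring Boot health response (UP, DOWN, ...).
app_status() {
  curl -fsS --max-time 3 "$1" \
    | sed -n 's/^{"status":"\([A-Z_]*\)".*/\1/p'
}

# Usage with the management port configured above:
#   [ "$(app_status http://localhost:7778/actuator/health)" = "UP" ] && echo healthy
```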

    global:
      scrape_interval: 15s      # scrape every 15 seconds by default
      evaluation_interval: 15s

    scrape_configs:
      # Prometheus itself
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']

      # Spring Boot application (hj-server)
      - job_name: 'hj-server'
        metrics_path: '/actuator/prometheus'
        static_configs:
          - targets: ['175.27.241.201:7778']

      - job_name: 'hj-server2'
        metrics_path: '/actuator/prometheus'
        static_configs:
          - targets: ['175.27.241.201:7776']

      - job_name: 'xxl-job'
        metrics_path: '/xxl-job-admin/actuator/prometheus'
        static_configs:
          - targets: ['175.27.241.201:8989']

      - job_name: 'server-metrics'
        static_configs:
          - targets: ['175.27.241.201:9100']

The Prometheus configuration is shown above. Note the path prefix for xxl-job: xxl-job-admin is served under the /xxl-job-admin context path, so its metrics_path starts with /xxl-job-admin.

In Grafana, configure the Prometheus data source, then import dashboard templates and adjust them as needed. Three templates:

1 Spring Boot 3.x Statistics: https://grafana.com/grafana/dashboards/19004-spring-boot-statistics/
2 Node Exporter Full: https://grafana.com/grafana/dashboards/1860-node-exporter-full/
3 SpringBoot APM Dashboard (Chinese version): https://grafana.com/grafana/dashboards/21319-springboot-apm-dashboard/
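Instead of adding the data source by hand in the UI, Grafana can create it at startup from a provisioning file under `/etc/grafana/provisioning/datasources/`. The sketch below writes such a file into the host directory that the Grafana script mounts at `/etc/grafana`. `write_prometheus_datasource` is hypothetical, and so is the example URL, which assumes Prometheus is reached on host port 7090 through the `host.docker.internal` alias.

```bash
#!/bin/bash
# write_prometheus_datasource CONF_DIR PROM_URL
# Write a Grafana datasource provisioning file (hypothetical helper).
write_prometheus_datasource() {
  local dir="$1/provisioning/datasources"
  mkdir -p "$dir"
  cat > "$dir/prometheus.yaml" <<EOF
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    url: $2
    isDefault: true
EOF
}

# Usage:
#   write_prometheus_datasource /home/jens/grafana/conf http://host.docker.internal:7090
#   docker restart grafana
```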

    #!/bin/bash
    # One-click install and start of Grafana (Docker), initializing the config directory automatically
    GRAFANA_VERSION="12.2.1"
    GRAFANA_PORT=7300
    GRAFANA_NAME="grafana"
    GRAFANA_BASE_DIR="/home/jens/grafana"
    GRAFANA_DATA_DIR="${GRAFANA_BASE_DIR}/data"
    GRAFANA_CONF_DIR="${GRAFANA_BASE_DIR}/conf"

    echo "📁 Creating directories..."
    mkdir -p ${GRAFANA_DATA_DIR}
    mkdir -p ${GRAFANA_CONF_DIR}
    chmod -R 777 ${GRAFANA_BASE_DIR}

    echo "🧹 Stopping and removing the old container..."
    docker stop ${GRAFANA_NAME} >/dev/null 2>&1
    docker rm ${GRAFANA_NAME} >/dev/null 2>&1

    echo "📦 Pulling the Grafana image (version ${GRAFANA_VERSION})..."
    docker pull grafana/grafana:${GRAFANA_VERSION}

    # If there is no config file yet, copy the defaults out of a temporary container
    if [ ! -f "${GRAFANA_CONF_DIR}/grafana.ini" ]; then
        echo "📄 No config file found, copying the default config from a temporary container..."
        # Remove a temp container left over from an earlier failed run
        docker rm -f grafana_temp >/dev/null 2>&1
        docker run --name grafana_temp -d grafana/grafana:${GRAFANA_VERSION}
        sleep 3
        echo "➡️ Copying /etc/grafana to ${GRAFANA_CONF_DIR}"
        docker cp grafana_temp:/etc/grafana/. ${GRAFANA_CONF_DIR}
        docker stop grafana_temp >/dev/null
        docker rm grafana_temp >/dev/null
        chmod -R 777 ${GRAFANA_CONF_DIR}
        echo "✔ Default config copied"
    fi

    echo "🚀 Starting the Grafana container..."
    docker run -d \
      --name ${GRAFANA_NAME} \
      --add-host=host.docker.internal:host-gateway \
      -p ${GRAFANA_PORT}:3000 \
      -v ${GRAFANA_DATA_DIR}:/var/lib/grafana \
      -v ${GRAFANA_CONF_DIR}:/etc/grafana \
      --restart=always \
      grafana/grafana:${GRAFANA_VERSION}

    sleep 2
    if docker ps | grep -q ${GRAFANA_NAME}; then
        echo "✅ Grafana started"
        echo "🌐 URL: http://<your-public-IP>:${GRAFANA_PORT}"
        echo "👤 Default account: admin / admin"
    else
        echo "❌ Grafana failed to start, check the logs"
        docker logs ${GRAFANA_NAME}
    fi

Configure grafana.ini ([smtp] section). The lines without a leading ";" are the edited settings. The ";" lines that follow are the commented-out defaults from the stock file:

    [smtp]
    enabled = true
    host = smtp.qq.com:465
    user = 8364658511@qq.com
    # QQ Mail authorization code (not the login password)
    password = vladdwossizkmdgdadb
    # QQ Mail rejects a sender that differs from the authenticated account: keep this equal to user
    from_address = 8364655811@qq.com
    from_name = Grafana
    skip_verify = true
    # Port 465 uses implicit SSL (SMTPS), so STARTTLS must be disabled
    startTLS_policy = NoStartTLS
    ;enabled = false
    ;host = localhost:25
    ;user =
    # If the password contains # or ; you have to wrap it with triple quotes. Ex """#password;"""
    ;password =
    ;cert_file =
    ;key_file =
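The stock grafana.ini contains a commented-out copy of every setting, so it is easy to edit the wrong line. The sketch below prints only the active (uncommented) value of a key within one section, which lets you confirm the `[smtp]` edit took effect. `ini_get` is a hypothetical helper, and it assumes simple `key = value` lines.

```bash
#!/bin/bash
# ini_get FILE SECTION KEY : print the active value of KEY in [SECTION], skipping ; and # lines.
ini_get() {
  awk -v section="$2" -v key="$3" '
    /^[ \t]*[;#]/ { next }
    /^[ \t]*\[/ {
      s = $0
      sub(/^[ \t]*\[/, "", s)
      sub(/].*$/, "", s)
      in_sec = (s == section)
      next
    }
    in_sec {
      k = $0
      sub(/=.*$/, "", k)
      gsub(/[ \t]/, "", k)
      if (k == key) {
        v = $0
        sub(/^[^=]*=[ \t]*/, "", v)
        sub(/[ \t]+$/, "", v)
        print v
        exit
      }
    }' "$1"
}

# Usage: ini_get /home/jens/grafana/conf/grafana.ini smtp enabled
```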

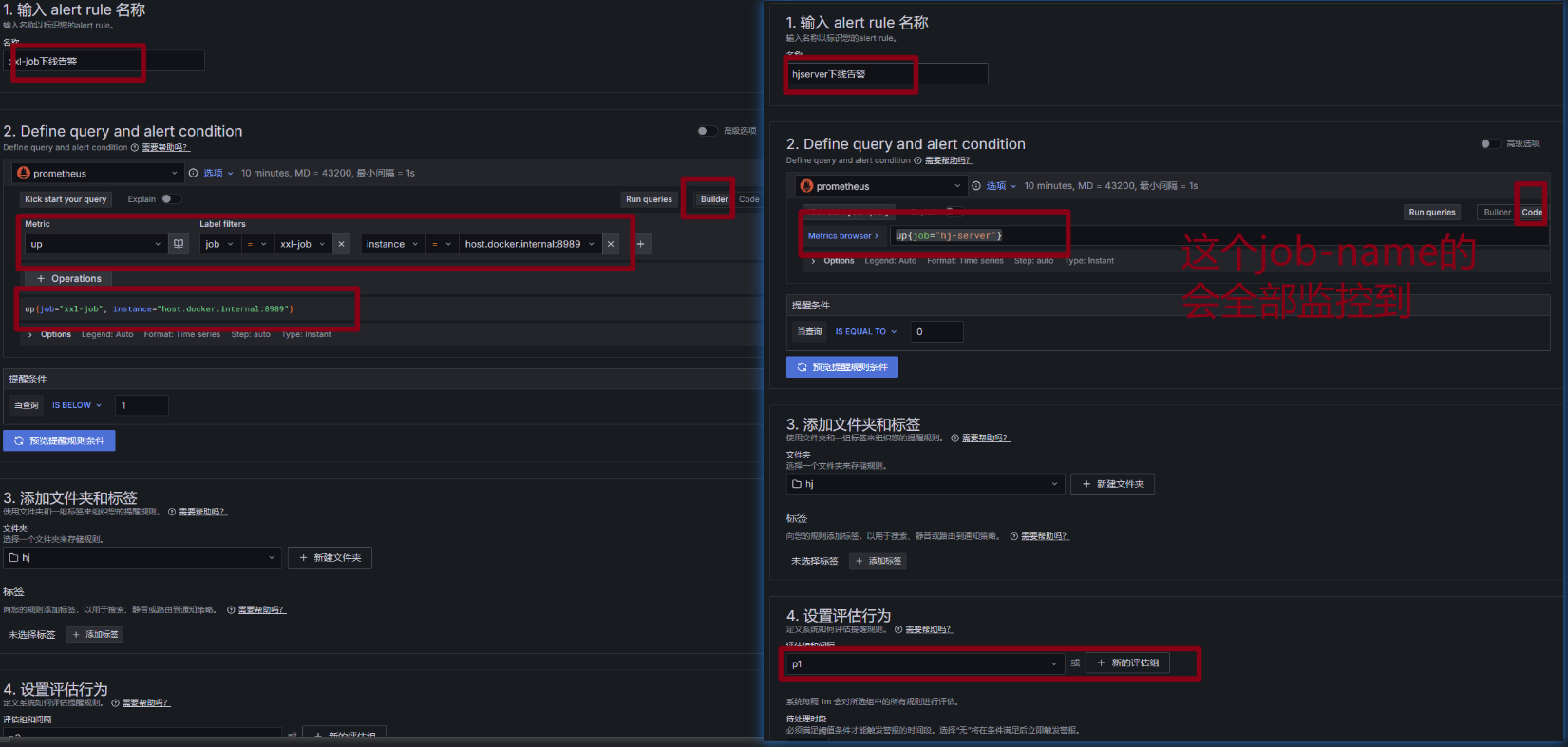

Configure alert rules

1 CPU usage alert

    100 - (avg by (instance)(irate(node_cpu_seconds_total{mode="idle"}[5m])) * 100)

Condition: IS ABOVE 70. Message: CPU usage is above 70%, please check!

2 Memory usage alert

    (1 - (node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes)) * 100

Condition: IS ABOVE 70. Message: Memory usage is above 70%, please check!

3 Disk usage alert

    (node_filesystem_size_bytes{fstype=~"ext.*|xfs",mountpoint!~".*pod.*"} - node_filesystem_free_bytes{fstype=~"ext.*|xfs",mountpoint!~".*pod.*"}) * 100
      / (node_filesystem_avail_bytes{fstype=~"ext.*|xfs",mountpoint!~".*pod.*"} + (node_filesystem_size_bytes{fstype=~"ext.*|xfs",mountpoint!~".*pod.*"} - node_filesystem_free_bytes{fstype=~"ext.*|xfs",mountpoint!~".*pod.*"}))

Condition: IS ABOVE 70. Message: Disk usage is above 70%, please check!

4 Disk I/O utilization alert

    100 * rate(node_disk_io_time_seconds_total{instance!="3.78.34.82:9100"}[5m])

Condition: IS ABOVE 70. Message: Disk I/O utilization is above 70%, please check!

5 Network upload traffic alert (Mbit/s)

    irate(node_network_transmit_bytes_total{device=~"ens5|eth0"}[5m]) * 8 / 1024 / 1024

Condition: IS ABOVE 1024. Message: Network upload traffic exceeds 1 Gbps, please check!

6 Network download traffic alert (Mbit/s)

    irate(node_network_receive_bytes_total{device=~"ens5|eth0"}[5m]) * 8 / 1024 / 1024

Condition: IS ABOVE 1024. Message: Network download traffic exceeds 1 Gbps, please check!

7 MySQL disk I/O utilization alert

    100 * rate(node_disk_io_time_seconds_total{instance="3.78.34.82:9100"}[5m])

Condition: IS ABOVE 80. Message: Disk I/O utilization of the MySQL temp database host is above 80%, please check!
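The arithmetic in the disk rule (3) and the traffic rules (5, 6) can be checked by hand. The sketch below applies the same formulas to plain numbers. For disk usage, used = size - free, and usage = used × 100 / (avail + used). `avail` excludes the blocks reserved for root, so this is the same ratio `df` reports, rather than used / size. For traffic, the byte rate × 8 / 1024 / 1024 gives Mbit/s in binary units, so the threshold 1024 corresponds to 1 Gbit/s. Both function names are hypothetical.

```bash
#!/bin/bash
# disk_usage_pct SIZE FREE AVAIL : usage percentage as computed by alert rule 3 (any consistent unit).
disk_usage_pct() {
  awk -v size="$1" -v free="$2" -v avail="$3" \
    'BEGIN { used = size - free; printf "%.1f\n", used * 100 / (avail + used) }'
}

# bytes_per_sec_to_mbps RATE : conversion used by alert rules 5 and 6.
bytes_per_sec_to_mbps() {
  awk -v r="$1" 'BEGIN { printf "%.1f\n", r * 8 / 1024 / 1024 }'
}
```

For example, a 100 GB disk with 10 GB free but only 5 GB available to users reports 94.7%, not 90%.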

    #!/bin/bash
    # One-click install of Jenkins (Docker, 2.528.2-lts-jdk17), mounting the host's JDK/Maven/Git
    JENKINS_NAME="jenkins"
    JENKINS_PORT=8800
    JENKINS_VERSION="2.528.2-lts-jdk17"
    JENKINS_HOME="/home/jens/jenkins"
    # Tool directory on the host
    HOST_INSTALL="/home/install"

    echo "🧹 Removing the old Jenkins..."
    docker stop ${JENKINS_NAME} >/dev/null 2>&1
    docker rm ${JENKINS_NAME} >/dev/null 2>&1

    echo "📁 Creating the persistent directory..."
    mkdir -p ${JENKINS_HOME}
    chmod -R 777 ${JENKINS_HOME}

    echo "📦 Pulling the Jenkins image: ${JENKINS_VERSION}"
    docker pull jenkins/jenkins:${JENKINS_VERSION}

    echo "🚀 Starting Jenkins..."
    # PATH is spelled out: "$PATH" would be expanded by the HOST shell to the host's PATH.
    # The tail of the value is assumed to match the image's default PATH.
    docker run -d \
      --name ${JENKINS_NAME} \
      --user=root \
      --add-host=host.docker.internal:host-gateway \
      -p ${JENKINS_PORT}:8080 \
      -p 50000:50000 \
      -v ${JENKINS_HOME}:/var/jenkins_home \
      -v /var/run/docker.sock:/var/run/docker.sock \
      -v ${HOST_INSTALL}/jdk17:/opt/jdk17:ro \
      -v ${HOST_INSTALL}/maven:/opt/maven:ro \
      -v ${HOST_INSTALL}/git:/opt/git:ro \
      -e JAVA_HOME=/opt/jdk17 \
      -e PATH=/opt/jdk17/bin:/opt/maven/bin:/opt/git/bin:/opt/java/openjdk/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin \
      --restart=always \
      jenkins/jenkins:${JENKINS_VERSION}

    sleep 5
    echo ""
    echo "🎉 Jenkins 2.528.2-lts started! Visit: http://<your-IP>:${JENKINS_PORT}"
    echo "Initial admin password:"
    echo "docker exec -it jenkins cat /var/jenkins_home/secrets/initialAdminPassword"
    echo ""

Install the suggested plugins. If the network is slow, you can also download the plugin files and upload them manually.

Global Tool Configuration:
JDK: JAVA_HOME = /opt/java/openjdk
Git: Path to Git executable = /usr/bin/git
Maven: MAVEN_HOME = /opt/maven
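The printed `docker exec -it ...` command fails when run from another script, because `-it` requires a TTY. It also fails before Jenkins has finished its first start. The sketch below waits for the file and prints the password without a TTY. `jenkins_initial_password` is a hypothetical helper, and it uses the same path as the command above.

```bash
#!/bin/bash
# jenkins_initial_password [CONTAINER] [TIMEOUT_SECONDS]
# Wait until initialAdminPassword exists, then print it (hypothetical helper).
jenkins_initial_password() {
  local name="${1:-jenkins}" timeout="${2:-120}" i
  for ((i = 0; i < timeout; i++)); do
    # No -it: works in non-interactive scripts too
    if docker exec "$name" cat /var/jenkins_home/secrets/initialAdminPassword 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  echo "initialAdminPassword not found after ${timeout}s; check: docker logs $name" >&2
  return 1
}
```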

Deploying projects from a Jenkins that runs in Docker turned out to be cumbersome. It needs many mounted directories, and you also have to write a watcher that detects a new jar and restarts the service. Installing Jenkins directly on the host is the better option. The Docker attempt, for reference:

    #!/bin/bash
    # One-click install of Jenkins (Docker, 2.528.2-lts-jdk17), mounting the host's JDK/Maven/Git
    JENKINS_NAME="jenkins"
    JENKINS_PORT=8800
    JENKINS_VERSION="2.528.2-lts-jdk17"
    JENKINS_HOME="/home/jens/jenkins"
    # Tool directory on the host
    HOST_INSTALL="/home/install"
    # Project directory on the host
    HOST_SPRING="/root/hj"

    echo "🧹 Removing the old Jenkins..."
    docker stop ${JENKINS_NAME} >/dev/null 2>&1
    docker rm ${JENKINS_NAME} >/dev/null 2>&1

    echo "📁 Creating the persistent directory..."
    mkdir -p ${JENKINS_HOME}
    chmod -R 777 ${JENKINS_HOME}

    echo "📦 Pulling the Jenkins image: ${JENKINS_VERSION}"
    docker pull jenkins/jenkins:${JENKINS_VERSION}

    echo "🚀 Starting Jenkins..."
    # PATH is spelled out: "$PATH" would be expanded by the HOST shell to the host's PATH
    docker run -d \
      --name ${JENKINS_NAME} \
      --user=root \
      --add-host=host.docker.internal:host-gateway \
      -p ${JENKINS_PORT}:8080 \
      -p 50000:50000 \
      -v ${JENKINS_HOME}:/var/jenkins_home \
      -v /var/run/docker.sock:/var/run/docker.sock \
      -v ${HOST_INSTALL}/jdk17:/opt/jdk17:ro \
      -v ${HOST_INSTALL}/maven:/opt/maven:ro \
      -v ${HOST_INSTALL}/maven_repo:/home/install/maven_repo \
      -v ${HOST_SPRING}/:/root/hj \
      -v ${HOST_INSTALL}/git:/opt/git:ro \
      -e JAVA_HOME=/opt/jdk17 \
      -e PATH=/opt/jdk17/bin:/opt/maven/bin:/opt/git/bin:/opt/java/openjdk/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin \
      --restart=always \
      jenkins/jenkins:${JENKINS_VERSION}

    sleep 5
    echo ""
    echo "🎉 Jenkins 2.528.2-lts started! Visit: http://<your-IP>:${JENKINS_PORT}"
    echo "Initial admin password:"
    echo "docker exec -it jenkins cat /var/jenkins_home/secrets/initialAdminPassword"
    echo ""

autoRestart.sh:

    #!/bin/bash
    TARGET_JAR="hj-server-0.0.1-SNAPSHOT.jar"
    JAR_PATH="/root/hj/hj_boot/${TARGET_JAR}"
    START_SCRIPT="/root/hj/hj_boot/hjServer.sh"

    echo "=============================="
    echo "🔄 New JAR detected: ${TARGET_JAR}"
    echo "🔧 Running: hjServer.sh"
    echo "=============================="

    if [ ! -f "$JAR_PATH" ]; then
        echo "❌ JAR file not found: $JAR_PATH"
        exit 1
    fi

    if [ ! -x "$START_SCRIPT" ]; then
        echo "⚠ Start script is not executable, adding execute permission"
        chmod +x "$START_SCRIPT"
    fi

    echo "🚀 Running hjServer.sh ..."
    "$START_SCRIPT"
    echo "🎉 Service restarted!"
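watchJar.sh (requires the inotify-tools package):

    #!/bin/bash
    WATCH_DIR="/root/hj/hj_boot"
    TARGET_JAR="hj-server-0.0.1-SNAPSHOT.jar"

    echo "👀 Watching directory: $WATCH_DIR"
    # close_write/moved_to fire once the file is completely written; "modify" fires
    # repeatedly while cp is still copying, which would trigger several restarts
    inotifywait -m -e close_write,moved_to "$WATCH_DIR" | while read -r path action file; do
        if [[ "$file" == "$TARGET_JAR" ]]; then
            echo "🔔 JAR update detected: $file"
            /root/hj/hj_boot/autoRestart.sh
        fi
    done

/etc/systemd/system/hj-watch.service:

    [Unit]
    Description=Watch hj-server JAR update and auto restart
    After=network.target

    [Service]
    ExecStart=/root/hj/watchJar.sh
    Restart=always
    User=root

    [Install]
    WantedBy=multi-user.target

Enable and start it:

    systemctl daemon-reload
    systemctl enable hj-watch
    systemctl start hj-watch

Jenkins build script:

    echo "=== Copying the hj-server jar to the host ==="
    JAR="hj-server/target/hj-server-0.0.1-SNAPSHOT.jar"
    if [ ! -f "$JAR" ]; then
        echo "❌ JAR not found"
        exit 1
    fi
    cp "$JAR" /root/hj/hj_boot/
    echo "🎉 JAR copied to the host → restart triggered automatically!"

This whole setup was never actually tried. It is kept here only for reference and may not work, because in practice there is no real need to do it this way.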
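Even with `close_write`, one deployment can produce more than one matching event, for example when a build copies the jar twice. The sketch below adds a simple debounce: the service restarts only if the previous restart is older than N seconds. `should_restart`, `DEBOUNCE_SECONDS` and the state-file path are hypothetical additions to the untested watcher.

```bash
#!/bin/bash
# Debounce for watchJar.sh (hypothetical): skip restarts that follow the previous one too closely.
DEBOUNCE_SECONDS="${DEBOUNCE_SECONDS:-30}"
STATE_FILE="${STATE_FILE:-/tmp/hj_last_restart}"

# should_restart NOW_EPOCH : succeed (and record NOW) if the last restart is old enough or absent.
should_restart() {
  local now="$1" last=0
  [ -f "$STATE_FILE" ] && last=$(cat "$STATE_FILE")
  if (( now - last < DEBOUNCE_SECONDS )); then
    return 1
  fi
  echo "$now" > "$STATE_FILE"
}

# In watchJar.sh: should_restart "$(date +%s)" && /root/hj/hj_boot/autoRestart.sh
```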

#!/bin/bash ############################################################## # 🚀 一键部署 Loki + Promtail # 所有运行参数集中在顶部,方便统一修改 ############################################################## ########## 基础配置(修改这里) ########## # 部署根目录 BASE_DIR="/home/jens" # Loki LOKI_NAME="loki" LOKI_PORT="3100" LOKI_VERSION="2.9.1" # Promtail PROMTAIL_NAME="promtail" PROMTAIL_PORT="9080" PROMTAIL_VERSION="2.9.1" # SpringBoot 日志目录(宿主机) HJ_SERVER_LOG="/app/hj/hj_boot/logs/hjBoot" HJ_SERVER2_LOG="/app/hj/hj_boot2/logs/hjBoot" ########## 运行目录 ########## LOKI_DIR="${BASE_DIR}/loki" PROMTAIL_DIR="${BASE_DIR}/promtail" PROMTAIL_CONF="${PROMTAIL_DIR}/promtail.yml" echo "==== 创建目录 ====" mkdir -p ${LOKI_DIR} mkdir -p ${PROMTAIL_DIR} ############################################################## # 2️⃣ 停止旧 Loki ############################################################## echo "==== 停止并删除旧 Loki ====" docker stop ${LOKI_NAME} >/dev/null 2>&1 docker rm ${LOKI_NAME} >/dev/null 2>&1 ############################################################## # 3️⃣ 启动 Loki ############################################################## echo "==== 启动 Loki ${LOKI_VERSION} ====" docker run -d \ --name ${LOKI_NAME} \ --add-host=host.docker.internal:host-gateway \ -p ${LOKI_PORT}:3100 \ -v ${LOKI_DIR}:/data \ -v ${LOKI_DIR}/loki.yaml:/etc/loki/loki.yaml \ --restart=always \ grafana/loki:${LOKI_VERSION} \ -config.file=/etc/loki/local-config.yaml ############################################################## # 4️⃣ 停止旧 Promtail ############################################################## echo "==== 停止并删除旧 Promtail ====" docker stop ${PROMTAIL_NAME} >/dev/null 2>&1 docker rm ${PROMTAIL_NAME} >/dev/null 2>&1 ############################################################## # 5️⃣ 启动 Promtail ############################################################## echo "==== 启动 Promtail ${PROMTAIL_VERSION} ====" docker run -d \ --name ${PROMTAIL_NAME} \ --add-host=host.docker.internal:host-gateway \ -p ${PROMTAIL_PORT}:${PROMTAIL_PORT} \ -v 
${PROMTAIL_CONF}:/etc/promtail/promtail.yml \ -v ${HJ_SERVER_LOG}:/logs/hj-server \ -v ${HJ_SERVER2_LOG}:/logs/hj-server2 \ --restart=always \ grafana/promtail:${PROMTAIL_VERSION} \ -config.file=/etc/promtail/promtail.yml ############################################################## # 6️⃣ 完成 ############################################################## echo "" echo "==================================================" echo " 🚀 Loki + Promtail 已启动成功" echo "--------------------------------------------------" echo " Loki:" echo " http://<服务器IP>:${LOKI_PORT}" echo "" echo " Promtail:" echo " http://<服务器IP>:${PROMTAIL_PORT}" echo "" echo " Grafana 数据源配置:" echo " 类型:Loki" echo " URL:http://host.docker.internal:${LOKI_PORT}" echo "==================================================" promatail.yml server: http_listen_port: 9080 grpc_listen_port: 0 positions: filename: /tmp/promtail_position.yaml clients: - url: http://host.docker.internal:3100/loki/api/v1/push scrape_configs: # ========================================================================== # 🟢 hj-server 日志采集 # ========================================================================== - job_name: hj-server static_configs: - targets: - localhost labels: job: hj-server service: hj-server __path__: /logs/hj-server/*.log pipeline_stages: - regex: # 捕获 TRACE/DEBUG/INFO/WARN/ERROR/FATAL expression: "(?P<severity>TRACE|DEBUG|INFO|WARN|ERROR|FATAL)" # ========================================================================== # 🔵 hj-server2 日志采集 # ========================================================================== - job_name: hj-server2 static_configs: - targets: - localhost labels: job: hj-server2 service: hj-server2 __path__: /logs/hj-server2/*.log pipeline_stages: - regex: expression: "(?P<severity>TRACE|DEBUG|INFO|WARN|ERROR|FATAL)" loki.ymal auth_enabled: false server: http_listen_port: 3100 ingester: lifecycler: ring: kvstore: store: inmemory chunk_idle_period: 1h schema_config: configs: - from: 2024-01-01 
      store: boltdb-shipper
      object_store: filesystem
      schema: v13
      index:
        prefix: index_
        period: 24h
storage_config:
  filesystem:
    directory: /loki/data
  boltdb_shipper:
    active_index_directory: /loki/index
    shared_store: filesystem
limits_config:
  retention_period: 7d
table_manager:
  retention_deletes_enabled: true
  retention_period: 7d

Check from a Windows machine:
C:\Users\Administrator>curl http://119.45.127.57:3100/loki/api/v1/labels

Loki metrics and readiness, and the Promtail targets page:
http://146.56.196.100:3100/metrics
http://146.56.196.100:3100/ready
http://146.56.196.100:9080/targets

Grafana: add a Loki data source, then in Explore select Loki as the data source to view the logs.
Dashboard: https://grafana.com/grafana/dashboards/13359-logs/ (use this template). In the dashboard's JSON Model, change "Container" to "job", because the project's logs are labeled with job and service.

Log configuration file:

<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="ERROR" monitorInterval="1800">
    <!--
        status="ERROR": level of Log4j2's own internal status logging at startup; only ERROR and above is printed
        monitorInterval="1800": check this file for changes every 1800 seconds (30 minutes) and reload it automatically
    -->
    <Properties>
        <!-- Application name -->
        <property name="APP_NAME">hjBoot</property>
        <!-- Main log directory -->
        <property name="LOG_PATH">./logs/${APP_NAME}</property>
        <!-- Backup directory (holds the archives) -->
        <property name="LOG_BACKUP_PATH">${LOG_PATH}/backup</property>
        <!-- Archive name prefix: one folder per month. Each RollingFile appends its own level plus
             -%d{yyyy-MM-dd}_%i.log.zip (daily, zip-compressed), because two appenders must not share a filePattern -->
        <property name="FILE_PATTERN_BASE">${LOG_BACKUP_PATH}/${date:yyyy-MM}/${APP_NAME}</property>
        <!-- Console log format -->
        <property name="PATTERN_CONSOLE">%d{yyyy-MM-dd HH:mm:ss.SSS} | %-5level | %msg%n</property>
        <!-- File log format -->
        <property name="PATTERN_FILE">%d{yyyy-MM-dd HH:mm:ss} %-5level %logger{36} - %msg%n</property>
    </Properties>

    <Appenders>
        <!-- Console output, suitable for development/debugging and Promtail collection -->
        <Console name="ConsolePrint" target="SYSTEM_OUT">
            <PatternLayout pattern="${PATTERN_CONSOLE}"/>
        </Console>

        <!-- INFO log file -->
        <RollingFile name="RollingFileInfo" fileName="${LOG_PATH}/${APP_NAME}-info.log"
                     filePattern="${FILE_PATTERN_BASE}-info-%d{yyyy-MM-dd}_%i.log.zip">
            <Filters>
                <!-- INFO only: deny WARN and above, accept INFO, deny everything below -->
                <ThresholdFilter level="WARN" onMatch="DENY" onMismatch="NEUTRAL"/>
                <ThresholdFilter level="INFO" onMatch="ACCEPT" onMismatch="DENY"/>
            </Filters>
            <PatternLayout pattern="${PATTERN_FILE}"/>
            <Policies>
                <!-- Roll over daily -->
                <TimeBasedTriggeringPolicy interval="1" modulate="true"/>
                <!-- Also roll over when the file exceeds 16MB -->
                <SizeBasedTriggeringPolicy size="16MB"/>
            </Policies>
            <!-- max="30": at most 30 size-based archives (%i) per day; older days are not deleted automatically -->
            <DefaultRolloverStrategy max="30"/>
        </RollingFile>

        <!-- WARN log file -->
        <RollingFile name="RollingFileWarn" fileName="${LOG_PATH}/${APP_NAME}-warn.log"
                     filePattern="${FILE_PATTERN_BASE}-warn-%d{yyyy-MM-dd}_%i.log.zip">
            <Filters>
                <!-- WARN only -->
                <ThresholdFilter level="ERROR" onMatch="DENY" onMismatch="NEUTRAL"/>
                <ThresholdFilter level="WARN" onMatch="ACCEPT" onMismatch="DENY"/>
            </Filters>
            <PatternLayout pattern="${PATTERN_FILE}"/>
            <Policies>
                <TimeBasedTriggeringPolicy interval="1" modulate="true"/>
                <SizeBasedTriggeringPolicy size="16MB"/>
            </Policies>
            <DefaultRolloverStrategy max="30"/>
        </RollingFile>

        <!-- ERROR log file -->
        <RollingFile name="RollingFileError" fileName="${LOG_PATH}/${APP_NAME}-error.log"
                     filePattern="${FILE_PATTERN_BASE}-error-%d{yyyy-MM-dd}_%i.log.zip">
            <Filters>
                <!-- ERROR only -->
                <ThresholdFilter level="FATAL" onMatch="DENY" onMismatch="NEUTRAL"/>
                <ThresholdFilter level="ERROR" onMatch="ACCEPT" onMismatch="DENY"/>
            </Filters>
            <PatternLayout pattern="${PATTERN_FILE}"/>
            <Policies>
                <TimeBasedTriggeringPolicy interval="1" modulate="true"/>
                <SizeBasedTriggeringPolicy size="16MB"/>
            </Policies>
            <DefaultRolloverStrategy max="30"/>
        </RollingFile>

        <!-- FATAL log file -->
        <RollingFile name="RollingFileFatal" fileName="${LOG_PATH}/${APP_NAME}-fatal.log"
                     filePattern="${FILE_PATTERN_BASE}-fatal-%d{yyyy-MM-dd}_%i.log.zip">
            <Filters>
                <!-- FATAL only -->
                <ThresholdFilter level="FATAL" onMatch="ACCEPT" onMismatch="DENY"/>
            </Filters>
            <PatternLayout pattern="${PATTERN_FILE}"/>
            <Policies>
                <TimeBasedTriggeringPolicy interval="1" modulate="true"/>
                <SizeBasedTriggeringPolicy size="16MB"/>
            </Policies>
            <DefaultRolloverStrategy max="30"/>
        </RollingFile>
    </Appenders>

    <Loggers>
        <!-- Root logger: level INFO, so WARN, ERROR and FATAL events are logged as well -->
        <Root level="INFO" includeLocation="true">
            <!-- Console -->
            <AppenderRef ref="ConsolePrint"/>
            <!-- INFO/WARN/ERROR/FATAL files -->
            <AppenderRef ref="RollingFileInfo"/>
            <AppenderRef ref="RollingFileWarn"/>
            <AppenderRef ref="RollingFileError"/>
            <AppenderRef ref="RollingFileFatal"/>
        </Root>
    </Loggers>
</Configuration>

./hj_loki_promtail.sh
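Because each RollingFile uses a pair of ThresholdFilters, every level file should contain exactly one level. A quick way to confirm this on the server is a small shell check. This is a sketch: `check_level_file` is a hypothetical helper, and it assumes the PATTERN_FILE layout above, where the level is the third whitespace-separated field of each event line.

```shell
#!/bin/bash
# check_level_file (hypothetical helper): succeed only if every log event in a file has the given level.
# Assumes PATTERN_FILE "%d{yyyy-MM-dd HH:mm:ss} %-5level %logger{36} - %msg%n",
# so field 1 is the date, field 2 the time and field 3 the level.
check_level_file() {
  local file="$1" level="$2" bad
  # Only lines starting with a date are events; stack-trace continuation lines are skipped.
  bad=$(grep -E '^[0-9]{4}-[0-9]{2}-[0-9]{2} ' "$file" | awk -v lvl="$level" '$3 != lvl' | wc -l)
  [ "$bad" -eq 0 ]
}

# Demo on a throwaway file that mimics hjBoot-warn.log
demo=$(mktemp)
printf '%s\n' \
  '2025-12-15 10:00:00 WARN  c.h.job.SyncJob - retry 1/3' \
  'java.net.SocketTimeoutException: Read timed out' \
  '2025-12-15 10:00:05 WARN  c.h.job.SyncJob - retry 2/3' > "$demo"
if check_level_file "$demo" WARN; then
  echo "OK: $demo only contains WARN events"
fi
```

On the server the same call would be, for example, `check_level_file ./logs/hjBoot/hjBoot-warn.log WARN`.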

~~~~Host machine~~~~

jenkins_start.sh

#!/bin/bash
JENKINS_HOME=/home/jens/jenkins
JENKINS_WAR=/home/install/packages/jenkins.war
JAVA=/home/install/jdk17/bin/java
LOG=/home/install/jenkins/jenkins.log
PID_FILE=/home/install/jenkins/jenkins.pid

# Create the required directories
mkdir -p "$(dirname "$LOG")"
mkdir -p "$JENKINS_HOME"

# Check whether Jenkins is already running
if [ -f "$PID_FILE" ] && kill -0 "$(cat "$PID_FILE")" 2>/dev/null; then
  echo "⚠ Jenkins is already running, PID: $(cat "$PID_FILE")"
  exit 1
fi

echo "🚀 Starting Jenkins..."
nohup "$JAVA" -DJENKINS_HOME="$JENKINS_HOME" \
  -jar "$JENKINS_WAR" \
  --httpPort=8800 \
  > "$LOG" 2>&1 &
PID=$!
echo $PID > "$PID_FILE"

sleep 1
if kill -0 $PID 2>/dev/null; then
  echo "✔ Jenkins started. PID: $PID"
  echo "🌐 URL: http://<your-server-IP>:8800"
  echo "📄 Log: $LOG"
else
  echo "❌ Jenkins failed to start, check the log: $LOG"
fi

jenkins_stop.sh

#!/bin/bash
PID_FILE=/home/install/jenkins/jenkins.pid

if [ ! -f "$PID_FILE" ]; then
  echo "⚠ Jenkins PID file not found; Jenkins is probably not running."
  exit 1
fi

PID=$(cat "$PID_FILE")
echo "🛑 Stopping Jenkins (PID=$PID)..."
kill "$PID"
sleep 2

if kill -0 "$PID" 2>/dev/null; then
  echo "⚠ Still running, forcing kill -9"
  kill -9 "$PID"
fi

rm -f "$PID_FILE"
echo "✔ Jenkins stopped"

jenkins_restart.sh

#!/bin/bash
/home/install/jenkins/jenkins_stop.sh
sleep 2
/home/install/jenkins/jenkins_start.sh

Global Tool Configuration:
JDK    /home/install/jdk17
Maven  /home/install/maven
Git    /home/install/git/bin/git

Build the project
Source Code Management: https://gitee.com/huajian2018/boot.git
Credentials: select the configured username/password credential
Build Steps → Execute shell:

#!/bin/bash
set -e

WORKSPACE=$WORKSPACE   # provided by Jenkins
DEPLOY_DIR1="/app/hj/hj_boot"
DEPLOY_DIR2="/app/hj/hj_boot2"
MODULE_NAME="hj-server"

# ===============================
echo "📦 Building the Maven project..."
/home/install/maven/bin/mvn clean package -Dmaven.test.skip=true

JAR_FILE="$WORKSPACE/$MODULE_NAME/target/$MODULE_NAME-0.0.1-SNAPSHOT.jar"
if [ ! -f "$JAR_FILE" ]; then
  echo "❌ Build artifact not found: $JAR_FILE"
  exit 1
fi
echo "✔ Jar file: $JAR_FILE"

# ===============================
for DEPLOY_DIR in "$DEPLOY_DIR1" "$DEPLOY_DIR2"; do
  echo "🚀 Deploying to $DEPLOY_DIR ..."
  mkdir -p "$DEPLOY_DIR/history"

  # Back up the old jar
  if [ -f "$DEPLOY_DIR/$MODULE_NAME-0.0.1-SNAPSHOT.jar" ]; then
    mv "$DEPLOY_DIR/$MODULE_NAME-0.0.1-SNAPSHOT.jar" \
       "$DEPLOY_DIR/history/$MODULE_NAME-0.0.1-SNAPSHOT.jar_$(date +%Y%m%d_%H%M%S)"
  fi

  # Copy the new jar
  cp "$JAR_FILE" "$DEPLOY_DIR/"

  # Start the service
  cd "$DEPLOY_DIR"
  chmod +x ./hjServer.sh
  ./hjServer.sh
done

echo "🎉 Deployment finished!"
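The backup-then-copy part of the loop above can be pulled into one function and tried out in a temporary directory before pointing it at /app/hj. This is a sketch: `deploy_jar` is a hypothetical name, and it deliberately does not run ./hjServer.sh.

```shell
#!/bin/bash
set -e
# deploy_jar (hypothetical helper): mirrors the loop body of the Jenkins build step.
# Moves the previous jar into history/ with a timestamp suffix, then copies the new jar in.
# It does not start the service.
deploy_jar() {
  local jar="$1" deploy_dir="$2" name
  name=$(basename "$jar")
  mkdir -p "$deploy_dir/history"
  if [ -f "$deploy_dir/$name" ]; then
    mv "$deploy_dir/$name" "$deploy_dir/history/${name}_$(date +%Y%m%d_%H%M%S)"
  fi
  cp "$jar" "$deploy_dir/"
}

# Demo in a temporary directory instead of /app/hj/hj_boot
work=$(mktemp -d)
jar="$work/hj-server-0.0.1-SNAPSHOT.jar"
echo "build 1" > "$jar"; deploy_jar "$jar" "$work/deploy"
echo "build 2" > "$jar"; deploy_jar "$jar" "$work/deploy"
ls "$work/deploy" "$work/deploy/history"
```

After two deployments the current jar is the second build and history/ holds exactly one timestamped copy of the first.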

To deploy to other servers, first set up key-based SSH login to the target server:
ssh-keygen -t rsa
ssh-copy-id user@TARGET_IP

Example with several targets:
ssh-keygen -t rsa
ssh-copy-id root@1.13.247.96
ssh-copy-id root@1.13.247.97
ssh-copy-id root@1.13.247.98

What was actually run:
ssh-keygen -t rsa
ssh-copy-id root@119.45.26.162
ssh -i /root/.ssh/id_rsa root@119.45.26.162

Jenkins build step: Execute shell (Freestyle project)

#!/bin/bash
set -e

# Local Maven build environment
WORKSPACE=$WORKSPACE
DEPLOY_DIR="/app/hjserver"            # single deployment directory
MODULE_NAME="hj-server"

# Remote server
REMOTE_SERVER="119.45.26.162"         # remote server IP
REMOTE_USER="root"                    # SSH user on the remote server
REMOTE_DIR="/tmp/hj_deploy"           # remote staging directory for the uploaded jar
REMOTE_SSH_KEY="/root/.ssh/id_rsa"    # path to your SSH private key

# ===============================
echo "📦 Building the Maven project..."
/home/install/maven/bin/mvn clean package -Dmaven.test.skip=true

JAR_FILE="$WORKSPACE/$MODULE_NAME/target/$MODULE_NAME-0.0.1-SNAPSHOT.jar"
if [ ! -f "$JAR_FILE" ]; then
  echo "❌ Build artifact not found: $JAR_FILE"
  exit 1
fi
echo "✔ Jar file: $JAR_FILE"

# ===============================
echo "🚀 Uploading the jar to remote server $REMOTE_SERVER..."
# Make sure the remote staging directory exists and is writable
ssh -i "$REMOTE_SSH_KEY" "$REMOTE_USER@$REMOTE_SERVER" "mkdir -p $REMOTE_DIR"
# Upload the jar with scp
scp -i "$REMOTE_SSH_KEY" "$JAR_FILE" "$REMOTE_USER@$REMOTE_SERVER:$REMOTE_DIR/"

# ===============================
# Deploy on the remote server
echo "🚀 Deploying on remote server $REMOTE_SERVER..."
# The heredoc is unquoted: $VAR is expanded locally, \$VAR is left for the remote shell
ssh -i "$REMOTE_SSH_KEY" "$REMOTE_USER@$REMOTE_SERVER" <<EOF
set -e   # the local "set -e" does not apply to the remote shell
# Deployment directory
DEPLOY_DIR="/app/hjserver"
MODULE_NAME="hj-server"
BACKUP_DIR="\$DEPLOY_DIR/history"

# Create the backup directory if it does not exist
mkdir -p \$BACKUP_DIR

# Back up the old jar in the deployment directory
echo "🚀 Deploying to \$DEPLOY_DIR ..."
if [ -f "\$DEPLOY_DIR/\$MODULE_NAME-0.0.1-SNAPSHOT.jar" ]; then
  mv \$DEPLOY_DIR/\$MODULE_NAME-0.0.1-SNAPSHOT.jar \
     \$BACKUP_DIR/\$MODULE_NAME-0.0.1-SNAPSHOT.jar_\$(date +%Y%m%d_%H%M%S)
fi

# Copy the new jar
cp "$REMOTE_DIR/$MODULE_NAME-0.0.1-SNAPSHOT.jar" \$DEPLOY_DIR/

# Start the service
cd \$DEPLOY_DIR
chmod +x ./hjserver.sh
./hjserver.sh
echo "🎉 Deployment finished!"
EOF

# ===============================
echo "🎉 Deployment to the remote server finished!"
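The remote part above relies on escaping every remote variable as `\$VAR`, which is easy to get wrong. An alternative is a quoted heredoc (`<<'EOF'`) that receives its values as positional parameters through `bash -s --`. The sketch below runs the "remote" script with local bash so it can be tried safely; `run_remote` is a hypothetical stand-in, and on the real server its body would become `ssh -i "$REMOTE_SSH_KEY" "$REMOTE_USER@$REMOTE_SERVER" bash -s -- "$@"` (ssh joins the arguments into one command line, so they must not contain spaces).

```shell
#!/bin/bash
set -e
# run_remote (hypothetical stand-in for ssh): feed the heredoc to bash, passing values as $1, $2, ...
run_remote() {
  bash -s -- "$@"
}

DEPLOY_DIR=$(mktemp -d)    # stands in for /app/hjserver on the remote host
UPLOAD_DIR=$(mktemp -d)    # stands in for /tmp/hj_deploy
JAR="hj-server-0.0.1-SNAPSHOT.jar"
echo old > "$DEPLOY_DIR/$JAR"
echo new > "$UPLOAD_DIR/$JAR"

# Quoted 'EOF': nothing below is expanded locally, so no \$ escaping is needed
run_remote "$DEPLOY_DIR" "$UPLOAD_DIR" "$JAR" <<'EOF'
set -e
deploy_dir="$1"; upload_dir="$2"; jar="$3"
mkdir -p "$deploy_dir/history"
if [ -f "$deploy_dir/$jar" ]; then
  mv "$deploy_dir/$jar" "$deploy_dir/history/${jar}_$(date +%Y%m%d_%H%M%S)"
fi
cp "$upload_dir/$jar" "$deploy_dir/"
echo "deployed $jar to $deploy_dir"
EOF
```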

#!/bin/bash
# ===============================================
# hj_install_env.sh
# Offline installation of JDK17 / JDK8 / Maven / Git
# AUTHOR: HJ
# ===============================================
set -e

INSTALL_DIR="/home/install"
PACKAGE_DIR="/home/install/packages"
ENV_FILE="/etc/profile.d/hj_env.sh"

echo "===================================="
echo " 🚀 Starting offline installation of the development environment"
echo "===================================="
echo "📁 Install directory: $INSTALL_DIR"
echo "📦 Package directory: $PACKAGE_DIR"
echo

mkdir -p "$INSTALL_DIR"

# ----------------- Helper functions -----------------
install_pkg() {
  local pkg_name="$1"
  local pkg_pattern="$2"
  local install_path="$3"
  local pkg_tar="$PACKAGE_DIR/${pkg_name}.tar.gz"

  echo "------------------------------------"
  echo " 🔧 Installing ${pkg_name}"

  if [ -d "$install_path" ]; then
    echo "✔ ${pkg_name} already exists, skipping"
    return
  fi

  if [ ! -f "$pkg_tar" ]; then
    echo "❌ Package not found: $pkg_tar"
    exit 1
  fi

  tar -xzf "$pkg_tar" -C "$INSTALL_DIR"
  pkg_dir=$(ls "$INSTALL_DIR" | grep -E "$pkg_pattern" | head -n 1 || true)

  if [ -z "$pkg_dir" ]; then
    echo "❌ Extracted directory not found, pattern=$pkg_pattern"
    exit 1
  fi

  mv "$INSTALL_DIR/$pkg_dir" "$install_path"
  echo "✔ Installed → $install_path"
  echo
}

append_env_once() {
  local line="$1"
  local file="$2"
  grep -F "$line" "$file" >/dev/null 2>&1 || echo "$line" >> "$file"
}

# ----------------- Install JDK / Maven -----------------
install_pkg "jdk17" "jdk.*17" "$INSTALL_DIR/jdk17"
install_pkg "jdk8"  "jdk.*8"  "$INSTALL_DIR/jdk8"
install_pkg "maven" "apache-maven" "$INSTALL_DIR/maven"

# ----------------- Install Git (built from source, with HTTPS, without gitweb) -----------------
install_git() {
  echo "------------------------------------"
  echo " 🔧 Installing Git (built from source, with HTTPS, without gitweb)"

  local GIT_INSTALL_DIR="$INSTALL_DIR/git"
  local GIT_TAR="$PACKAGE_DIR/git.tar.gz"

  # Skip if Git is already installed. "return" instead of "exit 0", so the
  # environment variables and the verification below still run.
  if [ -x "$GIT_INSTALL_DIR/bin/git" ]; then
    echo "✔ Git already installed, skipping"
    return
  fi

  # Check the Git source package
  if [ ! -f "$GIT_TAR" ]; then
    echo "❌ Git source package not found: $GIT_TAR"
    exit 1
  fi

  echo "📌 Installing build dependencies (needed for HTTPS support)..."
  yum install -y \
    gcc gcc-c++ make \
    curl curl-devel expat-devel \
    openssl openssl-devel zlib-devel \
    perl-ExtUtils-MakeMaker \
    gettext-devel autoconf

  # -------- 🚨 Check free disk space so the build does not die halfway ----------
  local REQUIRED_MB=1536
  local AVAILABLE_MB
  AVAILABLE_MB=$(df -m / | awk 'NR==2{print $4}')
  if [ "$AVAILABLE_MB" -lt "$REQUIRED_MB" ]; then
    echo "❌ Not enough disk space! At least ${REQUIRED_MB}MB is required, only ${AVAILABLE_MB}MB available"
    exit 1
  fi
  # ----------------------------------------------------

  echo "📦 Extracting the Git source..."
  tar -xzf "$GIT_TAR" -C "$INSTALL_DIR"
  # Match a versioned source directory such as git-2.x.y, not the install directory "git"
  local GIT_SRC_DIR
  GIT_SRC_DIR=$(ls "$INSTALL_DIR" | grep -E "^git-[0-9]" | head -n 1)

  if [ -z "$GIT_SRC_DIR" ]; then
    echo "❌ Failed to extract the Git source"
    exit 1
  fi

  echo "➡ Entering source directory: $INSTALL_DIR/$GIT_SRC_DIR"
  cd "$INSTALL_DIR/$GIT_SRC_DIR"

  echo "🧱 Generating configure..."
  make configure

  echo "⚙️ Configuring build options..."
  ./configure \
    --prefix="$GIT_INSTALL_DIR" \
    --with-openssl \
    --with-curl \
    CURL_LIBS="-lcurl" \
    OPENSSL_LIBS="-lssl -lcrypto" \
    --without-tcltk \
    --without-python

  echo "🧩 Compiling..."
  make NO_GITWEB=YesPlease -j"$(nproc)"

  echo "📥 Installing Git..."
  make NO_GITWEB=YesPlease install
}

install_git

# ----------------- Write environment variables -----------------
echo "===================================="
echo " 📝 Writing environment variables"
echo "===================================="

cat > "$ENV_FILE" <<EOF
# hj_install_env
export JAVA_HOME=${INSTALL_DIR}/jdk17
export MAVEN_HOME=${INSTALL_DIR}/maven
export GIT_HOME=${INSTALL_DIR}/git
export PATH=\$JAVA_HOME/bin:\$MAVEN_HOME/bin:\$GIT_HOME/bin:\$PATH
EOF

chmod +x "$ENV_FILE"

# Load automatically when a shell starts
if [ -f ~/.bashrc ]; then
  append_env_once "source $ENV_FILE" ~/.bashrc
fi

source "$ENV_FILE"

# ----------------- Verify the installation -----------------
echo "===================================="
echo " 🔍 Verifying the installation"
echo "===================================="
echo

echo "➡ Java:"
java -version || echo "❌ Java is not active"
echo

echo "➡ Maven:"
mvn -v || echo "❌ Maven is not active"
echo

echo "➡ Git:"
if ! command -v git >/dev/null; then
  echo "❌ Git not found (the build may have failed or PATH is not in effect)"
else
  git --version
fi
echo

echo "===================================="
echo " 🎉 All done!"
echo "===================================="
echo "Environment variables written to: $ENV_FILE"

Packages: jdk17, jdk8, maven, git (the script expects jdk17.tar.gz, jdk8.tar.gz, maven.tar.gz and git.tar.gz in /home/install/packages)
Verify that Git can reach HTTPS remotes:
git ls-remote -h https://gitee.com/huajian2018/boot.git
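`append_env_once` in hj_install_env.sh uses `grep -F`, which matches substrings, so a commented-out `# source ...` line already counts as "present" and the real line is never added. The sketch below is a variant (not the script's own code) that adds `-x` so only whole-line matches count; the temporary file stands in for ~/.bashrc.

```shell
#!/bin/bash
set -e
# Variant of append_env_once: -x matches whole lines only, -q keeps grep silent,
# "--" protects lines that start with a dash.
append_env_once() {
  local line="$1" file="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || echo "$line" >> "$file"
}

rc=$(mktemp)    # stands in for ~/.bashrc
echo "# source /etc/profile.d/hj_env.sh" > "$rc"
append_env_once "source /etc/profile.d/hj_env.sh" "$rc"
append_env_once "source /etc/profile.d/hj_env.sh" "$rc"   # second call changes nothing
cat "$rc"
```

The file ends up with the original comment plus exactly one active `source` line, no matter how often the installer is re-run.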

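Download from https://nodejs.org/en/download: Linux x64, Standalone Binary (.xz)

install_nodejs.sh

#!/bin/bash
# Variables
NODE_PACKAGE_PATH="/home/install/packages/node-v24.11.1-linux-x64.tar.xz"   # Node.js package
INSTALL_DIR="/home/install/nodejs"                                           # Node.js install directory
NODE_BIN_DIR="$INSTALL_DIR/bin"                                              # Node.js executables

# Create the install directory
echo "🚀 Creating install directory: $INSTALL_DIR"
mkdir -p "$INSTALL_DIR"

# Extract the Node.js package
echo "📦 Extracting the Node.js package..."
tar -xJf "$NODE_PACKAGE_PATH" -C "$INSTALL_DIR" --strip-components=1

# Configure environment variables
echo "📈 Configuring environment variables..."
# Append to /etc/profile only if the entry is not there yet, so re-runs do not duplicate it
if ! grep -qF "$NODE_BIN_DIR" /etc/profile; then
  echo "export PATH=\$PATH:$NODE_BIN_DIR" | sudo tee -a /etc/profile
fi
# Reload the environment (affects this script only; open a new shell afterwards)
source /etc/profile

# Verify that Node.js and npm work
echo "✅ Verifying Node.js and npm..."
node -v
npm -v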
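Another way to keep the Node.js PATH setup idempotent is to give it its own file under /etc/profile.d and overwrite that file on every run, with a guard so sourcing it twice does not add the directory twice. A sketch under assumptions: the file name /etc/profile.d/nodejs.sh and the helper `write_path_profile` are hypothetical, and the demo writes to a temporary file instead.

```shell
#!/bin/bash
set -e
# write_path_profile (hypothetical helper): write a profile snippet that appends
# bin_dir to PATH only if it is not already there. Overwrites the file each run.
write_path_profile() {
  local bin_dir="$1" profile_file="$2"
  cat > "$profile_file" <<EOF
# added by install_nodejs.sh
case ":\$PATH:" in
  *":$bin_dir:"*) ;;
  *) export PATH="\$PATH:$bin_dir" ;;
esac
EOF
}

profile=$(mktemp)    # stands in for /etc/profile.d/nodejs.sh
write_path_profile /home/install/nodejs/bin "$profile"
write_path_profile /home/install/nodejs/bin "$profile"   # re-running rewrites the same content
. "$profile"; . "$profile"                               # sourcing twice adds the entry once
echo "$PATH" | tr ':' '\n' | grep -c '^/home/install/nodejs/bin$'
```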

Source package: http://nginx.org/download/nginx-1.26.0.tar.gz

Port 80 was already in use when starting the service; find and kill the processes holding it, then restart through systemd:
[root@VM-0-8-opencloudos nginx]# sudo fuser -n tcp 80
80/tcp:               167495 167496
[root@VM-0-8-opencloudos nginx]# kill -9 167495
[root@VM-0-8-opencloudos nginx]# kill -9 167496
[root@VM-0-8-opencloudos nginx]# sudo systemctl stop nginx
[root@VM-0-8-opencloudos nginx]# sudo systemctl start nginx
[root@VM-0-8-opencloudos nginx]#

install_nginx.sh

#!/bin/bash
# Package path, source extraction directory and target install directory
NGINX_PACKAGE_PATH="/home/install/packages/nginx-1.26.0.tar.gz"
SOURCE_DIR="/home/install/nginx-src"   # directory the source is extracted to
INSTALL_DIR="/usr/local/nginx"         # Nginx install directory
NGINX_BIN_DIR="$INSTALL_DIR/sbin"      # Nginx executables
NGINX_CONF_DIR="$INSTALL_DIR/conf"     # Nginx configuration
NGINX_LOG_DIR="$INSTALL_DIR/logs"      # Nginx logs
NGINX_HTML_DIR="$INSTALL_DIR/html"     # Nginx HTML files

# Update the system
echo "🚀 Updating the system..."
sudo yum -y update                     # CentOS/RHEL
# sudo apt-get update                  # Ubuntu/Debian

# Install dependencies (openssl-devel is required by --with-http_ssl_module)
echo "📦 Installing dependencies..."
sudo yum install -y gcc pcre-devel zlib-devel openssl-devel make   # CentOS/RHEL
# sudo apt-get install -y gcc make libpcre3 libpcre3-dev zlib1g zlib1g-dev libssl-dev   # Ubuntu/Debian

# Create the source and install directories
echo "🚀 Creating the source and install directories..."
mkdir -p "$SOURCE_DIR"
mkdir -p "$INSTALL_DIR"

# Extract the Nginx package into the source directory
echo "📦 Extracting Nginx into $SOURCE_DIR ..."
tar -zxvf "$NGINX_PACKAGE_PATH" -C "$SOURCE_DIR" --strip-components=1

# Build and install Nginx
echo "🔨 Building and installing Nginx..."
cd "$SOURCE_DIR"
sudo ./configure --prefix="$INSTALL_DIR" --with-http_ssl_module
sudo make
sudo make install

# Configure environment variables (only once)
echo "📈 Configuring environment variables..."
grep -qF "$NGINX_BIN_DIR" ~/.bashrc 2>/dev/null || echo "export PATH=\$PATH:$NGINX_BIN_DIR" >> ~/.bashrc
source ~/.bashrc

# Check that Nginx was installed
echo "✅ Checking the Nginx version..."
"$NGINX_BIN_DIR/nginx" -v

# Make sure the configuration exists and is valid
if [ ! -f "$NGINX_CONF_DIR/nginx.conf" ]; then
  echo "❌ nginx.conf not found, check whether the build succeeded!"
  exit 1
fi
sudo "$NGINX_BIN_DIR/nginx" -t

# Do not start nginx by hand here: a manually started instance keeps port 80 busy,
# and "systemctl start nginx" below would then fail with "Address already in use".

# Create the symlink
if [ -e /usr/bin/nginx ] || [ -L /usr/bin/nginx ]; then
  echo "⚠️ /usr/bin/nginx already exists, replacing it with a new symlink..."
  sudo rm -f /usr/bin/nginx
fi
sudo ln -s "$NGINX_BIN_DIR/nginx" /usr/bin/nginx

# Configure start on boot with a systemd unit
echo "🛠 Configuring Nginx to start on boot..."
cat <<EOF | sudo tee /etc/systemd/system/nginx.service
[Unit]
Description=The Nginx HTTP and Reverse Proxy Server
After=network.target

[Service]
Type=forking
ExecStart=$NGINX_BIN_DIR/nginx
ExecReload=$NGINX_BIN_DIR/nginx -s reload
ExecStop=$NGINX_BIN_DIR/nginx -s quit
PIDFile=$NGINX_LOG_DIR/nginx.pid
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

# Start and enable the systemd service
sudo systemctl daemon-reload
sudo systemctl start nginx
sudo systemctl enable nginx

# Print installation info
echo "✅ Nginx installed; the service is running and enabled at boot!"
echo "Nginx install directory: $INSTALL_DIR"
echo "Nginx executables: $NGINX_BIN_DIR"
echo "Nginx configuration: $NGINX_CONF_DIR"
echo "Nginx logs: $NGINX_LOG_DIR"
echo "Nginx HTML files: $NGINX_HTML_DIR"
echo "Nginx source: $SOURCE_DIR"
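When port 80 is held by another process (as in the fuser/kill transcript above), `systemctl start nginx` fails. A pre-check before starting can catch this early. This is a sketch: `port_in_use` is a hypothetical helper built on bash's `/dev/tcp` pseudo-device and coreutils `timeout`, so it only detects listeners reachable on 127.0.0.1.

```shell
#!/bin/bash
# port_in_use (hypothetical helper): succeed if a TCP connection to 127.0.0.1:<port>
# can be opened within 2 seconds, i.e. something is already listening there.
port_in_use() {
  local port="$1"
  timeout 2 bash -c "exec 3<>/dev/tcp/127.0.0.1/$port" 2>/dev/null
}

PORT=${1:-80}
if port_in_use "$PORT"; then
  echo "port $PORT is busy; inspect with: fuser -n tcp $PORT  (or: ss -ltnp | grep :$PORT)"
else
  echo "port $PORT is free; safe to run: systemctl start nginx"
fi
```

Checking first and inspecting the owner is gentler than `kill -9`: if the holder turns out to be an nginx started by hand, `nginx -s quit` stops it cleanly.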

#!/bin/bash
set -e

REDIS_PORT=6380
REDIS_PASS="Jens20250826001hjIsOk"
REDIS_CONF_DIR="/etc/redis"
REDIS_CONF_FILE="${REDIS_CONF_DIR}/redis.conf"
REDIS_DATA_DIR="/var/lib/redis"
REDIS_LOG_FILE="/var/log/redis.log"

echo "======================================"
echo " Redis clean reinstall script (OC9)"
echo "======================================"

# 1️⃣ Stop Redis and kill leftover processes
echo "Stopping Redis and killing leftover processes..."
systemctl stop redis || true
pkill redis-server || true

# 2️⃣ Reset systemd state
systemctl daemon-reload
systemctl reset-failed redis || true

# 3️⃣ Remove the old Redis
if rpm -q redis >/dev/null 2>&1; then
  echo "Removing the old Redis..."
  yum remove -y redis
fi

# 4️⃣ Install Redis
echo "Installing Redis..."
yum install -y redis

# 5️⃣ Back up the stock configuration
if [ -f "$REDIS_CONF_FILE" ]; then
  cp "$REDIS_CONF_FILE" "${REDIS_CONF_FILE}.bak.$(date +%F_%T)"
fi

# 6️⃣ Create the data directory and log file and set ownership
mkdir -p "$REDIS_DATA_DIR"
chown redis:redis "$REDIS_DATA_DIR"
chmod 755 "$REDIS_DATA_DIR"

touch "$REDIS_LOG_FILE"
chown redis:redis "$REDIS_LOG_FILE"
chmod 644 "$REDIS_LOG_FILE"

# 7️⃣ Write a clean configuration file
echo "Writing a clean configuration..."
cat > "$REDIS_CONF_FILE" <<EOF
bind 0.0.0.0
port ${REDIS_PORT}
requirepass ${REDIS_PASS}
daemonize no
supervised systemd
pidfile /var/run/redis_${REDIS_PORT}.pid
logfile ${REDIS_LOG_FILE}
dir ${REDIS_DATA_DIR}
dbfilename dump.rdb
appendonly yes
appendfilename "appendonly.aof"
save 900 1
save 300 10
save 60 10000
maxmemory 1gb
maxmemory-policy allkeys-lru
tcp-backlog 511
timeout 0
tcp-keepalive 300
EOF

# 8️⃣ Start Redis and enable it at boot
systemctl daemon-reload
systemctl enable redis
systemctl restart redis
sleep 2

# 9️⃣ Check that the port is listening
if ss -tunlp | grep -q ":${REDIS_PORT}"; then
  echo "✔ Redis is running on port ${REDIS_PORT}"
else
  echo "❌ Redis failed to start, check the log: ${REDIS_LOG_FILE} or journalctl -u redis"
  exit 1
fi

# 🔟 Open the port in the firewall
if command -v firewall-cmd &>/dev/null && systemctl is-active firewalld &>/dev/null; then
  firewall-cmd --permanent --add-port=${REDIS_PORT}/tcp
  firewall-cmd --reload
  echo "✔ Firewall port ${REDIS_PORT} opened"
else
  echo "⚠ firewalld not found or not running, skipping"
fi

echo "======================================"
echo "✅ Redis clean reinstall complete!"
echo "- Port: ${REDIS_PORT}"
echo "- Password: ${REDIS_PASS}"
echo "- Config file: ${REDIS_CONF_FILE}"
echo "- Data directory: ${REDIS_DATA_DIR}"
echo "- Log file: ${REDIS_LOG_FILE}"
echo "Local test: redis-cli -p ${REDIS_PORT} -a ${REDIS_PASS} ping"
echo "======================================"
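To check the running instance without retyping values, the port and password can be read back from the generated redis.conf. The sketch below does that with a hypothetical `conf_get` helper (it takes the last occurrence of a directive, as Redis does, and assumes values without spaces) and passes the password through the `REDISCLI_AUTH` environment variable, which current redis-cli versions read and which keeps the password out of the process list, unlike `-a`. The demo uses a temporary file and a placeholder password.

```shell
#!/bin/bash
set -e
# conf_get (hypothetical helper): print the value of the last "<key> <value>" line in a file.
conf_get() {
  local file="$1" key="$2"
  awk -v k="$key" '$1 == k { v = $2 } END { print v }' "$file"
}

conf=$(mktemp)   # stands in for /etc/redis/redis.conf
printf '%s\n' 'bind 0.0.0.0' 'port 6380' 'requirepass Secret123' 'maxmemory 1gb' > "$conf"

port=$(conf_get "$conf" port)
pass=$(conf_get "$conf" requirepass)
echo "would run: REDISCLI_AUTH=*** redis-cli -p $port ping"
if command -v redis-cli >/dev/null; then
  REDISCLI_AUTH="$pass" redis-cli -p "$port" ping || true
fi
```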

#!/bin/bash
set -e

INSTALL_DIR="/usr/local/node_exporter"
USER="node_exporter"
PORT=9100
# A version can be passed as $1; otherwise the latest release is detected
NODE_EXPORTER_VERSION=${1:-}

echo "======================================"
echo " Node Exporter install/update script"
echo "======================================"

# 1️⃣ Detect the latest version (if none was given)
if [ -z "$NODE_EXPORTER_VERSION" ]; then
  echo "Checking the latest version on GitHub..."
  NODE_EXPORTER_VERSION=$(curl -s https://api.github.com/repos/prometheus/node_exporter/releases/latest | grep '"tag_name":' | sed -E 's/.*"v([^"]+)".*/\1/')
  echo "Latest version: $NODE_EXPORTER_VERSION"
fi
if [ -z "$NODE_EXPORTER_VERSION" ]; then
  echo "❌ Could not detect the version (GitHub API unreachable?); pass it as the first argument"
  exit 1
fi

# 2️⃣ Create the service user (if missing)
id -u $USER &>/dev/null || useradd -rs /bin/false $USER

# 3️⃣ Download Node Exporter (if not already downloaded)
DOWNLOAD_FILE="/tmp/node_exporter-${NODE_EXPORTER_VERSION}.linux-amd64.tar.gz"
if [ ! -f "$DOWNLOAD_FILE" ]; then
  echo "Downloading Node Exporter ${NODE_EXPORTER_VERSION}..."
  # -f: fail on HTTP errors instead of saving an error page as the tarball
  curl -fLo "$DOWNLOAD_FILE" https://github.com/prometheus/node_exporter/releases/download/v${NODE_EXPORTER_VERSION}/node_exporter-${NODE_EXPORTER_VERSION}.linux-amd64.tar.gz \
    || { rm -f "$DOWNLOAD_FILE"; echo "❌ Download failed"; exit 1; }
else
  echo "Package already downloaded, skipping: $DOWNLOAD_FILE"
fi

# 4️⃣ Extract and install (overwrite an existing installation)
if [ -d "$INSTALL_DIR" ]; then
  echo "Install directory exists, overwriting..."
  rm -rf "$INSTALL_DIR"
fi
tar xzf "$DOWNLOAD_FILE" -C /tmp
mv /tmp/node_exporter-${NODE_EXPORTER_VERSION}.linux-amd64 "$INSTALL_DIR"
chown -R $USER:$USER "$INSTALL_DIR"

# 5️⃣ Create the systemd service (replacing any old one)
echo "Creating/updating the systemd service..."
cat >/etc/systemd/system/node_exporter.service <<EOF
[Unit]
Description=Node Exporter
Wants=network-online.target
After=network-online.target

[Service]
User=${USER}
Group=${USER}
Type=simple
ExecStart=${INSTALL_DIR}/node_exporter

[Install]
WantedBy=multi-user.target
EOF

# 6️⃣ Start and enable at boot
systemctl daemon-reload
systemctl enable node_exporter
systemctl restart node_exporter || true
sleep 2

# 7️⃣ Open the port in the firewall
if command -v firewall-cmd &>/dev/null && systemctl is-active firewalld &>/dev/null; then
  firewall-cmd --permanent --add-port=${PORT}/tcp || true
  firewall-cmd --reload || true
  echo "✔ Firewall port ${PORT} opened"
else
  echo "⚠ firewalld not found or not running, skipping"
fi

# 8️⃣ Check that the port is listening
if ss -tunlp | grep -q ":${PORT}"; then
  echo "✔ Node Exporter is running on port ${PORT}"
else
  echo "❌ Node Exporter failed to start, check: systemctl status node_exporter"
fi

echo "======================================"
echo "✅ Node Exporter install/update complete!"
echo "URL: http://<server-IP>:${PORT}/metrics"
echo "Service management: systemctl {start|stop|status} node_exporter"
echo "======================================"

Uninstall script

#!/bin/bash
set -e

INSTALL_DIR="/usr/local/node_exporter"
SERVICE_FILE="/etc/systemd/system/node_exporter.service"
DOWNLOAD_DIR="/tmp"
DOWNLOAD_PREFIX="node_exporter-"
USER="node_exporter"

echo "======================================"
echo " Node Exporter stop and uninstall script"
echo "======================================"

# 1️⃣ Stop the service
if systemctl is-active node_exporter &>/dev/null; then
  echo "Stopping the Node Exporter service..."
  systemctl stop node_exporter
else
  echo "Node Exporter is not running, skipping stop"
fi

# 2️⃣ Disable start at boot
if systemctl is-enabled node_exporter &>/dev/null; then
  echo "Disabling start at boot..."
  systemctl disable node_exporter
else
  echo "Node Exporter is not enabled at boot, skipping"
fi

# 3️⃣ Delete the systemd unit file
if [ -f "$SERVICE_FILE" ]; then
  echo "Deleting the systemd unit file..."
  rm -f "$SERVICE_FILE"
  systemctl daemon-reload
  systemctl reset-failed node_exporter || true
else
  echo "systemd unit file not found, skipping"
fi

# 4️⃣ Delete the install directory
if [ -d "$INSTALL_DIR" ]; then
  echo "Deleting install directory $INSTALL_DIR..."
  rm -rf "$INSTALL_DIR"
else
  echo "Install directory not found, skipping"
fi

# 5️⃣ Delete downloaded packages (optional)
DOWNLOAD_FILE=$(ls ${DOWNLOAD_DIR}/${DOWNLOAD_PREFIX}* 2>/dev/null || true)
if [ -n "$DOWNLOAD_FILE" ]; then
  echo "Deleting downloaded package(s) $DOWNLOAD_FILE..."
  rm -f ${DOWNLOAD_DIR}/${DOWNLOAD_PREFIX}*
else
  echo "No downloaded package found, skipping"
fi

# 6️⃣ Delete the system user (optional)
if id -u $USER &>/dev/null; then
  echo "Deleting Node Exporter user $USER..."
  userdel -r $USER || true
else
  echo "User $USER does not exist, skipping"
fi

# 7️⃣ Close the firewall port
if command -v firewall-cmd &>/dev/null && systemctl is-active firewalld &>/dev/null; then
  echo "Removing firewall port 9100..."
  firewall-cmd --permanent --remove-port=9100/tcp || true
  firewall-cmd --reload || true
else
  echo "firewalld not found or not running, skipping firewall cleanup"
fi

echo "======================================"
echo "✅ Node Exporter stopped and fully uninstalled"
echo "======================================"
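The version detection in the install script can be exercised offline by feeding it a sample of the GitHub "latest release" JSON. The sketch below wraps the same grep/sed pipeline in a hypothetical `parse_tag` function and adds the empty-version guard; the JSON sample and the version number in it are made-up example input, not real API output.

```shell
#!/bin/bash
# parse_tag (hypothetical helper): read release JSON on stdin, print tag_name without the leading "v".
# Same grep/sed pipeline as the install script.
parse_tag() {
  grep '"tag_name":' | sed -E 's/.*"v([^"]+)".*/\1/'
}

# require_version (hypothetical helper): fail if detection produced nothing,
# e.g. because api.github.com was unreachable.
require_version() {
  if [ -z "$1" ]; then
    echo "could not detect the version; pass it explicitly as the first argument" >&2
    return 1
  fi
}

# Example input shaped like the pretty-printed API response
sample=$(printf '%s\n' '{' '  "tag_name": "v1.8.2",' '  "name": "example"' '}')
version=$(printf '%s\n' "$sample" | parse_tag)
require_version "$version" && echo "detected version: $version"
```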

#!/bin/bash
set -e

PROMTAIL_DIR="/usr/local/promtail"
CONFIG_DIR="/etc/promtail"
SERVICE_FILE="/etc/systemd/system/promtail.service"
PROMTAIL_VERSION=${1:-}

echo "======================================"
echo " Promtail install/deploy script"
echo "======================================"

# 1️⃣ Detect the latest version (if none was given)
if [ -z "$PROMTAIL_VERSION" ]; then
  echo "Checking the latest version on GitHub..."
  PROMTAIL_VERSION=$(curl -s https://api.github.com/repos/grafana/loki/releases/latest \
    | grep '"tag_name":' | sed -E 's/.*"v([^"]+)".*/\1/')
  echo "Latest version: $PROMTAIL_VERSION"
fi
if [ -z "$PROMTAIL_VERSION" ]; then
  echo "❌ Could not detect the version (GitHub API unreachable?); pass it as the first argument"
  exit 1
fi

# 2️⃣ Create directories
mkdir -p "$PROMTAIL_DIR" "$CONFIG_DIR"
echo "✔ Install directory: $PROMTAIL_DIR"
echo "✔ Config directory: $CONFIG_DIR"

# 3️⃣ Download Promtail (if not already downloaded); the release asset is a .zip, not a .tar.gz
ZIP_FILE="/tmp/promtail-${PROMTAIL_VERSION}-linux-amd64.zip"
if [ ! -f "$ZIP_FILE" ]; then
  echo "Downloading Promtail ${PROMTAIL_VERSION}..."
  curl -fLo "$ZIP_FILE" https://github.com/grafana/loki/releases/download/v${PROMTAIL_VERSION}/promtail-linux-amd64.zip
else
  echo "Package already downloaded, skipping: $ZIP_FILE"
fi

# 4️⃣ Extract and install (requires unzip)
unzip -o "$ZIP_FILE" -d /tmp
mv /tmp/promtail-linux-amd64 "$PROMTAIL_DIR/promtail"
chmod +x "$PROMTAIL_DIR/promtail"

# 5️⃣ Create the systemd service
cat > "$SERVICE_FILE" <<EOF
[Unit]
Description=Promtail Service
After=network.target

[Service]
Type=simple
ExecStart=$PROMTAIL_DIR/promtail -config.file=$CONFIG_DIR/promtail.yml
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

# 6️⃣ Create a default configuration (if none exists)
if [ ! -f "$CONFIG_DIR/promtail.yml" ]; then
cat > "$CONFIG_DIR/promtail.yml" <<EOC
server:
  http_listen_port: 9080
  grpc_listen_port: 0

positions:
  filename: /tmp/positions.yaml

clients:
  - url: http://175.27.133.137:3100/loki/api/v1/push

scrape_configs:
  - job_name: system
    static_configs:
      - targets:
          - localhost
        labels:
          job: varlogs
          __path__: /var/log/*.log

  - job_name: hjboot
    static_configs:
      - targets:
          - localhost
        labels:
          job: hjboot-job
          service: hjboot-ser
          __path__: /app/hjserver/logs/hjBoot/*.log
    pipeline_stages:
      - regex:
          # Capture TRACE/DEBUG/INFO/WARN/ERROR/FATAL into the extracted field "severity"
          expression: "(?P<severity>TRACE|DEBUG|INFO|WARN|ERROR|FATAL)"
      - labels:
          # Promote "severity" to a Loki label; without this stage the capture is not indexed
          severity:
EOC
fi

# 7️⃣ Start via systemd
systemctl daemon-reload
systemctl enable promtail
systemctl restart promtail || true
sleep 2

# 8️⃣ Check the status
systemctl status promtail --no-pager

echo "======================================"
echo "✅ Promtail installation complete"
echo "Install directory: $PROMTAIL_DIR"
echo "Config file: $CONFIG_DIR/promtail.yml"
echo "Service management: systemctl {start|stop|status} promtail"
echo "======================================"

/etc/promtail/promtail.yml has the same content as the default configuration generated in step 6️⃣ of the script above.
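The severity regex can be tried against sample log lines before deploying Promtail. Promtail evaluates it with Go's RE2 engine and uses the leftmost match on each line; `grep -oE` with the same alternation (minus the named group) behaves the same way for these plain words, so the sketch below approximates the stage. `first_severity` is a hypothetical helper and the sample lines are invented. It also shows the caveat of an unanchored pattern: a line with no level of its own (such as a "Caused by" line) can pick up a level word from its message.

```shell
#!/bin/bash
# first_severity (hypothetical helper): print the leftmost level word on one input line, if any.
first_severity() {
  grep -oE 'TRACE|DEBUG|INFO|WARN|ERROR|FATAL' | head -n 1
}

for line in \
  '2025-12-15 10:00:00 INFO  c.h.web.OrderController - order created' \
  '2025-12-15 10:00:01 WARN  c.h.job.SyncJob - retrying, last ERROR was a timeout' \
  'Caused by: java.lang.IllegalStateException: ERROR code 42' \
  '    at com.hj.Foo.bar(Foo.java:42)'; do
  sev=$(printf '%s\n' "$line" | first_severity)
  echo "${sev:-<none>} | $line"
done
```

The first two lines are tagged by their real level; the "Caused by" line is tagged ERROR from its message text, and the stack frame gets no severity at all.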

#!/bin/bash

echo "=== 1. Write the Grafana Yum repo (Tsinghua mirror in China) ==="
cat >/etc/yum.repos.d/grafana.repo <<EOF
[grafana]
name=Grafana
baseurl=https://mirrors.tuna.tsinghua.edu.cn/grafana/yum/rpm
repo_gpgcheck=0
enabled=1
gpgcheck=0
EOF

echo "=== 2. Clear and rebuild the Yum cache ==="
yum clean all
yum makecache -y

echo "=== 3. Install Grafana ==="
yum install grafana -y
if [ $? -ne 0 ]; then
  echo "!!! Installation failed, check the network or the repo configuration !!!"
  exit 1
fi

echo "=== 4. Enable Grafana at boot and start the service ==="
systemctl enable grafana-server
systemctl restart grafana-server

echo "=== 5. Installation complete ==="
echo "Default URL: http://<server-IP>:3000"
echo "Default user: admin"
echo "Default password: admin (you must change it at first login)"

echo "=== Service status ==="
systemctl status grafana-server --no-pager

echo "=== 📁 Grafana paths ==="
echo "Program directory: /usr/share/grafana"
echo "Config file:       /etc/grafana/grafana.ini"
echo "Data directory:    /var/lib/grafana/"
echo "Log directory:     /var/log/grafana/"
echo "System service:    grafana-server.service"
echo ""

echo "=== 🔧 Common commands ==="
echo "Status:  systemctl status grafana-server"
echo "Start:   systemctl start grafana-server"
echo "Restart: systemctl restart grafana-server"
echo "Stop:    systemctl stop grafana-server"
echo ""
echo "✨ Enjoy Monitoring!"

Data sources:
loki        http://175.27.133.137:3100
prometheus  http://175.27.133.137:7090

Dashboard templates (import by ID): configure the Prometheus data source in Grafana, then import a template from the Dashboards page and adjust it as needed. Template links:
1 Spring Boot 3.x Statistics https://grafana.com/grafana/dashboards/19004-spring-boot-statistics/
2 Node Exporter Full https://grafana.com/grafana/dashboards/1860-node-exporter-full/
3 SpringBoot APM Dashboard (Chinese version) https://grafana.com/grafana/dashboards/21319-springboot-apm-dashboard/
4 https://grafana.com/grafana/dashboards/13359-logs/ (in this template's JSON Model, change "Container" to "job", because the project's labels are job and service)
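Instead of adding the Loki and Prometheus data sources by hand, Grafana can load them from a provisioning file at startup; for the RPM package the directory is /etc/grafana/provisioning/datasources. The sketch below writes such a file. The file name hj-datasources.yaml is an assumption, the URLs are the ones listed above, and by default it writes to a temporary directory as a dry run.

```shell
#!/bin/bash
set -e
# Usage on the server (assumed file layout):
#   ./provision_datasources.sh /etc/grafana/provisioning/datasources && systemctl restart grafana-server
TARGET_DIR=${1:-$(mktemp -d)}
LOKI_URL=${LOKI_URL:-http://175.27.133.137:3100}
PROM_URL=${PROM_URL:-http://175.27.133.137:7090}

mkdir -p "$TARGET_DIR"
cat > "$TARGET_DIR/hj-datasources.yaml" <<EOF
apiVersion: 1
datasources:
  - name: Loki
    type: loki
    access: proxy
    url: ${LOKI_URL}
  - name: Prometheus
    type: prometheus
    access: proxy
    url: ${PROM_URL}
    isDefault: true
EOF
echo "written: $TARGET_DIR/hj-datasources.yaml"
```

Provisioned data sources survive a reinstall of Grafana's database and are easy to keep in Git next to the install scripts.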

#!/bin/bash

PROM_VERSION="3.7.3"

echo "=== ⏳ Create the install directory ==="
mkdir -p /app/prometheus
cd /app/prometheus

echo "=== 🚀 Download Prometheus ${PROM_VERSION} (Tsinghua mirror) ==="
if [ ! -f prometheus-${PROM_VERSION}.linux-amd64.tar.gz ]; then
  wget https://mirrors.tuna.tsinghua.edu.cn/github-release/prometheus/prometheus/LatestRelease/prometheus-${PROM_VERSION}.linux-amd64.tar.gz
  if [ $? -ne 0 ]; then
    # Remove a partial download so the next run does not skip the download
    rm -f prometheus-${PROM_VERSION}.linux-amd64.tar.gz
    echo "❌ Download failed, check the network!"
    exit 1
  fi
else
  echo "✔ Package already present, no need to download again."
fi

echo "=== 📦 Extract and install ==="
tar -xzf prometheus-${PROM_VERSION}.linux-amd64.tar.gz
# Keep an existing (customized) prometheus.yml; the tarball ships a default one that would overwrite it
if [ -f /app/prometheus/prometheus.yml ]; then
  cp /app/prometheus/prometheus.yml /app/prometheus/prometheus.yml.keep
fi
cp -r prometheus-${PROM_VERSION}.linux-amd64/* /app/prometheus/
if [ -f /app/prometheus/prometheus.yml.keep ]; then
  mv /app/prometheus/prometheus.yml.keep /app/prometheus/prometheus.yml
fi
rm -rf prometheus-${PROM_VERSION}.linux-amd64

echo "=== ⚙️ Create the systemd service ==="
cat >/usr/lib/systemd/system/prometheus.service <<EOF
[Unit]
Description=Prometheus Server
After=network.target

[Service]
ExecStart=/app/prometheus/prometheus \
  --config.file=/app/prometheus/prometheus.yml \
  --storage.tsdb.path=/app/prometheus/data
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

echo "=== 🔄 Reload systemd ==="
systemctl daemon-reload

echo "=== 🚀 Start Prometheus and enable it at boot ==="
systemctl enable prometheus
systemctl restart prometheus

echo ""
echo "======================================="
echo "🎉 Prometheus installation complete"
echo "======================================="
echo "📍 Install directory:"
echo "   /app/prometheus/"
echo ""
echo "📄 Config file:"
echo "   /app/prometheus/prometheus.yml"
echo ""
echo "💾 Data directory:"
echo "   /app/prometheus/data"
echo ""
echo "🔧 systemd unit file:"
echo "   /usr/lib/systemd/system/prometheus.service"
echo ""
echo "📢 Web UI:"
echo "   http://<server-IP>:9090"
echo ""
echo "🧪 Health check:"
echo "   curl http://localhost:9090/-/healthy"
echo ""
echo "🕹 Common commands:"
echo "   systemctl start prometheus"
echo "   systemctl stop prometheus"
echo "   systemctl restart prometheus"
echo "   systemctl status prometheus"
echo ""
echo "📜 Logs:"
echo "   journalctl -u prometheus -f"
echo ""

systemctl status prometheus --no-pager

/app/prometheus/prometheus.yml

# my global config
global:
  scrape_interval: 15s
  evaluation_interval: 15s

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets: []

rule_files: []

scrape_configs:
  # Prometheus itself
  - job_name: "prometheus"
    static_configs:
      - targets: ["localhost:9090"]
        labels:
          app: "prometheus-label"

  # Spring Boot application (hj-server)
  - job_name: 'hj-server'
    metrics_path: '/actuator/prometheus'
    static_configs:
      - targets: ['127.0.0.1:7780']
        labels:
          app: "hjServer-label"

  # XXL-JOB
  - job_name: 'xxl-job'
    metrics_path: '/xxl-job-admin/actuator/prometheus'
    static_configs:
      - targets: ['127.0.0.1:8989']
        labels:
          app: "xxlJob-label"

  # Node Exporter (server metrics)
  - job_name: 'server-metrics'
    static_configs:
      - targets: ['127.0.0.1:9100']
        labels:
          app: "serverMetrics-label"
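After editing prometheus.yml it is safer to validate the file before restarting, then wait until the server reports ready. The sketch below does both: `promtool check config` comes from the same tarball as the prometheus binary, and `/-/ready` is Prometheus's readiness endpoint. `wait_until` and `reload_prometheus` are hypothetical helpers, and the paths follow the install script above.

```shell
#!/bin/bash
# wait_until (hypothetical helper): run a command up to N times, one second apart;
# succeed as soon as it succeeds.
wait_until() {
  local tries="$1" i=1
  shift
  while [ "$i" -le "$tries" ]; do
    if "$@"; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  return 1
}

# reload_prometheus (hypothetical helper): refuse to restart on an invalid config,
# then poll the readiness endpoint for up to 30 seconds.
reload_prometheus() {
  /app/prometheus/promtool check config /app/prometheus/prometheus.yml || return 1
  systemctl restart prometheus
  wait_until 30 curl -fsS http://localhost:9090/-/ready >/dev/null
}

# On the server: reload_prometheus && echo "Prometheus is ready"
```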

1 Download https://github.com/grafana/loki/releases/download/v3.6.1/loki-linux-amd64.zip and upload it to /home/install/loki
2 cd into that directory and extract it: unzip loki-linux-amd64.zip
3 Make the binary executable: chmod +x loki-linux-amd64
4 Run it with ./loki-linux-amd64 -config.file=loki-config.yaml and verify with curl http://localhost:3100/metrics (stop it with Ctrl+C before setting up the service)
5 Register it as a system service: sudo vi /etc/systemd/system/loki.service

[Unit]
Description=Loki
After=network.target

[Service]
User=root
ExecStart=/home/install/loki/loki-linux-amd64 -config.file=/home/install/loki/loki-config.yaml
Restart=on-failure
LimitNOFILE=4096

[Install]
WantedBy=multi-user.target

6 systemctl daemon-reload
7 systemctl start loki.service
8 systemctl enable loki.service

loki-config.yaml

auth_enabled: false

server:
  http_listen_port: 3100
  grpc_listen_port: 9096
  log_level: info
  grpc_server_max_concurrent_streams: 1000

common:
  instance_addr: 0.0.0.0
  path_prefix: /home/install/loki
  storage:
    filesystem:
      chunks_directory: /home/install/loki/chunks
      rules_directory: /home/install/loki/rules
  replication_factor: 1
  ring:
    kvstore:
      store: inmemory

query_range:
  results_cache:
    cache:
      embedded_cache:
        enabled: true
        max_size_mb: 100

limits_config:
  metric_aggregation_enabled: true
  enable_multi_variant_queries: true

schema_config:
  configs:
    - from: 2020-10-24
      store: tsdb
      object_store: filesystem
      schema: v13
      index:
        prefix: index_
        period: 24h

pattern_ingester:
  enabled: true
  metric_aggregation:
    loki_address: localhost:3100

ruler:
  alertmanager_url: http://localhost:9093

frontend:
  encoding: protobuf

# By default, Loki will send anonymous, but uniquely-identifiable usage and configuration
# analytics to Grafana Labs. These statistics are sent to https://stats.grafana.org/
#
# Statistics help us better understand how Loki is used, and they show us performance
# levels for most users. This helps us prioritize features and documentation.
# For more information on what's sent, look at
# https://github.com/grafana/loki/blob/main/pkg/analytics/stats.go
# Refer to the buildReport method to see what goes into a report.
#
# If you would like to disable reporting, uncomment the following lines:
#analytics:
#  reporting_enabled: false

install_loki.sh

#!/bin/bash
# Loki paths
LOKI_BIN="/home/install/loki/loki-linux-amd64"
LOKI_CONFIG="/home/install/loki/loki-config.yaml"
SYSTEMD_SERVICE="/etc/systemd/system/loki.service"

# If Loki is already running, stop it first
if systemctl is-active --quiet loki; then
  echo "Loki is running, stopping it first..."
  sudo systemctl stop loki.service
else
  echo "Loki is not running, proceeding with setup..."
fi

# Create the systemd unit file
echo "Creating systemd service..."
sudo tee $SYSTEMD_SERVICE > /dev/null <<EOF
[Unit]
Description=Loki
After=network.target

[Service]
User=root
ExecStart=$LOKI_BIN -config.file=$LOKI_CONFIG
Restart=on-failure
LimitNOFILE=4096

[Install]
WantedBy=multi-user.target
EOF

# Reload the systemd configuration
sudo systemctl daemon-reload

# Start the Loki service
echo "Starting Loki service..."
sudo systemctl start loki.service

# Enable start at boot
sudo systemctl enable loki.service

echo "Loki service setup complete."
echo "You can check the service status with: systemctl status loki.service"
echo "Verify Loki metrics: curl http://localhost:3100/metrics"
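Besides `/metrics`, a fresh Loki can be smoke-tested end to end by pushing one line and reading it back. The JSON body for `POST /loki/api/v1/push` is a list of streams, each with a label set and `[timestamp, line]` pairs, where the timestamp is Unix nanoseconds as a string. The sketch below only builds the payload (so it runs offline) and shows the curl calls as comments; `build_push_payload` is a hypothetical helper, and it does no JSON escaping, so the message must not contain double quotes or backslashes.

```shell
#!/bin/bash
set -e
# build_push_payload (hypothetical helper): emit a one-line push body for job=<job>.
build_push_payload() {
  local job="$1" msg="$2" ts
  ts="$(date +%s)000000000"   # seconds followed by 9 zeros = nanoseconds
  printf '{"streams":[{"stream":{"job":"%s"},"values":[["%s","%s"]]}]}' "$job" "$ts" "$msg"
}

LOKI=${LOKI:-http://localhost:3100}
payload=$(build_push_payload smoke-test "hello from install_loki.sh")
echo "$payload"
# On the server:
#   curl -fsS -H 'Content-Type: application/json' -X POST "$LOKI/loki/api/v1/push" --data-raw "$payload"
#   curl -fsS -G "$LOKI/loki/api/v1/query_range" --data-urlencode 'query={job="smoke-test"}'
```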

install_mysql_rpm.sh #!/bin/bash set -e MYSQL_PORT=3311 MYSQL_ROOT_PASS="${MYSQL_ROOT_PASS:-Jens20250826001hjIsOk}" MYSQL_DATA_DIR="/var/lib/mysql" MYSQL_REPO_URL="https://dev.mysql.com/get/mysql80-community-release-el9-1.noarch.rpm" echo "============== 0. 基础校验 ==============" if [ "$(id -u)" != "0" ]; then echo "❌ ERROR: 必须以 root 身份执行" exit 1 fi if [[ -z "$MYSQL_DATA_DIR" || "$MYSQL_DATA_DIR" == "/" ]]; then echo "❌ 危险的数据目录:${MYSQL_DATA_DIR},脚本退出" exit 2 fi echo "============== 1. 卸载老旧 MySQL / MariaDB ==============" systemctl stop mysqld >/dev/null 2>&1 || true yum remove -y mysql* mariadb* >/dev/null 2>&1 || true echo "============== 2. 清理旧数据(谨慎) ==============" # rm -rf ${MYSQL_DATA_DIR}/* rm -f /var/log/mysqld.log || true echo "============== 3. 导入 GPG Key ==============" rpm --import https://repo.mysql.com/RPM-GPG-KEY-mysql-2023 echo "============== 4. 安装 MySQL 官方 Repo ==============" yum install -y ${MYSQL_REPO_URL} echo "============== 5. 清理 Yum 缓存 ==============" yum clean all echo "============== 6. 安装 MySQL 服务端 ==============" yum install -y mysql-community-server echo "============== 7. 生成 MySQL 配置文件 /etc/my.cnf.d/my.cnf ==============" cat > /etc/my.cnf <<EOF [client] port=${MYSQL_PORT} default-character-set=utf8mb4 [mysql] default-character-set=utf8mb4 [mysqld] port=${MYSQL_PORT} basedir=/usr datadir=${MYSQL_DATA_DIR} socket=${MYSQL_DATA_DIR}/mysql.sock user=mysql symbolic-links=0 skip-name-resolve bind-address=0.0.0.0 character-set-server=utf8mb4 collation-server=utf8mb4_general_ci init_connect='SET NAMES utf8mb4' default-storage-engine=INNODB innodb_file_per_table=1 innodb_default_row_format=DYNAMIC lower_case_table_names=1 max_connections=2000 log-error=/var/log/mysqld.log pid-file=/var/run/mysqld/mysqld.pid EOF echo "============== 8. 初始化 MySQL 数据目录 ==============" mysqld --initialize-insecure --user=mysql --datadir=${MYSQL_DATA_DIR} echo "============== 9. 
启动 MySQL 服务并开机自启 ==============" systemctl enable mysqld systemctl start mysqld echo "============== 10. 等待 MySQL 启动 ==============" for i in {1..30}; do if mysqladmin ping &>/dev/null; then echo "✔ MySQL 已启动" break fi echo "等待 MySQL 启动中..." sleep 1 done if ! mysqladmin ping &>/dev/null; then echo "❌ ERROR: MySQL 启动失败" exit 3 fi # 设置 root 用户密码,并允许远程连接 echo "使用的 root 密码:${MYSQL_ROOT_PASS}" # 打印密码以确保它被正确读取 mysql -uroot <<EOF ALTER USER 'root'@'localhost' IDENTIFIED BY '${MYSQL_ROOT_PASS}'; CREATE USER IF NOT EXISTS 'root'@'%' IDENTIFIED BY '${MYSQL_ROOT_PASS}'; GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' WITH GRANT OPTION; FLUSH PRIVILEGES; EOF echo "============== 12. 开放防火墙端口(若启用) ==============" if systemctl is-active --quiet firewalld; then firewall-cmd --permanent --add-port=${MYSQL_PORT}/tcp >/dev/null 2>&1 firewall-cmd --reload >/dev/null 2>&1 else echo "⚠ firewalld 未运行,跳过开放端口" fi echo "============== 13. 重启 MySQL 应用配置 ==============" systemctl restart mysqld echo "============== MySQL 安装完成 🎉 ==============" echo "端口 : ${MYSQL_PORT}" echo "root 密码 : ${MYSQL_ROOT_PASS}" echo "数据目录 : ${MYSQL_DATA_DIR}" echo "字符集 : utf8mb4" echo "表名大小写 : lower_case_table_names=1" echo "===============================================" uninstall_mysql.sh #!/bin/bash set -e MYSQL_PORT=3311 MYSQL_DATA_DIR="/var/lib/mysql" MYSQL_LOG_FILE="/var/log/mysqld.log" echo "======================================" echo " MySQL 卸载脚本开始执行" echo "======================================" # ------------------------------- # 1. 停止 MySQL 服务 # ------------------------------- echo "【1】停止 MySQL 服务..." if systemctl list-unit-files | grep -q mysqld; then systemctl stop mysqld || true systemctl disable mysqld || true echo " → MySQL 服务已停止并禁用" else echo " → 未检测到 mysqld 服务" fi # ------------------------------- # 2. 卸载 MySQL 软件包 # ------------------------------- echo "【2】卸载 MySQL RPM 包..." 
# Anchor the match at the start of the name so unrelated packages such as php-mysqlnd are not removed
MYSQL_RPMS=$(rpm -qa | grep -E '^(mysql|mariadb)' || true)
if [[ -n "$MYSQL_RPMS" ]]; then
    echo " → Found the following MySQL/MariaDB packages:"
    echo "$MYSQL_RPMS"
    yum remove -y $MYSQL_RPMS || true   # unquoted on purpose: one argument per package
else
    echo " → Neither MySQL nor MariaDB is installed"
fi

# -------------------------------
# 3. Delete the MySQL data directory
# -------------------------------
echo "【3】Checking for and deleting the MySQL data directory..."
if [[ -d "$MYSQL_DATA_DIR" ]]; then
    rm -rf "$MYSQL_DATA_DIR"
    echo " → Data directory deleted: $MYSQL_DATA_DIR"
else
    echo " → Data directory does not exist, skipping"
fi

# -------------------------------
# 4. Delete the MySQL log file
# -------------------------------
echo "【4】Deleting the MySQL log file..."
if [[ -f "$MYSQL_LOG_FILE" ]]; then
    rm -f "$MYSQL_LOG_FILE"
    echo " → Log file deleted"
else
    echo " → Log file does not exist, skipping"
fi

# -------------------------------
# 5. Delete the MySQL config files
# -------------------------------
echo "【5】Deleting MySQL config files..."
[[ -f /etc/my.cnf ]] && rm -f /etc/my.cnf && echo " → Deleted /etc/my.cnf"
[[ -d /etc/my.cnf.d ]] && rm -rf /etc/my.cnf.d && echo " → Deleted /etc/my.cnf.d"

# -------------------------------
# 6. Clean up the MySQL yum repos
# -------------------------------
echo "【6】Cleaning up MySQL yum repos..."
for repo in mysql80-community-release mysql84-community-release mysql57-community-release mysql-community-release; do
    if rpm -qa | grep -q "$repo"; then
        yum remove -y "$repo" || true
        echo " → Removed yum repo: $repo"
    fi
done
# Remove any MySQL repo files still left in /etc/yum.repos.d
find /etc/yum.repos.d -name "*mysql*" -exec rm -f {} \; && echo " → MySQL repo files cleaned up"

# -------------------------------
# 7. Clean the yum cache
# -------------------------------
echo "【7】Cleaning the yum cache..."
yum clean all
echo " → Yum cache cleaned"

# -------------------------------
# 8. Remove the firewall port
# -------------------------------
echo "【8】Checking the firewall..."
if command -v firewall-cmd &>/dev/null && systemctl is-active firewalld &>/dev/null; then
    echo " → firewalld is running, removing port: $MYSQL_PORT"
    firewall-cmd --remove-port=${MYSQL_PORT}/tcp --permanent || true
    firewall-cmd --reload
    echo " → Firewall port removed"
else
    echo " → firewalld is not running or not installed, skipping"
fi

# -------------------------------
# 9. Delete the MySQL user
# -------------------------------
echo "【9】Deleting the mysql user and group..."
# No -r: the home directory (/var/lib/mysql) was already deleted in step 3, and userdel -r would complain about it
if getent passwd mysql &>/dev/null; then
    userdel mysql && echo " → mysql user deleted"
fi
if getent group mysql &>/dev/null; then
    groupdel mysql && echo " → mysql group deleted"
fi

echo "======================================"
echo " MySQL uninstall complete!"
echo "======================================"

backup_mysql.sh

#!/bin/bash
# pipefail: without it, "mysqldump | gzip" reports gzip's status and a failed dump goes unnoticed
set -eo pipefail

###########################################
# Configuration
###########################################
BACKUP_DIR="/home/install/mysql/backup"
DB_NAMES=("hjtemp" "hj_boot" "xxl_job")   # databases to back up
MYSQL_USER="root"
MYSQL_PASS="Jens20250826001hjIsOk"
DATE=$(date +'%Y%m%d_%H%M%S')
LOG_FILE="${BACKUP_DIR}/backup.log"

###########################################
# Create the directory first, otherwise logging fails
###########################################
mkdir -p "$BACKUP_DIR"
touch "$LOG_FILE"

###########################################
# Logging helper
###########################################
log() {
    echo "$(date +'%Y-%m-%d %H:%M:%S') $1" | tee -a "$LOG_FILE"
}

###########################################
# Main
###########################################
log "=== MySQL backup started ==="

# 1. Check that mysqldump is installed
if ! command -v mysqldump &>/dev/null; then
    log "[ERROR] mysqldump is not installed"
    exit 1
fi

# 2. Check that MySQL is running
if ! systemctl is-active mysqld &>/dev/null; then
    log "[ERROR] MySQL service is not running"
    exit 1
fi

# 3. Create the backup directory (if it does not exist)
mkdir -p "$BACKUP_DIR"

# 4. Check each database and back it up
for DB_NAME in "${DB_NAMES[@]}"; do
    # Exact-match lookup: with SHOW DATABASES LIKE, the "_" in hj_boot / xxl_job would act as a wildcard
    DB_EXISTS=$(mysql -u"$MYSQL_USER" -p"$MYSQL_PASS" -N -B \
        -e "SELECT SCHEMA_NAME FROM information_schema.SCHEMATA WHERE SCHEMA_NAME='${DB_NAME}'" 2>/dev/null || true)
    if [[ -z "$DB_EXISTS" ]]; then
        log "[ERROR] Database ${DB_NAME} does not exist, skipping"
        continue
    fi

    # 5. Run the backup (--single-transaction takes a consistent InnoDB snapshot without locking tables)
    BACKUP_FILE="${BACKUP_DIR}/${DB_NAME}_backup_${DATE}.sql.gz"
    log "Backing up database: ${DB_NAME}"
    if mysqldump -u"$MYSQL_USER" -p"$MYSQL_PASS" --single-transaction "$DB_NAME" 2>>"$LOG_FILE" | gzip > "$BACKUP_FILE"; then
        log "Database ${DB_NAME} backed up to: $BACKUP_FILE"
    else
        rm -f "$BACKUP_FILE"   # do not keep a truncated archive
        log "[ERROR] mysqldump failed for ${DB_NAME}"
        continue
    fi
done

# 6. Delete backups older than 7 days
log "Removing backups older than 7 days"
find "$BACKUP_DIR" -name "*_backup_*.sql.gz" -type f -mtime +7 -exec rm -f {} \;

log "=== MySQL backup finished ==="

Run the backup automatically every day at 03:00

1 Create the service file
sudo vi /etc/systemd/system/mysql-backup.service

[Unit]
Description=MySQL Database Backup

[Service]
Type=oneshot
ExecStart=/home/install/mysql/backup_mysql.sh

The script must be executable: chmod +x /home/install/mysql/backup_mysql.sh

2 Create the timer file
sudo vi /etc/systemd/system/mysql-backup.timer

[Unit]
Description=Run MySQL backup every day at 03:00

[Timer]
OnCalendar=*-*-* 03:00:00
Persistent=true

[Install]
WantedBy=timers.target

3 Start the timer
systemctl daemon-reload
systemctl enable mysql-backup.timer
systemctl start mysql-backup.timer
systemctl status mysql-backup.timer
journalctl -u mysql-backup.service -n 100 --no-pager

[root@VM-0-8-opencloudos ~]# sudo vi /etc/systemd/system/mysql-backup.service
[root@VM-0-8-opencloudos ~]# sudo vi /etc/systemd/system/mysql-backup.timer
[root@VM-0-8-opencloudos ~]# systemctl daemon-reload
[root@VM-0-8-opencloudos ~]# systemctl enable mysql-backup.timer
Created symlink /etc/systemd/system/timers.target.wants/mysql-backup.timer → /etc/systemd/system/mysql-backup.timer.
[root@VM-0-8-opencloudos ~]# systemctl start mysql-backup.timer
[root@VM-0-8-opencloudos ~]# systemctl status mysql-backup.timer
● mysql-backup.timer - Run MySQL backup every day at 03:00
     Loaded: loaded (/etc/systemd/system/mysql-backup.timer; enabled; preset: disabled)
     Active: active (waiting) since Sat 2025-11-22 09:18:10 CST; 17s ago
    Trigger: Sun 2025-11-23 03:00:00 CST; 17h left
   Triggers: ● mysql-backup.service

Nov 22 09:18:10 VM-0-8-opencloudos systemd[1]: Started mysql-backup.timer - Run MySQL backup every day at 03:00.
[root@VM-0-8-opencloudos ~]# mysql
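backup_mysql.sh writes and prunes archives but never reads one back. Before relying on the backups, it can help to pick the newest archive for a database and confirm it is a complete gzip stream. The following is a minimal sketch, assuming the `<db>_backup_<YYYYmmdd_HHMMSS>.sql.gz` naming produced by the script above; `latest_backup` and `verify_backup` are hypothetical helper names, not part of the original setup.

```shell
#!/bin/bash
# Sketch: find and sanity-check the newest backup written by backup_mysql.sh.
# Assumes file names of the form <db>_backup_<YYYYmmdd_HHMMSS>.sql.gz.
# latest_backup / verify_backup are hypothetical helper names.

# Print the newest backup file for database $2 in directory $1 (empty line if none).
latest_backup() {
    local dir="$1" db="$2" f newest=""
    for f in "$dir/${db}_backup_"*.sql.gz; do
        [[ -e "$f" ]] || continue              # the glob matched nothing
        # The timestamp format sorts lexically, so the largest name is the newest
        if [[ -z "$newest" || "$f" > "$newest" ]]; then
            newest="$f"
        fi
    done
    printf '%s\n' "$newest"
}

# Succeed only if $1 exists and is a complete, uncorrupted gzip file.
verify_backup() {
    # gzip -t decompresses the whole file to nowhere and fails on truncation or corruption
    [[ -f "$1" ]] && gzip -t "$1" 2>/dev/null
}
```

For example, `f=$(latest_backup /home/install/mysql/backup hj_boot)` followed by `verify_backup "$f" && gunzip -c "$f" | mysql -uroot -p hj_boot` restores the newest hj_boot dump. Because mysqldump was given a single database name, the dump contains no CREATE DATABASE statement, so the target database must already exist.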

[root@VM-0-8-opencloudos mysql]# mysql --help | grep -A 1 "Default options"
Default options are read from the following files in the given order:
/etc/my.cnf /etc/mysql/my.cnf /usr/etc/my.cnf ~/.my.cnf
[root@VM-0-8-opencloudos mysql]# which mysqld
/usr/sbin/mysqld
[root@VM-0-8-opencloudos mysql]# ps aux | grep mysqld
mysql 234649 2.4 7.7 1759852 598384 ? Ssl 10:33 0:28 /usr/sbin/mysqld
root 279171 0.0 0.0 6460 2148 pts/4 S+ 10:53 0:00 grep --color=auto mysqld
[root@VM-0-8-opencloudos mysql]# rpm -qa | grep -i mysql
mysql80-community-release-el9-1.noarch
mysql-community-common-8.0.44-1.el9.x86_64
mysql-community-client-plugins-8.0.44-1.el9.x86_64
mysql-community-libs-8.0.44-1.el9.x86_64
mysql-community-client-8.0.44-1.el9.x86_64
mysql-community-icu-data-files-8.0.44-1.el9.x86_64
mysql-community-server-8.0.44-1.el9.x86_64
[root@VM-0-8-opencloudos mysql]#
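The `mysql --help` output shows the option files being read in a fixed order; when the same option appears more than once, the value read last takes precedence. The sketch below mimics that lookup for a single option group in bash and awk, which makes it easy to see which `port` (for example) ends up in effect. It is deliberately simplified, by assumption: it ignores `!include` / `!includedir` directives, inline comments and the additional groups mysqld also reads (such as `[server]`), and `effective_option` is a hypothetical name. MySQL's own `my_print_defaults mysqld` is the authoritative way to list what the server would read.

```shell
#!/bin/bash
# Sketch: resolve the effective value of one option in one option group,
# reading option files in order and letting later occurrences override earlier ones.
# Simplified by assumption: no !include/!includedir, no inline comments,
# and only the single group you name (mysqld also reads e.g. [server]).

# Usage: effective_option GROUP KEY [FILE...]
effective_option() {
    local group="$1" key="${2//-/_}" f v result=""   # MySQL treats '-' and '_' in option names alike
    shift 2
    # Default to the read order printed by `mysql --help` on this server
    if [[ $# -eq 0 ]]; then
        set -- /etc/my.cnf /etc/mysql/my.cnf /usr/etc/my.cnf "$HOME/.my.cnf"
    fi
    for f in "$@"; do
        [[ -r "$f" ]] || continue                        # missing files are simply skipped
        v=$(awk -v grp="$group" -v key="$key" '
            { line = $0; sub(/^[ \t]+/, "", line); sub(/[ \t\r]+$/, "", line) }
            line == "" || line ~ /^[#;]/ { next }          # blank lines and comments
            line ~ /^\[/ { sect = substr(line, 2, index(line, "]") - 2); next }
            sect == grp {
                n = index(line, "="); if (n == 0) next     # bare flags such as skip-name-resolve
                k = substr(line, 1, n - 1); val = substr(line, n + 1)
                sub(/[ \t]+$/, "", k); sub(/^[ \t]+/, "", val)
                gsub(/-/, "_", k)
                if (k == key) last = val                   # later lines in the same file win
            }
            END { if (last != "") print last }
        ' "$f")
        [[ -n "$v" ]] && result="$v"                     # later files win
    done
    printf '%s\n' "$result"
}
```

On this server, `effective_option mysqld port` would be expected to print 3311, because install_mysql_rpm.sh wrote `port=3311` into the `[mysqld]` group of /etc/my.cnf and none of the later files exist in the transcript.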

WAR download: https://updates.jenkins.io/download/war/2.528.2/jenkins.war
Java support policy: https://www.jenkins.io/doc/book/platform-information/support-policy-java/

Locale plugin: https://plugins.jenkins.io/locale/
How to install: download the .hpi file from https://plugins.jenkins.io/locale/releases/, then upload that .hpi file under Manage Jenkins → Plugins → Advanced settings.

Install script

#!/bin/bash
### ================================
### Jenkins one-click install + systemd autostart script
### Directory layout:
###   /home/install/jenkins
###   /home/install/packages/jenkins.war
### Uses the default port: 8080
### ================================

BASE=/home/install
JENKINS_HOME=$BASE/jenkins
JENKINS_WAR=$BASE/packages/jenkins.war
JAVA=$BASE/jdk17/bin/java
LOG_DIR=$JENKINS_HOME/logs
START_SCRIPT=$JENKINS_HOME/jenkins.sh
SERVICE_FILE=/etc/systemd/system/jenkins.service

echo "🚀 Initializing the Jenkins environment..."

# Check the Jenkins WAR file
if [ ! -f "$JENKINS_WAR" ]; then
    echo "❌ Jenkins WAR not found: $JENKINS_WAR"
    exit 1
fi

# Check Java
if [ ! -x "$JAVA" ]; then
    echo "❌ Java not found: $JAVA"
    exit 1
fi

echo "📁 Creating directories..."
mkdir -p "$JENKINS_HOME"
mkdir -p "$LOG_DIR"

### ========== Create the Jenkins start script ==========
echo "📝 Generating start script: $START_SCRIPT"
# Quoted 'EOF': nothing is expanded here, so the generated script keeps its own hard-coded paths
cat > "$START_SCRIPT" << 'EOF'
#!/bin/bash
JENKINS_HOME=/home/install/jenkins
JENKINS_WAR=/home/install/packages/jenkins.war
JAVA=/home/install/jdk17/bin/java
LOG_DIR=/home/install/jenkins/logs
LOG=$LOG_DIR/jenkins.log
PID_FILE=/home/install/jenkins/jenkins.pid

mkdir -p "$LOG_DIR"
mkdir -p "$JENKINS_HOME"

# Check Java
if [ ! -x "$JAVA" ]; then
    echo "❌ Java not found: $JAVA"
    exit 1
fi

# Check the Jenkins WAR
if [ ! -f "$JENKINS_WAR" ]; then
    echo "❌ Jenkins WAR not found: $JENKINS_WAR"
    exit 1
fi

# Check whether Jenkins is already running
if [ -f "$PID_FILE" ]; then
    PID=$(cat "$PID_FILE")
    if kill -0 "$PID" 2>/dev/null; then
        echo "⚠ Jenkins is already running, PID: $PID"
        exit 1
    else
        rm -f "$PID_FILE"
    fi
fi

echo "🚀 Starting Jenkins..."
nohup "$JAVA" -DJENKINS_HOME="$JENKINS_HOME" \
    -jar "$JENKINS_WAR" \
    --httpPort=8080 \
    > "$LOG" 2>&1 &
PID=$!
echo $PID > "$PID_FILE"

sleep 1
if kill -0 $PID 2>/dev/null; then
    echo "✔ Jenkins started, PID: $PID"
    echo "🌐 Access URL: http://<your-server-IP>:8080"
else
    echo "❌ Jenkins failed to start, check the log: $LOG"
    exit 1   # non-zero so systemd marks the start as failed and Restart=on-failure applies
fi
EOF
chmod +x "$START_SCRIPT"

### ========== Create the systemd service ==========
echo "📝 Creating systemd service: $SERVICE_FILE"
# Unquoted EOF: $START_SCRIPT and $JENKINS_HOME are expanded when the unit file is written
cat > "$SERVICE_FILE" << EOF
[Unit]
Description=Jenkins Service
After=network.target

[Service]
Type=forking
User=root
ExecStart=$START_SCRIPT
PIDFile=$JENKINS_HOME/jenkins.pid
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

### ========== Activate with systemd ==========
echo "🔄 Reloading systemd..."
systemctl daemon-reload
echo "⚙ Enabling start at boot..."
systemctl enable jenkins
echo "▶ Starting the Jenkins service..."
systemctl start jenkins
echo "✔ Jenkins status:"
systemctl status jenkins --no-pager

echo
echo "🎉 Jenkins is installed and starts at boot! Access URL: http://<your-server-IP>:8080"
echo "📄 Log file: $LOG_DIR/jenkins.log"

Initial admin password: /home/install/jenkins/secrets/initialAdminPassword
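The install script only checks that `$JAVA` is executable, not that it is a Java version Jenkins supports. The Java support-policy page linked above lists the supported versions; recent LTS lines require Java 17 or newer, which matches the jdk17 used here. A minimal sketch of an extra pre-flight check follows, assuming the version string format printed by `java -version`. `java_major`, `java_ok_for_jenkins` and `MIN_JAVA=17` are hypothetical and should be confirmed against that page for the WAR version you download.

```shell
#!/bin/bash
# Sketch: pre-flight check that a java binary is new enough for Jenkins.
# MIN_JAVA=17 is an assumption; confirm it on the Jenkins Java support policy page.
MIN_JAVA=17

# Extract the major version from `java -version` output, e.g.
#   openjdk version "17.0.9" 2023-10-17   -> 17
#   java version "1.8.0_392"              -> 8   (legacy 1.x numbering)
# Lines without a version "..." string (e.g. "Picked up JAVA_TOOL_OPTIONS") are ignored.
java_major() {
    local ver
    ver=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' <<<"$1" | head -n 1)
    case "$ver" in
        1.*) ver="${ver#1.}"; printf '%s\n' "${ver%%.*}" ;;
        *)   printf '%s\n' "${ver%%[.+-]*}" ;;
    esac
}

# Succeed if the java binary at $1 reports a major version >= MIN_JAVA.
java_ok_for_jenkins() {
    local major
    # java -version writes to stderr, hence 2>&1
    major=$(java_major "$("$1" -version 2>&1)")
    [[ "$major" =~ ^[0-9]+$ ]] && (( major >= MIN_JAVA ))
}
```

For example, the line `java_ok_for_jenkins "$JAVA" || { echo "❌ Java $MIN_JAVA+ required: $JAVA"; exit 1; }` could sit right after the existing `-x "$JAVA"` check in the install script.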