Linear Regression with PaddlePaddle

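In this experiment we use PaddlePaddle to build a simple linear regression model and use it to predict how large a house your savings can buy in a given area. Along the way you will meet several key machine learning concepts and learn the basic workflow of a machine learning prediction task.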

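Basic concepts of linear regression

Linear regression is one of the simplest and most important models in machine learning. Building the model follows this workflow: acquire the data, preprocess it, train the model, and apply the model.

A regression model can be understood as fitting a curve to the distribution of a set of points. If the fitted curve is a straight line, the model is called linear regression; if it is a quadratic curve, it is called quadratic regression. Linear regression is the simplest kind of regression model.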

Linear regression involves a few basic concepts that you need to master:

- Hypothesis function

- Loss function

- Optimization algorithm

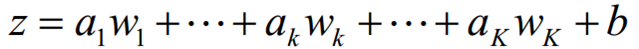

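Hypothesis function:

The hypothesis function describes the relationship between the independent and dependent variables mathematically; that relationship can be linear or nonlinear. In this linear regression model the hypothesis function is $\hat{Y} = aX_1 + b$, where $\hat{Y}$ is the model's prediction (the predicted house price), written with a hat to distinguish it from the true value $Y$. The parameters the model has to learn are $a$ and $b$.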
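As a minimal sketch, the hypothesis function can be written as a plain Python function. The parameter values below are hypothetical and chosen only for illustration; in the experiment they are learned from data.

```python
def hypothesis(x, a, b):
    """Linear hypothesis: the prediction for input x with slope a and intercept b."""
    return a * x + b

# Hypothetical parameters, not learned values
a, b = 2.0, 1.0
predictions = [hypothesis(x, a, b) for x in [0.0, 1.0, 2.5]]
print(predictions)  # [1.0, 3.0, 6.0]
```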

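Loss function:

The loss function measures mathematically the error between the hypothesis function's prediction and the true value. The smaller this gap, the more accurate the prediction, and the algorithm's job is to keep shrinking it.

Once the model is defined, we give it an optimization objective so that the learned parameters bring the prediction $\hat{Y}$ as close as possible to the true value $Y$. Given the target value $Y_i$ of any data sample and the model's prediction $\hat{Y}_i$ for it, the loss function outputs a non-negative real number, which is commonly used to reflect the size of the model's error.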

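For linear models, the most commonly used loss function is the mean squared error (MSE).

$$MSE = \frac{1}{n}\sum_{i=1}^{n}(\hat{Y}_i - Y_i)^2$$

That is, for a dataset of size n (a test set, for instance), the MSE is the mean of the squared prediction errors over the n samples.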
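The formula can be checked by hand on a tiny example. The sketch below is a plain-Python illustration with made-up numbers, not the PaddlePaddle implementation.

```python
def mean_squared_error(predictions, targets):
    """Mean of the squared differences between predictions and targets."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    n = len(targets)
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / n

# Made-up values for illustration
y_hat = [2.5, 0.0, 2.0]
y_true = [3.0, -0.5, 2.0]
mse = mean_squared_error(y_hat, y_true)  # (0.25 + 0.25 + 0.0) / 3
print(mse)
```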

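Optimization algorithm:

The optimization algorithm is also critical in model training: it largely determines the accuracy a model reaches and how fast it trains. The linear regression example in this chapter uses gradient descent.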
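To make the idea concrete, here is a sketch of what gradient descent computes for the hypothesis $\hat{Y} = aX_1 + b$ and the MSE loss. The learning rate symbol $\eta$ and the per-sample notation $X_{1,i}$ (the value of $X_1$ for sample $i$) are introduced here for illustration. Differentiating the MSE with respect to each parameter gives

$$\frac{\partial MSE}{\partial a} = \frac{2}{n}\sum_{i=1}^{n}(\hat{Y}_i - Y_i)\,X_{1,i}, \qquad \frac{\partial MSE}{\partial b} = \frac{2}{n}\sum_{i=1}^{n}(\hat{Y}_i - Y_i)$$

and each step moves the parameters a small distance against the gradient:

$$a \leftarrow a - \eta\,\frac{\partial MSE}{\partial a}, \qquad b \leftarrow b - \eta\,\frac{\partial MSE}{\partial b}$$

Repeating this update lowers the loss as long as $\eta$ is small enough.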

Now let's get started with the experiment!

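First import the required packages:

- paddle.fluid: the PaddlePaddle deep learning framework

- numpy: Python's basic library for scientific computing

- os: a Python module for interacting with the operating system

- matplotlib: a Python plotting library for line charts, scatter plots, and similar figures

```python
import paddle.fluid as fluid
import paddle
import numpy as np
import os
import matplotlib.pyplot as plt
```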

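Step 1: Prepare the data

(1) About the uci-housing dataset

The dataset has 506 rows of 14 columns each. The first 13 columns describe various attributes of the houses, and the last column is the median price of that type of house.

PaddlePaddle provides interfaces for reading the uci_housing training and test sets: paddle.dataset.uci_housing.train() and paddle.dataset.uci_housing.test().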

(2) train_reader and test_reader

paddle.reader.shuffle() buffers BUF_SIZE data items at a time and shuffles them.

paddle.batch() groups every BATCH_SIZE items into one batch.

```python
BUF_SIZE = 500
BATCH_SIZE = 20

# Training data reader: shuffles within the buffer, then yields batches of BATCH_SIZE samples
train_reader = paddle.batch(
    paddle.reader.shuffle(paddle.dataset.uci_housing.train(),
                          buf_size=BUF_SIZE),
    batch_size=BATCH_SIZE)

# Test data reader, built the same way from the test set
test_reader = paddle.batch(
    paddle.reader.shuffle(paddle.dataset.uci_housing.test(),
                          buf_size=BUF_SIZE),
    batch_size=BATCH_SIZE)
```
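To show what the two wrappers do conceptually, here is a simplified pure-Python sketch of a buffered shuffle followed by batching. The helpers `shuffle_reader`, `batch_reader` and `toy_reader` are hypothetical names written for this illustration; they are not PaddlePaddle's source code.

```python
import random

def shuffle_reader(reader, buf_size, seed=None):
    """Fill a buffer with buf_size items, shuffle it, yield its items, and repeat."""
    rng = random.Random(seed)

    def shuffled():
        buf = []
        for item in reader():
            buf.append(item)
            if len(buf) >= buf_size:
                rng.shuffle(buf)
                yield from buf
                buf = []
        if buf:  # leftover items that did not fill a whole buffer
            rng.shuffle(buf)
            yield from buf
    return shuffled

def batch_reader(reader, batch_size):
    """Group consecutive items into lists of batch_size; the last list may be shorter."""
    def batched():
        batch = []
        for item in reader():
            batch.append(item)
            if len(batch) == batch_size:
                yield batch
                batch = []
        if batch:
            yield batch
    return batched

def toy_reader():
    """Hypothetical data source yielding the integers 0..9."""
    for i in range(10):
        yield i

reader = batch_reader(shuffle_reader(toy_reader, buf_size=5, seed=0), batch_size=4)
batches = list(reader())
print([len(b) for b in batches])  # [4, 4, 2]
```

Because shuffling happens inside each buffer, an item never moves further than one buffer away from its original position. With BUF_SIZE=500 and a training set smaller than that, the whole set fits in one buffer and is fully shuffled.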

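(3) Print a sample to see what the data looks like. The data returned by the PaddlePaddle interface has already been normalized.

```python
# Print one sample of the uci_housing data
train_data = paddle.dataset.uci_housing.train()
sampledata = next(train_data())
print(sampledata)
```

The output is a tuple of the 13 feature values and the target value:

```
(array([-0.02964322, -0.11363636, 0.39417967, -0.06916996, 0.14260276, -0.10109875, 0.30715859, -0.13176829, -0.24127857, 0.05489093, 0.29196451, -0.2368098 , 0.12850267]), array([15.6]))
```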
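The text does not say which normalization the loader applies. As an illustration only, the sketch below assumes a mean-centered range scaling, (value - mean) / (max - min) per column, which yields small values around zero like those in the sample above. The function `normalize_columns` is a hypothetical helper.

```python
def normalize_columns(rows):
    """Scale each column to (value - mean) / (max - min); constant columns become 0.0."""
    columns = list(zip(*rows))
    normalized_cols = []
    for col in columns:
        mean = sum(col) / len(col)
        span = max(col) - min(col)
        if span == 0:
            normalized_cols.append([0.0] * len(col))
        else:
            normalized_cols.append([(v - mean) / span for v in col])
    # Transpose back to one list per row
    return [list(row) for row in zip(*normalized_cols)]

# Made-up raw features with very different scales
raw = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
scaled = normalize_columns(raw)
print(scaled)  # [[-0.5, -0.5], [0.0, 0.0], [0.5, 0.5]]
```

Bringing all features to a similar scale matters for gradient descent, because a single learning rate then works reasonably for every parameter.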

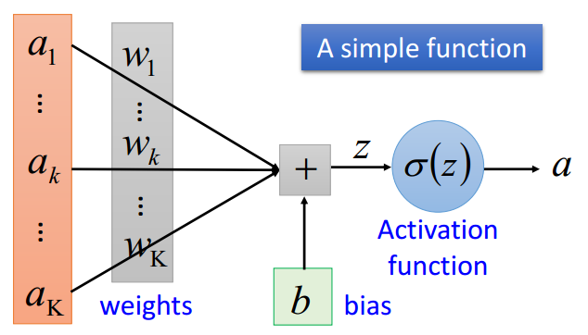

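Step 2: Network configuration

(1) Building the network: for linear regression, the network is simply one fully connected layer from input to output.

For the Boston housing dataset, we assume that the relationship between the attributes and the house price can be described by a linear combination of the attributes.

```python
# Tensor variable x: the 13-dimensional feature vector
x = fluid.layers.data(name='x', shape=[13], dtype='float32')
# Tensor variable y: the target value
y = fluid.layers.data(name='y', shape=[1], dtype='float32')
# A simple linear network: one fully connected layer connecting input and output
# input: the input tensor
# size: the number of output units of the layer
# act: the activation function
y_predict = fluid.layers.fc(input=x, size=1, act=None)
```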
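With size=1 and no activation, a fully connected layer computes a weighted sum of the inputs plus a bias. The sketch below spells that out in plain Python; the three-feature sample, the weights and the bias are hypothetical values (the tutorial's layer has 13 inputs and learns its parameters).

```python
def fully_connected(features, weights, bias):
    """Single-output fully connected layer without activation: sum_j x_j * w_j + bias."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    return sum(xj * wj for xj, wj in zip(features, weights)) + bias

# Hypothetical sample and parameters, for illustration only
x_sample = [0.5, -1.0, 2.0]
weights = [0.2, 0.4, 0.1]
bias = 0.3
y_pred = fully_connected(x_sample, weights, bias)
print(round(y_pred, 6))
```

This is the multi-feature version of the hypothesis function: each attribute gets its own coefficient, and the bias plays the role of b.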

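(2) Define the loss function

The mean squared error loss is used here.

square_error_cost(input, label) takes the predicted values and the target values and returns the squared error of each sample, that is, the square of (y - y_predict).

```python
cost = fluid.layers.square_error_cost(input=y_predict, label=y)  # squared error of each sample in the batch
avg_cost = fluid.layers.mean(cost)  # average the squared errors over the batch
```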

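(3) Define the optimizer

Stochastic gradient descent (SGD) is used here.

```python
# Clone the main program for testing before the optimizer adds its operators to it
test_program = fluid.default_main_program().clone(for_test=True)
optimizer = fluid.optimizer.SGDOptimizer(learning_rate=0.001)
opts = optimizer.minimize(avg_cost)
```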
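For intuition about what the optimizer does on each batch, here is a minimal mini-batch SGD sketch in plain Python for a one-feature linear model. It is an illustration under stated assumptions, not PaddlePaddle's implementation: the data is synthetic and noise-free (y = 2x + 1), and the learning rate of 0.1 suits this toy data rather than the tutorial's 0.001.

```python
import random

def sgd_fit(xs, ys, learning_rate=0.1, batch_size=5, epochs=2000, seed=0):
    """Fit y = a * x + b by mini-batch SGD on the mean squared error."""
    rng = random.Random(seed)
    a, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new order every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            errors = [(a * xs[i] + b) - ys[i] for i in batch]
            # Gradients of the batch MSE with respect to a and b
            grad_a = 2.0 * sum(e * xs[i] for e, i in zip(errors, batch)) / len(batch)
            grad_b = 2.0 * sum(errors) / len(batch)
            a -= learning_rate * grad_a
            b -= learning_rate * grad_b
    return a, b

# Synthetic, noise-free data generated from y = 2x + 1
xs = [i / 10 for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs]
a, b = sgd_fit(xs, ys)
print("a = %.3f, b = %.3f" % (a, b))
```

On this data the fitted parameters should end up close to a = 2 and b = 1. Each update uses only one batch, which is what makes the method "stochastic".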

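Once the model above is configured, two fluid.Program objects are ready: fluid.default_startup_program() and fluid.default_main_program().

The parameter initialization operations are written into fluid.default_startup_program().

fluid.default_main_program() returns the default (global) main program, which is used for training and testing the model. All layer functions in fluid.layers add operators and variables to default_main_program. It is also the default value of the Program argument in many fluid APIs; for example, when no program is passed in, Executor.run() executes default_main_program.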

Step 3: Model training and Step 4: Model evaluation

(1) Create the Executor

First define where the computation runs: fluid.CPUPlace() and fluid.CUDAPlace(0) select the CPU and the GPU respectively.

Executor: takes the program passed in and runs it through its run() method.

```python
use_cuda = False  # False: run on the CPU; True: run on the GPU
place = fluid.CUDAPlace(0) if use_cuda else fluid.CPUPlace()
exe = fluid.Executor(place)  # create an Executor instance
exe.run(fluid.default_startup_program())  # run the startup program to initialize the parameters
```

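(2) Define the input data format

DataFeeder converts the data returned by the readers (train_reader, test_reader) into a special data structure that can be fed to the Executor.

feed_list specifies the list of variables, or variable names, that are fed to the model.

```python
# feed_list: the variables (or variable names) fed to the model
feeder = fluid.DataFeeder(place=place, feed_list=[x, y])
```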
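Conceptually, the feeder regroups a batch of (features, label) samples into one entry per input variable. The sketch below mimics that regrouping with plain lists; `to_feed_dict` is a hypothetical helper, and the real DataFeeder produces framework tensors rather than Python lists.

```python
def to_feed_dict(batch, feed_names):
    """Regroup [(features, label), ...] into {name: [one entry per sample]}."""
    columns = list(zip(*batch))
    if len(columns) != len(feed_names):
        raise ValueError("each sample must have one field per feed name")
    return {name: [list(value) for value in column]
            for name, column in zip(feed_names, columns)}

# A made-up batch of two samples with two features each
batch = [([0.1, 0.2], [15.6]), ([0.3, 0.4], [21.0])]
feed = to_feed_dict(batch, ["x", "y"])
print(feed)  # {'x': [[0.1, 0.2], [0.3, 0.4]], 'y': [[15.6], [21.0]]}
```

The order of the names matters: the first field of every sample goes to the first variable in the list, the second field to the second.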

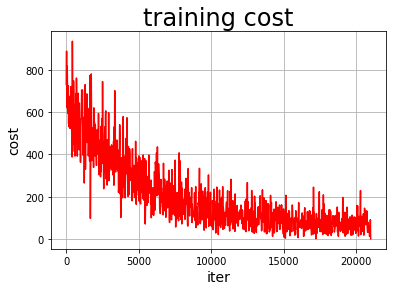

(3) Define draw_train_process, which plots how the training loss changes over time

```python
iter = 0  # number of samples seen so far (note: this name shadows Python's built-in iter)
iters = []
train_costs = []

def draw_train_process(iters, train_costs):
    title = "training cost"
    plt.title(title, fontsize=24)
    plt.xlabel("iter", fontsize=14)
    plt.ylabel("cost", fontsize=14)
    plt.plot(iters, train_costs, color='red', label='training cost')
    plt.grid()
    plt.show()
```

(4) Train and save the model

The Executor takes the program passed in and, based on the feed map and the fetch_list, adds feed operators (data input) and fetch operators (result retrieval) to the program. The feed map supplies the program's input data; fetch_list names the variables the user wants back after the program has run.

The training data is supplied through feeding: the reader's data is first converted into Tensor data that PaddlePaddle understands and then passed to the executor for training.

Note: the enumerate() function turns an iterable (such as a list, tuple or string) into a sequence of index/item pairs, so the loop gets both each item and its index.

```python
EPOCH_NUM = 50
model_save_dir = "/home/aistudio/work/fit_a_line.inference.model"

for pass_id in range(EPOCH_NUM):  # train for EPOCH_NUM epochs
    # Train, printing the loss of every 40th batch (here only batch 0 of each epoch)
    train_cost = 0
    for batch_id, data in enumerate(train_reader()):  # iterate over the training batches
        train_cost = exe.run(program=fluid.default_main_program(),  # run the main program
                             feed=feeder.feed(data),  # feed one batch, converted by the DataFeeder
                             fetch_list=[avg_cost])
        if batch_id % 40 == 0:
            print("Pass:%d, Cost:%0.5f" % (pass_id, train_cost[0][0]))
        iter = iter + BATCH_SIZE
        iters.append(iter)
        train_costs.append(train_cost[0][0])

    # Test, printing the loss of the last test batch
    test_cost = 0
    for batch_id, data in enumerate(test_reader()):  # iterate over the test batches
        test_cost = exe.run(program=test_program,  # run the test program
                            feed=feeder.feed(data),  # feed one batch of test data
                            fetch_list=[avg_cost])  # fetch the mean squared error
    print('Test:%d, Cost:%0.5f' % (pass_id, test_cost[0][0]))

# Save the model, creating the target directory if it does not exist
if not os.path.exists(model_save_dir):
    os.makedirs(model_save_dir)
print('save models to %s' % (model_save_dir))
# Save the trained parameters and build a program dedicated to inference
fluid.io.save_inference_model(model_save_dir,  # directory for the inference model
                              ['x'],           # data that must be fed at inference time
                              [y_predict],     # variables holding the inference result
                              exe)             # executor that saves the inference model
draw_train_process(iters, train_costs)
```

----------------------------------------

```
Pass:0, Cost:730.52649
Test:0, Cost:129.28802
Pass:1, Cost:935.00702
Test:1, Cost:146.41402
Pass:2, Cost:561.50110
Test:2, Cost:188.96291
Pass:3, Cost:455.02338
Test:3, Cost:296.16476
Pass:4, Cost:347.46710
Test:4, Cost:122.57037
Pass:5, Cost:480.02325
Test:5, Cost:140.77341
Pass:6, Cost:464.05698
Test:6, Cost:127.89626
Pass:7, Cost:276.11606
Test:7, Cost:233.21486
Pass:8, Cost:337.44760
Test:8, Cost:163.82315
Pass:9, Cost:311.88654
Test:9, Cost:13.98091
Pass:10, Cost:308.88275
Test:10, Cost:170.74649
Pass:11, Cost:340.49243
Test:11, Cost:77.21281
Pass:12, Cost:301.12851
Test:12, Cost:31.04134
Pass:13, Cost:150.75267
Test:13, Cost:2.99113
Pass:14, Cost:174.88126
Test:14, Cost:39.84206
Pass:15, Cost:279.36380
Test:15, Cost:102.89651
Pass:16, Cost:184.16774
Test:16, Cost:208.48296
Pass:17, Cost:252.75090
Test:17, Cost:65.50356
Pass:18, Cost:125.28737
Test:18, Cost:5.14324
Pass:19, Cost:241.18799
Test:19, Cost:43.11307
Pass:20, Cost:333.37201
Test:20, Cost:48.84952
Pass:21, Cost:150.72885
Test:21, Cost:58.22155
Pass:22, Cost:73.52397
Test:22, Cost:113.02930
Pass:23, Cost:189.21335
Test:23, Cost:29.70313
Pass:24, Cost:182.14908
Test:24, Cost:16.74845
Pass:25, Cost:128.77292
Test:25, Cost:16.76190
Pass:26, Cost:117.02783
Test:26, Cost:10.72589
Pass:27, Cost:107.32870
Test:27, Cost:4.64500
Pass:28, Cost:138.55495
Test:28, Cost:6.51828
Pass:29, Cost:48.11888
Test:29, Cost:8.40414
Pass:30, Cost:127.07739
Test:30, Cost:123.49804
Pass:31, Cost:169.20230
Test:31, Cost:5.44257
Pass:32, Cost:88.83828
Test:32, Cost:7.61720
Pass:33, Cost:80.49153
Test:33, Cost:22.00040
Pass:34, Cost:59.16454
Test:34, Cost:46.63321
Pass:35, Cost:161.52925
Test:35, Cost:26.65326
Pass:36, Cost:81.94468
Test:36, Cost:28.30224
Pass:37, Cost:35.22042
Test:37, Cost:3.84092
Pass:38, Cost:72.79510
Test:38, Cost:16.40567
Pass:39, Cost:109.47186
Test:39, Cost:4.38933
Pass:40, Cost:59.62152
Test:40, Cost:0.58020
Pass:41, Cost:52.41791
Test:41, Cost:2.84398
Pass:42, Cost:139.88603
Test:42, Cost:11.51844
Pass:43, Cost:31.33353
Test:43, Cost:12.27122
Pass:44, Cost:33.70327
Test:44, Cost:11.24299
Pass:45, Cost:36.93304
Test:45, Cost:3.56746
Pass:46, Cost:69.01217
Test:46, Cost:12.32192
Pass:47, Cost:20.34635
Test:47, Cost:5.79740
Pass:48, Cost:37.24659
Test:48, Cost:9.30209
Pass:49, Cost:104.55357
Test:49, Cost:12.87949
save models to /home/aistudio/work/fit_a_line.inference.model
```

-------------------------------------
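The log shows only one "Pass" line per epoch. The sketch below reproduces the batch counting behind that with enumerate(). It assumes 404 training samples (an 80/20 split of the 506 rows, which is an assumption here, not something the tutorial states) and that the final, shorter batch is kept.

```python
TRAIN_SAMPLES = 404  # assumption: 80% of the 506 rows
BATCH_SIZE = 20

# Size of each batch in one epoch; the last batch holds the leftover samples
batch_sizes = [min(BATCH_SIZE, TRAIN_SAMPLES - start)
               for start in range(0, TRAIN_SAMPLES, BATCH_SIZE)]

# enumerate() yields (index, item) pairs; keep the indices that pass the logging test
logged = [batch_id for batch_id, _ in enumerate(batch_sizes) if batch_id % 40 == 0]

print(len(batch_sizes))  # 21
print(batch_sizes[-1])   # 4
print(logged)            # [0]
```

With 21 batches per epoch, batch_id never reaches 40, so only batch 0 satisfies `batch_id % 40 == 0`. Each printed cost also comes from a single small batch, which is one reason the logged values fluctuate so much from epoch to epoch.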

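Step 5: Model prediction

(1) Create the Executor used for prediction

```python
infer_exe = fluid.Executor(place)     # executor used for inference
inference_scope = fluid.core.Scope()  # a separate scope for the inference variables
```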

(2) Define the function that visualizes ground truth against predictions

```python
infer_results = []
groud_truths = []

# Plot the ground-truth values against the predicted values
def draw_infer_result(groud_truths, infer_results):
    title = 'Boston'
    plt.title(title, fontsize=24)
    x = np.arange(1, 20)
    y = x
    plt.plot(x, y)  # reference line y = x: points on it are perfect predictions
    plt.xlabel('ground truth', fontsize=14)
    plt.ylabel('infer result', fontsize=14)
    plt.scatter(groud_truths, infer_results, color='green', label='infer results')
    plt.grid()
    plt.show()
```

(3) Run the prediction

fluid.io.load_inference_model reads the trained model from the given directory (model_save_dir here) so that it can make predictions on data it has never seen.

```python
# Switch the global/default scope: all variables at run time are allocated in the new scope
with fluid.scope_guard(inference_scope):
    # Load the inference model from the given directory
    [inference_program,   # the program used for inference
     feed_target_names,   # names of the variables that must be fed to the inference program
     fetch_targets] = fluid.io.load_inference_model(  # fetch_targets: the inference results
         model_save_dir,  # directory the trained model was saved to
         infer_exe)       # executor used for inference

    # Get the prediction data: one batch of up to 200 samples from the uci_housing test set
    infer_reader = paddle.batch(paddle.dataset.uci_housing.test(),
                                batch_size=200)
    # Split the batch into features and targets
    test_data = next(infer_reader())
    test_x = np.array([data[0] for data in test_data]).astype("float32")
    test_y = np.array([data[1] for data in test_data]).astype("float32")

    results = infer_exe.run(inference_program,  # the inference program
                            feed={feed_target_names[0]: np.array(test_x)},  # feed the x values to predict
                            fetch_list=fetch_targets)  # get the predictions

    print("infer results: (House Price)")
    for idx, val in enumerate(results[0]):
        print("%d: %.2f" % (idx, val))
        infer_results.append(val)

    print("ground truth:")
    for idx, val in enumerate(test_y):
        print("%d: %.2f" % (idx, val))
        groud_truths.append(val)

    draw_infer_result(groud_truths, infer_results)
```

```
infer results: (House Price)
0: 13.23
1: 13.22
2: 13.23
3: 14.47
4: 13.76
5: 14.04
6: 13.25
7: 13.35
8: 11.67
9: 13.38
10: 11.03
11: 12.38
12: 12.95
13: 12.62
14: 12.13
15: 13.39
16: 14.32
17: 14.26
18: 14.61
19: 13.20
20: 13.78
21: 12.54
22: 14.22
23: 13.43
24: 13.58
25: 13.00
26: 14.03
27: 13.89
28: 14.73
29: 13.76
30: 13.53
31: 13.10
32: 13.08
33: 12.20
34: 12.07
35: 13.84
36: 13.83
37: 14.25
38: 14.40
39: 14.27
40: 13.21
41: 12.75
42: 14.15
43: 14.42
44: 14.38
45: 14.07
46: 13.33
47: 14.48
48: 14.61
49: 14.83
50: 13.20
51: 13.52
52: 13.10
53: 13.30
54: 14.40
55: 14.88
56: 14.38
57: 14.92
58: 15.04
59: 15.23
60: 15.58
61: 15.51
62: 13.54
63: 14.46
64: 15.13
65: 15.67
66: 15.32
67: 15.62
68: 15.71
69: 16.01
70: 14.49
71: 14.15
72: 14.95
73: 13.76
74: 14.71
75: 15.18
76: 16.25
77: 16.42
78: 16.55
79: 16.59
80: 16.13
81: 16.34
82: 15.44
83: 16.08
84: 15.77
85: 15.07
86: 14.48
87: 15.83
88: 16.46
89: 20.68
90: 20.86
91: 20.75
92: 19.60
93: 20.27
94: 20.51
95: 20.05
96: 20.16
97: 21.58
98: 21.32
99: 21.59
100: 21.49
101: 21.30
ground truth:
0: 8.50
1: 5.00
2: 11.90
3: 27.90
4: 17.20
5: 27.50
6: 15.00
7: 17.20
8: 17.90
9: 16.30
10: 7.00
11: 7.20
12: 7.50
13: 10.40
14: 8.80
15: 8.40
16: 16.70
17: 14.20
18: 20.80
19: 13.40
20: 11.70
21: 8.30
22: 10.20
23: 10.90
24: 11.00
25: 9.50
26: 14.50
27: 14.10
28: 16.10
29: 14.30
30: 11.70
31: 13.40
32: 9.60
33: 8.70
34: 8.40
35: 12.80
36: 10.50
37: 17.10
38: 18.40
39: 15.40
40: 10.80
41: 11.80
42: 14.90
43: 12.60
44: 14.10
45: 13.00
46: 13.40
47: 15.20
48: 16.10
49: 17.80
50: 14.90
51: 14.10
52: 12.70
53: 13.50
54: 14.90
55: 20.00
56: 16.40
57: 17.70
58: 19.50
59: 20.20
60: 21.40
61: 19.90
62: 19.00
63: 19.10
64: 19.10
65: 20.10
66: 19.90
67: 19.60
68: 23.20
69: 29.80
70: 13.80
71: 13.30
72: 16.70
73: 12.00
74: 14.60
75: 21.40
76: 23.00
77: 23.70
78: 25.00
79: 21.80
80: 20.60
81: 21.20
82: 19.10
83: 20.60
84: 15.20
85: 7.00
86: 8.10
87: 13.60
88: 20.10
89: 21.80
90: 24.50
91: 23.10
92: 19.70
93: 18.30
94: 21.20
95: 17.50
96: 16.80
97: 22.40
98: 20.60
99: 23.90
100: 22.00
101: 11.90
```
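One simple way to put a number on how far the predictions are from the targets is to compute error metrics over the printed values. The sketch below does this for the first five pairs of the output above only, so the result describes those five samples and not the whole test set.

```python
# First five predictions and ground-truth values copied from the printed output
infer_head = [13.23, 13.22, 13.23, 14.47, 13.76]
truth_head = [8.50, 5.00, 11.90, 27.90, 17.20]

errors = [p - t for p, t in zip(infer_head, truth_head)]
mae = sum(abs(e) for e in errors) / len(errors)  # mean absolute error
mse = sum(e * e for e in errors) / len(errors)   # mean squared error

print("MAE: %.2f, MSE: %.2f" % (mae, mse))  # MAE: 6.23, MSE: 56.78
```

The MSE here is the same quantity the model was trained to minimize, while the MAE is easier to read because it is in the same unit as the house price.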

---------------------------------------------------------------------------------------------------------

data.txt

98.87, 599.0 68.74, 450.0 89.24, 440.0 129.19, 780.0 61.64, 450.0 74.0, 315.0 124.07, 998.0 65.0, 435.0 57.52, 435.0 60.42, 225.0 98.78, 685.0 63.3, 320.0 94.8, 568.0 49.95, 365.0 84.76, 530.0 127.82, 720.0 85.19, 709.0 79.91, 510.0 91.56, 600.0 59.0, 300.0 96.22, 580.0 94.69, 380.0 45.0, 210.0 68.0, 320.0 77.37, 630.0 104.0, 415.0 81.0, 798.0 64.33, 450.0 86.43, 935.0 67.29, 310.0 68.69, 469.0 109.01, 480.0 56.78, 450.0 53.0, 550.0 95.26, 625.0 142.0, 850.0 76.19, 450.0 83.42, 450.0 104.88, 580.0 149.13, 750.0 155.51, 888.0 105.15, 570.0 44.98, 215.0 87.48, 680.0 154.9, 1300.0 69.02, 340.0 74.0, 510.0 130.3, 1260.0 160.0, 640.0 88.31, 535.0 80.38, 680.0 94.99, 557.0 87.98, 435.0 88.0, 430.0 65.39, 320.0 89.0, 395.0 97.36, 535.0 53.0, 470.0 120.0, 632.0 129.0, 520.0 138.68, 878.0 81.25, 520.0 81.37, 550.0 101.98, 630.0 99.5, 540.0 59.53, 350.0 60.0, 220.0 50.51, 270.0 120.82, 745.0 107.11, 650.0 57.0, 252.0 145.08, 1350.0 111.8, 625.0 99.51, 518.0 87.09, 705.0 112.82, 510.0 148.93, 1280.0 199.24, 900.0 112.42, 600.0 94.17, 588.0 198.54, 1350.0 77.02, 460.0 153.47, 900.0 69.16, 435.0 84.54, 430.0 100.74, 590.0 110.9, 620.0 109.74, 618.0 107.98, 590.0 108.63, 700.0 67.37, 435.0 138.53, 790.0 44.28, 315.0 107.51, 816.0 59.53, 388.0 118.87, 1155.0 59.38, 260.0 55.0, 360.0 81.02, 410.0 109.68, 680.0 127.0, 630.0 109.23, 545.0 85.03, 699.0 107.27, 620.0 120.3, 480.0 127.08, 680.0 158.63, 850.0 123.25, 895.0 151.68, 1000.0 65.84, 438.0 96.87, 780.0 166.0, 1400.0 59.53, 410.0 137.4, 1150.0 45.0, 209.0 88.54, 722.0 87.22, 720.0 164.0, 2000.0 69.34, 248.0 103.67, 750.0 74.2, 595.0 71.0, 440.0 53.0, 475.0 60.86, 850.0 90.14, 530.0 57.0, 338.0 138.82, 1150.0 89.0, 760.0 98.0, 400.0 143.35, 1150.0 113.81, 575.0 152.2, 1150.0 63.32, 330.0 66.91, 218.0 44.28, 305.0 97.76, 590.0 69.0, 285.0 55.0, 380.0 148.0, 1300.0 154.59, 868.0 131.5, 1020.0 87.1, 780.0 148.68, 749.0 94.22, 590.0 96.79, 670.0 99.19, 578.0 199.0, 1380.0 125.03, 630.0 60.95, 520.0 127.04, 680.0 85.63, 460.0 77.14, 
320.0 75.63, 508.0 140.18, 800.0 59.53, 365.0 109.09, 850.0 152.0, 1850.0 122.0, 980.0 111.21, 630.0 56.7, 260.0 84.46, 588.0 83.19, 500.0 132.18, 1260.0 76.14, 480.0 107.29, 585.0 137.71, 780.0 108.22, 610.0 98.81, 570.0 139.0, 1180.0 89.0, 1100.0 89.47, 800.0 75.61, 496.0 84.54, 460.0 75.87, 490.0 61.0, 450.0 83.72, 500.0 53.0, 458.0 86.0, 700.0 98.57, 760.0 84.86, 510.0 82.77, 600.0 102.49, 600.0 139.32, 730.0 145.0, 1290.0 148.0, 1100.0 65.82, 410.0 53.0, 240.0 88.96, 1000.0 86.36, 700.0 65.72, 455.0 88.0, 725.0 65.98, 600.0 99.0, 560.0 131.0, 975.0 59.53, 349.0 86.79, 508.0 110.19, 500.0 42.13, 320.0 89.91, 450.0 44.0, 320.0 107.16, 900.0 98.28, 574.0 109.68, 650.0 65.0, 450.0 103.8, 750.0 71.69, 440.0 94.38, 550.0 107.5, 760.0 85.29, 705.0 152.3, 1000.0 80.66, 665.0 88.0, 600.0 67.0, 350.0 87.0, 700.0 88.15, 430.0 104.14, 600.0 54.0, 250.0 65.44, 435.0 88.93, 525.0 51.0, 338.0 57.0, 500.0 66.94, 470.0 142.99, 800.0 69.16, 500.0 43.0, 202.0 177.0, 1120.0 131.73, 900.0 60.0, 220.0 83.0, 530.0 110.99, 630.0 49.95, 365.0 121.87, 748.0 70.0, 690.0 48.76, 230.0 88.73, 547.0 59.53, 355.0 85.49, 420.0 87.06, 570.0 77.0, 350.0 55.0, 354.0 120.94, 655.0 88.7, 560.0 76.31, 510.0 100.39, 610.0 124.88, 820.0 95.0, 480.0 44.28, 315.0 158.0, 1500.0 55.59, 235.0 87.32, 738.0 64.43, 440.0 77.2, 418.0 89.0, 750.0 130.4, 725.0 98.61, 505.0 55.34, 355.0 132.66, 944.0 88.7, 560.0 67.92, 408.0 88.88, 640.0 57.52, 370.0 71.0, 615.0 86.29, 650.0 51.0, 211.0 53.14, 350.0 63.38, 430.0 90.83, 660.0 95.05, 515.0 96.0, 650.0 135.24, 900.0 80.5, 640.0 132.0, 680.0 69.0, 450.0 56.39, 268.0 59.53, 338.0 74.22, 445.0 88.0, 780.0 112.41, 570.0 140.85, 760.0 108.33, 635.0 104.76, 612.0 86.67, 632.0 169.0, 1550.0 99.19, 570.0 95.0, 780.0 174.0, 1500.0 103.13, 565.0 107.0, 940.0 109.43, 880.0 91.93, 616.0 66.69, 296.0 57.11, 280.0 98.78, 590.0 40.09, 275.0 144.86, 850.0 110.99, 600.0 103.13, 565.0 87.0, 565.0 55.0, 347.0 53.75, 312.0 62.36, 480.0 135.4, 710.0 74.22, 450.0 96.35, 400.0 88.93, 
542.0 98.0, 598.0 66.28, 530.0 119.2, 950.0 67.0, 415.0 68.9, 430.0 65.33, 400.0 55.0, 350.0 148.0, 1270.0 60.0, 307.0 88.83, 450.0 85.46, 430.0 137.57, 1130.0 90.59, 440.0 49.51, 220.0 96.87, 780.0 133.24, 820.0 84.0, 650.0 59.53, 420.0 59.16, 418.0 121.0, 670.0 53.0, 489.0 145.0, 1350.0 56.43, 260.0 71.8, 400.0 77.74, 370.0 59.16, 410.0 141.0, 820.0 87.28, 510.0 112.39, 666.0 119.0, 460.0 72.66, 395.0 120.8, 720.0 121.87, 750.0 64.43, 430.0 59.53, 400.0 106.69, 615.0 102.61, 575.0 61.64, 342.0 99.02, 750.0 88.04, 750.0 126.08, 750.0 145.0, 1280.0 84.0, 550.0 109.39, 700.0 199.96, 1850.0 88.0, 410.0 104.65, 750.0 81.0, 760.0 60.0, 230.0 108.0, 760.0 87.0, 550.0 88.15, 415.0 82.86, 490.0 152.0, 750.0 89.0, 565.0 43.5, 260.0 59.62, 356.0 96.6, 800.0 59.53, 450.0 67.48, 270.0 70.0, 455.0 102.84, 600.0 112.0, 423.0 90.23, 720.0 65.0, 380.0 89.67, 730.0 69.53, 480.0 182.36, 870.0 98.56, 569.0 65.0, 430.0 59.53, 325.0 159.83, 880.0 45.0, 260.0 92.64, 628.0 85.63, 413.0 100.43, 400.0 171.68, 1000.0 104.64, 720.0 52.46, 560.0 89.02, 420.0 166.11, 1160.0 67.21, 387.0 71.57, 420.0 68.07, 265.0 170.0, 1395.0 67.0, 455.0 73.5, 480.0 130.53, 760.0 96.04, 570.0 73.57, 265.0 128.6, 750.0 127.09, 870.0 71.0, 450.0 55.43, 230.0 103.0, 560.0 169.0, 1600.0 107.7, 815.0 153.61, 770.0 71.8, 450.0 87.76, 568.0 122.0, 970.0 58.75, 420.0 65.33, 310.0 80.63, 520.0 93.0, 350.0 59.62, 400.0 124.0, 890.0 105.79, 680.0 122.83, 668.0 67.09, 420.0 71.0, 350.0 127.23, 720.0 128.0, 780.0 77.9, 498.0 55.73, 213.0 91.39, 430.0 114.43, 860.0 125.0, 730.0 73.57, 270.0 59.56, 268.0 71.74, 395.0 88.12, 565.0 65.5, 340.0 81.0, 400.0 154.95, 900.0 67.0, 600.0 80.6, 580.0 148.0, 850.0 52.33, 475.0 122.54, 950.0 70.21, 400.0 63.0, 460.0 97.0, 750.0 100.19, 600.0 179.0, 895.0 69.8, 450.0 63.4, 480.0 65.72, 439.0 77.0, 350.0 137.68, 800.0 95.0, 590.0 68.07, 270.0 136.37, 1100.0 57.39, 218.0 83.72, 510.0 125.42, 920.0 99.51, 580.0 73.33, 625.0 53.17, 490.0 53.0, 480.0 51.0, 400.0 131.27, 780.0 95.37, 625.0 
59.53, 400.0 88.8, 525.0 67.0, 310.0 129.76, 660.0 98.28, 580.0 101.44, 550.0 89.05, 710.0 157.77, 1310.0 84.73, 640.0 93.96, 540.0 55.24, 365.0 86.0, 740.0 65.8, 395.0 139.0, 1150.0 99.19, 540.0 88.0, 678.0 65.0, 440.0 138.37, 1060.0 65.33, 350.0 140.6, 850.0 90.46, 518.0 53.0, 485.0 73.9, 370.0 71.7, 280.0 80.73, 485.0 113.0, 570.0 97.0, 570.0 65.5, 340.0 77.74, 350.0 145.0, 1280.0 97.46, 800.0 88.8, 530.0 198.04, 1600.0 50.0, 270.0 60.0, 220.0 136.0, 858.0 67.07, 370.0 49.51, 220.0 67.0, 600.0 108.56, 810.0 96.52, 565.0 68.48, 435.0 65.84, 450.0 102.61, 590.0 101.69, 600.0 73.93, 520.0 57.0, 256.0 123.5, 1115.0 154.89, 1260.0 160.34, 1320.0 88.0, 715.0 71.0, 269.0 74.93, 405.0 73.6, 630.0 59.5, 380.0 84.0, 650.0 59.53, 370.0 45.0, 210.0 51.0, 350.0 107.56, 780.0 76.46, 418.0 83.0, 398.0 77.14, 305.0 71.0, 300.0 86.0, 680.0 52.37, 450.0 99.99, 530.0 52.91, 540.0 63.36, 299.0 60.51, 520.0 122.0, 950.0 96.73, 740.0 138.82, 860.0 99.0, 520.0 109.75, 570.0 112.89, 870.0 65.36, 420.0 110.19, 558.0 132.59, 980.0 128.78, 900.0 89.0, 1296.0 182.36, 980.0 146.41, 521.0 90.0, 428.0 157.0, 1280.0 89.0, 528.0 85.19, 695.0 61.0, 686.0 91.56, 720.0 126.25, 670.0 81.0, 775.0 117.31, 745.0 50.6, 350.0 138.53, 740.0 151.66, 1550.0 87.0, 745.0 65.0, 300.0 83.4, 535.0 67.06, 288.0 55.0, 340.0 79.0, 340.0 95.0, 760.0 77.0, 370.0 50.0, 360.0 97.0, 750.0 53.0, 465.0 60.0, 220.0 65.33, 293.0 153.0, 900.0 52.41, 518.0 69.0, 290.0 89.54, 700.0 53.0, 465.0 125.0, 760.0 103.0, 580.0 67.0, 310.0 69.0, 275.0 87.09, 495.0 102.71, 620.0 132.53, 820.0 62.0, 570.0 121.62, 780.0 80.03, 480.0 138.82, 950.0 107.0, 900.0 149.0, 930.0 68.74, 455.0 101.27, 488.0 55.0, 350.0 60.0, 240.0 97.0, 400.0 67.92, 450.0 87.86, 435.0 65.33, 365.0 70.23, 335.0 119.0, 940.0 85.68, 570.0 86.0, 520.0 125.0, 760.0 184.23, 1350.0 77.0, 485.0 57.0, 470.0 108.45, 750.0 107.02, 575.0 65.0, 478.0 97.0, 780.0 107.16, 920.0 53.75, 310.0 122.83, 700.0 63.3, 252.0 80.0, 560.0 123.82, 600.0 87.31, 405.0 126.54, 540.0 132.82, 
800.0 152.73, 1280.0 109.0, 650.0 103.0, 845.0 59.62, 420.0 66.28, 525.0 96.33, 400.0 86.29, 350.0 78.78, 308.0 137.36, 1100.0 119.69, 530.0 126.08, 750.0 73.84, 490.0 73.0, 635.0 67.22, 400.0 87.98, 435.0 68.36, 270.0 73.0, 415.0 94.0, 600.0 107.07, 800.0 49.0, 260.0 156.0, 880.0 107.03, 830.0 198.75, 1550.0 60.92, 300.0 83.45, 540.0 57.39, 248.0 68.48, 450.0 140.86, 750.0 146.0, 1150.0 59.53, 430.0 77.14, 350.0 55.0, 360.0 80.15, 615.0 118.6, 660.0 63.3, 330.0 59.53, 350.0 59.65, 325.0 115.59, 470.0 71.0, 460.0 113.0, 500.0 94.7, 650.0 79.15, 460.0 150.04, 975.0 152.73, 1250.0 47.0, 230.0 146.67, 680.0 184.7, 980.0 60.06, 300.0 71.21, 485.0 124.88, 738.0 67.0, 600.0 167.28, 770.0 78.9, 320.0 118.4, 700.0 74.0, 620.0 61.38, 510.0 106.14, 585.0 109.0, 668.0 89.52, 800.0 130.29, 1100.0 136.0, 850.0 99.5, 580.0 84.02, 350.0 118.87, 413.0 88.31, 550.0 88.99, 610.0 65.82, 430.0 59.53, 350.0 120.94, 650.0 67.22, 410.0 184.0, 1380.0 156.45, 1200.0 79.0, 320.0 53.0, 459.0 160.7, 980.0 70.81, 360.0 110.94, 578.0 103.0, 600.0 80.66, 670.0 74.82, 315.0 140.09, 1140.0 89.62, 950.0 97.95, 570.0 88.0, 450.0 50.0, 400.0 112.39, 610.0 148.0, 1350.0 102.85, 565.0 126.71, 700.0 65.0, 350.0 69.0, 439.0 168.0, 770.0 61.88, 399.0 147.26, 1230.0 48.76, 210.0 67.22, 365.0 138.0, 1150.0 71.0, 325.0 115.46, 840.0 96.81, 740.0 90.21, 530.0 120.26, 650.0 53.0, 500.0 136.0, 1030.0 87.0, 660.0 134.6, 900.0 161.61, 850.0 88.88, 635.0 84.66, 485.0 81.79, 500.0 50.0, 259.0 121.0, 760.0 84.94, 595.0 73.32, 630.0 53.0, 500.0 97.86, 580.0 154.0, 1280.0 89.0, 395.0 163.66, 1080.0 101.95, 628.0 55.0, 348.0 68.48, 480.0 154.16, 780.0 157.21, 1350.0 111.0, 600.0 108.73, 580.0 53.0, 390.0 137.69, 810.0 170.83, 1290.0 67.0, 243.0 112.93, 600.0 161.0, 650.0 168.1, 1500.0 86.24, 670.0 63.0, 530.0 128.4, 950.0 98.28, 556.0 107.27, 570.0 84.0, 650.0 79.53, 480.0 110.96, 570.0 107.48, 810.0 56.88, 342.0 106.54, 540.0 59.62, 380.0 60.42, 222.0 88.31, 500.0 58.97, 236.0 88.84, 530.0 44.98, 208.0 167.28, 780.0 
65.33, 300.0 55.0, 355.0 77.74, 350.0 78.81, 415.0 135.5, 1100.0 70.3, 380.0 57.0, 248.0 68.47, 330.0 59.42, 360.0 53.0, 202.0 42.13, 326.0 144.5, 1230.0 148.0, 650.0 81.0, 667.0 105.18, 590.0 59.53, 370.0 59.53, 450.0 133.8, 1090.0 59.53, 380.0 55.0, 380.0 53.0, 250.0 57.36, 258.0 137.68, 690.0 96.66, 550.0 49.95, 350.0 67.92, 430.0 81.33, 500.0 98.6, 600.0 76.61, 275.0 87.0, 438.0 84.0, 520.0 111.62, 920.0 153.44, 950.0 61.7, 320.0 90.21, 530.0 68.07, 260.0 98.43, 740.0 135.0, 800.0 65.0, 340.0 139.41, 670.0 72.66, 395.0 56.0, 240.0 84.02, 500.0 161.38, 1300.0 58.64, 420.0 80.42, 400.0 83.45, 560.0 103.0, 545.0 55.0, 380.0 128.0, 750.0 63.2, 440.0 138.01, 700.0 106.99, 625.0 109.01, 480.0 172.71, 1020.0 153.0, 1100.0 112.41, 578.0 138.01, 700.0 139.48, 900.0 103.13, 550.0 71.0, 283.0 96.0, 760.0 133.23, 830.0 79.43, 460.0 87.29, 700.0 65.82, 410.0 70.3, 376.0 96.36, 740.0 155.35, 1150.0 184.0, 1350.0 98.0, 580.0 71.0, 260.0 72.83, 470.0 95.85, 710.0 115.5, 640.0 89.0, 635.0 76.0, 475.0 125.86, 888.0 102.22, 638.0 78.0, 310.0 97.09, 800.0 112.0, 645.0 105.23, 570.0 100.74, 580.0 47.5, 430.0 106.54, 530.0 145.1, 1350.0 108.0, 790.0 59.79, 280.0 107.92, 800.0 124.75, 880.0 126.76, 710.0 91.14, 730.0 67.0, 620.0 137.76, 650.0 99.99, 600.0 150.67, 850.0 107.47, 750.0 138.53, 730.0 65.0, 438.0 53.23, 320.0 89.7, 780.0 134.62, 850.0 89.0, 735.0 59.53, 360.0 97.0, 600.0
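As a small illustration of fitting $\hat{Y} = aX_1 + b$ to this kind of data, the sketch below applies closed-form least squares (a swapped-in technique, not the gradient descent used in the tutorial) to the first five pairs of data.txt. Reading each pair as (area, price) is an assumption, and `fit_line` is a hypothetical helper.

```python
def fit_line(xs, ys):
    """Closed-form least squares for y = a * x + b with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

# First five pairs of data.txt; treating them as (area, price) is an assumption
pairs = [(98.87, 599.0), (68.74, 450.0), (89.24, 440.0), (129.19, 780.0), (61.64, 450.0)]
areas = [p[0] for p in pairs]
prices = [p[1] for p in pairs]

a, b = fit_line(areas, prices)
print("price ~= %.2f * area + %.2f" % (a, b))
```

Five points are far too few for a reliable fit; the example only shows the mechanics on real rows of the file.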
