Xiaotudui (小土堆) PyTorch Study Notes, P23: Loss Functions and Backpropagation

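The gap between the actual output and the target is called the loss. Once the loss is known, it guides how the output is adjusted so that it moves closer to the target.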

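What a loss function does:

- Measures the gap between the actual output and the target

- Provides a basis for updating the output (backpropagation)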
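To make the second point concrete, here is a minimal sketch (not part of the original notes) of how a loss produces gradients through `backward()`. The tensor values are illustrative, and `requires_grad=True` is set on the output tensor only so that its gradient can be inspected.

```python
import torch
from torch.nn import L1Loss

# Illustrative values; requires_grad=True lets autograd track this tensor.
outputs = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
targets = torch.tensor([1.0, 2.0, 5.0])

loss_fn = L1Loss(reduction="sum")
loss = loss_fn(outputs, targets)  # |1-1| + |2-2| + |3-5| = 2

# Backpropagation: fills outputs.grad with d(loss)/d(outputs).
loss.backward()

print(loss.item())
# The last entry of the gradient is -1: raising that output lowers the loss.
print(outputs.grad)
```

The gradient tells an optimizer in which direction each value should move to reduce the loss, which is the "basis for updating" mentioned in the list.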

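L1Loss: takes the absolute difference between the actual output and the target directly, then either averages or sums it over all \(n\) elements.

With `reduction='mean'`: \(\ell_{mean} = \dfrac{1}{n}\sum_{i=1}^{n}\left \vert x_{output,i}-y_{target,i}\right \vert\)

With `reduction='sum'`: \(\ell_{sum} = \sum_{i=1}^{n}\left \vert x_{output,i}-y_{target,i}\right \vert\)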
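As a quick worked example, the following plain-Python sketch computes both reductions by hand for the values used in this section's example (outputs `[1, 2, 3]`, targets `[1, 2, 5]`). It does not use PyTorch; the variable names are only illustrative.

```python
outputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

# Element-wise absolute differences: [0.0, 0.0, 2.0]
abs_diffs = [abs(x - y) for x, y in zip(outputs, targets)]

l1_sum = sum(abs_diffs)            # reduction='sum'
l1_mean = l1_sum / len(abs_diffs)  # reduction='mean'

print(l1_sum)   # 2.0
print(l1_mean)  # 2/3, roughly 0.6667
```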

L1Loss example code 👇

import torch
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

# Shape: 1 batch, 1 channel, 1 row, 3 columns
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

# reduction must be a single value: 'mean' (the default) or 'sum'
loss = L1Loss(reduction='mean')
result = loss(inputs, targets)
print(result)

Mean squared error loss (MSELoss). Here too, `reduction` can be `sum` or `mean`. Note that no square root is taken:

$\ell_{sum} = \sum_{i=1}^{n}\left( x_{output,i}-y_{target,i}\right)^2$

$\ell_{mean} = \dfrac{1}{n}\sum_{i=1}^{n}\left( x_{output,i}-y_{target,i}\right)^2$
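The arithmetic can be checked by hand. This plain-Python sketch uses the same example values as the rest of the section (outputs `[1, 2, 3]`, targets `[1, 2, 5]`) and mirrors what `MSELoss` computes: squared differences that are summed or averaged, with no square root. The variable names are illustrative only.

```python
outputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

# Element-wise squared differences: [0.0, 0.0, 4.0]
sq_diffs = [(x - y) ** 2 for x, y in zip(outputs, targets)]

mse_sum = sum(sq_diffs)             # reduction='sum'
mse_mean = mse_sum / len(sq_diffs)  # reduction='mean'

print(mse_sum)   # 4.0
print(mse_mean)  # 4/3, roughly 1.3333
```

Compared with L1 loss on the same data (sum of 2), squaring makes the single large error count more heavily.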

MSELoss example code 👇

import torch
from torch.nn import MSELoss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

# Shape: 1 batch, 1 channel, 1 row, 3 columns
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

# Default reduction is 'mean'
mse_loss = MSELoss()
mse_result = mse_loss(inputs, targets)
print(mse_result)

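Cross-entropy loss 👇

Cross-entropy loss is commonly used for classification. The formula below is the one given in the official PyTorch documentation:

\[\mathrm{loss}(x,class) = -\log\left(\dfrac{\exp(x[class])}{\sum_j\exp(x[j])} \right )=-x[class]+\log\left(\sum_j\exp(x[j]) \right)\]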

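For example, suppose there are 3 classes (person, cat, dog) with scores [0.1, 0.2, 0.3] and indices [0, 1, 2], and the image actually shows a cat. The loss for this image is then: \(\mathrm{loss}(x,cat) = -0.2+\log(e^{0.1}+e^{0.2}+e^{0.3})=1.1019\)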

>>> import math
>>> -0.2+math.log(math.exp(0.1)+math.exp(0.2)+math.exp(0.3))
1.1019428482292442

In other words, the higher the score of the true class relative to the other classes, the smaller the loss.
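This can be seen numerically with a small plain-Python sketch of the formula. The helper name `cross_entropy` and the second score vector are hypothetical, chosen only to illustrate the effect of a higher score for the true class.

```python
import math

def cross_entropy(scores, true_class):
    """loss = -x[class] + log(sum_j exp(x[j])) for a single sample."""
    return -scores[true_class] + math.log(sum(math.exp(s) for s in scores))

# Scores from the example above; the true class is cat (index 1).
base = cross_entropy([0.1, 0.2, 0.3], 1)

# Hypothetical scores where the cat score is much higher.
confident = cross_entropy([0.1, 2.0, 0.3], 1)

print(base)       # about 1.1019
print(confident)  # smaller than base
```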

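import torch
from torch.nn import CrossEntropyLoss

x = torch.tensor([0.1, 0.2, 0.3])
# Target is the class index of the true class (1 = cat)
y = torch.tensor([1])
# CrossEntropyLoss expects input of shape (batch_size, num_classes)
x = torch.reshape(x, (1, 3))

loss_cross = CrossEntropyLoss()
result_cross = loss_cross(x, y)
print(result_cross)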
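As a sanity check, the built-in loss can be compared against the formula written out by hand. This is a sketch under the assumption of a single sample; `torch.logsumexp` is used here as a convenient way to compute the log of the summed exponentials.

```python
import torch
from torch import nn

x = torch.tensor([[0.1, 0.2, 0.3]])  # shape (N=1, C=3): raw scores
y = torch.tensor([1])                # shape (N=1,): true class index

builtin = nn.CrossEntropyLoss()(x, y)

# -x[class] + log(sum_j exp(x[j])), written out for the single sample
manual = -x[0, y[0]] + torch.logsumexp(x[0], dim=0)

print(builtin.item(), manual.item())  # both about 1.1019
```

Both values agree with the hand calculation above, which also shows that `CrossEntropyLoss` takes raw scores directly and does not need a softmax applied beforehand.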

How to use a loss function in the neural network built earlier

code 👇

import torchvision
from torch import nn
from torch.utils.data import DataLoader

# CIFAR10 test set, with each image converted to a tensor
dataset = torchvision.datasets.CIFAR10(root="", train=False, transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset=dataset, batch_size=2)

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 32, 5, padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 64, 5, padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
            nn.Linear(in_features=1024, out_features=64),
            nn.Linear(in_features=64, out_features=10)
        )

    def forward(self, input):
        input = self.model(input)
        return input

tudui = Tudui()
loss = nn.CrossEntropyLoss()
for data in dataloader:
    imgs, targets = data
    outputs = tudui(imgs)
    # Inspect what the outputs and targets look like before choosing a loss function
    print(f"targets = {targets}")
    print("\n")
    print(f"outputs = {outputs}")
    print("=" * 111)

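Output 👇

As you can see, the output is a list of predicted scores, one per class for each image, while the targets are class indices.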
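The shapes are what decide which loss fits. The sketch below rebuilds the same layer stack and feeds it a random batch in place of CIFAR10 images, so it runs without downloading the dataset. The random tensor and the two target labels are assumptions made purely for illustration.

```python
import torch
from torch import nn

# Same layer stack as the Tudui network above
model = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Flatten(),
    nn.Linear(1024, 64),
    nn.Linear(64, 10),
)

# Stand-in for one batch: 2 RGB images of 32x32, like CIFAR10
imgs = torch.rand(2, 3, 32, 32)
# Hypothetical class indices for the two images
targets = torch.tensor([3, 8])

outputs = model(imgs)
print(outputs.shape)  # torch.Size([2, 10]): one score per class per image
print(targets.shape)  # torch.Size([2]): one class index per image
```

Scores of shape `(N, C)` paired with integer class indices of shape `(N,)` are exactly the inputs `nn.CrossEntropyLoss` expects, which is why it suits this classification task.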

Code using the cross-entropy loss function 👇

import torchvision
from torch import nn
from torch.utils.data import DataLoader

# CIFAR10 test set, with each image converted to a tensor
dataset = torchvision.datasets.CIFAR10(root="", train=False, transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset=dataset, batch_size=2)

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 32, 5, padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 64, 5, padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
            nn.Linear(in_features=1024, out_features=64),
            nn.Linear(in_features=64, out_features=10)
        )

    def forward(self, input):
        input = self.model(input)
        return input

tudui = Tudui()
loss = nn.CrossEntropyLoss()
for data in dataloader:
    imgs, targets = data
    outputs = tudui(imgs)
    # Compare the predicted scores with the true class indices
    result_loss = loss(outputs, targets)
    print(f"result_loss = {result_loss}")

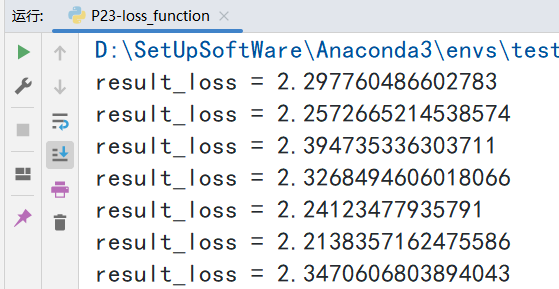

运行结果👇
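The title also mentions backpropagation, which is the step that follows computing the loss. The sketch below shows it on a deliberately tiny stand-in model (a single linear layer, not the Tudui network) with a random batch, both of which are assumptions chosen so the example runs quickly without the dataset.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Small stand-in classifier: flatten the image, then one linear layer
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
imgs = torch.rand(2, 3, 32, 32)   # random stand-in batch
targets = torch.tensor([3, 8])    # hypothetical class indices
loss_fn = nn.CrossEntropyLoss()

weight = model[1].weight
print(weight.grad)  # None: no gradient has been computed yet

result_loss = loss_fn(model(imgs), targets)
# Backpropagation: computes d(loss)/d(parameter) for every parameter
result_loss.backward()

print(weight.grad.shape)  # torch.Size([10, 3072])
```

After `backward()`, every parameter holds a gradient in its `.grad` attribute. An optimizer can then use those gradients to update the parameters and reduce the loss.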
