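Using Regular Expressions, Getting the Click Count, and Extracting Functions

Learning to use regular expressions

1. Use a regular expression to check whether an email address has been entered correctly.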

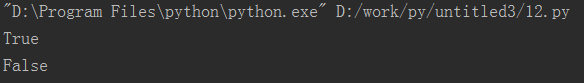

    import re

    def isEmailAccount(address):
        # Word characters, "@", a domain label, then 1-3 suffixes of 2-3 characters each
        return re.match(r"\w+@\w+(\.\w{2,3}){1,3}$", address) is not None

    print(isEmailAccount('123sdf_sd@qq.com.cm'))
    print(isEmailAccount('123sdf_sd@qq'))
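
As a rough cross-check of the email pattern, the sketch below runs it against a few sample addresses. The helper name `is_email_account` and the sample addresses are made up for illustration. The pattern is written as a raw string with `+` quantifiers, so an empty local part such as `@qq.com` is rejected. It is a simplified check, not a full validator: addresses with dots or hyphens in the local part, for instance, will not pass.

```python
import re

# Hypothetical helper for illustration; a simplified pattern, not a complete email validator
EMAIL_PATTERN = re.compile(r"\w+@\w+(\.\w{2,3}){1,3}")

def is_email_account(address):
    # fullmatch requires the whole string to match, so no trailing "$" is needed
    return EMAIL_PATTERN.fullmatch(address) is not None

# Made-up sample addresses
samples = ["123sdf_sd@qq.com.cm", "123sdf_sd@qq", "@qq.com", "user@example.com"]
for s in samples:
    print(s, is_email_account(s))
```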

2. Use a regular expression to find all the phone numbers on a page.

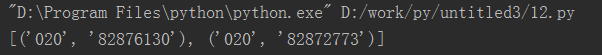

    import re
    import requests

    newurl = 'http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html'
    res = requests.get(newurl)
    res.encoding = 'utf-8'
    # Area code (3-4 digits), a hyphen with optional spaces around it, then a 7-8 digit number
    tel = re.findall(r"(\d{3,4}) *- *(\d{7,8})", res.text)
    print(tel)
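
Because the pattern has two capture groups, `re.findall` returns a list of `(area code, number)` tuples rather than whole strings. The sketch below shows this on a made-up sample string (standing in for `res.text`, so it runs without network access) and joins each tuple back into one number. The phone numbers are invented for illustration.

```python
import re

# Made-up sample text standing in for the downloaded page
sample = "Office: 020-12345678, admissions: 020 - 87654321, fax: 0755-1234567"

PHONE_PATTERN = re.compile(r"(\d{3,4}) *- *(\d{7,8})")

# With two capture groups, findall yields (area_code, number) tuples
pairs = PHONE_PATTERN.findall(sample)
print(pairs)

# Join each tuple into a normalized "area-number" string
numbers = ["-".join(pair) for pair in pairs]
print(numbers)
```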

3. Use a regular expression to split English text into words: re.split('',news)

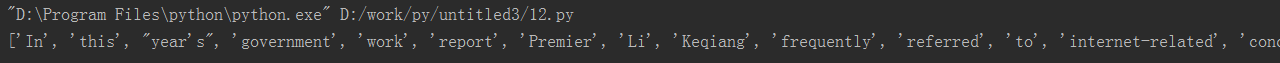

    import re

    news = """In this year's government work report, Premier Li Keqiang frequently referred to internet-related concepts, like Internet Plus, artificial intelligence, and Digital China, and noted that the Chinese government will give priority to "providing more and better online content"."""
    # Split on runs of whitespace, commas, periods, question marks, and double quotes
    a = re.split(r'[\s,.?"]+', news)
    print(a)
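
One thing to watch with `re.split`: when the text begins or ends with a delimiter, the result contains an empty string at that end. The sketch below, using a shortened version of the paragraph above, filters those out and also shows `re.findall` as an alternative that matches the words directly. The variable names `words` and `tokens` are made up for illustration.

```python
import re

# Shortened sample based on the paragraph above
news = 'Premier Li Keqiang referred to internet-related concepts, like Internet Plus, and "online content".'

# The text ends with delimiters, so split leaves a trailing empty string
parts = re.split(r'[\s,.?"]+', news)
words = [w for w in parts if w]
print(words)

# Alternative: match the words themselves, keeping hyphenated words together
tokens = re.findall(r"[A-Za-z]+(?:-[A-Za-z]+)*", news)
print(tokens)
```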

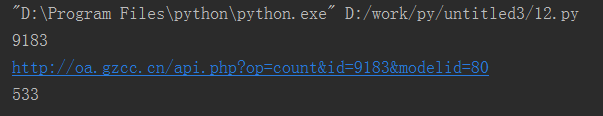

4. Use a regular expression to extract the news ID from the URL.

5. Build the request URL for the click count.

6. Fetch the click count.

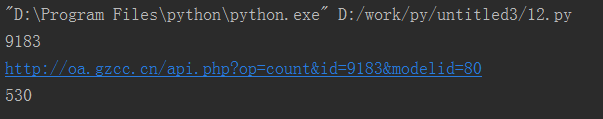

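    import re
    import requests

    url = "http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html"
    # Step 4: the news ID is the run of digits right before ".html" at the end of the URL
    newId = re.search(r"/(\d*)\.html$", url).group(1)
    print(newId)
    # Step 5: insert the ID into the click-count request URL
    clickUrl = "http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(newId)
    print(clickUrl)
    # Step 6: request the URL and pull the number out of .html('N') in the response
    resc = requests.get(clickUrl)
    num = re.search(r"\.html\('(\d*)'\)", resc.text).group(1)
    print(num)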
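
The parsing in steps 4 to 6 can be tried without network access. The sketch below is one possible arrangement, not the original code: the helper names `build_click_url` and `parse_click_count` and the sample response text are made up, and the real API output may look different. Note that `re.search` returns only the first match; if the response contains several `.html('N')` calls, this sketch assumes the last one holds the total, which would need checking against the actual response.

```python
import re

def build_click_url(news_url):
    # The news ID is the run of digits right before ".html" at the end of the URL
    match = re.search(r"/(\d+)\.html$", news_url)
    if match is None:
        raise ValueError("no news ID found in " + news_url)
    return "http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(match.group(1))

def parse_click_count(response_text):
    # Collect every .html('N') call; ASSUMPTION: the last one carries the total count
    counts = re.findall(r"\.html\('(\d+)'\)", response_text)
    if not counts:
        raise ValueError("no click count found in response")
    return int(counts[-1])

url = "http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html"
print(build_click_url(url))

# Made-up response text for illustration only
sample_response = "$('#todaydowns').html('4');$('#hits').html('285');"
print(parse_click_count(sample_response))
```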

7. Wrap steps 4, 5, and 6 in a function: def getClickCount(newsUrl):

    import re
    import requests

    def getClickCount(newsUrl):
        # Extract the news ID from the end of the URL
        newId = re.search(r"/(\d*)\.html$", newsUrl).group(1)
        print(newId)
        # Build the click-count request URL
        clickUrl = "http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(newId)
        print(clickUrl)
        # Fetch the response and pull the number out of .html('N')
        resc = requests.get(clickUrl)
        num = re.search(r"\.html\('(\d*)'\)", resc.text).group(1)
        print(num)

    getClickCount("http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html")

8. Wrap the code that fetches the news details in a function: def getNewDetail(newsUrl):

    import re
    import requests
    from bs4 import BeautifulSoup
    from datetime import datetime

    def getClickCount(newsUrl):
        # News ID: the digits right before ".html" at the end of the URL
        newId = re.search(r"/(\d*)\.html$", newsUrl).group(1)
        print(newId)
        clickUrl = "http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(newId)
        print(clickUrl)
        resc = requests.get(clickUrl)
        num = re.search(r"\.html\('(\d*)'\)", resc.text).group(1)
        print(num)

    def getField(info, label):
        # Return the text directly after label, up to the next whitespace; "" if label is absent
        x = info.find(label)
        if x < 0:
            return ""
        return info[x:].split()[0][len(label):]

    def getNewDetail(newsUrl):
        res1 = requests.get(newsUrl)
        res1.encoding = 'utf-8'
        soup1 = BeautifulSoup(res1.text, 'html.parser')
        # Body text
        content = soup1.select_one("#content").text
        info = soup1.select_one(".show-info").text
        # Publication time: the 19 characters that follow the label
        label = "发布时间:"
        start = info.find(label) + len(label)
        time = datetime.strptime(info[start:start + 19], "%Y-%m-%d %H:%M:%S")
        # Author, source, and photographer (the last two may be missing)
        author = getField(info, "作者:")
        source = getField(info, "来源:")
        shot = getField(info, "摄影:")
        print(newsUrl)
        print(content)
        print(time)
        print(author)
        print(source)
        print(shot)
        # Click count
        getClickCount(newsUrl)

    newsurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
    res = requests.get(newsurl)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    li = soup.select_one(".news-list").select("li")
    for i in li:
        # Title
        title = i.select_one(".news-list-title").text
        # Link
        url = i.a.attrs.get('href')
        print(title)
        getNewDetail(url)
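
A detail worth care when pulling fields such as the author out of the info string: `str.lstrip(chars)` removes any leading characters that appear in `chars`, not a literal prefix, so it can eat the start of the value itself. The sketch below shows the difference on a made-up token. The helper name `strip_label` and the sample value are hypothetical.

```python
def strip_label(token, label):
    # Slice off exactly the label instead of stripping a set of characters
    if token.startswith(label):
        return token[len(label):]
    return token

label = "作者:"
# Made-up value whose first character also appears in the label
token = "作者:作家协会"

# lstrip treats the label as a character set and also removes the leading "作" of the value
print(token.lstrip(label))

# Slicing by the label length keeps the value intact
print(strip_label(token, label))
```

On Python 3.9 or later, `str.removeprefix` does the same job as the helper.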

