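Hadoop, Part 2: Hadoop Environment Configuration

Hadoop File Configuration

1. Go to the Hadoop directory and configure the Hadoop files

Configure the two files "hadoop-env.sh" and "slaves" (both are located in the "/opt/hadoop/etc/hadoop/" directory).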

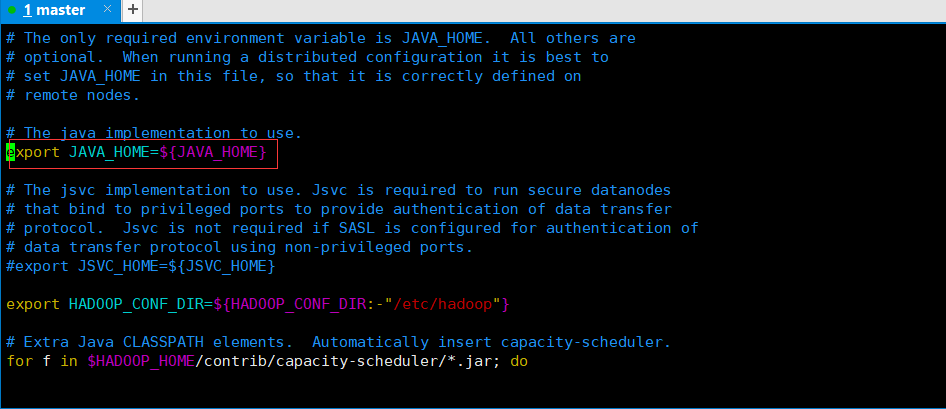

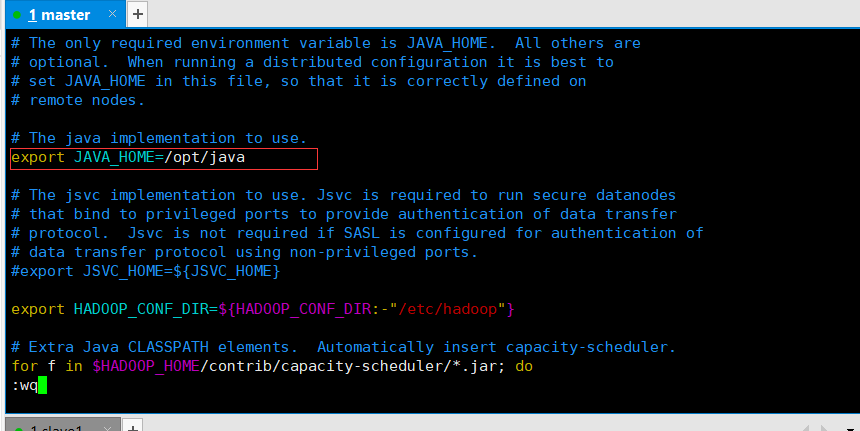

①: In hadoop-env.sh, change the Java path (JAVA_HOME) to the path of the local JDK installation

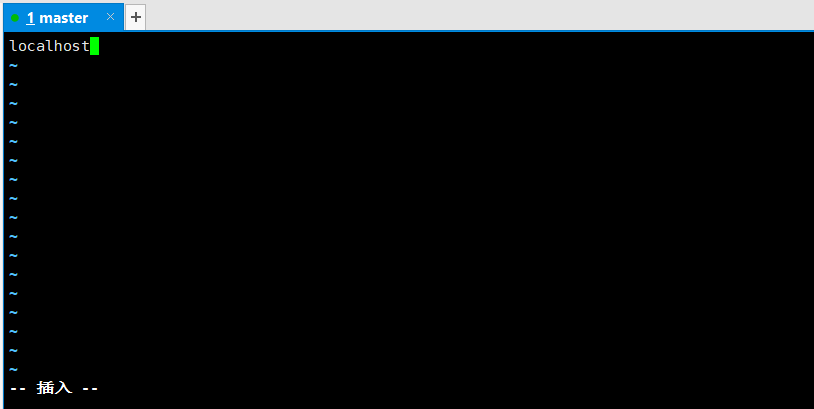

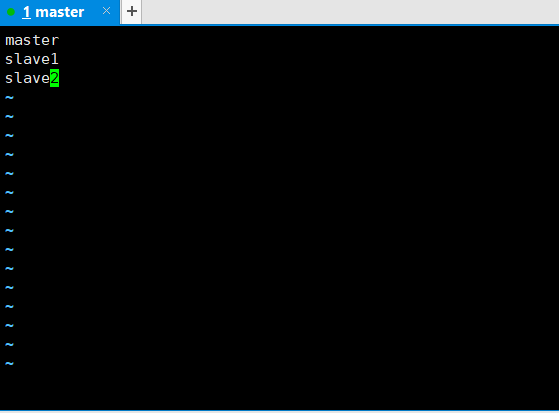
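The same edit can also be scripted instead of made by hand in an editor. The following is only a minimal sketch: `set_java_home` is a hypothetical helper (not part of Hadoop), and the JDK directory in the example comment is a placeholder, since the real path depends on where the JDK is installed on your machine.

```shell
#!/usr/bin/env bash
# Sketch: point hadoop-env.sh at an explicit JDK directory.
# set_java_home is a hypothetical helper, not a Hadoop command.
set_java_home() {
    local env_file="$1"   # path to hadoop-env.sh
    local jdk_dir="$2"    # JDK installation directory (assumed to be known)

    if grep -q '^export JAVA_HOME=' "$env_file"; then
        # Rewrite the existing export line; a .bak copy of the file is kept.
        sed -i.bak "s|^export JAVA_HOME=.*|export JAVA_HOME=${jdk_dir}|" "$env_file"
    else
        # No active export line found, so append one.
        printf 'export JAVA_HOME=%s\n' "$jdk_dir" >> "$env_file"
    fi
}

# Example call (the JDK path is a placeholder, adjust it to your system):
# set_java_home /opt/hadoop/etc/hadoop/hadoop-env.sh /path/to/your/jdk
```

Writing the path out explicitly is the usual choice here, because daemons started on other nodes over SSH may not see the JAVA_HOME of your login shell.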

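②: Write the names of the three hosts into the "slaves" file

Delete localhost and write the three host names, one per line (this specifies the three nodes that run the DataNode process).

Save and exit.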

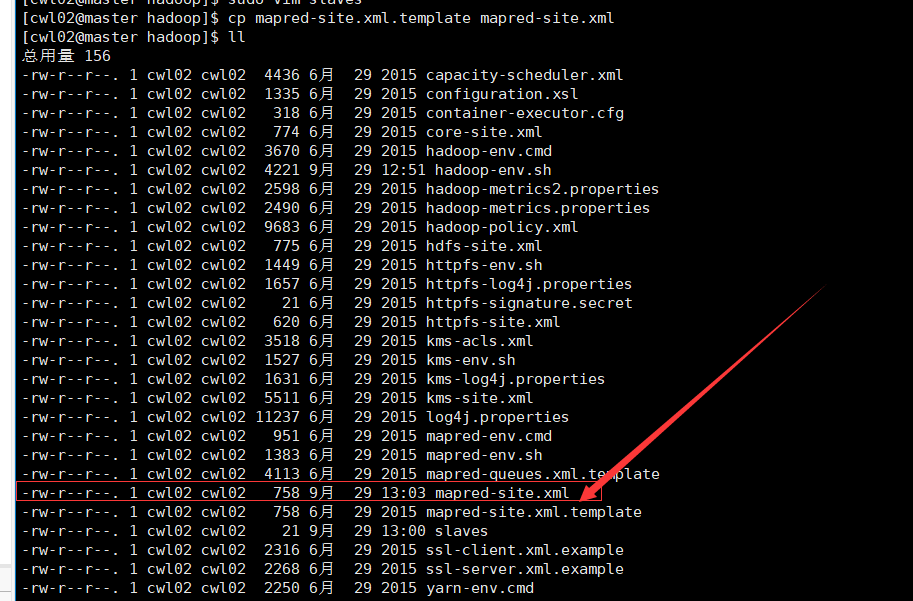
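For reference, the "slaves" file can also be written from the shell. This is a sketch under assumptions: `write_slaves` is a hypothetical helper, and the host names master, slave1 and slave2 are taken from the configuration files later in this article, so substitute your own if they differ.

```shell
#!/usr/bin/env bash
# Sketch: overwrite the slaves file with one host name per line.
# write_slaves is a hypothetical helper, not a Hadoop command.
write_slaves() {
    local slaves_file="$1"
    shift
    # Truncating the file also removes the default "localhost" entry.
    printf '%s\n' "$@" > "$slaves_file"
}

# Example call (host names assumed from the XML files in this article):
# write_slaves /opt/hadoop/etc/hadoop/slaves master slave1 slave2
```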

2. Edit the file "mapred-site.xml" (Hadoop ships only the template mapred-site.xml.template, so first copy it to mapred-site.xml)

cp mapred-site.xml.template mapred-site.xml

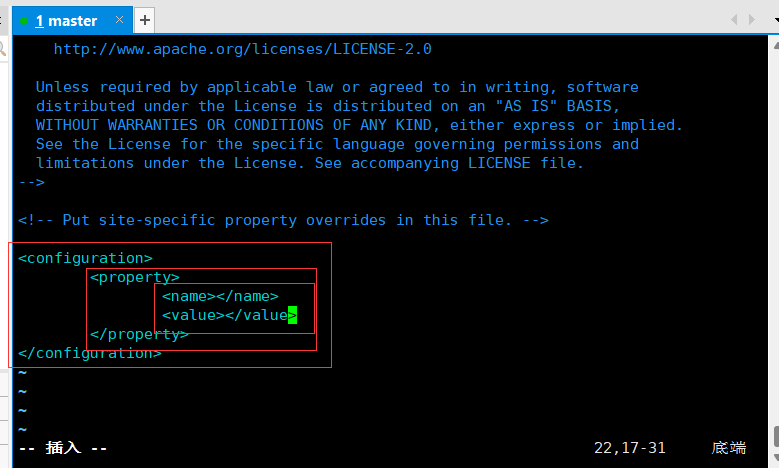

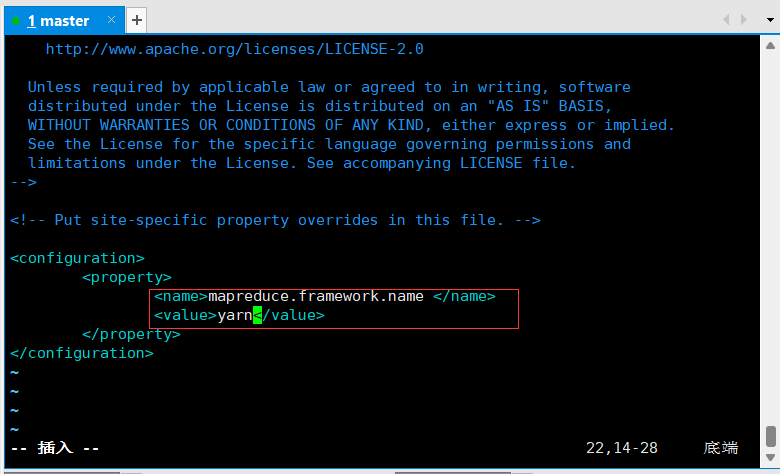

①: Edit mapred-site.xml (insert the key-value pairs, keeping this nesting order)

Open the file with "sudo vim mapred-site.xml" and specify that MapReduce runs on YARN.
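As a rough illustration of the nesting order, the sketch below writes a minimal mapred-site.xml: every `<property>` sits inside the root `<configuration>` element and holds one `<name>`/`<value>` pair. `mapreduce.framework.name` with the value `yarn` is the standard property for running MapReduce on YARN. The helper name `write_mapred_site` is hypothetical, and the function overwrites the target file, so treat it as a sketch rather than the exact file used in this article.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal mapred-site.xml that runs MapReduce on YARN.
# write_mapred_site is a hypothetical helper; it overwrites the target file.
write_mapred_site() {
    local target="$1"
    cat > "$target" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}

# Example call:
# write_mapred_site /opt/hadoop/etc/hadoop/mapred-site.xml
```

The same layout applies to the other *-site.xml files: the `<property>` entries listed for them also go inside a single `<configuration>` element.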

②: Edit hdfs-site.xml

hdfs-site.xml mainly configures the NameNode service nodes.

Contents of hdfs-site.xml:

<property>
  <name>dfs.namenode.name.dir</name>
  <value>/data/hadoop/hdfs/name</value>
</property>

<property>
  <name>dfs.datanode.data.dir</name>
  <value>/data/hadoop/hdfs/data</value>
</property>

<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>

<property>
  <name>dfs.permissions</name>
  <value>false</value>
</property>

<property>
  <name>dfs.nameservices</name>
  <value>ns1</value>
</property>

<property>
  <name>dfs.ha.namenodes.ns1</name>
  <value>nn1,nn2</value>
</property>

<property>
  <name>dfs.namenode.rpc-address.ns1.nn1</name>
  <value>master:9000</value>
</property>

<property>
  <name>dfs.namenode.http-address.ns1.nn1</name>
  <value>master:50070</value>
</property>

<property>
  <name>dfs.namenode.rpc-address.ns1.nn2</name>
  <value>slave1:9000</value>
</property>

<property>
  <name>dfs.namenode.http-address.ns1.nn2</name>
  <value>slave1:50070</value>
</property>

<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://master:8485;slave1:8485;slave2:8485/ns1</value>
</property>

<property>
  <name>dfs.journalnode.edits.dir</name>
  <value>/data/hadoop/journaldata</value>
</property>

<property>
  <name>dfs.ha.automatic-failover.enabled</name>
  <value>true</value>
</property>

<property>
  <name>dfs.client.failover.proxy.provider.ns1</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>

<property>
  <name>dfs.ha.fencing.methods</name>
  <value>
    sshfence
    shell(/bin/true)
  </value>
</property>

<property>
  <name>dfs.ha.fencing.ssh.private-key-files</name>
  <value>/etc/ssh/id_rsa</value>
</property>

<property>
  <name>dfs.ha.fencing.ssh.connect-timeout</name>
  <value>30000</value>
</property>

<property>
  <name>ha.zookeeper.quorum</name>
  <value>master:2181,slave1:2181,slave2:2181</value>
</property>

④: Configure yarn-site.xml

Contents of yarn-site.xml:

<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>

<property>
  <name>yarn.resourcemanager.ha.enabled</name>
  <value>true</value>
</property>

<property>
  <name>yarn.nodemanager.vmem-pmem-ratio</name>
  <value>3.0</value>
</property>

<property>
  <name>yarn.resourcemanager.cluster-id</name>
  <value>yrc</value>
</property>

<property>
  <name>yarn.resourcemanager.ha.rm-ids</name>
  <value>rm1,rm2</value>
</property>

<property>
  <name>yarn.resourcemanager.hostname.rm1</name>
  <value>master</value>
</property>

<property>
  <name>yarn.resourcemanager.hostname.rm2</name>
  <value>slave1</value>
</property>

<property>
  <name>yarn.resourcemanager.zk-address</name>
  <value>master:2181,slave1:2181,slave2:2181</value>
</property>

⑤: Configure core-site.xml

Contents of core-site.xml:

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://master:9000/ns</value>
</property>

<property>
  <name>hadoop.tmp.dir</name>
  <value>/opt/hadoop/tmp/hdfs/name</value>
</property>

<property>
  <name>ha.zookeeper.quorum</name>
  <value>master:2181,slave1:2181,slave2:2181</value>
</property>

3. Copy the configured hadoop folder to the other two nodes

Replace username with the login account on the target node:

sudo scp -r /opt/hadoop username@slave1:/opt/

sudo scp -r /opt/hadoop username@slave2:/opt/
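If there are more nodes, the copy step can be wrapped in a small loop. The following is a sketch under assumptions rather than the exact procedure used here: `copy_hadoop_to_nodes`, `REMOTE_USER` and `DRY_RUN` are hypothetical names introduced for illustration, the remote account defaults to the current local user, and that account is assumed to have SSH access and write permission on the target directory.

```shell
#!/usr/bin/env bash
# Sketch: copy the configured Hadoop directory to several nodes with scp.
# copy_hadoop_to_nodes, REMOTE_USER and DRY_RUN are hypothetical names.
copy_hadoop_to_nodes() {
    local src_dir="$1"      # e.g. /opt/hadoop
    local dest_parent="$2"  # e.g. /opt/
    shift 2
    # Assumption: the remote account defaults to the current local user.
    local remote_user="${REMOTE_USER:-$(id -un)}"
    local node
    for node in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            # Only print the command that would be run.
            echo "scp -r ${src_dir} ${remote_user}@${node}:${dest_parent}"
        else
            # Stop at the first node that fails.
            scp -r "$src_dir" "${remote_user}@${node}:${dest_parent}" || return 1
        fi
    done
}

# Example call (prints the commands without copying anything):
# DRY_RUN=1 copy_hadoop_to_nodes /opt/hadoop /opt/ slave1 slave2
```

Running it once with DRY_RUN=1 is a cheap way to check the host names and the target path before anything is copied.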

Next, configure ZooKeeper: see the separate article "zookeeper配置" (ZooKeeper configuration).

