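K8s Cluster Deployment

K8s Cluster Objects

Kubernetes objects are persistent entities in the Kubernetes system. Kubernetes itself is written in Go. Everything delivered into K8s is an object ("everything is an object"); these objects are also called the standard resources of K8s.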

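- Pod -- the smallest deployable unit in K8s; it wraps one or more containers.

- Namespace -- a namespace (default / kube-system / user-defined namespaces).

- Deployment -- a Pod controller (it declares, for example, how many replicas to run, the state they should start in, whether data volumes are mounted, where to pull the image from if it is missing, and how long to wait before killing a terminating Pod).

- DaemonSet -- a Pod controller that runs one Pod on every node (as many Pods as there are nodes); unlike a Deployment, there is no replica count to set.

- Service -- defines how an application in K8s is accessed; mostly used for access from inside the cluster.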

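- Ingress -- the entry point for traffic from outside the cluster; it exposes application services running inside the cluster to external clients. Available as a beta API since Kubernetes 1.1.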

- ...
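To make the Deployment description above concrete, here is a minimal sketch that prints a Deployment manifest from a shell function. The name `nginx-demo`, the image `nginx:1.17`, the replica count, and the helper name `print_deployment` are all hypothetical values chosen for illustration; they do not come from this deployment.

```shell
#!/bin/sh
# Print a minimal Deployment manifest on stdout.
# $1 = object name (also used as the Pod label), $2 = replica count.
# All concrete values here are illustrative assumptions.
print_deployment() {
  name="$1"
  replicas="$2"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
  namespace: default
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: nginx:1.17
        ports:
        - containerPort: 80
EOF
}

print_deployment nginx-demo 2
```

Once a cluster is running, the output could be piped to `kubectl apply -f -`. A DaemonSet manifest looks similar but has no `replicas` field, which matches the difference described in the list above.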

Main Components of a Kubernetes Cluster

- Master 节点 ( 主控节点 )

- etcd -- K8s 提供默认的存储系统,保存所有集群的数据, 使用时需要给 etcd 做高可用;

- apiserver -- * 相当于 K8s 的大脑, 协调整个集群的工作(谁来访问我, 调度给谁, 完成什么样的工作等);

- scheduler -- 资源调度器, 通过预选策略和优选策略实现, 一个 Pod 对应多个 运算节点时, 在哪个或哪几个运算节点上启动的策略; 还有高级特性"污点""容忍度"(例如有一个Pod需要调度, 一个节点打上的有污点, Pod容忍不了这个污点, 这个节点永远不被调度);

- controller-manager -- K8s 的大管家, 保证集群的Pod按照既定好的执行的( pod 指定跑到哪个或者哪几个节点上 或者 异常的处理等)

- Node 节点 ( 运算节点 )

- kubelet -- 是和底层 docker 引擎进行通信的 ( Pod 作为容器, 通过 kubelet 操作 运行, 杀死, 扩容等 );

- kube-proxy -- 维护 Service 对应的 C 网络 ( K8s 里面有三条网络 Service 的 C 网络; Pod 网络为容器网络; 宿主机网络为 Node 网络 );

- Addons 插件

- coredns -- 维护 Service 和 C网络的一个对应关系;

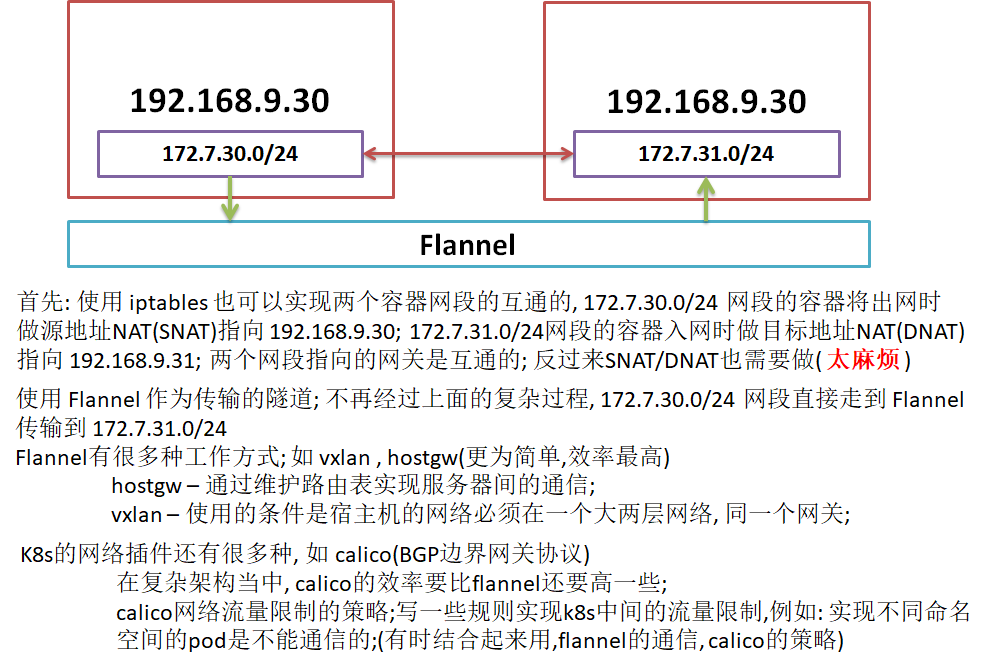

- flannel -- 维护 P 网络的;( 实现 Pod 网络能够跨宿主机进行通信);

- traefik -- Ingress 的一种软件实现 ( 集群内部交付的服务如何对集群外部提供服务 );

- dashboard -- 管理 K8s 集群的一个面板.

K8s Cluster Deployment Architecture

Cluster architecture diagram

An architecture close to production

Environment

- Infrastructure

| Hostname | Role | IP |
| --- | --- | --- |
| zx28.zxjr.com | K8s proxy node 1 | 192.168.9.28 |
| zx29.zxjr.com | K8s proxy node 2 | 192.168.9.29 |
| zx30.zxjr.com | K8s control-plane and compute node | 192.168.9.30 |
| zx31.zxjr.com | K8s control-plane and compute node | 192.168.9.31 |
| zx32.zxjr.com | K8s ops node (docker registry, etc.) | 192.168.9.32 |

- Hardware

5 VMs, each with at least 2 CPU cores and 2 GB of RAM (give the compute nodes more resources; the proxy nodes do not need much).

- Software

  - docker: v1.13.1 (v1.12.6 is considered more stable, but Kubernetes 1.15.2 requires Docker API version 1.26 or later; Kubernetes v1.13 can still use docker v1.12.6)

  - kubernetes: v1.15.2

  - etcd: v3.3.12 -- etcd-v3.3.12-linux-amd64.tar.gz

  - flannel: v0.11.0

  - harbor: v1.7.4

  - certificate signing tool CFSSL: R1.2

  - bind9: v9.11.9

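Preparing a Self-Hosted DNS Service

Preparing the Self-Signed Certificate Environment

Most K8s components communicate over HTTPS. This guide uses cfssl to issue certificates; openssl can be used as well.

On the ops host: install CFSSL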

    # Download the signing tools into /usr/bin and make them executable.
    # Alternatively, download them locally, upload them to the server, and rename them.
    curl -s -L -o /usr/bin/cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    curl -s -L -o /usr/bin/cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    curl -s -L -o /usr/bin/cfssl-certinfo https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    chmod +x /usr/bin/cfssl*

Create the JSON configuration file used to generate the CA certificate

    # Create the working directory for certificate signing
    mkdir /opt/certs
    cd /opt/certs
    # Create the JSON configuration for the CA
    vim ca-config.json
    {
        "signing": {
            "default": {
                "expiry": "175200h"
            },
            "profiles": {
                "server": {
                    "expiry": "175200h",
                    "usages": ["signing", "key encipherment", "server auth"]
                },
                "client": {
                    "expiry": "175200h",
                    "usages": ["signing", "key encipherment", "client auth"]
                },
                "peer": {
                    "expiry": "175200h",
                    "usages": ["signing", "key encipherment", "server auth", "client auth"]
                }
            }
        }
    }
    # Certificate types
    # client certificate: used by clients so the server can authenticate them, e.g. etcdctl / etcd proxy / fleetctl / the docker client
    # server certificate: used by servers so clients can verify the server's identity, e.g. the docker daemon / kube-apiserver
    # peer certificate: a two-way certificate, used for communication between etcd cluster members
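The `175200h` expiry used in the signing configuration is easier to reason about in days and years. A minimal sketch of the arithmetic, assuming the value is a whole number of hours with an `h` suffix and using 365-day years; the helper names `expiry_days` and `expiry_years` are hypothetical.

```shell
#!/bin/sh
# Convert a cfssl-style expiry such as "175200h" into whole days.
expiry_days() {
  hours="${1%h}"            # strip the trailing "h"
  echo $(( hours / 24 ))
}

# Convert the same value into whole 365-day years (leap days ignored).
expiry_years() {
  hours="${1%h}"
  echo $(( hours / 24 / 365 ))
}

echo "175200h = $(expiry_days 175200h) days, about $(expiry_years 175200h) years"
```

So certificates issued from these profiles are valid for roughly 20 years, which is convenient for a lab cluster but much longer than most production policies would allow.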

Create the JSON configuration file for the CA certificate signing request (CSR)

    # JSON configuration for the CA certificate signing request (CSR)
    vim ca-csr.json
    {
        "CN": "kubernetes-ca",
        "hosts": [
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "zxjr",
                "OU": "ops"
            }
        ],
        "ca": {
            "expiry": "175200h"
        }
    }
    # CN: Common Name. Very important: browsers use this field to verify that a site is legitimate, so it is usually set to the domain name.
    # C: Country
    # ST: State or province
    # L: Locality (region or city)
    # O: Organization Name (company name)
    # OU: Organization Unit Name (department)

Generate the CA certificate and private key

    # Generate the CA certificate and private key
    cfssl gencert -initca ca-csr.json |cfssljson -bare ca -
    2019/08/09 10:48:30 [INFO] generating a new CA key and certificate from CSR
    2019/08/09 10:48:30 [INFO] generate received request
    2019/08/09 10:48:30 [INFO] received CSR
    2019/08/09 10:48:30 [INFO] generating key: rsa-2048
    2019/08/09 10:48:31 [INFO] encoded CSR
    2019/08/09 10:48:31 [INFO] signed certificate with serial number 322319757095296222374087682570446651777008983245
    # This produces ca.pem, ca.csr, and ca-key.pem (the CA private key; keep it safe)
    ll /opt/certs/
    total 20
    -rw-r--r-- 1 root root  836 Aug  8 16:54 ca-config.json
    -rw-r--r-- 1 root root 1001 Aug  9 10:48 ca.csr
    -rw-r--r-- 1 root root  332 Aug  9 10:39 ca-csr.json
    -rw------- 1 root root 1675 Aug  9 10:48 ca-key.pem
    -rw-r--r-- 1 root root 1354 Aug  9 10:48 ca.pem

Deploying the Docker Environment

Docker must be installed on the control-plane/compute nodes and on the ops host (zx30.zxjr.com / zx31.zxjr.com / zx32.zxjr.com, i.e. 192.168.9.30 / 192.168.9.31 / 192.168.9.32).

On the ops host

    # Ops host: download and upload the docker packages first, or fetch them directly with wget
    cd /opt
    ll
    total 19580
    drwxr-xr-x 2 root root       93 Aug  9 10:48 certs
    -rw-r--r-- 1 root root 19509116 Aug 19 10:55 docker-engine-1.13.1-1.el7.centos.x86_64.rpm
    -rw-r--r-- 1 root root    29024 Aug 19 10:55 docker-engine-selinux-1.13.1-1.el7.centos.noarch.rpm
    # Install docker
    yum -y localinstall docker-engine-*
    # Create the configuration file
    mkdir /etc/docker
    vim /etc/docker/daemon.json
    {
        "graph": "/data/docker",
        "storage-driver": "overlay",
        "insecure-registries": ["registry.access.redhat.com","quay.io","harbor.zxjr.com"],
        "bip": "192.168.9.254/24",
        "exec-opts": ["native.cgroupdriver=systemd"],
        "live-restore": true
    }
    # The bip (docker bridge gateway address) should vary with the host's IP.
    # Caution: 192.168.9.254/24 lies inside the hosts' own 192.168.9.0/24 network;
    # the nodes later in this guide use a separate 172.7.x.1/24 range instead.
    # Create /data/docker; this is optional, docker creates it on first start
    mkdir -p /data/docker
    # Start docker; the unit file is /usr/lib/systemd/system/docker.service
    systemctl start docker
    systemctl enable docker
    # Inspect the /data/docker directory named in daemon.json
    ll /data/docker/
    total 0
    drwx------ 2 root root  6 Aug 12 11:06 containers
    drwx------ 3 root root 21 Aug 12 11:06 image
    drwxr-x--- 3 root root 19 Aug 12 11:06 network
    drwx------ 2 root root  6 Aug 12 11:06 overlay
    drwx------ 2 root root  6 Aug 12 11:06 swarm
    drwx------ 2 root root  6 Aug 12 11:06 tmp
    drwx------ 2 root root  6 Aug 12 11:06 trust
    drwx------ 2 root root 25 Aug 12 11:06 volumes
    # Show docker information
    docker info
    Containers: 0
     Running: 0
     Paused: 0
     Stopped: 0
    Images: 0
    Server Version: 1.13.1
    Storage Driver: overlay
     Backing Filesystem: xfs
    Logging Driver: json-file
    Cgroup Driver: systemd
    Plugins:
     Volume: local
     Network: null overlay host bridge
    Swarm: inactive
    Runtimes: runc
    Default Runtime: runc
    Security Options:
     seccomp
    Kernel Version: 3.10.0-693.el7.x86_64
    Operating System: CentOS Linux 7 (Core)
    OSType: linux
    Architecture: x86_64
    CPUs: 2
    Total Memory: 1.796 GiB
    Name: zx32.zxjr.com
    ID: AKK4:GSZS:I7JH:EMPF:TG57:OZUL:Y3BN:N5UE:IA4A:K6NB:TML2:63RU
    Docker Root Dir: /data/docker
    Debug Mode (client): false
    Debug Mode (server): false
    Registry: https://index.docker.io/v1/
    Insecure Registries:
     harbor.zxjr.com
     quay.io
     registry.access.redhat.com
     127.0.0.0/8

On the two control-plane/compute nodes (zx30, zx31)

    # Same procedure: upload the docker rpm packages
    cd /opt/
    ll
    total 19580
    -rw-r--r-- 1 root root 19509116 Aug 19 10:55 docker-engine-1.13.1-1.el7.centos.x86_64.rpm
    -rw-r--r-- 1 root root    29024 Aug 19 10:55 docker-engine-selinux-1.13.1-1.el7.centos.noarch.rpm
    # Install docker
    yum localinstall -y docker-engine-*
    # Edit the daemon.json configuration file
    mkdir -p /etc/docker
    vim /etc/docker/daemon.json
    {
        "graph": "/data/docker",
        "storage-driver": "overlay",
        "insecure-registries": ["registry.access.redhat.com","quay.io","harbor.zxjr.com"],
        "bip": "172.7.30.1/24",
        "exec-opts": ["native.cgroupdriver=systemd"],
        "live-restore": true
    }
    # The file above is for 192.168.9.30; the one below is for 192.168.9.31.
    # The bip follows the host IP: its third octet is the last octet of the host address.
    {
        "graph": "/data/docker",
        "storage-driver": "overlay",
        "insecure-registries": ["registry.access.redhat.com","quay.io","harbor.zxjr.com"],
        "bip": "172.7.31.1/24",
        "exec-opts": ["native.cgroupdriver=systemd"],
        "live-restore": true
    }
    # Start docker and enable it at boot
    systemctl start docker
    systemctl enable docker
    # Show docker information
    docker info
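The two node configurations follow one rule: the third octet of `bip` equals the last octet of the node's IP. A minimal sketch of that rule, assuming the `172.7` prefix used on zx30 and zx31; the helper name `bip_for_host` is hypothetical.

```shell
#!/bin/sh
# Derive the docker bridge address ("bip") for a node from its host IP,
# following the pattern 172.7.<last octet of host IP>.1/24.
bip_for_host() {
  last_octet="${1##*.}"     # keep everything after the final dot
  echo "172.7.${last_octet}.1/24"
}

bip_for_host 192.168.9.30   # prints 172.7.30.1/24
bip_for_host 192.168.9.31   # prints 172.7.31.1/24
```

Tying the Pod subnet to the host address makes it possible to tell from a container IP which node it runs on, which helps when debugging cross-host traffic.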

Deploying a Private Docker Image Registry -- Harbor

On the ops host zx32.zxjr.com (192.168.9.32)

    # Download the Harbor offline installer package first
    cd /opt/src/
    ll
    total 559520
    -rw-r--r-- 1 root root  19509116 Aug 19 10:55 docker-engine-1.13.1-1.el7.centos.x86_64.rpm
    -rw-r--r-- 1 root root     29024 Aug 19 10:55 docker-engine-selinux-1.13.1-1.el7.centos.noarch.rpm
    -rw-r--r-- 1 root root 577920653 Aug 12 15:53 harbor-offline-installer-v1.7.4.tgz
    # Install docker-compose; it depends on the epel-release repository
    yum -y install epel-release
    yum -y install python-pip
    # Upgrade pip if the installation reports errors
    pip install --upgrade pip
    pip install docker-compose
    # Unpack the Harbor package
    tar xf harbor-offline-installer-v1.7.4.tgz -C /opt/src/
    # Create a symlink for Harbor to make later upgrades easier
    ln -s /opt/src/harbor/ /opt/harbor
    ll /opt/ |grep harbor
    lrwxrwxrwx 1 root root 16 Aug 12 16:07 harbor -> /opt/src/harbor/
    # Configure harbor.cfg
    vim /opt/harbor/harbor.cfg
    ... ...
    hostname = harbor.zxjr.com
    ... ...
    # Configure docker-compose.yml: free ports 80 and 443 on the host by mapping them to 180 and 1443
    vim /opt/harbor/docker-compose.yml
    ... ...
        ports:
          - 180:80
          - 1443:443
          - 4443:4443
    ... ...
    # Install Harbor
    cd /opt/harbor/
    ./install.sh
    # Check that Harbor started
    docker-compose ps
           Name                     Command                  State                                  Ports
    ------------------------------------------------------------------------------------------------
    harbor-adminserver   /harbor/start.sh                 Up (healthy)
    harbor-core          /harbor/start.sh                 Up (healthy)
    harbor-db            /entrypoint.sh postgres          Up (healthy)   5432/tcp
    harbor-jobservice    /harbor/start.sh                 Up
    harbor-log           /bin/sh -c /usr/local/bin/ ...   Up (healthy)   127.0.0.1:1514->10514/tcp
    harbor-portal        nginx -g daemon off;             Up (healthy)   80/tcp
    nginx                nginx -g daemon off;             Up (healthy)   0.0.0.0:1443->443/tcp, 0.0.0.0:4443->4443/tcp, 0.0.0.0:180->80/tcp
    redis                docker-entrypoint.sh redis ...   Up             6379/tcp
    registry             /entrypoint.sh /etc/regist ...   Up (healthy)   5000/tcp
    registryctl          /harbor/start.sh                 Up (healthy)
    # Add a record for harbor.zxjr.com on the DNS server: through the web UI if there is one, otherwise in the zone file
    cat /var/named/zxjr.com.zone
    ... ...
    dns        60 IN A 192.168.9.28
    harbor     60 IN A 192.168.9.32
    zx28       60 IN A 192.168.9.28
    zx29       60 IN A 192.168.9.29
    zx30       60 IN A 192.168.9.30
    zx31       60 IN A 192.168.9.31
    zx32       60 IN A 192.168.9.32
    # Verify the resolution
    dig -t A harbor.zxjr.com @192.168.9.28 +short
    192.168.9.32

Install and Configure Nginx

    # Install
    yum -y install nginx
    # Configure
    vim /etc/nginx/conf.d/harbor.zxjr.com.conf
    server {
        listen       80;
        server_name  harbor.zxjr.com;

        client_max_body_size 1000m;

        location / {
            proxy_pass http://127.0.0.1:180;
        }
    }
    server {
        listen       443 ssl;
        server_name  harbor.zxjr.com;

        ssl_certificate "certs/harbor.zxjr.com.pem";
        ssl_certificate_key "certs/harbor.zxjr.com-key.pem";
        ssl_session_cache shared:SSL:1m;
        ssl_session_timeout  10m;
        ssl_ciphers HIGH:!aNULL:!MD5;
        ssl_prefer_server_ciphers on;
        client_max_body_size 1000m;

        location / {
            proxy_pass http://127.0.0.1:180;
        }
    }
    # Check the configuration with nginx -t; it fails because the certificate has not been issued yet
    nginx -t
    nginx: [emerg] BIO_new_file("/etc/nginx/certs/harbor.zxjr.com.pem") failed (SSL: error:02001002:system library:fopen:No such file or directory:fopen('/etc/nginx/certs/harbor.zxjr.com.pem','r') error:2006D080:BIO routines:BIO_new_file:no such file)
    nginx: configuration file /etc/nginx/nginx.conf test failed
    # Issue the certificate
    cd /opt/certs/
    (umask 077;openssl genrsa -out harbor.zxjr.com-key.pem)
    ll harbor.zxjr.com-key.pem
    -rw------- 1 root root 1675 Aug 13 09:47 harbor.zxjr.com-key.pem
    openssl req -new -key harbor.zxjr.com-key.pem -out harbor.zxjr.com.csr -subj "/CN=harbor.zxjr.com/ST=Beijing/L=Beijing/O=zxjr/OU=ops"
    ll harbor.zxjr.com.csr
    -rw-r--r-- 1 root root 989 Aug 13 09:51 harbor.zxjr.com.csr
    openssl x509 -req -in harbor.zxjr.com.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out harbor.zxjr.com.pem -days 3650
    Signature ok
    subject=/CN=harbor.zxjr.com/ST=Beijing/L=Beijing/O=zxjr/OU=ops
    Getting CA Private Key
    # Copy the issued certificate and key to the path nginx is configured with
    mkdir /etc/nginx/certs
    cp harbor.zxjr.com-key.pem /etc/nginx/certs/
    cp harbor.zxjr.com.pem /etc/nginx/certs/
    # Check nginx again
    nginx -t
    nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
    nginx: configuration file /etc/nginx/nginx.conf test is successful
    # Start nginx
    nginx
    # On the test machine, add a hosts entry resolving harbor.zxjr.com to 192.168.9.32;
    # browsing to harbor.zxjr.com then lands on the Harbor login page
    # Harbor admin login password
    vim /opt/harbor/harbor.cfg
    ... ...
    #The initial password of Harbor admin, only works for the first time when Harbor starts.
    #It has no effect after the first launch of Harbor.
    #Change the admin password from UI after launching Harbor.
    harbor_admin_password = Harbor12345
    ... ...

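K8s Control-Plane Node Installation and Deployment

Deploying the Master Node Services

- Deploy the etcd cluster

- Deploy the kube-apiserver cluster

- Deploy the controller-manager cluster

- Deploy the kube-scheduler cluster

Deploying the etcd Cluster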

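etcd is a key-value store used for shared configuration and service discovery. Its four core characteristics:

- Simple: an HTTP+JSON based API that can be used with nothing more than curl

- Secure: optional SSL client certificate authentication

- Fast: each instance supports on the order of a thousand writes per second

- Reliable: properly distributed, using the Raft consensus algorithm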
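Raft only commits a write once a majority of members has accepted it, which is why etcd clusters are normally built with an odd number of members. A small sketch of the arithmetic; the function names `quorum` and `fault_tolerance` are hypothetical.

```shell
#!/bin/sh
# Smallest majority of an n-member cluster.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that may fail while a majority is still reachable.
fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

For the three-member cluster deployed in this guide, the quorum is 2 and one member can be lost without affecting availability. A fourth member would raise the quorum to 3 without tolerating any additional failure.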

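| Hostname | Role | IP |
| --- | --- | --- |
| zx29.zxjr.com | etcd leader | 192.168.9.29 |
| zx30.zxjr.com | etcd follower | 192.168.9.30 |
| zx31.zxjr.com | etcd follower | 192.168.9.31 |

The leader is elected by Raft at runtime; the roles above are the ones observed in this deployment.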

    # First, on the ops host, create the JSON configuration for the certificate signing request (CSR); "peer" means two-way certificate verification.
    # The hosts list may contain extra IPs; here 9.28 is a spare, and 9.29/9.30/9.31 are the ones in use.
    cd /opt/certs/
    vim etcd-peer-csr.json
    {
        "CN": "etcd-peer",
        "hosts": [
            "192.168.9.28",
            "192.168.9.29",
            "192.168.9.30",
            "192.168.9.31"
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "zxjr",
                "OU": "ops"
            }
        ]
    }
    # Generate the etcd certificate and private key
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssljson -bare etcd-peer
    ll etcd-peer*
    -rw-r--r-- 1 root root 1066 Aug 13 14:31 etcd-peer.csr
    -rw-r--r-- 1 root root  358 Aug 13 14:28 etcd-peer-csr.json
    -rw------- 1 root root 1679 Aug 13 14:31 etcd-peer-key.pem
    -rw-r--r-- 1 root root 1436 Aug 13 14:31 etcd-peer.pem

    # ******************************* On zx29.zxjr.com *********************************
    # Create the etcd user
    useradd -s /sbin/nologin -M etcd
    # Download (wget) or upload the software
    mkdir /opt/src/
    ll /opt/src
    -rw-r--r-- 1 root root 11350736 Aug 13 11:22 etcd-v3.3.12-linux-amd64.tar.gz
    cd /opt/src
    tar xf etcd-v3.3.12-linux-amd64.tar.gz
    ln -s /opt/src/etcd-v3.3.12-linux-amd64 /opt/etcd
    ll /opt/
    total 0
    lrwxrwxrwx 1 root root 33 Aug 13 14:37 etcd -> /opt/src/etcd-v3.3.12-linux-amd64
    drwxr-xr-x 3 root root 77 Aug 13 14:36 src
    # Create directories, then copy the certificate and private key
    mkdir -p /data/etcd /data/logs/etcd-server /opt/etcd/certs
    # Copy ca.pem, etcd-peer.pem, and etcd-peer-key.pem generated on the ops host into /opt/etcd/certs on this host.
    # *** The private key file must have mode 600.
    cd /opt/etcd/certs
    scp 192.168.9.32:/opt/certs/ca.pem ./
    scp 192.168.9.32:/opt/certs/etcd-peer.pem ./
    scp 192.168.9.32:/opt/certs/etcd-peer-key.pem ./
    ll
    total 12
    -rw-r--r-- 1 root root 1354 Aug 13 14:44 ca.pem
    -rw------- 1 root root 1679 Aug 13 14:45 etcd-peer-key.pem
    -rw-r--r-- 1 root root 1436 Aug 13 14:45 etcd-peer.pem
    # Create the etcd startup script; it differs per host, so mind the name and the IP addresses
    vim /opt/etcd/etcd-server-startup.sh
    #!/bin/sh
    ./etcd --name etcd-server-9-29 \
           --data-dir /data/etcd/etcd-server \
           --listen-peer-urls https://192.168.9.29:2380 \
           --listen-client-urls https://192.168.9.29:2379,http://127.0.0.1:2379 \
           --quota-backend-bytes 8000000000 \
           --initial-advertise-peer-urls https://192.168.9.29:2380 \
           --advertise-client-urls https://192.168.9.29:2379,http://127.0.0.1:2379 \
           --initial-cluster etcd-server-9-29=https://192.168.9.29:2380,etcd-server-9-30=https://192.168.9.30:2380,etcd-server-9-31=https://192.168.9.31:2380 \
           --ca-file ./certs/ca.pem \
           --cert-file ./certs/etcd-peer.pem \
           --key-file ./certs/etcd-peer-key.pem \
           --client-cert-auth \
           --trusted-ca-file ./certs/ca.pem \
           --peer-ca-file ./certs/ca.pem \
           --peer-cert-file ./certs/etcd-peer.pem \
           --peer-key-file ./certs/etcd-peer-key.pem \
           --peer-client-cert-auth \
           --peer-trusted-ca-file ./certs/ca.pem \
           --log-output stdout
    chmod +x /opt/etcd/etcd-server-startup.sh
    # Install supervisord
    yum -y install supervisor
    # Create the supervisor program configuration for etcd-server; it differs per host
    vim /etc/supervisord.d/etcd-server.ini
    [program:etcd-server-9-29]
    command=/opt/etcd/etcd-server-startup.sh                        ; the program (relative uses PATH, can take args)
    numprocs=1                                                      ; number of processes copies to start (def 1)
    directory=/opt/etcd                                             ; directory to cwd to before exec (def no cwd)
    autostart=true                                                  ; start at supervisord start (default: true)
    autorestart=true                                                ; restart at unexpected quit (default: true)
    startsecs=22                                                    ; number of secs prog must stay running (def. 1)
    startretries=3                                                  ; max # of serial start failures (default 3)
    exitcodes=0,2                                                   ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                                 ; signal used to kill process (default TERM)
    stopwaitsecs=10                                                 ; max num secs to wait b4 SIGKILL (default 10)
    user=etcd                                                       ; setuid to this UNIX account to run the program
    redirect_stderr=false                                           ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/etcd-server/etcd.stdout.log           ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                                    ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                                        ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                                     ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                                     ; emit events on stdout writes (default false)
    stderr_logfile=/data/logs/etcd-server/etcd.stderr.log           ; stderr log path, NONE for none; default AUTO
    stderr_logfile_maxbytes=64MB                                    ; max # logfile bytes b4 rotation (default 50MB)
    stderr_logfile_backups=4                                        ; # of stderr logfile backups (default 10)
    stderr_capture_maxbytes=1MB                                     ; number of bytes in 'capturemode' (default 0)
    stderr_events_enabled=false                                     ; emit events on stderr writes (default false)
    # Start supervisor and enable it at boot
    systemctl start supervisord
    systemctl enable supervisord
    # Change the owner and group of the directories to etcd
    chown -R etcd:etcd /data/etcd /data/logs/etcd-server /opt/etcd
    # Start etcd and check it; if it fails to start, read /data/logs/etcd-server/etcd.stdout.log
    supervisorctl start all
    supervisorctl status
    etcd-server-9-29                 RUNNING   pid 6668, uptime 0:05:49

    # *************************** Repeat the zx29.zxjr.com deployment on zx30.zxjr.com / zx31.zxjr.com ****************************
    # On those two hosts, adjust /opt/etcd/etcd-server-startup.sh and /etc/supervisord.d/etcd-server.ini for the local host.
    # Once all three are deployed, check the cluster state; the output below shows isLeader=true for 192.168.9.29.
    /opt/etcd/etcdctl cluster-health
    member 14528a1741b0792c is healthy: got healthy result from http://127.0.0.1:2379
    member 2122f1d3d5e5fcf8 is healthy: got healthy result from http://127.0.0.1:2379
    member d93531a3b8f59739 is healthy: got healthy result from http://127.0.0.1:2379
    cluster is healthy
    /opt/etcd/etcdctl member list
    14528a1741b0792c: name=etcd-server-9-30 peerURLs=https://192.168.9.30:2380 clientURLs=http://127.0.0.1:2379,https://192.168.9.30:2379 isLeader=false
    2122f1d3d5e5fcf8: name=etcd-server-9-31 peerURLs=https://192.168.9.31:2380 clientURLs=http://127.0.0.1:2379,https://192.168.9.31:2379 isLeader=false
    d93531a3b8f59739: name=etcd-server-9-29 peerURLs=https://192.168.9.29:2380 clientURLs=http://127.0.0.1:2379,https://192.168.9.29:2379 isLeader=true
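Reading `isLeader` by eye works for three members, but the same check is easy to script. A minimal sketch that extracts the leader's name from `etcdctl member list` output, assuming the v2-style line format shown above; the function name `etcd_leader` is hypothetical, and the sample input is copied from this guide.

```shell
#!/bin/sh
# Read `etcdctl member list` output on stdin and print the name of the
# member whose line contains isLeader=true.
etcd_leader() {
  awk '/isLeader=true/ {
    for (i = 1; i <= NF; i++)
      if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i }
  }'
}

# Sample input taken from the member list shown in this guide.
etcd_leader <<'EOF'
14528a1741b0792c: name=etcd-server-9-30 peerURLs=https://192.168.9.30:2380 clientURLs=http://127.0.0.1:2379,https://192.168.9.30:2379 isLeader=false
2122f1d3d5e5fcf8: name=etcd-server-9-31 peerURLs=https://192.168.9.31:2380 clientURLs=http://127.0.0.1:2379,https://192.168.9.31:2379 isLeader=false
d93531a3b8f59739: name=etcd-server-9-29 peerURLs=https://192.168.9.29:2380 clientURLs=http://127.0.0.1:2379,https://192.168.9.29:2379 isLeader=true
EOF
```

On a live host the function would be fed from the real command, for example `/opt/etcd/etcdctl member list | etcd_leader`.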

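Deploying the kube-apiserver Cluster

- Control-plane apiserver deployment in detail

| Hostname | Role | IP |
| --- | --- | --- |
| zx30.zxjr.com | kube-apiserver | 192.168.9.30 |
| zx31.zxjr.com | kube-apiserver | 192.168.9.31 |

Overview

kube-apiserver is one of the most important core components of K8s. Its main functions are:

- Provide the REST API for cluster management, including authentication and authorization, data validation, and cluster state changes;

- Provide the hub for data exchange and communication between the other modules.

Note: other modules query or modify data through the API server; only the API server operates on the etcd database directly.

How it works

kube-apiserver serves the K8s REST API, implements security functions such as authentication, authorization, and admission control, and is also responsible for persisting cluster state.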

    # ******************* On the ops host zx32.zxjr.com: issue the client and kube-apiserver certificates ********************
    # Create the JSON configuration for the certificate signing request (CSR)
    cd /opt/certs
    vim client-csr.json
    {
        "CN": "k8s-node",
        "hosts": [
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "zxjr",
                "OU": "ops"
            }
        ]
    }
    # Generate the client certificate and private key
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssljson -bare client
    # List the generated files
    ll
    total 68
    ... ...
    -rw-r--r-- 1 root root  993 Aug 14 11:20 client.csr
    -rw-r--r-- 1 root root  280 Aug 14 11:19 client-csr.json
    -rw------- 1 root root 1679 Aug 14 11:20 client-key.pem
    -rw-r--r-- 1 root root 1371 Aug 14 11:20 client.pem
    ... ...
    # Create the CSR JSON for the apiserver. The hosts list may contain extra IPs,
    # so that the apiserver can be redeployed on another server if one fails later.
    vim apiserver-csr.json
    {
        "CN": "apiserver",
        "hosts": [
            "127.0.0.1",
            "10.4.0.1",
            "kubernetes.default",
            "kubernetes.default.svc",
            "kubernetes.default.svc.cluster",
            "kubernetes.default.svc.cluster.local",
            "192.168.9.33",
            "192.168.9.30",
            "192.168.9.31",
            "192.168.9.34"
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "zxjr",
                "OU": "ops"
            }
        ]
    }
    # Generate the kube-apiserver certificate and private key
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssljson -bare apiserver
    # List the generated files
    ll |grep apiserver
    -rw-r--r-- 1 root root 1245 Aug 14 11:23 apiserver.csr
    -rw-r--r-- 1 root root  574 Aug 14 11:23 apiserver-csr.json
    -rw------- 1 root root 1675 Aug 14 11:23 apiserver-key.pem
    -rw-r--r-- 1 root root 1598 Aug 14 11:23 apiserver.pem

    # *************************** Deploy kubernetes on zx30.zxjr.com and zx31.zxjr.com ***********************
    # Upload the binary package to /opt/src and unpack it
    cd /opt/src
    ls
    kubernetes-server-linux-amd64.tar.gz
    tar xf kubernetes-server-linux-amd64.tar.gz
    # Rename the unpacked directory to include the version, which is easier to read
    mv kubernetes/ kubernetes-1.15.2/
    # Create a symlink to make later upgrades easier
    ln -s /opt/src/kubernetes-1.15.2/ /opt/kubernetes
    # Enter /opt/kubernetes/server/bin
    cd /opt/kubernetes/server/bin
    # Remove the unneeded files ending in .tar and tag
    rm -f *.tar *tag
    # Symlink kubectl into /usr/local/bin
    ln -s /opt/kubernetes/server/bin/kubectl /usr/local/bin/kubectl
    # Create the certs directory for the certificates
    mkdir certs && cd certs
    # Copy the following certificates and private keys into certs; private key files must have mode 600
    ll /opt/kubernetes/server/bin/certs
    total 24
    -rw------- 1 root root 1675 Aug 14 13:52 apiserver-key.pem
    -rw-r--r-- 1 root root 1598 Aug 14 13:52 apiserver.pem
    -rw------- 1 root root 1675 Aug 14 13:51 ca-key.pem
    -rw-r--r-- 1 root root 1354 Aug 14 13:51 ca.pem
    -rw------- 1 root root 1679 Aug 14 13:52 client-key.pem
    -rw-r--r-- 1 root root 1371 Aug 14 13:52 client.pem
    # Create the conf directory and add the audit policy audit.yaml
    mkdir ../conf && cd ../conf
    vim audit.yaml
    apiVersion: audit.k8s.io/v1beta1 # This is required.
    kind: Policy
    # Don't generate audit events for all requests in RequestReceived stage.
    omitStages:
      - "RequestReceived"
    rules:
      # Log pod changes at RequestResponse level
      - level: RequestResponse
        resources:
        - group: ""
          # Resource "pods" doesn't match requests to any subresource of pods,
          # which is consistent with the RBAC policy.
          resources: ["pods"]
      # Log "pods/log", "pods/status" at Metadata level
      - level: Metadata
        resources:
        - group: ""
          resources: ["pods/log", "pods/status"]

      # Don't log requests to a configmap called "controller-leader"
      - level: None
        resources:
        - group: ""
          resources: ["configmaps"]
          resourceNames: ["controller-leader"]

      # Don't log watch requests by the "system:kube-proxy" on endpoints or services
      - level: None
        users: ["system:kube-proxy"]
        verbs: ["watch"]
        resources:
        - group: "" # core API group
          resources: ["endpoints", "services"]

      # Don't log authenticated requests to certain non-resource URL paths.
      - level: None
        userGroups: ["system:authenticated"]
        nonResourceURLs:
        - "/api*" # Wildcard matching.
        - "/version"

      # Log the request body of configmap changes in kube-system.
      - level: Request
        resources:
        - group: "" # core API group
          resources: ["configmaps"]
        # This rule only applies to resources in the "kube-system" namespace.
        # The empty string "" can be used to select non-namespaced resources.
        namespaces: ["kube-system"]

      # Log configmap and secret changes in all other namespaces at the Metadata level.
      - level: Metadata
        resources:
        - group: "" # core API group
          resources: ["secrets", "configmaps"]

      # Log all other resources in core and extensions at the Request level.
      - level: Request
        resources:
        - group: "" # core API group
        - group: "extensions" # Version of group should NOT be included.

      # A catch-all rule to log all other requests at the Metadata level.
      - level: Metadata
        # Long-running requests like watches that fall under this rule will not
        # generate an audit event in RequestReceived.
        omitStages:
          - "RequestReceived"
    # Create the startup script and make it executable.
    # service-cluster-ip-range must not overlap the node network or the Pod network.
    cd /opt/kubernetes/server/bin
    vim kube-apiserver.sh
    #!/bin/bash
    # --audit-log-path            where the audit log is written
    # --audit-policy-file         the audit policy file
    # --authorization-mode        authorization mode; there are several, RBAC is the most common and the officially recommended one
    # --service-cluster-ip-range  K8s has three networks; this range does not conflict with the other two (node network 192.x, Pod network 172.x)
    # --service-node-port-range   the NodePort port range
    # --target-ram-mb             memory budget in MB
    ./kube-apiserver \
      --apiserver-count 2 \
      --audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \
      --audit-policy-file ./conf/audit.yaml \
      --authorization-mode RBAC \
      --client-ca-file ./certs/ca.pem \
      --requestheader-client-ca-file ./certs/ca.pem \
      --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \
      --etcd-cafile ./certs/ca.pem \
      --etcd-certfile ./certs/client.pem \
      --etcd-keyfile ./certs/client-key.pem \
      --etcd-servers https://192.168.9.29:2379,https://192.168.9.30:2379,https://192.168.9.31:2379 \
      --service-account-key-file ./certs/ca-key.pem \
      --service-cluster-ip-range 10.4.0.0/16 \
      --service-node-port-range 3000-29999 \
      --target-ram-mb=1024 \
      --kubelet-client-certificate ./certs/client.pem \
      --kubelet-client-key ./certs/client-key.pem \
      --log-dir /data/logs/kubernetes/kube-apiserver \
      --tls-cert-file ./certs/apiserver.pem \
      --tls-private-key-file ./certs/apiserver-key.pem \
      --v 2
    chmod +x kube-apiserver.sh
    # Create the kubernetes log directory
    mkdir -p /data/logs/kubernetes/kube-apiserver
    # Create the supervisor configuration
    vim /etc/supervisord.d/kube-apiserver.ini
    [program:kube-apiserver]
    command=/opt/kubernetes/server/bin/kube-apiserver.sh            ; the program (relative uses PATH, can take args)
    numprocs=1                                                      ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/server/bin                            ; directory to cwd to before exec (def no cwd)
    autostart=true                                                  ; start at supervisord start (default: true)
    autorestart=true                                                ; restart at unexpected quit (default: true)
    startsecs=22                                                    ; number of secs prog must stay running (def. 1)
    startretries=3                                                  ; max # of serial start failures (default 3)
    exitcodes=0,2                                                   ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                                 ; signal used to kill process (default TERM)
    stopwaitsecs=10                                                 ; max num secs to wait b4 SIGKILL (default 10)
    user=root                                                       ; setuid to this UNIX account to run the program
    redirect_stderr=false                                           ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log        ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                                    ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                                        ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                                     ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                                     ; emit events on stdout writes (default false)
    stderr_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stderr.log        ; stderr log path, NONE for none; default AUTO
    stderr_logfile_maxbytes=64MB                                    ; max # logfile bytes b4 rotation (default 50MB)
    stderr_logfile_backups=4                                        ; # of stderr logfile backups (default 10)
    stderr_capture_maxbytes=1MB                                     ; number of bytes in 'capturemode' (default 0)
    stderr_events_enabled=false                                     ; emit events on stderr writes (default false)
    # Start the service and check it
    supervisorctl update
    supervisorctl status
    # If it did not start, read the error log, fix the problem, and start it again
    supervisorctl start kube-apiserver
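The apiserver certificate above lists `10.4.0.1` in `hosts`, and the startup script sets `--service-cluster-ip-range 10.4.0.0/16`. The two are linked: the built-in `kubernetes` Service is assigned the first address of the service range, so in-cluster clients reach the apiserver at that IP and it has to appear in the certificate. A minimal sketch of the calculation, assuming the CIDR is given with an aligned network address; the helper names are hypothetical.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs="$IFS"
  IFS=.
  set -- $1                 # split the address into four octets
  IFS="$old_ifs"
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Convert an integer back to dotted-quad notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# First usable address of a CIDR block: network address + 1.
first_ip() {
  base=$(ip_to_int "${1%/*}")
  int_to_ip $(( base + 1 ))
}

first_ip 10.4.0.0/16        # prints 10.4.0.1
```

If the service range is ever changed, the certificate's `hosts` list needs the new first address and the certificate has to be reissued.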

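Install, deploy, start, and check kube-apiserver in the same way on every host planned for the cluster.

- Control-plane apiserver proxy deployment

| Hostname | Role | IP |
| --- | --- | --- |
| zx28.zxjr.com | nginx+keepalived | 192.168.9.28 |
| zx29.zxjr.com | nginx+keepalived | 192.168.9.29 |

VIP -- 192.168.9.33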

    # Configure a layer-4 reverse proxy on zx28.zxjr.com / zx29.zxjr.com
    # nginx acts as the reverse proxy; add the stream block below to the nginx configuration, identically on both servers
    vim /etc/nginx/nginx.conf
    ... ...
    http {
        ... ...
    }
    stream {
        upstream kube-apiserver {
            server 192.168.9.30:6443     max_fails=3 fail_timeout=30s;
            server 192.168.9.31:6443     max_fails=3 fail_timeout=30s;
        }
        server {
            listen 7443;
            proxy_connect_timeout 2s;
            proxy_timeout 900s;
            proxy_pass kube-apiserver;
        }
    }
    ... ...
    # Start nginx and enable it at boot
    systemctl start nginx
    systemctl enable nginx
    # Check that nginx is listening on port 7443
    netstat -anpt |grep 7443
    tcp        0      0 0.0.0.0:7443            0.0.0.0:*               LISTEN      8772/nginx: master
    # Install keepalived, create the port-check script, and make it executable; same steps on both servers
    yum -y install keepalived
    vim /etc/keepalived/check_port.sh
    #!/bin/bash
    # keepalived port-monitoring script
    # Usage, in the keepalived configuration file:
    # vrrp_script check_port {                           # define a vrrp_script check
    #     script "/etc/keepalived/check_port.sh 6379"    # port to monitor
    #     interval 2                                     # how often to run the check, in seconds
    # }
    CHK_PORT=$1
    if [ -n "$CHK_PORT" ];then
        PORT_PROCESS=`ss -lnt|grep $CHK_PORT|wc -l`
        if [ $PORT_PROCESS -eq 0 ];then
            echo "Port $CHK_PORT Is Not Used,End."
            exit 1
        fi
    else
        echo "Check Port Cant Be Empty!"
    fi
    chmod +x /etc/keepalived/check_port.sh

    # ************************ keepalived master configuration on zx28.zxjr.com *******************************
    vim /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
       router_id 192.168.9.28
    }

    vrrp_script chk_nginx {
        script "/etc/keepalived/check_port.sh 7443"
        interval 2
        weight -20
    }

    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 251
        priority 100
        advert_int 1
        mcast_src_ip 192.168.9.28
        # Non-preemptive mode: keeps the VIP from flapping back and forth on network jitter in production.
        # (The keepalived documentation describes nopreempt as taking effect only when the initial state is BACKUP.)
        nopreempt

        authentication {
            auth_type PASS
            auth_pass 11111111
        }
        track_script {
            chk_nginx
        }
        virtual_ipaddress {
            192.168.9.33
        }
    }

    # ************************** keepalived backup configuration on zx29.zxjr.com ******************************
    # The backup configuration has no nopreempt setting
    vim /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
       router_id 192.168.9.29
    }

    vrrp_script chk_nginx {
        script "/etc/keepalived/check_port.sh 7443"
        interval 2
        weight -20
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 251
        priority 90
        advert_int 1
        mcast_src_ip 192.168.9.29

        authentication {
            auth_type PASS
            auth_pass 11111111
        }
        track_script {
            chk_nginx
        }
        virtual_ipaddress {
            192.168.9.33
        }
    }
    # Start keepalived on the master first, then on the backup, and enable it at boot
    systemctl start keepalived
    systemctl enable keepalived
    # Check the IP addresses: the VIP 192.168.9.33 is on zx28.zxjr.com (master).
    # After nginx is stopped on the master, the check script no longer finds port 7443 and the VIP moves to the backup.
    # After nginx is started again on the master, nopreempt takes effect and the VIP does not move back despite the higher priority.
    # To make the master hold the VIP again, restart the keepalived service on the master.
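The failover described above follows from simple arithmetic on the VRRP priorities: with a negative `weight`, a failing `track_script` lowers the instance's priority by that amount, and the node with the highest effective priority holds the VIP. A minimal sketch using the values from the two configurations; the function name `effective_priority` is hypothetical.

```shell
#!/bin/sh
# Effective VRRP priority for a node.
# $1 = configured priority, $2 = track_script weight (negative here),
# $3 = "yes" if the check script succeeds, anything else if it fails.
effective_priority() {
  base="$1"
  weight="$2"
  check_ok="$3"
  if [ "$check_ok" = "yes" ]; then
    echo "$base"
  else
    echo $(( base + weight ))
  fi
}

master_ok=$(effective_priority 100 -20 yes)
master_fail=$(effective_priority 100 -20 no)
backup_ok=$(effective_priority 90 -20 yes)
echo "master healthy: $master_ok, master with nginx down: $master_fail, backup: $backup_ok"
```

With nginx down on zx28 the master drops to 80, below the backup's 90, so the VIP moves to zx29. The weight has to exceed the priority gap (here 20 > 10) for the check to trigger a failover at all.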

Deploying controller-manager on the Control-Plane Nodes

| Hostname | Role | IP |
| --- | --- | --- |
| zx30.zxjr.com | controller-manager | 192.168.9.30 |
| zx31.zxjr.com | controller-manager | 192.168.9.31 |

    # *************************** Same configuration on both servers *********************************
    # Create the startup script
    vim /opt/kubernetes/server/bin/kube-controller-manager.sh
    #!/bin/sh
    # --cluster-cidr   the Pod network (it contains the two subnets 172.7.30.0/24 and 172.7.31.0/24)
    # --leader-elect   enable leader election
    ./kube-controller-manager \
      --cluster-cidr 172.7.0.0/16 \
      --leader-elect true \
      --log-dir /data/logs/kubernetes/kube-controller-manager \
      --master http://127.0.0.1:8080 \
      --service-account-private-key-file ./certs/ca-key.pem \
      --service-cluster-ip-range 10.4.0.0/16 \
      --root-ca-file ./certs/ca.pem \
      --v 2
    # Make it executable and create the log directory
    chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh
    mkdir -p /data/logs/kubernetes/kube-controller-manager
    # Create the supervisor configuration (hand kube-controller-manager over to supervisor)
    vim /etc/supervisord.d/kube-controller-manager.ini
    [program:kube-controller-manager]
    command=/opt/kubernetes/server/bin/kube-controller-manager.sh   ; the program (relative uses PATH, can take args)
    numprocs=1                                                      ; number of processes copies to start (def 1)
    directory=/opt/kubernetes/server/bin                            ; directory to cwd to before exec (def no cwd)
    autostart=true                                                  ; start at supervisord start (default: true)
    autorestart=true                                                ; restart at unexpected quit (default: true)
    startsecs=22                                                    ; number of secs prog must stay running (def. 1)
    startretries=3                                                  ; max # of serial start failures (default 3)
    exitcodes=0,2                                                   ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                                 ; signal used to kill process (default TERM)
    stopwaitsecs=10                                                 ; max num secs to wait b4 SIGKILL (default 10)
    user=root                                                       ; setuid to this UNIX account to run the program
    redirect_stderr=false                                           ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controll.stdout.log  ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                                    ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                                        ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                                     ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                                     ; emit events on stdout writes (default false)
    stderr_logfile=/data/logs/kubernetes/kube-controller-manager/controll.stderr.log  ; stderr log path, NONE for none; default AUTO
    stderr_logfile_maxbytes=64MB                                    ; max # logfile bytes b4 rotation (default 50MB)
    stderr_logfile_backups=4                                        ; # of stderr logfile backups (default 10)
    stderr_capture_maxbytes=1MB                                     ; number of bytes in 'capturemode' (default 0)
    stderr_events_enabled=false                                     ; emit events on stderr writes (default false)
    # Start the service and check it
    supervisorctl update
    supervisorctl status
    etcd-server-9-30                 RUNNING   pid 14837, uptime 1 day, 0:38:39
    kube-apiserver                   RUNNING   pid 15677, uptime 2:39:19
    kube-controller-manager          RUNNING   pid 15849, uptime 0:00:32
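The `--cluster-cidr 172.7.0.0/16` value is expected to cover every node's docker bridge subnet (172.7.30.0/24 and 172.7.31.0/24) while staying separate from the service range 10.4.0.0/16. A minimal sketch of a containment check, assuming well-formed IPv4 CIDRs; the helper names `ip_to_int` and `cidr_contains` are hypothetical.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs="$IFS"
  IFS=.
  set -- $1                 # split the address into four octets
  IFS="$old_ifs"
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit status 0) when CIDR block $2 lies entirely inside block $1.
cidr_contains() {
  outer_base=$(ip_to_int "${1%/*}")
  outer_len="${1#*/}"
  inner_base=$(ip_to_int "${2%/*}")
  inner_len="${2#*/}"
  # The inner block cannot be larger than the outer one.
  [ "$inner_len" -ge "$outer_len" ] || return 1
  mask=$(( (4294967295 << (32 - outer_len)) & 4294967295 ))
  [ $(( inner_base & mask )) -eq $(( outer_base & mask )) ]
}

for subnet in 172.7.30.0/24 172.7.31.0/24 10.4.0.0/16; do
  if cidr_contains 172.7.0.0/16 "$subnet"; then
    echo "$subnet is inside 172.7.0.0/16"
  else
    echo "$subnet is outside 172.7.0.0/16"
  fi
done
```

The first two subnets are reported as inside the Pod network and the service range as outside, which is the layout this guide relies on.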

Deploying the scheduler on the master nodes

zx30.zxjr.com kube-scheduler 192.168.9.30

zx31.zxjr.com kube-scheduler 192.168.9.31

// ******************************* Same configuration on both servers ******************************
// Create the scheduler startup script
vim /opt/kubernetes/server/bin/kube-scheduler.sh
#!/bin/sh
./kube-scheduler \
  --leader-elect \
  --log-dir /data/logs/kubernetes/kube-scheduler \
  --master http://127.0.0.1:8080 \
  --v 2

// Make the script executable and create the log directory
chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh
mkdir -p /data/logs/kubernetes/kube-scheduler

// Create the supervisor configuration
vim /etc/supervisord.d/kube-scheduler.ini
[program:kube-scheduler]
command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=22 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=false ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
stderr_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stderr.log ; stderr log path, NONE for none; default AUTO
stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)
stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stderr_events_enabled=false ; emit events on stderr writes (default false)

// Start the service and check it; if it fails to start, read the logs, fix the problem, then start it again
supervisorctl update
supervisorctl status
etcd-server-9-30 RUNNING pid 14837, uptime 1 day, 1:11:25
kube-apiserver RUNNING pid 15677, uptime 3:12:05
kube-controller-manager RUNNING pid 15849, uptime 0:33:18
kube-scheduler RUNNING pid 15888, uptime 0:03:51

// All services are now up. Use kubectl to check the status of the master components; this can be run on any node
// kubectl is the command-line tool for interacting with K8s; it is covered later
kubectl get cs
NAME STATUS MESSAGE ERROR
controller-manager Healthy ok
scheduler Healthy ok
etcd-1 Healthy {"health":"true"}
etcd-2 Healthy {"health":"true"}
etcd-0 Healthy {"health":"true"}
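The health check above can also be scripted. The following is a minimal sketch, assuming the plain-text table layout of `kubectl get cs` shown above (name in the first column, status in the second); the helper name `unhealthy_components` is hypothetical and the sample text is copied from the output above.

```python
from typing import List

SAMPLE_OUTPUT = """\
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-1               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
"""


def unhealthy_components(kubectl_output: str) -> List[str]:
    """Return the names of components whose STATUS column is not 'Healthy'."""
    rows = [line for line in kubectl_output.splitlines() if line.strip()]
    bad = []
    for row in rows[1:]:  # rows[0] is the header line
        fields = row.split()
        name, status = fields[0], fields[1]
        if status != "Healthy":
            bad.append(name)
    return bad


if __name__ == "__main__":
    print(unhealthy_components(SAMPLE_OUTPUT))
```

In practice the text would come from running `kubectl get cs` (for example through `subprocess.run`); that call is left out here so the sketch stays runnable without a cluster.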

Installing and deploying the K8s worker nodes

Deploying kubelet on the worker nodes ( Node )

Hostname Role IP

zx30.zxjr.com kubelet 192.168.9.30

zx31.zxjr.com kubelet 192.168.9.31

// On the ops host, issue the kubelet certificate: create the JSON config for the certificate signing request (CSR)
// Extra IPs can be listed under hosts for nodes that may be added later; there are normally more worker nodes than master nodes
vim /opt/certs/kubelet-csr.json
{
    "CN": "kubelet-node",
    "hosts": [
        "127.0.0.1",
        "192.168.9.28",
        "192.168.9.29",
        "192.168.9.30",
        "192.168.9.31",
        "192.168.9.32",
        "192.168.9.34",
        "192.168.9.35",
        "192.168.9.36"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "zxjr",
            "OU": "ops"
        }
    ]
}

// Generate the kubelet certificate and private key
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssljson -bare kubelet

// Check the generated certificate and private key
ll /opt/certs/ |grep kubelet
-rw-r--r-- 1 root root 1110 Aug 19 09:32 kubelet.csr
-rw-r--r-- 1 root root 462 Aug 19 09:32 kubelet-csr.json
-rw------- 1 root root 1679 Aug 19 09:32 kubelet-key.pem
-rw-r--r-- 1 root root 1468 Aug 19 09:32 kubelet.pem

// Prepare the infra_pod base image
docker pull xplenty/rhel7-pod-infrastructure:v3.4

// Log in to the private harbor registry
docker login harbor.zxjr.com
Username (admin): admin
Password:
Login Succeeded

// Logging in creates /root/.docker/config.json, which stores the user name and password base64-encoded (encoded, not encrypted); decode it to see the content
echo YWRtaW46SGFyYm9yMTIzNDU=|base64 -d
admin:Harbor12345

// Tag the image
docker images |grep v3.4
xplenty/rhel7-pod-infrastructure v3.4 34d3450d733b 2 years ago 205 MB
docker tag 34d3450d733b harbor.zxjr.com/k8s/pod:v3.4
docker images |grep v3.4
harbor.zxjr.com/k8s/pod v3.4 34d3450d733b 2 years ago 205 MB
xplenty/rhel7-pod-infrastructure v3.4 34d3450d733b 2 years ago 205 MB

// Push it to the private harbor registry
docker push harbor.zxjr.com/k8s/pod:v3.4

***************************************************************************************************
// Copy the kubelet certificate and private key to every worker node; the private key file must have mode 600
// The following steps run on the worker nodes, using zx30.zxjr.com as the example
scp 192.168.9.32:/opt/certs/kubelet*.pem /opt/kubernetes/server/bin/certs/
ll /opt/kubernetes/server/bin/certs/ |grep kubelet
-rw------- 1 root root 1679 Aug 19 09:33 kubelet-key.pem
-rw-r--r-- 1 root root 1468 Aug 19 09:33 kubelet.pem

// set-cluster (run in the conf directory)
kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem \
  --embed-certs=true \
  --server=https://192.168.9.33:7443 \
  --kubeconfig=kubelet.kubeconfig

// set-credentials (run in the conf directory)
kubectl config set-credentials k8s-node \
  --client-certificate=/opt/kubernetes/server/bin/certs/client.pem \
  --client-key=/opt/kubernetes/server/bin/certs/client-key.pem \
  --embed-certs=true \
  --kubeconfig=kubelet.kubeconfig

// set-context (run in the conf directory)
kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=k8s-node \
  --kubeconfig=kubelet.kubeconfig

// use-context (run in the conf directory)
kubectl config use-context myk8s-context \
  --kubeconfig=kubelet.kubeconfig

// Create the resource manifest k8s-node.yaml; the user k8s-node was defined earlier in client-csr.json
vim k8s-node.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node

// Apply the manifest (from /opt/kubernetes/server/bin/conf)
kubectl create -f k8s-node.yaml

// Check
kubectl get clusterrolebinding k8s-node
NAME AGE
k8s-node 4h24m

// Create the kubelet startup script; on each node adjust the script name and --hostname-override
vim /opt/kubernetes/server/bin/kubelet-930.sh
#!/bin/sh
./kubelet \
  --anonymous-auth=false \
  --cgroup-driver systemd \
  --cluster-dns 10.4.0.2 \
  --cluster-domain cluster.local \
  --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice \
  --fail-swap-on="false" \
  --client-ca-file ./certs/ca.pem \
  --tls-cert-file ./certs/kubelet.pem \
  --tls-private-key-file ./certs/kubelet-key.pem \
  --hostname-override 192.168.9.30 \
  --image-gc-high-threshold 20 \
  --image-gc-low-threshold 10 \
  --kubeconfig ./conf/kubelet.kubeconfig \
  --log-dir /data/logs/kubernetes/kube-kubelet \
  --pod-infra-container-image harbor.zxjr.com/k8s/pod:v3.4 \
  --root-dir /data/kubelet

// Check the permissions of the kubeconfig, make the script executable, and create the directories used in the script
ll /opt/kubernetes/server/bin/conf/kubelet.kubeconfig
-rw------- 1 root root 6222 Aug 19 09:37 /opt/kubernetes/server/bin/conf/kubelet.kubeconfig
chmod +x /opt/kubernetes/server/bin/kubelet-930.sh
mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

// Create the supervisor configuration; on each node, command must point at that node's startup script
vim /etc/supervisord.d/kube-kubelet.ini
[program:kube-kubelet]
command=/opt/kubernetes/server/bin/kubelet-930.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=22 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=false ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
stderr_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stderr.log ; stderr log path, NONE for none; default AUTO
stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)
stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stderr_events_enabled=false ; emit events on stderr writes (default false)

// Start the service and check it; if it fails to start, read the logs and fix the problem, then run supervisorctl start kube-kubelet or run update again; watch out for version mismatches
supervisorctl update

// Check on the worker node zx30.zxjr.com
kubectl get node
NAME STATUS ROLES AGE VERSION
192.168.9.30 Ready <none> 36s v1.15.2

// Once the other node has started, every node can be queried from any of them
kubectl get node
NAME STATUS ROLES AGE VERSION
192.168.9.30 Ready <none> 36s v1.15.2
192.168.9.31 Ready <none> 43s v1.15.2

Deploying kube-proxy on the worker nodes

zx30.zxjr.com kube-proxy 192.168.9.30

zx31.zxjr.com kube-proxy 192.168.9.31

// **************************** Issue the kube-proxy certificate on the ops host *******************************
// Create the JSON config for the certificate signing request (CSR)
vim /opt/certs/kube-proxy-csr.json
{
    "CN": "system:kube-proxy",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "zxjr",
            "OU": "ops"
        }
    ]
}

// Generate the kube-proxy certificate and private key
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json |cfssljson -bare kube-proxy-client

// Check the generated certificate and private key
ll /opt/certs/ |grep kube-proxy
-rw-r--r-- 1 root root 1009 Aug 19 16:40 kube-proxy-client.csr
-rw------- 1 root root 1675 Aug 19 16:40 kube-proxy-client-key.pem
-rw-r--r-- 1 root root 1383 Aug 19 16:40 kube-proxy-client.pem
-rw-r--r-- 1 root root 269 Aug 19 16:38 kube-proxy-csr.json

// ****************************** On the worker nodes **********************************
// Copy the certificate and key generated on the ops host; the private key file must have mode 600
cd /opt/kubernetes/server/bin/certs
scp 192.168.9.32:/opt/certs/kube-proxy*.pem ./
ll
total 40
-rw------- 1 root root 1675 Aug 19 15:16 apiserver-key.pem
-rw-r--r-- 1 root root 1602 Aug 19 15:16 apiserver.pem
-rw------- 1 root root 1675 Aug 14 13:51 ca-key.pem
-rw-r--r-- 1 root root 1354 Aug 14 13:51 ca.pem
-rw------- 1 root root 1679 Aug 19 15:08 client-key.pem
-rw-r--r-- 1 root root 1371 Aug 19 15:08 client.pem
-rw------- 1 root root 1679 Aug 19 09:33 kubelet-key.pem
-rw-r--r-- 1 root root 1468 Aug 19 09:33 kubelet.pem
-rw------- 1 root root 1675 Aug 19 16:41 kube-proxy-client-key.pem
-rw-r--r-- 1 root root 1383 Aug 19 16:41 kube-proxy-client.pem

// Create the kubeconfig; the generated kube-proxy.kubeconfig is identical for every node, so it can simply be copied to the other nodes
// set-cluster (run in the conf directory)
cd ../conf
kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem \
  --embed-certs=true \
  --server=https://192.168.9.33:7443 \
  --kubeconfig=kube-proxy.kubeconfig

// set-credentials (run in the conf directory)
kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/server/bin/certs/kube-proxy-client.pem \
  --client-key=/opt/kubernetes/server/bin/certs/kube-proxy-client-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

// set-context (run in the conf directory)
kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

// use-context (run in the conf directory)
kubectl config use-context myk8s-context \
  --kubeconfig=kube-proxy.kubeconfig

// Create the kube-proxy startup script; on each node adjust the script name and the IP
vim /opt/kubernetes/server/bin/kube-proxy-930.sh
#!/bin/sh
./kube-proxy \
  --cluster-cidr 172.7.0.0/16 \
  --hostname-override 192.168.9.30 \
  --kubeconfig ./conf/kube-proxy.kubeconfig

// Make the script executable, check the kubeconfig, and create the log directory
chmod +x /opt/kubernetes/server/bin/kube-proxy-930.sh
ll /opt/kubernetes/server/bin/conf |grep proxy
-rw------- 1 root root 6238 Aug 19 16:44 kube-proxy.kubeconfig
mkdir -p /data/logs/kubernetes/kube-proxy/

// Create the supervisor configuration; on each node, command must point at that node's kube-proxy startup script
vim /etc/supervisord.d/kube-proxy.ini
[program:kube-proxy]
command=/opt/kubernetes/server/bin/kube-proxy-930.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=22 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=false ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
stderr_logfile=/data/logs/kubernetes/kube-proxy/proxy.stderr.log ; stderr log path, NONE for none; default AUTO
stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)
stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stderr_events_enabled=false ; emit events on stderr writes (default false)

// Start the service and check it; if it fails to start, use the logs to find the problem, then restart
supervisorctl update
supervisorctl status
etcd-server-9-30 RUNNING pid 14837, uptime 6 days, 16:52:23
kube-apiserver RUNNING pid 25342, uptime 17:36:12
kube-controller-manager RUNNING pid 15849, uptime 5 days, 16:14:16
kube-kubelet RUNNING pid 25203, uptime 17:42:35
kube-proxy RUNNING pid 26466, uptime 16:04:13
kube-scheduler RUNNING pid 15888, uptime 5 days, 15:44:49
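The kubelet and kube-proxy scripts above are per-node: the file name carries a suffix such as 930 and --hostname-override carries the node IP. The sketch below shows one way that could be generated. It assumes the suffix is the last two octets of the node IP joined together (inferred from kubelet-930.sh for 192.168.9.30); the function names are hypothetical.

```python
import ipaddress


def node_suffix(node_ip: str) -> str:
    """Map '192.168.9.30' to '930' (assumed naming convention of this guide)."""
    address = ipaddress.IPv4Address(node_ip)  # raises ValueError on malformed input
    octets = str(address).split(".")
    return octets[2] + octets[3]


def script_path(component: str, node_ip: str) -> str:
    """Path of the per-node startup script, e.g. .../kube-proxy-930.sh."""
    return f"/opt/kubernetes/server/bin/{component}-{node_suffix(node_ip)}.sh"


def render_kube_proxy_script(node_ip: str, cluster_cidr: str = "172.7.0.0/16") -> str:
    """Render the kube-proxy startup script shown above for one node."""
    lines = [
        "#!/bin/sh",
        "./kube-proxy \\",
        f"  --cluster-cidr {cluster_cidr} \\",
        f"  --hostname-override {node_ip} \\",
        "  --kubeconfig ./conf/kube-proxy.kubeconfig",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    for ip in ("192.168.9.30", "192.168.9.31"):
        print(script_path("kube-proxy", ip))
        print(render_kube_proxy_script(ip))
```

Generating the script from the IP keeps the file name, the supervisor command line and --hostname-override from drifting apart when a node is added.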

Verifying the basic functions of K8s

// Deliver an nginx into the K8s cluster; pick any worker node, here zx30.zxjr.com
// The manifest defines two standard K8s objects (also called K8s resources): a DaemonSet and a Service
vim /usr/local/yaml/nginx-ds.yaml
apiVersion: extensions/v1beta1
kind: DaemonSet    # starts one container on every worker node, using the image nginx:1.7.9
metadata:
  name: nginx-ds
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service    # provides an entry point to the nginx service
metadata:
  name: nginx-ds
  labels:
    app: nginx-ds
spec:
  type: NodePort
  selector:
    app: nginx-ds
  ports:
  - name: http
    port: 80
    targetPort: 80

// Create
kubectl apply -f nginx-ds.yaml
daemonset.extensions/nginx-ds created
service/nginx-ds created

// Check
kubectl get pods
NAME READY STATUS RESTARTS AGE
nginx-ds-4hrts 1/1 Running 0 3m41s
nginx-ds-xcfr2 1/1 Running 0 3m40s

// Detailed view: NODE is the IP of the worker node, IP is the pod IP
kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-ds-4hrts 1/1 Running 0 5m13s 172.7.30.2 192.168.9.30 <none> <none>
nginx-ds-xcfr2 1/1 Running 0 5m12s 172.7.31.2 192.168.9.31 <none> <none>

// describe shows how a standard K8s resource was brought up
// The Events table at the end lists the creation and startup sequence; the first step is Scheduled
kubectl describe pods nginx-ds-4hrts
Name: nginx-ds-4hrts
Namespace: default
Priority: 0
Node: 192.168.9.30/192.168.9.30
Start Time: Tue, 20 Aug 2019 10:56:39 +0800
Labels: app=nginx-ds
        controller-revision-hash=57cccf846d
        pod-template-generation=1
Annotations: <none>
Status: Running
IP: 172.7.30.2
Controlled By: DaemonSet/nginx-ds
Containers:
  my-nginx:
    Container ID: docker://647c3577e637f764ea54247fd7925c6c01f9e5f0e7eb1027a03851e93ebc6b50
    Image: nginx:1.7.9
    Image ID: docker-pullable://nginx@sha256:e3456c851a152494c3e4ff5fcc26f240206abac0c9d794affb40e0714846c451
    Port: 80/TCP
    Host Port: 0/TCP
    State: Running
      Started: Tue, 20 Aug 2019 10:57:12 +0800
    Ready: True
    Restart Count: 0
    Environment: <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-878cn (ro)
Conditions:
  Type Status
  Initialized True
  Ready True
  ContainersReady True
  PodScheduled True
Volumes:
  default-token-878cn:
    Type: Secret (a volume populated by a Secret)
    SecretName: default-token-878cn
    Optional: false
QoS Class: BestEffort
Node-Selectors: <none>
Tolerations: node.kubernetes.io/disk-pressure:NoSchedule
             node.kubernetes.io/memory-pressure:NoSchedule
             node.kubernetes.io/not-ready:NoExecute
             node.kubernetes.io/pid-pressure:NoSchedule
             node.kubernetes.io/unreachable:NoExecute
             node.kubernetes.io/unschedulable:NoSchedule
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Normal Scheduled 7m46s default-scheduler Successfully assigned default/nginx-ds-4hrts to 192.168.9.30
  Normal Pulling 7m36s kubelet, 192.168.9.30 Pulling image "nginx:1.7.9"
  Normal Pulled 7m4s kubelet, 192.168.9.30 Successfully pulled image "nginx:1.7.9"
  Normal Created 7m4s kubelet, 192.168.9.30 Created container my-nginx
  Normal Started 7m3s kubelet, 192.168.9.30 Started container my-nginx

// Enter one of the pods and look around; the two pods cannot ping each other
kubectl exec -it nginx-ds-4hrts bash
ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:ac:07:1e:02 brd ff:ff:ff:ff:ff:ff
    inet 172.7.30.2/24 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe07:1e02/64 scope link
       valid_lft forever preferred_lft forever
ping 172.7.31.2
PING 172.7.31.2 (172.7.31.2): 48 data bytes

// From a node, the IP of the pod running on that same node is reachable and nginx answers normally; the IP of the pod on the other host is not reachable
// Service is a standard K8s resource; the nginx-ds Service maps port 80 of nginx to port 23126 (randomly assigned) on the host
kubectl get service -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
kubernetes ClusterIP 10.4.0.1 <none> 443/TCP 5d21h <none>
nginx-ds NodePort 10.4.77.185 <none> 80:23126/TCP 34m app=nginx-ds

// It is kube-proxy that listens on port 23126
netstat -anpt |grep 23126
tcp6 0 0 :::23126 :::* LISTEN 26467/./kube-proxy

// Accessing <node IP>:23126 from a worker node sometimes works and sometimes fails; this follows from the two pods above not being able to reach each other
// kube-proxy distributes requests across the backing pods: a request sent to the local pod succeeds, one sent to the pod on the other host fails
// flannel is used to connect the two pod networks

Deploying the K8s addons

Installing and deploying Flannel

How it works

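The steps below describe flannel's UDP backend. After a packet leaves the source container, the host's docker0 virtual interface forwards it to the flannel0 virtual interface (a point-to-point virtual interface), and the flanneld service listens on the other end of that interface.

Through etcd, flannel maintains a routing table between nodes that records the subnet of each node.

The flanneld service on the source host wraps the original packet in UDP and, based on its routing table, delivers it to the flanneld service on the destination node. There the packet is unwrapped and enters the destination node's flannel0 virtual interface, is forwarded to the destination host's docker0 virtual interface, and finally reaches the target container through docker0, just like traffic between containers on the same host.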
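The deployment steps in this section set the flannel backend type to host-gw rather than UDP. In host-gw mode there is no encapsulation: each node gets a plain route to every other node's pod subnet with that node's IP as the gateway. The sketch below only computes which routes a node would need, as an illustration; the subnet-to-node mapping is taken from this guide, and the function name `host_gw_routes` is hypothetical (flannel itself installs the routes).

```python
import ipaddress
from typing import Dict, List, Tuple

# Pod subnet of each worker node, as used in this guide.
NODE_SUBNETS = {
    "192.168.9.30": "172.7.30.0/24",
    "192.168.9.31": "172.7.31.0/24",
}


def host_gw_routes(local_node: str, node_subnets: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (destination subnet, gateway) pairs needed on local_node."""
    routes = []
    for node_ip, subnet in sorted(node_subnets.items()):
        if node_ip == local_node:
            continue  # the local subnet is reached through docker0, no route needed
        network = ipaddress.ip_network(subnet)  # validates the CIDR notation
        routes.append((str(network), node_ip))
    return routes


if __name__ == "__main__":
    for destination, gateway in host_gw_routes("192.168.9.30", NODE_SUBNETS):
        # Equivalent in spirit to: ip route add <destination> via <gateway>
        print(f"{destination} via {gateway}")
```

Because the gateway is the peer's node IP, host-gw requires the nodes to be on the same layer-2 network, which is the case for 192.168.9.30 and 192.168.9.31 here.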

Cluster plan

zx30.zxjr.com flannel 192.168.9.30

zx31.zxjr.com flannel 192.168.9.31

// If the firewall is enabled, add the following rules
// Optimize the SNAT rule so that traffic between pods on different worker nodes is no longer source-NATed
iptables -t nat -D POSTROUTING -s 172.7.30.0/24 ! -o docker0 -j MASQUERADE
iptables -t nat -I POSTROUTING -s 172.7.30.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
// On host 192.168.9.30: SNAT is applied only when the source is a docker IP in 172.7.30.0/24, the destination is not in 172.7.0.0/16, and the packet does not leave through the docker0 bridge

// Save the iptables rules on every worker node so they survive a reboot
iptables-save > /etc/sysconfig/iptables

// Download or upload the flannel binary package
mkdir -p /opt/src/flannel-v0.11.0/certs/
cd /opt/src/
ll
drwxr-xr-x 3 root root 6 Aug 22 2019 flannel-v0.11.0
-rw-r--r-- 1 root root 9565743 Aug 20 09:53 flannel-v0.11.0-linux-amd64.tar.gz

// Unpack the flannel binary package into the flannel-v0.11.0 directory
tar xf flannel-v0.11.0-linux-amd64.tar.gz -C flannel-v0.11.0/

// Create a symlink
ln -s /opt/src/flannel-v0.11.0 /opt/flannel

// Copy ca.pem, client.pem and client-key.pem from the ops host to /opt/flannel/certs on this host
scp 192.168.9.32:/opt/certs/ca.pem /opt/flannel/certs/
scp 192.168.9.32:/opt/certs/client*.pem /opt/flannel/certs/

// Write the network config to etcd with the host-gw backend; run this on one etcd member only
./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'
{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}

// Create the flannel config file; FLANNEL_SUBNET must match the subnet of each node
cd /opt/flannel/
vim subnet.env
FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=172.7.30.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false

// Create the startup script; adjust --public-ip on each node; --etcd-endpoints lists the etcd cluster
vim flanneld.sh
#!/bin/sh
./flanneld \
  --public-ip=192.168.9.30 \
  --etcd-endpoints=https://192.168.9.29:2379,https://192.168.9.30:2379,https://192.168.9.31:2379 \
  --etcd-keyfile=./certs/client-key.pem \
  --etcd-certfile=./certs/client.pem \
  --etcd-cafile=./certs/ca.pem \
  --iface=eth0 \
  --subnet-file=./subnet.env \
  --healthz-port=2401

// Make the script executable and create the log directory
chmod +x flanneld.sh
mkdir -p /data/logs/flanneld

// Create the supervisor configuration
vim /etc/supervisord.d/flanneld.ini
[program:flanneld]
command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/flannel ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=22 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=false ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
stderr_logfile=/data/logs/flanneld/flanneld.stderr.log ; stderr log path, NONE for none; default AUTO
stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)
stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stderr_events_enabled=false ; emit events on stderr writes (default false)

// Start the service and check it
supervisorctl update
supervisorctl status
etcd-server-9-30 RUNNING pid 822, uptime -1 day, 21:14:26
flanneld RUNNING pid 816, uptime -1 day, 21:14:26
kube-apiserver RUNNING pid 819, uptime -1 day, 21:14:26
kube-controller-manager RUNNING pid 823, uptime -1 day, 21:14:26
kube-kubelet RUNNING pid 1919, uptime -1 day, 21:03:27
kube-proxy RUNNING pid 824, uptime -1 day, 21:14:26
kube-scheduler RUNNING pid 815, uptime -1 day, 21:14:26

// Install, deploy, start and check the flannel service on every host in the cluster plan
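The optimized SNAT rule at the start of this block combines three match conditions. As a small illustration (a model of the rule's logic, not of iptables itself), the function below returns whether a packet on host 192.168.9.30 would hit the MASQUERADE rule. The function name `is_masqueraded` is hypothetical; the networks are the ones from the rule.

```python
import ipaddress

# Values from the rule on host 192.168.9.30:
# -s 172.7.30.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
LOCAL_POD_SUBNET = ipaddress.ip_network("172.7.30.0/24")
POD_NETWORK = ipaddress.ip_network("172.7.0.0/16")
BRIDGE = "docker0"


def is_masqueraded(src: str, dst: str, out_iface: str) -> bool:
    """True when all three conditions of the rule match the packet."""
    from_local_pod = ipaddress.ip_address(src) in LOCAL_POD_SUBNET
    to_other_pod = ipaddress.ip_address(dst) in POD_NETWORK
    leaves_via_bridge = out_iface == BRIDGE
    return from_local_pod and not to_other_pod and not leaves_via_bridge


if __name__ == "__main__":
    # Pod to pod on another node: source address is preserved.
    print(is_masqueraded("172.7.30.2", "172.7.31.2", "eth0"))
    # Pod to an address outside the pod network: source is rewritten.
    print(is_masqueraded("172.7.30.2", "192.168.9.32", "eth0"))
```

Keeping the pod source address on pod-to-pod traffic means the receiving side sees the real pod IP instead of the node IP.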

// Troubleshooting: at first ping worked in one direction only, and after restarting the services it failed in both; the cause turned out to be that the docker service was missing. After installing and starting docker on both hosts, the pods could reach each other
// After a normal start, every request to 192.168.9.30:23126 and 192.168.9.31:23126 reaches nginx, which confirms that pods are reachable from 9.30 to 9.31 and from 9.31 to 9.30
// List the standard K8s resources
kubectl get service -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
kubernetes ClusterIP 10.4.0.1 <none> 443/TCP 8d <none>
nginx-ds NodePort 10.4.107.105 <none> 80:22667/TCP 23h app=nginx-ds

// Delete the nginx resources
kubectl delete -f /usr/local/yaml/nginx-ds.yaml
service "nginx-ds" deleted
daemonset.extensions "nginx-ds" deleted

// Confirm they are gone
kubectl get service -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
kubernetes ClusterIP 10.4.0.1 <none> 443/TCP 8d <none>

// Edit the nginx manifest and change the Service type from NodePort to ClusterIP
vim /usr/local/yaml/nginx-ds.yaml
... ...
spec:
  type: ClusterIP
... ...

// Create
kubectl apply -f /usr/local/yaml/nginx-ds.yaml

// Look at the nginx-ds resource: there is no host port mapping any more and the type is ClusterIP
kubectl get service -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
kubernetes ClusterIP 10.4.0.1 <none> 443/TCP 8d <none>
nginx-ds ClusterIP 10.4.113.94 <none> 80/TCP 9s app=nginx-ds

// On both hosts, nginx can be reached directly through 10.4.113.94 (a single, unified entry point)
// Accessing services by IP is inconvenient, and with many services the IP has to be looked up with a command each time, so DNS is needed; the coredns addon provides it

Installing and deploying the coredns addon

// First deploy an internal HTTP service for the K8s resource manifests; manifests kept on arbitrary worker nodes tend to get lost or forgotten, so they are managed in one place
// On the ops host zx32.zxjr.com, configure an nginx virtual host that provides a single access point for the K8s resource manifests
vim /etc/nginx/conf.d/k8s-yaml.zxjr.com.conf
server {
    listen 80;
    server_name k8s-yaml.zxjr.com;
    location / {
        autoindex on;
        default_type text/plain;
        root /data/k8s-yaml;
    }
}

// Create the document root
mkdir /data/k8s-yaml

// All resource manifests are kept under /data/k8s-yaml on the ops host; reload nginx
nginx -s reload

// Configure the internal DNS (on zx28.zxjr.com); the record can be added through the web UI or directly in /var/named/zxjr.com.zone
cat /var/named/zxjr.com.zone
... ...
k8s-yaml 60 IN A 192.168.9.32
... ...

// If the Windows workstation does not use this DNS server, add the name to its hosts file pointing at 192.168.9.32; then open k8s-yaml.zxjr.com in a browser
Index of /
../

// Deploy kube-dns ( coredns ); pull the image from hub.docker.com, version 1.5.2; re-tag it and push it to the local registry
docker pull coredns/coredns:1.5.2
docker images |grep coredns
coredns/coredns 1.5.2 d84a890ab740 7 weeks ago 41.8 MB
docker tag d84a890ab740 harbor.zxjr.com/k8s/coredns:1.5.2
docker push harbor.zxjr.com/k8s/coredns:1.5.2
// Log in to the local harbor registry to confirm the upload succeeded

// Prepare the resource manifests on the ops host; they come from the kubernetes repository on github.com, under cluster -- addons -- dns -- coredns
mkdir -p /data/k8s-yaml/coredns && cd /data/k8s-yaml/coredns

// Split the yaml into four files [ RBAC / ConfigMap / Deployment / Service(svc) ]
vi /data/k8s-yaml/coredns/rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system

// coredns 1.5 and later no longer support the proxy directive; use forward instead
// With proxy, coredns fails with: /etc/coredns/Corefile:6 - Error during parsing: Unknown directive 'proxy'
vi /data/k8s-yaml/coredns/configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        kubernetes cluster.local 10.4.0.0/16
        forward . /etc/resolv.conf
        cache 30
    }

// Using proxy leads to the following pod state
kubectl get pod -n kube-system
NAME READY STATUS RESTARTS AGE
coredns-579d4d979f-z7h9h 0/1 CrashLoopBackOff 3 58s

vi /data/k8s-yaml/coredns/deployment.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.zxjr.com/k8s/coredns:1.5.2
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      imagePullSecrets:
      - name: harbor
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile

vi /data/k8s-yaml/coredns/svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 10.4.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53

// Open k8s-yaml.zxjr.com/coredns in a browser to confirm the manifest files are in place

// ******************************** On any one worker node ***************************************
// The image in deployment.yaml is pulled from the local private registry; for security the pull uses imagePullSecrets, so first create a secret that wraps the login user name and password and deliver it to K8s
// The image pull then takes the credentials from the secret; change the user name and password to suit your setup, and do not use admin in practice
kubectl create secret docker-registry harbor --docker-server=harbor.zxjr.com --docker-username=admin --docker-password=Harbor12345 --docker-email=983012***@qq.com -n kube-system

// The namespace now contains a secret named harbor; -n selects the namespace
kubectl get secret -n kube-system
NAME TYPE DATA AGE
default-token-s2v78 kubernetes.io/service-account-token 3 8d
harbor kubernetes.io/dockerconfigjson 1 9m13s

// Inspect harbor
kubectl get secret harbor -o yaml -n kube-system
apiVersion: v1
data:
  .dockerconfigjson: eyJhdXRocyI6eyJoYXJib3Iuenhqci5jb20iOnsidXNlcm5hbWUiOiJhZG1pbiIsInBhc3N3b3JkIjoiSGFyYm9yMTIzNDUiLCJlbWFpbCI6Ijk4MzAxMioqKkBxcS5jb20iLCJhdXRoIjoiWVdSdGFXNDZTR0Z5WW05eU1USXpORFU9In19fQ==
kind: Secret
metadata:
  creationTimestamp: "2019-08-23T06:08:15Z"
  name: harbor
  namespace: kube-system
  resourceVersion: "990135"
  selfLink: /api/v1/namespaces/kube-system/secrets/harbor
  uid: a671312c-58a7-4c68-845e-98ab1b312037
type: kubernetes.io/dockerconfigjson

// Decode the content (it is base64-encoded, not encrypted)
echo eyJhdXRocyI6eyJoYXJib3Iuenhqci5jb20iOnsidXNlcm5hbWUiOiJhZG1pbiIsInBhc3N3b3JkIjoiSGFyYm9yMTIzNDUiLCJlbWFpbCI6Ijk4MzAxMioqKkBxcS5jb20iLCJhdXRoIjoiWVdSdGFXNDZTR0Z5WW05eU1USXpORFU9In19fQ== |base64 -d
{"auths":{"harbor.zxjr.com":{"username":"admin","password":"Harbor12345","email":"983012***@qq.com","auth":"YWRtaW46SGFyYm9yMTIzNDU="}}}

// Apply the manifests served from k8s-yaml.zxjr.com
kubectl apply -f http://k8s-yaml.zxjr.com/coredns/rbac.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/coredns/configmap.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/coredns/deployment.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/coredns/svc.yaml

// If something goes wrong, delete the resources again using the same manifest URLs
kubectl delete -f http://k8s-yaml.zxjr.com/coredns/rbac.yaml
kubectl delete -f http://k8s-yaml.zxjr.com/coredns/configmap.yaml
kubectl delete -f http://k8s-yaml.zxjr.com/coredns/deployment.yaml
kubectl delete -f http://k8s-yaml.zxjr.com/coredns/svc.yaml

// Check; the pod runs on zx31, the node that kube-scheduler picked
kubectl get pod -n kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
coredns-579d4d979f-qtk5b 1/1 Running 0 2m42s 172.7.31.3 192.168.9.31 <none> <none>
kubectl get svc -n kube-system
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
coredns ClusterIP 10.4.0.2 <none> 53/UDP,53/TCP 3m26s
// The cluster IP 10.4.0.2 is the address given by --cluster-dns in the kubelet startup script

// Test coredns resolution
dig -t A nginx-ds.default.svc.cluster.local. @10.4.0.2 +short
10.4.113.94
kubectl get service -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
kubernetes ClusterIP 10.4.0.1 <none> 443/TCP 14d <none>
nginx-ds ClusterIP 10.4.113.94 <none> 80/TCP 5d7h app=nginx-ds
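The harbor secret created above holds a `.dockerconfigjson` value that is plain base64 over a small JSON document, and the `auth` field inside it is base64 over `username:password`. The sketch below rebuilds that structure to make the two layers visible. It is an illustration under assumptions: the function names are hypothetical, the e-mail address is a placeholder (the original is masked), and the exact byte layout produced by kubectl may differ.

```python
import base64
import json


def build_dockerconfigjson(server: str, username: str, password: str, email: str) -> str:
    """Return a base64 string shaped like the .dockerconfigjson secret value."""
    # Inner layer: "username:password", base64-encoded.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    document = {
        "auths": {
            server: {
                "username": username,
                "password": password,
                "email": email,
                "auth": auth,
            }
        }
    }
    # Outer layer: the whole JSON document, base64-encoded again.
    raw = json.dumps(document, separators=(",", ":")).encode()
    return base64.b64encode(raw).decode()


def read_credentials(encoded: str, server: str) -> str:
    """Decode both layers and return the 'username:password' string."""
    document = json.loads(base64.b64decode(encoded))
    return base64.b64decode(document["auths"][server]["auth"]).decode()


if __name__ == "__main__":
    value = build_dockerconfigjson(
        "harbor.zxjr.com", "admin", "Harbor12345", "ops@example.com"  # placeholder e-mail
    )
    print(read_credentials(value, "harbor.zxjr.com"))
```

Since anyone who can read the secret can decode the password, access to secrets in kube-system should be restricted, and a dedicated registry account is preferable to admin.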

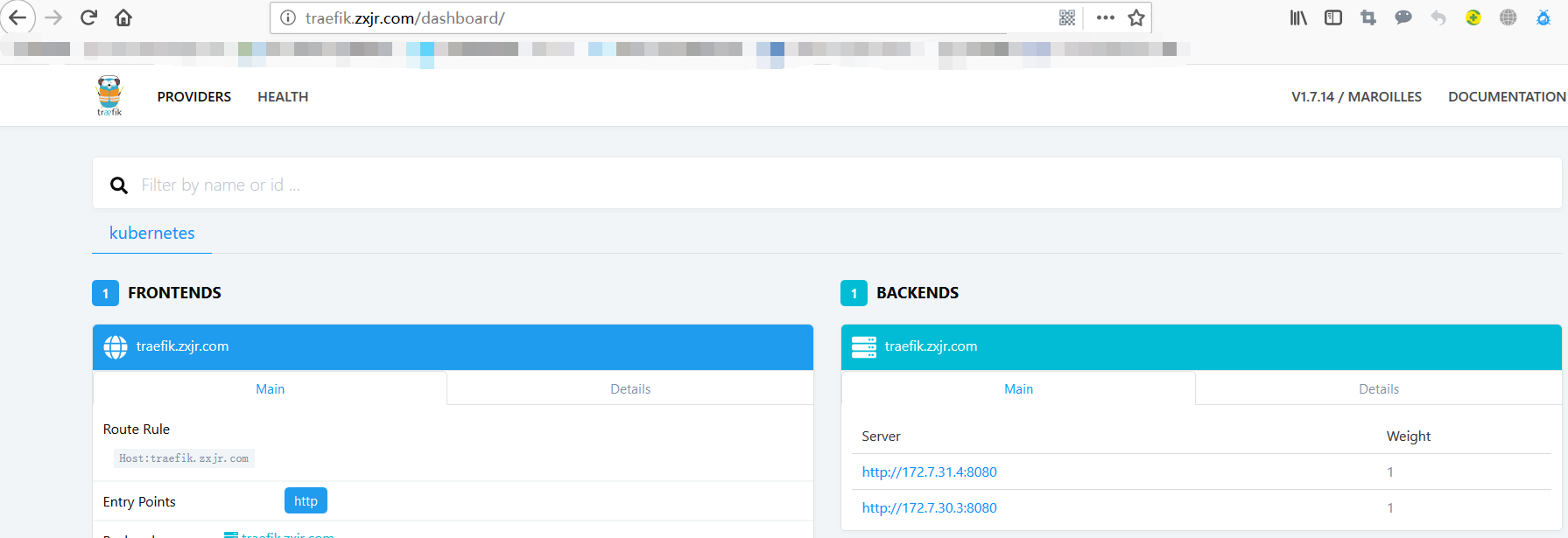

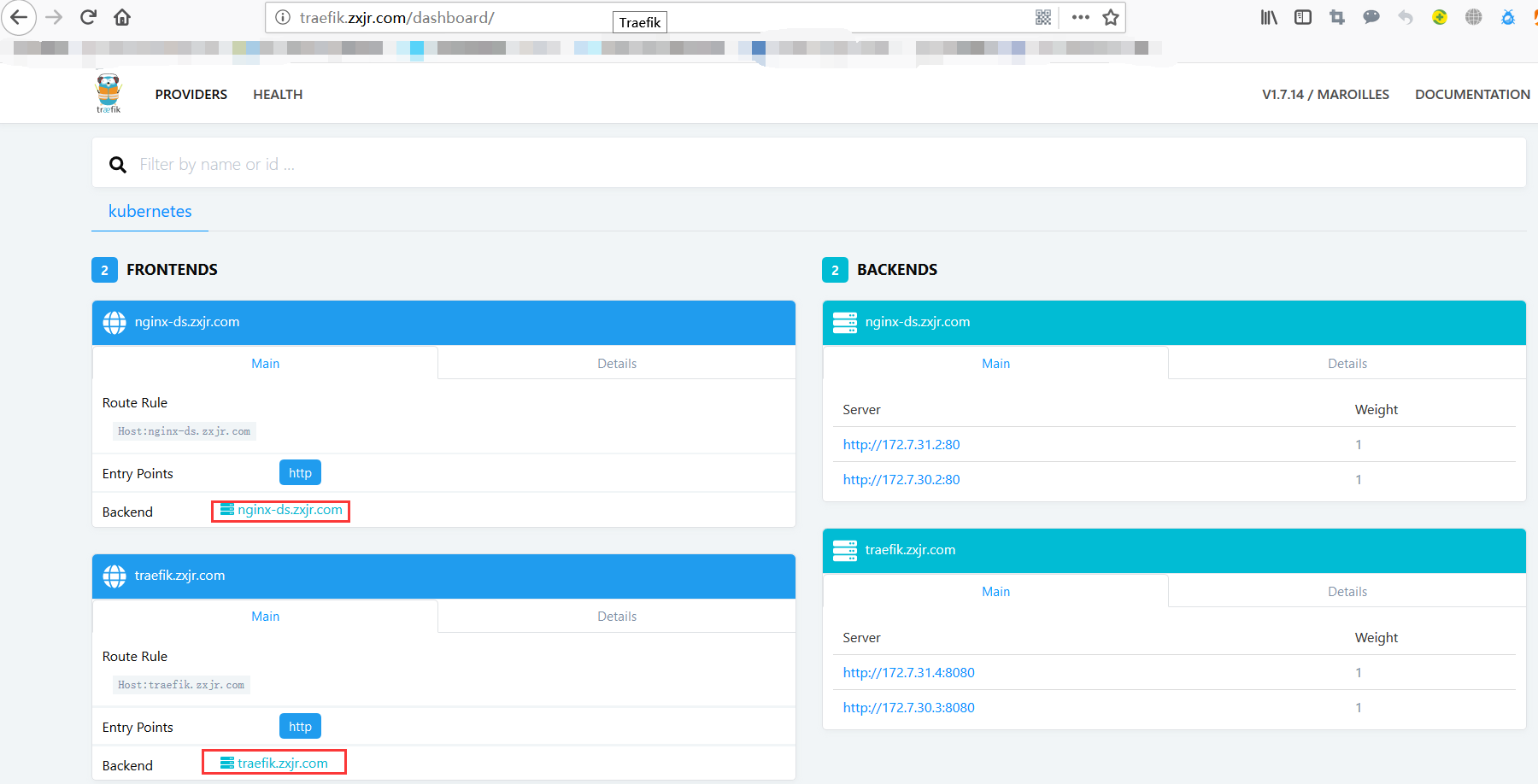

Deploying traefik (ingress)

// On the ops host (zx32.zxjr.com): pull the traefik image and push it to the private Harbor registry
docker pull traefik:v1.7
docker images | grep "traefik"
traefik    v1.7    f12ee21b2b87    2 weeks ago    84.39 MB
docker tag f12ee21b2b87 harbor.zxjr.com/k8s/traefik:v1.7
docker push harbor.zxjr.com/k8s/traefik:v1.7

// On the ops host: prepare the resource manifests
mkdir -p /data/k8s-yaml/traefik && cd /data/k8s-yaml/traefik

// Create the RBAC manifest
vim rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system

// Create the DaemonSet manifest
vim daemonset.yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress-lb
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress-lb
        name: traefik-ingress-lb
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.zxjr.com/k8s/traefik:v1.7
        name: traefik-ingress-lb
        ports:
        - name: http
          containerPort: 80
          hostPort: 81
        - name: admin
          containerPort: 8080
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://192.168.9.33:7443
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus
      imagePullSecrets:
      - name: harbor

// Create the Service manifest
vim svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress-lb
  ports:
    - protocol: TCP
      port: 80
      name: web
    - protocol: TCP
      port: 8080
      name: admin

// Create the Ingress manifest
vim ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefik.zxjr.com
    http:
      paths:
      - backend:
          serviceName: traefik-ingress-service
          servicePort: 8080

// Add the DNS record, either through web-dns or directly in the zone file
tail -1 /var/named/zxjr.com.zone
traefik    60 IN A    192.168.9.33

// Apply the manifests from any compute node (zx30.zxjr.com here)
kubectl apply -f http://k8s-yaml.zxjr.com/traefik/rbac.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/traefik/daemonset.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/traefik/svc.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/traefik/ingress.yaml

// nginx on both zx28.zxjr.com and zx29.zxjr.com needs the same reverse-proxy configuration (consider saltstack/ansible)
vim /etc/nginx/conf.d/zxjr.com.conf
upstream default_backend_traefik {
    server 192.168.9.30:81    max_fails=3 fail_timeout=10s;
    server 192.168.9.31:81    max_fails=3 fail_timeout=10s;
}
server {
    server_name *.zxjr.com;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

// Reload nginx
nginx -s reload

// Browse to traefik.zxjr.com
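Both proxy hosts need the same upstream definition, one `server` line per compute node on the traefik hostPort. As a minimal sketch, that block could be rendered from a node list instead of being edited by hand on each host. `render_traefik_upstream` is a hypothetical helper name introduced here for illustration; the port and IP addresses are the ones used above.

```shell
# Sketch only: render the nginx upstream block for the traefik hostPort backends.
# render_traefik_upstream is a hypothetical helper, not part of nginx or traefik.
# Usage: render_traefik_upstream PORT NODE_IP [NODE_IP ...]
render_traefik_upstream() {
  local port="$1"
  shift                               # remaining arguments are the compute-node IPs
  echo "upstream default_backend_traefik {"
  local ip
  for ip in "$@"; do                  # one server line per compute node
    echo "    server ${ip}:${port} max_fails=3 fail_timeout=10s;"
  done
  echo "}"
}

# hostPort 81 comes from daemonset.yaml; the IPs are zx30 and zx31
render_traefik_upstream 81 192.168.9.30 192.168.9.31
```

The output can be redirected into the file under /etc/nginx/conf.d/ and checked with `nginx -t` before reloading.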

// Expose the nginx-ds service outside the cluster through a standard Ingress resource as well
// nginx-ds already has a Service and a cluster IP, so it is reachable from inside the cluster
kubectl get svc -o wide
NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE   SELECTOR
kubernetes   ClusterIP   10.4.0.1      <none>        443/TCP   20d   <none>
nginx-ds     ClusterIP   10.4.113.94   <none>        80/TCP    12d   app=nginx-ds

// Following the same pattern used for traefik, create an nginx-ingress.yaml manifest
cd /data/yaml
vim nginx-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-ds
  namespace: default
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: nginx-ds.zxjr.com
    http:
      paths:
      - backend:
          serviceName: nginx-ds
          servicePort: 80

// Create it
kubectl apply -f nginx-ingress.yaml
ingress.extensions/nginx-ds created

// Add the DNS record
tail -1 /var/named/zxjr.com.conf
nginx-ds    60 IN A    192.168.9.33

// Browsing to nginx-ds.zxjr.com now returns the "Welcome to nginx!" page
// The traefik.zxjr.com page reflects the change as well
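The Ingress manifests in this walkthrough (traefik-web-ui, nginx-ds) differ only in name, namespace, host, backend service and port. A small generator makes that pattern explicit. This is a sketch under that assumption; `render_ingress` is a hypothetical helper name, and the manifest layout simply mirrors the `extensions/v1beta1` Ingress used above.

```shell
# Sketch only: print an Ingress manifest in the same shape as the ones above.
# render_ingress is a hypothetical helper introduced for illustration.
# Usage: render_ingress NAME NAMESPACE HOST SERVICE PORT
render_ingress() {
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: $1
  namespace: $2
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: $3
    http:
      paths:
      - backend:
          serviceName: $4
          servicePort: $5
EOF
}

# Reproduces the nginx-ds manifest from this section
render_ingress nginx-ds default nginx-ds.zxjr.com nginx-ds 80
```

On a compute node the output could be piped straight into `kubectl apply -f -` instead of being saved to a file first.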

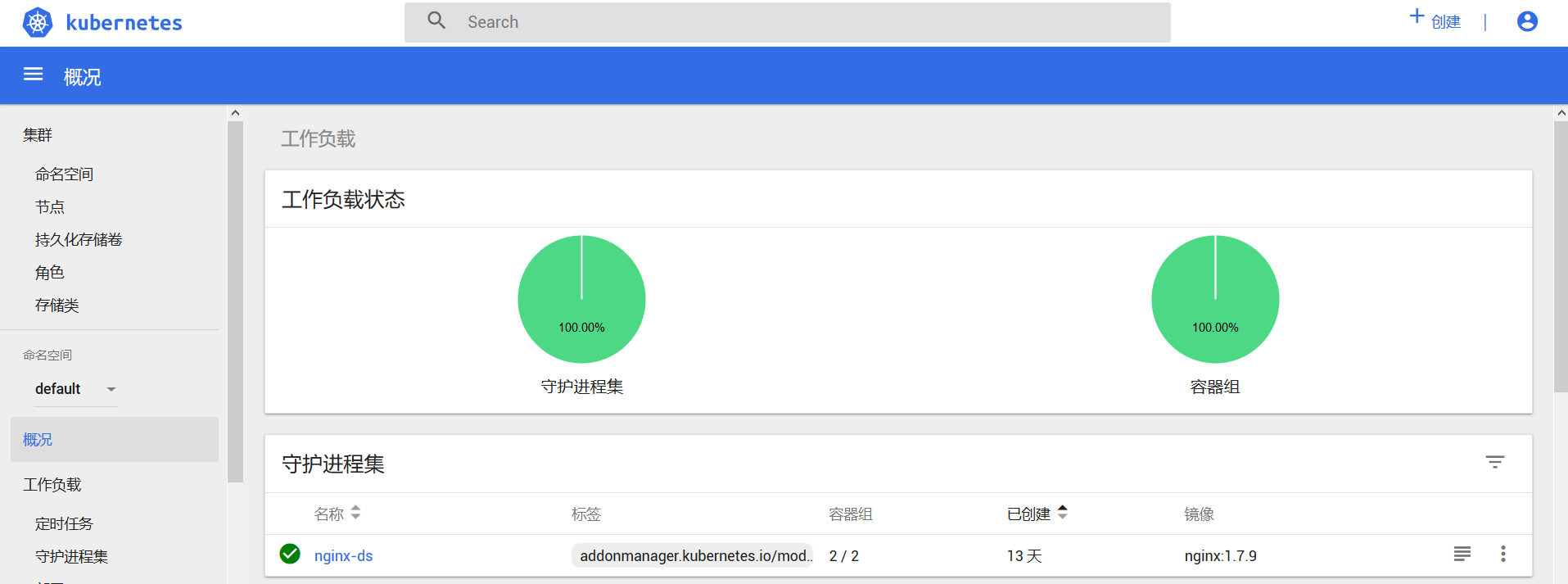

Deploying the dashboard

The dashboard greatly simplifies operating and using K8s; it depends on coredns and traefik.

// On the ops host: pull the image and push it to the private Harbor registry
docker pull hexun/kubernetes-dashboard-amd64:v1.10.1
docker images | grep v1.10.1
hexun/kubernetes-dashboard-amd64    v1.10.1    f9aed6605b81    8 months ago    121.7 MB
docker tag f9aed6605b81 harbor.zxjr.com/k8s/dashboard:v1.10.1
docker push harbor.zxjr.com/k8s/dashboard:v1.10.1

// Log in to Harbor to confirm that the dashboard image has been uploaded

// Prepare the resource manifests (templates are usually available under cluster/addons/ in the kubernetes GitHub repository; copy and adapt them)
mkdir -p /data/k8s-yaml/dashboard && cd /data/k8s-yaml/dashboard

vim rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system

// The dashboard itself serves HTTPS and keeps its certificates in a Secret
vim secret.yaml
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-key-holder
  namespace: kube-system
type: Opaque

vim configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-settings
  namespace: kube-system

vim svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort        # use the NodePort service type
  ports:
  - nodePort: 8800      # mapped to port 8800 on the host; test with https://<host ip>:8800
    port: 443
    targetPort: 8443

vim deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: harbor.zxjr.com/k8s/dashboard:v1.10.1
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard-admin
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      imagePullSecrets:
      - name: harbor

vim ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.zxjr.com
    http:
      paths:
      - backend:
          serviceName: kubernetes-dashboard
          servicePort: 443

// Browse to k8s-yaml.zxjr.com and confirm that the manifests are listed

// Add the DNS record for dashboard.zxjr.com
tail -1 /var/named/zxjr.com.conf
dashboard    60 IN A    192.168.9.33

// Apply the manifests from any compute node (zx31.zxjr.com this time)
kubectl apply -f http://k8s-yaml.zxjr.com/dashboard/rbac.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/dashboard/secret.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/dashboard/configmap.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/dashboard/deployment.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/dashboard/svc.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/dashboard/ingress.yaml

// List the pods in the kube-system namespace
kubectl get pod -n kube-system
NAME                                    READY   STATUS    RESTARTS   AGE
coredns-579d4d979f-qtk5b                1/1     Running   0          6d18h
kubernetes-dashboard-5bd4cbcf78-pt4k2   1/1     Running   0          59s
traefik-ingress-controller-cnddr        1/1     Running   0          94m
traefik-ingress-controller-dqfr4        1/1     Running   0          94m

// On zx28.zxjr.com and zx29.zxjr.com: add an nginx configuration that serves the dashboard over HTTPS
vim /etc/nginx/conf.d/dashboard.zxjr.com.conf
server {
    listen       80;
    server_name  dashboard.zxjr.com;
    rewrite ^(.*)$ https://${server_name}$1 permanent;
}
server {
    listen       443 ssl;
    server_name  dashboard.zxjr.com;

    ssl_certificate "certs/dashboard.zxjr.com.crt";
    ssl_certificate_key "certs/dashboard.zxjr.com.key";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout 10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

// Generate the certificate for dashboard.zxjr.com and add the CA to the system trust store
openssl genrsa -out ca.key 3072
openssl req -new -x509 -days 1095 -key ca.key -out ca.pem
openssl genrsa -out dashboard.zxjr.com.key 3072
openssl req -new -key dashboard.zxjr.com.key -out dashboard.zxjr.com.csr
openssl x509 -req -in dashboard.zxjr.com.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out dashboard.zxjr.com.crt -days 1095
cp ca.pem /etc/pki/ca-trust/source/anchors/
update-ca-trust enable
update-ca-trust extract

// Check the configuration and reload nginx
nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
nginx -s reload

// If the graceful reload does not take effect, stop nginx and start it again

// Browse to https://dashboard.zxjr.com
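One detail of the dashboard svc.yaml is worth checking: it requests `nodePort: 8800`, while the Kubernetes default NodePort range is 30000-32767. A port outside the range is rejected unless kube-apiserver was started with a wider `--service-node-port-range`; that setting is not shown in this section, so the second range in the sketch below is purely an illustrative assumption. `node_port_in_range` is a hypothetical helper name.

```shell
# Sketch only: check whether a requested nodePort lies inside a "low-high" range.
# node_port_in_range is a hypothetical helper introduced for illustration.
# Usage: node_port_in_range PORT RANGE   (exit status 0 means "inside the range")
node_port_in_range() {
  local port="$1" low="${2%-*}" high="${2#*-}"
  [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}

DEFAULT_RANGE="30000-32767"   # Kubernetes default when --service-node-port-range is not set
EXAMPLE_RANGE="3000-29999"    # assumed value, only to show a range that would admit 8800

for range in "$DEFAULT_RANGE" "$EXAMPLE_RANGE"; do
  if node_port_in_range 8800 "$range"; then
    echo "nodePort 8800 is allowed with range ${range}"
  else
    echo "nodePort 8800 is rejected with range ${range}"
  fi
done
```

If applying svc.yaml fails with a port-range error, comparing the requested port against the range configured on the apiserver is the first thing to check.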

// Get the login token; run on a compute node
kubectl get secret -n kube-system
NAME                                     TYPE                                  DATA   AGE
coredns-token-c88zh                      kubernetes.io/service-account-token   3      7d19h
default-token-s2v78                      kubernetes.io/service-account-token   3      21d
harbor                                   kubernetes.io/dockerconfigjson        1      7d20h
heapster-token-fs5mv                     kubernetes.io/service-account-token   3      18h
kubernetes-dashboard-admin-token-8t5f5   kubernetes.io/service-account-token   3      63m
kubernetes-dashboard-certs               Opaque                                0      63m
kubernetes-dashboard-key-holder          Opaque                                2      63m
traefik-ingress-controller-token-g2f2l   kubernetes.io/service-account-token   3      25h

kubectl describe secret kubernetes-dashboard-admin-token-8t5f5 -n kube-system
Name:         kubernetes-dashboard-admin-token-8t5f5
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard-admin
              kubernetes.io/service-account.uid: 3d799225-094f-4a7c-a7f8-9dd3aef98eb5

Type:  kubernetes.io/service-account-token

Data
====
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi04dDVmNSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjNkNzk5MjI1LTA5NGYtNGE3Yy1hN2Y4LTlkZDNhZWY5OGViNSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.R2Ce31CFJxry8dri9ljMrn5i5v_9XvS3P5ScWQeky2lxx5nYLCkCkkgPOcrqN_JPE73CCHENBrHLfK2-Qcdnfh_G2QDPtVTPGZRzwF_9cpJCj94_REcV4BC841C4-2dBn4DpnbUeXm1ihr2-zCTap-onnm7qYgyoGJnbq_0_Nvz8kYjffWlSESy2nURm7KLuFUWNEcC61tQS0TZTatcbxk-JADa4OSy2oAFqLzreyHqyy8OXljCHgJre1Z52CD78tjoMYoTUmACvay8D5jiyIVJ_es9fp3jIKLVCoUURDl_Wmhm6odHWBV_-nfILlksrsncmPF4IQMi-kPip0LeDmg
ca.crt:     1354 bytes
namespace:  11 bytes

// Copy the token value, choose the token login method in the browser, paste the token and sign in

// Check the dashboard pod log
2019/03/25 13:34:00 Metric client health check failed: the server could not find the requested resource (get services heapster). Retrying in 30 seconds.
... ...

// Deploy heapster
// On the ops host: pull the image, retag it and push it to the private Harbor registry
docker pull bitnami/heapster:1.5.4
docker images | grep 1.5.4
bitnami/heapster    1.5.4    c359b95ad38b    6 months ago    135.6 MB
docker tag c359b95ad38b harbor.zxjr.com/k8s/heapster:1.5.4
docker push harbor.zxjr.com/k8s/heapster:1.5.4

// Prepare the resource manifests
mkdir /data/k8s-yaml/heapster && cd /data/k8s-yaml/heapster/

vim rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: heapster
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

vim deployment.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.zxjr.com/k8s/heapster:1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bitnami/heapster/bin/heapster
        - --source=kubernetes:https://kubernetes.default

vim svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster

// Apply the manifests from any compute node
kubectl apply -f http://k8s-yaml.zxjr.com/heapster/rbac.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/heapster/deployment.yaml
kubectl apply -f http://k8s-yaml.zxjr.com/heapster/svc.yaml

Open the dashboard in a browser (if heapster does not seem to take effect, restart the dashboard).

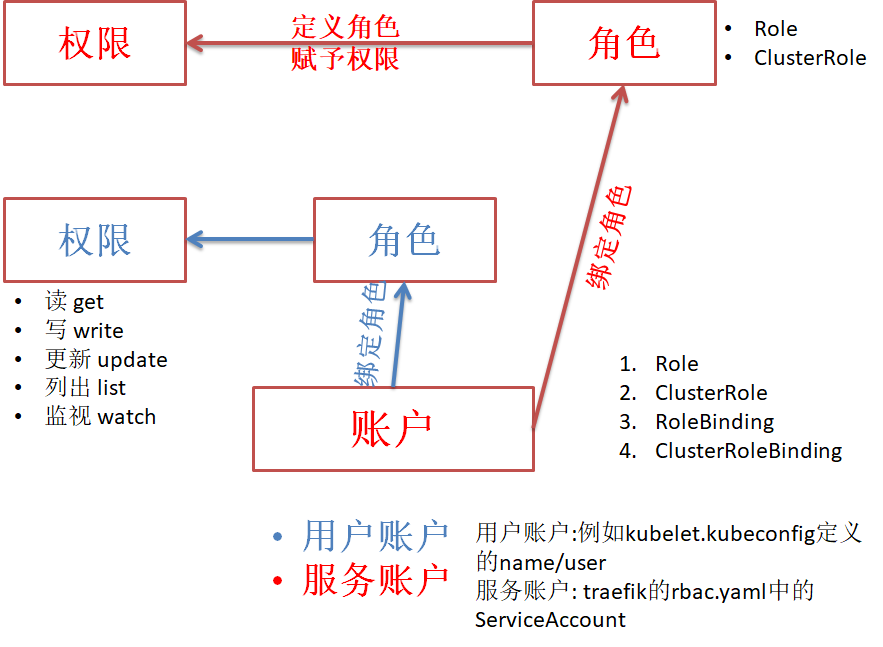

RBAC (Role-Based Access Control) authorization rules

// List the service accounts; sa is short for ServiceAccount
kubectl get sa -n default
NAME      SECRETS   AGE
default   1         21d

kubectl get sa -n kube-system
NAME                         SECRETS   AGE
coredns                      1         7d22h
default                      1         21d
kubernetes-dashboard-admin   1         147m
traefik-ingress-controller   1         28h
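Each service account listed here is backed by a token Secret, and that token is a JWT whose payload names the account it belongs to. The sketch below decodes one segment of such a token locally, without verifying the signature. `jwt_segment` is a hypothetical helper name; the demo token reuses the header segment of the dashboard token shown earlier, while its payload and signature are made-up placeholder values. It assumes a `base64` command that accepts `-d`.

```shell
# Sketch only: decode one dot-separated segment of a JWT (no signature check).
# jwt_segment is a hypothetical helper introduced for illustration.
# Usage: jwt_segment TOKEN INDEX   (1 = header, 2 = payload)
jwt_segment() {
  local seg
  # Pick the segment and map the base64url alphabet back to standard base64
  seg="$(printf '%s' "$1" | cut -d. -f"$2" | tr '_-' '/+')"
  # base64url omits '=' padding; restore it so that base64 -d accepts the input
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# Demo token: real header segment from above, placeholder payload and signature
demo_token='eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJzdWIiOiJkZW1vIn0.sig'
jwt_segment "$demo_token" 1; echo    # prints {"alg":"RS256","kid":""}
jwt_segment "$demo_token" 2; echo    # prints {"sub":"demo"}
```

Passing the token value from `kubectl describe secret` instead of `demo_token` should show the namespace and service-account name in the payload, which makes it easy to confirm which account a pasted token belongs to.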

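Author: TZHR, 世间一散人

Source: https://www.cnblogs.com/haorong/

Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but this notice must be retained unless the author agrees otherwise.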
