Batch Normalization, Instance Normalization, Layer Normalization Illustrated

Reposted from: https://becominghuman.ai/all-about-normalization-6ea79e70894b

This short post highlights the structural nuances between popular normalization techniques employed while training deep neural networks.

I hope that a quick two-minute glance at this will refresh my memory of the concepts at some point in the not-so-distant future.

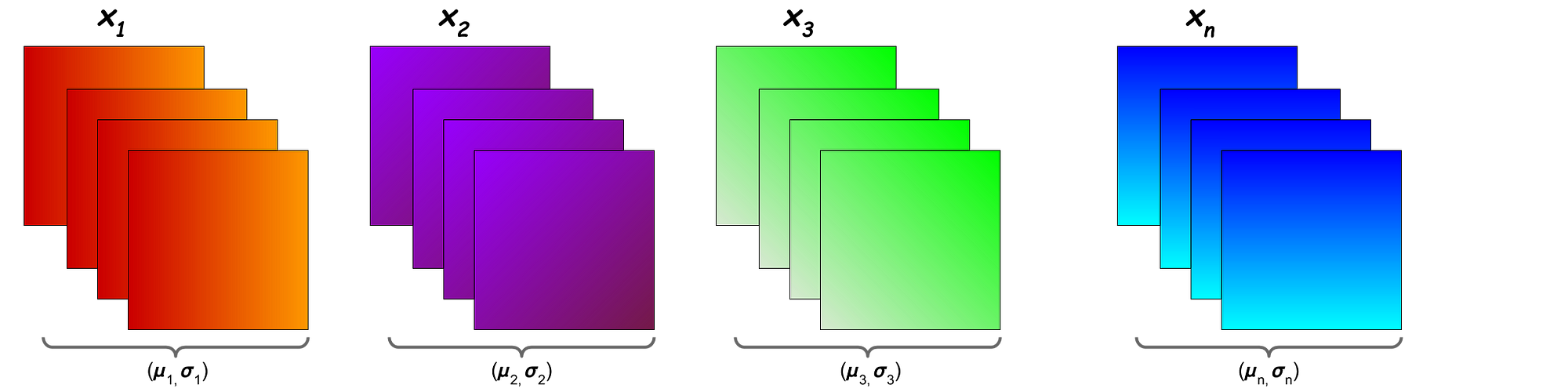

Let us establish some notation that will make the rest of the content easy to follow. We assume that the activations at any layer have dimensions N×C×H×W (and, of course, are real-valued), where N = batch size, C = number of channels (filters) in that layer, H = height of each activation map, and W = width of each activation map.

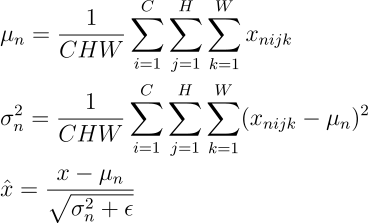

Generally, normalizing activations requires subtracting the mean and dividing by the standard deviation. Batch Normalization, Instance Normalization and Layer Normalization differ in which elements these statistics are calculated over.
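Written out, all three techniques apply the same formula and differ only in the set of elements S that the statistics are averaged over. This is a sketch in my own notation: the set S and the small constant ε (added for numerical stability) are not part of the original post.

```latex
\mu = \frac{1}{|S|}\sum_{i \in S} x_i, \qquad
\sigma^2 = \frac{1}{|S|}\sum_{i \in S} (x_i - \mu)^2, \qquad
\hat{x}_i = \frac{x_i - \mu}{\sqrt{\sigma^2 + \epsilon}}
```

For an activation at position (n, c, h, w), S ranges over all (n', c, h', w') for Batch Normalization, over (n, c, h', w') for Instance Normalization, and over (n, c', h', w') for Layer Normalization.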

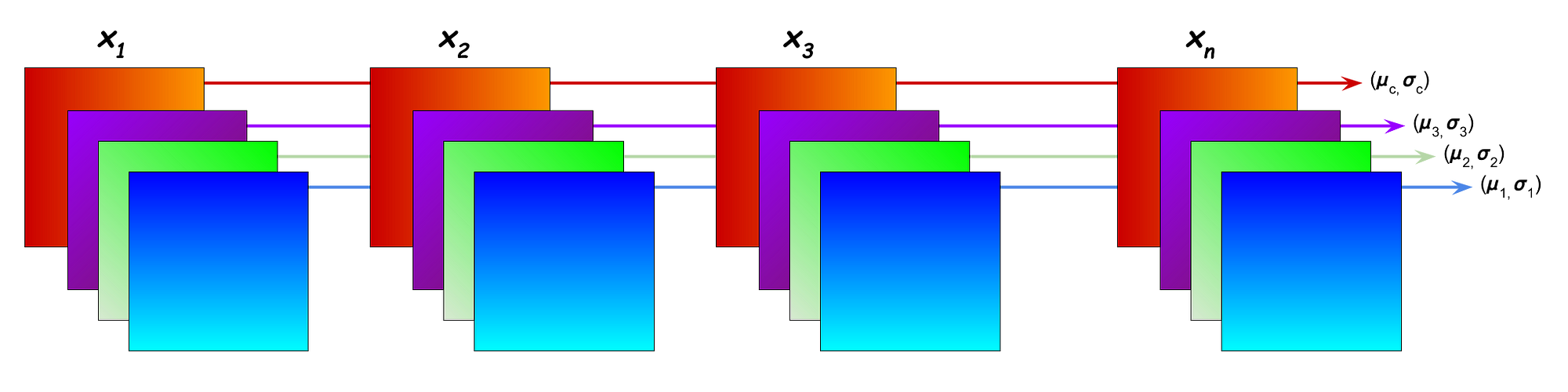

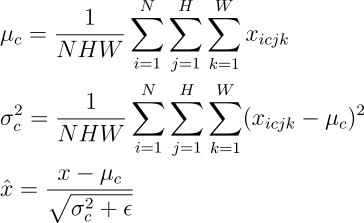

Batch Normalization

In “Batch Normalization”, mean and variance are calculated for each individual channel across all samples and both spatial dimensions.
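A minimal NumPy sketch of the Batch Normalization statistics, assuming an N×C×H×W array as in the notation above. The function name `batch_norm` and the constant `eps` are my own illustrative choices, and the learnable scale and shift, as well as the running averages used at inference time, are deliberately left out.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalize an N x C x H x W array using per-channel statistics.

    Mean and variance are taken over the batch axis (0) and both spatial
    axes (2, 3), so each channel gets one mean and one variance shared by
    every sample in the batch. No learnable scale/shift is applied.
    """
    mean = x.mean(axis=(0, 2, 3), keepdims=True)  # shape (1, C, 1, 1)
    var = x.var(axis=(0, 2, 3), keepdims=True)    # biased variance, shape (1, C, 1, 1)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 3, 5, 5))  # N=4, C=3, H=W=5
y = batch_norm(x)
print(y.mean(axis=(0, 2, 3)))  # approximately 0 for every channel
print(y.std(axis=(0, 2, 3)))   # approximately 1 for every channel
```

Reducing over axes (0, 2, 3) leaves one statistic per channel, so each channel of `y` has roughly zero mean and unit standard deviation across the whole batch, while an individual sample on its own need not.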

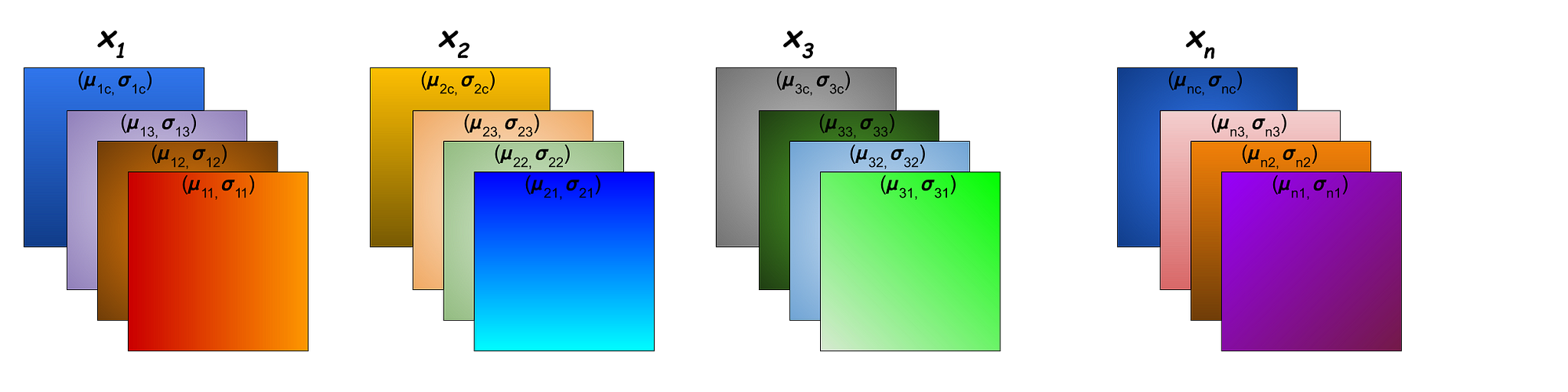

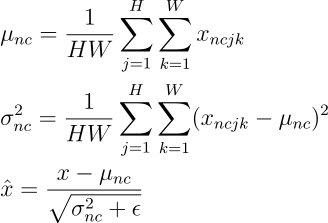

Instance Normalization

In “Instance Normalization”, mean and variance are calculated for each individual channel for each individual sample across both spatial dimensions.
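The same kind of NumPy sketch for Instance Normalization, again assuming an N×C×H×W array; `instance_norm` and `eps` are illustrative names of my own, and no learnable affine parameters are included. The only change from the batch version is that the batch axis is dropped from the reduction.

```python
import numpy as np

def instance_norm(x, eps=1e-5):
    """Normalize an N x C x H x W array per sample and per channel.

    Mean and variance are taken over the spatial axes (2, 3) only,
    giving N x C separate means and variances.
    """
    mean = x.mean(axis=(2, 3), keepdims=True)  # shape (N, C, 1, 1)
    var = x.var(axis=(2, 3), keepdims=True)    # biased variance, shape (N, C, 1, 1)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(1)
x = rng.normal(loc=1.0, scale=5.0, size=(2, 3, 4, 4))  # N=2, C=3, H=W=4
y = instance_norm(x)
print(y.mean(axis=(2, 3)))  # approximately 0 for every (sample, channel) pair
print(y.std(axis=(2, 3)))   # approximately 1 for every (sample, channel) pair
```

Because the batch axis is excluded, each sample is normalized independently of the other samples in the batch.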

Layer Normalization

In “Layer Normalization”, mean and variance are calculated for each individual sample across all channels and both spatial dimensions.
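A matching NumPy sketch for Layer Normalization on an N×C×H×W array; `layer_norm` and `eps` are illustrative names of my own, and the learnable elementwise scale and shift are omitted. Here everything except the batch axis is reduced.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize an N x C x H x W array per sample.

    Mean and variance are taken over all channels and both spatial axes
    (1, 2, 3), giving one mean and one variance per sample.
    """
    mean = x.mean(axis=(1, 2, 3), keepdims=True)  # shape (N, 1, 1, 1)
    var = x.var(axis=(1, 2, 3), keepdims=True)    # biased variance, shape (N, 1, 1, 1)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(2)
x = rng.normal(loc=-2.0, scale=3.0, size=(2, 3, 4, 4))  # N=2, C=3, H=W=4
y = layer_norm(x)
print(y.mean(axis=(1, 2, 3)))  # approximately 0 for each sample
print(y.std(axis=(1, 2, 3)))   # approximately 1 for each sample
```

Every sample gets a single mean and variance shared by all of its channels. If PyTorch is available, `torch.nn.LayerNorm([C, H, W])` normalizes over the same last three dimensions (and, by default, also learns an elementwise affine transform).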

I firmly believe that pictures speak louder than words, and I hope this post brings out the subtle distinctions between several popular normalization techniques.

posted on 2021-12-14 15:20 Sanny.Liu-CV&&ML

Zhejiang Public Network Security Filing No. 33010602011771
