Deep Multi-instance Learning with Dynamic Pooling: Reproduction and Reflections

The reproduction reaches an accuracy of 0.98.

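Hypothesis: a positive bag may contain more than one positive instance. If the network can take all of these instances into account during training, accuracy should improve further.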

The paper uses dynamic pooling to obtain this improvement.

A lesson from training: when the network has many layers and the learning rate is small, the loss changes slowly.

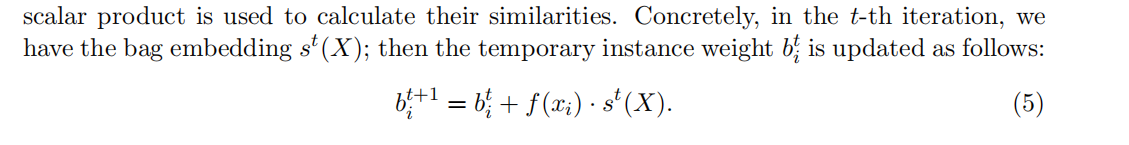

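Generating b iteratively lets the most important instance vectors contribute as much as possible: with each iteration, the b of an important vector grows larger.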
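The iterative update of b can be traced by hand with a dependency-free sketch. It mirrors the update used in the reproduction code below (softmax weights, weighted sum, squashing, then adding the softmax of the agreement scores to b), but the helper names `softmax`, `squash`, `weighted_sum`, the returned `history`, and the toy bag are all assumptions introduced here for illustration, not part of the paper or the original script.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def squash(v):
    """Rescale v so that its norm becomes ||v||^2 / (1 + ||v||^2)."""
    norm = math.sqrt(sum(x * x for x in v))
    scale = norm / (1.0 + norm * norm)
    return [x * scale for x in v]


def weighted_sum(vectors, weights):
    """Sum of the instance vectors, each multiplied by its weight."""
    dim = len(vectors[0])
    return [sum(w * vec[k] for vec, w in zip(vectors, weights)) for k in range(dim)]


def dynamic_pooling(vectors, iterations=3):
    """Return the bag vector s and the value of b after every iteration."""
    b = [0.0] * len(vectors)  # one logit per instance, all equal at the start
    history = []
    s = squash(weighted_sum(vectors, softmax(b)))
    for _ in range(iterations):
        # Agreement between each instance vector and the current bag vector.
        agreement = [sum(x * y for x, y in zip(vec, s)) for vec in vectors]
        b = [bi + ai for bi, ai in zip(b, softmax(agreement))]
        history.append(list(b))
        s = squash(weighted_sum(vectors, softmax(b)))
    return s, history


# Hypothetical toy bag: one dominant instance vector and two small ones.
toy_bag = [[3.0, 3.0], [0.1, 0.2], [0.2, 0.1]]
s, history = dynamic_pooling(toy_bag)
for step, b in enumerate(history, start=1):
    print(step, [round(x, 3) for x in b])
```

In this toy bag the first vector agrees most with the pooled vector, so its entry in b pulls further ahead of the others at every step, which is the behaviour described above.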

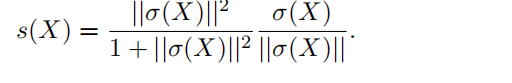

For s, the norm is small (close to 0) for negative bags and large for positive bags.
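Written out, the expression for s in the reproduction code below is a squashing of the weighted sum of instance vectors. The symbols $v$, $c_i$ and $y_i$ are notation assumed here for illustration: $y_i$ is the network output for instance $i$, and $c = \mathrm{softmax}(b)$.

$$
v = \sum_i c_i\, y_i, \qquad
s = \frac{\lVert v \rVert^2}{1 + \lVert v \rVert^2} \cdot \frac{v}{\lVert v \rVert}, \qquad
\lVert s \rVert = \frac{\lVert v \rVert^2}{1 + \lVert v \rVert^2} \in [0, 1)
$$

For example, $\lVert v \rVert = 0.1$ gives $\lVert s \rVert = 0.01 / 1.01 \approx 0.0099$, while $\lVert v \rVert = 3$ gives $\lVert s \rVert = 9 / 10 = 0.9$. The norm of s can therefore be read as a score between 0 and 1 for the bag.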

Reproduction code:

from scipy.io import loadmat
import numpy as np
import torch
from torch import nn
import torch.optim as optim

# Instance encoder: maps each 166-dimensional Musk instance to a 64-dimensional vector.
network = nn.Sequential(
    nn.Linear(166, 256), nn.Sigmoid(),
    nn.Linear(256, 128), nn.Sigmoid(),
    nn.Linear(128, 64), nn.Sigmoid(),
)
# network = nn.Sequential(nn.BatchNorm1d(166), nn.Linear(166, 2), nn.Softmax(dim=1))
optimizer = optim.Adam(network.parameters(), lr=0.004)


def load_bags(path):
    """Load a Musk .mat file as a list of (instances, 166) tensors plus bag labels."""
    mat = loadmat(path)
    bags, labels = [], []
    for key, label in (("pos_bag", 1), ("neg_bag", 0)):
        for row in mat[key]:
            for bag in row:
                # Transposed as in the original script, so that rows are instances.
                bags.append(torch.from_numpy(np.ascontiguousarray(bag.T, dtype=np.float32)))
                labels.append(label)
    return bags, labels


# Musk2 is used for training and Musk1 for validation.
train_bags, train_labels = load_bags(r"musk_2_original.mat")
val_bags, val_labels = load_bags(r"musk_1_original.mat")


def squash(v):
    """Rescale v so that its norm becomes ||v||^2 / (1 + ||v||^2), which lies in [0, 1)."""
    norm = torch.norm(v)
    return v * norm / (1 + norm ** 2)


def dynamic_pooling(y, iterations=3):
    """Pool the instance vectors y (instances, 64) into one bag vector s (64,)."""
    b = torch.zeros(len(y), 1)  # one logit per instance, all equal at the start
    s = squash(torch.sum(y * torch.softmax(b, dim=0), dim=0))
    for _ in range(iterations):
        # Instances that agree with the current bag vector receive a larger b.
        b = b + torch.softmax(torch.matmul(y, s), dim=0).reshape(-1, 1)
        s = squash(torch.sum(y * torch.softmax(b, dim=0), dim=0))
    return s


threshold = 0.5  # bags with ||s|| above this value are predicted positive

for epoch in range(500000):
    network.train()
    loss = 0
    train_pos = train_neg = 0  # correctly classified positive / negative training bags
    for bag, label in zip(train_bags, train_labels):
        norm_s = torch.norm(dynamic_pooling(network(bag)))
        # Push ||s|| towards 1 for positive bags and towards 0 for negative bags.
        loss = loss + label * (1 - norm_s) ** 2 + (1 - label) * norm_s ** 2
        if norm_s > threshold and label == 1:
            train_pos += 1
        if norm_s < threshold and label == 0:
            train_neg += 1

    # One full-batch update per epoch, on the loss summed over all training bags.
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    # Validation: no gradients are needed here.
    network.eval()
    val_pos = val_neg = 0
    with torch.no_grad():
        for bag, label in zip(val_bags, val_labels):
            norm_s = torch.norm(dynamic_pooling(network(bag)))
            if norm_s > threshold and label == 1:
                val_pos += 1
            if norm_s < threshold and label == 0:
                val_neg += 1

    print(epoch, loss.item(),
          (train_pos + train_neg) / len(train_bags),
          (val_pos + val_neg) / len(val_bags),
          train_pos, train_neg, val_pos, val_neg)
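To make the per-bag loss and the 0.5 decision threshold from the loop above easier to check by hand, here is a small stand-alone sketch. The function names `bag_loss` and `predict` are hypothetical, and treating a norm exactly equal to the threshold as negative is an assumption made here (the script above counts such a bag as neither correct positive nor correct negative).

```python
def bag_loss(norm_s, label):
    """Squared distance of ||s|| from 1 (positive bag) or from 0 (negative bag)."""
    return label * (1.0 - norm_s) ** 2 + (1 - label) * norm_s ** 2


def predict(norm_s, threshold=0.5):
    """Predict 1 (positive bag) when ||s|| exceeds the threshold, else 0."""
    return 1 if norm_s > threshold else 0


# A bag whose pooled vector has norm 0.9 is cheap if it is positive, costly if negative.
for label in (1, 0):
    print(label, predict(0.9), round(bag_loss(0.9, label), 4))
```

The loss is small only when the norm of s lands on the correct side, which is why the norm itself can serve as the bag score at validation time.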
