Beijing Mailbox Practice

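- Collect the letters that Beijing residents send to the municipal government;

- Write a MapReduce program to clean the letter data;

- Analyze the letter data offline with Hive SQL statements;

- Export the Hive analysis results to a MySQL database with Sqoop;

- Build a JavaWeb + ECharts front end that displays the letter data as charts.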

1. Collection

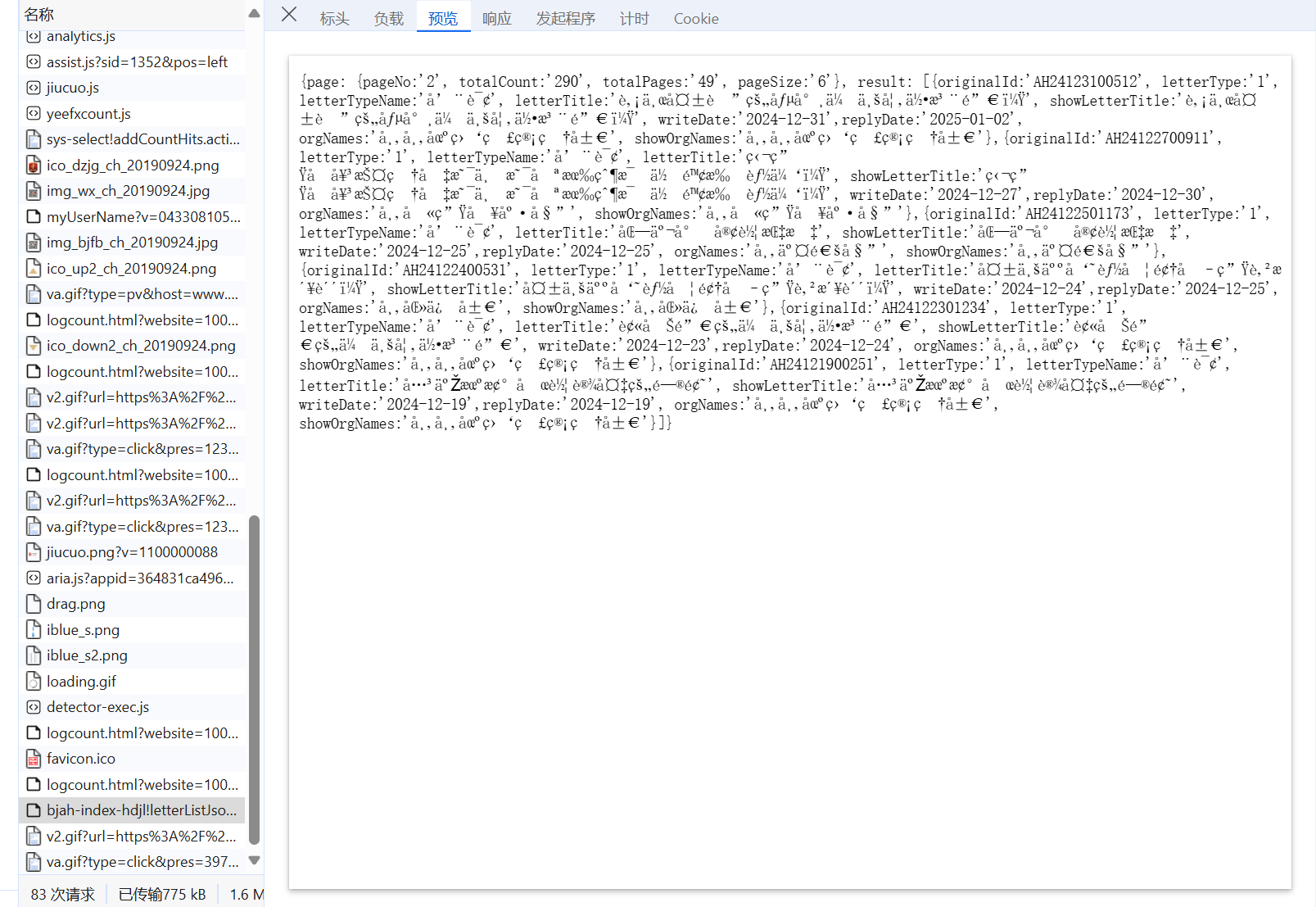

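Today I mainly finished the collection task. At first I crawled slowly, using XPath to work out what needed to be scraped and pulling out exactly the elements I wanted, which was really tedious. So I decided to look at how the page sends its requests. After opening the Network tab in the browser's developer tools, I did find the requests it makes: when I clicked through to page 2, several new requests appeared. I clicked the one I suspected most, and got it right on the first try!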
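The request found in the Network tab is a POST to `letterListJson.action`, with paging controlled by `page.pageNo` and `page.pageSize`. As a minimal sketch, the list URL for any page can be assembled with `urllib.parse.urlencode` instead of a hand-written f-string. The parameter values are copied from the captured request; `build_list_url` and `LIST_ENDPOINT` are hypothetical names.

```python
from urllib.parse import urlencode

# Endpoint copied from the request captured in the browser's Network tab
LIST_ENDPOINT = ('https://www.beijing.gov.cn/hudong/hdjl/sindex/'
                 'bjah-index-hdjl!letterListJson.action')


def build_list_url(page: int, page_size: int = 6) -> str:
    """Return the list-API URL for one page of letters (hypothetical helper)."""
    params = {
        'keyword': '',
        'startDate': '',
        'endDate': '',
        'letterType': 0,
        'page.pageNo': page,          # which page to fetch
        'page.pageSize': page_size,   # letters per page (6 in the captured request)
        'orgtitleLength': 26,
    }
    return f'{LIST_ENDPOINT}?{urlencode(params)}'


if __name__ == '__main__':
    print(build_list_url(2))
```

Whether the server honors a larger `page_size` (and so needs fewer requests) is an untested assumption.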

Opening its Preview showed exactly the data I needed (although the text was garbled). Finding this made things much easier.

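Of course, the data can't be used as-is: the endpoint returns plain text rather than JSON, so I have to convert it to JSON, and a few other steps are involved as well.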
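Judging from `fix_json_string()` in the crawler, what keeps the text from being JSON is single-quoted strings and unquoted keys. A minimal sketch of that conversion, assuming the response looks like a JavaScript object literal; the sample string is invented for illustration and is not an actual server response. The lookbehind `(?<![\w"])` rejects word characters as well as quotes, so a word inside a value such as `http://...` is never partially quoted.

```python
import json
import re


def fix_json_string(text: str) -> str:
    # Turn single-quoted strings into double-quoted ones
    # (this also corrupts any apostrophe inside a value)
    text = text.replace("'", '"')
    # Quote bare keys: a run of word characters that is not preceded by a
    # word character or a quote and is directly followed by ':'
    return re.sub(r'(?<![\w"])(\w+)(?=:)', r'"\1"', text)


# Hypothetical sample in the shape the list endpoint is assumed to return
sample = "{result:[{originalId:'AH24081800001',letterType:'1',letterTitle:'示例标题'}]}"
data = json.loads(fix_json_string(sample))
print(data['result'][0]['originalId'])
```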

Here is my crawler code:

```python
# Main crawler file
import datetime
import json
import re
import time

import pandas as pd
import requests
from lxml import etree

# Request headers copied from the browser; shared by the list and detail requests.
# Accept-Encoding is limited to gzip/deflate because requests can only decode
# br/zstd bodies when the optional brotli/zstandard packages are installed.
HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.0.0 Safari/537.36 Edg/127.0.0.0',
    'Accept': '*/*',
    'Accept-Encoding': 'gzip, deflate',
    'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',
    'Connection': 'keep-alive',
    # Session cookie copied from the browser; it expires, so copy a fresh one when needed
    'Cookie': '__jsluid_s=ec8eb5b6ca4daa68fa1ac38cc158ce8e; __jsluid_h=208602875127dce0b0683b617247fac1; bjah7webroute=83fabc8af7a68a44338f4ee9b2831e7d; BJAH7WEB1VSSTIMEID=92E27741C8580E168C1830ADA11DA5F9; arialoadData=false; _va_ref=%5B%22%22%2C%22%22%2C1723984565%2C%22https%3A%2F%2Fcn.bing.com%2F%22%5D; _va_ses=*; JSESSIONID=MGJkODhhOWQtNzUwNC00YWMzLWEwM2QtMTE0NGY0NGU4MzEx; _va_id=77a4f3b85c9314e9.1721094882.6.1723985122.1723984565.',
    'Host': 'www.beijing.gov.cn',
    'Origin': 'https://www.beijing.gov.cn',
    'Referer': 'https://www.beijing.gov.cn/hudong/hdjl/sindex/hdjl-xjxd.html',
    'Sec-CH-UA': '"Not)A;Brand";v="99", "Microsoft Edge";v="127", "Chromium";v="127"',
    'Sec-CH-UA-Mobile': '?0',
    'Sec-CH-UA-Platform': '"Windows"',
    'Sec-Fetch-Dest': 'empty',
    'Sec-Fetch-Mode': 'cors',
    'Sec-Fetch-Site': 'same-origin',
    'X-Requested-With': 'XMLHttpRequest',
}


def fix_json_string(json_str):
    """Turn the JavaScript-style object literal returned by the list API into valid JSON."""
    # Replace single quotes with double quotes
    json_str = json_str.replace("'", '"')
    # Wrap bare key names in double quotes; the lookbehind also rejects word
    # characters so that a word inside a value is never partially quoted
    json_str = re.sub(r'(?<![\w"])(\w+)(?=:)', r'"\1"', json_str)
    return json_str


def load(pages):
    """Fetch list pages 1..pages and the detail page of every letter on them."""
    all_letters = []
    for page in range(1, pages + 1):  # + 1 so that the last page is included
        print(f"Fetching page {page}...")
        url = f'https://www.beijing.gov.cn/hudong/hdjl/sindex/bjah-index-hdjl!letterListJson.action?keyword=&startDate=&endDate=&letterType=0&page.pageNo={page}&page.pageSize=6&orgtitleLength=26'
        response = requests.post(url, headers=HEADERS, timeout=10)
        # Check the response status code
        if response.status_code == 200:
            try:
                # Fix the response string, then parse it as JSON
                fixed_json_str = fix_json_string(response.text)
                json_response = json.loads(fixed_json_str)
                # Extract the result array
                result = json_response.get('result', [])
                print(result)
                for letter in result:
                    # letterType '1' uses the consult detail page, everything else the suggest page
                    if letter['letterType'] == '1':
                        param, param1 = 'consult', 'consultDetail'
                    else:
                        param, param1 = 'suggest', 'suggestDetail'
                    url1 = f'https://www.beijing.gov.cn/hudong/hdjl/com.web.{param}.{param1}.flow?originalId={letter["originalId"]}'
                    all_letter = contents(letter['originalId'], letter['letterTypeName'],
                                          letter['letterTitle'], letter['writeDate'],
                                          letter['replyDate'], url1)
                    print(all_letter)
                    if all_letter:  # skip letters whose detail page failed to load
                        all_letters.append(all_letter)
                    time.sleep(1)  # pause between detail requests
            except json.JSONDecodeError as e:
                print("JSON parsing failed:", e)
                print("Fixed response text:", fixed_json_str)
        else:
            print(f"Request failed, status code: {response.status_code}")
            print("Response body:", response.text)  # print the body for debugging
    return all_letters


def contents(originalId, letterTypeName, letterTitle, writeDate, replyDate, url):
    """Fetch one letter's detail page and return its fields as a dict ({} on failure)."""
    response = requests.get(url, headers=HEADERS, timeout=10)
    if response.status_code == 200:
        html = etree.HTML(response.text)
        return {
            'originalId': originalId,
            'letterTypeName': letterTypeName,
            'letterTitle': letterTitle,
            'writeDate': writeDate,
            'replyDate': replyDate,
            'href': url,
            # Letter text, from <div class="col-xs-12 col-md-12 column p-2 text-muted text-format mx-2">
            'come_content': ''.join(html.xpath('//*[@class="col-xs-12 col-md-12 column p-2 text-muted text-format mx-2"]/text()')).strip(),
            # Reply text (same classes plus "my-3")
            'replay_content': ''.join(html.xpath('//*[@class="col-xs-12 col-md-12 column p-2 text-muted text-format mx-2 my-3"]/text()')).strip(),
        }
    print(f"Request failed, status code: {response.status_code}")
    print("Response body:", response.text)  # print the body for debugging
    return {}


def showTotal():
    pages = 54
    all_letters = load(pages)
    # Remove line breaks so that each letter stays on a single line of the CSV
    for letter in all_letters:
        if 'replay_content' in letter:
            letter['replay_content'] = letter['replay_content'].replace('\r\n', ' ').replace('\n', ' ').strip()
        if 'come_content' in letter:
            letter['come_content'] = letter['come_content'].replace('\r\n', ' ').replace('\n', ' ').strip()
    df = pd.DataFrame(all_letters)
    try:
        # utf-8-sig writes a BOM so that Excel recognizes the Chinese text correctly
        df.to_csv('letters.csv', encoding='utf-8-sig', index=False, sep='|')
        print("Data exported to letters.csv")
    except PermissionError:
        print("Cannot write letters.csv. Please make sure that:")
        print("1. the file is not open in another program (such as Excel)")
        print("2. the program has write permission")
        print("Close the file and try again")
        # Fall back to a timestamped file name, keeping the same '|' separator
        try:
            timestamp = datetime.datetime.now().strftime("%Y%m%d_%H%M%S")
            new_filename = f'letters_{timestamp}.csv'
            df.to_csv(new_filename, encoding='utf-8-sig', index=False, sep='|')
            print(f"Data exported to new file: {new_filename}")
        except Exception as e:
            print(f"Saving to the new file failed too: {e}")


if __name__ == '__main__':
    # Alternative: export to Excel instead of CSV
    # pages = 54
    # all_letters = load(pages)
    # print(all_letters)
    # df = pd.DataFrame(all_letters)
    # df.to_excel('letters.xlsx', index=False)
    # print("Data exported to letters.xlsx")
    showTotal()
```
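Since the exported file feeds the MapReduce cleaning step, it is worth checking that every letter really ends up on one line and survives a round trip with the same `|` separator. A minimal sketch, assuming pandas is installed; `flatten` is a hypothetical helper mirroring the newline cleanup in `showTotal()`, and the two rows are invented sample data, not scraped letters.

```python
import os
import tempfile

import pandas as pd


def flatten(text: str) -> str:
    """Collapse line breaks the same way showTotal() does, so one letter = one line."""
    return text.replace('\r\n', ' ').replace('\n', ' ').strip()


# Hypothetical rows; in the crawler they come from load()
rows = [
    {'originalId': 'A1', 'come_content': '第一行\r\n第二行', 'replay_content': '已回复\n'},
    {'originalId': 'A2', 'come_content': '问题', 'replay_content': ''},
]
for row in rows:
    row['come_content'] = flatten(row['come_content'])
    row['replay_content'] = flatten(row['replay_content'])

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'letters.csv')
    # Same export options as the crawler
    pd.DataFrame(rows).to_csv(path, encoding='utf-8-sig', index=False, sep='|')
    # Reading with utf-8-sig strips the BOM, so the first header is 'originalId'
    with open(path, encoding='utf-8-sig') as f:
        lines = f.read().splitlines()
    # keep_default_na=False keeps empty replies as '' instead of NaN
    back = pd.read_csv(path, sep='|', encoding='utf-8-sig', dtype=str, keep_default_na=False)

print(len(lines), 'lines written')
```

If a letter's text itself contains `|`, pandas quotes that field, which a MapReduce job that simply splits each line on `|` would not understand; this sketch does not handle that case.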

浙公网安备 33010602011771号
