Monitoring a k8s (k3s) cluster with an external Prometheus

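When Kubernetes is monitored with Prometheus, some real-world environments run Prometheus outside the Kubernetes cluster. In that case a token and a CA file have to be provided in advance so that Prometheus can use service discovery against the API server.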

Create the monitor namespace

$ kubectl create namespace monitor

Deploy gpu_exporter

# gpu_exporter-daemonSet.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  namespace: monitor
  labels:
    app: gpu-exporter
  name: gpu-exporter
spec:
  selector:
    matchLabels:
      app: gpu-exporter
  template:
    metadata:
      labels:
        app: gpu-exporter
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: gpu
                    operator: In
                    values:
                      - "true"
      hostPID: true
      hostIPC: true
      hostNetwork: true
      containers:
        - image: gpu_exporter:latest
          imagePullPolicy: Always
          name: gpu-exporter
          ports:
            - containerPort: 9445
              name: gpu-port
              protocol: TCP
          resources:
            requests:
              cpu: 100m
            limits:
              cpu: 100m
              memory: 200Mi
      restartPolicy: Always
      serviceAccountName: ""
      imagePullSecrets:
        - name: <image-pull-secrets>

Node affinity is used here, so every GPU node must carry the label gpu=true; otherwise the DaemonSet will not schedule a pod on it.
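The label can be added with kubectl label. The following is a minimal sketch, assuming a node called gpu-node-1 (a hypothetical name; real names come from kubectl get nodes). The KUBECTL variable and the two helper functions are illustrative and not part of the original setup; setting KUBECTL=echo previews the commands without touching the cluster.

```shell
# KUBECTL is an illustrative override hook; it defaults to the real kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# label_gpu_node NODE_NAME
# Adds the gpu=true label that the DaemonSet's nodeAffinity matches on.
label_gpu_node() {
  "$KUBECTL" label node "$1" gpu=true --overwrite
}

# list_gpu_nodes
# Shows which nodes currently carry the label.
list_gpu_nodes() {
  "$KUBECTL" get nodes -l gpu=true
}

# Example (gpu-node-1 is a hypothetical node name):
#   label_gpu_node gpu-node-1
#   list_gpu_nodes
```

The --overwrite flag keeps the command idempotent if the node already has a gpu label with a different value.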

$ kubectl apply -f gpu_exporter-daemonSet.yaml

Deploy node-exporter

# node-exporter-daemonset.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  annotations: {}
  labels:
    name: node-exporter
  name: node-exporter
  namespace: monitor
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      creationTimestamp: null
      labels:
        name: node-exporter
    spec:
      containers:
        - args:
            - --path.procfs
            - /host/proc
            - --path.sysfs
            - /host/sys
            - --collector.filesystem.ignored-mount-points
            # Plain regex: inner double quotes would become part of the pattern
            # and prevent it from ever matching.
            - '^/(sys|proc|dev|host|etc)($|/)'
          image: prom/node-exporter:v0.18.1
          imagePullPolicy: IfNotPresent
          name: node-exporter
          ports:
            - containerPort: 9100
              hostPort: 9100
              protocol: TCP
          resources:
            requests:
              cpu: 150m
          securityContext:
            privileged: true
          volumeMounts:
            - mountPath: /host/dev
              name: dev
            - mountPath: /host/proc
              name: proc
            - mountPath: /host/sys
              name: sys
            - mountPath: /rootfs
              name: rootfs
      hostIPC: true
      hostNetwork: true
      hostPID: true
      restartPolicy: Always
      tolerations:
        - effect: NoSchedule
          key: node-role.kubernetes.io/master
          operator: Exists
      volumes:
        - hostPath:
            path: /proc
            type: ""
          name: proc
        - hostPath:
            path: /dev
            type: ""
          name: dev
        - hostPath:
            path: /sys
            type: ""
          name: sys
        - hostPath:
            path: /
            type: ""
          name: rootfs
  updateStrategy:
    type: OnDelete

$ kubectl apply -f node-exporter-daemonset.yaml
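Before moving on, it can help to confirm that both exporters are running and reachable from the machine where Prometheus lives. Both DaemonSets use hostNetwork, so the exporters listen on the node IP (9100 for node-exporter, 9445 for gpu_exporter). This is a sketch only: the helper names and the KUBECTL override are assumptions introduced for illustration, and <node-ip> remains a placeholder.

```shell
# KUBECTL is an illustrative override hook; it defaults to the real kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# exporter_pods LABEL_SELECTOR
# Lists the exporter pods in the monitor namespace together with their nodes.
exporter_pods() {
  "$KUBECTL" get pods -n monitor -l "$1" -o wide
}

# metrics_url HOST PORT
# Builds the URL an external Prometheus would scrape for that exporter.
metrics_url() {
  printf 'http://%s:%s/metrics\n' "$1" "$2"
}

# Example (replace <node-ip> with a real node address):
#   exporter_pods name=node-exporter
#   exporter_pods app=gpu-exporter
#   curl -s "$(metrics_url <node-ip> 9100)" | head
```

If the curl call times out from the Prometheus host, the scrape jobs configured later will fail in the same way, so firewall rules for ports 9100 and 9445 are worth checking at this point.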

Create the RBAC objects that allow Prometheus to access Kubernetes resources

# prom.rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
      - services
      - endpoints
      - pods
      - nodes/proxy
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      # Ingress is served from networking.k8s.io on current clusters.
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - configmaps
      - nodes/metrics
    verbs:
      - get
  - nonResourceURLs:
      - /metrics
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: monitor

$ kubectl apply -f prom.rbac.yaml
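Whether the binding took effect can be checked with kubectl auth can-i while impersonating the ServiceAccount. The sketch below assumes the current kubectl user is allowed to impersonate (cluster admins normally are); the helper names and the KUBECTL override are illustrative only.

```shell
# KUBECTL is an illustrative override hook; it defaults to the real kubectl.
KUBECTL="${KUBECTL:-kubectl}"
# Fully qualified user name of the prometheus ServiceAccount in "monitor".
SA_USER="system:serviceaccount:monitor:prometheus"

# sa_can_list RESOURCE
# Prints "yes" or "no" depending on whether the ServiceAccount may list it.
sa_can_list() {
  "$KUBECTL" auth can-i list "$1" --as="$SA_USER"
}

# check_prometheus_rbac
# Checks the resources that the discovery roles in this article depend on.
check_prometheus_rbac() {
  for resource in nodes services endpoints pods; do
    printf '%s: %s\n' "$resource" "$(sa_can_list "$resource")"
  done
}

# Example:
#   check_prometheus_rbac
```

Any line that reports "no" points to a missing rule in the ClusterRole or a ClusterRoleBinding that references the wrong namespace.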

Get the Secret that belongs to the prometheus ServiceAccount

$ kubectl get sa prometheus -n monitor -o yaml
...
secrets:
- name: prometheus-token-q84tx

$ kubectl describe secret prometheus-token-q84tx -n monitor
Name:         prometheus-token-q84tx
Namespace:    monitor
...
Data
====
namespace:  7 bytes
token:      <token string>
ca.crt:     566 bytes

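The token shown above is the credential Prometheus uses to access the API server. Save it to a text file named k3s.token and place it in the same directory as prometheus.yaml (the jobs below reference it as /etc/prometheus/k3s.token).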
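Instead of copying the token by hand from the describe output, it can be extracted directly. Unlike kubectl describe, kubectl get returns the Secret data base64-encoded, so it has to be decoded. The sketch below assumes GNU base64 and uses the secret name from the output above; the save_sa_token helper and the KUBECTL override are illustrative. Note that on Kubernetes 1.24 and later a token Secret is generally no longer created automatically for a ServiceAccount, so the secrets list may be empty and the Secret may need to be created explicitly first.

```shell
# KUBECTL is an illustrative override hook; it defaults to the real kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# save_sa_token SECRET_NAME [OUTPUT_FILE]
# Reads the base64-encoded token from the Secret in the monitor namespace,
# decodes it, and writes it to OUTPUT_FILE (default: k3s.token).
save_sa_token() {
  secret="$1"
  out="${2:-k3s.token}"
  "$KUBECTL" get secret "$secret" -n monitor -o jsonpath='{.data.token}' \
    | base64 -d > "$out"
}

# Example (secret name taken from the output above):
#   save_sa_token prometheus-token-q84tx /etc/prometheus/k3s.token
```

The file should contain only the token, with no surrounding whitespace or quotes, because Prometheus sends its content as the bearer token.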

Add the Prometheus jobs

Add the following jobs so that Prometheus scrapes the external k8s (k3s) cluster:

# prometheus.yaml (entries under scrape_configs)
  - job_name: 'test-kubernetes-apiservers'
    kubernetes_sd_configs:
      # The valid role name is "endpoints" (plural).
      - role: endpoints
        api_server: https://<apiserver>:6443
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /etc/prometheus/k3s.token
    scheme: https
    tls_config:
      insecure_skip_verify: true
    bearer_token_file: /etc/prometheus/k3s.token
    relabel_configs:
      # Keep only the API server endpoint (default/kubernetes, port "https").
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
      # Scrape through the externally reachable API server address.
      - target_label: __address__
        replacement: <apiserver>:6443

  - job_name: 'test-kubernetes-nodes'
    # node-exporter is scraped over plain HTTP, so no job-level tls_config or
    # bearer token is needed; the token is only used for service discovery.
    scheme: http
    kubernetes_sd_configs:
      - role: node
        api_server: https://<apiserver>:6443
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /etc/prometheus/k3s.token
    relabel_configs:
      # Rewrite the kubelet address (port 10250) to the node-exporter port.
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: '${1}'
        action: replace
        target_label: LOC
      # The next two rules set constant labels; '(.*)' always matches.
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: 'NODE'
        action: replace
        target_label: Type
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: 'K3S-test'
        action: replace
        target_label: Env
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)

  - job_name: 'test-kubernetes-pods'
    kubernetes_sd_configs:
      - role: pod
        api_server: https://<apiserver>:6443
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /etc/prometheus/k3s.token
    relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
      - source_labels: [__meta_kubernetes_pod_label_pod_template_hash]
        regex: '(.*)'
        replacement: 'K3S-test'
        action: replace
        target_label: Env

  - job_name: 'test-kubernetes-gpu-node'
    metrics_path: /metrics
    kubernetes_sd_configs:
      - role: node
        api_server: https://<apiserver>:6443
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /etc/prometheus/k3s.token
    relabel_configs:
      - source_labels: [__meta_kubernetes_node_label_gpu]
        action: keep
        regex: "true"
      - source_labels: [__address__]
        action: replace
        regex: '(.*):10250'
        replacement: '${1}:9445'
        target_label: __address__
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: '${1}'
        action: replace
        target_label: LOC
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: 'GPU'
        action: replace
        target_label: Type
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: 'K3S-test'
        action: replace
        target_label: Env
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)

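Here bearer_token_file points to the token file saved earlier. Certificate verification is disabled with insecure_skip_verify, so the CA file is not referenced in this configuration.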

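Reload Prometheus so the change takes effect (the /-/reload endpoint is only available when Prometheus is started with --web.enable-lifecycle):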

$ curl -X POST --connect-timeout 10 -m 20 http://127.0.0.1:9090/-/reload
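A malformed configuration is rejected on reload, so it can be worth validating the file first with promtool, which ships with Prometheus. The sketch below chains the two steps; the check_and_reload helper and the PROMTOOL and CURL override variables are assumptions made for illustration, and the default URL is the one used in the command above.

```shell
# PROMTOOL and CURL are illustrative override hooks for the real binaries.
PROMTOOL="${PROMTOOL:-promtool}"
CURL="${CURL:-curl}"

# check_and_reload CONFIG_FILE [BASE_URL]
# Validates the configuration and triggers a reload only if validation passes.
check_and_reload() {
  config="$1"
  base_url="${2:-http://127.0.0.1:9090}"
  "$PROMTOOL" check config "$config" || return 1
  "$CURL" -fsS -X POST --connect-timeout 10 -m 20 "$base_url/-/reload"
}

# Example:
#   check_and_reload /etc/prometheus/prometheus.yaml
```

The -f flag makes curl exit with a non-zero status when the server answers with an HTTP error, so a failed reload is visible to the calling script.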

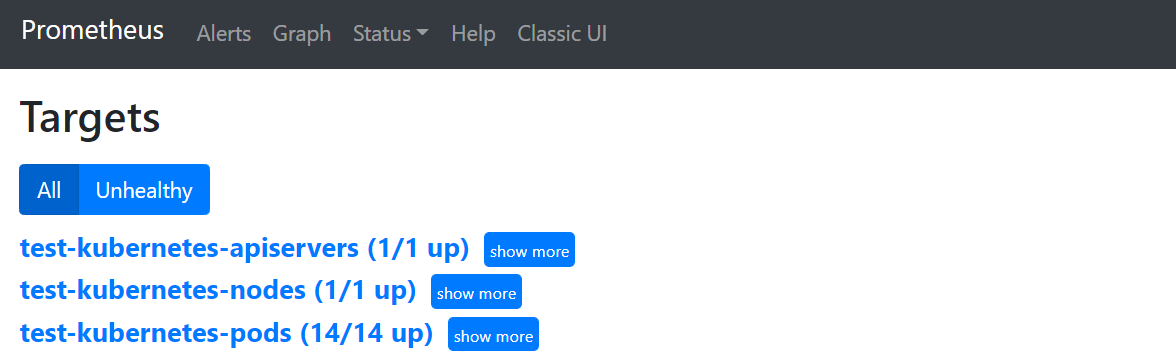

到现在为止,外部prometheus已经将k8s(k3s) 添加到监控列表里面,如下为最终结果
