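How keepalived works, and nginx + keepalived

I. Introduction to keepalived high availability

keepalived is software that works much like layer 3, 4, and 7 switching. It provides two functions: health checking and VRRP (Virtual Router Redundancy Protocol).

keepalived monitors the state of the servers in a pool. If a web server or a MySQL server goes down or malfunctions, keepalived detects this and removes the failed server from the pool; once the server is working again, keepalived adds it back automatically. All of this happens without manual intervention; the only manual work is repairing the failed web or MySQL server. Layer 3, 4, and 7 checks operate at the IP layer, the transport layer, and the application layer of the TCP/IP stack respectively, and work as follows:

layer3: In layer 3 mode, keepalived periodically sends an ICMP packet to each server in the pool. If a server's IP address cannot be pinged, keepalived reports the server as failed and removes it from the cluster. Layer 3 checking uses reachability of the server's IP address as the criterion for whether the server is healthy.

layer4: Layer 4 checking uses the state of a TCP port to decide whether a server is healthy. For example, a web service usually listens on port 80; if keepalived finds that port 80 is not open, it removes that server from the cluster.

layer7: Layer 7 checking works at the application layer. keepalived checks whether the server is running correctly according to user-defined criteria; if the result does not match the user's settings, keepalived removes the server from the cluster.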
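To make the layer 4 idea concrete, here is a minimal sketch of a TCP port probe in bash. It is not how keepalived implements its checkers; `check_tcp` and `pool_action` are hypothetical helper names, and the 2-second timeout is an assumed value.

```shell
#!/bin/bash
# Layer 4 style probe: succeed only if a TCP connection to host:port can be opened.
# check_tcp is a hypothetical helper name, not part of keepalived.
check_tcp() {
    local host=$1 port=$2
    # bash opens a TCP socket when redirecting to /dev/tcp/HOST/PORT;
    # timeout (coreutils) bounds the wait for unreachable hosts (2s is an assumption).
    timeout 2 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Map the probe result to the pool decision described in the text.
pool_action() {
    if check_tcp "$1" "$2"; then
        echo "keep"
    else
        echo "remove"
    fi
}
```

For example, `pool_action 172.25.70.1 80` would print `keep` while port 80 on that host accepts connections and `remove` otherwise.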

II. nginx + keepalived cluster

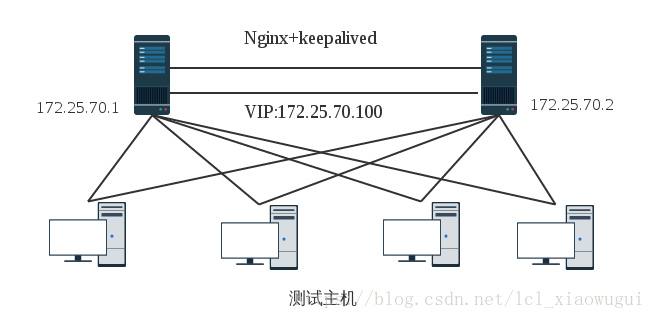

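1. How it works and the environment

An nginx load balancer usually sits at the very front of the architecture or in a middle tier. When it sits at the front, a single nginx instance is a single point of failure: if that one nginx goes down, users lose access to the whole site. Adding a backup nginx server lets the master and the backup form a high-availability pair, so that when the master nginx is found to be down, the site can be switched quickly to the backup server.

Schematic:

Environment:

nginx-1: 172.25.70.1 (master), hostname keep1

nginx-2: 172.25.70.2 (backup), hostname keep2

2. Installation and configuration

(1) Install nginx on both master and backup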

    ## gcc, gcc-c++, openssl, openssl-devel and the other build prerequisites are assumed to be installed already
    [root@keep1 ~]# yum install -y pcre-devel    ## install the Perl-compatible regular expression library
    [root@keep1 ~]# cd nginx-1.12.0
    [root@keep1 nginx-1.12.0]# sed -i -e 's/1.12.0//g' -e 's/nginx\//TDTWS/g' -e 's/"NGINX"/"TDTWS"/g' src/core/nginx.h    ## use sed to change the nginx version string to TDTWS
    [root@keep1 nginx-1.12.0]# ./configure --prefix=/usr/local/nginx --user=www --group=www --with-http_stub_status_module --with-http_ssl_module
    [root@keep1 nginx-1.12.0]# make && make install
    [root@keep1 ~]# vim /usr/local/nginx/conf/nginx.conf
    ## uncomment the "user nobody" line in this file
    [root@keep1 ~]# ln -s /usr/local/nginx/sbin/nginx /sbin/nginx    # create a shortcut for the command
    [root@keep1 ~]# nginx    # no error output means nginx started successfully

(2) Install keepalived on both master and backup

    ## install the dependencies
    [root@keep1 ~]# yum -y install libnl libnl-devel libnfnetlink

One more package, libnfnetlink-devel, is also needed, but the Red Hat 6.5 installation repository does not include it. It can be downloaded from the address below and installed directly with rpm -ivh:

    [root@keep1 ~]# wget ftp://mirror.switch.ch/mirror/centos/6/os/x86_64/Packages/libnfnetlink-devel-1.0.0-1.el6.x86_64.rpm
    [root@keep1 ~]# rpm -ivh libnfnetlink-devel-1.0.0-1.el6.x86_64.rpm

    ## build and install
    [root@keep1 ~]# tar zxf keepalived-1.3.6.tar.gz
    [root@keep1 ~]# cd keepalived-1.3.6
    [root@keep1 keepalived-1.3.6]# ./configure --prefix=/usr/local/keepalived --with-init=SYSV
    [root@keep1 keepalived-1.3.6]# make && make install

    ## create the links needed for startup
    [root@keep1 keepalived-1.3.6]# ln -s /usr/local/keepalived/etc/keepalived /etc/
    [root@keep1 keepalived-1.3.6]# ln -s /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
    [root@keep1 keepalived-1.3.6]# ln -s /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/
    [root@keep1 keepalived-1.3.6]# ln -s /usr/local/keepalived/sbin/keepalived /sbin/
    [root@keep1 keepalived-1.3.6]# chmod +x /usr/local/keepalived/etc/rc.d/init.d/keepalived

(3) Configure keepalived.conf on master and backup

master

    [root@keep1 ~]# vim /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost                  # address that receives health-check notifications
        }
        notification_email_from keepalived@localhost    # sender address
        smtp_server 127.0.0.1               # mail server
        smtp_connect_timeout 30
        router_id LVS_DEVEL
    }

    vrrp_script chk_nginx {
        script "/data/sh/check_nginx.sh"    ## checks whether the local nginx is alive; you write this script yourself, its content is shown below
        interval 2
        weight 2
    }

    # VIP1
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        lvs_sync_daemon_interface eth0
        virtual_router_id 151
        priority 100
        advert_int 5                        # VRRP advertisement interval, in seconds
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.25.70.100                   ## VIP
        }
        track_script {
            chk_nginx
        }
    }

    ## The following script checks whether the local nginx is alive; if it is not, keepalived is stopped so that the service fails over
    [root@keep1 ~]# vim /data/sh/check_nginx.sh
    #!/bin/bash
    # killall -0 sends no signal; it only tests whether an nginx process exists
    killall -0 nginx
    if [[ $? -ne 0 ]]; then
        /etc/init.d/keepalived stop
    fi

    ## create an HTML page for nginx to serve
    [root@keep1 ~]# vim /usr/local/nginx/html/index.html
    <h1>172.25.70.1</h1>

Restart nginx.

backup

    ## the backup's keepalived configuration differs from the master's only in priority
    [root@keep2 ~]# vim /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost                  # address that receives health-check notifications
        }
        notification_email_from keepalived@localhost    # sender address
        smtp_server 127.0.0.1               # mail server
        smtp_connect_timeout 30
        router_id LVS_DEVEL
    }

    vrrp_script chk_nginx {
        script "/data/sh/check_nginx.sh"    ## checks whether the local nginx is alive; same script as on the master
        interval 2
        weight 2
    }

    # VIP1
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        lvs_sync_daemon_interface eth0
        virtual_router_id 151
        priority 90
        advert_int 5                        # VRRP advertisement interval, in seconds
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.25.70.100                   ## VIP
        }
        track_script {
            chk_nginx
        }
    }

    ## /data/sh/check_nginx.sh is identical on backup and master, so it is not repeated here

    ## create an HTML page for nginx to serve
    [root@keep2 ~]# vim /usr/local/nginx/html/index.html
    <h1>172.25.70.2</h1>

Restart nginx.
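It may help to see why the check script stops keepalived instead of relying on `weight 2` alone. The sketch below is an arithmetic illustration, not keepalived code: it assumes the documented behaviour that a positive weight is added to the priority while the tracked script succeeds and a negative weight is subtracted while it fails. `effective_priority` is a hypothetical helper name, and the `-20` weight is an invented example value.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT CHECK_OK
# Sketch of how a tracked vrrp_script with a weight adjusts VRRP priority:
#   positive weight: added while the check succeeds (CHECK_OK=1)
#   negative weight: applied (priority lowered) while the check fails (CHECK_OK=0)
effective_priority() {
    local base=$1 weight=$2 ok=$3
    if [ "$weight" -gt 0 ] && [ "$ok" = 1 ]; then
        echo $((base + weight))
    elif [ "$weight" -lt 0 ] && [ "$ok" = 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# keep1 (priority 100) with nginx down, keep2 (priority 90) with nginx up, weight 2
echo "keep1: $(effective_priority 100 2 0)"
echo "keep2: $(effective_priority 90 2 1)"
```

With the values from the two configuration files, keep1 stays at 100 and keep2 only reaches 92, so the weight by itself would never move the VIP; stopping keepalived in the check script is what forces the switch. A negative weight larger than the priority gap (for example `effective_priority 100 -20 0` gives 80) would be one alternative design.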

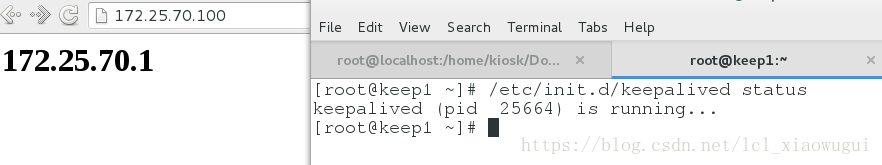

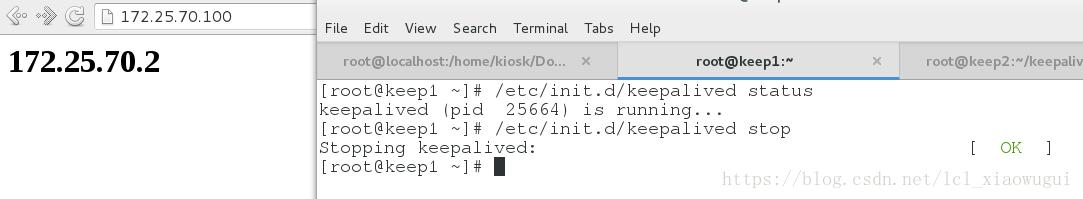

3. Testing

1. With nginx and keepalived running normally on both hosts, browsing to the virtual IP 172.25.70.100 should return the nginx page from keep1.

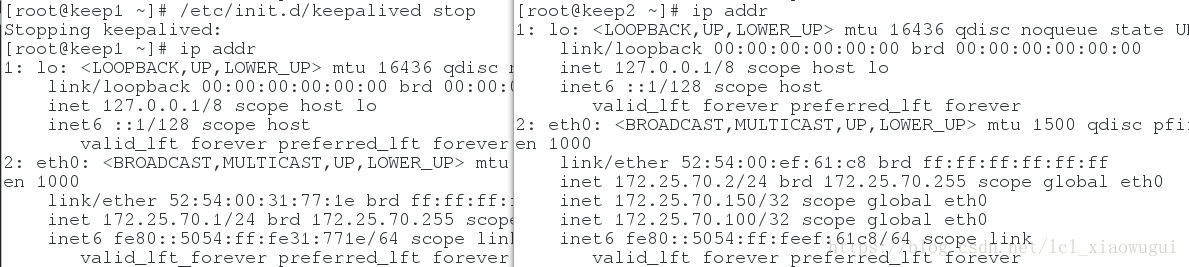

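2. Stop keepalived on keep1, then browse to the virtual IP again to check that failover works.

In a real deployment both nginx servers serve identical pages, so when the master nginx goes down the site switches quickly to the backup server.

Getting the result shown in the figure means the experiment succeeded.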

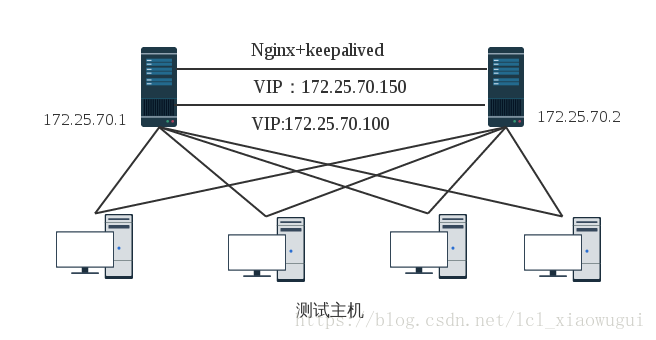

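III. nginx + keepalived dual-master architecture

In nginx + keepalived master/backup mode, one server always sits idle. To make better use of the servers, they can be set up in dual-master mode: each server is the backup for the other and, at the same time, the master for its own VIP. Both servers then serve user requests simultaneously.

Schematic:

Environment:

keep1: 172.25.70.1

keep2: 172.25.70.2

VIP1: 172.25.70.100, master keep1, backup keep2

VIP2: 172.25.70.150, master keep2, backup keep1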

2. Configuration files

(1) keepalived.conf on keep1

This follows the same pattern as the cluster above, so the comments are omitted here. Note that keepalived honors nopreempt only on an instance whose initial state is BACKUP, so it has no effect on the MASTER instances. The keepalived.conf on keep1 is as follows:

    [root@keep1 ~]# vim /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
    }

    vrrp_script chk_nginx {
        script "/data/sh/check_nginx.sh"
        interval 2
        weight 2
    }

    # VIP1
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        lvs_sync_daemon_interface eth0
        virtual_router_id 151
        priority 100
        advert_int 5
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.25.70.100
        }
        track_script {
            chk_nginx
        }
    }

    # VIP2
    vrrp_instance VI_2 {
        state BACKUP
        interface eth0
        lvs_sync_daemon_interface eth0
        virtual_router_id 152
        priority 90
        advert_int 5
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 2222
        }
        virtual_ipaddress {
            172.25.70.150
        }
        track_script {
            chk_nginx
        }
    }

(2) keepalived.conf on keep2

The keepalived.conf on keep2 is as follows:

    [root@keep2 ~]# vim /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
    }

    vrrp_script chk_nginx {
        script "/data/sh/check_nginx.sh"
        interval 2
        weight 2
    }

    # VIP1
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        lvs_sync_daemon_interface eth0
        virtual_router_id 151
        priority 90
        advert_int 5
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.25.70.100
        }
        track_script {
            chk_nginx
        }
    }

    # VIP2
    vrrp_instance VI_2 {
        state MASTER
        interface eth0
        lvs_sync_daemon_interface eth0
        virtual_router_id 152
        priority 100
        advert_int 5
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 2222
        }
        virtual_ipaddress {
            172.25.70.150
        }
        track_script {
            chk_nginx
        }
    }

Restart the services once the configuration is complete.

(3) The check script on both servers is the same as in the cluster experiment

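3. Testing

1. Under normal conditions, each of the two VIPs sits on the host that is its master, as shown in the figure.

2. When one of the master servers goes down, the backup for that server's VIP takes over, so both VIPs end up on the same host.

At that point the dual-master experiment has succeeded.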
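Checking where each VIP currently lives can be scripted rather than read off by eye. The sketch below assumes the output format of `ip addr` (an `inet ADDRESS/PREFIX` line per address); `holds_vip` is a hypothetical helper name.

```shell
#!/bin/bash
# holds_vip VIP: read `ip addr` style output on stdin and succeed
# if an "inet VIP/..." line is present.
holds_vip() {
    local vip=$1
    # -F matches the string literally, so the dots are not regex wildcards;
    # the trailing "/" keeps 172.25.70.10 from matching 172.25.70.100.
    grep -qF "inet ${vip}/"
}
```

On keep1, for instance, `ip addr show eth0 | holds_vip 172.25.70.100 && echo "VIP1 is on this host"` would report whether VIP1 is bound locally; running the same check for 172.25.70.150 after stopping keepalived on keep2 should then succeed on keep1 as well.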

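4. Management and maintenance

Day-to-day maintenance and management of an nginx + keepalived dual-master setup involves the following points:

Each VRRP instance in the keepalived configuration file must have its own name (and its own virtual_router_id), and the priorities and VIPs must differ as well.

Two servers are used in this setup, so the site's total traffic is the sum of the requests on both servers; a script can be used to total them.

Both nginx servers are masters and there are two VIPs. For users on the public network to reach them, the domain name must be mapped to both VIPs, which can be done with public DNS records pointing to each VIP.

Monitoring such as zabbix can be used to check in real time that the VIPs are reachable.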
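As a rough sketch of the traffic-totalling script mentioned above: the function below sums the line counts of several access logs, treating one log line as one request. `total_requests` is a hypothetical name, and the example file names are assumptions (copies of `/usr/local/nginx/logs/access.log` fetched from each host).

```shell
#!/bin/bash
# total_requests FILE...: sum the line counts of the given access logs,
# treating one log line as one request.
total_requests() {
    local total=0 n f
    for f in "$@"; do
        n=$(wc -l < "$f")          # number of lines in this log
        total=$((total + n))
    done
    echo "$total"
}
```

Usage might look like `total_requests keep1.access.log keep2.access.log`, after copying each server's log to one machine under those (assumed) names.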

Original link: https://blog.csdn.net/lcl_xiaowugui/java/article/details/81712925

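nginx + keepalived high availability

Load balancing is critical for a website's web server cluster, especially for a large site. A well-designed load-balancing architecture provides failover and high availability, avoids single points of failure, and keeps the site running continuously.

As the business grows, site traffic keeps increasing and the load gets higher and higher. nginx load balancing now needs to be placed in front of the web tier, combined with keepalived to make the front-end nginx highly available.

About nginx and keepalived

1. Nginx

Nginx is a powerful, high-performance web and reverse proxy server with many excellent features:

Nginx as a load balancer: Nginx can serve Rails and PHP applications directly, and it can also act as an HTTP proxy server. Nginx is written in C, and both its system resource overhead and its CPU efficiency are much better than Perlbal's.

2. keepalived

Keepalived is high-availability software for Linux that implements VRRP-based router redundancy. A service built on Keepalived can hand the IP over between master and backup almost instantly when one of them fails. Combined with nginx, it makes for a fairly stable software load-balancing solution.

There are two ways to build nginx + keepalived high availability:

1. Nginx + keepalived master/backup configuration

This scheme uses one VIP and two front-end machines, one master and one backup. Only one machine works at a time; as long as the master does not fail, the backup sits idle, so for a site with few servers this scheme is not cost-effective.

2. Nginx + keepalived dual-master configuration

This scheme uses two VIPs and two front-end machines that back each other up, with both machines working at the same time. When one machine fails, the requests for both are handled by the remaining machine. This suits the current architecture well, so the dual-master configuration is described in detail here.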

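I. Topology

II. Test environment

OS: CentOS 7.4 64-bit

CentOS 6.9 64-bit

Front-end server node1: DIP: 192.168.92.136

VIP1 (master): 192.168.92.23

VIP2 (backup): 192.168.92.24

Front-end server node2: DIP: 192.168.92.133

VIP1 (master): 192.168.92.24

VIP2 (backup): 192.168.92.23

Backend servers: web node3: 192.168.92.123

web node4: 192.168.92.124

web node5: 192.168.92.125

Before starting, turn off the firewall and SELinux; they are a frequent cause of servers being unable to reach each other.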
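For the CentOS 7.4 hosts, that step could look like the sketch below. It defaults to a dry run that only prints the commands; `run` and `disable_firewall_and_selinux` are hypothetical helper names, and `DRY_RUN` is an assumed switch introduced for this example.

```shell
#!/bin/bash
# run: print the command in dry-run mode (the default here), execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Commands for a CentOS 7 host; run as root with DRY_RUN=0 to actually apply them.
disable_firewall_and_selinux() {
    run systemctl stop firewalld       # stop the firewall now
    run systemctl disable firewalld    # keep it off after a reboot
    run setenforce 0                   # SELinux permissive until the next reboot
}

disable_firewall_and_selinux
```

On the CentOS 6.9 hosts the firewall service is iptables, so `service iptables stop` would take the place of the two `systemctl` calls. Outside a lab, allowing VRRP traffic through the firewall is usually preferable to switching it off.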

III. Software installation

Installing nginx and keepalived is straightforward; both can be installed directly with yum.

yum install keepalived nginx -y

Install nginx with yum on the backend servers as well.

Backend node3

[root@node3 ~]# yum -y install nginx

[root@node3 ~]# echo "this is 192.168.92.123" > /usr/share/nginx/html/index.html

[root@node3 ~]# service nginx start

[root@node3 ~]# curl 192.168.92.123

this is 192.168.92.123

Backend node4

[root@node4 ~]# yum -y install nginx

[root@node4 ~]# echo "this is 192.168.92.124" > /usr/share/nginx/html/index.html

[root@node4 ~]# service nginx start

[root@node4 ~]# curl 192.168.92.124

this is 192.168.92.124

Backend node5

[root@node5 ~]# yum -y install nginx

[root@node5 ~]# echo "this is 192.168.92.125" > /usr/share/nginx/html/index.html

[root@node5 ~]# service nginx start

[root@node5 ~]# curl 192.168.92.125

this is 192.168.92.125

IV. Configure nginx on node1 and node2

[root@node2 ~]# vim /etc/nginx/conf.d/node2.conf    # when configuring under conf.d, comment out the server block in the main configuration file

upstream web1 {

#ip_hash;    # uncomment to pin each client IP to one backend by hash

server 192.168.92.123:80;

server 192.168.92.124:80;

server 192.168.92.125:80;

}

server {

listen 80;

server_name www.node.com;

index index.html index.htm;

location / {

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

proxy_pass http://web1;

}

}

V. Configure keepalived on node1

[root@node1 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node1

vrrp_mcast_group4 224.0.100.23

}

vrrp_script chk_nginx {

script "/etc/keepalived/chk_nginx.sh"

interval 2

weight 2

}

vrrp_instance VI_1 {

state MASTER

interface ens37

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 111123

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.92.23

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens37

virtual_router_id 151

priority 98

advert_int 1

authentication {

auth_type PASS

auth_pass 123123

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.92.24

}

}

VI. Configure keepalived on node2

[root@node2 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node2

vrrp_mcast_group4 224.0.100.23

}

vrrp_script chk_nginx {

script "/etc/keepalived/chk_nginx.sh"

interval 2

weight 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens34

virtual_router_id 51

priority 98

advert_int 1

authentication {

auth_type PASS

auth_pass 111123

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.92.23

}

}

vrrp_instance VI_2 {

state MASTER

interface ens34

virtual_router_id 151

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123123

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.92.24

}

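}

VII. Add the check script on both dual-master servers

The script checks whether nginx is running and starts it if it is not.

If the start fails, it stops keepalived so that the other server takes over and service continues.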

[root@node2 ~]# cat /etc/keepalived/chk_nginx.sh

#!/bin/bash

status=$(ps -C nginx --no-heading|wc -l)

if [ "${status}" = "0" ]; then

systemctl start nginx

status2=$(ps -C nginx --no-heading|wc -l)

if [ "${status2}" = "0" ]; then

systemctl stop keepalived

fi

fi

VIII. Start the nginx and keepalived services

[root@node2 ~]# service nginx start

[root@node2 ~]# service keepalived start

[root@node1 ~]# service nginx start

[root@node1 ~]# service keepalived start

IX. Check the VIPs and test access

[root@node2 ~]# ip a

..........

ens34: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ca:0b:2b brd ff:ff:ff:ff:ff:ff

inet 192.168.92.133/24 brd 192.168.92.255 scope global dynamic ens34

valid_lft 1293sec preferred_lft 1293sec

inet 192.168.92.24/32 scope global ens34

valid_lft forever preferred_lft forever

inet6 fe80::9bff:2e2b:aebb:e35/64 scope link

valid_lft forever preferred_lft forever

.........

[root@node1 ~]# ip a

..........

ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:04:b6:17 brd ff:ff:ff:ff:ff:ff

inet 192.168.92.136/24 brd 192.168.92.255 scope global dynamic ens37

valid_lft 1567sec preferred_lft 1567sec

inet 192.168.92.23/32 scope global ens37

valid_lft forever preferred_lft forever

inet6 fe80::7ff4:9608:5903:1a4b/64 scope link

valid_lft forever preferred_lft forever

..........

[root@node1 ~]# curl http://192.168.92.23

this is 192.168.92.123

[root@node1 ~]# curl http://192.168.92.23

this is 192.168.92.124

[root@node1 ~]# curl http://192.168.92.23

this is 192.168.92.125
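The three responses above rotate across node3, node4 and node5, which is what the upstream block's default round-robin balancing should produce. A small sketch for checking the distribution over more requests follows; `tally_backends` is a hypothetical helper name, and it assumes each backend answers with a single line as in this setup.

```shell
#!/bin/bash
# tally_backends: read one response body per line on stdin and print
# "COUNT<TAB>RESPONSE" for each distinct response.
tally_backends() {
    sort | uniq -c | awk '{ count = $1; $1 = ""; sub(/^ /, ""); print count "\t" $0 }'
}
```

For example, `for i in $(seq 1 30); do curl -s http://192.168.92.23; done | tally_backends` would be expected to show roughly equal counts for the three backends while all of them are healthy.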

[root@node1 ~]# curl http://192.168.92.24

this is 192.168.92.124

X. Test that the script works

After nginx is stopped manually, it is started again automatically

[root@node1 ~]# systemctl stop nginx

[root@node1 ~]# ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:80 *:* users:(("nginx",pid=20257,fd=6),("nginx",pid=20256,fd=6))

LISTEN 0 128 *:22 *:* users:(("sshd",pid=913,fd=3))

LISTEN 0 100 127.0.0.1:25 *:* users:(("master",pid=991,fd=13))

LISTEN 0 128 :::22 :::* users:(("sshd",pid=913,fd=4))

LISTEN 0 100 ::1:25 :::*
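The recovery behaviour tested above can also be dry-run without touching real services. The sketch below restates the check script's flow as a function whose commands are injectable; the variable names (`IS_RUNNING_CMD`, `START_CMD`, `STOP_HA_CMD`, `SETTLE_SECONDS`) and the function name are hypothetical, `pgrep -x nginx` is swapped in for the `ps | wc -l` pipeline, and the 2-second settle delay is an assumption.

```shell
#!/bin/bash
# Hypothetical hooks; override them to dry-run the flow with harmless commands.
: "${IS_RUNNING_CMD:=pgrep -x nginx}"
: "${START_CMD:=systemctl start nginx}"
: "${STOP_HA_CMD:=systemctl stop keepalived}"
: "${SETTLE_SECONDS:=2}"

check_and_recover() {
    # nginx is running: nothing to do
    if $IS_RUNNING_CMD >/dev/null 2>&1; then
        echo "ok"; return 0
    fi
    # try one restart, then give nginx a moment to come up
    $START_CMD >/dev/null 2>&1
    sleep "$SETTLE_SECONDS"
    if $IS_RUNNING_CMD >/dev/null 2>&1; then
        echo "restarted"; return 0
    fi
    # restart failed: stop keepalived so the peer takes over the VIP
    $STOP_HA_CMD >/dev/null 2>&1
    echo "failover"; return 1
}
```

Calling `IS_RUNNING_CMD=false START_CMD=true STOP_HA_CMD=true SETTLE_SECONDS=0 check_and_recover` exercises the failover branch without stopping anything, which is a cheap way to confirm the branching before wiring a script into `vrrp_script`.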
